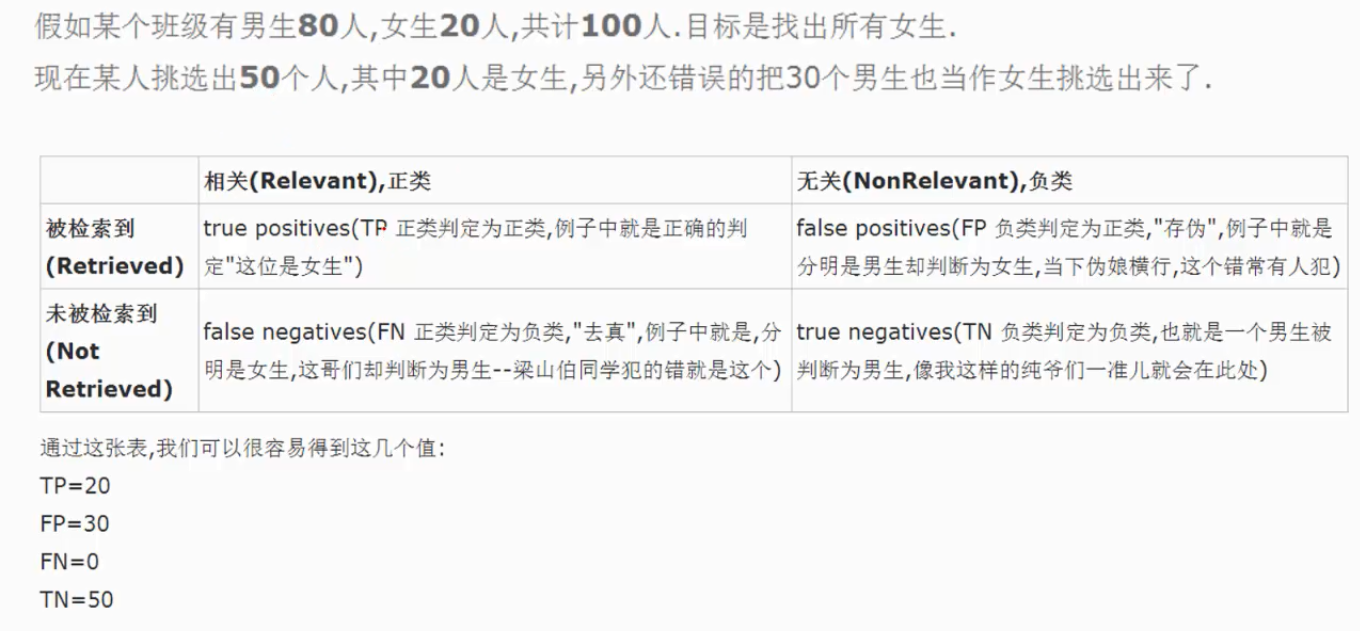

机器学习_线性回归和逻辑回归_案例实战:Python实现逻辑回归与梯度下降策略_项目实战:使用逻辑回归判断信用卡欺诈检测

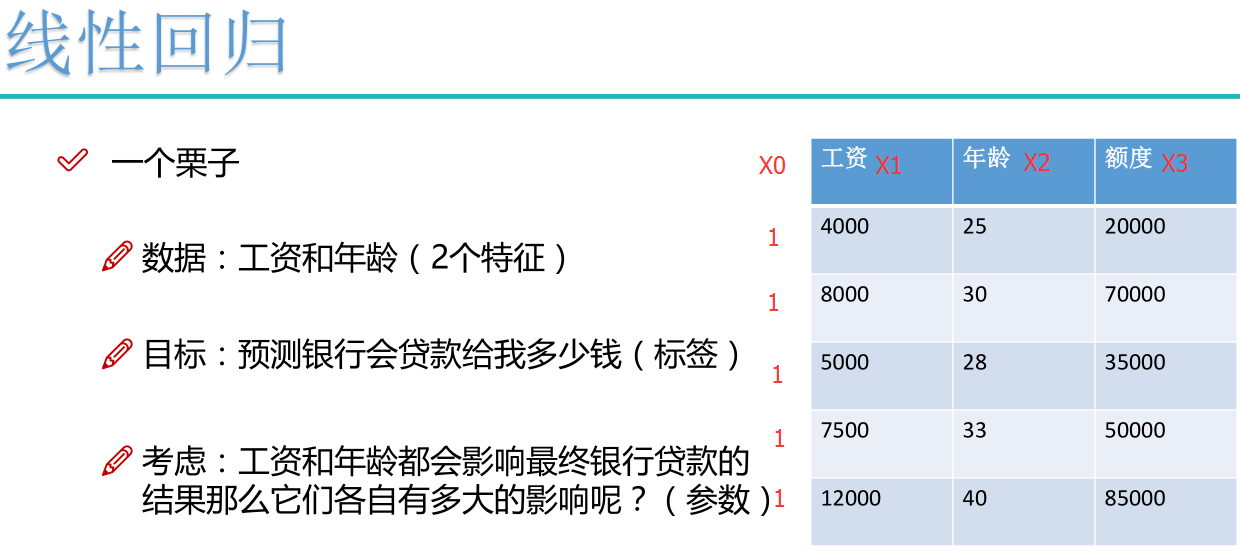

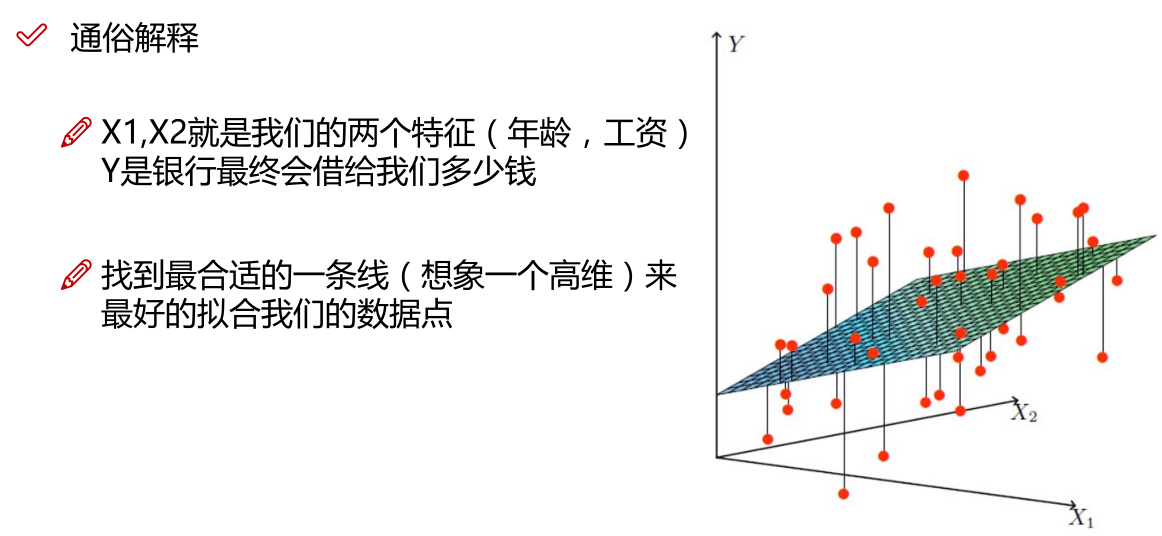

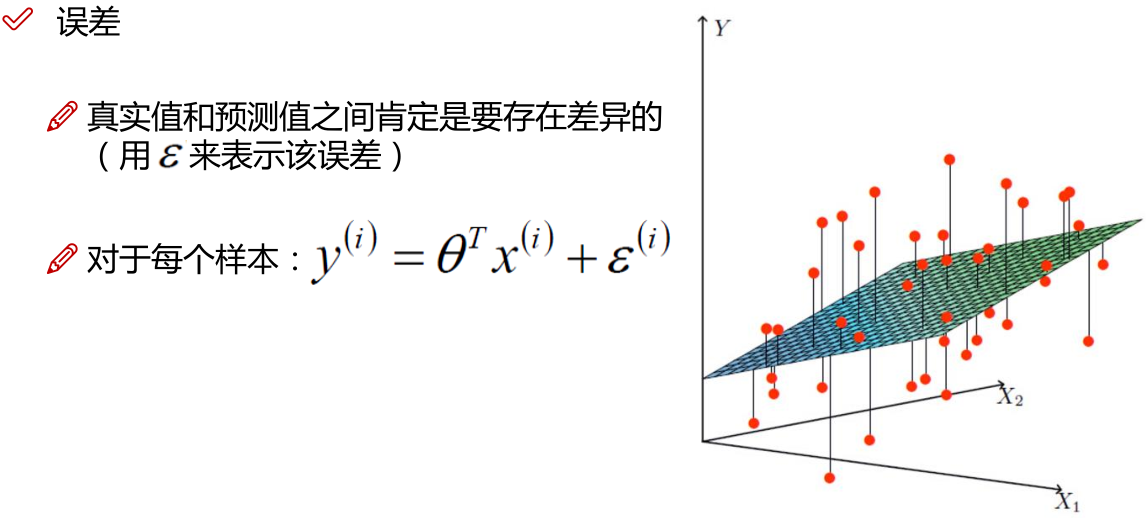

线性回归:

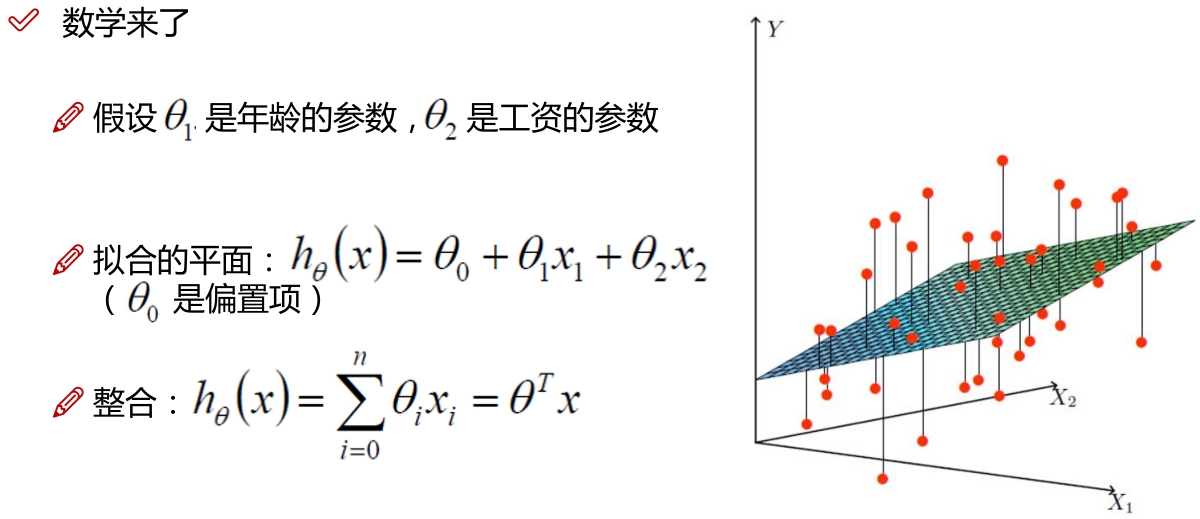

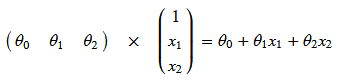

注: 为偏置项,这一项的x的值假设为[1,1,1,1,1....]

为偏置项,这一项的x的值假设为[1,1,1,1,1....]

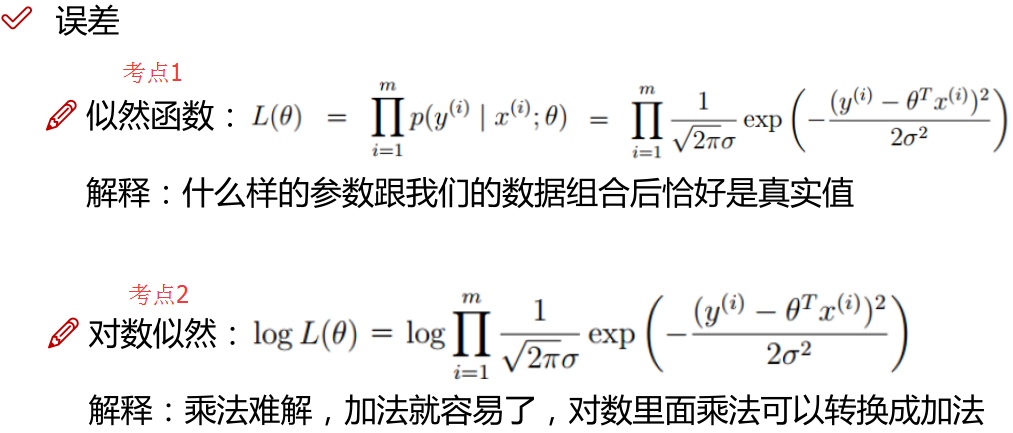

注:为使似然函数越大,则需要最小二乘法函数越小越好

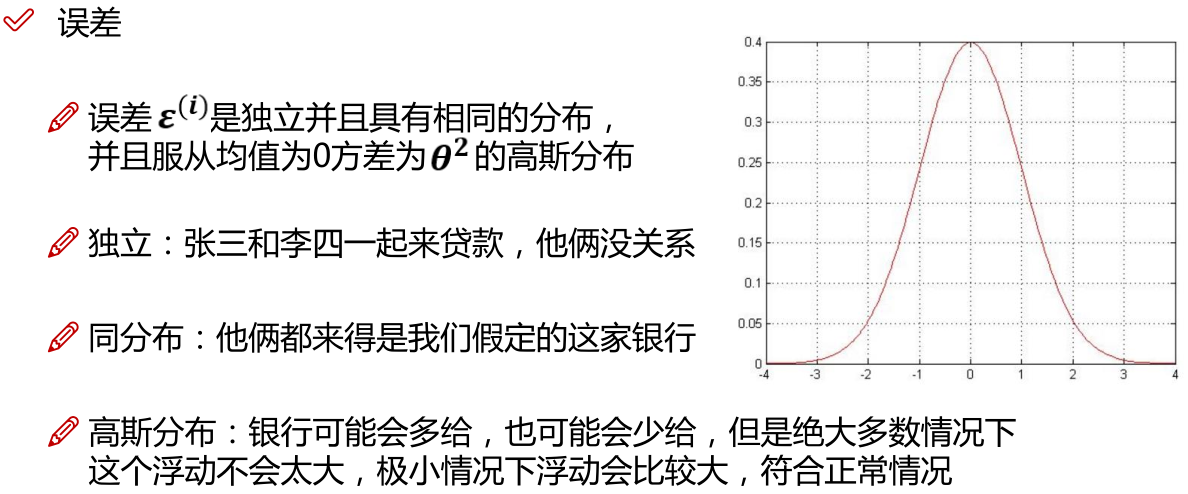

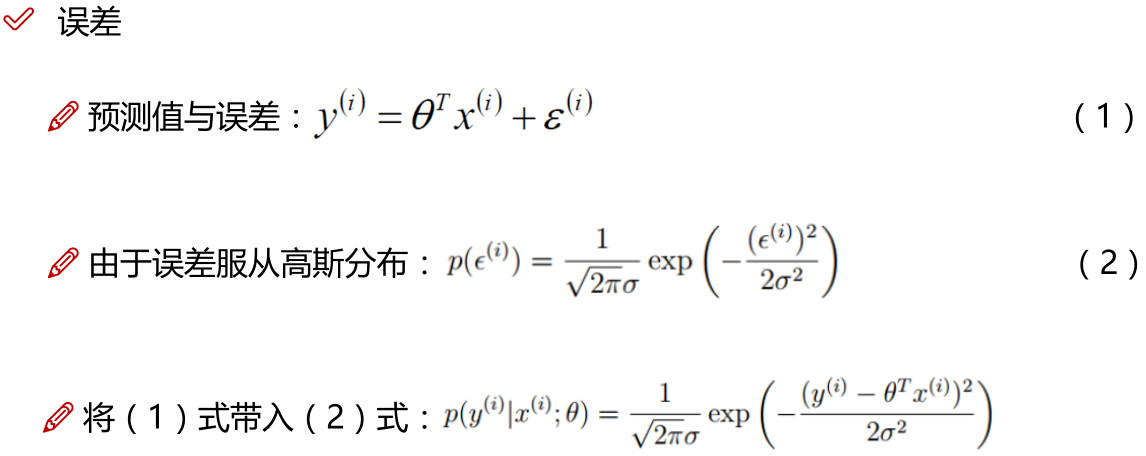

线性回归中为什么选用平方和作为误差函数?假设模型结果与测量值 误差满足,均值为0的高斯分布,即正态分布。这个假设是靠谱的,符合一般客观统计规律。若使 模型与测量数据最接近,那么其概率积就最大。概率积,就是概率密度函数的连续积,这样,就形成了一个最大似然函数估计。对最大似然函数估计进行推导,就得出了推导后结果: 平方和最小公式

注:

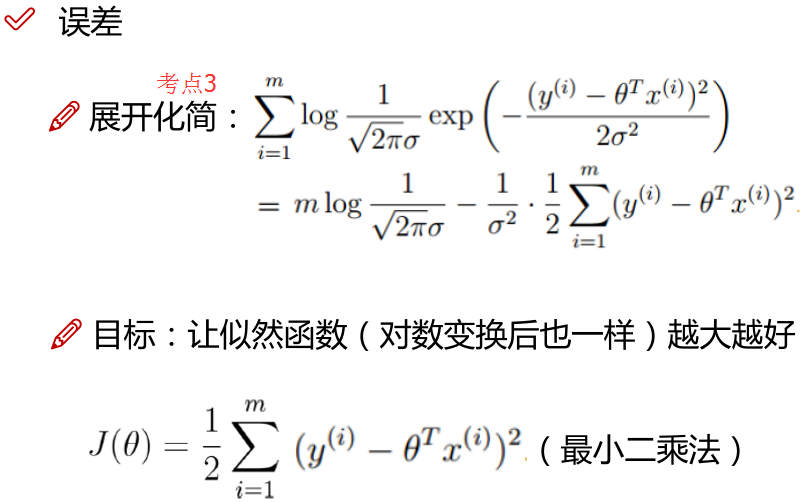

1.x的平方等于x的转置乘以x。

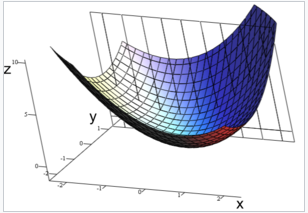

2.机器学习中普遍认为函数属于凸函数(凸优化问题),函数图形如下,从图中可以看出函数要想取到最小值或者极小值,就需要使偏导等于0。

3.一些问题上 没办法直接求解,则可以在上图中选一个点,依次一步步优化,取得最小值(梯度优化)

没办法直接求解,则可以在上图中选一个点,依次一步步优化,取得最小值(梯度优化)

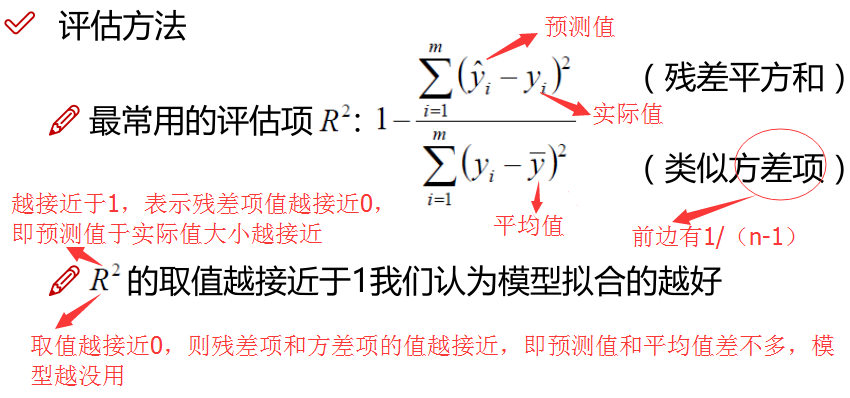

R平方是多元回归中的回归平方和占总平方和的比例,它是度量多元回归方程中拟合程度的一个统计量,反映了在因变量y的变差中被估计的回归方程所解释的比例。

R平方越接近1,表明回归平方和占总平方和的比例越大,回归线与各观测点越接近,用x的变化来解释y值变差的部分就越多,回归的拟合程度就越好。

R平方取值范围是负无穷到1,越是接近于1越好。

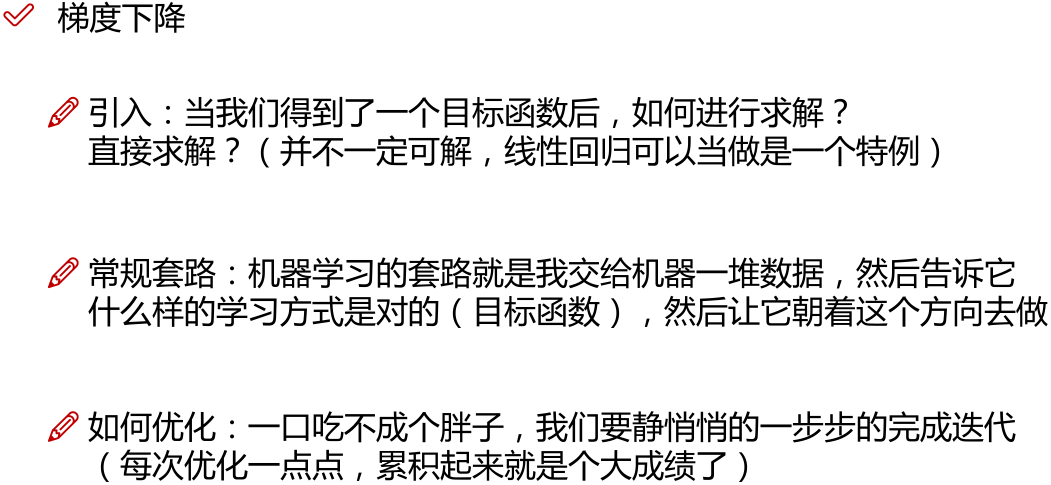

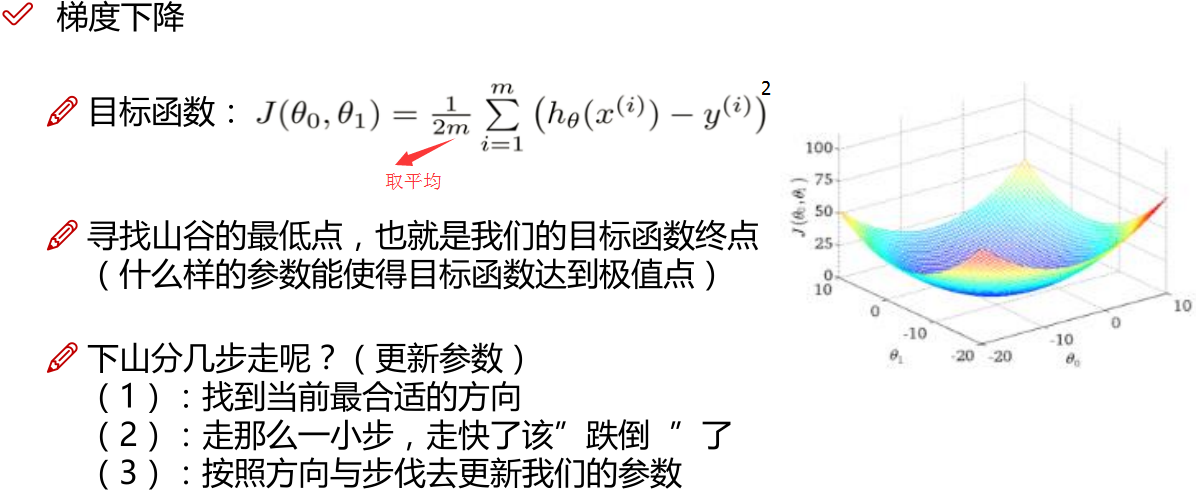

当 没办法直接求解是:

没办法直接求解是:

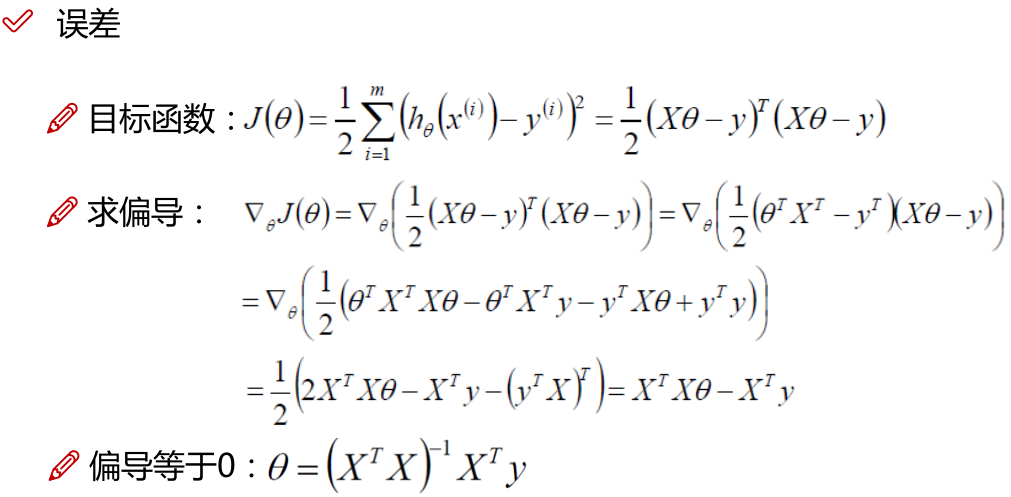

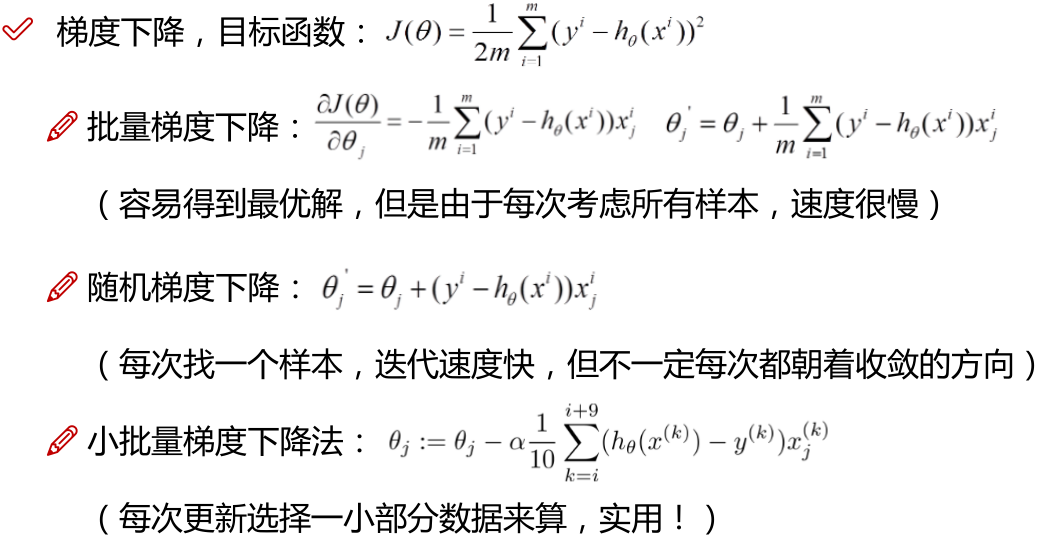

注:批量梯度下降法BGD;

随机梯度下降法SGD;

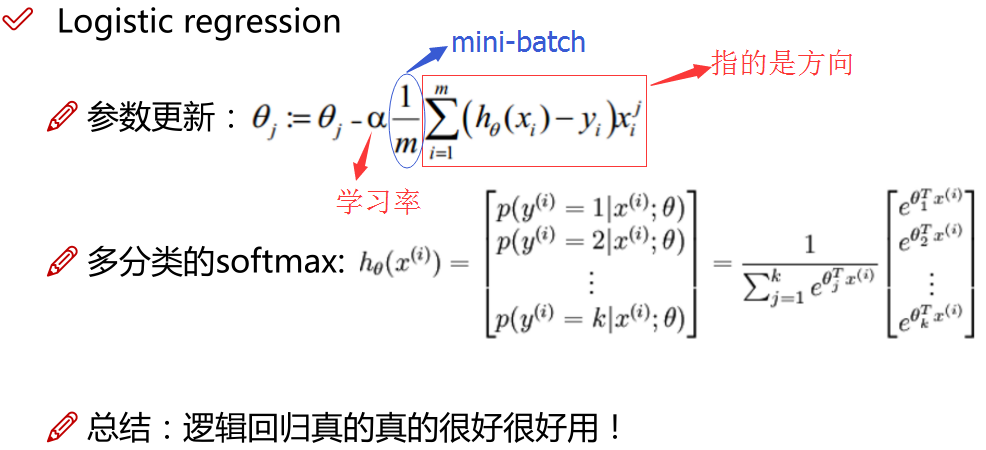

小批量梯度下降法MBGD(在上述的批量梯度的方式中每次迭代都要使用到所有的样本,对于数据量特别大的情况,如大规模的机器学习应用,每次迭代求解所有样本需要花费大量的计算成本。是否可以在每次的迭代过程中利用部分样本代替所有的样本呢?基于这样的思想,便出现了mini-batch的概念。 假设训练集中的样本的个数为1000,则每个mini-batch只是其一个子集,假设,每个mini-batch中含有10个样本,这样,整个训练数据集可以分为100个mini-batch。)

点击查看:

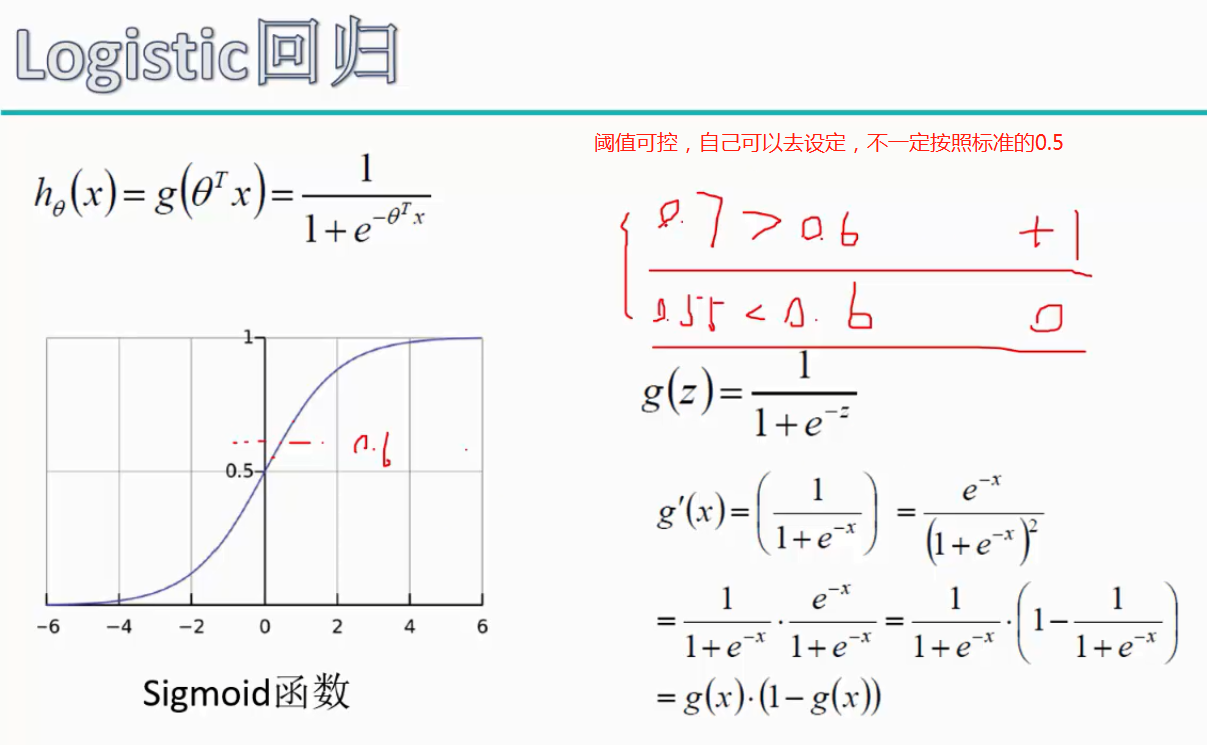

逻辑回归:

线性回归的应用场合大多是回归分析,一般不用在分类问题上,原因可以概括为以下两个:

1)回归模型是连续型模型,即预测出的值都是连续值(实数值),非离散值;

2)预测结果受样本噪声的影响比较大。

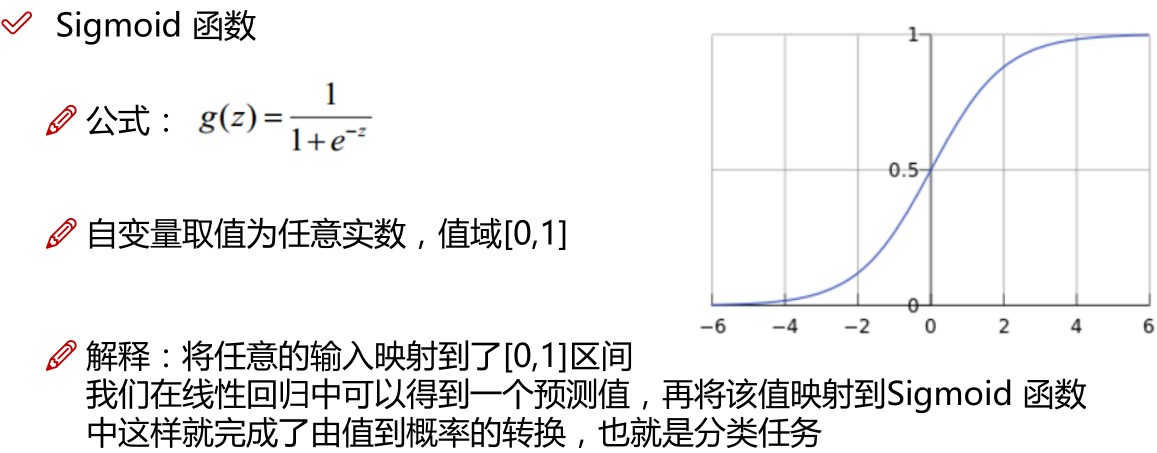

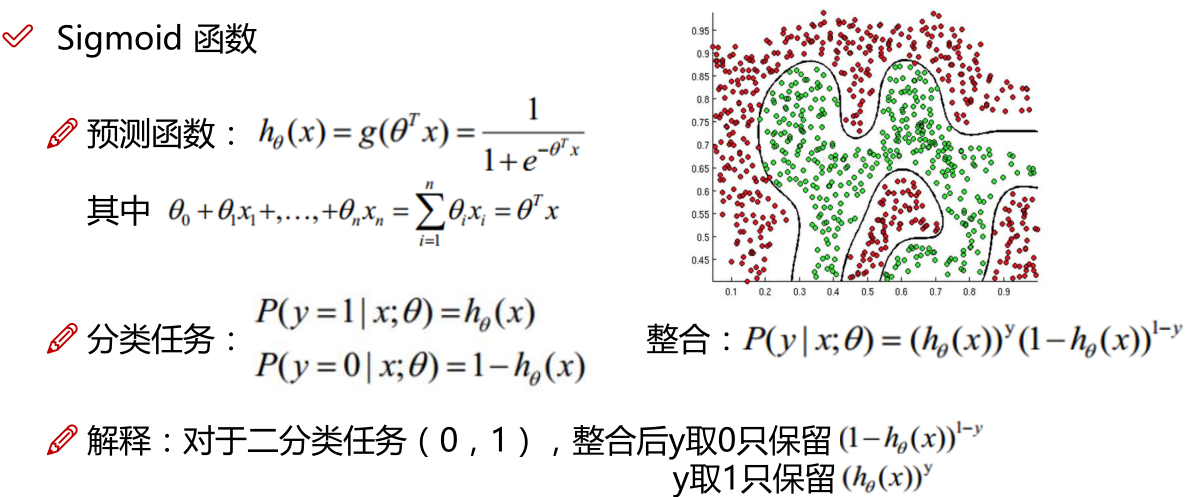

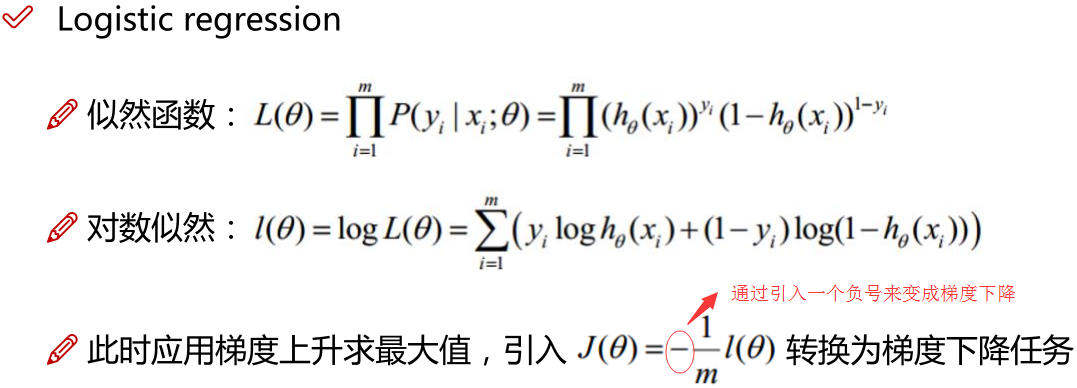

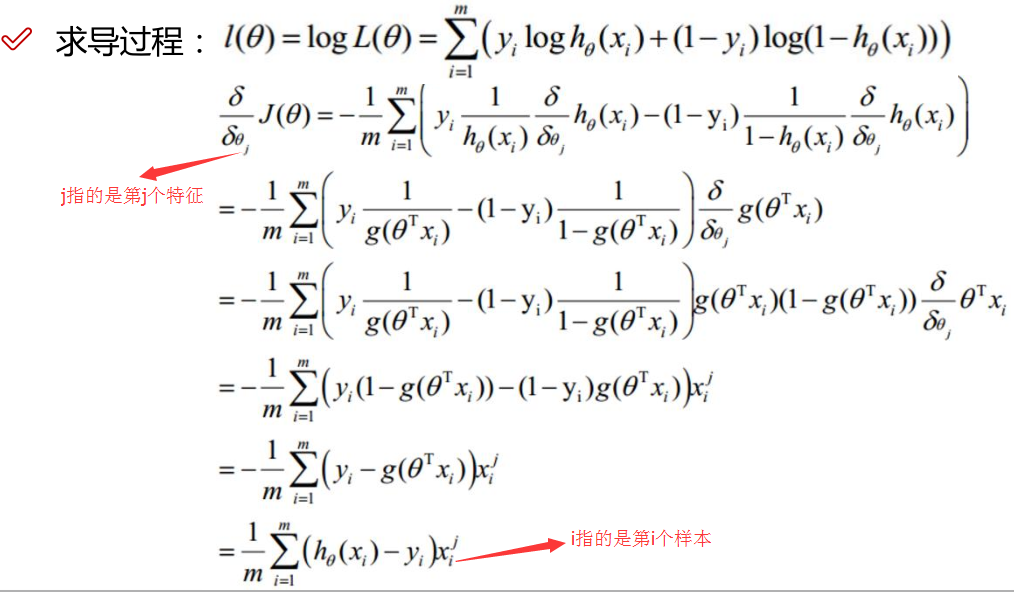

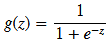

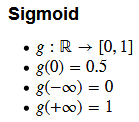

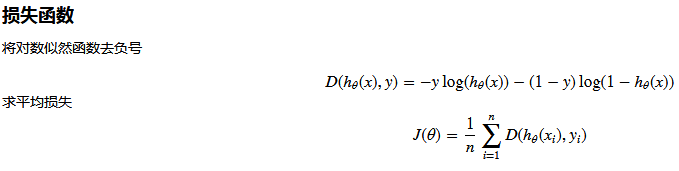

LR本质上还是线性回归,只是特征到结果的映射过程中加了一层函数映射,即sigmoid函数,即先把特征线性求和,然后使用sigmoid函数将线性和约束至(0,1)之间,结果值用于二分类。线性回归,采用的是平方损失函数。而逻辑回归采用的是 对数 损失函数。

注:Z指的是线性回归的输出

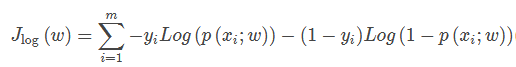

注:对数似然加负号为逻辑回归的损失函数,如下所示

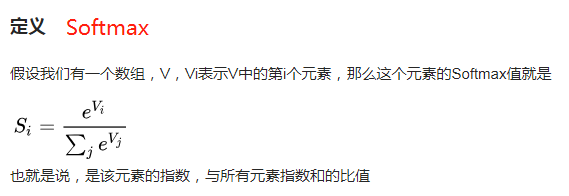

sigmoid用来解决二分类问题,softmax解决多分类问题,sigmoid是softmax的特殊情况。

核函数的物理意义?

映射到高维,使其变得线性可分。什么是高维?如一个一维数据特征x,转换为(x,x^2, x^3),就成为了一个三维特征,且线性无关。一个一维特征线性不可分的特征,在高维,就可能线性可分了。

对于非线性问题逻辑Regression问题的常规步骤为:

- 寻找h函数(即hypothesis);对非线性问题的处理方式不同,LR主要靠特征构造,必须组合交叉特征,特征离散化。SVM也可以这样,还可以通过kernel

- 构造J函数(损失函数);

- 想办法使得J函数最小并求得回归参数(θ)

LR的优缺点

优点

一、预测结果是界于0和1之间的概率;

二、可以适用于连续性和类别性自变量;

三、容易使用和解释;

缺点

1)对模型中自变量多重共线性较为敏感,例如两个高度相关自变量同时放入模型,可能导致较弱的一个自变量回归符号不符合预期,符号被扭转。需要利用因子分析或者变量聚类分析等手段来选择代表性的自变量,以减少候选变量之间的相关性;

2)预测结果呈“S”型,因此从log(odds)向概率转化的过程是非线性的,在两端随着log(odds)值的变化,概率变化很小,边际值太小,slope太小,而中间概率的变化很大,很敏感。 导致很多区间的变量变化对目标概率的影响没有区分度,无法确定阀值。

为什么做回归分析和逻辑回归时要考虑消除多重共线性?

回归方程的解释,比如第一个beta1 意义是在保持其他自变量不变的情况下,X1每增加一个单位,y平均增加一个单位,记得是平均。我们进行回归分析需要了解每个自变量对因变量的单纯效应,多重共线性就是说自变量间存在某种函数关系,如果你的两个自变量间(X1和X2)存在函数关系,那么X1改变一个单位时,X2也会相应地改变,此时你无法做到固定其他条件,单独考查X1对因变量Y的作用,你所观察到的X1的效应总是混杂了X2的作用,这就造成了分析误差,使得对自变量效应的分析不准确,所以做回归分析时需要排除多重共线性的影响,就是自变量间存在很严重的相关关系。

在利用Scikit-Learn对数据进行逻辑回归之前。首先进行特征筛选。特征筛选的方法很多,主要包含在Scikit-Learn的feature-selection库中,比较简单的有通过 F 检验(f_regression)来给出各个特征的 F 值和 p 值,从而可以筛选变量(选择 F 值大的或者 p 值较小的特征)。

多重共线性的检验;

1、相关性分析,相关系数高于0.8,表明存在多重共线性;但相关系数低,并不能表示不存在多重共线性;

2、容忍度(tolerance)与方差扩大因子(VIF)。某个自变量的容忍度等于1减去该自变量为因变量而其他自变量为预测变量时所得到的线性回归模型的判定系数。容忍度越小,多重共线性越严重。通常认为容忍度小于0.1时,存在严重的多重共线性。方差扩大因子等于容忍度的倒数。显然,VIF越大,多重共线性越严重。一般认为VIF大于10时,存在严重的多重共线性。

3、回归系数的正负号与预期的相反。

多重共线性的处理方法:

(一)删除不重要的自变量

自变量之间存在共线性,说明自变量所提供的信息是重叠的,可以删除不重要的自变量减少重复信息。但从模型中删去自变量时应该注意:从实际经济分析确定为相对不重要并从偏相关系数检验证实为共线性原因的那些变量中删除。如果删除不当,会产生模型设定误差,造成参数估计严重有偏的后果。

(二)追加样本信息(不过实际操作中,这个方法实现率不高)

多重共线性问题的实质是样本信息的不充分而导致模型参数的不能精确估计,因此追加样本信息是解决该问题的一条有效途径。但是,由于资料收集及调查的困难,要追加样本信息在实践中有时并不容易。

(三)利用非样本先验信息

非样本先验信息主要来自经济理论分析和经验认识。充分利用这些先验的信息,往往有助于解决多重共线性问题。

(四)改变解释变量的形式

改变解释变量的形式是解决多重共线性的一种简易方法,例如对于横截面数据采用相对数变量,对于时间序列数据采用增量型变量。

(五)逐步回归法(此法最常用的,也最有效)

逐步回归(Stepwise

Regression)是一种常用的消除多重共线性、选取“最优”回归方程的方法。其做法是将逐个引入自变量,引入的条件是该自变量经F检验是显著的,每引入一个自变量后,对已选入的变量进行逐个检验,如果原来引入的变量由于后面变量的引入而变得不再显著,那么就将其剔除。引入一个变量或从回归方程中剔除一个变量,为逐步回归的一步,每一步都要进行F

检验,以确保每次引入新变量之前回归方程中只包含显著的变量。这个过程反复进行,直到既没有不显著的自变量选入回归方程,也没有显著自变量从回归方程中剔除为止。

(六)可以做主成分回归

主成分分析法作为多元统计分析的一种常用方法在处理多变量问题时具有其一定的优越性,其降维的优势是明显的,主成分回归方法对于一般的多重共线性问题还是适用的,尤其是对共线性较强的变量之间。当采取主成分提取了新的变量后,往往这些变量间的组内差异小而组间差异大,起到了消除共线性的问题。

逻辑回归和线性回归的联系、异同? 经验风险、期望风险、经验损失、结构风险之间的区别与联系?

案例实战:Python实现逻辑回归与梯度下降策略

The data

我们将建立一个逻辑回归模型来预测一个学生是否被大学录取。假设你是一个大学系的管理员,你想根据两次考试的结果来决定每个申请人的录取机会。你有以前的申请人的历史数据,你可以用它作为逻辑回归的训练集。对于每一个培训例子,你有两个考试的申请人的分数和录取决定。为了做到这一点,我们将建立一个分类模型,根据考试成绩估计入学概率。

#三大件 import numpy as np import pandas as pd import matplotlib.pyplot as plt %matplotlib inline

data文件夹下 LogiReg_data.txt内容:

34.62365962451697,78.0246928153624,0 30.28671076822607,43.89499752400101,0 35.84740876993872,72.90219802708364,0 60.18259938620976,86.30855209546826,1 79.0327360507101,75.3443764369103,1 45.08327747668339,56.3163717815305,0 61.10666453684766,96.51142588489624,1 75.02474556738889,46.55401354116538,1 76.09878670226257,87.42056971926803,1 84.43281996120035,43.53339331072109,1 95.86155507093572,38.22527805795094,0 75.01365838958247,30.60326323428011,0 82.30705337399482,76.48196330235604,1 69.36458875970939,97.71869196188608,1 39.53833914367223,76.03681085115882,0 53.9710521485623,89.20735013750205,1 69.07014406283025,52.74046973016765,1 67.94685547711617,46.67857410673128,0 70.66150955499435,92.92713789364831,1 76.97878372747498,47.57596364975532,1 67.37202754570876,42.83843832029179,0 89.67677575072079,65.79936592745237,1 50.534788289883,48.85581152764205,0 34.21206097786789,44.20952859866288,0 77.9240914545704,68.9723599933059,1 62.27101367004632,69.95445795447587,1 80.1901807509566,44.82162893218353,1 93.114388797442,38.80067033713209,0 61.83020602312595,50.25610789244621,0 38.78580379679423,64.99568095539578,0 61.379289447425,72.80788731317097,1 85.40451939411645,57.05198397627122,1 52.10797973193984,63.12762376881715,0 52.04540476831827,69.43286012045222,1 40.23689373545111,71.16774802184875,0 54.63510555424817,52.21388588061123,0 33.91550010906887,98.86943574220611,0 64.17698887494485,80.90806058670817,1 74.78925295941542,41.57341522824434,0 34.1836400264419,75.2377203360134,0 83.90239366249155,56.30804621605327,1 51.54772026906181,46.85629026349976,0 94.44336776917852,65.56892160559052,1 82.36875375713919,40.61825515970618,0 51.04775177128865,45.82270145776001,0 62.22267576120188,52.06099194836679,0 77.19303492601364,70.45820000180959,1 97.77159928000232,86.7278223300282,1 62.07306379667647,96.76882412413983,1 91.56497449807442,88.69629254546599,1 79.94481794066932,74.16311935043758,1 99.2725269292572,60.99903099844988,1 90.54671411399852,43.39060180650027,1 34.52451385320009,60.39634245837173,0 50.2864961189907,49.80453881323059,0 49.58667721632031,59.80895099453265,0 97.64563396007767,68.86157272420604,1 32.57720016809309,95.59854761387875,0 74.24869136721598,69.82457122657193,1 71.79646205863379,78.45356224515052,1 75.3956114656803,85.75993667331619,1 35.28611281526193,47.02051394723416,0 56.25381749711624,39.26147251058019,0 30.05882244669796,49.59297386723685,0 44.66826172480893,66.45008614558913,0 66.56089447242954,41.09209807936973,0 40.45755098375164,97.53518548909936,1 49.07256321908844,51.88321182073966,0 80.27957401466998,92.11606081344084,1 66.74671856944039,60.99139402740988,1 32.72283304060323,43.30717306430063,0 64.0393204150601,78.03168802018232,1 72.34649422579923,96.22759296761404,1 60.45788573918959,73.09499809758037,1 58.84095621726802,75.85844831279042,1 99.82785779692128,72.36925193383885,1 47.26426910848174,88.47586499559782,1 50.45815980285988,75.80985952982456,1 60.45555629271532,42.50840943572217,0 82.22666157785568,42.71987853716458,0 88.9138964166533,69.80378889835472,1 94.83450672430196,45.69430680250754,1 67.31925746917527,66.58935317747915,1 57.23870631569862,59.51428198012956,1 80.36675600171273,90.96014789746954,1 68.46852178591112,85.59430710452014,1 42.0754545384731,78.84478600148043,0 75.47770200533905,90.42453899753964,1 78.63542434898018,96.64742716885644,1 52.34800398794107,60.76950525602592,0 94.09433112516793,77.15910509073893,1 90.44855097096364,87.50879176484702,1 55.48216114069585,35.57070347228866,0 74.49269241843041,84.84513684930135,1 89.84580670720979,45.35828361091658,1 83.48916274498238,48.38028579728175,1 42.2617008099817,87.10385094025457,1 99.31500880510394,68.77540947206617,1 55.34001756003703,64.9319380069486,1 74.77589300092767,89.52981289513276,1

import os path = 'data' + os.sep + 'LogiReg_data.txt' print(path)#打印出路径 data\LogiReg_data.txt pdData = pd.read_csv(path, header=None, names=['Exam 1', 'Exam 2', 'Admitted'])#header=None不从数据中读取列名,自己指定 pdData.head()

| Exam 1 | Exam 2 | Admitted | |

|---|---|---|---|

| 0 | 34.623660 | 78.024693 | 0 |

| 1 | 30.286711 | 43.894998 | 0 |

| 2 | 35.847409 | 72.902198 | 0 |

| 3 | 60.182599 | 86.308552 | 1 |

| 4 | 79.032736 | 75.344376 | 1 |

pdData.shape#查看数据维度 (100, 3)

positive = pdData[pdData['Admitted'] == 1] # returns the subset of rows such Admitted = 1, i.e. the set of *positive* examples

negative = pdData[pdData['Admitted'] == 0] # returns the subset of rows such Admitted = 0, i.e. the set of *negative* examples

print(positive.head())

print(negative.head())

fig, ax = plt.subplots(figsize=(10,5))#设置图的大小

ax.scatter(positive['Exam 1'], positive['Exam 2'], s=30, c='b', marker='o', label='Admitted')#c指得是颜色,s指的是点大小

ax.scatter(negative['Exam 1'], negative['Exam 2'], s=30, c='r', marker='x', label='Not Admitted')

ax.legend()

ax.set_xlabel('Exam 1 Score')

ax.set_ylabel('Exam 2 Score')

结果:

Exam 1 Exam 2 Admitted 3 60.182599 86.308552 1 4 79.032736 75.344376 1 6 61.106665 96.511426 1 7 75.024746 46.554014 1 8 76.098787 87.420570 1 Exam 1 Exam 2 Admitted 0 34.623660 78.024693 0 1 30.286711 43.894998 0 2 35.847409 72.902198 0 5 45.083277 56.316372 0 10 95.861555 38.225278 0

项目实战:使用逻辑回归判断信用卡欺诈检测

故事背景:

原始数据为个人交易记录,但是考虑数据本身的隐私性,已经对原始数据进行了类似PCA的处理,现在已经把特征数据提取好了,接下来的目的就是如何建立模型使得检测的效果达到最好,这里我们虽然不需要对数据做特征提取的操作,但是面对的挑战还是蛮大的。

import pandas as pd import matplotlib.pyplot as plt import numpy as np %matplotlib inline

data = pd.read_csv("creditcard.csv")

data.head()

结果:

注:损失函数为目标函数

注:损失函数为目标函数