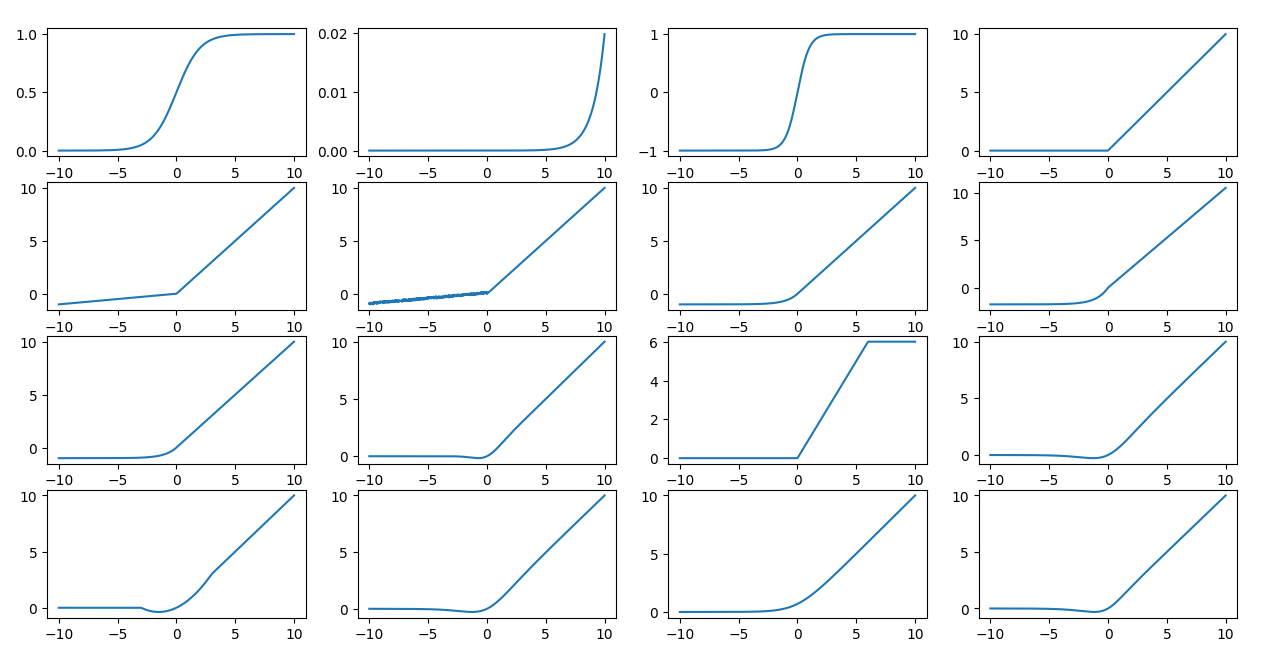

Deep Learning (Activation Functions)

This post implements a set of common activation functions in NumPy.

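The negative slope in PReLU is a learnable parameter, so PReLU is written here as a PyTorch `nn.Module` with a `forward` method instead of a plain function.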
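To make "learnable" concrete, here is a minimal sketch showing that the slope receives a gradient and is changed by an optimizer step. The class name `PReLU`, the four-element input, the `sum()` loss, and the SGD learning rate are illustrative assumptions, not part of the original post; only the initial slope of 0.1 is taken from it.

```python
import torch
import torch.nn as nn


class PReLU(nn.Module):
    """PReLU with a single learnable negative slope `a` (initial value 0.1)."""

    def __init__(self, init=0.1):
        super().__init__()
        self.a = nn.Parameter(torch.full((1,), init))

    def forward(self, x):
        return torch.where(x > 0, x, self.a * x)


model = PReLU()
x = torch.tensor([-2.0, -1.0, 0.5, 3.0])  # illustrative input

# Toy loss: the sum of the outputs. Only negative inputs depend on `a`,
# so d(loss)/da is the sum of the negative inputs: -2 + -1 = -3.
loss = model(x).sum()
loss.backward()
grad_a = model.a.grad.item()

# One plain SGD step: a <- a - lr * grad = 0.1 - 0.1 * (-3) = 0.4
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
optimizer.step()
new_a = model.a.item()

print(grad_a, new_a)
```

A fixed-slope function such as `leaky_relu` has nothing for the optimizer to update, which is why only PReLU needs the module form.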

The code is as follows:

```python
import numpy as np
import matplotlib.pyplot as plt
import torch
import torch.nn as nn


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(x):
    # Subtract the max before exponentiating for numerical stability.
    e = np.exp(x - np.max(x))
    return e / np.sum(e)

def tanh(x):
    return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))

def relu(x):
    return np.where(x > 0, x, 0)

def leaky_relu(x, a=0.1):
    return np.where(x > 0, x, a * x)

def rrelu(x, lower=0.1, upper=0.3):
    # The random slope is drawn from U(lower, upper) and then multiplied by x.
    # (The parentheses matter: without them only `lower` is scaled by x.)
    slope = np.random.rand(*np.shape(x)) * (upper - lower) + lower
    return np.where(x > 0, x, slope * x)

def elu(x, a=1.0):
    return np.where(x > 0, x, a * (np.exp(x) - 1))

def selu(x, a=1.67, l=1.05):
    # a and l are rounded values of the usual SELU constants (about 1.6733 and 1.0507).
    return np.where(x > 0, l * x, l * a * (np.exp(x) - 1))

def celu(x, a=1.0):
    return np.where(x > 0, x, a * (np.exp(x / a) - 1))

def gelu(x):
    # tanh approximation of GELU
    return 0.5 * x * (1 + tanh(np.sqrt(2 / np.pi) * (x + 0.044715 * x**3)))

def relu6(x):
    return np.minimum(np.maximum(0, x), 6)

def swish(x, b=1.0):
    return x * sigmoid(b * x)

def hardswish(x):
    return x * relu6(x + 3) / 6.0

def silu(x):
    # Swish with b = 1
    return x * sigmoid(x)

def softplus(x):
    return np.log(1 + np.exp(x))

def mish(x):
    return x * tanh(softplus(x))


class prelu(nn.Module):
    def __init__(self):
        super().__init__()
        # Learnable negative slope, initialised to 0.1
        self.a = nn.Parameter(torch.ones(1) * 0.1)

    def forward(self, x):
        return torch.where(x > 0, x, self.a * x)


if __name__ == '__main__':
    x = np.linspace(-10, 10, 1000)

    functions = [sigmoid, softmax, tanh, relu,
                 leaky_relu, rrelu, elu, selu, celu,
                 gelu, relu6, swish, hardswish,
                 silu, softplus, mish]

    # Plot all 16 NumPy activations in a 4x4 grid.
    plt.figure(figsize=(12, 10))
    for i, func in enumerate(functions):
        plt.subplot(4, 4, i + 1)
        plt.plot(x, func(x))
        plt.title(func.__name__)
    plt.tight_layout()
    plt.show()

    # PReLU is a torch module, so it is evaluated on a tensor and plotted separately.
    model = prelu()
    tensor_x = torch.tensor(x, dtype=torch.float32)
    tensor_y = model(tensor_x)
    plt.plot(tensor_x.numpy(), tensor_y.detach().numpy())
    plt.title('prelu')
    plt.show()
```
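As a sanity check on the NumPy definitions, a few of them can be compared against PyTorch's built-in versions. The sketch below repeats five of the functions so that it runs on its own (using `np.tanh` and `np.log1p` in place of the hand-written helpers) and reports the largest absolute difference for each. It assumes a PyTorch version recent enough to provide `F.mish` and the `approximate="tanh"` option of `F.gelu`; the choice of functions and the `max_err` name are illustrative.

```python
import numpy as np
import torch
import torch.nn.functional as F


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def elu(x, a=1.0):
    return np.where(x > 0, x, a * (np.exp(x) - 1))

def gelu(x):
    # tanh approximation, matching F.gelu(..., approximate="tanh")
    return 0.5 * x * (1 + np.tanh(np.sqrt(2 / np.pi) * (x + 0.044715 * x**3)))

def hardswish(x):
    return x * np.minimum(np.maximum(0, x + 3), 6) / 6.0

def mish(x):
    return x * np.tanh(np.log1p(np.exp(x)))


x = np.linspace(-10, 10, 1000)
t = torch.from_numpy(x)  # float64 tensor sharing the same values

# (NumPy result, PyTorch reference) pairs
checks = {
    "sigmoid": (sigmoid(x), torch.sigmoid(t)),
    "elu": (elu(x), F.elu(t)),
    "gelu": (gelu(x), F.gelu(t, approximate="tanh")),
    "hardswish": (hardswish(x), F.hardswish(t)),
    "mish": (mish(x), F.mish(t)),
}

# Largest absolute difference per function over the whole input range
max_err = {
    name: float(np.max(np.abs(ours - ref.numpy())))
    for name, (ours, ref) in checks.items()
}

for name, err in max_err.items():
    print(f"{name}: max abs error = {err:.2e}")
```

The differences should sit at floating-point rounding level. `rrelu` is left out because it is random, and `selu` is left out because the rounded constants 1.67 and 1.05 differ slightly from the ones PyTorch uses.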

The results are as follows:
