Deep Learning (Learning Rate)

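When training a model with PyTorch, the learning rate can be adjusted over the course of training.

Choosing a suitable learning rate schedule can make training more efficient and improve the performance of the final model.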
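As a minimal sketch of how a schedule fits into a training loop, the example below attaches `StepLR` to an SGD optimizer. The model, the random data, and the hyperparameters are hypothetical and chosen only for illustration. The point is the call order: `optimizer.step()` first, then `scheduler.step()`.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# Toy regression problem (hypothetical data, for illustration only).
model = nn.Linear(3, 1)
inputs = torch.randn(16, 3)
targets = torch.randn(16, 1)

optimizer = optim.SGD(model.parameters(), lr=0.1)
# Halve the learning rate every 2 epochs.
scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=2, gamma=0.5)

lr_history = []
for epoch in range(6):
    optimizer.zero_grad()
    loss = nn.functional.mse_loss(model(inputs), targets)
    loss.backward()
    optimizer.step()  # update the weights with the current learning rate
    lr_history.append(optimizer.param_groups[0]["lr"])  # rate used this epoch
    scheduler.step()  # then advance the schedule for the next epoch

print(lr_history)
```

Here `lr_history` records the rate actually used in each epoch, so it starts at 0.1 and is halved after every second epoch.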

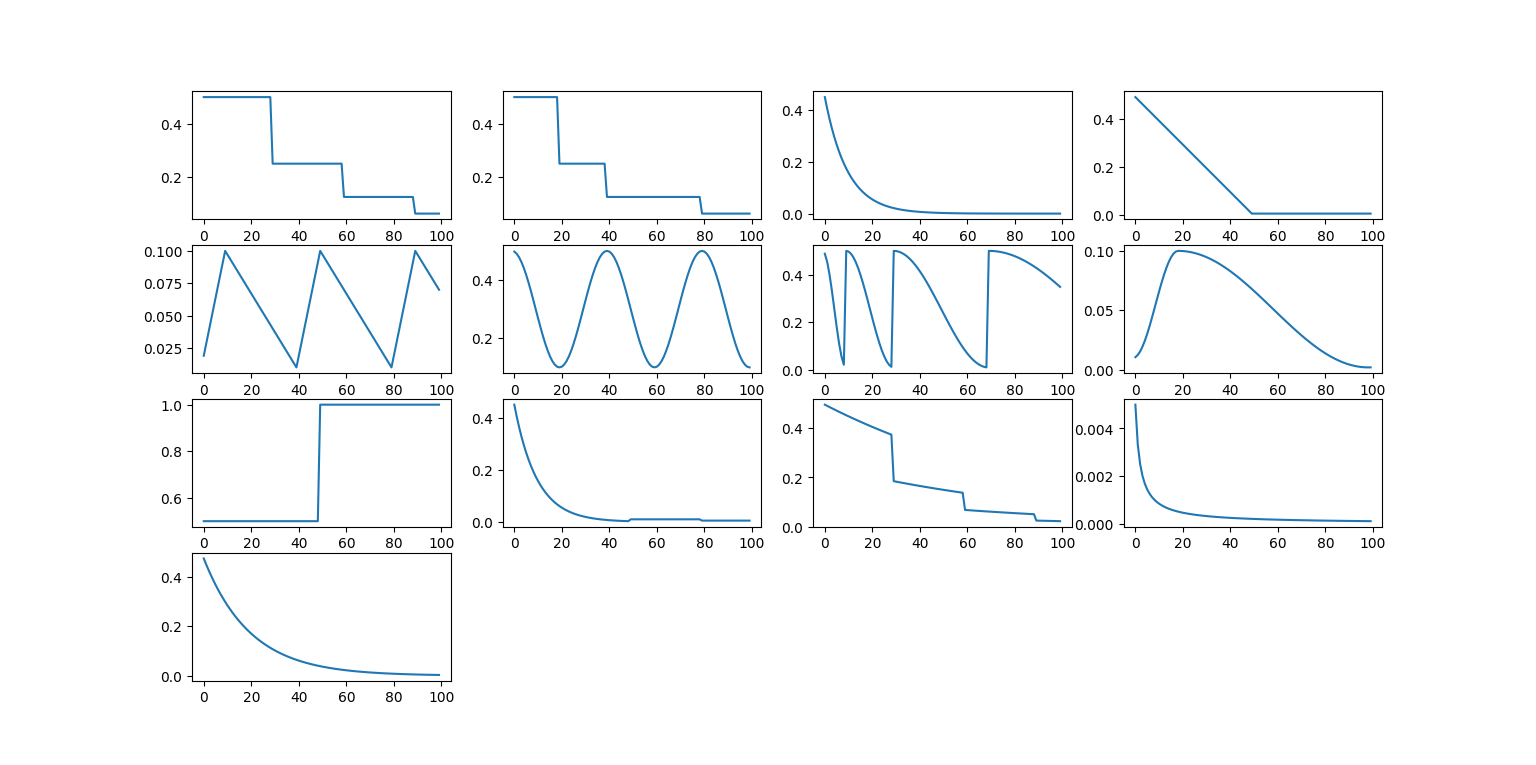

Example code for the various learning rate schedules:

    import torch
    import torch.nn as nn
    import torch.optim as optim
    import matplotlib.pyplot as plt
    import numpy as np
    from torch.optim import lr_scheduler

    LR_INIT = 0.5  # initial learning rate


    def new_optimizer():
        # Schedulers write into the optimizer's param groups, so each one
        # gets its own optimizer to keep the curves independent.
        parameter = [nn.Parameter(torch.tensor([1, 2, 3], dtype=torch.float32))]
        return optim.SGD(parameter, lr=LR_INIT)


    if __name__ == '__main__':
        schedulers = []  # (optimizer, scheduler) pairs

        def add(build):
            opt = new_optimizer()
            schedulers.append((opt, build(opt)))

        # Every step_size iterations, multiply the learning rate by gamma
        add(lambda opt: lr_scheduler.StepLR(opt, step_size=30, gamma=0.5))

        # At each iteration listed in milestones, multiply the learning rate by gamma
        add(lambda opt: lr_scheduler.MultiStepLR(opt, milestones=[20, 40, 80], gamma=0.5))

        # Each iteration, the learning rate is gamma times the previous one
        add(lambda opt: lr_scheduler.ExponentialLR(opt, gamma=0.9))

        # Over the first total_iters iterations the learning rate moves linearly from
        # lr_init*start_factor to lr_init*end_factor, then stays at lr_init*end_factor
        add(lambda opt: lr_scheduler.LinearLR(opt, start_factor=1, end_factor=0.01, total_iters=50))

        # Learning rate cycles between base_lr and max_lr: it rises for
        # step_size_up iterations, then falls for step_size_down iterations
        add(lambda opt: lr_scheduler.CyclicLR(opt, base_lr=0.01, max_lr=0.1,
                                              step_size_up=10, step_size_down=30))

        # Cosine curve: T_max is half a period, minimum is eta_min, maximum is lr_init
        add(lambda opt: lr_scheduler.CosineAnnealingLR(opt, T_max=20, eta_min=0.1))

        # Cosine annealing with warm restarts: the first cycle lasts T_0 iterations and
        # each later cycle is T_mult times the previous one; minimum eta_min, maximum lr_init
        add(lambda opt: lr_scheduler.CosineAnnealingWarmRestarts(opt, T_0=10, T_mult=2, eta_min=0.01))

        # Learning rate rises, then falls. pct_start is the fraction of steps spent rising;
        # peak = max_lr, initial = max_lr/div_factor, final = initial/final_div_factor
        add(lambda opt: lr_scheduler.OneCycleLR(opt, max_lr=0.1, pct_start=0.2, total_steps=100,
                                                div_factor=10, final_div_factor=5))

        # For the first total_iters iterations the learning rate is lr_init*factor;
        # afterwards it returns to lr_init
        add(lambda opt: lr_scheduler.ConstantLR(opt, factor=0.5, total_iters=50))

        # Combine schedulers back to back: the schedule is split into segments
        # at milestones, with one scheduler per segment
        add(lambda opt: lr_scheduler.SequentialLR(opt, schedulers=[
            lr_scheduler.ExponentialLR(opt, gamma=0.9),
            lr_scheduler.StepLR(opt, step_size=30, gamma=0.5)], milestones=[50]))

        # Also combines several schedulers, but applies all of them at every step,
        # which gives a continuous curve
        add(lambda opt: lr_scheduler.ChainedScheduler([
            lr_scheduler.ExponentialLR(opt, gamma=0.99),
            lr_scheduler.StepLR(opt, step_size=30, gamma=0.5)]))

        # Custom lambda returns a multiplier on lr_init; here lr = lr_init * 1.0/(step+1)
        add(lambda opt: lr_scheduler.LambdaLR(opt, lr_lambda=lambda step: 1.0 / (step + 1)))

        # Custom lambda returns the factor applied to the previous rate; here lr[t] = 0.95*lr[t-1]
        add(lambda opt: lr_scheduler.MultiplicativeLR(opt, lr_lambda=lambda epoch: 0.95))

        # ReduceLROnPlateau lowers the learning rate when the monitored metric stops improving.
        # scheduler.step() must be given that metric (e.g. the loss), so it is not plotted here.
        # add(lambda opt: lr_scheduler.ReduceLROnPlateau(opt, mode='min', factor=0.5, patience=5,
        #     threshold=1e-4, threshold_mode='abs', cooldown=0, min_lr=0.001, eps=1e-8))

        # Step each scheduler 100 times and record the learning rate after every step
        learning_rates = []
        for opt, sch in schedulers:
            rates = []
            for _ in range(100):
                opt.step()
                sch.step()
                rates.append(sch.get_last_lr()[0])
            learning_rates.append(rates)

        # One subplot per scheduler
        numpy_rates = np.array(learning_rates)
        for i in range(numpy_rates.shape[0]):
            plt.subplot(4, 4, i + 1)
            plt.plot(numpy_rates[i, :])
        plt.show()
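`ReduceLROnPlateau` is not part of the plotting loop because its `step()` needs the monitored metric. The sketch below shows one way to drive it, using the same settings as the commented-out line. The loss values are a hypothetical sequence that improves for ten steps and then stays flat; they are not the output of a real training run.

```python
import torch
import torch.nn as nn
import torch.optim as optim

parameter = [nn.Parameter(torch.tensor([1.0, 2.0, 3.0]))]
optimizer = optim.SGD(parameter, lr=0.5)
scheduler = optim.lr_scheduler.ReduceLROnPlateau(
    optimizer, mode="min", factor=0.5, patience=5,
    threshold=1e-4, threshold_mode="abs", cooldown=0, min_lr=0.001)

# Hypothetical loss curve: improves for 10 steps, then plateaus at 0.1.
fake_losses = [1.0 / (step + 1) for step in range(10)] + [0.1] * 30

rates = []
for loss in fake_losses:
    optimizer.step()
    scheduler.step(loss)  # the monitored metric is passed to step()
    rates.append(optimizer.param_groups[0]["lr"])

print(rates[0], rates[-1])
```

The rate stays at 0.5 while the loss keeps improving. Once the loss has failed to improve for more than `patience` steps, the rate is multiplied by `factor`, and it never drops below `min_lr`.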

Running the script plots the learning rate curve of each scheduler in its own subplot.
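As a rough cross-check on the shapes of these curves, three of the schedules can be written in closed form with plain Python. The formulas follow the PyTorch documentation as I understand it, and the parameter values are the ones used in the script. `t` is the number of `scheduler.step()` calls so far, and the helper names are made up for this sketch.

```python
import math

LR_INIT = 0.5  # same initial learning rate as in the script


def step_lr(t, step_size=30, gamma=0.5):
    # StepLR: multiply by gamma once every step_size iterations.
    return LR_INIT * gamma ** (t // step_size)


def exponential_lr(t, gamma=0.9):
    # ExponentialLR: multiply by gamma at every iteration.
    return LR_INIT * gamma ** t


def cosine_annealing_lr(t, t_max=20, eta_min=0.1):
    # CosineAnnealingLR: half a cosine period takes the rate from
    # LR_INIT down to eta_min over t_max iterations.
    return eta_min + (LR_INIT - eta_min) * (1 + math.cos(math.pi * t / t_max)) / 2


for t in (0, 10, 20, 30, 60):
    print(t, step_lr(t), exponential_lr(t), cosine_annealing_lr(t))
```

With these settings the step schedule drops from 0.5 to 0.25 at iteration 30, and the cosine schedule reaches its minimum of 0.1 at iteration 20 before climbing back.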
