Unity Scriptable Render Pipeline (SRP)

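Of all the new Unity features, the most impressive is surely SRP, the Scriptable Render Pipeline. The lack of it, meaning a fixed built-in pipeline, is arguably the main reason Unity has struggled to deliver AAA games.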

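The CommandBuffer covered earlier can implement many visual effects, but it only extends the existing functionality. The scriptable render pipeline is the revolutionary part. As an analogy, it is the difference between someone who makes a new discovery inside a mathematical framework and the person who invented that framework.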

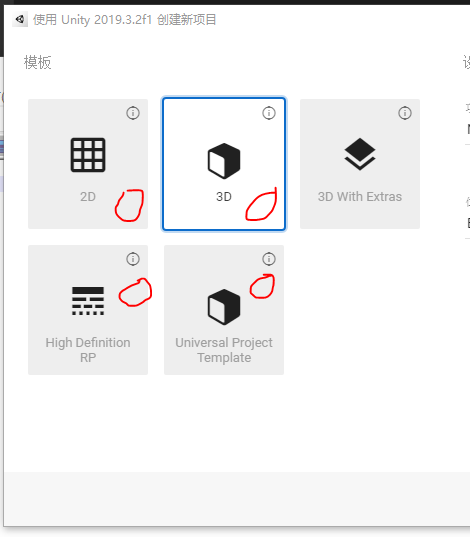

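SRP originally shipped in two flavors: the Lightweight Render Pipeline (LWRP) and the High Definition Render Pipeline (HDRP). LWRP has since been renamed the Universal Render Pipeline (URP). I don't know why; maybe "LW" sounded too low-end... As usual, let's set up a project first and look at the result. SRP projects have already parted ways with ordinary projects, which is visible right at project creation: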

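These pipelines are now separate options when creating a project. An earlier post described how to upgrade an ordinary project to HDRP: every material has to be converted to an HDRP material, and the conversion is irreversible. Here I create a URP project first. Some errors showed up during creation; I ignored them...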

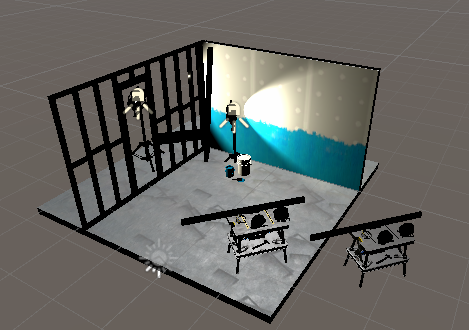

The project ships with some Prefabs. After dragging one into the scene, rendering fails:

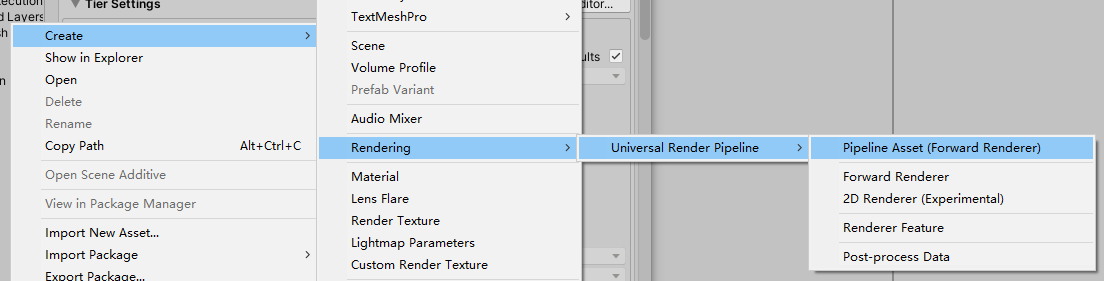

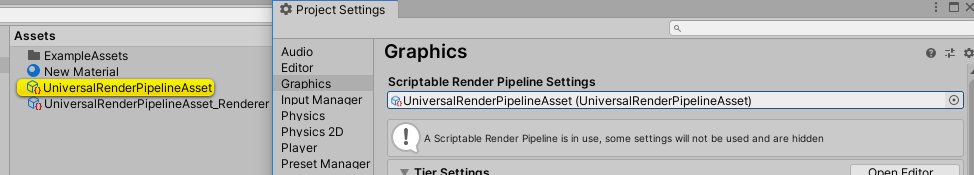

Whether you use URP or HDRP, you have to assign a RenderPipelineAsset yourself. Create one and assign it:

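Only then does the scene render correctly. So what is this RenderPipelineAsset? Inspecting it shows that it is just a set of options, such as whether to render a depth texture; nothing special.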
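The assignment can also be checked or made from a script. The following is only a minimal sketch, assuming Unity 2019.3 with the URP package installed; the class name `PipelineAssetCheck` and its `pipelineAsset` field are hypothetical names made up for illustration, while `GraphicsSettings.currentRenderPipeline` and `GraphicsSettings.renderPipelineAsset` are the engine properties involved.

```csharp
using UnityEngine;
using UnityEngine.Rendering;

// Hypothetical helper component (not part of URP): attach it to any GameObject.
public class PipelineAssetCheck : MonoBehaviour
{
    // Assumption: a UniversalRenderPipelineAsset created in the editor
    // is dragged onto this field in the inspector.
    public RenderPipelineAsset pipelineAsset;

    void Awake()
    {
        // currentRenderPipeline is null when no SRP asset is active,
        // i.e. Unity falls back to the built-in pipeline and URP materials fail to render.
        if (GraphicsSettings.currentRenderPipeline == null)
        {
            if (pipelineAsset == null)
            {
                Debug.LogWarning("No render pipeline asset is active and none was provided.");
                return;
            }

            // Same effect as assigning the asset in the Graphics settings.
            GraphicsSettings.renderPipelineAsset = pipelineAsset;
        }

        Debug.Log("Active pipeline asset: " + GraphicsSettings.currentRenderPipeline);
    }
}
```

Note that an asset assigned per quality level in QualitySettings takes precedence over the one in GraphicsSettings, as the `asset` property comment in the source pasted below also states.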

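The example I found targets an early version, and the API has changed in 2019.3, which I am testing with. Foreign sites are hard to reach right now, even GitHub, and the sample will not download, so let's copy the package source directly and see what is programmable in it. I will go through it slowly later...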

1. UniversalRenderPipelineCore.cs

using System; using System.Collections.Generic; using Unity.Collections; using UnityEngine.Scripting.APIUpdating; using UnityEngine.Experimental.GlobalIllumination; using UnityEngine.Experimental.Rendering; using Lightmapping = UnityEngine.Experimental.GlobalIllumination.Lightmapping; using UnityEngine.Rendering.LWRP; using UnityEngine.Rendering.Universal; namespace UnityEngine.Rendering.Universal { [MovedFrom("UnityEngine.Rendering.LWRP")] public enum MixedLightingSetup { None, ShadowMask, Subtractive, }; [MovedFrom("UnityEngine.Rendering.LWRP")] public struct RenderingData { public CullingResults cullResults; public CameraData cameraData; public LightData lightData; public ShadowData shadowData; public PostProcessingData postProcessingData; public bool supportsDynamicBatching; public PerObjectData perObjectData; /// <summary> /// True if post-processing effect is enabled while rendering the camera stack. /// </summary> public bool postProcessingEnabled; } [MovedFrom("UnityEngine.Rendering.LWRP")] public struct LightData { public int mainLightIndex; public int additionalLightsCount; public int maxPerObjectAdditionalLightsCount; public NativeArray<VisibleLight> visibleLights; public bool shadeAdditionalLightsPerVertex; public bool supportsMixedLighting; } [MovedFrom("UnityEngine.Rendering.LWRP")] public struct CameraData { // Internal camera data as we are not yet sure how to expose View in stereo context. // We might change this API soon. Matrix4x4 m_ViewMatrix; Matrix4x4 m_ProjectionMatrix; internal void SetViewAndProjectionMatrix(Matrix4x4 viewMatrix, Matrix4x4 projectionMatrix) { m_ViewMatrix = viewMatrix; m_ProjectionMatrix = projectionMatrix; } /// <summary> /// Returns the camera view matrix. /// </summary> /// <returns></returns> public Matrix4x4 GetViewMatrix() { return m_ViewMatrix; } /// <summary> /// Returns the camera projection matrix. 
/// </summary> /// <returns></returns> public Matrix4x4 GetProjectionMatrix() { return m_ProjectionMatrix; } /// <summary> /// Returns the camera GPU projection matrix. This contains platform specific changes to handle y-flip and reverse z. /// Similar to <c>GL.GetGPUProjectionMatrix</c> but queries URP internal state to know if the pipeline is rendering to render texture. /// For more info on platform differences regarding camera projection check: https://docs.unity3d.com/Manual/SL-PlatformDifferences.html /// </summary> /// <seealso cref="GL.GetGPUProjectionMatrix(Matrix4x4, bool)"/> /// <returns></returns> public Matrix4x4 GetGPUProjectionMatrix() { return GL.GetGPUProjectionMatrix(m_ProjectionMatrix, IsCameraProjectionMatrixFlipped()); } public Camera camera; public CameraRenderType renderType; public RenderTexture targetTexture; public RenderTextureDescriptor cameraTargetDescriptor; internal Rect pixelRect; internal int pixelWidth; internal int pixelHeight; internal float aspectRatio; public float renderScale; public bool clearDepth; public bool isSceneViewCamera; public bool isDefaultViewport; public bool isHdrEnabled; public bool requiresDepthTexture; public bool requiresOpaqueTexture; /// <summary> /// True if the camera device projection matrix is flipped. This happens when the pipeline is rendering /// to a render texture in non OpenGL platforms. If you are doing a custom Blit pass to copy camera textures /// (_CameraColorTexture, _CameraDepthAttachment) you need to check this flag to know if you should flip the /// matrix when rendering with for cmd.Draw* and reading from camera textures. /// </summary> public bool IsCameraProjectionMatrixFlipped() { // Users only have access to CameraData on URP rendering scope. The current renderer should never be null. 
var renderer = ScriptableRenderer.current; Debug.Assert(renderer != null, "IsCameraProjectionMatrixFlipped is being called outside camera rendering scope."); if(renderer != null) { bool renderingToTexture = renderer.cameraColorTarget != BuiltinRenderTextureType.CameraTarget || targetTexture != null; return SystemInfo.graphicsUVStartsAtTop && renderingToTexture; } return true; } public SortingCriteria defaultOpaqueSortFlags; public bool isStereoEnabled; internal int numberOfXRPasses; internal bool isXRMultipass; public float maxShadowDistance; public bool postProcessEnabled; public IEnumerator<Action<RenderTargetIdentifier, CommandBuffer>> captureActions; public LayerMask volumeLayerMask; public Transform volumeTrigger; public bool isStopNaNEnabled; public bool isDitheringEnabled; public AntialiasingMode antialiasing; public AntialiasingQuality antialiasingQuality; internal ScriptableRenderer renderer; /// <summary> /// True if this camera is resolving rendering to the final camera render target. /// When rendering a stack of cameras only the last camera in the stack will resolve to camera target. 
/// </summary> public bool resolveFinalTarget; } [MovedFrom("UnityEngine.Rendering.LWRP")] public struct ShadowData { public bool supportsMainLightShadows; public bool requiresScreenSpaceShadowResolve; public int mainLightShadowmapWidth; public int mainLightShadowmapHeight; public int mainLightShadowCascadesCount; public Vector3 mainLightShadowCascadesSplit; public bool supportsAdditionalLightShadows; public int additionalLightsShadowmapWidth; public int additionalLightsShadowmapHeight; public bool supportsSoftShadows; public int shadowmapDepthBufferBits; public List<Vector4> bias; } public static class ShaderPropertyId { public static readonly int scaledScreenParams = Shader.PropertyToID("_ScaledScreenParams"); public static readonly int worldSpaceCameraPos = Shader.PropertyToID("_WorldSpaceCameraPos"); public static readonly int screenParams = Shader.PropertyToID("_ScreenParams"); public static readonly int projectionParams = Shader.PropertyToID("_ProjectionParams"); public static readonly int zBufferParams = Shader.PropertyToID("_ZBufferParams"); public static readonly int orthoParams = Shader.PropertyToID("unity_OrthoParams"); public static readonly int viewMatrix = Shader.PropertyToID("unity_MatrixV"); public static readonly int projectionMatrix = Shader.PropertyToID("glstate_matrix_projection"); public static readonly int viewAndProjectionMatrix = Shader.PropertyToID("unity_MatrixVP"); public static readonly int inverseViewMatrix = Shader.PropertyToID("unity_MatrixInvV"); // Undefined: // public static readonly int inverseProjectionMatrix = Shader.PropertyToID("unity_MatrixInvP"); public static readonly int inverseViewAndProjectionMatrix = Shader.PropertyToID("unity_MatrixInvVP"); public static readonly int cameraProjectionMatrix = Shader.PropertyToID("unity_CameraProjection"); public static readonly int inverseCameraProjectionMatrix = Shader.PropertyToID("unity_CameraInvProjection"); public static readonly int worldToCameraMatrix = 
Shader.PropertyToID("unity_WorldToCamera"); public static readonly int cameraToWorldMatrix = Shader.PropertyToID("unity_CameraToWorld"); } public struct PostProcessingData { public ColorGradingMode gradingMode; public int lutSize; } class CameraDataComparer : IComparer<Camera> { public int Compare(Camera lhs, Camera rhs) { return (int)lhs.depth - (int)rhs.depth; } } public static class ShaderKeywordStrings { public static readonly string MainLightShadows = "_MAIN_LIGHT_SHADOWS"; public static readonly string MainLightShadowCascades = "_MAIN_LIGHT_SHADOWS_CASCADE"; public static readonly string AdditionalLightsVertex = "_ADDITIONAL_LIGHTS_VERTEX"; public static readonly string AdditionalLightsPixel = "_ADDITIONAL_LIGHTS"; public static readonly string AdditionalLightShadows = "_ADDITIONAL_LIGHT_SHADOWS"; public static readonly string SoftShadows = "_SHADOWS_SOFT"; public static readonly string MixedLightingSubtractive = "_MIXED_LIGHTING_SUBTRACTIVE"; public static readonly string DepthNoMsaa = "_DEPTH_NO_MSAA"; public static readonly string DepthMsaa2 = "_DEPTH_MSAA_2"; public static readonly string DepthMsaa4 = "_DEPTH_MSAA_4"; public static readonly string DepthMsaa8 = "_DEPTH_MSAA_8"; public static readonly string LinearToSRGBConversion = "_LINEAR_TO_SRGB_CONVERSION"; public static readonly string SmaaLow = "_SMAA_PRESET_LOW"; public static readonly string SmaaMedium = "_SMAA_PRESET_MEDIUM"; public static readonly string SmaaHigh = "_SMAA_PRESET_HIGH"; public static readonly string PaniniGeneric = "_GENERIC"; public static readonly string PaniniUnitDistance = "_UNIT_DISTANCE"; public static readonly string BloomLQ = "_BLOOM_LQ"; public static readonly string BloomHQ = "_BLOOM_HQ"; public static readonly string BloomLQDirt = "_BLOOM_LQ_DIRT"; public static readonly string BloomHQDirt = "_BLOOM_HQ_DIRT"; public static readonly string UseRGBM = "_USE_RGBM"; public static readonly string Distortion = "_DISTORTION"; public static readonly string ChromaticAberration = 
"_CHROMATIC_ABERRATION"; public static readonly string HDRGrading = "_HDR_GRADING"; public static readonly string TonemapACES = "_TONEMAP_ACES"; public static readonly string TonemapNeutral = "_TONEMAP_NEUTRAL"; public static readonly string FilmGrain = "_FILM_GRAIN"; public static readonly string Fxaa = "_FXAA"; public static readonly string Dithering = "_DITHERING"; public static readonly string HighQualitySampling = "_HIGH_QUALITY_SAMPLING"; } public sealed partial class UniversalRenderPipeline { static List<Vector4> m_ShadowBiasData = new List<Vector4>(); /// <summary> /// Checks if a camera is a game camera. /// </summary> /// <param name="camera">Camera to check state from.</param> /// <returns>true if given camera is a game camera, false otherwise.</returns> public static bool IsGameCamera(Camera camera) { if(camera == null) throw new ArgumentNullException("camera"); return camera.cameraType == CameraType.Game || camera.cameraType == CameraType.VR; } /// <summary> /// Checks if a camera is rendering in stereo mode. /// </summary> /// <param name="camera">Camera to check state from.</param> /// <returns>Returns true if the given camera is rendering in stereo mode, false otherwise.</returns> public static bool IsStereoEnabled(Camera camera) { if(camera == null) throw new ArgumentNullException("camera"); bool isGameCamera = IsGameCamera(camera); bool isCompatWithXRDimension = true; #if ENABLE_VR && ENABLE_VR_MODULE isCompatWithXRDimension &= (camera.targetTexture ? camera.targetTexture.dimension == UnityEngine.XR.XRSettings.deviceEyeTextureDimension : true); #endif return XRGraphics.enabled && isGameCamera && (camera.stereoTargetEye == StereoTargetEyeMask.Both) && isCompatWithXRDimension; } /// <summary> /// Returns the current render pipeline asset for the current quality setting. /// If no render pipeline asset is assigned in QualitySettings, then returns the one assigned in GraphicsSettings. 
/// </summary> public static UniversalRenderPipelineAsset asset { get => GraphicsSettings.currentRenderPipeline as UniversalRenderPipelineAsset; } /// <summary> /// Checks if a camera is rendering in MultiPass stereo mode. /// </summary> /// <param name="camera">Camera to check state from.</param> /// <returns>Returns true if the given camera is rendering in multi pass stereo mode, false otherwise.</returns> static bool IsMultiPassStereoEnabled(Camera camera) { if(camera == null) throw new ArgumentNullException("camera"); #if ENABLE_VR && ENABLE_VR_MODULE return IsStereoEnabled(camera) && XR.XRSettings.stereoRenderingMode == XR.XRSettings.StereoRenderingMode.MultiPass; #else return false; #endif } void SortCameras(Camera[] cameras) { if(cameras.Length <= 1) return; Array.Sort(cameras, new CameraDataComparer()); } static RenderTextureDescriptor CreateRenderTextureDescriptor(Camera camera, float renderScale, bool isStereoEnabled, bool isHdrEnabled, int msaaSamples, bool needsAlpha) { RenderTextureDescriptor desc; GraphicsFormat renderTextureFormatDefault = SystemInfo.GetGraphicsFormat(DefaultFormat.LDR); // NB: There's a weird case about XR and render texture // In test framework currently we render stereo tests to target texture // The descriptor in that case needs to be initialized from XR eyeTexture not render texture // Otherwise current tests will fail. Check: Do we need to update the test images instead? 
if(isStereoEnabled) { desc = XRGraphics.eyeTextureDesc; renderTextureFormatDefault = desc.graphicsFormat; } else if(camera.targetTexture == null) { desc = new RenderTextureDescriptor(camera.pixelWidth, camera.pixelHeight); desc.width = (int)((float)desc.width * renderScale); desc.height = (int)((float)desc.height * renderScale); } else { desc = camera.targetTexture.descriptor; } if(camera.targetTexture != null) { desc.colorFormat = camera.targetTexture.descriptor.colorFormat; desc.depthBufferBits = camera.targetTexture.descriptor.depthBufferBits; desc.msaaSamples = camera.targetTexture.descriptor.msaaSamples; desc.sRGB = camera.targetTexture.descriptor.sRGB; } else { bool use32BitHDR = !needsAlpha && RenderingUtils.SupportsGraphicsFormat(GraphicsFormat.B10G11R11_UFloatPack32, FormatUsage.Linear | FormatUsage.Render); GraphicsFormat hdrFormat = (use32BitHDR) ? GraphicsFormat.B10G11R11_UFloatPack32 : GraphicsFormat.R16G16B16A16_SFloat; desc.graphicsFormat = isHdrEnabled ? hdrFormat : renderTextureFormatDefault; desc.depthBufferBits = 32; desc.msaaSamples = msaaSamples; desc.sRGB = (QualitySettings.activeColorSpace == ColorSpace.Linear); } desc.enableRandomWrite = false; desc.bindMS = false; desc.useDynamicScale = camera.allowDynamicResolution; return desc; } static Lightmapping.RequestLightsDelegate lightsDelegate = (Light[] requests, NativeArray<LightDataGI> lightsOutput) => { // Editor only. 
#if UNITY_EDITOR LightDataGI lightData = new LightDataGI(); for(int i = 0; i < requests.Length; i++) { Light light = requests[i]; switch(light.type) { case LightType.Directional: DirectionalLight directionalLight = new DirectionalLight(); LightmapperUtils.Extract(light, ref directionalLight); lightData.Init(ref directionalLight); break; case LightType.Point: PointLight pointLight = new PointLight(); LightmapperUtils.Extract(light, ref pointLight); lightData.Init(ref pointLight); break; case LightType.Spot: SpotLight spotLight = new SpotLight(); LightmapperUtils.Extract(light, ref spotLight); spotLight.innerConeAngle = light.innerSpotAngle * Mathf.Deg2Rad; spotLight.angularFalloff = AngularFalloffType.AnalyticAndInnerAngle; lightData.Init(ref spotLight); break; case LightType.Area: RectangleLight rectangleLight = new RectangleLight(); LightmapperUtils.Extract(light, ref rectangleLight); rectangleLight.mode = LightMode.Baked; lightData.Init(ref rectangleLight); break; case LightType.Disc: DiscLight discLight = new DiscLight(); LightmapperUtils.Extract(light, ref discLight); discLight.mode = LightMode.Baked; lightData.Init(ref discLight); break; default: lightData.InitNoBake(light.GetInstanceID()); break; } lightData.falloff = FalloffType.InverseSquared; lightsOutput[i] = lightData; } #else LightDataGI lightData = new LightDataGI(); for (int i = 0; i < requests.Length; i++) { Light light = requests[i]; lightData.InitNoBake(light.GetInstanceID()); lightsOutput[i] = lightData; } #endif }; } internal enum URPProfileId { StopNaNs, SMAA, GaussianDepthOfField, BokehDepthOfField, MotionBlur, PaniniProjection, UberPostProcess, Bloom, } }
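The structs in this file (`RenderingData`, `CameraData`, `LightData`, and so on) are what URP passes to user code each frame. As a rough illustration of how they are consumed, here is a minimal sketch of a renderer feature that only reads a few of those fields. It assumes the URP 7.x API that ships with 2019.3 (`ScriptableRendererFeature`, `ScriptableRenderPass`, `RenderPassEvent`); the names `LogRenderingDataFeature` and `LogPass` are hypothetical, and logging every frame is only for demonstration.

```csharp
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Rendering.Universal;

// Hypothetical feature: add it to the Forward Renderer asset in the inspector.
public class LogRenderingDataFeature : ScriptableRendererFeature
{
    // Hypothetical pass that draws nothing and only inspects RenderingData.
    class LogPass : ScriptableRenderPass
    {
        public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
        {
            CameraData cameraData = renderingData.cameraData;
            LightData lightData = renderingData.lightData;

            // Skip the scene view camera to keep the console readable.
            if (cameraData.isSceneViewCamera)
                return;

            Debug.Log(string.Format(
                "{0}: HDR={1}, renderScale={2}, mainLightIndex={3}, additionalLights={4}",
                cameraData.camera.name,
                cameraData.isHdrEnabled,
                cameraData.renderScale,
                lightData.mainLightIndex,
                lightData.additionalLightsCount));
        }
    }

    LogPass m_Pass;

    // Called when the feature is created or its settings change.
    public override void Create()
    {
        m_Pass = new LogPass { renderPassEvent = RenderPassEvent.AfterRenderingOpaques };
    }

    // Called once per camera; enqueues the pass into that camera's renderer.
    public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)
    {
        renderer.EnqueuePass(m_Pass);
    }
}
```

The point of the sketch is that a custom pass never builds these structs itself: the pipeline fills them per camera and hands them over by reference.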

2. UniversalRenderPipeline.cs

using System; using Unity.Collections; using System.Collections.Generic; #if UNITY_EDITOR using UnityEditor; using UnityEditor.Rendering.Universal; #endif using UnityEngine.Scripting.APIUpdating; using Lightmapping = UnityEngine.Experimental.GlobalIllumination.Lightmapping; namespace UnityEngine.Rendering.LWRP { [Obsolete("LWRP -> Universal (UnityUpgradable) -> UnityEngine.Rendering.Universal.UniversalRenderPipeline", true)] public class LightweightRenderPipeline { public LightweightRenderPipeline(LightweightRenderPipelineAsset asset) { } } } namespace UnityEngine.Rendering.Universal { public sealed partial class UniversalRenderPipeline : RenderPipeline { internal static class PerFrameBuffer { public static int _GlossyEnvironmentColor; public static int _SubtractiveShadowColor; public static int _Time; public static int _SinTime; public static int _CosTime; public static int unity_DeltaTime; public static int _TimeParameters; } public const string k_ShaderTagName = "UniversalPipeline"; const string k_RenderCameraTag = "Render Camera"; static ProfilingSampler _CameraProfilingSampler = new ProfilingSampler(k_RenderCameraTag); public static float maxShadowBias { get => 10.0f; } public static float minRenderScale { get => 0.1f; } public static float maxRenderScale { get => 2.0f; } // Amount of Lights that can be shaded per object (in the for loop in the shader) public static int maxPerObjectLights { // No support to bitfield mask and int[] in gles2. Can't index fast more than 4 lights. // Check Lighting.hlsl for more details. get => (SystemInfo.graphicsDeviceType == GraphicsDeviceType.OpenGLES2) ? 4 : 8; } // These limits have to match same limits in Input.hlsl const int k_MaxVisibleAdditionalLightsMobile = 32; const int k_MaxVisibleAdditionalLightsNonMobile = 256; public static int maxVisibleAdditionalLights { get { return (Application.isMobilePlatform || SystemInfo.graphicsDeviceType == GraphicsDeviceType.OpenGLCore) ? 
k_MaxVisibleAdditionalLightsMobile : k_MaxVisibleAdditionalLightsNonMobile; } } // Internal max count for how many ScriptableRendererData can be added to a single Universal RP asset internal static int maxScriptableRenderers { get => 8; } public UniversalRenderPipeline(UniversalRenderPipelineAsset asset) { SetSupportedRenderingFeatures(); PerFrameBuffer._GlossyEnvironmentColor = Shader.PropertyToID("_GlossyEnvironmentColor"); PerFrameBuffer._SubtractiveShadowColor = Shader.PropertyToID("_SubtractiveShadowColor"); PerFrameBuffer._Time = Shader.PropertyToID("_Time"); PerFrameBuffer._SinTime = Shader.PropertyToID("_SinTime"); PerFrameBuffer._CosTime = Shader.PropertyToID("_CosTime"); PerFrameBuffer.unity_DeltaTime = Shader.PropertyToID("unity_DeltaTime"); PerFrameBuffer._TimeParameters = Shader.PropertyToID("_TimeParameters"); // Let engine know we have MSAA on for cases where we support MSAA backbuffer if(QualitySettings.antiAliasing != asset.msaaSampleCount) { QualitySettings.antiAliasing = asset.msaaSampleCount; #if ENABLE_VR && ENABLE_VR_MODULE XR.XRDevice.UpdateEyeTextureMSAASetting(); #endif } #if ENABLE_VR && ENABLE_VR_MODULE XRGraphics.eyeTextureResolutionScale = asset.renderScale; #endif // For compatibility reasons we also match old LightweightPipeline tag. 
Shader.globalRenderPipeline = "UniversalPipeline,LightweightPipeline"; Lightmapping.SetDelegate(lightsDelegate); CameraCaptureBridge.enabled = true; RenderingUtils.ClearSystemInfoCache(); } protected override void Dispose(bool disposing) { base.Dispose(disposing); Shader.globalRenderPipeline = ""; SupportedRenderingFeatures.active = new SupportedRenderingFeatures(); ShaderData.instance.Dispose(); #if UNITY_EDITOR SceneViewDrawMode.ResetDrawMode(); #endif Lightmapping.ResetDelegate(); CameraCaptureBridge.enabled = false; } protected override void Render(ScriptableRenderContext renderContext, Camera[] cameras) { BeginFrameRendering(renderContext, cameras); GraphicsSettings.lightsUseLinearIntensity = (QualitySettings.activeColorSpace == ColorSpace.Linear); GraphicsSettings.useScriptableRenderPipelineBatching = asset.useSRPBatcher; SetupPerFrameShaderConstants(); SortCameras(cameras); for(int i = 0; i < cameras.Length; ++i) { var camera = cameras[i]; if(IsGameCamera(camera)) { RenderCameraStack(renderContext, camera); } else { BeginCameraRendering(renderContext, camera); #if VISUAL_EFFECT_GRAPH_0_0_1_OR_NEWER //It should be called before culling to prepare material. When there isn't any VisualEffect component, this method has no effect. VFX.VFXManager.PrepareCamera(camera); #endif UpdateVolumeFramework(camera, null); RenderSingleCamera(renderContext, camera); EndCameraRendering(renderContext, camera); } } EndFrameRendering(renderContext, cameras); } /// <summary> /// Standalone camera rendering. Use this to render procedural cameras. /// This method doesn't call <c>BeginCameraRendering</c> and <c>EndCameraRendering</c> callbacks. 
/// </summary> /// <param name="context">Render context used to record commands during execution.</param> /// <param name="camera">Camera to render.</param> /// <seealso cref="ScriptableRenderContext"/> public static void RenderSingleCamera(ScriptableRenderContext context, Camera camera) { UniversalAdditionalCameraData additionalCameraData = null; if(IsGameCamera(camera)) camera.gameObject.TryGetComponent(out additionalCameraData); if(additionalCameraData != null && additionalCameraData.renderType != CameraRenderType.Base) { Debug.LogWarning("Only Base cameras can be rendered with standalone RenderSingleCamera. Camera will be skipped."); return; } InitializeCameraData(camera, additionalCameraData, true, out var cameraData); RenderSingleCamera(context, cameraData, cameraData.postProcessEnabled); } /// <summary> /// Renders a single camera. This method will do culling, setup and execution of the renderer. /// </summary> /// <param name="context">Render context used to record commands during execution.</param> /// <param name="cameraData">Camera rendering data. This might contain data inherited from a base camera.</param> /// <param name="anyPostProcessingEnabled">True if at least one camera has post-processing enabled in the stack, false otherwise.</param> static void RenderSingleCamera(ScriptableRenderContext context, CameraData cameraData, bool anyPostProcessingEnabled) { Camera camera = cameraData.camera; var renderer = cameraData.renderer; if(renderer == null) { Debug.LogWarning(string.Format("Trying to render {0} with an invalid renderer. Camera rendering will be skipped.", camera.name)); return; } if(!camera.TryGetCullingParameters(IsStereoEnabled(camera), out var cullingParameters)) return; ScriptableRenderer.current = renderer; ProfilingSampler sampler = (asset.debugLevel >= PipelineDebugLevel.Profiling) ? 
new ProfilingSampler(camera.name) : _CameraProfilingSampler; CommandBuffer cmd = CommandBufferPool.Get(sampler.name); using(new ProfilingScope(cmd, sampler)) { renderer.Clear(cameraData.renderType); renderer.SetupCullingParameters(ref cullingParameters, ref cameraData); context.ExecuteCommandBuffer(cmd); cmd.Clear(); #if UNITY_EDITOR // Emit scene view UI if(cameraData.isSceneViewCamera) { ScriptableRenderContext.EmitWorldGeometryForSceneView(camera); } #endif var cullResults = context.Cull(ref cullingParameters); InitializeRenderingData(asset, ref cameraData, ref cullResults, anyPostProcessingEnabled, out var renderingData); renderer.Setup(context, ref renderingData); renderer.Execute(context, ref renderingData); } context.ExecuteCommandBuffer(cmd); CommandBufferPool.Release(cmd); context.Submit(); ScriptableRenderer.current = null; } /// <summary> // Renders a camera stack. This method calls RenderSingleCamera for each valid camera in the stack. // The last camera resolves the final target to screen. /// </summary> /// <param name="context">Render context used to record commands during execution.</param> /// <param name="camera">Camera to render.</param> static void RenderCameraStack(ScriptableRenderContext context, Camera baseCamera) { baseCamera.TryGetComponent<UniversalAdditionalCameraData>(out var baseCameraAdditionalData); // Overlay cameras will be rendered stacked while rendering base cameras if(baseCameraAdditionalData != null && baseCameraAdditionalData.renderType == CameraRenderType.Overlay) return; // renderer contains a stack if it has additional data and the renderer supports stacking var renderer = baseCameraAdditionalData?.scriptableRenderer; bool supportsCameraStacking = renderer != null && renderer.supportedRenderingFeatures.cameraStacking; List<Camera> cameraStack = (supportsCameraStacking) ? 
baseCameraAdditionalData?.cameraStack : null; bool anyPostProcessingEnabled = baseCameraAdditionalData != null && baseCameraAdditionalData.renderPostProcessing; // We need to know the last active camera in the stack to be able to resolve // rendering to screen when rendering it. The last camera in the stack is not // necessarily the last active one as it users might disable it. int lastActiveOverlayCameraIndex = -1; if(cameraStack != null) { // TODO: Add support to camera stack in VR multi pass mode if(!IsMultiPassStereoEnabled(baseCamera)) { var baseCameraRendererType = baseCameraAdditionalData?.scriptableRenderer.GetType(); for(int i = 0; i < cameraStack.Count; ++i) { Camera currCamera = cameraStack[i]; if(currCamera != null && currCamera.isActiveAndEnabled) { currCamera.TryGetComponent<UniversalAdditionalCameraData>(out var data); if(data == null || data.renderType != CameraRenderType.Overlay) { Debug.LogWarning(string.Format("Stack can only contain Overlay cameras. {0} will skip rendering.", currCamera.name)); } else if(data?.scriptableRenderer.GetType() != baseCameraRendererType) { Debug.LogWarning(string.Format("Only cameras with the same renderer type as the base camera can be stacked. {0} will skip rendering", currCamera.name)); } else { anyPostProcessingEnabled |= data.renderPostProcessing; lastActiveOverlayCameraIndex = i; } } } } else { Debug.LogWarning("Multi pass stereo mode doesn't support Camera Stacking. Overlay cameras will skip rendering."); } } bool isStackedRendering = lastActiveOverlayCameraIndex != -1; BeginCameraRendering(context, baseCamera); #if VISUAL_EFFECT_GRAPH_0_0_1_OR_NEWER //It should be called before culling to prepare material. When there isn't any VisualEffect component, this method has no effect. 
VFX.VFXManager.PrepareCamera(baseCamera); #endif UpdateVolumeFramework(baseCamera, baseCameraAdditionalData); InitializeCameraData(baseCamera, baseCameraAdditionalData, !isStackedRendering, out var baseCameraData); RenderSingleCamera(context, baseCameraData, anyPostProcessingEnabled); EndCameraRendering(context, baseCamera); if(!isStackedRendering) return; for(int i = 0; i < cameraStack.Count; ++i) { var currCamera = cameraStack[i]; if(!currCamera.isActiveAndEnabled) continue; currCamera.TryGetComponent<UniversalAdditionalCameraData>(out var currCameraData); // Camera is overlay and enabled if(currCameraData != null) { // Copy base settings from base camera data and initialize initialize remaining specific settings for this camera type. CameraData overlayCameraData = baseCameraData; bool lastCamera = i == lastActiveOverlayCameraIndex; BeginCameraRendering(context, currCamera); #if VISUAL_EFFECT_GRAPH_0_0_1_OR_NEWER //It should be called before culling to prepare material. When there isn't any VisualEffect component, this method has no effect. VFX.VFXManager.PrepareCamera(currCamera); #endif UpdateVolumeFramework(currCamera, currCameraData); InitializeAdditionalCameraData(currCamera, currCameraData, lastCamera, ref overlayCameraData); RenderSingleCamera(context, overlayCameraData, anyPostProcessingEnabled); EndCameraRendering(context, currCamera); } } } static void UpdateVolumeFramework(Camera camera, UniversalAdditionalCameraData additionalCameraData) { // Default values when there's no additional camera data available LayerMask layerMask = 1; // "Default" Transform trigger = camera.transform; if(additionalCameraData != null) { layerMask = additionalCameraData.volumeLayerMask; trigger = additionalCameraData.volumeTrigger != null ? 
                    additionalCameraData.volumeTrigger : trigger;
            }
            else if(camera.cameraType == CameraType.SceneView)
            {
                // Try to mirror the MainCamera volume layer mask for the scene view - do not mirror the target
                var mainCamera = Camera.main;
                UniversalAdditionalCameraData mainAdditionalCameraData = null;

                if(mainCamera != null && mainCamera.TryGetComponent(out mainAdditionalCameraData))
                    layerMask = mainAdditionalCameraData.volumeLayerMask;

                trigger = mainAdditionalCameraData != null && mainAdditionalCameraData.volumeTrigger != null ? mainAdditionalCameraData.volumeTrigger : trigger;
            }

            VolumeManager.instance.Update(trigger, layerMask);
        }

        static bool CheckPostProcessForDepth(in CameraData cameraData)
        {
            if(!cameraData.postProcessEnabled)
                return false;

            if(cameraData.antialiasing == AntialiasingMode.SubpixelMorphologicalAntiAliasing)
                return true;

            var stack = VolumeManager.instance.stack;

            if(stack.GetComponent<DepthOfField>().IsActive())
                return true;

            if(stack.GetComponent<MotionBlur>().IsActive())
                return true;

            return false;
        }

        static void SetSupportedRenderingFeatures()
        {
        #if UNITY_EDITOR
            SupportedRenderingFeatures.active = new SupportedRenderingFeatures()
            {
                reflectionProbeModes = SupportedRenderingFeatures.ReflectionProbeModes.None,
                defaultMixedLightingModes = SupportedRenderingFeatures.LightmapMixedBakeModes.Subtractive,
                mixedLightingModes = SupportedRenderingFeatures.LightmapMixedBakeModes.Subtractive | SupportedRenderingFeatures.LightmapMixedBakeModes.IndirectOnly,
                lightmapBakeTypes = LightmapBakeType.Baked | LightmapBakeType.Mixed,
                lightmapsModes = LightmapsMode.CombinedDirectional | LightmapsMode.NonDirectional,
                lightProbeProxyVolumes = false,
                motionVectors = false,
                receiveShadows = false,
                reflectionProbes = true
            };
            SceneViewDrawMode.SetupDrawMode();
        #endif
        }

        static void InitializeCameraData(Camera camera, UniversalAdditionalCameraData additionalCameraData, bool resolveFinalTarget, out CameraData cameraData)
        {
            cameraData = new CameraData();
            InitializeStackedCameraData(camera, additionalCameraData, ref cameraData);
            InitializeAdditionalCameraData(camera, additionalCameraData, resolveFinalTarget, ref cameraData);
        }

        /// <summary>
        /// Initialize camera data settings common for all cameras in the stack. Overlay cameras will inherit
        /// settings from base camera.
        /// </summary>
        /// <param name="baseCamera">Base camera to inherit settings from.</param>
        /// <param name="baseAdditionalCameraData">Component that contains additional base camera data.</param>
        /// <param name="cameraData">Camera data to initialize setttings.</param>
        static void InitializeStackedCameraData(Camera baseCamera, UniversalAdditionalCameraData baseAdditionalCameraData, ref CameraData cameraData)
        {
            var settings = asset;
            cameraData.targetTexture = baseCamera.targetTexture;
            cameraData.isStereoEnabled = IsStereoEnabled(baseCamera);
            cameraData.isSceneViewCamera = baseCamera.cameraType == CameraType.SceneView;

            cameraData.numberOfXRPasses = 1;
            cameraData.isXRMultipass = false;

        #if ENABLE_VR && ENABLE_VR_MODULE
            if (cameraData.isStereoEnabled && !cameraData.isSceneViewCamera && XR.XRSettings.stereoRenderingMode == XR.XRSettings.StereoRenderingMode.MultiPass)
            {
                cameraData.numberOfXRPasses = 2;
                cameraData.isXRMultipass = true;
            }
        #endif

            ///////////////////////////////////////////////////////////////////
            // Environment and Post-processing settings                       /
            ///////////////////////////////////////////////////////////////////
            if(cameraData.isSceneViewCamera)
            {
                cameraData.volumeLayerMask = 1; // "Default"
                cameraData.volumeTrigger = null;
                cameraData.isStopNaNEnabled = false;
                cameraData.isDitheringEnabled = false;
                cameraData.antialiasing = AntialiasingMode.None;
                cameraData.antialiasingQuality = AntialiasingQuality.High;
            }
            else if(baseAdditionalCameraData != null)
            {
                cameraData.volumeLayerMask = baseAdditionalCameraData.volumeLayerMask;
                cameraData.volumeTrigger = baseAdditionalCameraData.volumeTrigger == null ? baseCamera.transform : baseAdditionalCameraData.volumeTrigger;
                cameraData.isStopNaNEnabled = baseAdditionalCameraData.stopNaN && SystemInfo.graphicsShaderLevel >= 35;
                cameraData.isDitheringEnabled = baseAdditionalCameraData.dithering;
                cameraData.antialiasing = baseAdditionalCameraData.antialiasing;
                cameraData.antialiasingQuality = baseAdditionalCameraData.antialiasingQuality;
            }
            else
            {
                cameraData.volumeLayerMask = 1; // "Default"
                cameraData.volumeTrigger = null;
                cameraData.isStopNaNEnabled = false;
                cameraData.isDitheringEnabled = false;
                cameraData.antialiasing = AntialiasingMode.None;
                cameraData.antialiasingQuality = AntialiasingQuality.High;
            }

            ///////////////////////////////////////////////////////////////////
            // Settings that control output of the camera                     /
            ///////////////////////////////////////////////////////////////////
            int msaaSamples = 1;
            if(baseCamera.allowMSAA && settings.msaaSampleCount > 1)
                msaaSamples = (baseCamera.targetTexture != null) ? baseCamera.targetTexture.antiAliasing : settings.msaaSampleCount;

            cameraData.isHdrEnabled = baseCamera.allowHDR && settings.supportsHDR;

            Rect cameraRect = baseCamera.rect;
            cameraData.pixelRect = baseCamera.pixelRect;
            cameraData.pixelWidth = baseCamera.pixelWidth;
            cameraData.pixelHeight = baseCamera.pixelHeight;
            cameraData.aspectRatio = (float)cameraData.pixelWidth / (float)cameraData.pixelHeight;
            cameraData.isDefaultViewport = (!(Math.Abs(cameraRect.x) > 0.0f || Math.Abs(cameraRect.y) > 0.0f ||
                Math.Abs(cameraRect.width) < 1.0f || Math.Abs(cameraRect.height) < 1.0f));

            // If XR is enabled, use XR renderScale.
            // Discard variations lesser than kRenderScaleThreshold.
            // Scale is only enabled for gameview.
            const float kRenderScaleThreshold = 0.05f;
            float usedRenderScale = XRGraphics.enabled ? XRGraphics.eyeTextureResolutionScale : settings.renderScale;
            cameraData.renderScale = (Mathf.Abs(1.0f - usedRenderScale) < kRenderScaleThreshold) ? 1.0f : usedRenderScale;

            var commonOpaqueFlags = SortingCriteria.CommonOpaque;
            var noFrontToBackOpaqueFlags = SortingCriteria.SortingLayer | SortingCriteria.RenderQueue | SortingCriteria.OptimizeStateChanges | SortingCriteria.CanvasOrder;
            bool hasHSRGPU = SystemInfo.hasHiddenSurfaceRemovalOnGPU;
            bool canSkipFrontToBackSorting = (baseCamera.opaqueSortMode == OpaqueSortMode.Default && hasHSRGPU) || baseCamera.opaqueSortMode == OpaqueSortMode.NoDistanceSort;

            cameraData.defaultOpaqueSortFlags = canSkipFrontToBackSorting ? noFrontToBackOpaqueFlags : commonOpaqueFlags;
            cameraData.captureActions = CameraCaptureBridge.GetCaptureActions(baseCamera);

            bool needsAlphaChannel = Graphics.preserveFramebufferAlpha;
            cameraData.cameraTargetDescriptor = CreateRenderTextureDescriptor(baseCamera, cameraData.renderScale,
                cameraData.isStereoEnabled, cameraData.isHdrEnabled, msaaSamples, needsAlphaChannel);
        }

        /// <summary>
        /// Initialize settings that can be different for each camera in the stack.
        /// </summary>
        /// <param name="camera">Camera to initialize settings from.</param>
        /// <param name="additionalCameraData">Additional camera data component to initialize settings from.</param>
        /// <param name="resolveFinalTarget">True if this is the last camera in the stack and rendering should resolve to camera target.</param>
        /// <param name="cameraData">Settings to be initilized.</param>
        static void InitializeAdditionalCameraData(Camera camera, UniversalAdditionalCameraData additionalCameraData, bool resolveFinalTarget, ref CameraData cameraData)
        {
            var settings = asset;
            cameraData.camera = camera;

            bool anyShadowsEnabled = settings.supportsMainLightShadows || settings.supportsAdditionalLightShadows;
            cameraData.maxShadowDistance = Mathf.Min(settings.shadowDistance, camera.farClipPlane);
            cameraData.maxShadowDistance = (anyShadowsEnabled && cameraData.maxShadowDistance >= camera.nearClipPlane) ? cameraData.maxShadowDistance : 0.0f;

            if(cameraData.isSceneViewCamera)
            {
                cameraData.renderType = CameraRenderType.Base;
                cameraData.clearDepth = true;
                cameraData.postProcessEnabled = CoreUtils.ArePostProcessesEnabled(camera);
                cameraData.requiresDepthTexture = settings.supportsCameraDepthTexture;
                cameraData.requiresOpaqueTexture = settings.supportsCameraOpaqueTexture;
                cameraData.renderer = asset.scriptableRenderer;
            }
            else if(additionalCameraData != null)
            {
                cameraData.renderType = additionalCameraData.renderType;
                cameraData.clearDepth = (additionalCameraData.renderType != CameraRenderType.Base) ? additionalCameraData.clearDepth : true;
                cameraData.postProcessEnabled = additionalCameraData.renderPostProcessing;
                cameraData.maxShadowDistance = (additionalCameraData.renderShadows) ? cameraData.maxShadowDistance : 0.0f;
                cameraData.requiresDepthTexture = additionalCameraData.requiresDepthTexture;
                cameraData.requiresOpaqueTexture = additionalCameraData.requiresColorTexture;
                cameraData.renderer = additionalCameraData.scriptableRenderer;
            }
            else
            {
                cameraData.renderType = CameraRenderType.Base;
                cameraData.clearDepth = true;
                cameraData.postProcessEnabled = false;
                cameraData.requiresDepthTexture = settings.supportsCameraDepthTexture;
                cameraData.requiresOpaqueTexture = settings.supportsCameraOpaqueTexture;
                cameraData.renderer = asset.scriptableRenderer;
            }

            // Disable depth and color copy. We should add it in the renderer instead to avoid performance pitfalls
            // of camera stacking breaking render pass execution implicitly.
            bool isOverlayCamera = (cameraData.renderType == CameraRenderType.Overlay);
            if(isOverlayCamera)
            {
                cameraData.requiresDepthTexture = false;
                cameraData.requiresOpaqueTexture = false;
            }

            // Disables post if GLes2
            cameraData.postProcessEnabled &= SystemInfo.graphicsDeviceType != GraphicsDeviceType.OpenGLES2;

            cameraData.requiresDepthTexture |= cameraData.isSceneViewCamera || CheckPostProcessForDepth(cameraData);
            cameraData.resolveFinalTarget = resolveFinalTarget;

            Matrix4x4 projectionMatrix = camera.projectionMatrix;

            // Overlay cameras inherit viewport from base.
            // If the viewport is different between them we might need to patch the projection to adjust aspect ratio
            // matrix to prevent squishing when rendering objects in overlay cameras.
            if(isOverlayCamera && !camera.orthographic && !cameraData.isStereoEnabled && cameraData.pixelRect != camera.pixelRect)
            {
                // m00 = (cotangent / aspect), therefore m00 * aspect gives us cotangent.
                float cotangent = camera.projectionMatrix.m00 * camera.aspect;

                // Get new m00 by dividing by base camera aspectRatio.
                float newCotangent = cotangent / cameraData.aspectRatio;
                projectionMatrix.m00 = newCotangent;
            }

            cameraData.SetViewAndProjectionMatrix(camera.worldToCameraMatrix, projectionMatrix);
        }

        static void InitializeRenderingData(UniversalRenderPipelineAsset settings, ref CameraData cameraData, ref CullingResults cullResults,
            bool anyPostProcessingEnabled, out RenderingData renderingData)
        {
            var visibleLights = cullResults.visibleLights;

            int mainLightIndex = GetMainLightIndex(settings, visibleLights);
            bool mainLightCastShadows = false;
            bool additionalLightsCastShadows = false;

            if(cameraData.maxShadowDistance > 0.0f)
            {
                mainLightCastShadows = (mainLightIndex != -1 && visibleLights[mainLightIndex].light != null &&
                    visibleLights[mainLightIndex].light.shadows != LightShadows.None);

                // If additional lights are shaded per-pixel they cannot cast shadows
                if(settings.additionalLightsRenderingMode == LightRenderingMode.PerPixel)
                {
                    for(int i = 0; i < visibleLights.Length; ++i)
                    {
                        if(i == mainLightIndex)
                            continue;

                        Light light = visibleLights[i].light;

                        // UniversalRP doesn't support additional directional lights or point light shadows yet
                        if(visibleLights[i].lightType == LightType.Spot && light != null && light.shadows != LightShadows.None)
                        {
                            additionalLightsCastShadows = true;
                            break;
                        }
                    }
                }
            }

            renderingData.cullResults = cullResults;
            renderingData.cameraData = cameraData;
            InitializeLightData(settings, visibleLights, mainLightIndex, out renderingData.lightData);
            InitializeShadowData(settings, visibleLights, mainLightCastShadows, additionalLightsCastShadows && !renderingData.lightData.shadeAdditionalLightsPerVertex, out renderingData.shadowData);
            InitializePostProcessingData(settings, out renderingData.postProcessingData);
            renderingData.supportsDynamicBatching = settings.supportsDynamicBatching;
            renderingData.perObjectData = GetPerObjectLightFlags(renderingData.lightData.additionalLightsCount);

            bool isOffscreenCamera = cameraData.targetTexture != null && !cameraData.isSceneViewCamera;
            renderingData.postProcessingEnabled = anyPostProcessingEnabled;
        }

        static void InitializeShadowData(UniversalRenderPipelineAsset settings, NativeArray<VisibleLight> visibleLights, bool mainLightCastShadows, bool additionalLightsCastShadows, out ShadowData shadowData)
        {
            m_ShadowBiasData.Clear();

            for(int i = 0; i < visibleLights.Length; ++i)
            {
                Light light = visibleLights[i].light;
                UniversalAdditionalLightData data = null;
                if(light != null)
                {
                    light.gameObject.TryGetComponent(out data);
                }

                if(data && !data.usePipelineSettings)
                    m_ShadowBiasData.Add(new Vector4(light.shadowBias, light.shadowNormalBias, 0.0f, 0.0f));
                else
                    m_ShadowBiasData.Add(new Vector4(settings.shadowDepthBias, settings.shadowNormalBias, 0.0f, 0.0f));
            }

            shadowData.bias = m_ShadowBiasData;
            shadowData.supportsMainLightShadows = SystemInfo.supportsShadows && settings.supportsMainLightShadows && mainLightCastShadows;

            // We no longer use screen space shadows in URP.
            // This change allows us to have particles & transparent objects receive shadows.
            shadowData.requiresScreenSpaceShadowResolve = false;// shadowData.supportsMainLightShadows && supportsScreenSpaceShadows && settings.shadowCascadeOption != ShadowCascadesOption.NoCascades;

            int shadowCascadesCount;
            switch(settings.shadowCascadeOption)
            {
                case ShadowCascadesOption.FourCascades:
                    shadowCascadesCount = 4;
                    break;

                case ShadowCascadesOption.TwoCascades:
                    shadowCascadesCount = 2;
                    break;

                default:
                    shadowCascadesCount = 1;
                    break;
            }

            shadowData.mainLightShadowCascadesCount = shadowCascadesCount;//(shadowData.requiresScreenSpaceShadowResolve) ? shadowCascadesCount : 1;
            shadowData.mainLightShadowmapWidth = settings.mainLightShadowmapResolution;
            shadowData.mainLightShadowmapHeight = settings.mainLightShadowmapResolution;

            switch(shadowData.mainLightShadowCascadesCount)
            {
                case 1:
                    shadowData.mainLightShadowCascadesSplit = new Vector3(1.0f, 0.0f, 0.0f);
                    break;

                case 2:
                    shadowData.mainLightShadowCascadesSplit = new Vector3(settings.cascade2Split, 1.0f, 0.0f);
                    break;

                default:
                    shadowData.mainLightShadowCascadesSplit = settings.cascade4Split;
                    break;
            }

            shadowData.supportsAdditionalLightShadows = SystemInfo.supportsShadows && settings.supportsAdditionalLightShadows && additionalLightsCastShadows;
            shadowData.additionalLightsShadowmapWidth = shadowData.additionalLightsShadowmapHeight = settings.additionalLightsShadowmapResolution;
            shadowData.supportsSoftShadows = settings.supportsSoftShadows && (shadowData.supportsMainLightShadows || shadowData.supportsAdditionalLightShadows);
            shadowData.shadowmapDepthBufferBits = 16;
        }

        static void InitializePostProcessingData(UniversalRenderPipelineAsset settings, out PostProcessingData postProcessingData)
        {
            postProcessingData.gradingMode = settings.supportsHDR
                ? settings.colorGradingMode
                : ColorGradingMode.LowDynamicRange;

            postProcessingData.lutSize = settings.colorGradingLutSize;
        }

        static void InitializeLightData(UniversalRenderPipelineAsset settings, NativeArray<VisibleLight> visibleLights, int mainLightIndex, out LightData lightData)
        {
            int maxPerObjectAdditionalLights = UniversalRenderPipeline.maxPerObjectLights;
            int maxVisibleAdditionalLights = UniversalRenderPipeline.maxVisibleAdditionalLights;

            lightData.mainLightIndex = mainLightIndex;

            if(settings.additionalLightsRenderingMode != LightRenderingMode.Disabled)
            {
                lightData.additionalLightsCount =
                    Math.Min((mainLightIndex != -1) ? visibleLights.Length - 1 : visibleLights.Length,
                        maxVisibleAdditionalLights);
                lightData.maxPerObjectAdditionalLightsCount = Math.Min(settings.maxAdditionalLightsCount, maxPerObjectAdditionalLights);
            }
            else
            {
                lightData.additionalLightsCount = 0;
                lightData.maxPerObjectAdditionalLightsCount = 0;
            }

            lightData.shadeAdditionalLightsPerVertex = settings.additionalLightsRenderingMode == LightRenderingMode.PerVertex;
            lightData.visibleLights = visibleLights;
            lightData.supportsMixedLighting = settings.supportsMixedLighting;
        }

        static PerObjectData GetPerObjectLightFlags(int additionalLightsCount)
        {
            var configuration = PerObjectData.ReflectionProbes | PerObjectData.Lightmaps | PerObjectData.LightProbe | PerObjectData.LightData | PerObjectData.OcclusionProbe;

            if(additionalLightsCount > 0)
            {
                configuration |= PerObjectData.LightData;

                // In this case we also need per-object indices (unity_LightIndices)
                if(!RenderingUtils.useStructuredBuffer)
                    configuration |= PerObjectData.LightIndices;
            }

            return configuration;
        }

        // Main Light is always a directional light
        static int GetMainLightIndex(UniversalRenderPipelineAsset settings, NativeArray<VisibleLight> visibleLights)
        {
            int totalVisibleLights = visibleLights.Length;

            if(totalVisibleLights == 0 || settings.mainLightRenderingMode != LightRenderingMode.PerPixel)
                return -1;

            Light sunLight = RenderSettings.sun;
            int brightestDirectionalLightIndex = -1;
            float brightestLightIntensity = 0.0f;
            for(int i = 0; i < totalVisibleLights; ++i)
            {
                VisibleLight currVisibleLight = visibleLights[i];
                Light currLight = currVisibleLight.light;

                // Particle system lights have the light property as null. We sort lights so all particles lights
                // come last. Therefore, if first light is particle light then all lights are particle lights.
                // In this case we either have no main light or already found it.
                if(currLight == null)
                    break;

                if(currLight == sunLight)
                    return i;

                // In case no shadow light is present we will return the brightest directional light
                if(currVisibleLight.lightType == LightType.Directional && currLight.intensity > brightestLightIntensity)
                {
                    brightestLightIntensity = currLight.intensity;
                    brightestDirectionalLightIndex = i;
                }
            }

            return brightestDirectionalLightIndex;
        }

        static void SetupPerFrameShaderConstants()
        {
            // When glossy reflections are OFF in the shader we set a constant color to use as indirect specular
            SphericalHarmonicsL2 ambientSH = RenderSettings.ambientProbe;
            Color linearGlossyEnvColor = new Color(ambientSH[0, 0], ambientSH[1, 0], ambientSH[2, 0]) * RenderSettings.reflectionIntensity;
            Color glossyEnvColor = CoreUtils.ConvertLinearToActiveColorSpace(linearGlossyEnvColor);
            Shader.SetGlobalVector(PerFrameBuffer._GlossyEnvironmentColor, glossyEnvColor);

            // Used when subtractive mode is selected
            Shader.SetGlobalVector(PerFrameBuffer._SubtractiveShadowColor, CoreUtils.ConvertSRGBToActiveColorSpace(RenderSettings.subtractiveShadowColor));
        }
    }
}

.....................................

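I found the main URP source on GitHub, and it is rather long. Most of the examples I could find through Baidu target 2018, the code on Git targets 2020, and I have 2019 installed; across those three versions the API differences are huge... On top of that, everything in it is stuff I have never used before (obviously, since it used to be called inside the engine). Let's start with how it works overall: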

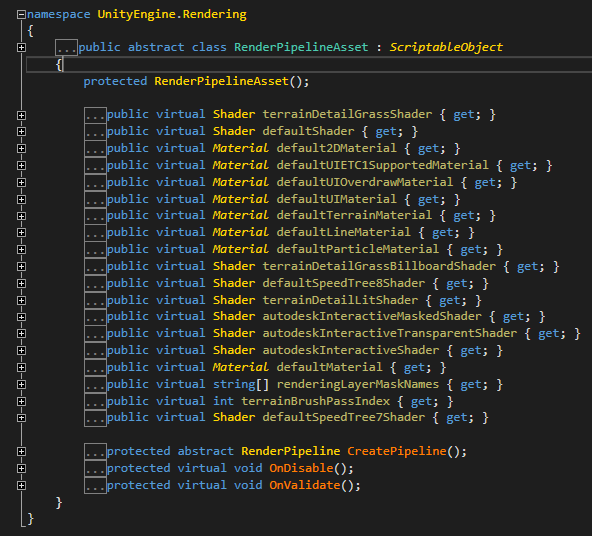

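First comes the serialized data, RenderPipelineAsset. It holds the rendering-related serialized settings; as we saw above, that is where options such as HDR are chosen. The base class looks like this: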

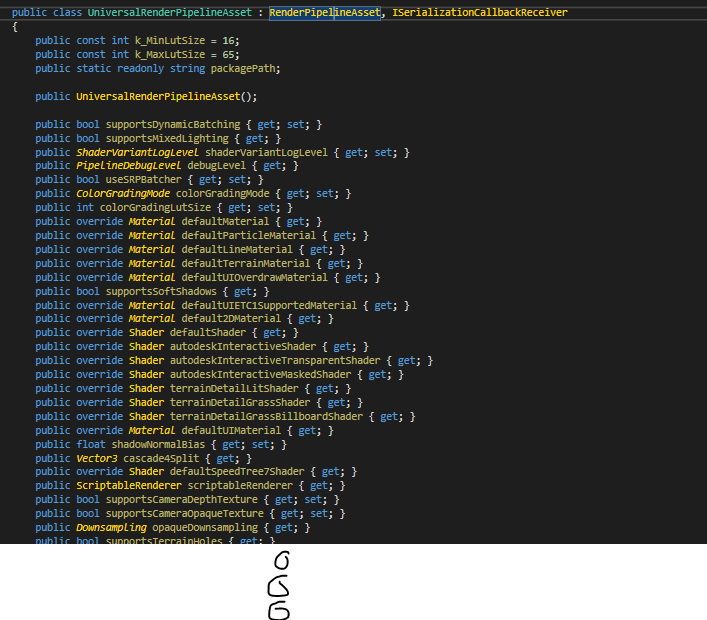

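Its data side only stores rendering settings. The important part is the inherited method CreatePipeline, which returns the rendering code that is actually used at runtime. Here are the settings URP adds on top:

(omitted...)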

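The base class exposes things that built-in components need, such as the terrain shader and even the material used for the editor's Overdraw view. UniversalRenderPipelineAsset implements all of these and also adds plenty of serialized fields for its own use, for example settings such as supportsDynamicBatching. If you wrote everything yourself you would have to cover both the editor and the runtime as well, since development and testing make up most of the work, so it really is not easy.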
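To make the asset/pipeline split concrete, here is a minimal from-scratch sketch, assuming the 2019.3-era `UnityEngine.Rendering` API. `MinimalPipelineAsset`, `MinimalPipeline` and the `clearColor` field are hypothetical names for illustration and are not part of URP; the sketch only clears the target and draws unlit opaque objects plus the skybox, with no lighting, shadows or post-processing. It needs a Unity project to compile.

```csharp
using UnityEngine;
using UnityEngine.Rendering;

// Hypothetical asset: stores settings and hands Unity the runtime pipeline object.
[CreateAssetMenu(menuName = "Rendering/Minimal Pipeline Asset")]
public class MinimalPipelineAsset : RenderPipelineAsset
{
    // A serialized setting, in the same spirit as URP's own options.
    public Color clearColor = Color.black;

    // Unity calls this to obtain the object that does the actual rendering.
    protected override RenderPipeline CreatePipeline()
    {
        return new MinimalPipeline(clearColor);
    }
}

// Hypothetical pipeline: the per-frame rendering logic lives here.
public class MinimalPipeline : RenderPipeline
{
    // Assumption: only objects whose shader has an "SRPDefaultUnlit" pass are drawn.
    static readonly ShaderTagId k_UnlitTag = new ShaderTagId("SRPDefaultUnlit");
    readonly Color m_ClearColor;

    public MinimalPipeline(Color clearColor)
    {
        m_ClearColor = clearColor;
    }

    protected override void Render(ScriptableRenderContext context, Camera[] cameras)
    {
        foreach (var camera in cameras)
        {
            // Skip cameras that cannot produce valid culling parameters.
            if (!camera.TryGetCullingParameters(out var cullingParameters))
                continue;

            CullingResults cullResults = context.Cull(ref cullingParameters);

            // Upload view/projection matrices and other per-camera shader state.
            context.SetupCameraProperties(camera);

            // Clear depth and color with the colour stored on the asset.
            var cmd = new CommandBuffer { name = "Clear" };
            cmd.ClearRenderTarget(true, true, m_ClearColor);
            context.ExecuteCommandBuffer(cmd);
            cmd.Release();

            // Draw opaque renderers front to back.
            var sorting = new SortingSettings(camera) { criteria = SortingCriteria.CommonOpaque };
            var drawing = new DrawingSettings(k_UnlitTag, sorting);
            var filtering = new FilteringSettings(RenderQueueRange.opaque);
            context.DrawRenderers(cullResults, ref drawing, ref filtering);

            context.DrawSkybox(camera);

            // Nothing is executed until the context is submitted.
            context.Submit();
        }
    }
}
```

Everything URP adds (light setup, shadow data, camera stacking, editor support) sits on top of this same skeleton, which is why writing a complete pipeline from scratch is so much work.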

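Now that the principle is clear, let's build a custom render pipeline, starting with the simplest approach: building on URP through inheritance: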

    using UnityEngine;
    using UnityEngine.Rendering;
    using System.Reflection;

    // Serialized asset and entry point for rendering; derives from UniversalRenderPipelineAsset
    [ExecuteInEditMode]
    public class MyUniversalRenderPipelineAsset : UnityEngine.Rendering.Universal.UniversalRenderPipelineAsset
    {
        public static MyUniversalRenderPipelineAsset instance = null;

        protected override RenderPipeline CreatePipeline()
        {
            instance = this;
            return new MyUniversalRenderPipeline();
        }

    #if UNITY_EDITOR
        [UnityEditor.MenuItem("Test/Create MyUniversalRenderPipelineAsset")]
        static void CreateBasicAssetPipeline()
        {
            var instance = ScriptableObject.CreateInstance<MyUniversalRenderPipelineAsset>();
            UnityEditor.AssetDatabase.CreateAsset(instance, "Assets/MyUniversalRenderPipelineAsset.asset");
            UnityEditor.AssetDatabase.SaveAssets();
            UnityEditor.AssetDatabase.Refresh();
        }
    #endif
    }

    // Rendering logic. UniversalRenderPipeline cannot be inherited from, so we create a new
    // instance of it and call its Render method through reflection.
    [ExecuteInEditMode]
    public class MyUniversalRenderPipeline : RenderPipeline
    {
        UnityEngine.Rendering.Universal.UniversalRenderPipeline pipeline = null;
        System.Action<ScriptableRenderContext, Camera[]> render = null;

        protected override void Render(ScriptableRenderContext context, Camera[] cameras)
        {
            if(pipeline == null)
            {
                pipeline = new UnityEngine.Rendering.Universal.UniversalRenderPipeline(MyUniversalRenderPipelineAsset.instance);
                var method = pipeline.GetType().GetMethod("Render", BindingFlags.Instance | BindingFlags.NonPublic | BindingFlags.InvokeMethod);
                if(method != null)
                {
                    render = (_context, _cameras) =>
                    {
                        method.Invoke(pipeline, new object[] { _context, _cameras });
                    };
                }
            }

            if(render != null)
            {
                render.Invoke(context, cameras);
                Debug.Log("Rendered");
            }
        }
    }
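The wrapper above goes through `MethodInfo.Invoke` on every frame, which boxes the arguments into a fresh `object[]` each time. As a hedged variation (not something the original code does), the reflected method can be bound once to a typed delegate with `Delegate.CreateDelegate`. `CachedDelegatePipeline` is a hypothetical name, and the sketch assumes the same URP version as above, where `Render(ScriptableRenderContext, Camera[])` is a non-public instance method of `UniversalRenderPipeline`.

```csharp
using System;
using System.Reflection;
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Rendering.Universal;

// Hypothetical wrapper: forwards rendering to a private URP instance via a cached delegate.
public class CachedDelegatePipeline : RenderPipeline
{
    readonly UniversalRenderPipeline m_Inner;
    readonly Action<ScriptableRenderContext, Camera[]> m_Render;

    public CachedDelegatePipeline(UniversalRenderPipelineAsset asset)
    {
        m_Inner = new UniversalRenderPipeline(asset);

        // Look up the non-public Render(ScriptableRenderContext, Camera[]) overload once.
        MethodInfo method = typeof(UniversalRenderPipeline).GetMethod(
            "Render",
            BindingFlags.Instance | BindingFlags.NonPublic,
            null,
            new[] { typeof(ScriptableRenderContext), typeof(Camera[]) },
            null);

        if (method != null)
        {
            // Bind the method to m_Inner so later calls are plain delegate invocations.
            m_Render = (Action<ScriptableRenderContext, Camera[]>)Delegate.CreateDelegate(
                typeof(Action<ScriptableRenderContext, Camera[]>), m_Inner, method);
        }
    }

    protected override void Render(ScriptableRenderContext context, Camera[] cameras)
    {
        // If the lookup failed (for example after an API change), nothing is rendered.
        m_Render?.Invoke(context, cameras);
    }
}
```

With this shape the asset's `CreatePipeline` would return `new CachedDelegatePipeline(this)`, so the static `instance` field is no longer needed. Whether the reflection lookup still succeeds depends on the installed URP version, so treat it as a sketch to verify rather than a drop-in replacement.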

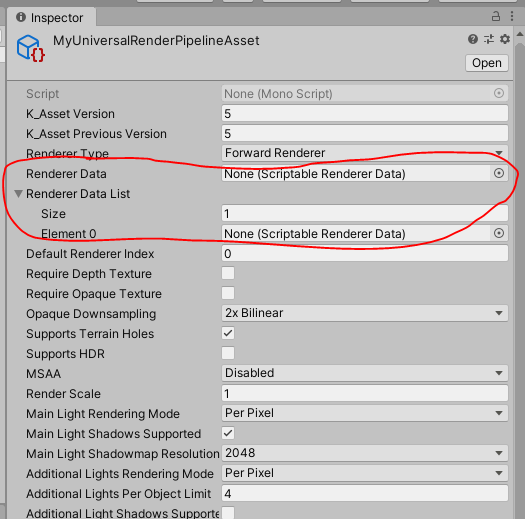

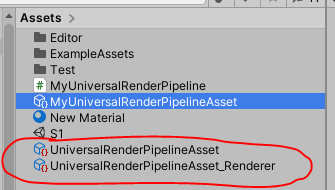

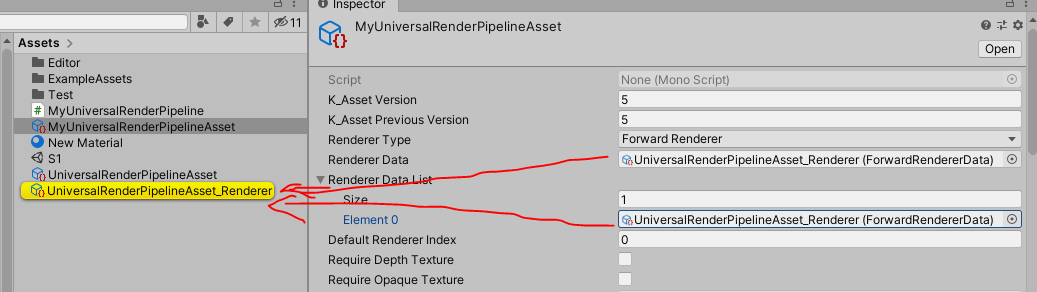

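The asset derives from UniversalRenderPipelineAsset. After creating it in the editor, don't forget to assign a few resources to it by hand, otherwise errors are reported: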

When the default RenderPipelineAsset was created, a matching RendererData asset was created automatically alongside it. Drag that one in:

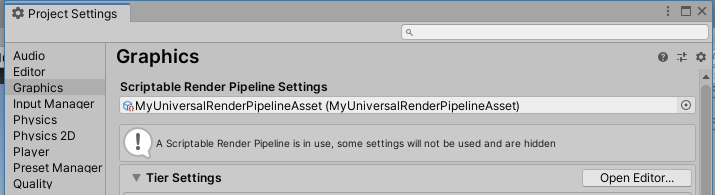

Now it can render. Just drag MyUniversalRenderPipelineAsset into the SRP Settings slot:

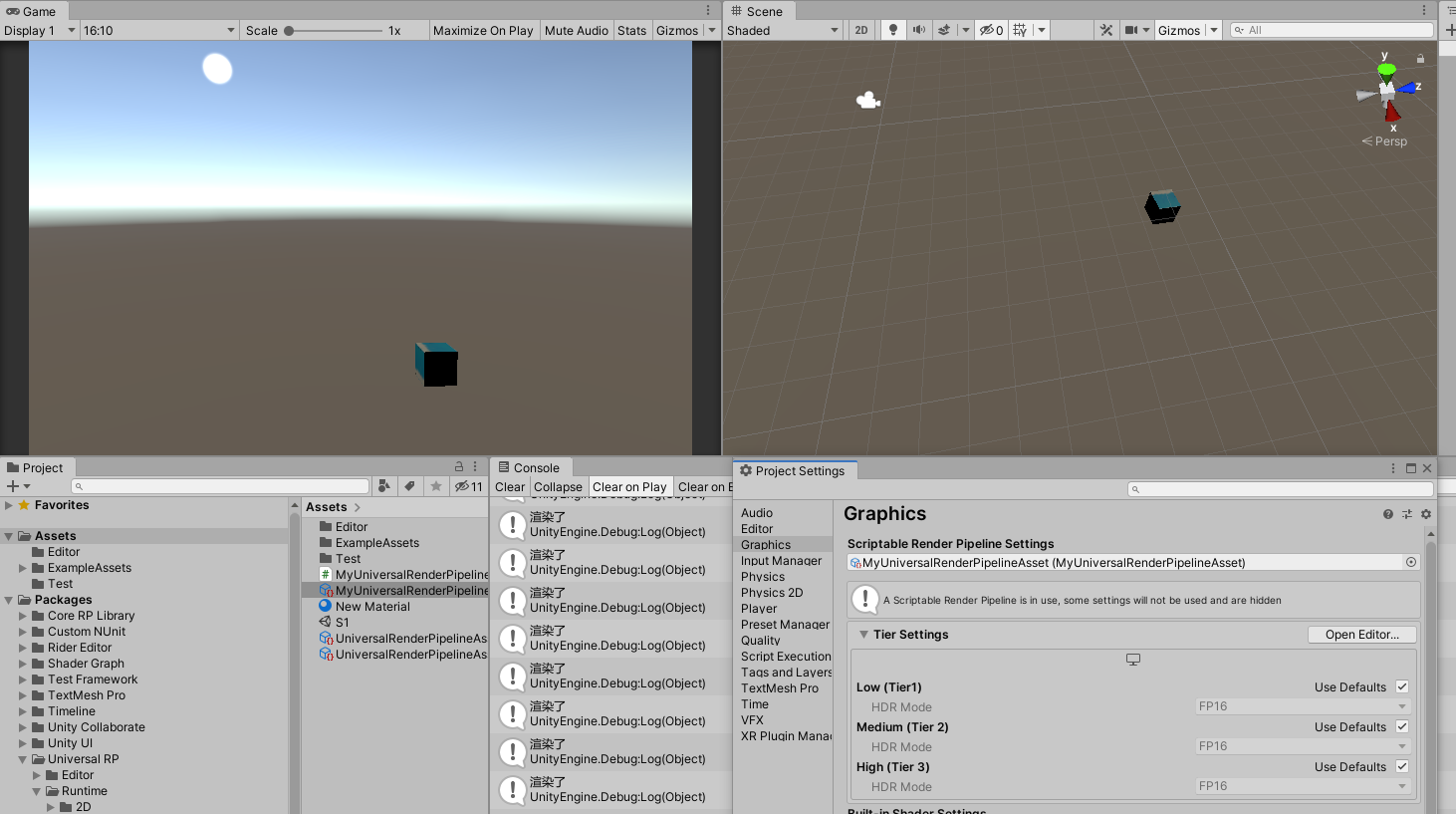
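The same assignment can also be made from script through `GraphicsSettings.renderPipelineAsset`. A minimal sketch, where `PipelineSwitcher` and its `pipelineAsset` field are hypothetical names and the component is assumed to sit on some object in the scene:

```csharp
using UnityEngine;
using UnityEngine.Rendering;

// Hypothetical helper: makes the referenced asset the active render pipeline.
public class PipelineSwitcher : MonoBehaviour
{
    // Assign MyUniversalRenderPipelineAsset (or any RenderPipelineAsset) in the Inspector.
    [SerializeField] RenderPipelineAsset pipelineAsset;

    void OnEnable()
    {
        if (pipelineAsset != null)
        {
            // Same effect as dragging the asset into the Graphics settings slot.
            GraphicsSettings.renderPipelineAsset = pipelineAsset;
        }
    }
}
```

If a quality level has its own pipeline asset assigned, that one may take precedence over the Graphics setting, so check the Quality settings as well when the switch seems to have no effect.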

Run it: the result is identical to stock URP, except that a log line is printed on every render, and the same happens in the editor:

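That is the workflow for building a scriptable render pipeline. Writing a pipeline that actually fits your own needs is a much longer road and will take sustained exploration and hands-on testing. Most games made in China are mobile titles, so exploring the Universal Render Pipeline is enough. The High Definition Render Pipeline is only used on PC and consoles, which we rarely get to work on; perhaps studios making animated films here will use it in the future.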
