前提:某个字段是中文,要用这个字段做升序,结果es默认的是unicode编码排序,与需求按拼音排序不符,故而引入了拼音分词器实现

1、下载拼音分词器插件

bin/elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-pinyin/releases/download/v7.17.3/elasticsearch-analysis-pinyin-7.17.3.zip

2、创建索引添加sort内容

put new_index/ { "settings": { "analysis": { "analyzer": { "pinyin_analyzer": { "tokenizer": "pinyin_tokenizer" } }, "tokenizer": { "pinyin_tokenizer": { "type": "pinyin", "keep_first_letter": "true", "keep_none_chinese_in_first_letter": "true", "keep_separate_first_letter": "false", "keep_full_pinyin": "false", "keep_joined_full_pinyin": "false", "keep_none_chinese": "false", "keep_none_chinese_together": "false", "keep_none_chinese_in_joined_full_pinyin": "false", "none_chinese_pinyin_tokenize": "false", "keep_original": "false", "lowercase": "false", "trim_whitespace": "false", "remove_duplicated_term": "false" } } } }, "mappings" : { "properties" : { "name" : { "type" : "keyword", "fields": { "pinyin": { "type": "text", "analyzer": "pinyin_analyzer", "search_analyzer": "standard", "fielddata": true } } } } } }

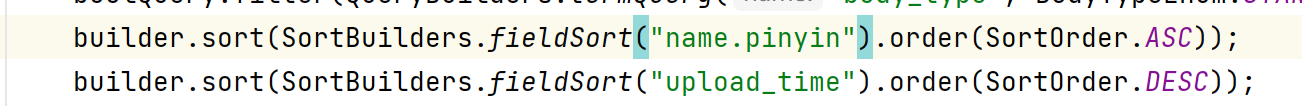

3、查询方式

POST test/_search { "sort": [ { "name.pinyin": { "order": "asc" } } ] }

主要问题:

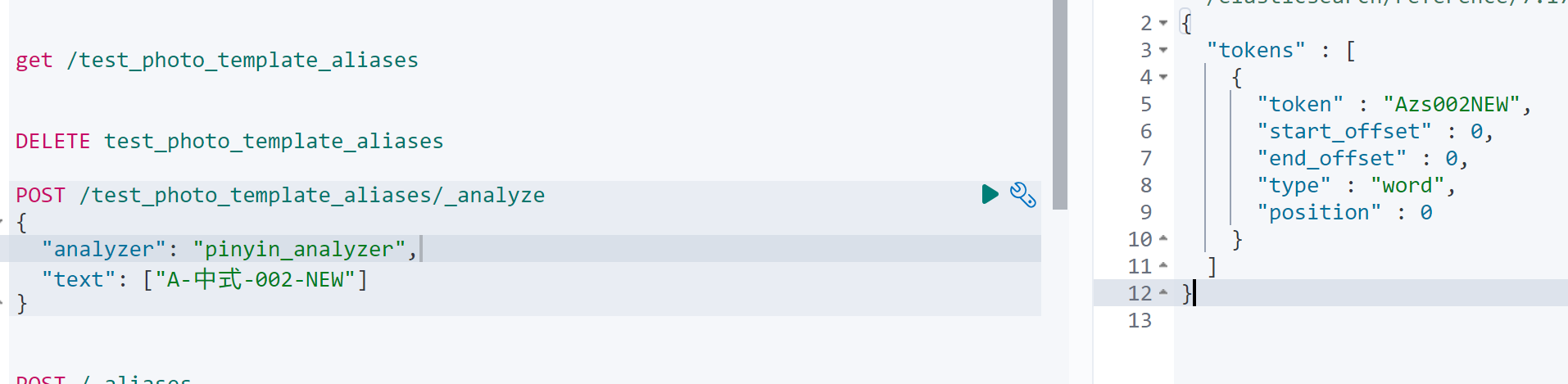

1、tokenizer的各种设置会导致分词的结果是一个list,sort里面的mode的默认值是min会导致按分词结果升序再按更新时间降序的结果不准确,故只设置 "keep_first_letter": "true", "keep_none_chinese_in_first_letter": "true"即只会有按拼音和非中文的字符展示的首字母分词结果

2、在已有的index上调整字段按分词结果排序,需要新增一个index设置name字段分词,然后把已存在的index数据转移到新增的index,然后删除原来的索引,再新增原来索引同名索引并以新索引为别名

put new_index/ { "settings": { "analysis": { "analyzer": { "pinyin_analyzer": { "tokenizer": "pinyin_tokenizer" } }, "tokenizer": { "pinyin_tokenizer": { "type": "pinyin", "keep_first_letter": "true", "keep_none_chinese_in_first_letter": "true", "keep_separate_first_letter": "false", "keep_full_pinyin": "false", "keep_joined_full_pinyin": "false", "keep_none_chinese": "false", "keep_none_chinese_together": "false", "keep_none_chinese_in_joined_full_pinyin": "false", "none_chinese_pinyin_tokenize": "false", "keep_original": "false", "lowercase": "false", "trim_whitespace": "false", "remove_duplicated_term": "false" } } } }, "mappings" : { "properties" : { "name" : { "type" : "keyword", "fields": { "pinyin": { "type": "text", "analyzer": "pinyin_analyzer", "search_analyzer": "standard", "fielddata": true } } } } } }

POST /_reindex { "source": { "index": "old_index" }, "dest": { "index": "new_index" } }

DELETE old_index

POST /_aliases { "actions": [ { "add": { "index": "old_index", "alias": "new_index" } } ] }

附拼音分词属性简介

| 属性 | 说明 |

|---|---|

| keep_first_letter | 启用此选项时,例如:刘德华> ldh,默认值:true |

| keep_separate_first_letter | 启用该选项时,将保留第一个字母分开,例如:刘德华> l,d,h,默认:false,注意:查询结果也许是太模糊,由于长期过频 |

| limit_first_letter_length | 设置first_letter结果的最大长度,默认值:16 |

| keep_full_pinyin | 当启用该选项,例如:刘德华> [ liu,de,hua],默认值:true |

| keep_joined_full_pinyin | 当启用此选项时,例如:刘德华> [ liudehua],默认值:false |

| keep_none_chinese | 在结果中保留非中文字母或数字,默认值:true |

| keep_none_chinese_together | 保持非中国信一起,默认值:true,如:DJ音乐家- > DJ,yin,yue,jia,当设置为false,例如:DJ音乐家- > D,J,yin,yue,jia,注意:keep_none_chinese必须先启动 |

| keep_none_chinese_in_first_letter | 第一个字母保持非中文字母,例如:刘德华AT2016- > ldhat2016,默认值:true |

| keep_none_chinese_in_joined_full_pinyin | 保留非中文字母加入完整拼音,例如:刘德华2016- > liudehua2016,默认:false |

| none_chinese_pinyin_tokenize | 打破非中国信成单独的拼音项,如果他们拼音,默认值:true,如:liudehuaalibaba13zhuanghan- > liu,de,hua,a,li,ba,ba,13,zhuang,han,注意:keep_none_chinese和keep_none_chinese_together应首先启用 |

| keep_original | 当启用此选项时,也会保留原始输入,默认值:false |

| lowercase | 小写非中文字母,默认值:true |

| trim_whitespace | 默认值:true |

| remove_duplicated_term | 当启用此选项时,将删除重复项以保存索引,例如:de的> de,默认值:false,注意:位置相关查询可能受影响 |

参考链接:https://blog.csdn.net/qq_41444892/article/details/129685138

https://blog.csdn.net/jifgnie/article/details/130938996

https://blog.csdn.net/qq_31286957/article/details/130842632

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 阿里最新开源QwQ-32B,效果媲美deepseek-r1满血版,部署成本又又又降低了!

· AI编程工具终极对决:字节Trae VS Cursor,谁才是开发者新宠?

· 开源Multi-agent AI智能体框架aevatar.ai,欢迎大家贡献代码

· Manus重磅发布:全球首款通用AI代理技术深度解析与实战指南

· 被坑几百块钱后,我竟然真的恢复了删除的微信聊天记录!