VGG模拟器实现

此代码用于学习模拟器,和上一篇博客的代码相同,因重要重新整理。

node_list=[] edge_list=[] kernel_h = 3 kernel_w = 3 kernel_size = kernel_h*kernel_w pool_size = 2*2 data_per_pix = 1 def jsonout(fileout, nodes, edges): with open(fileout, "w") as result: outItem = "{\n \"nodes\":\n [\n" result.write(outItem) # Write nodes for i in range(len(nodes)-1): outItem = "\t{\"id\":\""+(str)(nodes[i][0])+"\",\n\t\"comDmd\":\""+(str)(nodes[i][1])+"\",\n\t\"dataDmd\":\""+(str)(nodes[i][2])+"\"\n\t},\n" result.write(outItem) outItem = "\t{\"id\":\""+(str)(nodes[-1][0])+"\",\n\t\"comDmd\":\""+(str)(nodes[-1][1])+"\",\n\t\"dataDmd\":\""+(str)(nodes[-1][2])+"\"\n\t}\n" result.write(outItem) outItem = " ],\n \"edges\":\n [\n" result.write(outItem) for i in range(len(edges)-1): outItem = "\t{\"src\":\""+(str)(edges[i][0])+"\",\n\t\"dst\":\""+(str)(edges[i][1])+"\",\n\t\"weight\":\""+(str)(edges[i][2])+"\"\n\t},\n" result.write(outItem) outItem = "\t{\"src\":\""+(str)(edges[-1][0])+"\",\n\t\"dst\":\""+(str)(edges[-1][1])+"\",\n\t\"weight\":\""+(str)(edges[-1][2])+"\"\n\t}\n" result.write(outItem) outItem = " ]\n}\n" result.write(outItem) #last_c 和present_c 应该指的是channel #type 下面给出解释了, #0表示data的输入层 #1表示卷积层或全连接层 #2表示池化层之后所跟随的卷积层 #comm通信量 def conv_map(img_h, img_w, last_c, h_per_node_last_layer, w_per_node_last_layer, h_per_node, w_per_node, present_c, type): comm = kernel_size*last_c*present_c*h_per_node*w_per_node #感觉是本层一个node的卷积的计算量 #这么看来4是人为规定的数据流算子大小?就是本层当中一个node大小的节点所需要的计算量 data = float(kernel_size*last_c*present_c + (h_per_node+2)*(w_per_node+2)*last_c*data_per_pix)/1024 #data也很迷 #(h_per_node+2)*(w_per_node+2)*last_c就是当前层的一个node,在上一层的感受野 #kernel_size*last_c*present_c 感觉是卷积大小的运算次数,这两个怎么结合的? #那么data的作用呢? node_per_row = int(img_w/w_per_node) node_per_col = int(img_h/h_per_node) if type == 0: w_per_node_last_layer = w_per_node h_per_node_last_layer = h_per_node ##########################################################################分界线,下面的代码三种情况都会运行 node_per_row_last_layer = int(img_w/w_per_node_last_layer)#上一层的列数(img基于node划分的行列) node_per_col_last_layer = int(img_h/h_per_node_last_layer)#上一层的行数 node_num_last_layer = node_per_row_last_layer*node_per_col_last_layer#上一层的节点数目 node_part_num_row = int(node_per_row/node_per_row_last_layer) #本层列数除以上一层列数??????? node_part_num_col = int(node_per_col/node_per_col_last_layer) pre_node_num = len(node_list)#在这层节点加入之前,之前的所有节点的数目 for raw_num in range(node_per_col):#一列当中的node数目,即按行遍历 for col_num in range(node_per_row):#一行当中的node数目,即按列遍历 node_list.append([raw_num*node_per_row+col_num+pre_node_num, comm, data]) #raw_num*node_per_row+col_num 就是当前图片中该节点下标,然后加上之前节点数+pre_node_num # #three kind of conv-layer dependencies: #type=0 means null-conv (first conv layer, no data dependencies) #type=1 means conv-conv (last layer is conv layer) #type=2 means pool-conv (last layer is pool layer) if type == 1: #one-to-one data dependencies, conv-conv weight1 = float(h_per_node*w_per_node*last_c*data_per_pix)/1024 #感觉是因为是需要上面同样的一个位置的node完全算完的计算量 weight2 = float(h_per_node*last_c*data_per_pix)/1024 weight3 = float(w_per_node*last_c*data_per_pix)/1024 weight4 = float(1*last_c*data_per_pix)/1024 #我懂了,这里的weight不是实际的计算量,而是当前node在上一layer的感受野!!!! #这里不管上面一层的感受野是如何通过卷积运算得到的这一层的node #而是管的是上面一层的感受野大小。weight大小的上面一层感受野的数据决定了本层的node这块的数据。(padding补零的不算) for raw_num in range(node_per_col): for col_num in range(node_per_row): node_index = raw_num*node_per_row+col_num#如上面所说,这是当前节点在当前层当中的下标 last_node_index = node_index #感觉这样设置是因为type==1的时候,卷积层的图片形状没有改变,所以可以这样吧。 edge_list.append([last_node_index+pre_node_num-node_num_last_layer, node_index+pre_node_num, weight1]) #(last_layer[i], pre_layer[i]) if col_num > 0: #has the left data dependencies,即需要等待左边先算完 edge_list.append([last_node_index+pre_node_num-node_num_last_layer-1, node_index+pre_node_num, weight2]) #(last_layer[i-1], pre_layer[i]) if col_num < node_per_row-1: #has the right data dependencies edge_list.append([last_node_index+pre_node_num-node_num_last_layer+1, node_index+pre_node_num, weight2]) #(last_layer[i+1], pre_layer[i]) if raw_num > 0: #has the upper data dependencies edge_list.append([last_node_index+pre_node_num-node_num_last_layer-node_per_row, node_index+pre_node_num, weight3]) #(last_layer[i-node_per_row], pre_layer[i]) if raw_num < node_per_col-1: #has the lower data dependencies edge_list.append([last_node_index+pre_node_num-node_num_last_layer+node_per_row, node_index+pre_node_num, weight3]) #(last_layer[i+node_per_row], pre_layer[i]) if col_num > 0 and raw_num > 0: #has the left and upper data dependencies edge_list.append([last_node_index+pre_node_num-node_num_last_layer-node_per_row-1, node_index+pre_node_num, weight4]) #(last_layer[i-node_per_row-1], pre_layer[i]) if col_num > 0 and raw_num < node_per_col-1: #has the left and lower data dependencies edge_list.append([last_node_index+pre_node_num-node_num_last_layer+node_per_row-1, node_index+pre_node_num, weight4]) #(last_layer[i+node_per_row-1], pre_layer[i]) if col_num < node_per_row-1 and raw_num > 0: #has the upper and left data dependencies edge_list.append([last_node_index+pre_node_num-node_num_last_layer-node_per_row+1, node_index+pre_node_num, weight4]) #(last_layer[i-node_per_row+1], pre_layer[i]) if col_num < node_per_row-1 and raw_num < node_per_col-1: #has the lower and left data dependencies edge_list.append([last_node_index+pre_node_num-node_num_last_layer+node_per_row+1, node_index+pre_node_num, weight4]) #(last_layer[i+node_per_row+1], pre_layer[i]) if type == 2: #one-to-many data dependencies, pool-conv weight0 = float((h_per_node+2)*(w_per_node+2)*last_c*data_per_pix)/1024 weight1 = float(h_per_node*w_per_node*last_c*data_per_pix)/1024 weight2 = float(h_per_node*last_c*data_per_pix)/1024 weight3 = float(w_per_node*last_c*data_per_pix)/1024 weight4 = float(1*last_c*data_per_pix)/1024 for raw_num in range(node_per_col): for col_num in range(node_per_row): node_index = raw_num*node_per_row+col_num last_node_index = int(raw_num/node_part_num_col)*node_per_row_last_layer+int(col_num/node_part_num_row) if col_num%node_part_num_row == 0 and col_num > 0: #left edge_list.append([last_node_index+pre_node_num-node_num_last_layer-1, node_index+pre_node_num, weight2]) if col_num%node_part_num_row == node_part_num_row-1 and col_num < node_per_row-1: #right edge_list.append([last_node_index+pre_node_num-node_num_last_layer+1, node_index+pre_node_num, weight2]) if raw_num%node_part_num_col == 0 and raw_num > 0: #upper edge_list.append([last_node_index+pre_node_num-node_num_last_layer-node_per_row_last_layer, node_index+pre_node_num, weight3]) if raw_num%node_part_num_col == node_part_num_col-1 and raw_num < node_per_col-1: #lower edge_list.append([last_node_index+pre_node_num-node_num_last_layer+node_per_row_last_layer, node_index+pre_node_num, weight3]) if col_num%node_part_num_row == 0 and col_num > 0 and raw_num%node_part_num_col == 0 and raw_num > 0: #left and upper edge_list.append([last_node_index+pre_node_num-node_num_last_layer-node_per_row_last_layer-1, node_index+pre_node_num, weight4]) if col_num%node_part_num_row == 0 and col_num > 0 and raw_num%node_part_num_col == node_part_num_col-1 and raw_num < node_per_col-1: #left and lower edge_list.append([last_node_index+pre_node_num-node_num_last_layer+node_per_row_last_layer-1, node_index+pre_node_num, weight4]) if col_num%node_part_num_row == node_part_num_row-1 and col_num < node_per_row-1 and raw_num%node_part_num_col == 0 and raw_num > 0: #right and upper edge_list.append([last_node_index+pre_node_num-node_num_last_layer-node_per_row_last_layer+1, node_index+pre_node_num, weight4]) if col_num%node_part_num_row == node_part_num_row-1 and col_num < node_per_row-1 and raw_num%node_part_num_col == node_part_num_col-1 and raw_num < node_per_col-1: #right and lower edge_list.append([last_node_index+pre_node_num-node_num_last_layer+node_per_row_last_layer+1, node_index+pre_node_num, weight4]) if col_num%node_part_num_row > 0 and col_num%node_part_num_row < node_part_num_row-1 \ and raw_num%node_part_num_col > 0 and raw_num%node_part_num_col < node_part_num_col-1: edge_list.append([last_node_index+pre_node_num-node_num_last_layer, node_index+pre_node_num, weight0]) elif col_num%node_part_num_row > 0 and col_num%node_part_num_row < node_part_num_row-1 \ and ((raw_num%node_part_num_col == 0) or (raw_num%node_part_num_col == node_part_num_col-1)): edge_list.append([last_node_index+pre_node_num-node_num_last_layer, node_index+pre_node_num, weight1+2*weight2+weight3]) elif ((col_num%node_part_num_row == 0) or (col_num%node_part_num_row == node_part_num_row-1)) \ and raw_num%node_part_num_col > 0 and raw_num%node_part_num_col < node_part_num_col-1: edge_list.append([last_node_index+pre_node_num-node_num_last_layer, node_index+pre_node_num, weight1+weight2+2*weight3]) else: edge_list.append([last_node_index+pre_node_num-node_num_last_layer, node_index+pre_node_num, weight1+weight2+weight3+weight4]) def pool_map(img_h, img_w, last_c, h_per_node_last_layer, w_per_node_last_layer, h_per_node, w_per_node): #many-to-one data dependencies, conv-pool comm = int(pool_size*h_per_node/2*w_per_node/2*last_c) #comm一个节点池化操作所需要的计算量 #h_per_node/2*w_per_node/2 是该node池化之后的尺寸,一个池化操作的预算量是pool_size data = float(h_per_node*w_per_node*last_c*data_per_pix)/1024 #data是该池化层节点对应前一层的感受野 node_per_row = int(img_w/w_per_node) node_per_col = int(img_h/h_per_node) node_per_row_last_layer = int(img_w/w_per_node_last_layer)#上一层列数 node_per_col_last_layer = int(img_h/h_per_node_last_layer)#上一层行数 node_num_last_layer = node_per_row_last_layer*node_per_col_last_layer #上一层节点数 node_part_num_row = int(node_per_row_last_layer/node_per_row) node_part_num_col = int(node_per_col_last_layer/node_per_col)#在池化层当中得出的就是池化压缩的尺寸 pre_node_num = len(node_list) for raw_num in range(node_per_col): for col_num in range(node_per_row): node_list.append([raw_num*node_per_row+col_num+pre_node_num, comm, data]) #####上面这些都和前面的是一样的 weight = float(h_per_node_last_layer*w_per_node_last_layer*last_c*data_per_pix)/1024 #明显池化层的node比上一层卷积层的node要大很多啊,为什么这里的weight使用上一层的一个小节点来表示? #因为这里的weight就是上一层(最后一个池化层)的一个节点。 #下面的循环有四层,前两层确定了该池化层所对应的最上面一层的最左上角node的编号last_node_index #然后在后两层循环中 part_node_index是池化层节点内部所对应的上层node的索引 for raw_num in range(node_per_col): for col_num in range(node_per_row): node_index = raw_num*node_per_row+col_num last_node_index = raw_num*node_part_num_col*node_per_row_last_layer+col_num*node_part_num_row for part_raw_num in range(node_part_num_col): for part_col_num in range(node_part_num_row): part_node_index = part_raw_num*node_per_row_last_layer+part_col_num edge_list.append([last_node_index+pre_node_num-node_num_last_layer+part_node_index, node_index+pre_node_num, weight]) #这里的池化层就是node很大,这里的node框柱的上一层(最后的卷积层)里的所有node,连接到这个node,都成为了数据依赖。 def fc_map(img_h, img_w, last_c, node_num_last_layer, present_c, fc_level): comm = img_h*img_w*last_c*fc_level data = float(img_h*img_w*last_c*data_per_pix+img_h*img_w*last_c*fc_level)/1024 node_num = int(present_c/fc_level) pre_node_num = len(node_list) for fc_num in range(node_num): node_list.append([pre_node_num+fc_num, comm, data]) weight = float(img_h*img_w/node_num_last_layer*last_c*data_per_pix)/1024 for node_index in range(node_num): for last_node_index in range(node_num_last_layer): edge_list.append([last_node_index+pre_node_num-node_num_last_layer, node_index+pre_node_num, weight]) def generage_dag(): print ("***start generation***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(224, 224, 3, 1, 1, 4, 4, 64, 0) print ("\n***conv1_1***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(224, 224, 64, 4, 4, 4, 4, 64, 1) print ("\n***conv1_2***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) pool_map(224, 224, 64, 4, 4, 56, 56) print ("\n***pool1***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(112, 112, 64, 28, 28, 4, 4, 128, 2) print ("\n***conv2_1***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(112, 112, 128, 4, 4, 4, 4, 128, 1) print ("\n***conv2_2***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) pool_map(112, 112, 128, 4, 4, 56, 56) print ("\n***pool2***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(56, 56, 128, 28, 28, 4, 4, 256, 2) print ("\n***conv3_1***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(56, 56, 256, 4, 4, 4, 4, 256, 1) print ("\n***conv3_2***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(56, 56, 256, 4, 4, 4, 4, 256, 1) print ("\n***conv3_3***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) # conv_map(56, 56, 256, 7, 7, 7, 7, 256, 1) # print ("\n***conv3_4***") # print ("\nlen(node_list):\t", len(node_list)) # print ("\nlen(edge_list):\t", len(edge_list)) pool_map(56, 56, 256, 4, 4, 28, 28) print ("\n***pool3***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(28, 28, 256, 14, 14, 4, 4, 512, 2) print ("\n***conv4_1***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(28, 28, 512, 4, 4, 4, 4, 512, 1) print ("\n***conv4_2***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(28, 28, 512, 4, 4, 4, 4, 512, 1) print ("\n***conv4_3***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) # conv_map(28, 28, 512, 7, 7, 7, 7, 512, 1) # print ("\n***conv4_4***") # print ("\nlen(node_list):\t", len(node_list)) # print ("\nlen(edge_list):\t", len(edge_list)) pool_map(28, 28, 512, 4, 4, 14, 14) print ("\n***pool4***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(14, 14, 512, 7, 7, 2, 2, 512, 2) #前面一层池化层,在缩小之前的node大小为14*14,缩小之后为7*7,而这里开始也改变了卷积层的node尺寸为2 ,适应图片尺寸的改变 print ("\n***conv5_1***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(14, 14, 512, 2, 2, 2, 2, 512, 1) print ("\n***conv5_2***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) conv_map(14, 14, 512, 2, 2, 2, 2, 512, 1) print ("\n***conv5_3***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) # conv_map(14, 14, 512, 7, 7, 7, 7, 512, 1) # print ("\n***conv5_4***") # print ("\nlen(node_list):\t", len(node_list)) # print ("\nlen(edge_list):\t", len(edge_list)) pool_map(14, 14, 512, 2, 2, 14, 14) print ("\n***pool5***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) fc_map(7, 7, 512, 1, 4096, 1) print ("\n***fc1***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) fc_map(1, 1, 4096, 1, 4096, 1) print ("\n***fc2***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) fc_map(1, 1, 4096, 1, 1000, 1) print ("\n***fc3***") print ("\nlen(node_list):\t", len(node_list)) print ("\nlen(edge_list):\t", len(edge_list)) jsonout("4_unit_vgg16_graph.json", node_list, edge_list) with open("graph.json", "w") as f: for i in range(len(node_list)): f.write("id:") f.write(str(node_list[i][0])) f.write("\tcomDmd:") f.write(str(node_list[i][1])) f.write("\tdataDmd:") f.write(str(node_list[i][2])) f.write("\n") for i in range(len(edge_list)): f.write("src:") f.write(str(edge_list[i][0])) f.write("\tdst:") f.write(str(edge_list[i][1])) f.write("\tweight:") f.write(str(edge_list[i][2])) f.write("\n") generage_dag()

整个图没有卷积参数什么的,有的只是对于VGG网络运行过程当中的数据流图的构建。最终输出的就是一个构建好的DAG数据流图,

首先,卷积层和池化层的节点node大小都是自己定义的。节点是对于原图尺寸(从224*224到112*112再到56*56。。。)的一种划分。一开始卷积层的node大小为4,可以看到到了后面因为图片尺寸变为了14*14,卷积层的node大小也改变为了2。而且池化层一开始的node大小为56,惊呆我了,后面才发现这些都是自己定义的。

然后比较迷的几个点就是node的comm和data这两个参数以及edge的weight这个参数。

node的comm表示的是通信量大小,data表示的是数据量的大小,但是实际运算的时候我并没有发现有什么科学的解释。(可能我现在暂时发现不了)

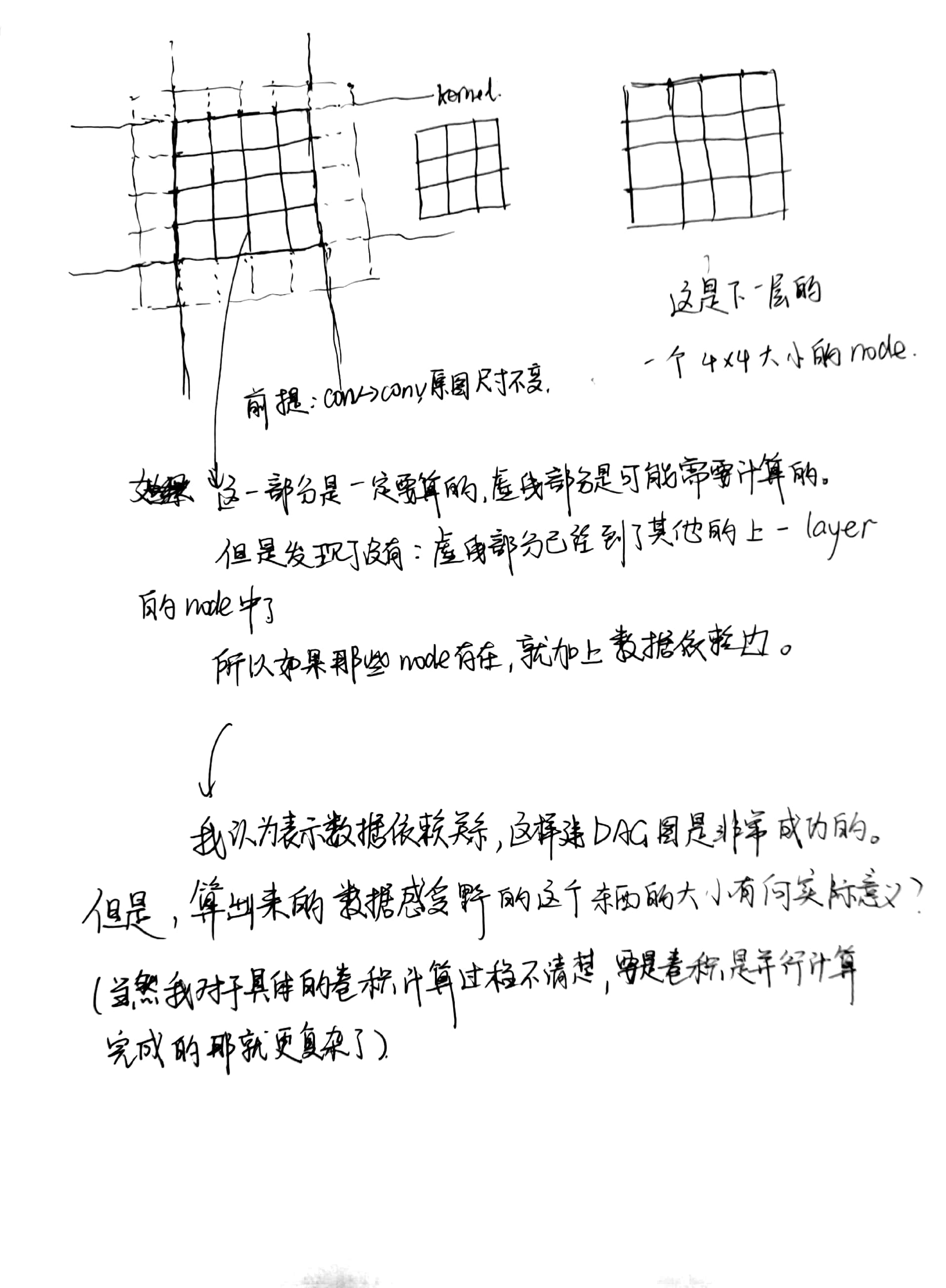

edge的weight我看明白了,表示的是边的dst节点对应上一layer当中的感受野的大小。也就是weight大小的上一层的这么多的数据决定了下一层当中的这个node的运算结果。必须等待前面这些数据运算完才能算完后面的数据。(但是这个我感觉还是不太靠谱,一方面因为实际卷积的时候,上一层感受野上面的每个数据参与卷积的次数是不一定的)。如下图:

原因如上所示。

由于池化层的node大小设置的很大,VGG16当中最后一个卷积层到池化层,边是多点对少点的,池化层到第一个卷积层,边是少点对多点的。而且池化当中图片尺寸没有先缩小,等待池化完全之后图片尺寸才缩小了。

在这里还有一个不太搞得懂的地方,那就是卷积层到池化层的连接,情况特别复杂,又要考虑数据依赖关系,编码又要考虑很多种情况。

之后遇到相关的问题再来读这一段代码吧!