Notes on Reading the Faster-RCNN TensorFlow Source Code

Source code: https://github.com/endernewton/tf-faster-rcnn

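I came across a blog post that analyzes this code concisely and thoroughly, and I doubt I could do better. Still, I'll briefly walk through the source and share my impressions after reading it: https://blog.csdn.net/u012457308/article/details/79566195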

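The code consists of three main parts: input processing, network construction, and loss computation. The hardest part is the many reshapes. Without care it is easy to lose track of tensor layouts, so they need to be worked through carefully.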

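The previous note gave a brief introduction to Faster-RCNN; this one covers my notes on its TensorFlow source. The downloaded project is laid out as follows. The main code lives in the lib folder, whose contents are introduced one by one below.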

1. Data loading

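The Datasets folder mainly contains the data-loading code. I won't cover it here; the next article will explain how to prepare a dataset for this code, among other things.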

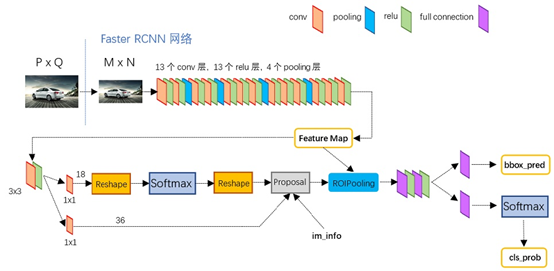

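2. The Nets folder contains the feature-extraction networks (mobilenet, resnet_v1, vgg_16), i.e. the part that comes before the RPN. The second part is the RPN, which further processes the backbone's feature map to produce proposals. The third part is the classification and bounding-box regression network. The RPN structure is defined in network.py. The network is structured as follows: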

The main network-building function:

```python
def _build_network(self, is_training=True):
    # select initializers
    if cfg.TRAIN.TRUNCATED:
        initializer = tf.truncated_normal_initializer(mean=0.0, stddev=0.01)
        initializer_bbox = tf.truncated_normal_initializer(mean=0.0, stddev=0.001)
    else:
        initializer = tf.random_normal_initializer(mean=0.0, stddev=0.01)
        initializer_bbox = tf.random_normal_initializer(mean=0.0, stddev=0.001)

    # backbone: initial feature extraction
    net_conv = self._image_to_head(is_training)
    with tf.variable_scope(self._scope, self._scope):
        # build the anchors for the image
        self._anchor_component()
        # region proposal network: produces the proposal coordinates
        rois = self._region_proposal(net_conv, is_training, initializer)
        # region of interest pooling: bring every proposal to a fixed size
        if cfg.POOLING_MODE == 'crop':
            pool5 = self._crop_pool_layer(net_conv, rois, "pool5")
        else:
            raise NotImplementedError

    fc7 = self._head_to_tail(pool5, is_training)
    with tf.variable_scope(self._scope, self._scope):
        # region classification: the Fast-RCNN head classifies each RoI and regresses its box
        cls_prob, bbox_pred = self._region_classification(fc7, is_training,
                                                          initializer, initializer_bbox)

    self._score_summaries.update(self._predictions)

    return rois, cls_prob, bbox_pred
```
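Since the reshapes are where it is easiest to get lost, it can help to write down the tensor shapes that flow through `_build_network`. The following is a small bookkeeping sketch, not code from the repository. The stride of 16, 9 anchors, 512 RPN channels, 512 backbone channels, 7x7 pooling, 256 RoIs and 21 classes (the 20 PASCAL VOC classes plus background) are all assumed example values, and rounding the feature-map size up assumes 'SAME'-padded downsampling in the backbone.

```python
import math


def trace_shapes(img_h, img_w, feat_stride=16, num_anchors=9, rpn_channels=512,
                 backbone_channels=512, pooling_size=7, num_rois=256, num_classes=21):
    """Expected tensor shapes through _build_network for a batch of one image.

    All keyword defaults are assumed example values, not read from the repo's config.
    """
    # Assumes 'SAME'-padded downsampling, so each spatial size rounds up.
    fh = math.ceil(img_h / feat_stride)
    fw = math.ceil(img_w / feat_stride)
    return {
        "net_conv": (1, fh, fw, backbone_channels),
        "rpn_conv/3x3": (1, fh, fw, rpn_channels),
        "rpn_cls_score": (1, fh, fw, num_anchors * 2),
        "rpn_cls_score_reshape": (1, num_anchors * fh, fw, 2),
        "rpn_bbox_pred": (1, fh, fw, num_anchors * 4),
        "anchors": (fh * fw * num_anchors, 4),
        "rois": (num_rois, 5),  # rows of [batch_id, x1, y1, x2, y2]
        "pool5": (num_rois, pooling_size, pooling_size, backbone_channels),
        "cls_prob": (num_rois, num_classes),
        "bbox_pred": (num_rois, num_classes * 4),
    }


for name, shape in trace_shapes(600, 1000).items():
    print(f"{name:22s} {shape}")
```

For a 600x1000 input this gives a 38x63 feature map and 38 * 63 * 9 = 21546 anchors under these assumptions.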

Now let's go through the code step by step.

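1. Feature-extraction network. There are three backbone options (the paper used VGG-16, alongside the smaller ZF net). This part simply feeds the image through the backbone and takes the output of one of its convolutional layers; nothing special happens here.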

2. RPN. This part is the core of the method and the biggest difference between Faster-RCNN and earlier approaches.

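How anchors are generated: 9 anchors are placed around each feature-map point, covering 3 areas and 3 aspect ratios. The code is in generate_anchors.py and is easy to follow. Starting from the base box (0, 0, 15, 15), it computes anchors for the three aspect ratios (0.5, 1, 2) while keeping roughly the same area, then multiplies each by the scales (8, 16, 32), giving 9 anchor coordinates. Shifting these to every feature-map point then gives the anchors for the whole image.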
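A minimal NumPy sketch of the two steps just described: building the 9 base anchors from the (0, 0, 15, 15) box, then shifting them across the feature map. It follows the description above rather than copying generate_anchors.py; the helper names are hypothetical, the ratio is taken as height / width, and `feat_stride=16` is an assumed value.

```python
import numpy as np


def _whctrs(anchor):
    """Width, height and center of an (x1, y1, x2, y2) box (inclusive pixel coords)."""
    w = anchor[2] - anchor[0] + 1
    h = anchor[3] - anchor[1] + 1
    return w, h, anchor[0] + 0.5 * (w - 1), anchor[1] + 0.5 * (h - 1)


def _mkanchors(ws, hs, x_ctr, y_ctr):
    """Stack boxes with the given widths/heights around one shared center."""
    ws, hs = ws[:, np.newaxis], hs[:, np.newaxis]
    return np.hstack((x_ctr - 0.5 * (ws - 1), y_ctr - 0.5 * (hs - 1),
                      x_ctr + 0.5 * (ws - 1), y_ctr + 0.5 * (hs - 1)))


def base_anchors(base_size=16, ratios=(0.5, 1, 2), scales=(8, 16, 32)):
    """3 ratios x 3 scales = 9 anchors centered on the (0, 0, 15, 15) cell."""
    base = np.array([0, 0, base_size - 1, base_size - 1], dtype=np.float64)
    w, h, x_ctr, y_ctr = _whctrs(base)
    ratios = np.asarray(ratios, dtype=np.float64)
    # Keep the area close to w*h while changing the aspect ratio (ratio = h / w).
    ws = np.round(np.sqrt(w * h / ratios))
    hs = np.round(ws * ratios)
    ratio_anchors = _mkanchors(ws, hs, x_ctr, y_ctr)
    scales = np.asarray(scales, dtype=np.float64)
    # Enlarge every ratio anchor by each scale, keeping its center fixed.
    out = []
    for anchor in ratio_anchors:
        aw, ah, ax, ay = _whctrs(anchor)
        out.append(_mkanchors(aw * scales, ah * scales, ax, ay))
    return np.vstack(out)


def shift_anchors(anchors, feat_h, feat_w, feat_stride=16):
    """Translate the A base anchors to all K = feat_h * feat_w locations -> (K*A, 4)."""
    shift_x, shift_y = np.meshgrid(np.arange(feat_w) * feat_stride,
                                   np.arange(feat_h) * feat_stride)
    shifts = np.stack([shift_x.ravel(), shift_y.ravel(),
                       shift_x.ravel(), shift_y.ravel()], axis=1)
    # (K, 1, 4) + (1, A, 4) -> (K, A, 4): the A anchors of one location stay together.
    return (shifts[:, np.newaxis, :] + anchors[np.newaxis, :, :]).reshape(-1, 4)


print(base_anchors())
```

With these defaults the first base anchor (ratio 0.5, scale 8) comes out as [-84, -40, 99, 55]. Because the shifts are broadcast as (K, 1, 4) + (1, A, 4), the 9 anchors of one feature-map location stay contiguous in the (K*A, 4) result.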

Generating proposals.

Next is the main RPN function, which produces the object proposals:

```python
def _region_proposal(self, net_conv, is_training, initializer):
    # a 3x3 conv, after which the network splits into two branches
    rpn = slim.conv2d(net_conv, cfg.RPN_CHANNELS, [3, 3], trainable=is_training,
                      weights_initializer=initializer, scope="rpn_conv/3x3")
    self._act_summaries.append(rpn)
    # branch 1: for each anchor, score background vs. object
    rpn_cls_score = slim.conv2d(rpn, self._num_anchors * 2, [1, 1], trainable=is_training,
                                weights_initializer=initializer,
                                padding='VALID', activation_fn=None, scope='rpn_cls_score')
    # change it so that the score has 2 as its channel size
    rpn_cls_score_reshape = self._reshape_layer(rpn_cls_score, 2, 'rpn_cls_score_reshape')
    rpn_cls_prob_reshape = self._softmax_layer(rpn_cls_score_reshape, "rpn_cls_prob_reshape")
    rpn_cls_pred = tf.argmax(tf.reshape(rpn_cls_score_reshape, [-1, 2]), axis=1, name="rpn_cls_pred")
    rpn_cls_prob = self._reshape_layer(rpn_cls_prob_reshape, self._num_anchors * 2, "rpn_cls_prob")
    # branch 2: for each anchor, predict box-regression deltas
    rpn_bbox_pred = slim.conv2d(rpn, self._num_anchors * 4, [1, 1], trainable=is_training,
                                weights_initializer=initializer,
                                padding='VALID', activation_fn=None, scope='rpn_bbox_pred')
    if is_training:
        # filter proposals using the predicted scores and boxes: keep the top N, then apply NMS
        rois, roi_scores = self._proposal_layer(rpn_cls_prob, rpn_bbox_pred, "rois")
        rpn_labels = self._anchor_target_layer(rpn_cls_score, "anchor")
        # Try to have a deterministic order for the computing graph, for reproducibility
        with tf.control_dependencies([rpn_labels]):
            rois, _ = self._proposal_target_layer(rois, roi_scores, "rpn_rois")
    else:
        if cfg.TEST.MODE == 'nms':
            rois, _ = self._proposal_layer(rpn_cls_prob, rpn_bbox_pred, "rois")
        elif cfg.TEST.MODE == 'top':
            rois, _ = self._proposal_top_layer(rpn_cls_prob, rpn_bbox_pred, "rois")
        else:
            raise NotImplementedError

    self._predictions["rpn_cls_score"] = rpn_cls_score
    self._predictions["rpn_cls_score_reshape"] = rpn_cls_score_reshape
    self._predictions["rpn_cls_prob"] = rpn_cls_prob
    self._predictions["rpn_cls_pred"] = rpn_cls_pred
    self._predictions["rpn_bbox_pred"] = rpn_bbox_pred
    self._predictions["rois"] = rois

    return rois
```
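The reshape around the softmax is the part most worth tracing by hand. Below is an illustrative NumPy re-implementation, not the repository's TensorFlow code. It assumes `_reshape_layer` goes through a channels-first layout (an assumption about its internals; only the input and output shapes are taken from the code above): the (1, H, W, 2A) score map is transposed to (1, 2A, H, W), reshaped so the channel dimension becomes 2, and transposed back, giving (1, A*H, W, 2). A softmax over that last axis then compares, for each anchor, its background score with its object score.

```python
import numpy as np


def reshape_layer(bottom, num_dim):
    """NumPy sketch: (1, H, W, C) -> (1, C // num_dim * H, W, num_dim) via channels-first."""
    n, _, w, _ = bottom.shape
    to_caffe = bottom.transpose(0, 3, 1, 2)          # NHWC -> NCHW: (1, C, H, W)
    reshaped = to_caffe.reshape(n, num_dim, -1, w)   # (1, num_dim, C // num_dim * H, W)
    return reshaped.transpose(0, 2, 3, 1)            # back to NHWC


def softmax_last_axis(x):
    """Numerically stable softmax over the last axis (background vs. object)."""
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


H, W, A = 3, 5, 9
rpn_cls_score = np.random.default_rng(0).standard_normal((1, H, W, 2 * A))
score_reshape = reshape_layer(rpn_cls_score, 2)    # (1, A*H, W, 2)
prob_reshape = softmax_last_axis(score_reshape)    # softmax over the 2 scores
rpn_cls_prob = reshape_layer(prob_reshape, 2 * A)  # back to (1, H, W, 2A)
print(score_reshape.shape, rpn_cls_prob.shape)     # (1, 27, 5, 2) (1, 3, 5, 18)
```

The indexing implied by this reshape is that channel a of location (h, w) and channel A + a land side by side in row a*H + h. So channels 0..A-1 act as background scores and A..2A-1 as object scores, which is also why `rpn_cls_pred` can take an argmax over a flat [-1, 2] view.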

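Once the proposal coordinates are produced, the proposals still differ in size, so RoI pooling is applied to give every proposal an output of the same dimensions. The code: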

```python
def _crop_pool_layer(self, bottom, rois, name):
    # bottom: output of the last conv layer of the backbone
    # self._feat_stride: the backbone's total stride, used to recover the input image's w and h
    # rois: the selected proposals (e.g. 256 during training), one [batch_id, x1, y1, x2, y2] row each
    with tf.variable_scope(name) as scope:
        batch_ids = tf.squeeze(tf.slice(rois, [0, 0], [-1, 1], name="batch_id"), [1])
        # Get the normalized coordinates of bounding boxes
        bottom_shape = tf.shape(bottom)
        height = (tf.to_float(bottom_shape[1]) - 1.) * np.float32(self._feat_stride[0])
        width = (tf.to_float(bottom_shape[2]) - 1.) * np.float32(self._feat_stride[0])
        x1 = tf.slice(rois, [0, 1], [-1, 1], name="x1") / width
        y1 = tf.slice(rois, [0, 2], [-1, 1], name="y1") / height
        x2 = tf.slice(rois, [0, 3], [-1, 1], name="x2") / width
        y2 = tf.slice(rois, [0, 4], [-1, 1], name="y2") / height  # relative coordinates
        # Won't be back-propagated to rois anyway, but to save time
        bboxes = tf.stop_gradient(tf.concat([y1, x1, y2, x2], axis=1))
        pre_pool_size = cfg.POOLING_SIZE * 2
        # TensorFlow's built-in crop_and_resize plays the role of RoI pooling here
        crops = tf.image.crop_and_resize(bottom, bboxes, tf.to_int32(batch_ids),
                                         [pre_pool_size, pre_pool_size], name="crops")

    return slim.max_pool2d(crops, [2, 2], padding='SAME')
```
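To see what `tf.image.crop_and_resize` followed by a 2x2 max pool computes, here is a slow NumPy sketch for a single image. It is an illustration, not the TensorFlow kernel: it assumes batch size 1 (batch ids are ignored), and out-of-range sample points are clamped to the border, whereas TensorFlow fills them with `extrapolation_value`. It also shows why the code divides by (H - 1) * stride: crop_and_resize maps a normalized coordinate of 1.0 onto the last feature row, so y / ((H - 1) * stride) * (H - 1) = y / stride, i.e. image pixels divided by the stride.

```python
import numpy as np


def normalize_rois(rois, feat_h, feat_w, feat_stride=16):
    """rois: (N, 5) rows [batch_id, x1, y1, x2, y2] in input-image pixels.

    Returns (N, 4) rows [y1, x1, y2, x2] in crop_and_resize's normalized form,
    where 0.0 is the first feature row/column and 1.0 the last one.
    """
    height = (feat_h - 1.0) * feat_stride
    width = (feat_w - 1.0) * feat_stride
    return np.stack([rois[:, 2] / height, rois[:, 1] / width,
                     rois[:, 4] / height, rois[:, 3] / width], axis=1)


def bilinear_crop(feat, box, size):
    """Sample a (size, size, C) grid from feat (H, W, C) inside a normalized box."""
    h, w, c = feat.shape
    y1, x1, y2, x2 = box
    ys = np.linspace(y1, y2, size) * (h - 1)  # sample positions in feature coords
    xs = np.linspace(x1, x2, size) * (w - 1)
    out = np.empty((size, size, c))
    for i, y in enumerate(ys):
        for j, x in enumerate(xs):
            y0, x0 = int(np.floor(y)), int(np.floor(x))
            dy, dx = y - y0, x - x0
            # Clamp to the border (TF would use extrapolation_value instead).
            r0, r1 = np.clip([y0, y0 + 1], 0, h - 1)
            c0, c1 = np.clip([x0, x0 + 1], 0, w - 1)
            top = feat[r0, c0] * (1 - dx) + feat[r0, c1] * dx
            bottom = feat[r1, c0] * (1 - dx) + feat[r1, c1] * dx
            out[i, j] = top * (1 - dy) + bottom * dy
    return out


def max_pool_2x2(x):
    """2x2 max pool with stride 2 on an (S, S, C) array with even S."""
    s, _, c = x.shape
    return x.reshape(s // 2, 2, s // 2, 2, c).max(axis=(1, 3))


def crop_pool(feat, rois, pooling_size=7, feat_stride=16):
    """(H, W, C) feature map + (N, 5) rois -> (N, pooling_size, pooling_size, C)."""
    boxes = normalize_rois(rois, feat.shape[0], feat.shape[1], feat_stride)
    crops = [bilinear_crop(feat, box, pooling_size * 2) for box in boxes]
    return np.stack([max_pool_2x2(crop) for crop in crops])
```

Sampling at 2 * POOLING_SIZE and then max-pooling with stride 2 (the default stride of `slim.max_pool2d`) yields a POOLING_SIZE x POOLING_SIZE output per RoI, an approximation of classic RoI max pooling.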

Next comes the Fast-RCNN part of the network, which classifies each proposal and predicts a refined box for it. The code:

```python
def _region_classification(self, fc7, is_training, initializer, initializer_bbox):
    cls_score = slim.fully_connected(fc7, self._num_classes,
                                     weights_initializer=initializer,
                                     trainable=is_training,
                                     activation_fn=None, scope='cls_score')
    cls_prob = self._softmax_layer(cls_score, "cls_prob")
    cls_pred = tf.argmax(cls_score, axis=1, name="cls_pred")
    bbox_pred = slim.fully_connected(fc7, self._num_classes * 4,
                                     weights_initializer=initializer_bbox,
                                     trainable=is_training,
                                     activation_fn=None, scope='bbox_pred')

    self._predictions["cls_score"] = cls_score
    self._predictions["cls_pred"] = cls_pred
    self._predictions["cls_prob"] = cls_prob
    self._predictions["bbox_pred"] = bbox_pred

    return cls_prob, bbox_pred
```
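`cls_prob` holds one probability per class and `bbox_pred` holds 4 deltas per class, so the deltas for class c are columns 4c to 4c + 3. Below is a minimal NumPy sketch of how these outputs could be turned into final detections for one class: decode the deltas against the RoIs with the (t_x, t_y, t_w, t_h) parameterization from the Faster R-CNN paper, drop low scores, then apply greedy NMS. The function names, the 0.05 score threshold and the 0.3 NMS threshold are hypothetical, the +1 pixel-width convention is an assumption, and any normalization of regression targets used during training is ignored. The RPN's proposal filtering mentioned in `_region_proposal` follows the same decode-then-NMS idea, applied to anchors instead of RoIs.

```python
import numpy as np


def decode_boxes(boxes, deltas):
    """Apply (dx, dy, dw, dh) deltas to (N, 4) boxes [x1, y1, x2, y2].

    Assumes the +1 inclusive-pixel width convention, so zero deltas return the input box.
    """
    widths = boxes[:, 2] - boxes[:, 0] + 1.0
    heights = boxes[:, 3] - boxes[:, 1] + 1.0
    ctr_x = boxes[:, 0] + 0.5 * widths
    ctr_y = boxes[:, 1] + 0.5 * heights
    dx, dy, dw, dh = deltas.T
    px, py = ctr_x + dx * widths, ctr_y + dy * heights   # shifted center
    pw, ph = widths * np.exp(dw), heights * np.exp(dh)    # log-space size change
    return np.stack([px - 0.5 * pw, py - 0.5 * ph,
                     px + 0.5 * pw - 1.0, py + 0.5 * ph - 1.0], axis=1)


def nms(boxes, scores, thresh):
    """Greedy NMS: keep the best box, drop others whose IoU with it exceeds thresh."""
    x1, y1, x2, y2 = boxes.T
    areas = (x2 - x1 + 1) * (y2 - y1 + 1)
    order = scores.argsort()[::-1]
    keep = []
    while order.size > 0:
        i, rest = order[0], order[1:]
        keep.append(int(i))
        iw = np.maximum(0.0, np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]) + 1)
        ih = np.maximum(0.0, np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]) + 1)
        inter = iw * ih
        iou = inter / (areas[i] + areas[rest] - inter)
        order = rest[iou <= thresh]
    return keep


def detect_class(rois, cls_prob, bbox_pred, cls, score_thresh=0.05, nms_thresh=0.3):
    """Hypothetical helper: final boxes and scores for one class from the head outputs."""
    boxes = decode_boxes(rois[:, 1:5], bbox_pred[:, 4 * cls:4 * cls + 4])
    scores = cls_prob[:, cls]
    mask = scores > score_thresh
    boxes, scores = boxes[mask], scores[mask]
    keep = nms(boxes, scores, nms_thresh)
    return boxes[keep], scores[keep]
```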

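Finally, the loss. It consists of an RPN loss and an RCNN loss, each with a classification term and a box-regression term, and the whole network is trained jointly on their sum.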

```python
def _add_losses(self, sigma_rpn=3.0):
    with tf.variable_scope('LOSS_' + self._tag) as scope:
        # RPN, class loss
        rpn_cls_score = tf.reshape(self._predictions['rpn_cls_score_reshape'], [-1, 2])
        rpn_label = tf.reshape(self._anchor_targets['rpn_labels'], [-1])
        rpn_select = tf.where(tf.not_equal(rpn_label, -1))
        rpn_cls_score = tf.reshape(tf.gather(rpn_cls_score, rpn_select), [-1, 2])
        rpn_label = tf.reshape(tf.gather(rpn_label, rpn_select), [-1])
        rpn_cross_entropy = tf.reduce_mean(
            tf.nn.sparse_softmax_cross_entropy_with_logits(logits=rpn_cls_score, labels=rpn_label))

        # RPN, bbox loss
        rpn_bbox_pred = self._predictions['rpn_bbox_pred']
        rpn_bbox_targets = self._anchor_targets['rpn_bbox_targets']
        rpn_bbox_inside_weights = self._anchor_targets['rpn_bbox_inside_weights']
        rpn_bbox_outside_weights = self._anchor_targets['rpn_bbox_outside_weights']
        rpn_loss_box = self._smooth_l1_loss(rpn_bbox_pred, rpn_bbox_targets, rpn_bbox_inside_weights,
                                            rpn_bbox_outside_weights, sigma=sigma_rpn, dim=[1, 2, 3])

        # RCNN, class loss
        cls_score = self._predictions["cls_score"]
        label = tf.reshape(self._proposal_targets["labels"], [-1])
        cross_entropy = tf.reduce_mean(
            tf.nn.sparse_softmax_cross_entropy_with_logits(logits=cls_score, labels=label))

        # RCNN, bbox loss
        bbox_pred = self._predictions['bbox_pred']
        bbox_targets = self._proposal_targets['bbox_targets']
        bbox_inside_weights = self._proposal_targets['bbox_inside_weights']
        bbox_outside_weights = self._proposal_targets['bbox_outside_weights']
        loss_box = self._smooth_l1_loss(bbox_pred, bbox_targets, bbox_inside_weights, bbox_outside_weights)

        self._losses['cross_entropy'] = cross_entropy
        self._losses['loss_box'] = loss_box
        self._losses['rpn_cross_entropy'] = rpn_cross_entropy
        self._losses['rpn_loss_box'] = rpn_loss_box

        loss = cross_entropy + loss_box + rpn_cross_entropy + rpn_loss_box
        regularization_loss = tf.add_n(tf.losses.get_regularization_losses(), 'regu')
        self._losses['total_loss'] = loss + regularization_loss

        self._event_summaries.update(self._losses)

    return loss
```
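Two details of `_add_losses` are easy to miss: RPN anchors labeled -1 are excluded before the cross-entropy, and the box loss is a smooth L1 whose transition point depends on sigma (3.0 for the RPN here; for the RCNN head the helper's default is used, assumed below to be 1.0). With x = inside_weight * (pred - target), each element contributes 0.5 * sigma^2 * x^2 when |x| < 1 / sigma^2 and |x| - 0.5 / sigma^2 otherwise; the results are multiplied by the outside weights, summed over the box dimensions, and averaged. The NumPy sketch below illustrates both pieces under those assumptions; it is not the repository's `_smooth_l1_loss`.

```python
import numpy as np


def smooth_l1_loss(pred, targets, inside_w, outside_w, sigma=1.0, axis=(1,)):
    """Smooth L1 with transition at 1/sigma^2, summed over `axis`, averaged over the rest."""
    sigma_2 = sigma ** 2
    diff = inside_w * (pred - targets)  # inside weights zero out entries without a target
    abs_diff = np.abs(diff)
    per_elem = np.where(abs_diff < 1.0 / sigma_2,
                        0.5 * sigma_2 * diff ** 2,   # quadratic near zero
                        abs_diff - 0.5 / sigma_2)    # linear further out
    return np.mean(np.sum(outside_w * per_elem, axis=axis))


def sparse_ce_ignoring(logits, labels, ignore_label=-1):
    """Mean softmax cross-entropy over the entries whose label is not ignore_label."""
    keep = labels != ignore_label
    logits, labels = logits[keep], labels[keep]
    shifted = logits - logits.max(axis=1, keepdims=True)  # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -np.mean(log_probs[np.arange(labels.size), labels])
```

A larger sigma moves the switch to the linear regime closer to zero (1/9 for sigma = 3), so the RPN box loss behaves more like plain L1.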