Netty Study Notes

I. BIO

Server.java:

```java
package com.mashibing.io.bio;

import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.ServerSocket;
import java.net.Socket;

public class Server {
    public static void main(String[] args) throws IOException {
        ServerSocket ss = new ServerSocket();
        ss.bind(new InetSocketAddress("127.0.0.1", 8888));
        while (true) {
            Socket s = ss.accept(); // blocking: waits until a client connects
            new Thread(() -> handle(s)).start(); // one thread per connection
        }
    }

    static void handle(Socket s) {
        // try-with-resources closes the connection when handling is done
        try (Socket socket = s) {
            byte[] bytes = new byte[1024];
            int len = socket.getInputStream().read(bytes); // blocking: waits for data
            if (len == -1) {
                return; // client closed the connection without sending anything
            }
            System.out.println(new String(bytes, 0, len));
            socket.getOutputStream().write(bytes, 0, len); // echo the data back
            socket.getOutputStream().flush();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

Client.java:

```java
package com.mashibing.io.bio;

import java.io.IOException;
import java.net.Socket;

public class Client {
    public static void main(String[] args) throws IOException {
        try (Socket s = new Socket("127.0.0.1", 8888)) {
            s.getOutputStream().write("HelloServer".getBytes());
            s.getOutputStream().flush();
            System.out.println("write over, waiting for msg back...");
            byte[] bytes = new byte[1024];
            int len = s.getInputStream().read(bytes); // blocks until the server replies
            if (len != -1) {
                System.out.println(new String(bytes, 0, len));
            }
        }
    }
}
```

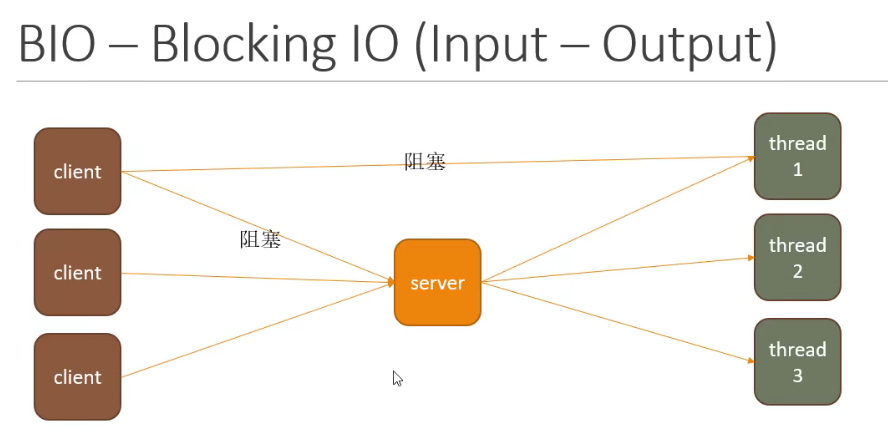

Blocking IO

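1. On the server side we create a ServerSocket and wait for clients to plug in and establish a connection with us. Think of the ServerSocket as a big panel with many sockets on it, one prepared for each client. For now we only consider TCP. The server listens on port 8888.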

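2. Why is it called blocking? Because ss.accept() is a blocking method: the server stops at ss.accept() and only continues once a client connects. When a client connects, a channel is established; Socket s is the channel used to talk to that client.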

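After ss.accept() returns, a new thread is started to handle the data. Why a new thread? Could we process the channel's data right after accept? No. The server has to keep waiting for connections; if it processes the data right after accept and that processing takes a long time, the next accept() call never runs and new clients cannot connect. That is why Blocking IO starts a new thread for every connection.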

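3. On the server side, the read() in handle's s.getInputStream().read is also blocking: if a client connects but never writes anything, the server's read gets no data and the thread stays stuck there; it continues only after data arrives and the CPU wakes it up. The write() in s.getOutputStream().write() can block too: if the client is not receiving, the write stalls once the send buffer is full. So Blocking IO is inefficient and handles concurrency poorly, and BIO is rarely used in real network programs.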
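To observe the blocking read() from point 3 without writing a separate client program, here is a minimal sketch (the class and method names are hypothetical). It connects a client that never writes and sets Socket.setSoTimeout so the server's read() gives up after a timeout instead of blocking forever. It assumes the loopback interface is available and lets the OS pick a free port.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.net.SocketTimeoutException;

class BlockingReadDemo {
    /**
     * Connects a client that never writes, then returns true if the server-side
     * read() was still blocked when the timeout expired.
     */
    static boolean readBlocksWithoutData(int timeoutMillis) {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        // Port 0 lets the OS choose a free port.
        try (ServerSocket ss = new ServerSocket(0, 50, loopback);
             Socket client = new Socket(loopback, ss.getLocalPort()); // connects, writes nothing
             Socket s = ss.accept()) {
            s.setSoTimeout(timeoutMillis); // read() gives up after this many ms
            try {
                s.getInputStream().read(); // blocks: no data has been sent
                return false;
            } catch (SocketTimeoutException e) {
                return true; // read() was still waiting when the timeout fired
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("read() still blocked after 200 ms: " + readBlocksWithoutData(200));
    }
}
```

Without setSoTimeout the read() call would wait indefinitely, which is why a BIO server needs a thread for every connection it serves.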

Use cases:

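The BIO model is inefficient, but that does not make it useless: if you are sure there will be very few clients, this model is simple and very convenient.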

II. NIO

1) Single-threaded model:

Server.java:

```java
package com.mashibing.io.nio;

import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.Iterator;
import java.util.Set;

/**
 * Single-threaded NIO server
 */
public class Server {
    public static void main(String[] args) throws IOException {
        ServerSocketChannel ssc = ServerSocketChannel.open();
        ssc.socket().bind(new InetSocketAddress("127.0.0.1", 8888));
        ssc.configureBlocking(false); // non-blocking mode: this is what makes it the non-blocking model
        System.out.println("Server started, Listening on : " + ssc.getLocalAddress());

        Selector selector = Selector.open();
        ssc.register(selector, SelectionKey.OP_ACCEPT); // interested in "a client wants to connect"

        while (true) {
            selector.select(); // blocks until at least one registered event is ready
            Set<SelectionKey> keys = selector.selectedKeys();
            Iterator<SelectionKey> it = keys.iterator();
            while (it.hasNext()) {
                SelectionKey key = it.next();
                it.remove(); // remove it, or it would be handled again in the next round
                handle(key);
            }
        }
    }

    static void handle(SelectionKey key) {
        if (key.isAcceptable()) { // acceptable: a client wants to connect
            try {
                ServerSocketChannel ssc = (ServerSocketChannel) key.channel();
                SocketChannel sc = ssc.accept();
                sc.configureBlocking(false);
                sc.register(key.selector(), SelectionKey.OP_READ);
            } catch (IOException e) {
                e.printStackTrace();
            }
        } else if (key.isReadable()) {
            SocketChannel sc = null;
            try {
                sc = (SocketChannel) key.channel();
                ByteBuffer buffer = ByteBuffer.allocate(512);
                buffer.clear();
                int len = sc.read(buffer);
                if (len != -1) {
                    System.out.println(new String(buffer.array(), 0, len));
                }
                ByteBuffer bufferToWrite = ByteBuffer.wrap("HelloClient".getBytes());
                sc.write(bufferToWrite);
            } catch (IOException e) {
                e.printStackTrace();
            } finally {
                if (sc != null) {
                    try {
                        sc.close();
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                }
            }
        }
    }
}
```

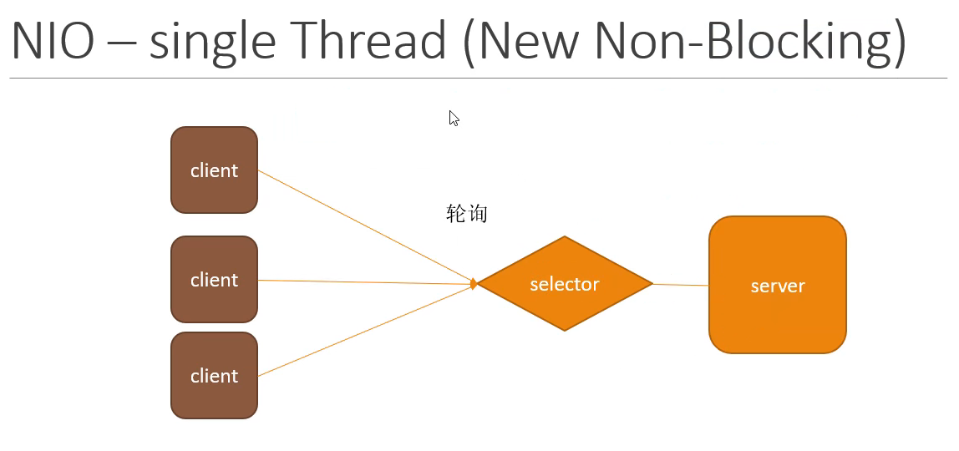

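1. NIO has a single-threaded model: a selector keeps watch over the server's big panel and checks it from time to time; when a client arrives, it accepts the connection. Besides handling connections, the selector also watches the channel of every connected client: is there data to read? Is there data to write? If so, the selector performs the read or write. The selector handles each event as it finds it, so a single thread can serve a great many clients.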

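2. ServerSocketChannel is NIO's wrapper around ServerSocket. It is only a logical wrapper over the same connection as in BIO, except that channels are bidirectional: you can both read from and write to them. ssc.register declares which events we are interested in, and the selector then polls for them. The first event of interest is accept, i.e. a client wants to connect.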

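selector.select() is also a blocking method: it polls and waits until at least one registered event has occurred before returning. As for keys: think of the serverSocket as a panel with many sockets. Each registration with the selector produces a key, and whenever an event occurs on a key, the selector puts that key into the Set returned by selectedKeys().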

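A key is essentially a listener the selector attaches to each socket. When an event occurs, the key is picked out by selectedKeys(); the selected keys are then handled one by one, and each must be removed after it is handled, otherwise it will be handled again.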
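The select / selectedKeys / remove cycle can be tried without any network code. This sketch (hypothetical names) uses a java.nio.channels.Pipe as a stand-in for a client channel, registers the pipe's source end for OP_READ, and runs one round of the same loop the server uses. It assumes the message fits in 64 bytes.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

class SelectorLoopDemo {
    /** What one select round read, and how many keys were left in selectedKeys(). */
    static final class Round {
        final String data;
        final int leftoverKeys;

        Round(String data, int leftoverKeys) {
            this.data = data;
            this.leftoverKeys = leftoverKeys;
        }
    }

    static Round selectOnce(String message) {
        try {
            Pipe pipe = Pipe.open(); // a local channel pair standing in for a client connection
            try (Selector selector = Selector.open();
                 Pipe.SourceChannel source = pipe.source();
                 Pipe.SinkChannel sink = pipe.sink()) {
                source.configureBlocking(false); // only non-blocking channels can be registered
                source.register(selector, SelectionKey.OP_READ);
                sink.write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));

                selector.select(); // blocks until at least one key is ready
                StringBuilder read = new StringBuilder();
                Iterator<SelectionKey> it = selector.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove(); // without this the key stays in the selected set
                    if (key.isReadable()) {
                        ByteBuffer buf = ByteBuffer.allocate(64);
                        ((Pipe.SourceChannel) key.channel()).read(buf);
                        buf.flip();
                        read.append(StandardCharsets.UTF_8.decode(buf));
                    }
                }
                return new Round(read.toString(), selector.selectedKeys().size());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

leftoverKeys comes out as 0 because every key was removed with it.remove(); dropping that line would leave the key in the set, and the next round would process it again.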

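3. In the NIO model every channel works together with a buffer. A buffer is essentially a byte array in memory; data read from a channel goes into that array, and data written to a channel comes out of it.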
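On top of its byte array, a ByteBuffer keeps a position and a limit, and flip()/clear() move them between "being filled" and "being drained". This small sketch (hypothetical names) traces both values through put, flip, get and clear. It assumes the text fits in 16 bytes.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

class ByteBufferDemo {
    /** Writes text into a buffer, reads it back, and records position/limit at each step. */
    static String trace(String text) {
        ByteBuffer buf = ByteBuffer.allocate(16); // position=0, limit=capacity=16
        StringBuilder log = new StringBuilder();
        buf.put(text.getBytes(StandardCharsets.UTF_8)); // filling: position moves forward
        log.append("put: pos=").append(buf.position()).append(" lim=").append(buf.limit()).append('\n');
        buf.flip(); // limit=position, position=0: switch to draining
        log.append("flip: pos=").append(buf.position()).append(" lim=").append(buf.limit()).append('\n');
        byte[] out = new byte[buf.remaining()];
        buf.get(out); // draining: position moves up to limit
        log.append("get: ").append(new String(out, StandardCharsets.UTF_8)).append('\n');
        buf.clear(); // position=0, limit=capacity; the bytes themselves are not erased
        log.append("clear: pos=").append(buf.position()).append(" lim=").append(buf.limit());
        return log.toString();
    }

    public static void main(String[] args) {
        System.out.println(trace("Hello"));
    }
}
```

For "Hello" this prints `put: pos=5 lim=16`, `flip: pos=0 lim=5`, `get: Hello`, `clear: pos=0 lim=16`. The single-threaded server reads `buffer.array()` directly with `len`, so it never needs flip(); flip() matters when the buffer itself is drained, for example with get() or channel.write(buffer).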

2) Thread-pool model:

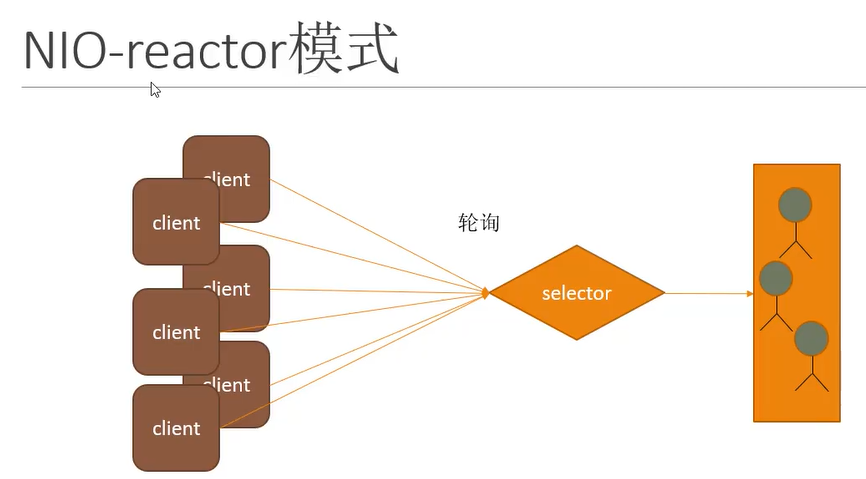

Reactor pattern:

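In 1) a single thread does everything. Here the selector is still registered on the panel and cares about whether clients connect, but once a client has connected, its subsequent read and write events are handed to other threads, so many clients are distributed over a thread pool. It is like a head steward (the selector) leading a crew of workers (the thread pool): the selector can be thought of as the boss, which does nothing but accept client connections, while the reading and writing after that is handed to the workers.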
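The boss/worker split can be sketched with the JDK alone. In this hypothetical single-round example a Pipe again stands in for a client connection: the selector thread (the boss) notices the data is readable, clears OP_READ from the key's interest set, and hands the key to a pool thread (a worker), which reads the data, re-enables OP_READ and calls wakeup(). It follows the same steps as PoolServer below, but it is only an illustration, not a full server.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

class ReactorHandoffDemo {
    /** One round: the boss selects, a pooled worker reads; returns what the worker read (null on timeout). */
    static String dispatchOnce(String message) {
        ExecutorService workers = Executors.newFixedThreadPool(2); // the "workers"
        BlockingQueue<String> results = new LinkedBlockingQueue<>();
        try {
            Pipe pipe = Pipe.open(); // stands in for one client connection
            try (Selector boss = Selector.open();
                 Pipe.SourceChannel source = pipe.source();
                 Pipe.SinkChannel sink = pipe.sink()) {
                source.configureBlocking(false);
                source.register(boss, SelectionKey.OP_READ);
                sink.write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));

                boss.select(); // boss thread: wait for a ready key
                Iterator<SelectionKey> it = boss.selectedKeys().iterator();
                while (it.hasNext()) {
                    SelectionKey key = it.next();
                    it.remove();
                    if (key.isReadable()) {
                        // Stop watching OP_READ so the boss does not hand this key out twice.
                        key.interestOps(key.interestOps() & ~SelectionKey.OP_READ);
                        workers.execute(() -> {
                            try {
                                ByteBuffer buf = ByteBuffer.allocate(1024);
                                ((Pipe.SourceChannel) key.channel()).read(buf);
                                buf.flip();
                                String text = StandardCharsets.UTF_8.decode(buf).toString();
                                // Re-arm OP_READ and wake the boss so a blocked select() sees the change.
                                key.interestOps(key.interestOps() | SelectionKey.OP_READ);
                                key.selector().wakeup();
                                results.add(text);
                            } catch (IOException e) {
                                results.add("error: " + e);
                            }
                        });
                    }
                }
                return results.poll(5, TimeUnit.SECONDS); // wait for the worker's result
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            workers.shutdown();
        }
    }
}
```

Clearing OP_READ before dispatching is the important design choice: otherwise the boss would keep seeing the key as readable on every select() and could hand the same channel to several workers at once.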

PoolServer.java:

```java
package com.mashibing.io.nio;

import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.Iterator;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class PoolServer {
    ExecutorService pool = Executors.newFixedThreadPool(50); // 50 workers in the pool
    private Selector selector;

    public static void main(String[] args) throws IOException {
        PoolServer server = new PoolServer();
        server.initServer(8000);
        server.listen();
    }

    public void initServer(int port) throws IOException {
        ServerSocketChannel serverChannel = ServerSocketChannel.open();
        serverChannel.configureBlocking(false);
        serverChannel.socket().bind(new InetSocketAddress(port));
        this.selector = Selector.open();
        serverChannel.register(selector, SelectionKey.OP_ACCEPT);
        System.out.println("Server started successfully!");
    }

    public void listen() throws IOException {
        // poll the selector
        while (true) {
            selector.select();
            Iterator<SelectionKey> iterator = this.selector.selectedKeys().iterator();
            while (iterator.hasNext()) {
                SelectionKey key = iterator.next();
                iterator.remove();
                if (key.isAcceptable()) {
                    ServerSocketChannel server = (ServerSocketChannel) key.channel();
                    SocketChannel channel = server.accept();
                    channel.configureBlocking(false);
                    channel.register(this.selector, SelectionKey.OP_READ);
                } else if (key.isReadable()) {
                    // stop watching OP_READ while a worker handles this channel
                    key.interestOps(key.interestOps() & (~SelectionKey.OP_READ));
                    pool.execute(new ThreadHandlerChannel(key));
                }
            }
        }
    }
}

// Runs on a pool thread, so it only needs to be a Runnable
class ThreadHandlerChannel implements Runnable {
    private final SelectionKey key;

    ThreadHandlerChannel(SelectionKey key) {
        this.key = key;
    }

    @Override
    public void run() {
        SocketChannel channel = (SocketChannel) key.channel();
        ByteBuffer buffer = ByteBuffer.allocate(1024);
        ByteArrayOutputStream baos = new ByteArrayOutputStream();
        try {
            int size = 0;
            while ((size = channel.read(buffer)) > 0) {
                buffer.flip();
                baos.write(buffer.array(), 0, size);
                buffer.clear();
            }
            baos.close();
            byte[] content = baos.toByteArray();
            ByteBuffer writeBuffer = ByteBuffer.allocate(content.length);
            writeBuffer.put(content);
            writeBuffer.flip();
            channel.write(writeBuffer); // echo the data back
            if (size == -1) {
                channel.close(); // the client closed the connection
            } else {
                // done: watch OP_READ again and wake the selector so it picks up the change
                key.interestOps(key.interestOps() | SelectionKey.OP_READ);
                key.selector().wakeup();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

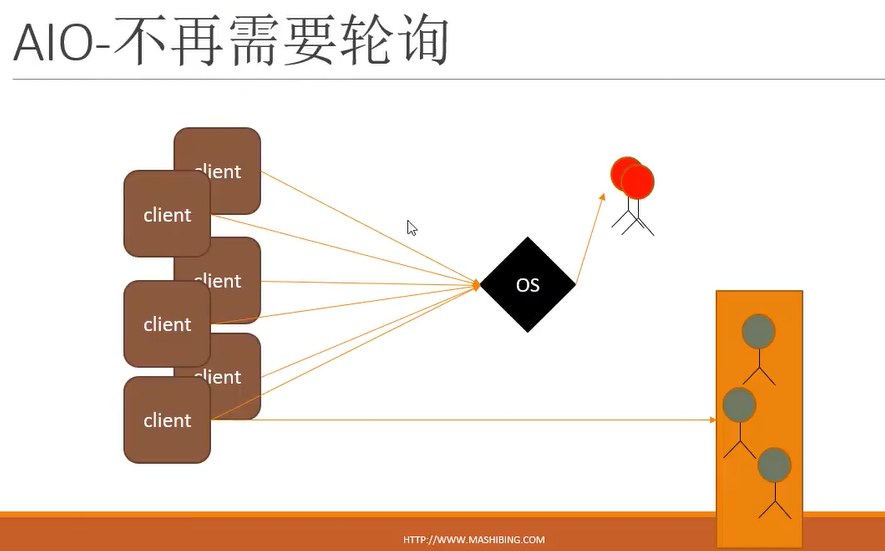

III. AIO

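NIO has one unavoidable step: polling. You have to keep actively checking whether anything has happened. Could you instead be notified when something happens? That is possible: the operating system (the kernel) notifies you. This model is AIO.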

A: asynchronous

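AIO: when a client wants to connect, the connection is handled by the operating system; only once the OS has connected the client does it send the head steward a message that a client has arrived and hand it over. The steward only deals with connections and simply waits; it does not need to poll. After the steward has connected the client, the established channel is handed to the corresponding worker, which handles the reads and writes on it.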

1) Single-threaded AIO:

Server.java:

```java
package com.mashibing.io.aio;

import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousServerSocketChannel;
import java.nio.channels.AsynchronousSocketChannel;
import java.nio.channels.CompletionHandler;

public class Server {
    public static void main(String[] args) throws Exception {
        final AsynchronousServerSocketChannel serverChannel = AsynchronousServerSocketChannel.open()
                .bind(new InetSocketAddress(8888));

        serverChannel.accept(null, new CompletionHandler<AsynchronousSocketChannel, Object>() {
            @Override
            public void completed(AsynchronousSocketChannel client, Object attachment) {
                serverChannel.accept(null, this); // re-arm: accept the next client
                try {
                    System.out.println(client.getRemoteAddress());
                    ByteBuffer buffer = ByteBuffer.allocate(1024);
                    client.read(buffer, buffer, new CompletionHandler<Integer, ByteBuffer>() {
                        @Override
                        public void completed(Integer result, ByteBuffer attachment) {
                            if (result == -1) {
                                return; // the client closed the connection without sending data
                            }
                            attachment.flip();
                            System.out.println(new String(attachment.array(), 0, result));
                            client.write(ByteBuffer.wrap("HelloClient".getBytes()));
                        }

                        @Override
                        public void failed(Throwable exc, ByteBuffer attachment) {
                            exc.printStackTrace();
                        }
                    });
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }

            @Override
            public void failed(Throwable exc, Object attachment) {
                exc.printStackTrace();
            }
        });

        while (true) { // accept() returned immediately; keep main from exiting
            Thread.sleep(1000);
        }
    }
}
```

Code analysis:

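BIO calls it ServerSocket, NIO calls it ServerSocketChannel, AIO calls it AsynchronousServerSocketChannel. Whatever the name, it is essentially the same panel: open() opens the panel, and it is bound to port 8888, ready to accept client connections.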

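serverChannel.accept(): in BIO, accept blocked until a client connected and was handled before execution could continue. Here it no longer blocks; the call returns immediately. So main must be kept from exiting; this example simply uses while(true) (a CountDownLatch would also work).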

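When a client connects, the CompletionHandler handles it; this is essentially the Observer pattern. completed means the client has connected; failed means the connection failed. Note that completed first calls serverChannel.accept(null, this) again: each accept() call accepts only one connection, so without it the server would only ever accept the first client.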
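Here is a sketch of the CountDownLatch alternative to while(true). It starts an AIO echo server on a free loopback port, drives it with an AsynchronousSocketChannel client, and waits on a latch that the server's completion handlers count down. The names are hypothetical, and it assumes the short message arrives in a single read, which is typical on loopback but not guaranteed by TCP.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousServerSocketChannel;
import java.nio.channels.AsynchronousSocketChannel;
import java.nio.channels.CompletionHandler;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

class AioLatchDemo {
    private static void closeQuietly(AsynchronousSocketChannel ch) {
        try {
            ch.close();
        } catch (IOException ignored) {
            // nothing useful to do in a demo
        }
    }

    /** Starts an AIO echo server on a free loopback port, sends one message, returns the echo. */
    static String echoOnce(String message) {
        CountDownLatch served = new CountDownLatch(1); // counted down once the server is done
        try (AsynchronousServerSocketChannel server = AsynchronousServerSocketChannel.open()
                .bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0))) {
            server.accept(null, new CompletionHandler<AsynchronousSocketChannel, Void>() {
                @Override
                public void completed(AsynchronousSocketChannel ch, Void att) {
                    ByteBuffer buf = ByteBuffer.allocate(1024);
                    ch.read(buf, buf, new CompletionHandler<Integer, ByteBuffer>() {
                        @Override
                        public void completed(Integer n, ByteBuffer b) {
                            b.flip(); // echo back exactly the bytes that were read
                            ch.write(b, null, new CompletionHandler<Integer, Void>() {
                                @Override
                                public void completed(Integer written, Void a) {
                                    closeQuietly(ch);
                                    served.countDown();
                                }

                                @Override
                                public void failed(Throwable exc, Void a) {
                                    closeQuietly(ch);
                                    served.countDown();
                                }
                            });
                        }

                        @Override
                        public void failed(Throwable exc, ByteBuffer b) {
                            closeQuietly(ch);
                            served.countDown();
                        }
                    });
                }

                @Override
                public void failed(Throwable exc, Void att) {
                    served.countDown();
                }
            });

            // Client side: each Future.get() waits for one asynchronous operation.
            try (AsynchronousSocketChannel client = AsynchronousSocketChannel.open()) {
                client.connect(server.getLocalAddress()).get(5, TimeUnit.SECONDS);
                client.write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8))).get(5, TimeUnit.SECONDS);
                ByteBuffer reply = ByteBuffer.allocate(1024);
                client.read(reply).get(5, TimeUnit.SECONDS);
                served.await(5, TimeUnit.SECONDS); // wait here instead of while (true) { sleep }
                reply.flip();
                return StandardCharsets.UTF_8.decode(reply).toString();
            }
        } catch (IOException | ExecutionException | TimeoutException e) {
            throw new IllegalStateException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

Compared with while(true), the latch lets the waiting thread continue as soon as the asynchronous work has finished, and the timeout keeps it from hanging forever if a handler never fires.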

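client.read() is non-blocking as well: it returns immediately, and the inner CompletionHandler's completed is called once the data has been read.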

2) AIO with a thread pool:

ServerWithThreadGroup.java:

```java
package com.mashibing.io.aio;

import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousChannelGroup;
import java.nio.channels.AsynchronousServerSocketChannel;
import java.nio.channels.AsynchronousSocketChannel;
import java.nio.channels.CompletionHandler;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class ServerWithThreadGroup {
    public static void main(String[] args) throws Exception {
        ExecutorService executorService = Executors.newCachedThreadPool();
        // Completion handlers of channels in this group run on threads from executorService
        AsynchronousChannelGroup threadGroup = AsynchronousChannelGroup.withCachedThreadPool(executorService, 1);
        final AsynchronousServerSocketChannel serverChannel = AsynchronousServerSocketChannel.open(threadGroup)
                .bind(new InetSocketAddress(8888));

        serverChannel.accept(null, new CompletionHandler<AsynchronousSocketChannel, Object>() {
            @Override
            public void completed(AsynchronousSocketChannel client, Object attachment) {
                serverChannel.accept(null, this); // re-arm: accept the next client
                try {
                    System.out.println(client.getRemoteAddress());
                    ByteBuffer buffer = ByteBuffer.allocate(1024);
                    client.read(buffer, buffer, new CompletionHandler<Integer, ByteBuffer>() {
                        @Override
                        public void completed(Integer result, ByteBuffer attachment) {
                            if (result == -1) {
                                return; // the client closed the connection without sending data
                            }
                            attachment.flip();
                            System.out.println(new String(attachment.array(), 0, result));
                            client.write(ByteBuffer.wrap("HelloClient".getBytes()));
                        }

                        @Override
                        public void failed(Throwable exc, ByteBuffer attachment) {
                            exc.printStackTrace();
                        }
                    });
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }

            @Override
            public void failed(Throwable exc, Object attachment) {
                exc.printStackTrace();
            }
        });

        while (true) { // keep main from exiting
            Thread.sleep(1000);
        }
    }
}
```

IV. Netty:

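Netty:

It is a wrapper over NIO and BIO. BIO can also be used through it, but rarely is.

It wraps NIO so that the API looks like AIO (asynchronous and event-driven).

On Linux, AIO is not necessarily more efficient than NIO: both are built on the same underlying mechanism (epoll), and AIO just adds one more layer of wrapping.

Windows servers are relatively rare, so Netty has not made Windows a focus.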

pom.xml configuration:

```xml
<!-- https://mvnrepository.com/artifact/io.netty/netty-all -->
<dependency>
    <groupId>io.netty</groupId>
    <artifactId>netty-all</artifactId>
    <version>4.1.72.Final</version>
</dependency>
```

Server side, HelloNetty.java:

```java
package com.mashibing.netty;

import io.netty.bootstrap.ServerBootstrap;
import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelFutureListener;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.util.CharsetUtil;

public class HelloNetty {
    public static void main(String[] args) {
        new NettyServer(8888).serverStart();
    }
}

class NettyServer {
    int port = 8888;

    public NettyServer(int port) {
        this.port = port;
    }

    public void serverStart() {
        EventLoopGroup bossGroup = new NioEventLoopGroup();   // accepts connections
        EventLoopGroup workerGroup = new NioEventLoopGroup(); // handles I/O on accepted connections
        ServerBootstrap b = new ServerBootstrap();
        b.group(bossGroup, workerGroup)
                .channel(NioServerSocketChannel.class)
                .childHandler(new ChannelInitializer<SocketChannel>() {
                    @Override
                    protected void initChannel(SocketChannel ch) throws Exception {
                        ch.pipeline().addLast(new Handler());
                    }
                });
        try {
            ChannelFuture f = b.bind(port).sync();
            f.channel().closeFuture().sync(); // block until the server channel is closed
        } catch (InterruptedException e) {
            e.printStackTrace();
        } finally {
            workerGroup.shutdownGracefully();
            bossGroup.shutdownGracefully();
        }
    }
}

class Handler extends ChannelInboundHandlerAdapter {
    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        System.out.println("server: channel read");
        ByteBuf buf = (ByteBuf) msg;
        System.out.println(buf.toString(CharsetUtil.UTF_8));
        // Echo the message back (Netty releases msg after writing it), then close once the write completes
        ctx.writeAndFlush(msg).addListener(ChannelFutureListener.CLOSE);
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        cause.printStackTrace();
        ctx.close();
    }
}
```

Client side:

```java
package com.mashibing.netty;

import io.netty.bootstrap.Bootstrap;
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelFutureListener;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;
import io.netty.util.CharsetUtil;
import io.netty.util.ReferenceCountUtil;

public class Client {
    public static void main(String[] args) {
        new Client().clientStart();
    }

    private void clientStart() {
        EventLoopGroup workers = new NioEventLoopGroup();
        Bootstrap b = new Bootstrap();
        b.group(workers)
                .channel(NioSocketChannel.class)
                .handler(new ChannelInitializer<SocketChannel>() {
                    @Override
                    protected void initChannel(SocketChannel ch) throws Exception {
                        System.out.println("channel initialized!");
                        ch.pipeline().addLast(new ClientHandler());
                    }
                });
        try {
            System.out.println("start to connect...");
            ChannelFuture f = b.connect("127.0.0.1", 8888).sync();
            f.channel().closeFuture().sync();
        } catch (InterruptedException e) {
            e.printStackTrace();
        } finally {
            workers.shutdownGracefully();
        }
    }
}

class ClientHandler extends ChannelInboundHandlerAdapter {
    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        System.out.println("channel is activated.");
        final ChannelFuture f = ctx.writeAndFlush(Unpooled.copiedBuffer("HelloNetty".getBytes()));
        f.addListener(new ChannelFutureListener() {
            public void operationComplete(ChannelFuture future) throws Exception {
                System.out.println("msg sent!");
            }
        });
    }

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        try {
            ByteBuf buf = (ByteBuf) msg;
            // decode the bytes; ByteBuf.toString() without a charset only describes the buffer
            System.out.println(buf.toString(CharsetUtil.UTF_8));
        } finally {
            ReferenceCountUtil.release(msg);
        }
    }
}
```

Code explanation:

bossGroup and workerGroup can be thought of as two thread pools: bossGroup plays the role of the head steward (the selector) in NIO, and workerGroup plays the workers.

ServerBootstrap is used to configure the server before it starts. In b.group(bossGroup, workerGroup), the first group handles client connections and the second group handles I/O after a connection has been established.

.channel() specifies the type of channel used for connections; here it is NioServerSocketChannel.

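.childHandler() means that whenever a client connects, its channel is given a handler to process it. ch.pipeline().addLast(new Handler()) adds a handler for this channel to the end of the channel's pipeline.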

initChannel(){}: as soon as a channel has been initialized, the handlers for that channel are added to it.
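To make the pipeline idea concrete, here is a toy model in plain Java. ToyPipeline and ToyHandler are hypothetical names and this is not Netty's API: handlers are called in the order they were added with addLast, and each one decides whether to pass the message on to the next (in Netty the forwarding call is ctx.fireChannelRead(msg)).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Toy model of a handler pipeline; hypothetical names, not Netty's API. */
class ToyPipeline {
    /** Hypothetical handler: receives a message and may forward it with next.accept(...). */
    interface ToyHandler {
        void channelRead(Object msg, Consumer<Object> next);
    }

    private final List<ToyHandler> handlers = new ArrayList<>();

    /** Appends a handler at the tail, like pipeline().addLast(...). */
    ToyPipeline addLast(ToyHandler handler) {
        handlers.add(handler);
        return this;
    }

    /** Delivers msg to the first handler; each handler decides whether to forward it. */
    void fireChannelRead(Object msg) {
        invoke(0, msg);
    }

    private void invoke(int index, Object msg) {
        if (index < handlers.size()) {
            handlers.get(index).channelRead(msg, next -> invoke(index + 1, next));
        }
    }

    public static void main(String[] args) {
        new ToyPipeline()
                .addLast((msg, next) -> next.accept(((String) msg).trim()))        // like a decoder
                .addLast((msg, next) -> next.accept(((String) msg).toUpperCase())) // business logic
                .addLast((msg, next) -> System.out.println("last handler got: " + msg))
                .fireChannelRead("  hello ");
    }
}
```

A handler that does not call next simply ends the chain for that message, which is also what happens in Netty when channelRead neither forwards nor passes the message on.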

How does our own Handler process the data?

By overriding channelRead and some of the other state methods. channelRead is called once the data on the channel has been read in, so you can use or parse it directly.

exceptionCaught handles exceptions raised on this channel; usually the channel should then be closed.

Client side:

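workers = new NioEventLoopGroup(): you can pass a number to the NioEventLoopGroup constructor to set how many threads (event loops) it uses. Without an argument the default is twice the number of available processors. The client needs only one group because it only opens an outgoing connection and has nothing to accept.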

--