【Real Time Rendering 1】

1、RTR is an introductory text. Official site: http://www.realtimerendering.com.

2、At around 6 fps, a sense of interactivity starts to grow.

An application displaying at 15 fps is certainly real-time; the user focuses on action and reaction.

There is a useful limit, however. From about 72 fps and up, differences in the display rate are effectively undetectable.

3、The plane π is said to divide the space into two half-spaces:

1) a positive half-space, where n · x + d > 0,

2) a negative half-space, where n · x + d < 0.

All other points are said to lie in the plane.
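The half-space test above can be sketched as a sign check on n · x + d. This is a minimal sketch: the function name `classify_point` and the tolerance `eps` are assumptions for illustration, not something the book defines.

```python
def classify_point(n, d, x, eps=1e-9):
    """Classify point x against the plane n . x + d = 0.

    eps is an assumed tolerance so that floating-point noise does not
    push points that lie in the plane into one of the half-spaces.
    """
    s = sum(ni * xi for ni, xi in zip(n, x)) + d
    if s > eps:
        return "positive"
    if s < -eps:
        return "negative"
    return "in-plane"


# Plane y = 1, written as n = (0, 1, 0), d = -1.
print(classify_point((0, 1, 0), -1, (0, 3, 0)))  # positive
print(classify_point((0, 1, 0), -1, (0, 0, 0)))  # negative
print(classify_point((0, 1, 0), -1, (5, 1, 2)))  # in-plane
```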

4、(x, y) is perpendicular to (-y, x).

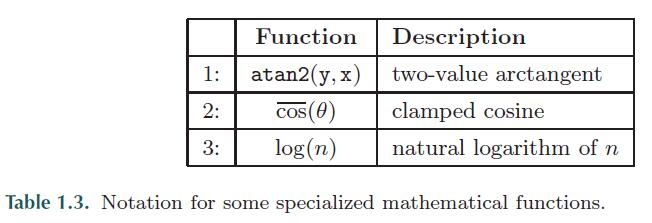
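A quick way to check the perpendicularity claim is to take the dot product, which is x·(-y) + y·x = 0 for any (x, y). The helper names `perp` and `dot` below are hypothetical.

```python
def perp(v):
    """Map (x, y) to (-y, x), a 90-degree counterclockwise rotation."""
    x, y = v
    return (-y, x)


def dot(a, b):
    """2D dot product."""
    return a[0] * b[0] + a[1] * b[1]


v = (3.0, 4.0)
print(perp(v))          # (-4.0, 3.0)
print(dot(v, perp(v)))  # 0.0
```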

5、-PI/2 < arctan(x) < PI/2, 0 <= atan2(y, x) < 2*PI. atan2(y, x) is commonly used to compute arctan(y/x); the extra argument avoids the division by zero when x = 0.
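As a small illustration, Python's `math.atan2` returns angles in (-PI, PI], so matching the [0, 2*PI) convention used in this note needs a wrap for negative results. The wrapper name `atan2_0_to_2pi` is an assumption for this sketch.

```python
import math


def atan2_0_to_2pi(y, x):
    """Shift math.atan2's (-pi, pi] result into the [0, 2*pi) convention."""
    a = math.atan2(y, x)
    return a + 2.0 * math.pi if a < 0.0 else a


# arctan(y / x) fails when x == 0, but atan2 still returns an angle.
try:
    math.atan(1.0 / 0.0)
except ZeroDivisionError:
    print("arctan(y/x) is undefined for x = 0")

print(atan2_0_to_2pi(1.0, 0.0))   # pi / 2, straight up
print(atan2_0_to_2pi(-1.0, 0.0))  # 3 * pi / 2, straight down
```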

6、A chain is no stronger than its weakest link.

7、It is possible to have several model transforms associated with a single model. This allows several copies (called instances) of the same model to have different locations, orientations, and sizes in the same scene, without requiring replication of the basic geometry.
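Instancing can be sketched in 2D as one shared vertex list plus a list of model transforms. The triangle data and the `model_transform` helper are hypothetical, and the scale-rotate-translate order is an assumption of this sketch.

```python
import math

# One shared copy of the geometry (hypothetical 2D triangle).
TRIANGLE = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]


def model_transform(scale, angle, tx, ty):
    """Return a function that scales, then rotates, then translates a point."""
    c, s = math.cos(angle), math.sin(angle)

    def apply(p):
        x, y = p[0] * scale, p[1] * scale
        return (c * x - s * y + tx, s * x + c * y + ty)

    return apply


# Two instances: the same vertex list, different model transforms.
instances = [
    model_transform(1.0, 0.0, 5.0, 0.0),
    model_transform(2.0, math.pi / 2, 0.0, 3.0),
]

# World-space vertices per instance; TRIANGLE itself is never duplicated.
world = [[xf(p) for p in TRIANGLE] for xf in instances]
print(world[0])  # [(5.0, 0.0), (6.0, 0.0), (5.0, 1.0)]
```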

Some of the tasks traditionally performed on the CPU include collision detection, global acceleration algorithms, animation, physics simulation, and many others.

8、Geometry stage

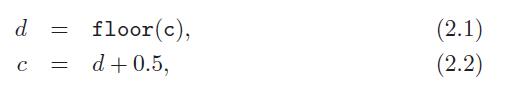

Primitives are clipped against the unit cube.

How do integer and floating-point values relate to pixel (and texture) coordinates?

DirectX 9 and its predecessors use a coordinate system where 0.0 is the center of the pixel, meaning that a range of pixels [0, 9] covers a span from [−0.5, 9.5).

OpenGL has always placed the center of the pixel at 0.5, and DirectX 10 and its successors use the same scheme, so a range of pixels [0, 9] covers a span from [0.0, 10.0).

OpenGL places the origin at the lower-left corner; DirectX places it at the upper-left corner.

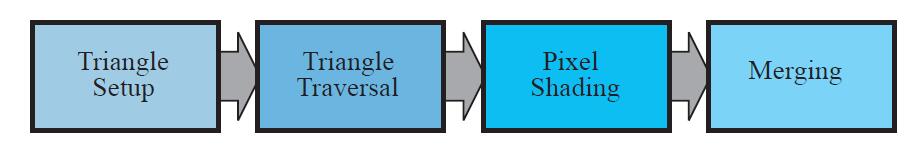
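The two pixel-center conventions can be compared with a short sketch. The constant and function names are assumptions made for illustration; only the offsets 0.0 and 0.5 come from the text.

```python
import math

DX9 = 0.0      # DirectX 9 and earlier: pixel centers at integer coordinates
GL_DX10 = 0.5  # OpenGL, DirectX 10 and later: pixel centers at i + 0.5


def pixel_center(i, center_offset):
    """Continuous coordinate of the center of integer pixel i."""
    return i + center_offset


def pixel_index(c, center_offset):
    """Integer pixel whose half-open extent contains coordinate c."""
    return math.floor(c - center_offset + 0.5)


def span(first, last, center_offset):
    """Continuous [start, end) range covered by pixels first..last."""
    return (pixel_center(first, center_offset) - 0.5,
            pixel_center(last, center_offset) + 0.5)


print(span(0, 9, DX9))      # (-0.5, 9.5)
print(span(0, 9, GL_DX10))  # (0.0, 10.0)
```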

9、Rasterization stage

Finding which samples or pixels are inside a triangle is often called triangle traversal or scan conversion.

Partially transparent primitives cannot be rendered in just any order.
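The order dependence is easy to see with a single color channel. The sketch below assumes the common "over" blend (source alpha times source, plus one minus source alpha times destination), which is an assumption here because the note does not name a blend mode; the colors and alphas are made up.

```python
def over(src_color, src_alpha, dst_color):
    """Blend a source fragment over the destination (single channel)."""
    return src_alpha * src_color + (1.0 - src_alpha) * dst_color


background = 0.0
a = (1.0, 0.5)  # (color, alpha), hypothetical fragment A
b = (0.2, 0.5)  # (color, alpha), hypothetical fragment B

a_then_b = over(*b, over(*a, background))  # draw A first, then B on top
b_then_a = over(*a, over(*b, background))  # draw B first, then A on top

print(a_then_b, b_then_a)  # the two draw orders give different colors
```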

9、The stencil buffer typically contains eight bits per pixel.

All of these functions (alpha blending, alpha test, stencil buffer) at the end of the pipeline are called raster operations (ROP) or blend operations.

Accumulation buffer: a set of images showing an object in motion can be accumulated and averaged in order to generate motion blur. Other effects that can be generated include depth of field, antialiasing, and soft shadows.

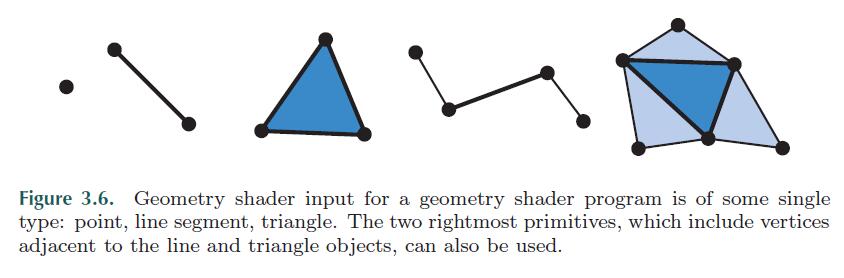
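The accumulate-and-average idea can be sketched on a tiny one-dimensional "image". The frame data is hypothetical, and a real accumulation buffer works on full color images in hardware; this only shows the arithmetic.

```python
def accumulate(frames):
    """Average equally sized frames (lists of pixel intensities)."""
    accum = [0.0] * len(frames[0])
    for frame in frames:
        for i, value in enumerate(frame):
            accum[i] += value  # sum into the accumulation buffer
    return [total / len(frames) for total in accum]


# A bright one-pixel object moving right across a four-pixel scanline.
frames = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

blurred = accumulate(frames)
print(blurred)  # [0.25, 0.25, 0.25, 0.25]
```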

10、The geometry shader is an optional, fully programmable stage that operates on the vertices of a primitive (point, line or triangle). It can be used to perform per-primitive shading operations, to destroy primitives, or to create new ones.

【3.2 The Programmable Shader Stage】

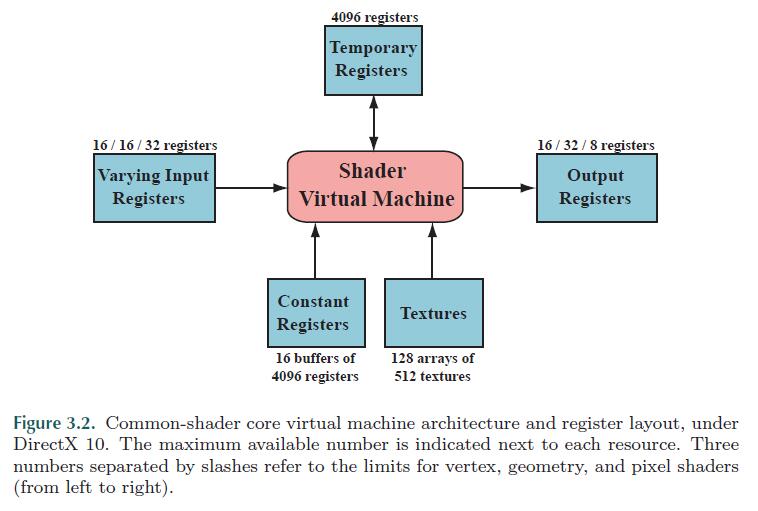

11、We differentiate in this book between the common-shader core, the functional description seen by the applications programmer, and unified shaders, a GPU architecture that maps well to this core.

1) common-shader core: what the programmer sees; compare with ShaderLab.

2) unified shaders: the GPU architecture that actually executes the shaders.

Shaders are compiled to a machine-independent assembly language, also called the intermediate language (IL). This assembly language is converted to the actual machine language in a separate step, usually in the drivers.

12、Each programmable shader stage has two types of inputs: uniform inputs, with values that remain constant throughout a draw call (but can be changed between draw calls), and varying inputs, which are different for each vertex or pixel processed by the shader.

The number of available constant registers is much larger than the number of registers available for varying inputs or outputs.
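The uniform/varying split can be mimicked on the CPU as a rough sketch: uniforms are fixed for one draw call, while each vertex supplies its own varying input. The `vertex_shader` and `draw_call` functions and the uniform names are hypothetical, not a real graphics API.

```python
def vertex_shader(position, uniforms):
    """Hypothetical per-vertex program: scale, then offset the position."""
    s = uniforms["scale"]
    ox, oy = uniforms["offset"]
    return (position[0] * s + ox, position[1] * s + oy)


def draw_call(vertices, uniforms):
    # Every invocation in this draw call sees the same uniform values;
    # only the varying input (the vertex position) differs.
    return [vertex_shader(v, uniforms) for v in vertices]


vertices = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]

# Uniforms may change between draw calls, but not within one.
first = draw_call(vertices, {"scale": 2.0, "offset": (1.0, 1.0)})
second = draw_call(vertices, {"scale": 1.0, "offset": (0.0, 0.0)})

print(first)   # [(1.0, 1.0), (3.0, 1.0), (1.0, 3.0)]
print(second)  # [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
```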

13、Shaders support two types of flow control. Static flow control branches are based on the values of uniform inputs. This means that the flow of the code is constant over the draw call.

Dynamic flow control is based on the values of varying inputs. This is much more powerful than static flow control but is more costly, especially if the code flow changes erratically between shader invocations.

14、A shader is evaluated on a number of vertices or pixels at a time. If the flow selects the “if” branch for some elements and the “else” branch for others, both branches must be evaluated for all elements.

In other words, within one draw, if some pixels take the if branch while others take the else branch, both branches are executed for all of those pixels.
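The cost of divergent branching can be modeled with a simplified sketch: a batch of elements runs in lockstep, so each branch that any element needs costs one pass over the whole batch. The batch model, the `run_batch` name, and the arithmetic in each branch are assumptions for illustration; real hardware details differ.

```python
def run_batch(values, threshold):
    """Simulate lockstep execution of an if/else over one batch.

    Returns (results, branch_passes), where branch_passes counts how many
    branches the whole batch had to step through.
    """
    mask = [v > threshold for v in values]
    results = [None] * len(values)
    branch_passes = 0

    if any(mask):  # at least one element needs the "if" branch
        branch_passes += 1
        for i, v in enumerate(values):
            if mask[i]:  # other elements are masked off but still wait
                results[i] = v * 2.0

    if not all(mask):  # at least one element needs the "else" branch
        branch_passes += 1
        for i, v in enumerate(values):
            if not mask[i]:
                results[i] = v + 10.0

    return results, branch_passes


print(run_batch([1.0, 2.0, 3.0, 4.0], 0.0))  # coherent: one pass
print(run_batch([1.0, 2.0, 3.0, 4.0], 2.0))  # divergent: two passes
```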

【3.3 The Evolution of Programmable Shading】

15、In early 2001, NVIDIA’s GeForce 3 was the first GPU to support programmable vertex shaders [778], exposed through DirectX 8.0. DirectX defined the concept of a Shader Model to distinguish hardware with different shader capabilities. The GeForce 3 supported vertex shader model 1.1 and pixel shader model 1.1.

16、The year 2002 saw the release of DirectX 9.0 including Shader Model 2.0, which featured truly programmable vertex and pixel shaders. DirectX 9.0 also included a new shader programming language called HLSL (High Level Shading Language). HLSL was developed by Microsoft in collaboration with NVIDIA, which released a cross-platform variant called Cg.

17、Shader Model 3.0 was introduced in 2004. When a new generation of game consoles was introduced in late 2005 (Microsoft’s Xbox 360) and 2006 (Sony Computer Entertainment’s PLAYSTATION® 3 system), they were equipped with Shader Model 3.0–level GPUs. The fixed-function pipeline is not entirely dead: Nintendo’s Wii console shipped in late 2006 with a fixed-function GPU. However, this is almost certainly the last console of this type.

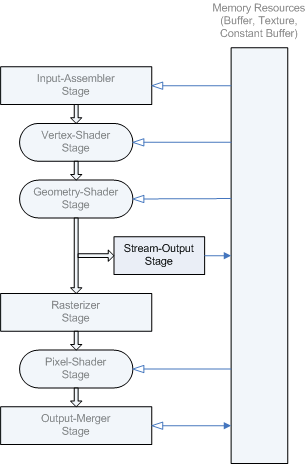

18、In 2007, Shader Model 4.0 introduced several major features, such as the geometry shader and stream output.

19、Besides new versions of existing APIs, new programming models such as NVIDIA’s CUDA [211] and AMD’s CTM [994] have been targeted at non-graphics applications.

【3.5 The Geometry Shader】

20、The geometry shader was added to the hardware-accelerated graphics pipeline with the release of DirectX 10 in late 2006, and its use is optional. The input to the geometry shader is a single object and its associated vertices. The object is typically a triangle in a mesh, a line segment, or simply a point. In addition, three extra vertices outside of a triangle can be passed in, and the two adjacent vertices on a polyline can be used. The geometry shader processes this primitive and outputs zero or more primitives. The geometry shader is guaranteed to output results from primitives in the same order as they are input.

The geometry shader program is set to input one type of object and output one type of object, and these types do not have to match.
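The behavior described above (one primitive in, zero or more primitives out, with input and output types allowed to differ) can be sketched on the CPU. The program below is hypothetical: it takes triangles, drops degenerate ones, and emits one point per surviving triangle.

```python
def geometry_shader(triangle):
    """Hypothetical program: one triangle in, zero or one point out."""
    (x0, y0), (x1, y1), (x2, y2) = triangle
    area = 0.5 * abs((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0))
    if area == 0.0:
        return []  # destroy degenerate primitives: zero outputs
    centroid = ((x0 + x1 + x2) / 3.0, (y0 + y1 + y2) / 3.0)
    return [centroid]  # output type (point) differs from input (triangle)


def run_stage(triangles):
    """Run the program per primitive, keeping results in input order."""
    out = []
    for tri in triangles:
        out.extend(geometry_shader(tri))
    return out


triangles = [
    [(0.0, 0.0), (3.0, 0.0), (0.0, 3.0)],
    [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)],  # degenerate: collinear vertices
    [(3.0, 3.0), (6.0, 3.0), (3.0, 6.0)],
]

print(run_stage(triangles))  # [(1.0, 1.0), (4.0, 4.0)]
```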

【3.5.1 Stream Output】

21、The stream output stage was introduced in Shader Model 4.0.

22、In SM 2.0 and on, a pixel shader can also discard incoming fragment data, i.e., generate no output. Such operations can cost performance, as optimizations normally performed by the GPU cannot then be used. See Section 18.3.7 for details.

Fog computation and alpha testing have moved from being merge operations to being pixel shader computations in SM 4.0.

23、The one case in which the pixel shader can access information for adjacent pixels (albeit indirectly) is the computation of gradient or derivative information.

The pixel shader has the ability to take any value and compute the amount by which it changes per pixel along the x and y screen axes.

That is, ddx / ddy.

The ability to access gradient information is a unique capability of the pixel shader, not shared by any of the other programmable shader stages.

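Only the pixel shader (ps) can access gradient information. Through these gradients, the ps gains indirect access to partial information about adjacent pixels.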
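A rough sketch of what ddx / ddy compute: the per-pixel change of a value along screen x and y. The sketch assumes the value is available for a 2x2 block of neighboring pixels and uses simple forward differences; the `quad_gradients` name and the block layout are assumptions, and actual hardware behavior may differ in detail.

```python
def quad_gradients(quad):
    """quad[row][col] holds one value per pixel of a 2x2 block.

    Returns (ddx, ddy): the change per pixel along screen x and screen y,
    taken as forward differences from the top-left pixel.
    """
    ddx = quad[0][1] - quad[0][0]  # neighbor one pixel to the right
    ddy = quad[1][0] - quad[0][0]  # neighbor one pixel down
    return ddx, ddy


def u(x, y):
    """Hypothetical interpolated value that varies linearly on screen."""
    return 0.25 * x + 0.5 * y


quad = [[u(0, 0), u(1, 0)],
        [u(0, 1), u(1, 1)]]

print(quad_gradients(quad))  # (0.25, 0.5)
```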