Installing Kubernetes v1.29 on openEuler 22.03 (LTS-SP3)

I. Environment overview

Host configuration

| Host | Specs | Role | OS version | IP |
| --- | --- | --- | --- | --- |
| master01 | 2 cores, 4 GB | master | openEuler 22.03 (LTS-SP3) | 192.168.0.111 |
| master02 | 2 cores, 4 GB | master | openEuler 22.03 (LTS-SP3) | 192.168.0.112 |
| master03 | 2 cores, 4 GB | master | openEuler 22.03 (LTS-SP3) | 192.168.0.113 |
| worker01 | 2 cores, 4 GB | worker | openEuler 22.03 (LTS-SP3) | 192.168.0.114 |
| worker02 | 2 cores, 4 GB | worker | openEuler 22.03 (LTS-SP3) | 192.168.0.115 |

Installed components

| Role | Components |
| --- | --- |
| master | kubelet, container runtime (docker, cri-dockerd), apiserver, controller-manager, scheduler, etcd, kube-proxy, calico |
| worker | kubelet, container runtime (docker, cri-dockerd), kube-proxy, coredns, calico |

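Deployment steps:

1. Install the chosen container runtime on every node: docker and cri-dockerd
2. Install kubeadm, kubelet, and kubectl on every node
3. Initialize the first control-plane node: kubeadm init
4. Join the other control-plane nodes and the worker nodes to the cluster created by the first control-plane node: kubeadm join
5. Deploy the chosen network plugin: calico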

II. Environment preparation

1. Configure hosts. Run on every host in the cluster.

cat >>/etc/hosts<<EOF
192.168.0.111 master01
192.168.0.112 master02
192.168.0.113 master03
192.168.0.114 worker01
192.168.0.115 worker02
EOF
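Because the heredoc above appends unconditionally, running it twice leaves duplicate entries. The following is a minimal sketch of an idempotent alternative; the helper name add_host_entry is hypothetical and not part of the original procedure.

```shell
# add_host_entry IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) unless NAME already
# appears as a hostname on a non-comment line.
add_host_entry() {
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    if awk -v n="$name" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit found ? 0 : 1 }' "$file" 2>/dev/null; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Intended use (as root), one call per host from the table above:
#   add_host_entry 192.168.0.111 master01
#   add_host_entry 192.168.0.112 master02
```

Passing a third argument points the helper at a scratch file, which makes it easy to try out before touching /etc/hosts.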

2. Set up passwordless SSH trust between the cluster hosts. Run on every host in the cluster.

ssh-keygen
ssh-copy-id 192.168.0.111
ssh-copy-id 192.168.0.112
ssh-copy-id 192.168.0.113
ssh-copy-id 192.168.0.114
ssh-copy-id 192.168.0.115

3. Disable the firewall and SELinux. Run on every host in the cluster.

systemctl stop firewalld && systemctl disable firewalld
setenforce 0
sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
sestatus

4. Disable the swap partition. Run on every host in the cluster.

sed -ri 's/^([^#].*swap.*)$/#\1/' /etc/fstab && grep swap /etc/fstab && swapoff -a && free -h
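The one-liner above does two things: it comments out swap entries in /etc/fstab and turns swap off for the running system. As a sketch, the two helpers below (hypothetical names) wrap the same sed expression so it can be tried on a copy of fstab first, and count active swap devices from /proc/swaps to confirm the result.

```shell
# comment_swap_entries FSTAB
# Comments out every non-comment line in FSTAB that mentions swap.
# Safe to run repeatedly: lines already starting with '#' are skipped.
comment_swap_entries() {
    sed -ri 's/^([^#].*swap.*)$/#\1/' "$1"
}

# count_active_swap [SWAPS_FILE]
# Prints the number of active swap devices. The file (default
# /proc/swaps) has one header line followed by one line per device.
count_active_swap() {
    awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}

# Intended use (as root):
#   cp /etc/fstab /tmp/fstab.test && comment_swap_entries /tmp/fstab.test
#   swapoff -a && count_active_swap    # expect 0 once swap is off
```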

5. Configure and load the IPVS modules. In kube-proxy, IPVS mode generally scales better than iptables mode when there are many Services. Run on every host in the cluster.

dnf -y install ipvsadm ipset sysstat conntrack libseccomp
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack
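The script above loads the modules for the current boot. Whether /etc/sysconfig/modules is executed again after a reboot depends on the distribution, so as a hedged alternative the sketch below writes a module list in the format read by systemd-modules-load from /etc/modules-load.d/*.conf. The helper name and the file name k8s.conf are assumptions, not part of the original procedure.

```shell
# write_modules_conf FILE MODULE...
# Writes one module name per line, the format systemd-modules-load expects.
write_modules_conf() {
    file=$1
    shift
    printf '%s\n' "$@" > "$file"
}

# Intended use (as root). br_netfilter is included because it is loaded
# by hand in a later step and is needed again after every reboot:
#   write_modules_conf /etc/modules-load.d/k8s.conf \
#       ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack br_netfilter
#   systemctl restart systemd-modules-load
```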

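6. Configure time synchronization. Run on every host in the cluster.

dnf install -y ntpdate
crontab -e

Add the following entry in the editor that crontab -e opens, so the clock is synchronized once an hour:

0 */1 * * * ntpdate time1.aliyun.com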
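When preparing five hosts, editing the crontab interactively on each one gets tedious. A possible non-interactive variant is sketched below: the hypothetical helper merge_cron_line reads the current crontab on stdin and prints it with the entry appended only if it is not already there, so the result can be piped back into crontab.

```shell
# merge_cron_line LINE
# Reads an existing crontab on stdin and prints it unchanged, followed by
# LINE unless an identical line is already present.
merge_cron_line() {
    WANT=$1 awk '
        { print }
        $0 == ENVIRON["WANT"] { seen = 1 }
        END { if (!seen) print ENVIRON["WANT"] }'
}

# Intended use (as root):
#   crontab -l 2>/dev/null \
#     | merge_cron_line '0 */1 * * * ntpdate time1.aliyun.com' \
#     | crontab -
```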

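7. Enable IP forwarding and bridge filtering. Run on every host in the cluster.

Load the br_netfilter kernel module and let iptables process bridged IPv4 and IPv6 traffic, so that containers in the cluster can communicate with each other.

cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_nonlocal_bind = 1
net.ipv4.ip_forward = 1
vm.swappiness=0
EOF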

Load the module:

modprobe br_netfilter

Apply the settings:

sysctl -p /etc/sysctl.d/k8s.conf
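If br_netfilter is not loaded, the net.bridge keys do not exist and sysctl -p reports errors that are easy to overlook. As a rough check, the sketch below compares every key in a sysctl file with the live value under /proc/sys; the helper name check_sysctl_conf is hypothetical, and it assumes simple "key = value" lines like the ones above.

```shell
# check_sysctl_conf CONF [PROC_SYS_ROOT]
# For each "key = value" line in CONF, compares the wanted value with the
# live one under PROC_SYS_ROOT (default /proc/sys). Prints one line per
# mismatch and returns non-zero if there is any.
check_sysctl_conf() {
    conf=$1
    root=${2:-/proc/sys}
    rc=0
    while IFS='=' read -r key value; do
        key=$(printf '%s' "$key" | tr -d '[:space:]')
        value=$(printf '%s' "$value" | tr -d '[:space:]')
        case $key in ''|'#'*) continue ;; esac
        # net.ipv4.ip_forward -> net/ipv4/ip_forward
        file_path=$root/$(printf '%s' "$key" | tr . /)
        live=$(cat "$file_path" 2>/dev/null | tr -d '[:space:]')
        if [ "$live" != "$value" ]; then
            printf 'MISMATCH %s: want %s, have %s\n' "$key" "$value" "${live:-<missing>}"
            rc=1
        fi
    done < "$conf"
    return $rc
}

# Intended use:
#   check_sysctl_conf /etc/sysctl.d/k8s.conf && echo "all settings applied"
```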

8. Create dedicated file systems for the applications. A full root file system makes later maintenance difficult, so it is advisable to give kubelet, docker, and etcd their own file systems:

# If rebuilding, remove the old VG first: vgremove datavg -y

pvcreate /dev/sdb
vgcreate datavg /dev/sdb
lvcreate -L 13G -n dockerlv datavg -y
lvcreate -L 13G -n kubeletlv datavg -y
lvcreate -L 10G -n etcdlv datavg -y
mkfs.ext4 /dev/datavg/dockerlv
mkfs.ext4 /dev/datavg/kubeletlv
mkfs.xfs /dev/datavg/etcdlv
mkdir /var/lib/docker
mkdir /var/lib/kubelet
mkdir /var/lib/etcd

Configure the file systems to be mounted automatically at boot:

lsblk -f

Edit /etc/fstab (vi /etc/fstab) and add entries in the following format:

/dev/datavg/dockerlv /var/lib/docker ext4 defaults,nofail 1 1
/dev/datavg/kubeletlv /var/lib/kubelet ext4 defaults,nofail 1 1
/dev/datavg/etcdlv /var/lib/etcd xfs defaults,nofail 1 1

Then mount everything:

mount -a

III. Install docker and cri-dockerd

Run on every host.

1. Configure the yum repository:

cd /etc/yum.repos.d/

curl -O https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's/$releasever/8/g' docker-ce.repo

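2. Create a docker user and group. You can try skipping this step, but in my case cri-dockerd refused to start no matter what until the docker user existed (probably because its socket unit expects a docker group), so I create it: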

groupadd docker

useradd -g docker docker

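3. Install cri-dockerd:

The cri-dockerd adapter is maintained in this project: https://github.com/Mirantis/cri-dockerd/

Download the cri-dockerd rpm package first; installing it with dnf pulls in the dependencies automatically. Both older and newer cri-dockerd versions work; I use v0.3.14-3 here.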

dnf install cri-dockerd-0.3.14-3.el8.x86_64.rpm -y

4. Install docker:

dnf install docker -y

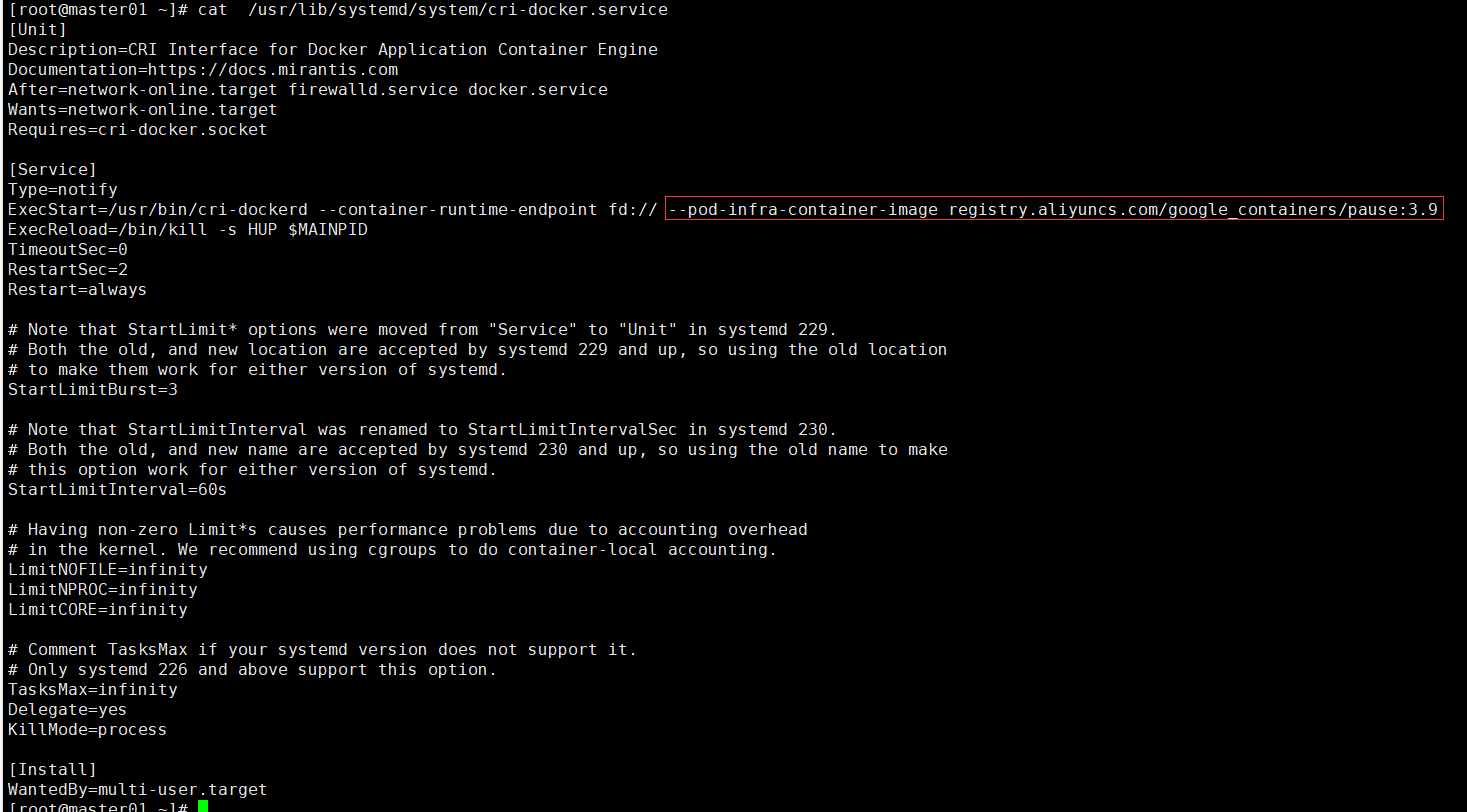

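5. Configure cri-dockerd

From mainland China, the cri-dockerd service cannot pull the images it needs from k8s.gcr.io and therefore fails to start, so cri-dockerd has to be pointed at a domestic mirror: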

sed -ri 's@^(.*fd://).*$@\1 --pod-infra-container-image registry.aliyuncs.com/google_containers/pause:3.9@' /usr/lib/systemd/system/cri-docker.service

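After the change, the ExecStart line in cri-docker.service should end with the --pod-infra-container-image option.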
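To see what the sed expression does without touching the unit file, it can be applied to a sample line. The sketch below assumes the stock ExecStart line shown in the variable sample; the line in your unit file may differ, so check it with grep ExecStart /usr/lib/systemd/system/cri-docker.service.

```shell
# add_pause_image: reads unit-file text on stdin and applies the same sed
# expression as above, printing the result instead of editing in place.
add_pause_image() {
    sed -r 's@^(.*fd://).*$@\1 --pod-infra-container-image registry.aliyuncs.com/google_containers/pause:3.9@'
}

# Assumed stock line; verify against your own unit file.
sample='ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd://'
printf '%s\n' "$sample" | add_pause_image
```

Everything after fd:// is replaced, so running the command a second time leaves the line unchanged rather than appending the option twice.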

6. Start docker and cri-dockerd and enable them at boot

systemctl daemon-reload && systemctl start docker && systemctl enable docker

systemctl start cri-docker && systemctl enable cri-docker

7. Configure registry mirrors:

Docker Hub is currently blocked, so the mirror list below is of little use. The exec-opts setting is probably still needed.

cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": [
    "https://docker.mirrors.ustc.edu.cn",
    "https://hub-mirror.c.163.com",
    "https://reg-mirror.qiniu.com",
    "https://registry.docker-cn.com"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "200m",
    "max-file": "5"
  }
}
EOF
systemctl daemon-reload && systemctl restart docker

IV. Install Kubernetes

1. Configure the yum repository. Run on every host.

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.29/rpm/
enabled=1
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.29/rpm/repodata/repomd.xml.key
EOF
dnf makecache

2. Install kubelet, kubeadm, and kubectl, then enable kubelet at boot with systemctl enable kubelet. Run on every host.

dnf install -y kubelet kubeadm kubectl

Do not start kubelet yet: the cluster has not been configured, so for now only enable it at boot.

systemctl enable kubelet

3. Generate the default init configuration file. Run on one of the masters.

kubeadm config print init-defaults > kubeadm.yaml

4. Edit the kubeadm.yaml configuration file

[root@master01 ~]# cat kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  # Change to the IP of this master
  advertiseAddress: 192.168.0.111
  bindPort: 6443
nodeRegistration:
  # Change to the cri-dockerd socket
  criSocket: unix:///run/cri-dockerd.sock
  imagePullPolicy: IfNotPresent
  # Change to the hostname of this master
  name: master01
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    # etcd data directory; the kubeadm default is /var/lib/etcd
    dataDir: /var/lib/etcd
# Image repository to pull from; changed to the Alibaba Cloud mirror
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
# Change to the exact version being installed
kubernetesVersion: 1.29.5
# Required for a multi-master cluster: points at the proxy (load balancer) address; here it is simply set to a master node
controlPlaneEndpoint: "master01:6443"
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  # Add the pod IP range
  podSubnet: 10.244.0.0/16
scheduler: {}
---
# Append the following two documents at the end
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

Optionally, kubeadm config images list shows which images the Kubernetes installation needs:

kubeadm config images list

5. Pull the images needed to install Kubernetes. Run on one of the masters.

kubeadm config images pull --config=kubeadm.yaml

6. Initialize the cluster. Run on one of the masters.

kubeadm init --config=kubeadm.yaml

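Output similar to the following means the initialization succeeded. Note that the [upload-certs] lines with a certificate key normally appear only when kubeadm init is run with --upload-certs; if your output says that phase was skipped, run kubeadm init phase upload-certs --upload-certs before joining the other masters.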

[root@master01 ~]# kubeadm init --config=kubeadm.yaml
[init] Using Kubernetes version: v1.29.5
[preflight] Running pre-flight checks
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master01] and IPs [10.96.0.1 192.168.0.111]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [localhost master01] and IPs [192.168.0.111 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [localhost master01] and IPs [192.168.0.111 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "super-admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
[control-plane] Creating static Pod manifest for "kube-scheduler"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 23.502197 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
[upload-certs] Using certificate key: 08c16995b0ac15a581c720018a1a25096b776a2592729a27265339a81db4252b
[mark-control-plane] Marking the node master01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]
[mark-control-plane] Marking the node master01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]
[bootstrap-token] Using token: abcdef.0123456789abcdef
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join master01:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:aa930347f65820ba0f77a66b3f31d16906c04fe355cf369397456ca7f02b3ff0 \
    --control-plane --certificate-key 08c16995b0ac15a581c720018a1a25096b776a2592729a27265339a81db4252b

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join master01:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:aa930347f65820ba0f77a66b3f31d16906c04fe355cf369397456ca7f02b3ff0

7. To let other users, or other machines, run kubectl, do the following:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

8. Run on master02 and master03 to join them as control-plane nodes. Be sure to add --cri-socket unix:///run/cri-dockerd.sock.

kubeadm join master01:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:aa930347f65820ba0f77a66b3f31d16906c04fe355cf369397456ca7f02b3ff0 \
  --control-plane --certificate-key 08c16995b0ac15a581c720018a1a25096b776a2592729a27265339a81db4252b \
  --cri-socket unix:///run/cri-dockerd.sock

9. Run on worker01 and worker02 to join them as worker nodes. Be sure to add --cri-socket unix:///run/cri-dockerd.sock.

kubeadm join master01:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:aa930347f65820ba0f77a66b3f31d16906c04fe355cf369397456ca7f02b3ff0 \
  --cri-socket unix:///run/cri-dockerd.sock

10. If the installation fails, reset the node and initialize again:

kubeadm reset --cri-socket=unix:///var/run/cri-dockerd.sock

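11. If you later run short of worker nodes and want to add new ones, first prepare the new node by following the steps above, then run the following command on a master to print the join command: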

kubeadm token create --print-join-command
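As far as I can tell, the printed join command does not carry the --cri-socket option that steps 8 and 9 insist on, so it has to be added by hand. A small sketch of a filter that appends it when missing is shown below; the helper name with_cri_socket is hypothetical.

```shell
# with_cri_socket [SOCKET]
# Reads a join command on stdin, trims trailing whitespace, drops empty
# lines, and appends --cri-socket SOCKET unless the option is already there.
with_cri_socket() {
    sock=${1:-unix:///run/cri-dockerd.sock}
    sed -e 's/[[:space:]]*$//' \
        -e '/^$/d' \
        -e '/--cri-socket/!s|$| --cri-socket '"$sock"'|'
}

# Intended use (on a master):
#   kubeadm token create --print-join-command | with_cri_socket
# Review the printed command, then run it on the new worker node.
```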

V. Install the network plugin

1. Download the network plugin manifest

wget https://docs.projectcalico.org/manifests/calico.yaml

2. Edit the manifest

Find the containers section shown below and modify it:

      containers:
        # Runs calico-node container on each Kubernetes node. This
        # container programs network policy and routes on each
        # host.
        - name: calico-node
          image: docker.io/calico/node:v3.25.0
          imagePullPolicy: IfNotPresent
          envFrom:
          - configMapRef:
              # Allow KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT to be overridden for eBPF mode.
              name: kubernetes-services-endpoint
              optional: true
          env:
            # Use Kubernetes API as the backing datastore.
            - name: DATASTORE_TYPE
              value: "kubernetes"
            # Wait for the datastore.
            - name: WAIT_FOR_DATASTORE
              value: "true"
            # Set based on the k8s node name.
            - name: NODENAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # Choose the backend to use.
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            # Cluster type to identify the deployment type
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            # Auto-detect the BGP IP address.
            - name: IP
              value: "autodetect"
            # Enable IPIP
            - name: CALICO_IPV4POOL_IPIP
              value: "Always"
            # Specify the network interface here by adding the next two lines
            - name: IP_AUTODETECTION_METHOD
              value: "interface=ens33"
            # Enable or Disable VXLAN on the default IP pool.
            - name: CALICO_IPV4POOL_VXLAN
              value: "Never"
            # Enable or Disable VXLAN on the default IPv6 IP pool.
            - name: CALICO_IPV6POOL_VXLAN
              value: "Never"
            # Set MTU for tunnel device used if ipip is enabled
            - name: FELIX_IPINIPMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # Set MTU for the VXLAN tunnel device.
            - name: FELIX_VXLANMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # Set MTU for the Wireguard tunnel device.
            - name: FELIX_WIREGUARDMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # The default IPv4 pool to create on startup if none exists. Pod IPs will be
            # chosen from this range. Changing this value after installation will have
            # no effect. This should fall within `--cluster-cidr`.
            # The next two lines are commented out in the downloaded file: remove the
            # leading '#' and change 192.168.0.0/16 to 10.244.0.0/16 so that the value
            # matches podSubnet in kubeadm.yaml
            - name: CALICO_IPV4POOL_CIDR
              value: "10.244.0.0/16"
            # Disable file logging so `kubectl logs` works.
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            # Set Felix endpoint to host default action to ACCEPT.
            - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
              value: "ACCEPT"
            # Disable IPv6 on Kubernetes.
            - name: FELIX_IPV6SUPPORT
              value: "false"
            - name: FELIX_HEALTHENABLED
              value: "true"
          securityContext:
            privileged: true
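The CALICO_IPV4POOL_CIDR change can also be scripted instead of done in an editor. The sketch below assumes the downloaded manifest contains the two commented-out lines exactly as "# - name: CALICO_IPV4POOL_CIDR" followed by "#   value: "192.168.0.0/16"" (check with grep -n -A1 CALICO_IPV4POOL_CIDR calico.yaml first). The helper name set_calico_pool_cidr is hypothetical, and the IP_AUTODETECTION_METHOD lines still have to be added by hand.

```shell
# set_calico_pool_cidr CIDR
# Reads calico.yaml on stdin and prints it with the CALICO_IPV4POOL_CIDR
# entry uncommented and its value set to CIDR. The original indentation is
# kept, so "value" stays aligned under "name".
set_calico_pool_cidr() {
    cidr=$1
    sed -E \
        -e 's@^([[:space:]]*)# (- name: CALICO_IPV4POOL_CIDR)[[:space:]]*$@\1\2@' \
        -e 's@^([[:space:]]*)#   value: "192\.168\.0\.0/16"[[:space:]]*$@\1  value: "'"$cidr"'"@'
}

# Intended use:
#   set_calico_pool_cidr 10.244.0.0/16 < calico.yaml > calico.patched.yaml
#   diff calico.yaml calico.patched.yaml    # review before applying
```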

3. Install the network plugin

kubectl apply -f calico.yaml

The status of each service's pods can be watched with:

watch kubectl get pods --all-namespaces -o wide

4. Check the node status

kubectl get node

[root@master01 ~]# kubectl get nodes -owide
NAME       STATUS   ROLES           AGE     VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                    KERNEL-VERSION                       CONTAINER-RUNTIME
master01   Ready    control-plane   3d19h   v1.29.5   192.168.0.111   <none>        openEuler 22.03 (LTS-SP3)   5.10.0-182.0.0.95.oe2203sp3.x86_64   docker://24.0.6
master02   Ready    control-plane   3d19h   v1.29.5   192.168.0.112   <none>        openEuler 22.03 (LTS-SP3)   5.10.0-182.0.0.95.oe2203sp3.x86_64   docker://24.0.6
master03   Ready    control-plane   3d19h   v1.29.5   192.168.0.113   <none>        openEuler 22.03 (LTS-SP3)   5.10.0-182.0.0.95.oe2203sp3.x86_64   docker://24.0.6
worker01   Ready    <none>          3d19h   v1.29.5   192.168.0.114   <none>        openEuler 22.03 (LTS-SP3)   5.10.0-182.0.0.95.oe2203sp3.x86_64   docker://24.0.6
worker02   Ready    <none>          3d19h   v1.29.5   192.168.0.115   <none>        openEuler 22.03 (LTS-SP3)   5.10.0-182.0.0.95.oe2203sp3.x86_64   docker://24.0.6
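Nodes usually stay NotReady until the calico pods are running, so it can take a few minutes before the output looks like the above. As a quick check, the sketch below filters the node list down to the nodes that are not yet Ready; the helper name not_ready_nodes is hypothetical.

```shell
# not_ready_nodes
# Reads `kubectl get nodes --no-headers` output on stdin and prints the
# names of nodes whose STATUS does not start with "Ready". A cordoned node
# ("Ready,SchedulingDisabled") still counts as Ready.
not_ready_nodes() {
    awk 'NF >= 2 { split($2, s, ","); if (s[1] != "Ready") print $1 }'
}

# Intended use:
#   kubectl get nodes --no-headers | not_ready_nodes
# No output means every node reports Ready.
```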

If the images are hard to pull, load them into docker beforehand. The images needed to deploy k8s v1.29.5 are provided here:

Link: https://pan.baidu.com/s/11cQI-dn7xk-hiqDu57y5MA?pwd=dv03

Extraction code: dv03
