Deploying Kubernetes with Binaries & Tools

Kubernetes Architecture

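Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available.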

1. Introduction

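Kubernetes (K8s for short) is a leading distributed solution built on container technology. It is Google's open-source container cluster management system, inspired by Borg, a container management system used internally at Google, and it inherits more than ten years of Google's experience running container clusters. It provides containerized applications with a complete set of capabilities, including deployment, resource scheduling, service discovery, and dynamic scaling, which greatly simplifies managing large container clusters.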

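Kubernetes is a complete platform for running distributed systems. It offers comprehensive cluster management: multi-layered security and admission control, multi-tenant application support, transparent service registration and discovery, a built-in intelligent load balancer, strong failure detection and self-healing, rolling upgrades and online scaling, an extensible automatic resource scheduler, and resource quota management at multiple granularities.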

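For cluster management, Kubernetes divides the machines in a cluster into a Master node and a group of worker nodes (Nodes). The Master runs a set of cluster-management processes, kube-apiserver, kube-controller-manager, and kube-scheduler, which together provide resource management, Pod scheduling, elastic scaling, security control, system monitoring, and error correction for the whole cluster, all fully automatically. Nodes are the worker machines that run the actual applications; the smallest unit Kubernetes manages on a Node is the Pod. Each Node runs the kubelet and kube-proxy processes, which create, start, monitor, restart, and destroy Pods and implement a software load balancer.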

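Kubernetes solves two major problems of traditional IT systems: scaling services out and upgrading them. If an application is not particularly complex and does not have to handle much peak traffic, deploying the backend only requires installing a few dependencies on a virtual machine, building the project, and running it. As software grows more complex, however, a complete backend is no longer a single monolithic service but a set of services with different responsibilities. The complex topology between those services, together with performance requirements a single machine can no longer meet, makes deployment and operations very complicated, so deploying and operating large clusters became an urgent need.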

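Kubernetes has not only come to dominate the container orchestration market but has also changed how operations are done. It blurs the boundary between development and operations and makes the DevOps role clearer: every software engineer can use Kubernetes to define the topology between services, the number of instances online, and resource usage, and can quickly perform horizontal scaling, blue-green deployments, and other operations that used to be complex.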

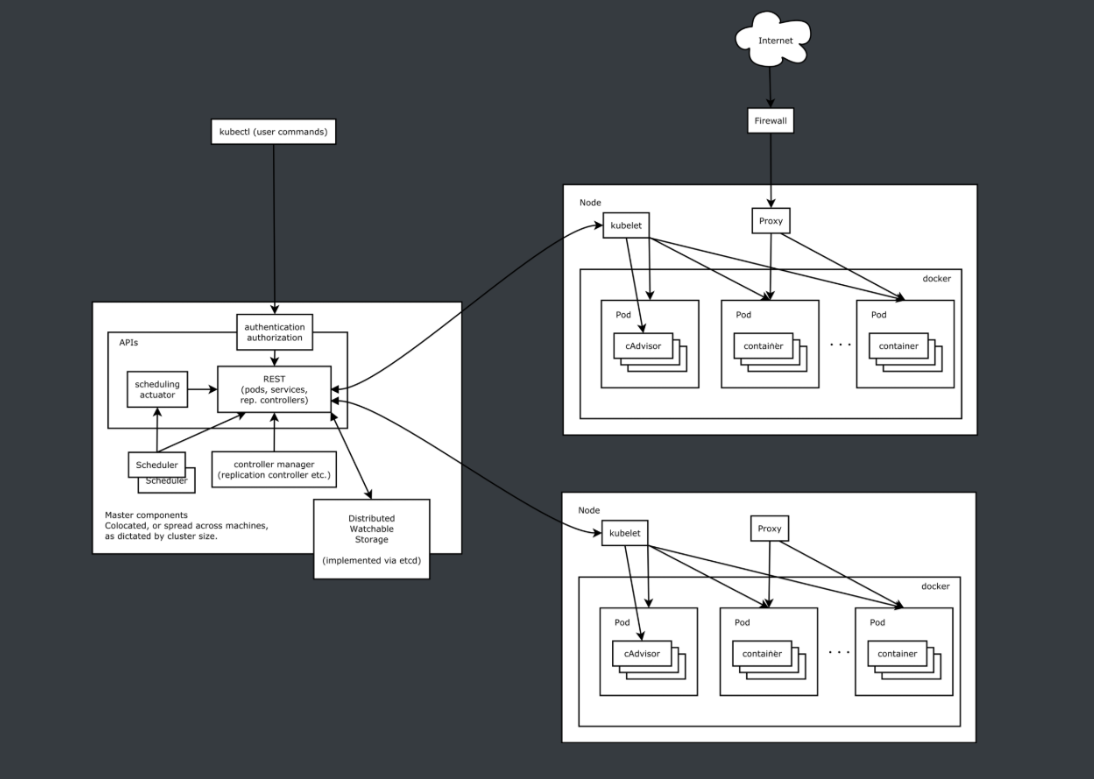

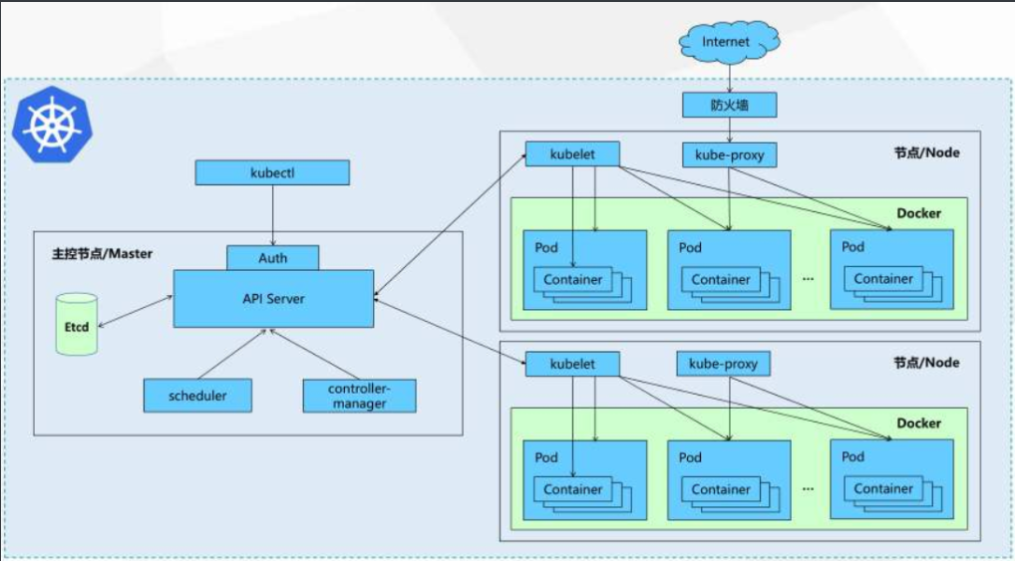

2. Architecture

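Kubernetes follows a very traditional client-server architecture. Clients talk to a Kubernetes cluster either through the RESTful API directly or with kubectl; in practice there is little difference between the two, because kubectl simply wraps the RESTful API that Kubernetes exposes. Every Kubernetes cluster consists of a set of Master nodes and a number of Worker nodes; the Master nodes are mainly responsible for storing the cluster state and for allocating and scheduling resources for Kubernetes objects.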

Master

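The Master receives client requests, schedules container execution, and runs control loops that move the cluster's state toward the desired state. It consists of three components: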

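- API Server: handles requests from users. Its main job is to expose the RESTful API, which serves both read requests that query the cluster state and write requests that change it, and it is the only component that talks to the etcd cluster.

- Controller Manager: runs a set of controller processes that continuously reconcile the objects in the cluster in the background according to the user's desired state. When the state of a service changes, the controllers detect the change and start moving it toward the target state.

- Scheduler: chooses the Worker node on which each Pod runs. It selects the node that best satisfies the Pod's requirements, and it runs every time a Pod needs to be scheduled.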

Node

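Worker nodes are simpler; they consist mainly of two components:

- kubelet: the primary service on each node. It periodically receives new or modified Pod specs from the API Server, makes sure the Pods and containers on the node run properly, drives the node toward the desired state, and reports the host's health to the Master.

- kube-proxy: manages the host's subnet and can also expose Services externally. It works by forwarding requests to the correct Pods or containers across multiple isolated networks.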

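Kubernetes architecture diagram

In this architecture diagram, the services are divided into those running on the worker nodes and those that make up the cluster-level control plane.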

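Kubernetes consists mainly of the following core components:

- etcd stores the state of the whole cluster

- apiserver is the single entry point for operations on resources and provides authentication, authorization, access control, API registration, and discovery

- controller manager maintains the cluster state, handling tasks such as failure detection, auto-scaling, and rolling updates

- scheduler schedules resources, placing Pods on suitable machines according to the configured scheduling policy

- kubelet maintains the container lifecycle and also manages volumes (CSI) and networking (CNI)

- Container runtime manages images and actually runs Pods and containers (CRI)

- kube-proxy provides in-cluster service discovery and load balancing for Services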

除了核心组件,还有一些推荐的组件:

- kube-dns负责为整个集群提供DNS服务

- Ingress Controller为服务提供外网入口

- Heapster提供资源监控

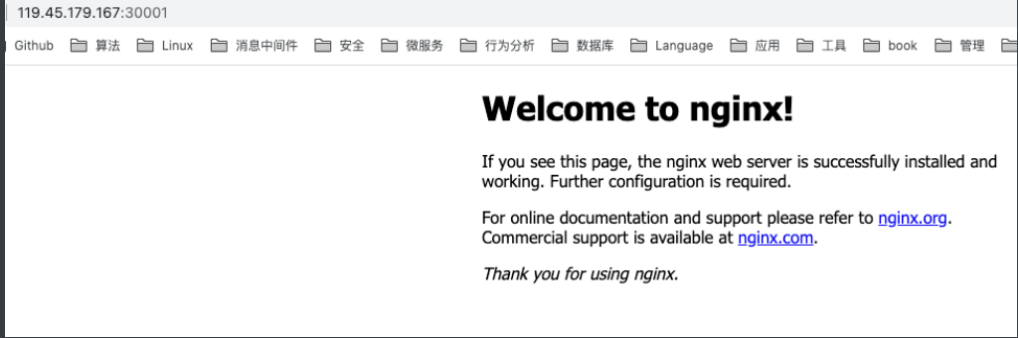

- Dashboard提供GUIFederation提供跨可用区的集群

- Fluentd-elasticsearch提供集群日志采集、存储与查询

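3. Installation and Deployment

There are two ways to deploy Kubernetes: from binaries, which is customizable but complex and error-prone, or with the kubeadm tool, which is simple to deploy but offers little customization.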

Environment initialization

Before starting, every machine used by the cluster needs to be initialized.

System environment

| Software | Version |
|---|---|
| CentOS | CentOS Linux release 7.7.1908 (Core) |
| Docker | 19.03.12 |
| kubernetes | v1.18.8 |
| etcd | 3.3.24 |
| flannel | v0.11.0 |
| cfssl | |
| kernel-lt | 4.18+ |
| kernel-lt-devel | 4.18+ |

Software plan

| Host | Installed software |
|---|---|
| kubernetes-master-01 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, docker |
| kubernetes-master-02 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, docker |
| kubernetes-master-03 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, docker |
| kubernetes-node-01 | kubelet, kube-proxy, etcd, docker |
| kubernetes-node-02 | kubelet, kube-proxy, etcd, docker |
| kubernetes-master-vip | kubectl, haproxy, keepalived |

Cluster plan

| Hostname | IP |
|---|---|
| kubernetes-master-01 | 172.16.0.50 |
| kubernetes-master-02 | 172.16.0.51 |
| kubernetes-master-03 | 172.16.0.52 |
| kubernetes-node-01 | 172.16.0.53 |
| kubernetes-node-02 | 172.16.0.54 |
| kubernetes-master-vip | 172.16.0.55 |

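Disable SELinux

setenforce 0

# setenforce only lasts until the next reboot; persist the setting as well

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config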

Disable the firewall

systemctl disable --now firewalld

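Disable swap

# Turn swap off now

swapoff -a

# Comment out the swap entry in /etc/fstab (matches the default CentOS LVM name centos-swap)

sed -i.bak 's/^.*centos-swap/#&/g' /etc/fstab

# Tell kubelet not to fail if swap is still enabled

echo 'KUBELET_EXTRA_ARGS="--fail-swap-on=false"' > /etc/sysconfig/kubelet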
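The `sed` expression above only comments out fstab lines containing `centos-swap`, the default swap volume name of a CentOS 7 LVM install. As a hedged sketch (the helper names `fstab_has_active_swap` and `swap_in_use` are hypothetical, and the fstab check assumes the standard layout in which the third field is the filesystem type), the following verifies that swap is really off now and will stay off after a reboot:

```bash
#!/usr/bin/env bash
# fstab_has_active_swap [FILE]: succeeds (exit 0) if FILE (default /etc/fstab)
# still contains an uncommented entry whose filesystem type (3rd field) is swap.
fstab_has_active_swap() {
  local fstab="${1:-/etc/fstab}"
  awk '!/^[[:space:]]*#/ && $3 == "swap" { found = 1 } END { exit !found }' "$fstab"
}

# swap_in_use: succeeds if the kernel currently has any swap device enabled.
# /proc/swaps always starts with a header line, so more than one line means swap is on.
swap_in_use() {
  [ "$(wc -l < /proc/swaps)" -gt 1 ]
}
```

For example, `fstab_has_active_swap || swap_in_use || echo "swap is disabled"` prints the message only when neither check finds swap.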

Set the hostnames

# On Master 01

$ hostnamectl set-hostname kubernetes-master-01

# On Master 02

$ hostnamectl set-hostname kubernetes-master-02

# On Master 03

$ hostnamectl set-hostname kubernetes-master-03

# On Node 01

$ hostnamectl set-hostname kubernetes-node-01

# On Node 02

$ hostnamectl set-hostname kubernetes-node-02

# On the load balancer node

$ hostnamectl set-hostname kubernetes-master-vip

Configure hosts resolution

cat >> /etc/hosts <<EOF

172.16.0.50 kubernetes-master-01

172.16.0.51 kubernetes-master-02

172.16.0.52 kubernetes-master-03

172.16.0.53 kubernetes-node-01

172.16.0.54 kubernetes-node-02

172.16.0.55 kubernetes-master-vip

EOF
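Because `cat >> /etc/hosts` appends unconditionally, running it twice duplicates every entry. A minimal idempotent alternative, sketched with hypothetical helper names and assuming the standard hosts layout (IP first, then one or more names), adds an entry only when the name is not already mapped. The host list mirrors the cluster plan table:

```bash
#!/usr/bin/env bash
# add_host_entry FILE IP NAME: append "IP NAME" to FILE unless NAME is already
# mapped on some non-comment line (hostnames are the 2nd and later fields).
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 only if NAME already appears as a hostname in FILE.
  if ! awk -v n="$name" '
      !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# add_cluster_hosts [FILE]: add every host from the cluster plan to FILE.
add_cluster_hosts() {
  local file="${1:-/etc/hosts}" ip name
  while read -r ip name; do
    add_host_entry "$file" "$ip" "$name"
  done <<'PLAN'
172.16.0.50 kubernetes-master-01
172.16.0.51 kubernetes-master-02
172.16.0.52 kubernetes-master-03
172.16.0.53 kubernetes-node-01
172.16.0.54 kubernetes-node-02
172.16.0.55 kubernetes-master-vip
PLAN
}
```

Running `add_cluster_hosts /etc/hosts` on each machine adds any missing entries; repeated runs leave the file unchanged.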


Passwordless SSH between cluster nodes

ssh-keygen -t rsa

for i in kubernetes-master-01 kubernetes-master-02 kubernetes-master-03 kubernetes-node-01 kubernetes-node-02 kubernetes-master-vip ; do ssh-copy-id -i ~/.ssh/id_rsa.pub root@$i ; done

Upgrade the kernel

# Assumes the kernel-lt RPM packages have already been downloaded to the current directory

yum localinstall -y kernel-lt*

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --default-kernel

# Reboot

reboot
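After the reboot, it is worth confirming that the machine actually booted into the new kernel. A small sketch, assuming GNU `sort -V` for version ordering and using the 4.18 minimum from the system environment table; `version_ge` and `kernel_at_least` are hypothetical names:

```bash
#!/usr/bin/env bash
# version_ge A B: succeeds if version string A >= B, using GNU sort's
# version ordering (-V), e.g. 4.19.12 >= 4.18 but 3.10.0 < 4.18.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# kernel_at_least [MIN]: compare the running kernel's version (the part of
# `uname -r` before the first "-") with MIN (default 4.18).
kernel_at_least() {
  local running
  running="$(uname -r | cut -d- -f1)"
  version_ge "$running" "${1:-4.18}"
}
```

For example, `kernel_at_least || echo "still running $(uname -r)"` flags a machine that booted the old kernel.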

Install the IPVS modules

# Install the IPVS tools

yum install -y conntrack-tools ipvsadm ipset conntrack libseccomp

# Load the IPVS modules

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"

for kernel_module in \${ipvs_modules}; do

/sbin/modinfo -F filename \${kernel_module} > /dev/null 2>&1

if [ \$? -eq 0 ]; then

/sbin/modprobe \${kernel_module}

fi

done

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs
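`lsmod | grep ip_vs` matches substrings, so its output can look fine even when a particular module is missing. A hedged sketch that checks exact module names against `/proc/modules` (whose first field is the module name); `missing_modules` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# missing_modules MODULES_FILE MODULE...: print each MODULE that has no entry in
# MODULES_FILE. The file uses the /proc/modules layout: module name in field 1.
missing_modules() {
  local file="$1" m
  shift
  for m in "$@"; do
    # Exact match on the first field, so ip_vs does not match ip_vs_rr.
    awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$file" || echo "$m"
  done
}
```

For example, `missing_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack` prints nothing when all of those modules are loaded.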

Kernel tuning

cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.netfilter.nf_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

# Load br_netfilter (the net.bridge.* keys exist only once it is loaded), then apply immediately

modprobe br_netfilter

sysctl --system
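`sysctl --system` reports keys it cannot find but keeps going, so a misspelled key is easy to miss in its output. A sketch that lists the keys in a sysctl file that have no matching entry under `/proc/sys`, assuming the usual mapping of dots in a key to directory separators; the helper name is hypothetical. Keys under `net.bridge` only exist once `br_netfilter` is loaded, so they are reported until then:

```bash
#!/usr/bin/env bash
# unknown_sysctl_keys CONF [PROC_ROOT]: print every key in CONF that has no
# matching file under PROC_ROOT (default /proc/sys); dots in a key become "/".
# A misspelled key such as net.ipv4.tcp.keepaliv.probes is reported here.
unknown_sysctl_keys() {
  local conf="$1" root="${2:-/proc/sys}" key
  # Drop comments and lines without "=", then keep only the trimmed key.
  sed -e 's/#.*//' -e '/=/!d' -e 's/[[:space:]]*=.*//' -e 's/^[[:space:]]*//' "$conf" |
    while read -r key; do
      [ -e "$root/${key//.//}" ] || echo "$key"
    done
}
```

For example, `unknown_sysctl_keys /etc/sysctl.d/k8s.conf` should print nothing once every key is spelled correctly and `br_netfilter` is loaded.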

Configure yum repositories

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

# Rebuild the cache

yum makecache

# Update the system, excluding the kernel packages

yum update -y --exclude='kernel*'

Install base packages

yum install wget expect vim net-tools ntp bash-completion ipvsadm ipset jq iptables conntrack sysstat libseccomp -y

Disable dnsmasq, the firewall, and NetworkManager

[root@localhost ~]# systemctl disable --now dnsmasq

[root@localhost ~]# systemctl disable --now firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@localhost ~]# systemctl disable --now NetworkManager

Removed symlink /etc/systemd/system/multi-user.target.wants/NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.NetworkManager.service.

Removed symlink /etc/systemd/system/dbus-org.freedesktop.nm-dispatcher.service.

Removed symlink /etc/systemd/system/network-online.target.wants/NetworkManager-wait-online.service.

Installing with kubeadm

kubeadm is the official tool for automated Kubernetes deployment. It is simple to use but not convenient to customize.

Install Docker

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install docker-ce -y

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://8mh75mhz.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload; sudo systemctl restart docker; sudo systemctl enable --now docker.service

Install on the Master nodes

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

setenforce 0

yum install -y kubelet kubeadm kubectl

Install on the Node nodes

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

setenforce 0

yum install -y kubelet kubeadm kubectl

Enable at boot

systemctl enable --now docker.service kubelet.service

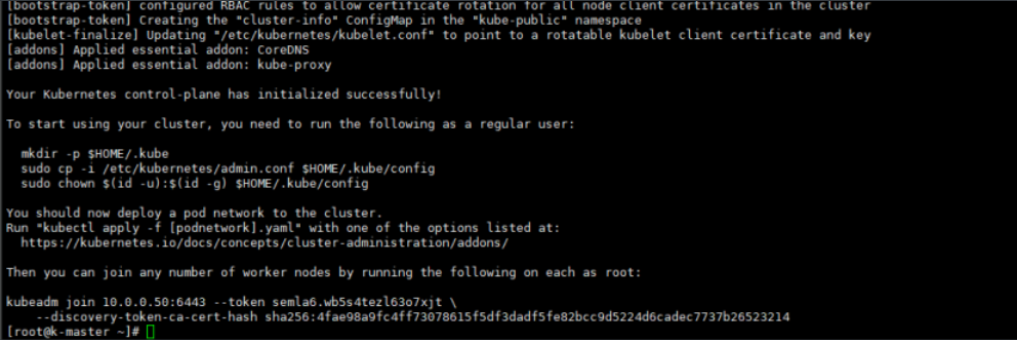

Initialize

kubeadm init \

--image-repository=registry.cn-hangzhou.aliyuncs.com/k8sos \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16
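`--pod-network-cidr=10.244.0.0/16` matches the default `Network` in flannel's `kube-flannel.yml`, and the Pod range, the Service range (`--service-cidr`), and the hosts' own network must not overlap. A pure-bash sketch for checking that, assuming IPv4 CIDRs and bash's 64-bit arithmetic; the function names are hypothetical:

```bash
#!/usr/bin/env bash
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# cidrs_overlap NET1/P1 NET2/P2: succeeds if the two IPv4 ranges overlap.
# Two CIDR blocks overlap exactly when they agree on the first min(P1, P2) bits.
cidrs_overlap() {
  local n1="${1%/*}" p1="${1#*/}" n2="${2%/*}" p2="${2#*/}"
  local p=$(( p1 < p2 ? p1 : p2 ))
  local mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$n1") & mask) == ($(ip_to_int "$n2") & mask) ))
}
```

With the ranges used here, `cidrs_overlap 10.96.0.0/12 10.244.0.0/16` fails (no overlap), which is the desired result; a success would mean the two ranges collide.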

- Follow the instructions printed by kubeadm init

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

- Enable kubectl command completion

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

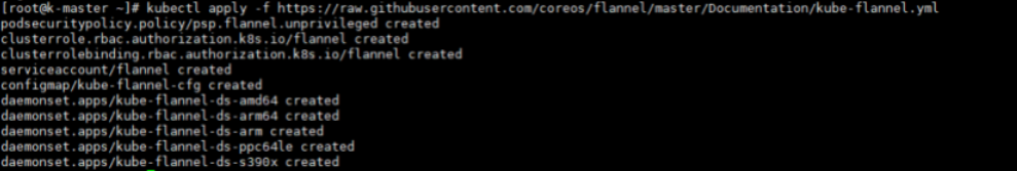

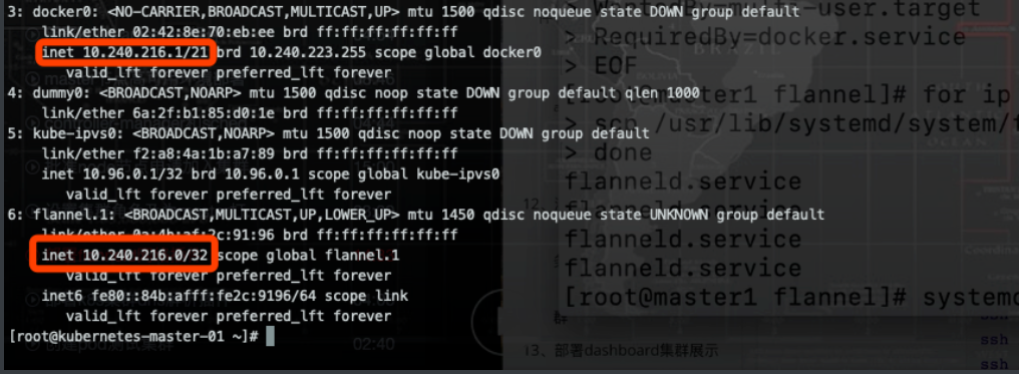

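Install the Flannel network plugin

Kubernetes relies on a third-party network plugin to implement cluster networking, so installing one is a prerequisite. Several are available; common choices are flannel, Calico, and Canal (flannel + Calico). They all provide the basic networking features, such as assigning an IP network to each Node.

- Pull the flannel image and apply the network plugin manifest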

docker pull registry.cn-hangzhou.aliyuncs.com/k8sos/flannel:v0.12.0-amd64 ; docker tag registry.cn-hangzhou.aliyuncs.com/k8sos/flannel:v0.12.0-amd64 quay.io/coreos/flannel:v0.12.0-amd64

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
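The manifest is fetched from the master branch, so the flannel tag it references can differ from the `v0.12.0-amd64` image pre-pulled and re-tagged above. A small sketch for listing the images a downloaded manifest actually references, assuming it is saved locally (the file name `kube-flannel.yml` and the helper name are illustrative):

```bash
#!/usr/bin/env bash
# manifest_images FILE: print the distinct container images referenced by
# "image:" fields in a Kubernetes YAML manifest (also "- image:" list items).
manifest_images() {
  sed -n 's/^[[:space:]]*-\{0,1\}[[:space:]]*image:[[:space:]]*//p' "$1" |
    tr -d "\"'" | sort -u
}
```

For example, after `curl -fsSL -o kube-flannel.yml https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml`, running `manifest_images kube-flannel.yml` shows which tags need to be pre-pulled or re-tagged.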

Join the cluster

A Node needs a token to join the cluster.

# Create a token and print the full join command

kubeadm token create --print-join-command

# Join a node to the cluster

kubeadm join 10.0.0.50:6443 --token 038qwm.hpoxkc1f2fkgti3r --discovery-token-ca-cert-hash sha256:edcd2c212be408f741e439abe304711ffb0adbb3bedbb1b93354bfdc3dd13b04
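The `sha256:` value in the join command is a hash of the cluster CA's public key, so it can be recomputed on a master if the original output is lost. The pipeline below is the one given in the kubeadm documentation; wrapping it in a function named `ca_cert_hash` (hypothetical) and defaulting to `/etc/kubernetes/pki/ca.crt` are assumptions for a kubeadm-initialized master, and the `openssl rsa` step assumes an RSA CA key, which is kubeadm's default:

```bash
#!/usr/bin/env bash
# ca_cert_hash [CA_CERT]: print the SHA-256 of the CA's DER-encoded public key,
# the value kubeadm expects after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //'   # keep only the hex digest after the last space
}
```

`echo "sha256:$(ca_cert_hash)"` prints a value in the form expected by `--discovery-token-ca-cert-hash`.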

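Join Master nodes to the cluster

As above, Master nodes need the Kubernetes components and Docker installed. Before additional Master nodes can join, high availability must be set up first.

Master node high availability

- Install the high-availability software

# Install the HA software on every Master node

yum install -y keepalived haproxy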

- Configure haproxy: edit the configuration file

cat > /etc/haproxy/haproxy.cfg <<EOF

global

  maxconn 2000

  ulimit-n 16384

  log 127.0.0.1 local0 err

  stats timeout 30s

defaults

  log global

  mode http

  option httplog

  timeout connect 5000

  timeout client 50000

  timeout server 50000

  timeout http-request 15s

  timeout http-keep-alive 15s

frontend monitor-in

  bind *:33305

  mode http

  option httplog

  monitor-uri /monitor

listen stats

  bind *:8006

  mode http

  stats enable

  stats hide-version

  stats uri /stats

  stats refresh 30s

  stats realm Haproxy\ Statistics

  stats auth admin:admin

frontend k8s-master

  bind 0.0.0.0:8443

  bind 127.0.0.1:8443

  mode tcp

  option tcplog

  tcp-request inspect-delay 5s

  default_backend k8s-master

backend k8s-master

  mode tcp

  option tcplog

  option tcp-check

  balance roundrobin

  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

  server kubernetes-master-01 172.16.0.50:6443 check inter 2000 fall 2 rise 2 weight 100

  server kubernetes-master-02 172.16.0.51:6443 check inter 2000 fall 2 rise 2 weight 100

  server kubernetes-master-03 172.16.0.52:6443 check inter 2000 fall 2 rise 2 weight 100

EOF

- Distribute the configuration to the other master nodes

for i in kubernetes-master-02 kubernetes-master-03; do

  ssh root@$i "mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg_bak"

  scp /etc/haproxy/haproxy.cfg root@$i:/etc/haproxy/haproxy.cfg

done

- Configure keepalived: edit the keepalived configuration

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_bak

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

    router_id LVS_DEVEL

}

vrrp_script chk_kubernetes {

    script "/etc/keepalived/check_kubernetes.sh"

    interval 2

    weight -5

    fall 3

    rise 2

}

vrrp_instance VI_1 {

    state MASTER

    # replace eth0 with this host's network interface

    interface eth0

    mcast_src_ip 172.16.0.50

    virtual_router_id 51

    priority 100

    advert_int 2

    authentication {

        auth_type PASS

        auth_pass K8SHA_KA_AUTH

    }

    virtual_ipaddress {

        172.16.0.55

    }

#    track_script {

#        chk_kubernetes

#    }

}

EOF

- Write the health-check script (keepalived only runs it once the track_script block in keepalived.conf is uncommented). The quoted 'EOF' keeps the shell from expanding $(...) and $variables while writing the file.

cat > /etc/keepalived/check_kubernetes.sh <<'EOF'

#!/bin/bash

function check_kubernetes() {

    for ((i=0;i<5;i++)); do

        apiserver_pid_id=$(pgrep kube-apiserver)

        if [[ ! -z $apiserver_pid_id ]]; then

            return

        else

            sleep 2

        fi

        apiserver_pid_id=0

    done

}

# 1:running 0:stopped

check_kubernetes

if [[ $apiserver_pid_id -eq 0 ]]; then

    /usr/bin/systemctl stop keepalived

    exit 1

else

    exit 0

fi

EOF

chmod +x /etc/keepalived/check_kubernetes.sh

- Distribute to the other master nodes

for i in kubernetes-master-02 kubernetes-master-03; do

  ssh root@$i "mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_bak"

  scp /etc/keepalived/keepalived.conf root@$i:/etc/keepalived/keepalived.conf

  scp /etc/keepalived/check_kubernetes.sh root@$i:/etc/keepalived/check_kubernetes.sh

done

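- Adjust the configuration on the backup nodes (the commands below are for kubernetes-master-02; on kubernetes-master-03, substitute 172.16.0.52 and a lower priority such as 98)

sed -i 's#state MASTER#state BACKUP#g' /etc/keepalived/keepalived.conf

sed -i 's#172.16.0.50#172.16.0.51#g' /etc/keepalived/keepalived.conf

sed -i 's#priority 100#priority 99#g' /etc/keepalived/keepalived.conf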

- Start the services

# Run on every master node

[root@kubernetes-master-01 ~]# systemctl enable --now keepalived.service haproxy.service

- Write the kubeadm configuration file for the Master nodes and save it as kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 172.16.0.55 # load-balancer VIP

  bindPort: 6443

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: kubernetes-master-01 # node name

  taints:

  - effect: NoSchedule

    key: node-role.kubernetes.io/master

---

apiServer:

  certSANs: # addresses included in the API server certificate

  - "172.16.0.55"

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.cn-hangzhou.aliyuncs.com/k8sos # registry for the cluster images

controlPlaneEndpoint: "172.16.0.55:8443" # VIP address and load-balancer port

kind: ClusterConfiguration

kubernetesVersion: v1.18.8

networking:

  dnsDomain: cluster.local

  serviceSubnet: 10.96.0.0/12 # Service CIDR

  podSubnet: 10.244.0.0/16 # Pod CIDR

scheduler: {}

- Pull the images

[root@kubernetes-master-02 ~]# kubeadm config images pull --config kubeadm-init.yaml

W0905 15:42:34.095990 27731 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/kube-apiserver:v1.18.8

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/kube-controller-manager:v1.18.8

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/kube-scheduler:v1.18.8

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/kube-proxy:v1.18.8

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/pause:3.2

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/etcd:3.4.3-0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/coredns:1.7.0

- Initialize the node

kubeadm init --config kubeadm-init.yaml --upload-certs

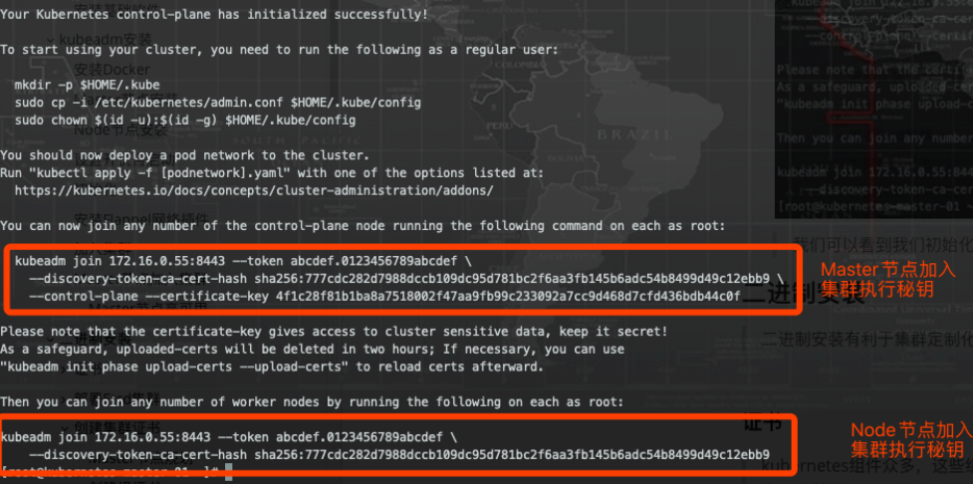

Note that the information printed after initialization refers to the load balancer's address rather than the current node's.
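With keepalived and haproxy running on the masters, one way to see which API server backends behind the VIP are reachable is to probe the `server` addresses listed in `haproxy.cfg`. A sketch assuming bash's `/dev/tcp` redirection and coreutils `timeout`; the function names are hypothetical:

```bash
#!/usr/bin/env bash
# haproxy_servers CFG: print the host:port of every "server" line in CFG.
# In "server NAME ADDR ...", the address is the third field.
haproxy_servers() {
  awk '$1 == "server" { print $3 }' "$1"
}

# port_open HOST PORT: succeeds if a TCP connection to HOST:PORT can be opened
# within 2 seconds, using bash's /dev/tcp pseudo-device.
port_open() {
  timeout 2 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# check_haproxy_backends CFG: report each backend address as up or down.
check_haproxy_backends() {
  local addr
  for addr in $(haproxy_servers "$1"); do
    if port_open "${addr%:*}" "${addr##*:}"; then
      echo "$addr up"
    else
      echo "$addr down"
    fi
  done
}
```

`check_haproxy_backends /etc/haproxy/haproxy.cfg` prints one line per master; until `kubeadm init` has started an API server, every backend reports down. Separately, `haproxy -c -f /etc/haproxy/haproxy.cfg` validates the configuration syntax.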

Highly available installation with kubeadm

This section installs a highly available Kubernetes cluster with kubeadm.

Deploy Docker

Add the Docker yum repository

Download the matching Docker repository file from the Aliyun mirror.

Install docker-ce

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install docker-ce -y

Check that the installation succeeded

[root@kubernetes-master-01 ~]# docker version

Client: Docker Engine - Community

Version: 19.03.12

API version: 1.40

Go version: go1.13.10

Git commit: 48a66213fe

Built: Mon Jun 22 15:46:54 2020

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 19.03.12

API version: 1.40 (minimum version 1.12)

Go version: go1.13.10

Git commit: 48a66213fe

Built: Mon Jun 22 15:45:28 2020

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.2.13

GitCommit: 7ad184331fa3e55e52b890ea95e65ba581ae3429

runc:

Version: 1.0.0-rc10

GitCommit: dc9208a3303feef5b3839f4323d9beb36df0a9dd

docker-init:

Version: 0.18.0

GitCommit: fec3683

Configure the Docker daemon (cgroup driver, logging, storage driver, and registry mirror)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

],

"registry-mirrors": ["https://8mh75mhz.mirror.aliyuncs.com"]

}

EOF
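Setting `native.cgroupdriver=systemd` matters because the kubelet and the container runtime must use the same cgroup driver, and kubeadm recommends `systemd`. A hedged sketch that reads the configured driver back out of `daemon.json`; it assumes the option sits on one line as in the file above, and `daemon_cgroup_driver` is a hypothetical name:

```bash
#!/usr/bin/env bash
# daemon_cgroup_driver [FILE]: print the cgroup driver set through
# "native.cgroupdriver=..." in Docker's daemon.json, or nothing if it is absent.
daemon_cgroup_driver() {
  sed -n 's/.*"native\.cgroupdriver=\([a-z]*\)".*/\1/p' "${1:-/etc/docker/daemon.json}"
}
```

After Docker has been restarted, `docker info --format '{{.CgroupDriver}}'` should print the same value as `daemon_cgroup_driver`.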

Reload and start

sudo systemctl daemon-reload; sudo systemctl restart docker; sudo systemctl enable --now docker.service

Deploy Kubernetes

To deploy Kubernetes, first add the package repository from the Aliyun mirror.

Add the repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

It is best to rebuild the yum cache afterwards

yum makecache

Install the kubeadm, kubelet, and kubectl components

- Check the available package versions

[root@kubernetes-master-01 ~]# yum list kubeadm --showduplicates | grep 1.18.8

Repository epel is listed more than once in the configuration

kubeadm.x86_64 1.18.8-0 @kubernetes

kubeadm.x86_64 1.18.8-0 kubernetes

- Install the matching version

yum install -y kubelet-1.18.8 kubeadm-1.18.8 kubectl-1.18.8

- Enable kubelet at boot

systemctl enable --now kubelet

Deploy the Master nodes

The Master node is the core of the cluster; it mainly runs the apiserver, controller manager, scheduler, kubelet, and kube-proxy components.

Generate the configuration file

kubeadm config print init-defaults > kubeadm-init.yaml

Edit the initialization configuration file

[root@kubernetes-master-01 ~]# cat kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 172.16.0.44 # changed to the cluster VIP

  bindPort: 6443

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: kubernetes-master-01

  taints:

  - effect: NoSchedule

    key: node-role.kubernetes.io/master

---

apiServer:

  certSANs:

  - "172.16.0.44" # VIP address

  - "127.0.0.1"

  - "172.16.0.50"

  - "172.16.0.51"

  - "172.16.0.52"

  - "172.16.0.55"

  - "10.96.0.1"

  - "kubernetes"

  - "kubernetes.default"

  - "kubernetes.default.svc"

  - "kubernetes.default.svc.cluster"

  - "kubernetes.default.svc.cluster.local"

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.cn-hangzhou.aliyuncs.com/k8sos # change to your image registry

controlPlaneEndpoint: "172.16.0.44:8443" # VIP address and load-balancer port

kind: ClusterConfiguration

kubernetesVersion: v1.18.8 # change to the version you want to install

networking:

  dnsDomain: cluster.local

  serviceSubnet: 10.96.0.0/12

  podSubnet: 10.244.0.0/16 # add the Pod CIDR

scheduler: {}

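1. The advertiseAddress field above is set not to this host's NIC address but to the VIP of the highly available cluster. kubeadm documents this field as the address the local API server advertises, and it also configures the local etcd member to listen on it, so this setup works only on a node that currently holds the VIP.

2. controlPlaneEndpoint is set to the VIP address, with the load balancer's port (8443).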
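After `kubeadm init`, a quick way to confirm that the VIP actually ended up in the API server certificate is to read the certificate's subject alternative names. A sketch assuming kubeadm's default certificate path (`/etc/kubernetes/pki/apiserver.crt`, under `certificatesDir`) and the text layout printed by `openssl x509 -text`; `cert_has_ip_san` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# cert_has_ip_san CERT IP: succeeds if CERT lists IP among its Subject
# Alternative Names. OpenSSL prints them as "IP Address:x.x.x.x"; -w stops
# 172.16.0.4 from matching 172.16.0.44, and -F treats the dots literally.
cert_has_ip_san() {
  openssl x509 -in "$1" -noout -text |
    grep -A1 'Subject Alternative Name' |
    grep -qwF "IP Address:$2"
}
```

For example, `cert_has_ip_san /etc/kubernetes/pki/apiserver.crt 172.16.0.44 || echo "VIP missing from certSANs"` flags a certificate that would be rejected by clients connecting through the VIP.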

Pull the images

[root@kubernetes-master-01 ~]# kubeadm config images pull --config kubeadm-init.yaml

W0910 00:24:00.828524 13149 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/kube-apiserver:v1.18.8

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/kube-controller-manager:v1.18.8

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/kube-scheduler:v1.18.8

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/kube-proxy:v1.18.8

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/pause:3.2

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/etcd:3.4.3-0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/k8sos/coredns:1.6.7

Initialize the Master node

[root@kubernetes-master-01 ~]# systemctl enable --now haproxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

[root@kubernetes-master-01 ~]#


[root@kubernetes-master-01 ~]# kubeadm reset

[reset] Reading configuration from the cluster...

[reset] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

W0912 02:18:15.553917 31660 reset.go:99] [reset] Unable to fetch the kubeadm-config ConfigMap from cluster: failed to get config map: configmaps "kubeadm-config" not found

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W0912 02:18:17.546319 31660 removeetcdmember.go:79] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/etcd /var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

[root@kubernetes-master-01 ~]# rm -rf kubeadm-init.yaml

[root@kubernetes-master-01 ~]# rm -rf /etc/kubernetes/

[root@kubernetes-master-01 ~]# rm -rf /var/lib/etcd/

[root@kubernetes-master-01 ~]# kubeadm config print init-defaults > kubeadm-init.yaml

W0912 02:19:15.842407 32092 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[root@kubernetes-master-01 ~]# vim kubeadm-init.yaml

[root@kubernetes-master-01 ~]# kubeadm init --config kubeadm-init.yaml --upload-certs

W0912 02:21:43.282656 32420 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.8

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes-master-01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.16.0.44 172.16.0.44 172.16.0.44 127.0.0.1 172.16.0.50 172.16.0.51 172.16.0.52 10.96.0.1]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [kubernetes-master-01 localhost] and IPs [172.16.0.44 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [kubernetes-master-01 localhost] and IPs [172.16.0.44 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "admin.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0912 02:21:47.217408 32420 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0912 02:21:47.218263 32420 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 22.017997 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

4148edd0a9c6e678ebca46567255522318b24936b9da7e517f687719f3dc33ac

[mark-control-plane] Marking the node kubernetes-master-01 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node kubernetes-master-01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 172.16.0.44:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:06df91bcacf6b5f206378a11065e2b6948eb2529503248f8e312fc0b913bec62 \

--control-plane --certificate-key 4148edd0a9c6e678ebca46567255522318b24936b9da7e517f687719f3dc33ac

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.16.0.44:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:06df91bcacf6b5f206378a11065e2b6948eb2529503248f8e312fc0b913bec62

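Initialization takes about half a minute. Next, join the other Master nodes and the Node nodes to form the cluster.

Configure kubectl for the Master user

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Join Master nodes to the cluster

Add the other Master nodes with the control-plane join command from the initialization output.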

kubeadm join 172.16.0.44:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:06df91bcacf6b5f206378a11065e2b6948eb2529503248f8e312fc0b913bec62 \

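--control-plane --certificate-key 4148edd0a9c6e678ebca46567255522318b24936b9da7e517f687719f3dc33ac

Join Node nodes to the cluster

Node nodes join the same way as Master nodes, using the worker join command from the initialization output (without --control-plane and --certificate-key).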

kubeadm join 172.16.0.44:8443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:06df91bcacf6b5f206378a11065e2b6948eb2529503248f8e312fc0b913bec62

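Deploy Node nodes

Installing from binaries

A binary installation makes the cluster easier to customize: each component can be configured individually according to the load it carries.

Certificates

Kubernetes has many components, and they communicate with one another over HTTP/gRPC to deploy and manage applications in the cluster. The master nodes in particular control the entire cluster, so securing this communication is especially important. Digital certificates are currently the most widely used way to do so, and Kubernetes uses them for authentication.

Install the cfssl certificate tool

Here we generate certificates with cfssl. Because the CA settings, validity periods, and similar parameters are written in JSON files in advance, certificate generation is more efficient and easier to automate. cfssl is an open-source certificate management tool written in Go.

cfssljson takes the JSON output of cfssl and writes the certificates, keys, CSRs, and bundles to files.

- Note:

Before installing Kubernetes from binaries, it is best to install Docker on every host.

- Download

# Download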

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

# Make them executable

chmod +x cfssljson_linux-amd64

chmod +x cfssl_linux-amd64

# 移动到/usr/local/bin

mv cfssljson_linux-amd64 cfssljson

mv cfssl_linux-amd64 cfssl

mv cfssljson cfssl /usr/local/binCopy to clipboardErrorCopied

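Create the root certificate

Architecturally, the most important parts of the cluster are etcd and the API server.

The root certificate is the basis of the trust relationship between a certificate authority (CA) and its users: a user's digital certificate is valid only if it is backed by a trusted root certificate.

Technically, a certificate contains three parts: the subject's information, the subject's public key, and the certificate signature.

The CA approves, issues, archives and revokes digital certificates. Each certificate it issues carries the CA's digital signature, so nobody except the CA can modify it without the change being detected.

- Create the JSON signing configuration

mkdir -p /root/cert/ca && cd /root/cert/ca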

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "8760h"

}

}

}

}

EOF

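default is the default policy; it sets the default certificate validity to one year (8760h).

profiles defines usage scenarios. Only kubernetes is defined here, but you can define several profiles with different expiry times, usages and other parameters, and choose one with -profile when signing a certificate.

signing: the certificate can be used to sign other certificates (as the generated ca.pem does);

server auth: a client can use this CA to verify the certificate presented by a server;

client auth: a server can use this CA to verify the certificate presented by a client.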
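The expiry values above are plain hour counts. As a quick sanity check, the small shell function below (a hypothetical helper, not part of cfssl) converts them to days: 8760h is 365 days, and the 87600h used for the Kubernetes CA later in this guide is 3650 days, roughly ten years.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a cfssl expiry such as "8760h" into whole days.
# Assumes the value is an integer number of hours with an "h" suffix,
# which is the only form used in the ca-config.json files of this guide.
expiry_days() {
  local hours=${1%h}   # strip the trailing "h"
  echo $(( hours / 24 ))
}

for e in 8760h 87600h; do
  echo "${e} = $(expiry_days "${e}") days"
done
```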

- Create the root CA certificate signing request (CSR)

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names":[{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai"

}]

}

EOF

C: country

ST: state or province

L: locality (city)

O: organization

OU: organizational unit

- Generate the certificate

[root@kubernetes-master-01 ca]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2020/08/28 23:51:50 [INFO] generating a new CA key and certificate from CSR

2020/08/28 23:51:50 [INFO] generate received request

2020/08/28 23:51:50 [INFO] received CSR

2020/08/28 23:51:50 [INFO] generating key: rsa-2048

2020/08/28 23:51:50 [INFO] encoded CSR

2020/08/28 23:51:50 [INFO] signed certificate with serial number 66427391707536599498414068348802775591392574059

[root@kubernetes-master-01 ca]# ll

总用量 20

-rw-r--r-- 1 root root 282 8月 28 23:41 ca-config.json

-rw-r--r-- 1 root root 1013 8月 28 23:51 ca.csr

-rw-r--r-- 1 root root 196 8月 28 23:41 ca-csr.json

-rw------- 1 root root 1675 8月 28 23:51 ca-key.pem

-rw-r--r-- 1 root root 1334 8月 28 23:51 ca.pem

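gencert: generate a new key and a signed certificate

--initca: initialize a new CA certificate

Deploy the etcd cluster

etcd is a distributed key-value store based on Raft, developed by the CoreOS team. It is commonly used for service discovery, shared configuration and concurrency control (such as leader election and distributed locks). Kubernetes stores its state and data in etcd.

etcd node plan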

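| etcd name | IP |
|---|---|
| etcd-01 | 172.16.0.50 |
| etcd-02 | 172.16.0.51 |
| etcd-03 | 172.16.0.52 |

Create the etcd certificate

The hosts field lists the internal cluster-communication IP of every etcd node, one entry per node. The list below also includes 172.16.0.53 and 172.16.0.54, the IPs of the worker nodes.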

mkdir -p /root/cert/etcd

cd /root/cert/etcd

cat > etcd-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"172.16.0.50",

"172.16.0.51",

"172.16.0.52",

"172.16.0.53",

"172.16.0.54"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai"

}

]

}

EOF
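If the number of etcd nodes changes, editing the hosts array by hand is easy to get wrong. A minimal sketch that builds the same etcd-csr.json from a Bash array is shown below; the array contents mirror the list above, and hosts_json is a hypothetical helper. The file is written to a temporary directory so the sketch can be tried safely.

```shell
#!/usr/bin/env bash
# Sketch: generate etcd-csr.json from an array of addresses.
# The addresses mirror the hosts list above; adjust them to your own plan.
ETCD_HOSTS=(127.0.0.1 172.16.0.50 172.16.0.51 172.16.0.52 172.16.0.53 172.16.0.54)

# Hypothetical helper: print its arguments as a JSON array of strings.
hosts_json() {
  local out="" h
  for h in "$@"; do
    out+="${out:+, }\"${h}\""   # add ", " before every element except the first
  done
  echo "[${out}]"
}

outdir=$(mktemp -d)   # temporary directory so the sketch is safe to run
cat > "${outdir}/etcd-csr.json" <<EOF
{
  "CN": "etcd",
  "hosts": $(hosts_json "${ETCD_HOSTS[@]}"),
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "ST": "ShangHai", "L": "ShangHai" } ]
}
EOF
cat "${outdir}/etcd-csr.json"
```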

Generate the certificate

[root@kubernetes-master-01 etcd]# cfssl gencert -ca=../ca/ca.pem -ca-key=../ca/ca-key.pem -config=../ca/ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

2020/08/29 00:02:20 [INFO] generate received request

2020/08/29 00:02:20 [INFO] received CSR

2020/08/29 00:02:20 [INFO] generating key: rsa-2048

2020/08/29 00:02:20 [INFO] encoded CSR

2020/08/29 00:02:20 [INFO] signed certificate with serial number 71348009702526539124716993806163559962125770315

[root@kubernetes-master-01 etcd]# ll

总用量 16

-rw-r--r-- 1 root root 1074 8月 29 00:02 etcd.csr

-rw-r--r-- 1 root root 352 8月 28 23:59 etcd-csr.json

-rw------- 1 root root 1675 8月 29 00:02 etcd-key.pem

-rw-r--r-- 1 root root 1460 8月 29 00:02 etcd.pem

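gencert: generate a new key and a signed certificate

-initca: initialize a new CA

-ca: the CA certificate

-ca-key: the CA private key file

-config: the signing configuration JSON file (ca-config.json)

-profile: the profile in the -config file whose settings are used to issue the certificate

Distribute the certificates to the etcd servers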

for ip in kubernetes-master-01 kubernetes-master-02 kubernetes-master-03

do

ssh root@${ip} "mkdir -pv /etc/etcd/ssl"

scp ../ca/ca*.pem root@${ip}:/etc/etcd/ssl

scp ./etcd*.pem root@${ip}:/etc/etcd/ssl

done

for ip in kubernetes-master-01 kubernetes-master-02 kubernetes-master-03; do

ssh root@${ip} "ls -l /etc/etcd/ssl";

done

Deploy etcd

wget https://mirrors.huaweicloud.com/etcd/v3.3.24/etcd-v3.3.24-linux-amd64.tar.gz

tar xf etcd-v3.3.24-linux-amd64.tar.gz

for i in kubernetes-master-02 kubernetes-master-01 kubernetes-master-03

do

scp ./etcd-v3.3.24-linux-amd64/etcd* root@$i:/usr/local/bin/

done

[root@kubernetes-master-01 etcd-v3.3.24-linux-amd64]# etcd --version

etcd Version: 3.3.24

Git SHA: bdd57848d

Go Version: go1.12.17

Go OS/Arch: linux/amd64

Manage etcd with systemd

mkdir -pv /etc/kubernetes/conf/etcd

ETCD_NAME=`hostname`

INTERNAL_IP=`hostname -i`

INITIAL_CLUSTER=kubernetes-master-01=https://172.16.0.50:2380,kubernetes-master-02=https://172.16.0.51:2380,kubernetes-master-03=https://172.16.0.52:2380

cat << EOF | sudo tee /usr/lib/systemd/system/etcd.service

[Unit]

Description=etcd

Documentation=https://github.com/coreos

[Service]

ExecStart=/usr/local/bin/etcd \\

--name ${ETCD_NAME} \\

--cert-file=/etc/etcd/ssl/etcd.pem \\

--key-file=/etc/etcd/ssl/etcd-key.pem \\

--peer-cert-file=/etc/etcd/ssl/etcd.pem \\

--peer-key-file=/etc/etcd/ssl/etcd-key.pem \\

--trusted-ca-file=/etc/etcd/ssl/ca.pem \\

--peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\

--peer-client-cert-auth \\

--client-cert-auth \\

--initial-advertise-peer-urls https://${INTERNAL_IP}:2380 \\

--listen-peer-urls https://${INTERNAL_IP}:2380 \\

--listen-client-urls https://${INTERNAL_IP}:2379,https://127.0.0.1:2379 \\

--advertise-client-urls https://${INTERNAL_IP}:2379 \\

--initial-cluster-token etcd-cluster \\

--initial-cluster ${INITIAL_CLUSTER} \\

--initial-cluster-state new \\

--data-dir=/var/lib/etcd

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF
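--initial-cluster must list every member as name=peer-URL, and each node's --name (taken from hostname here) must match one of those names, otherwise etcd fails to start because it cannot find its local name in the initial cluster configuration. A sketch that derives INITIAL_CLUSTER from a single name-to-IP table and checks the local hostname is shown below, assuming Bash 4+ for associative arrays; build_initial_cluster and is_member are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch (Bash 4+): one name -> IP table for the etcd members.
# The names must equal each node's hostname, because the unit file
# above uses ETCD_NAME=`hostname` as --name.
declare -A ETCD_MEMBERS=(
  [kubernetes-master-01]=172.16.0.50
  [kubernetes-master-02]=172.16.0.51
  [kubernetes-master-03]=172.16.0.52
)

# Hypothetical helper: build the --initial-cluster value, sorted by name
# because Bash does not preserve associative array order.
build_initial_cluster() {
  local name out=""
  for name in $(printf '%s\n' "${!ETCD_MEMBERS[@]}" | sort); do
    out+="${out:+,}${name}=https://${ETCD_MEMBERS[$name]}:2380"
  done
  echo "${out}"
}

# Hypothetical helper: succeed if $1 is a non-empty member name.
is_member() {
  [[ -n $1 && -n "${ETCD_MEMBERS[$1]+set}" ]]
}

INITIAL_CLUSTER=$(build_initial_cluster)
echo "INITIAL_CLUSTER=${INITIAL_CLUSTER}"

# Bash sets HOSTNAME to the same name that the hostname command prints.
if ! is_member "${HOSTNAME}"; then
  echo "warning: ${HOSTNAME} is not listed in INITIAL_CLUSTER" >&2
fi
```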

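Configuration options

| Option | Description |
|---|---|
| --name | Node name |
| --data-dir | Data directory of the node |
| --listen-peer-urls | Addresses used to communicate with the other cluster members |
| --listen-client-urls | Local addresses on which client requests are served |
| --initial-advertise-peer-urls | This node's peer URLs, advertised to the other cluster members |
| --advertise-client-urls | This node's client URLs, advertised to the rest of the cluster |
| --initial-cluster | All members of the initial cluster |
| --initial-cluster-token | Cluster token; must be the same on every member |
| --initial-cluster-state | Initial cluster state; new (the default) when creating a cluster |
| --cert-file | Path to the TLS certificate for client-server communication |
| --key-file | Path to the TLS key for client-server communication |
| --peer-cert-file | Path to the TLS certificate for peer communication |
| --peer-key-file | Path to the TLS key for peer communication |
| --trusted-ca-file | CA certificate that signed the client certificates, used to verify them |
| --peer-trusted-ca-file | CA certificate that signed the peer certificates, used to verify them |

Start etcd

# Run on all three etcd nodes

systemctl enable --now etcd

Test the etcd cluster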

ETCDCTL_API=3 etcdctl \

--cacert=/etc/etcd/ssl/ca.pem \

--cert=/etc/etcd/ssl/etcd.pem \

--key=/etc/etcd/ssl/etcd-key.pem \

--endpoints="https://172.16.0.50:2379,https://172.16.0.51:2379,https://172.16.0.52:2379" \

endpoint status --write-out='table'

ETCDCTL_API=3 etcdctl \

--cacert=/etc/etcd/ssl/ca.pem \

--cert=/etc/etcd/ssl/etcd.pem \

--key=/etc/etcd/ssl/etcd-key.pem \

--endpoints="https://172.16.0.50:2379,https://172.16.0.51:2379,https://172.16.0.52:2379" \

member list --write-out='table'

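Create the cluster certificates

The master nodes are the most important part of the cluster: they run the most components and are the most complex to deploy.

Master node plan

| Hostname (role) | IP |
|---|---|
| Kubernetes-master-01 | 172.16.0.50 |
| Kubernetes-master-02 | 172.16.0.51 |
| Kubernetes-master-03 | 172.16.0.52 |

Create the root certificate

Create a working directory in which to build the certificates.

- Create the root CA configuration

[root@kubernetes-master-01 k8s]# mkdir -p /opt/cert/k8s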

[root@kubernetes-master-01 k8s]# cd /opt/cert/k8s

[root@kubernetes-master-01 k8s]# pwd

/opt/cert/k8s

[root@kubernetes-master-01 k8s]# cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

- Create the root certificate signing request

[root@kubernetes-master-01 k8s]# cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

[root@kubernetes-master-01 k8s]# ll

total 8

-rw-r--r-- 1 root root 294 Sep 13 19:59 ca-config.json

-rw-r--r-- 1 root root 212 Sep 13 20:01 ca-csr.json

- Generate the root certificate

[root@kubernetes-master-01 k8s]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2020/09/13 20:01:45 [INFO] generating a new CA key and certificate from CSR

2020/09/13 20:01:45 [INFO] generate received request

2020/09/13 20:01:45 [INFO] received CSR

2020/09/13 20:01:45 [INFO] generating key: rsa-2048

2020/09/13 20:01:46 [INFO] encoded CSR

2020/09/13 20:01:46 [INFO] signed certificate with serial number 588993429584840635805985813644877690042550093427

[root@kubernetes-master-01 k8s]# ll

total 20

-rw-r--r-- 1 root root 294 Sep 13 19:59 ca-config.json

-rw-r--r-- 1 root root 960 Sep 13 20:01 ca.csr

-rw-r--r-- 1 root root 212 Sep 13 20:01 ca-csr.json

-rw------- 1 root root 1679 Sep 13 20:01 ca-key.pem

-rw-r--r-- 1 root root 1273 Sep 13 20:01 ca.pem

Issue the kube-apiserver certificate

- Create the kube-apiserver CSR configuration

cat > server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"172.16.0.50",

"172.16.0.51",

"172.16.0.52",

"172.16.0.55",

"10.96.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai"

}

]

}

EOF

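hosts: localhost, the IPs of the master nodes, the IPs of the etcd nodes, the load balancer VIP (172.16.0.55), the first usable address of the Service IP range (10.96.0.1), and the default in-cluster names of the kubernetes Service.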
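The 10.96.0.1 entry is the first usable address of the range passed to kube-apiserver as --service-cluster-ip-range (10.96.0.0/16 in this guide); the built-in kubernetes Service receives that ClusterIP, and Pods reach the apiserver through it. If you choose a different Service range, the sketch below computes the address to put in hosts. It is plain Bash arithmetic, and first_service_ip and its helpers are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: first usable address of a Service CIDR, i.e. the ClusterIP of the
# built-in "kubernetes" Service. All helper names are hypothetical.

# Dotted quad -> 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# 32-bit integer -> dotted quad.
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Network address of the CIDR plus one.
first_service_ip() {
  local cidr=$1
  local base=${cidr%/*} prefix=${cidr#*/}
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  int_to_ip $(( ( $(ip_to_int "${base}") & mask ) + 1 ))
}

first_service_ip 10.96.0.0/16   # the --service-cluster-ip-range used in this guide
```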

- Generate the certificate

[root@kubernetes-master-01 k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

2020/08/29 12:29:41 [INFO] generate received request

2020/08/29 12:29:41 [INFO] received CSR

2020/08/29 12:29:41 [INFO] generating key: rsa-2048

2020/08/29 12:29:41 [INFO] encoded CSR

2020/08/29 12:29:41 [INFO] signed certificate with serial number 701177072439793091180552568331885323625122463841

Issue the kube-controller-manager certificate

- Create the kube-controller-manager CSR configuration

cat > kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"172.16.0.50",

"172.16.0.51",

"172.16.0.52",

"172.16.0.55"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:kube-controller-manager",

"OU": "System"

}

]

}

EOF

- Generate the certificate

[root@kubernetes-master-01 k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

2020/08/29 12:40:21 [INFO] generate received request

2020/08/29 12:40:21 [INFO] received CSR

2020/08/29 12:40:21 [INFO] generating key: rsa-2048

2020/08/29 12:40:22 [INFO] encoded CSR

2020/08/29 12:40:22 [INFO] signed certificate with serial number 464924254532468215049650676040995556458619239240

Issue the kube-scheduler certificate

- Create the kube-scheduler CSR configuration

cat > kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"172.16.0.50",

"172.16.0.51",

"172.16.0.52",

"172.16.0.55"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:kube-scheduler",

"OU": "System"

}

]

}

EOF

- Generate the certificate

[root@kubernetes-master-01 k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2020/08/29 12:42:29 [INFO] generate received request

2020/08/29 12:42:29 [INFO] received CSR

2020/08/29 12:42:29 [INFO] generating key: rsa-2048

2020/08/29 12:42:29 [INFO] encoded CSR

2020/08/29 12:42:29 [INFO] signed certificate with serial number 420546069405900774170348492061478728854870171400

Issue the kube-proxy certificate

- Create the kube-proxy CSR configuration

cat > kube-proxy-csr.json << EOF

{

"CN":"system:kube-proxy",

"hosts":[],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:kube-proxy",

"OU":"System"

}

]

}

EOF

- Generate the certificate

[root@kubernetes-master-01 k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2020/08/29 12:45:11 [INFO] generate received request

2020/08/29 12:45:11 [INFO] received CSR

2020/08/29 12:45:11 [INFO] generating key: rsa-2048

2020/08/29 12:45:11 [INFO] encoded CSR

2020/08/29 12:45:11 [INFO] signed certificate with serial number 39717174368771783903269928946823692124470234079

2020/08/29 12:45:11 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

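specifically, section 10.2.3 ("Information Requirements").

Issue the admin (operator) certificate

To let cluster client tools access the cluster securely, create a certificate for the cluster client. Its organization (O) is system:masters, which gives it full permissions on the cluster.

- Create the CSR configuration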

cat > admin-csr.json << EOF

{

"CN":"admin",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:masters",

"OU":"System"

}

]

}

EOF

- Generate the certificate

[root@kubernetes-master-01 k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2020/08/29 12:50:46 [INFO] generate received request

2020/08/29 12:50:46 [INFO] received CSR

2020/08/29 12:50:46 [INFO] generating key: rsa-2048

2020/08/29 12:50:46 [INFO] encoded CSR

2020/08/29 12:50:46 [INFO] signed certificate with serial number 247283053743606613190381870364866954196747322330

2020/08/29 12:50:46 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

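specifically, section 10.2.3 ("Information Requirements").

Distribute the certificates

Certificates needed on the master nodes: ca, kube-apiserver, kube-controller-manager, kube-scheduler, the admin (user) certificate, and the etcd certificate

Certificates needed on the worker nodes: ca, the admin (user) certificate, and the kube-proxy certificate

VIP node: the admin (user) certificate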
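Before starting any component it can help to confirm that each host received the files it needs. The sketch below encodes the distribution plan above as per-role file lists (etcd certificates are left out because they live in /etc/etcd/ssl) and reports anything missing. check_certs is a hypothetical helper, the file names assume the cfssljson output names used in this guide, and Bash 4+ is required for the associative array.

```shell
#!/usr/bin/env bash
# Sketch (Bash 4+): report certificate files missing for a given role.
declare -A REQUIRED_CERTS=(
  [master]="ca.pem ca-key.pem server.pem server-key.pem kube-controller-manager.pem kube-controller-manager-key.pem kube-scheduler.pem kube-scheduler-key.pem admin.pem admin-key.pem"
  [node]="ca.pem ca-key.pem admin.pem admin-key.pem kube-proxy.pem kube-proxy-key.pem"
  [vip]="admin.pem admin-key.pem"
)

# Usage: check_certs <master|node|vip> <directory>
# Prints one "missing:" line per absent file; returns 1 if any is missing.
check_certs() {
  local role=$1 dir=$2 f missing=0
  if [[ -z ${role} || -z "${REQUIRED_CERTS[$role]+set}" ]]; then
    echo "unknown role: ${role}" >&2
    return 2
  fi
  for f in ${REQUIRED_CERTS[$role]}; do   # unquoted on purpose: split the list
    if [[ ! -f "${dir}/${f}" ]]; then
      echo "missing: ${dir}/${f}"
      missing=1
    fi
  done
  return "${missing}"
}

# Demo in a temporary directory that only holds the admin certificate pair.
demo=$(mktemp -d)
touch "${demo}/admin.pem" "${demo}/admin-key.pem"
check_certs vip "${demo}" && echo "vip: all certificates present"
check_certs node "${demo}" || echo "node: certificates missing"
```

On a real host the call would be, for example, `check_certs node /etc/kubernetes/ssl`.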

- Distribute the certificates to the master nodes

mkdir -pv /etc/kubernetes/ssl

cp -p ./{ca*pem,server*pem,kube-controller-manager*pem,kube-scheduler*.pem,kube-proxy*pem,admin*.pem} /etc/kubernetes/ssl

for i in kubernetes-master-02 kubernetes-master-03; do

ssh root@$i "mkdir -pv /etc/kubernetes/ssl"

scp /etc/kubernetes/ssl/* root@$i:/etc/kubernetes/ssl

done

[root@kubernetes-master-01 k8s]# for i in kubernetes-master-02 kubernetes-master-03; do

> ssh root@$i "mkdir -pv /etc/kubernetes/ssl"

> scp /etc/kubernetes/ssl/* root@$i:/etc/kubernetes/ssl

> done

mkdir: created directory ‘/etc/kubernetes/ssl’

admin-key.pem 100% 1679 562.2KB/s 00:00

admin.pem 100% 1359 67.5KB/s 00:00

ca-key.pem 100% 1679 39.5KB/s 00:00

ca.pem 100% 1273 335.6KB/s 00:00

kube-controller-manager-key.pem 100% 1679 489.6KB/s 00:00

kube-controller-manager.pem 100% 1472 69.4KB/s 00:00

kube-proxy-key.pem 100% 1679 646.6KB/s 00:00

kube-proxy.pem 100% 1379 672.8KB/s 00:00

kube-scheduler-key.pem 100% 1679 472.1KB/s 00:00

kube-scheduler.pem 100% 1448 82.7KB/s 00:00

server-key.pem 100% 1675 898.3KB/s 00:00

server.pem 100% 1554 2.2MB/s 00:00

mkdir: created directory ‘/etc/kubernetes/ssl’

admin-key.pem 100% 1679 826.3KB/s 00:00

admin.pem 100% 1359 1.1MB/s 00:00

ca-key.pem 100% 1679 127.4KB/s 00:00

ca.pem 100% 1273 50.8KB/s 00:00

kube-controller-manager-key.pem 100% 1679 197.7KB/s 00:00

kube-controller-manager.pem 100% 1472 833.7KB/s 00:00

kube-proxy-key.pem 100% 1679 294.6KB/s 00:00

kube-proxy.pem 100% 1379 94.9KB/s 00:00

kube-scheduler-key.pem 100% 1679 411.2KB/s 00:00

kube-scheduler.pem 100% 1448 430.4KB/s 00:00

server-key.pem 100% 1675 924.0KB/s 00:00

server.pem 100% 1554 126.6KB/s 00:00

- Distribute the certificates to the worker nodes

for i in kubernetes-node-01 kubernetes-node-02; do

ssh root@$i "mkdir -pv /etc/kubernetes/ssl"

scp -pr ./{ca*.pem,admin*pem,kube-proxy*pem} root@$i:/etc/kubernetes/ssl

done

[root@kubernetes-master-01 k8s]# for i in kubernetes-node-01 kubernetes-node-02; do

> ssh root@$i "mkdir -pv /etc/kubernetes/ssl"

> scp -pr ./{ca*.pem,admin*pem,kube-proxy*pem} root@$i:/etc/kubernetes/ssl

> done

The authenticity of host 'kubernetes-node-01 (172.16.0.53)' can't be established.

ECDSA key fingerprint is SHA256:5N7Cr3nku+MMnJyG3CnY3tchGfNYhxDuIulGceQXWd4.

ECDSA key fingerprint is MD5:aa:ba:4e:29:5d:81:0f:be:9b:cd:54:9b:47:48:e4:33.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'kubernetes-node-01,172.16.0.53' (ECDSA) to the list of known hosts.

root@kubernetes-node-01's password:

mkdir: created directory ‘/etc/kubernetes’

mkdir: created directory ‘/etc/kubernetes/ssl’

root@kubernetes-node-01's password:

ca-key.pem 100% 1679 361.6KB/s 00:00

ca.pem 100% 1273 497.5KB/s 00:00

admin-key.pem 100% 1679 98.4KB/s 00:00

admin.pem 100% 1359 116.8KB/s 00:00

kube-proxy-key.pem 100% 1679 494.7KB/s 00:00

kube-proxy.pem 100% 1379 45.5KB/s 00:00

The authenticity of host 'kubernetes-node-02 (172.16.0.54)' can't be established.

ECDSA key fingerprint is SHA256:5N7Cr3nku+MMnJyG3CnY3tchGfNYhxDuIulGceQXWd4.

ECDSA key fingerprint is MD5:aa:ba:4e:29:5d:81:0f:be:9b:cd:54:9b:47:48:e4:33.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'kubernetes-node-02,172.16.0.54' (ECDSA) to the list of known hosts.

root@kubernetes-node-02's password:

mkdir: created directory ‘/etc/kubernetes’

mkdir: created directory ‘/etc/kubernetes/ssl’

root@kubernetes-node-02's password:

ca-key.pem 100% 1679 211.3KB/s 00:00

ca.pem 100% 1273 973.0KB/s 00:00

admin-key.pem 100% 1679 302.2KB/s 00:00

admin.pem 100% 1359 285.6KB/s 00:00

kube-proxy-key.pem 100% 1679 79.8KB/s 00:00

kube-proxy.pem 100% 1379 416.5KB/s 00:00

- Distribute the certificates to the VIP node

[root@kubernetes-master-01 k8s]# ssh root@kubernetes-master-vip "mkdir -pv /etc/kubernetes/ssl"

mkdir: 已创建目录 "/etc/kubernetes"

mkdir: 已创建目录 "/etc/kubernetes/ssl"

[root@kubernetes-master-01 k8s]# scp admin*pem root@kubernetes-master-vip:/etc/kubernetes/ssl

admin-key.pem 100% 1679 3.8MB/s 00:00

admin.pem

Deploy the master nodes

Kubernetes is hosted on GitHub; the release binaries it publishes can be downloaded from dl.k8s.io.

Download the binaries

# Download the server package

wget https://dl.k8s.io/v1.19.0/kubernetes-server-linux-amd64.tar.gz

# Download the client package

wget https://dl.k8s.io/v1.19.0/kubernetes-client-linux-amd64.tar.gz

# Download the node package

wget https://dl.k8s.io/v1.19.0/kubernetes-node-linux-amd64.tar.gz

[root@kubernetes-master-01 ~]# ll

-rw-r--r-- 1 root root 13237066 8月 29 02:51 kubernetes-client-linux-amd64.tar.gz

-rw-r--r-- 1 root root 97933232 8月 29 02:51 kubernetes-node-linux-amd64.tar.gz

-rw-r--r-- 1 root root 363943527 8月 29 02:51 kubernetes-server-linux-amd64.tar.gz

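# If the downloads fail, use the method below instead. Note that this image is tagged v1.18.8.1, which does not match the v1.19.0 packages above.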

[root@kubernetes-master-01 k8s]# docker pull registry.cn-hangzhou.aliyuncs.com/k8sos/k8s:v1.18.8.1

v1.18.8.1: Pulling from k8sos/k8s

75f829a71a1c: Pull complete

183ee8383f81: Pull complete

a5955b997bb4: Pull complete

5401bb259bcd: Pull complete

0c05c4d60f48: Pull complete

6a216d9c9d7c: Pull complete

6711ab2c0ba7: Pull complete

3ff1975ab201: Pull complete

Digest: sha256:ee02569b218a4bab3f64a7be0b23a9feda8c6717e03f30da83f80387aa46e202

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/k8sos/k8s:v1.18.8.1

registry.cn-hangzhou.aliyuncs.com/k8sos/k8s:v1.18.8.1

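# Then copy the binaries out of the container.

Distribute the binaries

Run the following from the bin directory of the extracted server package (kubernetes/server/bin).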

[root@kubernetes-master-01 bin]# for i in kubernetes-master-01 kubernetes-master-02 kubernetes-master-03; do scp kube-apiserver kube-controller-manager kube-scheduler kubectl root@$i:/usr/local/bin/; done

kube-apiserver 100% 115MB 94.7MB/s 00:01

kube-controller-manager 100% 105MB 87.8MB/s 00:01

kube-scheduler 100% 41MB 88.2MB/s 00:00

kubectl 100% 42MB 95.7MB/s 00:00

kube-apiserver 100% 115MB 118.4MB/s 00:00

kube-controller-manager 100% 105MB 107.3MB/s 00:00

kube-scheduler 100% 41MB 119.9MB/s 00:00

kubectl 100% 42MB 86.0MB/s 00:00

kube-apiserver 100% 115MB 120.2MB/s 00:00

kube-controller-manager 100% 105MB 108.1MB/s 00:00

kube-scheduler 100% 41MB 102.4MB/s 00:00

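kubectl 100% 42MB 124.3MB/s 00:00

Configure TLS bootstrapping

TLS bootstrapping is a mechanism that simplifies configuring mutually authenticated, encrypted communication between the kubelet and the apiserver. Once TLS authentication is enabled in the cluster, the kubelet on every node must present a valid certificate signed by the CA that the apiserver uses before it can talk to the apiserver. Signing a separate certificate for each of many nodes is tedious and error-prone, and mistakes can destabilize the cluster.

With TLS bootstrapping, the kubelet on each node first connects to the apiserver as a predefined low-privilege user and requests a certificate; the apiserver then signs it dynamically and issues it to the node, which automates certificate signing.

- Generate the token required for TLS bootstrapping

# Generate your own token on your own machine; do not reuse the one shown below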

TLS_BOOTSTRAPPING_TOKEN=`head -c 16 /dev/urandom | od -An -t x | tr -d ' '`

cat > token.csv << EOF

${TLS_BOOTSTRAPPING_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

[root@kubernetes-master-01 k8s]# TLS_BOOTSTRAPPING_TOKEN=`head -c 16 /dev/urandom | od -An -t x | tr -d ' '`

[root@kubernetes-master-01 k8s]#

[root@kubernetes-master-01 k8s]# cat > token.csv << EOF

> ${TLS_BOOTSTRAPPING_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

> EOF

[root@kubernetes-master-01 k8s]# cat token.csv

1b076dcc88e04d64c3a9e7d7a1586fe5,kubelet-bootstrap,10001,"system:kubelet-bootstrap"


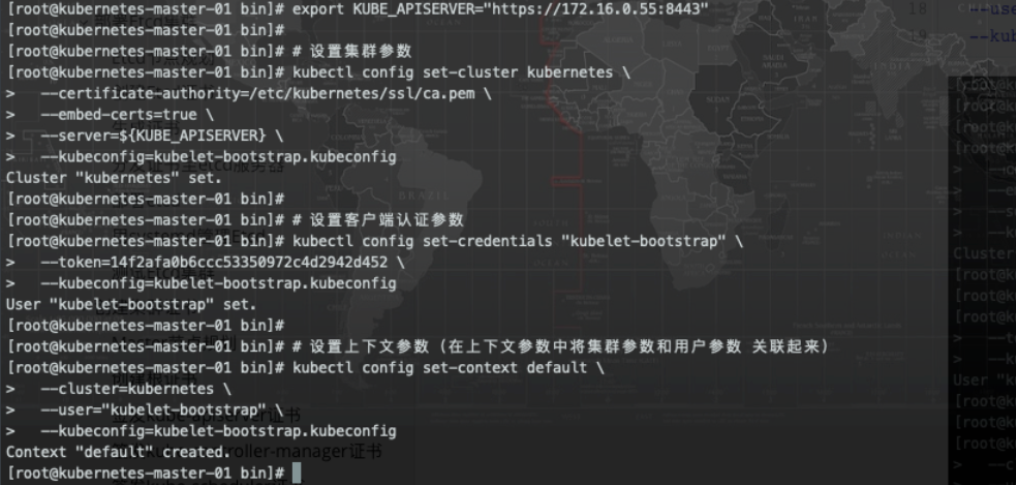
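The token in token.csv is reused later in kubelet-bootstrap.kubeconfig, so copying it by hand invites mismatches. The sketch below generates token.csv the same way as above, checks the token format and reads the token back. The columns (token, user, UID, group) follow the file shown above, valid_token and read_token are hypothetical helpers, and the file goes to a temporary directory here.

```shell
#!/usr/bin/env bash
# Sketch: generate token.csv as above, validate the token and read it back.
workdir=$(mktemp -d)   # temporary location instead of the real working directory

TLS_BOOTSTRAPPING_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
echo "${TLS_BOOTSTRAPPING_TOKEN},kubelet-bootstrap,10001,\"system:kubelet-bootstrap\"" \
  > "${workdir}/token.csv"

# 16 random bytes printed in hex are exactly 32 lowercase hex digits.
valid_token() {
  [[ $1 =~ ^[0-9a-f]{32}$ ]]
}

# The token is the first comma-separated column of token.csv.
read_token() {
  cut -d, -f1 "$1"
}

token=$(read_token "${workdir}/token.csv")
if valid_token "${token}"; then
  echo "token OK: ${token}"
else
  echo "unexpected token format: ${token}" >&2
fi
```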

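Create the cluster configuration files

Kubernetes components use kubeconfig files to describe the cluster, the user, the namespace and the authentication information.

Create the kubelet-bootstrap.kubeconfig file

export KUBE_APISERVER="https://172.16.0.55:8443"

# Set the cluster parameters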

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kubelet-bootstrap.kubeconfig

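# Set the client credentials. The token must be the one in token.csv generated above; it is read from that file here (run this in the directory that contains token.csv).

kubectl config set-credentials "kubelet-bootstrap" \

--token=$(cut -d, -f1 token.csv) \

--kubeconfig=kubelet-bootstrap.kubeconfig

# Set the context (the context links the cluster parameters with the user parameters)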

kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=kubelet-bootstrap.kubeconfig

# Use this context by default

kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig

Create the kube-controller-manager.kubeconfig file

export KUBE_APISERVER="https://172.16.0.55:8443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-controller-manager.kubeconfig

# Set the client credentials

kubectl config set-credentials "kube-controller-manager" \

--client-certificate=/etc/kubernetes/ssl/kube-controller-manager.pem \

--client-key=/etc/kubernetes/ssl/kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=kube-controller-manager.kubeconfig

# Set the context (the context links the cluster parameters with the user parameters)

kubectl config set-context default \

--cluster=kubernetes \

--user="kube-controller-manager" \

--kubeconfig=kube-controller-manager.kubeconfig

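# Use this context by default

kubectl config use-context default --kubeconfig=kube-controller-manager.kubeconfig

--certificate-authority: the root certificate used to verify the kube-apiserver certificate

--client-certificate, --client-key: the kube-controller-manager certificate and private key generated earlier, used when connecting to kube-apiserver

--embed-certs=true: embed the contents of ca.pem and the kube-controller-manager certificate in the generated kubeconfig file (without it, only the certificate file paths are written)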
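With --embed-certs=true the kubeconfig stores each PEM file base64-encoded in a *-data field (certificate-authority-data, client-certificate-data, client-key-data) instead of a file path. On a real file, `kubectl config view --raw --kubeconfig=<file>` prints these fields. The sketch below decodes one field with awk and base64, using a fake PEM so it runs anywhere; extract_field is a hypothetical helper and assumes a kubeconfig with a single cluster and user, like the ones generated here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded value of one *-data field.
# Assumes one entry per field, as in the kubeconfig files of this guide.
extract_field() {
  local field=$1 kubeconfig=$2
  awk -v f="${field}:" '$1 == f { print $2; exit }' "${kubeconfig}" | base64 -d
}

# Self-contained demo: a fake PEM embedded the way --embed-certs=true does it.
tmp=$(mktemp -d)
printf -- '-----BEGIN CERTIFICATE-----\nMIIdemo\n-----END CERTIFICATE-----\n' > "${tmp}/ca.pem"
cat > "${tmp}/demo.kubeconfig" <<EOF
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: $(base64 -w0 "${tmp}/ca.pem")
    server: https://172.16.0.55:8443
  name: kubernetes
EOF

extract_field certificate-authority-data "${tmp}/demo.kubeconfig"
```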

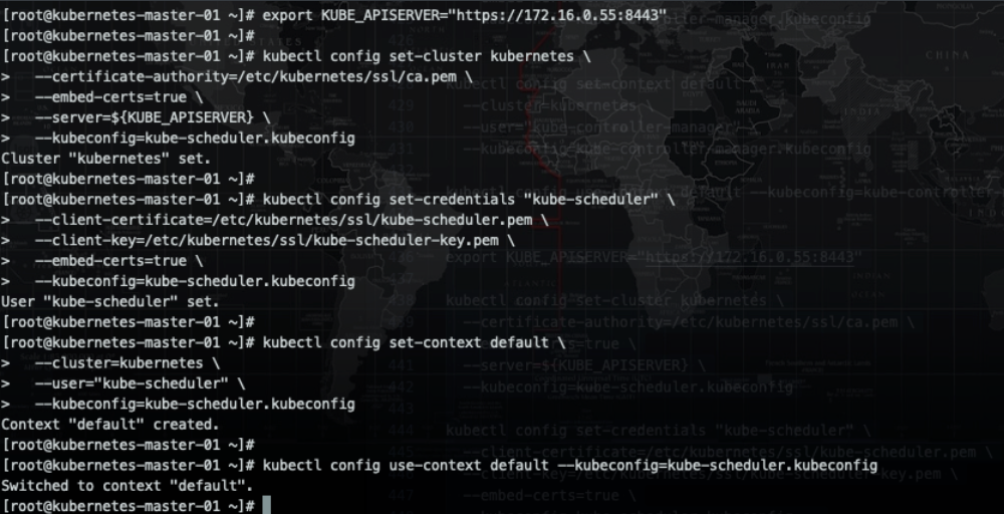

Create the kube-scheduler.kubeconfig file

export KUBE_APISERVER="https://172.16.0.55:8443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-scheduler.kubeconfig

# Set the client credentials

kubectl config set-credentials "kube-scheduler" \

--client-certificate=/etc/kubernetes/ssl/kube-scheduler.pem \

--client-key=/etc/kubernetes/ssl/kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=kube-scheduler.kubeconfig

# Set the context (the context links the cluster parameters with the user parameters)

kubectl config set-context default \

--cluster=kubernetes \

--user="kube-scheduler" \

--kubeconfig=kube-scheduler.kubeconfig

# 配置默认上下文

kubectl config use-context default --kubeconfig=kube-scheduler.kubeconfigCopy to clipboardErrorCopied

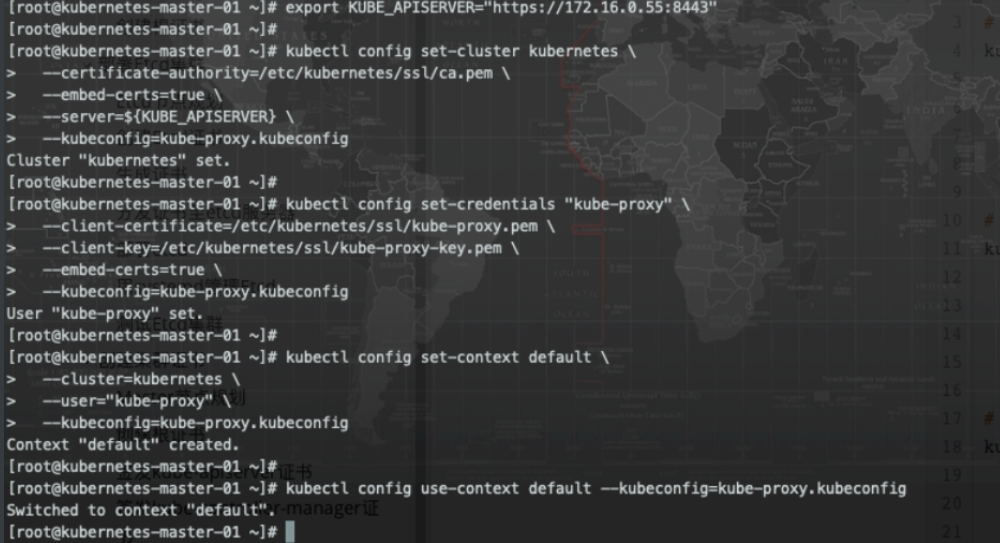

创建kube-proxy.kubeconfig文件

export KUBE_APISERVER="https://172.16.0.55:8443"

# 设置集群参数

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

# Set the client credentials

kubectl config set-credentials "kube-proxy" \

--client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \

--client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

# Set the context (the context links the cluster parameters with the user parameters)

kubectl config set-context default \

--cluster=kubernetes \

--user="kube-proxy" \

--kubeconfig=kube-proxy.kubeconfig

# Use this context by default

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Create the admin.kubeconfig file

export KUBE_APISERVER="https://172.16.0.55:8443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=admin.kubeconfig

# Set the client credentials

kubectl config set-credentials "admin" \

--client-certificate=/etc/kubernetes/ssl/admin.pem \

--client-key=/etc/kubernetes/ssl/admin-key.pem \

--embed-certs=true \

--kubeconfig=admin.kubeconfig

# Set the context (the context links the cluster parameters with the user parameters)

kubectl config set-context default \

--cluster=kubernetes \

--user="admin" \

--kubeconfig=admin.kubeconfig

# Use this context by default

kubectl config use-context default --kubeconfig=admin.kubeconfig

Distribute the configuration files to the master nodes

[root@kubernetes-master-01 ~]# for i in kubernetes-master-01 kubernetes-master-02 kubernetes-master-03;

do

ssh root@$i "mkdir -p /etc/kubernetes/cfg";

scp token.csv kube-scheduler.kubeconfig kube-controller-manager.kubeconfig admin.kubeconfig kube-proxy.kubeconfig kubelet-bootstrap.kubeconfig root@$i:/etc/kubernetes/cfg;

done

token.csv 100% 84 662.0KB/s 00:00

kube-scheduler.kubeconfig 100% 6159 47.1MB/s 00:00

kube-controller-manager.kubeconfig 100% 6209 49.4MB/s 00:00

admin.conf 100% 6021 51.0MB/s 00:00

kube-proxy.kubeconfig 100% 6059 52.7MB/s 00:00

kubelet-bootstrap.kubeconfig 100% 1985 25.0MB/s 00:00

token.csv 100% 84 350.5KB/s 00:00

kube-scheduler.kubeconfig 100% 6159 20.0MB/s 00:00

kube-controller-manager.kubeconfig 100% 6209 20.7MB/s 00:00

admin.conf 100% 6021 23.4MB/s 00:00

kube-proxy.kubeconfig 100% 6059 20.0MB/s 00:00

kubelet-bootstrap.kubeconfig 100% 1985 4.4MB/s 00:00

token.csv 100% 84 411.0KB/s 00:00

kube-scheduler.kubeconfig 100% 6159 19.6MB/s 00:00

kube-controller-manager.kubeconfig 100% 6209 21.4MB/s 00:00

admin.conf 100% 6021 19.9MB/s 00:00

kube-proxy.kubeconfig 100% 6059 20.1MB/s 00:00

kubelet-bootstrap.kubeconfig 100% 1985 9.8MB/s 00:00

[root@kubernetes-master-01 ~]#

Distribute the configuration files to the worker nodes

[root@kubernetes-master-01 ~]# for i in kubernetes-node-01 kubernetes-node-02;

do

ssh root@$i "mkdir -p /etc/kubernetes/cfg";

scp kube-proxy.kubeconfig kubelet-bootstrap.kubeconfig root@$i:/etc/kubernetes/cfg;

done

kube-proxy.kubeconfig 100% 6059 18.9MB/s 00:00

kubelet-bootstrap.kubeconfig 100% 1985 8.1MB/s 00:00

kube-proxy.kubeconfig 100% 6059 16.2MB/s 00:00

kubelet-bootstrap.kubeconfig 100% 1985 9.9MB/s 00:00

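[root@kubernetes-master-01 ~]#

Deploy kube-apiserver

- Create the kube-apiserver configuration file (run this on each of the three master nodes rather than copying the file, because --advertise-address must be the node's own API server IP)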

KUBE_APISERVER_IP=`hostname -i`

cat > /etc/kubernetes/cfg/kube-apiserver.conf << EOF

KUBE_APISERVER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/var/log/kubernetes \\

--advertise-address=${KUBE_APISERVER_IP} \\

--default-not-ready-toleration-seconds=360 \\

--default-unreachable-toleration-seconds=360 \\

--max-mutating-requests-inflight=2000 \\

--max-requests-inflight=4000 \\

--default-watch-cache-size=200 \\

--delete-collection-workers=2 \\

--bind-address=0.0.0.0 \\

--secure-port=6443 \\

--allow-privileged=true \\

--service-cluster-ip-range=10.96.0.0/16 \\

--service-node-port-range=10-52767 \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--enable-bootstrap-token-auth=true \\

--token-auth-file=/etc/kubernetes/cfg/token.csv \\

--kubelet-client-certificate=/etc/kubernetes/ssl/server.pem \\

--kubelet-client-key=/etc/kubernetes/ssl/server-key.pem \\

--tls-cert-file=/etc/kubernetes/ssl/server.pem \\

--tls-private-key-file=/etc/kubernetes/ssl/server-key.pem \\

--client-ca-file=/etc/kubernetes/ssl/ca.pem \\

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/var/log/kubernetes/k8s-audit.log \\

--etcd-servers=https://172.16.0.50:2379,https://172.16.0.51:2379,https://172.16.0.52:2379 \\

--etcd-cafile=/etc/etcd/ssl/ca.pem \\

--etcd-certfile=/etc/etcd/ssl/etcd.pem \\

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem"

EOF
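The configuration above takes --advertise-address from `hostname -i`, which resolves the hostname through /etc/hosts or DNS and may print a loopback address (for example 127.0.1.1) or several addresses. A small guard that keeps the first non-loopback IPv4 address is sketched below; pick_node_ip is a hypothetical helper, and `hostname -I`, which lists all configured addresses, is an alternative input.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first non-loopback IPv4 address among its
# arguments; return 1 if there is none.
pick_node_ip() {
  local addr
  for addr in "$@"; do
    [[ ${addr} =~ ^[0-9]+(\.[0-9]+){3}$ ]] || continue   # skip IPv6 and other text
    [[ ${addr} == 127.* ]] && continue                     # skip loopback addresses
    echo "${addr}"
    return 0
  done
  return 1
}

# On a master node this could be: KUBE_APISERVER_IP=$(pick_node_ip $(hostname -i))
pick_node_ip 127.0.1.1 172.16.0.51 fe80::1
```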

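| Option | Description |
|---|---|
| --logtostderr=false | Write logs to files instead of standard error |
| --v=2 | Log verbosity level |
| --advertise-address | IP address on which the apiserver advertises itself to cluster members |
| --etcd-servers | List of etcd servers to connect to |
| --etcd-cafile | SSL CA file used for etcd communication |
| --etcd-certfile | SSL certificate file used for etcd communication |
| --etcd-keyfile | SSL key file used for etcd communication |
| --service-cluster-ip-range | CIDR range from which Service cluster IPs are allocated |
| --bind-address | IP address on which to listen for --secure-port; if empty, all interfaces (0.0.0.0) are used |
| --secure-port=6443 | Port that serves HTTPS with authentication and authorization (default 6443) |
| --allow-privileged | Whether privileged containers are allowed |
| --service-node-port-range | Port range reserved for NodePort Services |
| --default-not-ready-toleration-seconds | tolerationSeconds of the notReady toleration added by default to Pods that do not already have one |
| --default-unreachable-toleration-seconds | tolerationSeconds of the unreachable toleration added by default to Pods that do not already have one |
| --max-mutating-requests-inflight=2000 | Maximum number of mutating requests in flight at a given time; 0 means no limit (default 200) |
| --default-watch-cache-size=200 | Default watch cache size; 0 disables the watch cache for resources without an explicitly set size |
| --delete-collection-workers=2 | Number of workers for DeleteCollection calls, used to speed up namespace cleanup (default 1) |
| --enable-admission-plugins | Admission plugins to enable in addition to the default ones |
| --authorization-mode | Comma-separated, ordered list of authorization plugins for the secure port |
| --enable-bootstrap-token-auth | Allow secrets of type bootstrap.kubernetes.io/token in the kube-system namespace to be used for TLS bootstrap authentication |
| --token-auth-file | Static token file that holds the bootstrap token (token.csv) |
| --kubelet-certificate-authority | Path to the CA file used to verify kubelet certificates (not set in the configuration above) |
| --kubelet-client-certificate | Client certificate file used for TLS connections to the kubelets |
| --kubelet-client-key | Client key file used for TLS connections to the kubelets |
| --tls-private-key-file | File containing the x509 private key matching --tls-cert-file |
| --service-account-key-file | File containing a PEM-encoded x509 RSA or ECDSA private or public key, used to verify ServiceAccount tokens |
| --audit-log-maxage | Maximum number of days to keep old audit log files, based on the timestamp in their file names |
| --audit-log-maxbackup | Maximum number of old audit log files to keep |
| --audit-log-maxsize | Maximum size in megabytes of an audit log file before it is rotated |
| --audit-log-path | If set, all requests to the apiserver are logged to this file; '-' means standard output |

- Create the kube-apiserver systemd unit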

cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-apiserver.conf

ExecStart=/usr/local/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

RestartSec=10

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

- Distribute the kube-apiserver unit file

for i in kubernetes-master-02 kubernetes-master-03;

do

scp /usr/lib/systemd/system/kube-apiserver.service root@$i:/usr/lib/systemd/system/kube-apiserver.service

done

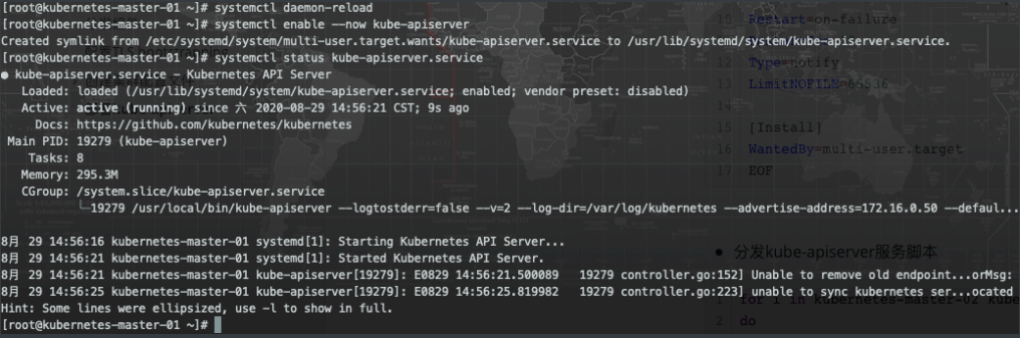

- Start

# Create the Kubernetes log directory (on every master node)

mkdir -p /var/log/kubernetes/

systemctl daemon-reload

systemctl enable --now kube-apiserver

High availability for kube-apiserver

There are many load balancers to choose from; here we use haproxy + keepalived, as recommended in the official documentation.

- Install haproxy and keepalived (on all three master nodes)

yum install -y keepalived haproxy

- Configure the haproxy service

cat > /etc/haproxy/haproxy.cfg <<EOF

global

  maxconn 2000

  ulimit-n 16384

  log 127.0.0.1 local0 err

  stats timeout 30s

defaults

  log global

  mode http

  option httplog

  timeout connect 5000

  timeout client 50000

  timeout server 50000

  timeout http-request 15s

  timeout http-keep-alive 15s

frontend monitor-in

  bind *:33305

  mode http

  option httplog

  monitor-uri /monitor

listen stats

  bind *:8006

  mode http

  stats enable

  stats hide-version

  stats uri /stats

  stats refresh 30s

  stats realm Haproxy\ Statistics

  stats auth admin:admin

frontend k8s-master

  bind 0.0.0.0:8443

  bind 127.0.0.1:8443

  mode tcp

  option tcplog

  tcp-request inspect-delay 5s

  default_backend k8s-master

backend k8s-master

  mode tcp

  option tcplog

  option tcp-check

  balance roundrobin

  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

  server kubernetes-master-01 172.16.0.50:6443 check inter 2000 fall 2 rise 2 weight 100

  server kubernetes-master-02 172.16.0.51:6443 check inter 2000 fall 2 rise 2 weight 100

  server kubernetes-master-03 172.16.0.52:6443 check inter 2000 fall 2 rise 2 weight 100

EOF

- Distribute the configuration to the other nodes

for i in kubernetes-master-02 kubernetes-master-03;
do
# Back up the stock config on the target node, then copy ours over
ssh root@$i "mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg_bak"
scp /etc/haproxy/haproxy.cfg root@$i:/etc/haproxy/haproxy.cfg
done

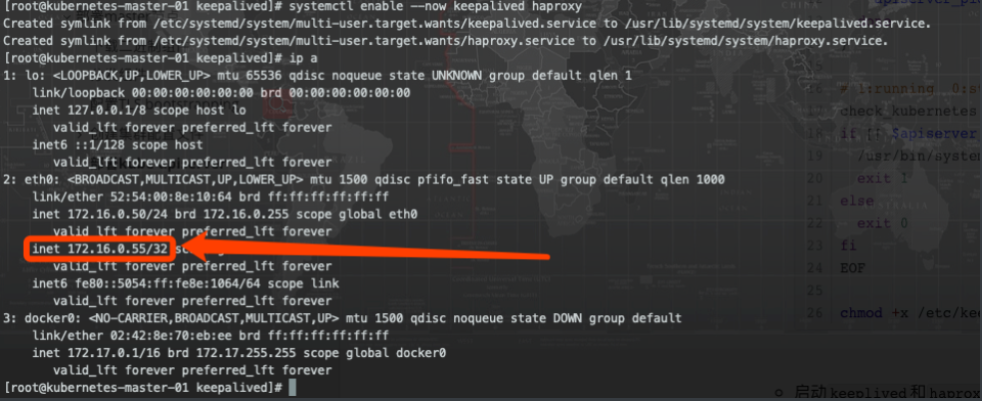

- Configure the keepalived service

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_bak

cat > /etc/keepalived/keepalived.conf <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_script chk_kubernetes {
    script "/etc/keepalived/check_kubernetes.sh"
    interval 2
    weight -5
    fall 3
    rise 2
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    mcast_src_ip 172.16.0.50
    virtual_router_id 51
    priority 100
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        172.16.0.55
    }
#    track_script {
#        chk_kubernetes
#    }
}
EOF

- Distribute the keepalived configuration file

for i in kubernetes-master-02 kubernetes-master-03;
do
ssh root@$i "mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_bak"
scp /etc/keepalived/keepalived.conf root@$i:/etc/keepalived/keepalived.conf
done

- Configure the kubernetes-master-02 node (run on kubernetes-master-02)

# Switch to BACKUP, use this node's own IP as mcast_src_ip, and lower the priority
sed -i 's#state MASTER#state BACKUP#g' /etc/keepalived/keepalived.conf
sed -i 's#172.16.0.50#172.16.0.51#g' /etc/keepalived/keepalived.conf
sed -i 's#priority 100#priority 99#g' /etc/keepalived/keepalived.conf

- Configure the kubernetes-master-03 node (run on kubernetes-master-03)

# Switch to BACKUP, use this node's own IP as mcast_src_ip, and lower the priority
sed -i 's#state MASTER#state BACKUP#g' /etc/keepalived/keepalived.conf
sed -i 's#172.16.0.50#172.16.0.52#g' /etc/keepalived/keepalived.conf
sed -i 's#priority 100#priority 98#g' /etc/keepalived/keepalived.conf
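With the priorities set above (100, 99 and 98) and `weight -5` in `vrrp_script chk_kubernetes`, the simplified model below shows which node would hold the VIP. It is a sketch built on several assumptions. It ignores ties, which real VRRP breaks by comparing IP addresses. It also ignores the `fall`/`rise` counters and the fact that `check_kubernetes.sh` stops keepalived outright. Note that `track_script` is commented out in the configuration above, so the weight has no effect until that block is enabled. `vrrp_owner` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Simplified model of keepalived effective priority (sketch, not keepalived code).
# Each argument: "name:base_priority:check_ok", check_ok being 1 (pass) or 0 (fail).
WEIGHT=-5   # mirrors "weight -5" in vrrp_script chk_kubernetes

vrrp_owner() {
  local best="" best_prio=-1 entry name prio ok eff
  for entry in "$@"; do
    IFS=: read -r name prio ok <<< "$entry"
    eff=$prio
    if (( WEIGHT < 0 && ok == 0 )); then
      eff=$(( prio + WEIGHT ))    # negative weight: subtract while the check fails
    elif (( WEIGHT > 0 && ok == 1 )); then
      eff=$(( prio + WEIGHT ))    # positive weight: add while the check passes
    fi
    if (( eff > best_prio )); then  # ties keep the earlier node in this sketch
      best=$name
      best_prio=$eff
    fi
  done
  echo "$best $best_prio"
}
```

`vrrp_owner kubernetes-master-01:100:0 kubernetes-master-02:99:1 kubernetes-master-03:98:1` prints `kubernetes-master-02 99`. The failing check lowers master-01 to 95, which is below the next backup. For a failure to move the VIP, the magnitude of the weight has to exceed the gap to the next priority, which is 1 here.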

- Create the health-check script

# Quoting 'EOF' keeps $(...) and $var from being expanded while the file is written
cat > /etc/keepalived/check_kubernetes.sh <<'EOF'
#!/bin/bash
function check_kubernetes() {
    # Look for kube-apiserver up to 5 times, 2 seconds apart
    for ((i=0;i<5;i++));do
        apiserver_pid_id=$(pgrep kube-apiserver)
        if [[ ! -z $apiserver_pid_id ]];then
            return
        else
            sleep 2
        fi
        apiserver_pid_id=0
    done
}

# apiserver_pid_id: PID if kube-apiserver is running, 0 if it is stopped
check_kubernetes
if [[ $apiserver_pid_id -eq 0 ]];then
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi
EOF

chmod +x /etc/keepalived/check_kubernetes.sh

- Start the keepalived and haproxy services

systemctl enable --now keepalived haproxy

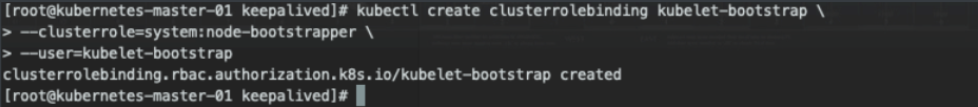

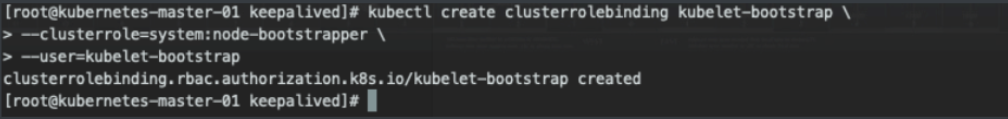
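Once both services are running, the VIP 172.16.0.55 should be bound on exactly one master. While kubernetes-master-01 is healthy, that is kubernetes-master-01. Below is a small check that reads `ip -o -4 addr show` output from stdin. The function name `has_vip` is hypothetical.

```shell
#!/usr/bin/env bash
# has_vip VIP: return 0 if "inet VIP/" appears in `ip -o -4 addr show` output on stdin.
# -F matches the dots literally; the trailing "/" keeps 172.16.0.5 from matching 172.16.0.55.
has_vip() {
  grep -qF "inet ${1}/"
}
```

Run `ip -o -4 addr show dev eth0 | has_vip 172.16.0.55 && echo "VIP is on $(hostname)"` on each master. `curl -s -o /dev/null -w '%{http_code}\n' http://127.0.0.1:33305/monitor` hits the haproxy `monitor-uri` configured above and is expected to print 200 while haproxy is up.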

Authorize TLS Bootstrapping user requests

kubectl create clusterrolebinding kubelet-bootstrap \
--clusterrole=system:node-bootstrapper \
--user=kubelet-bootstrap

Deploy the kube-controller-manager service

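The Controller Manager is the cluster's internal management and control center. It manages Nodes, Pod replicas, service endpoints (Endpoint), namespaces (Namespace), service accounts (ServiceAccount) and resource quotas (ResourceQuota). When a Node goes down unexpectedly, the Controller Manager detects it promptly and runs an automated repair process, keeping the cluster in its expected working state. Running several controller managers at the same time would cause consistency problems. For this reason, kube-controller-manager high availability can only be active-standby. Kubernetes performs leader election with a lease lock, which requires adding --leader-elect=true to the startup parameters.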

- Create the kube-controller-manager configuration file

cat > /etc/kubernetes/cfg/kube-controller-manager.conf << EOF

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\

--v=2 \\

--log-dir=/var/log/kubernetes \\

--leader-elect=true \\

--cluster-name=kubernetes \\

--bind-address=127.0.0.1 \\

--allocate-node-cidrs=true \\

--cluster-cidr=10.244.0.0/12 \\

--service-cluster-ip-range=10.96.0.0/16 \\

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/etc/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \\

--kubeconfig=/etc/kubernetes/cfg/kube-controller-manager.kubeconfig \\

--tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \\

--tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \\

--experimental-cluster-signing-duration=87600h0m0s \\

--controllers=*,bootstrapsigner,tokencleaner \\

--use-service-account-credentials=true \\

--node-monitor-grace-period=10s \\

--horizontal-pod-autoscaler-use-rest-clients=true"

EOF

The configuration options are explained below:

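| Option | Meaning |
|---|---|
| --leader-elect | Enables leader election when running highly available. |
| --master | Connects to the apiserver through the local insecure port 8080 (not set in the kube-controller-manager configuration above). |
| --bind-address | Address to listen on. |
| --allocate-node-cidrs | Whether Pod CIDRs should be allocated and set on each node. |
| --cluster-cidr | CIDR range for Pods in the cluster. With --allocate-node-cidrs=true, the Controller Manager assigns each node its own part of this range so that node CIDRs do not conflict. |
| --service-cluster-ip-range | CIDR range for cluster Services. |
| --cluster-signing-cert-file | Root CA certificate file used to sign all cluster-scoped certificates. |
| --cluster-signing-key-file | Private key used together with the signing certificate to sign cluster-scoped certificates. |
| --root-ca-file | If set, this root CA is included in service account token secrets. Must be a valid PEM-encoded CA bundle. |
| --service-account-private-key-file | File containing the PEM-encoded RSA or ECDSA private key used to sign service account tokens. |
| --experimental-cluster-signing-duration | Validity period of issued certificates (87600h, about 10 years, here). Deprecated in newer releases in favor of --cluster-signing-duration. |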
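The Pod range (--cluster-cidr) and the Service range (--service-cluster-ip-range) must not overlap. The pure-Bash sketch below checks that and shows what a prefix actually covers. Because /12 keeps only the first 12 bits, 10.244.0.0/12 spans 10.240.0.0 to 10.255.255.255. All function names are hypothetical, and only IPv4 is handled.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Convert a 32-bit integer back to dotted-quad form.
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Print "first last" (as integers) of the network that a CIDR denotes.
cidr_range() {
  local ip=${1%/*} len=${1#*/}
  local n mask
  n=$(ip_to_int "$ip")
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local start=$(( n & mask ))                          # host bits cleared
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"    # host bits set
}

# Return 0 if two CIDRs share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}
```

Usage with the values from the configuration above: `cidrs_overlap 10.244.0.0/12 10.96.0.0/16 && echo overlap || echo "no overlap"` prints `no overlap`. `read -r s e <<< "$(cidr_range 10.244.0.0/12)"; echo "$(int_to_ip "$s") $(int_to_ip "$e")"` prints `10.240.0.0 10.255.255.255`.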

- Create the systemd service unit

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

- Distribute the configuration

for i in kubernetes-master-02 kubernetes-master-03;

do

scp /etc/kubernetes/cfg/kube-controller-manager.conf root@$i:/etc/kubernetes/cfg

scp /usr/lib/systemd/system/kube-controller-manager.service root@$i:/usr/lib/systemd/system/kube-controller-manager.service

ssh root@$i "systemctl daemon-reload"

done
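The steps above copy the configuration and unit files and reload systemd, but they do not start kube-controller-manager. The kube-scheduler section below starts its service with `systemctl enable --now`. Assuming the same is intended here, the sketch below runs one command on every master. `run_on_masters`, `MASTERS` and `SSH_CMD` are hypothetical names, and it assumes root SSH access to each master, including the local one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run one command on every master over SSH.
# MASTERS and SSH_CMD are assumptions; set SSH_CMD=echo for a dry run.
MASTERS=${MASTERS:-"kubernetes-master-01 kubernetes-master-02 kubernetes-master-03"}
SSH_CMD=${SSH_CMD:-ssh}

run_on_masters() {
  local host
  # $MASTERS is intentionally unquoted so it splits into host names
  for host in $MASTERS; do
    "$SSH_CMD" "root@${host}" "$1" || return 1   # stop at the first failing host
  done
}
```

Usage: `run_on_masters "systemctl daemon-reload && systemctl enable --now kube-controller-manager"`. With `SSH_CMD=echo`, the same call only prints the commands it would send, one line per master.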

Deploy the kube-scheduler service

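kube-scheduler is the default scheduler of a Kubernetes cluster and part of the cluster's control plane. For every newly created Pod, or any Pod that has not been scheduled yet, kube-scheduler filters all nodes and then selects the best Node to run the Pod. kube-scheduler is policy-rich, topology-aware and workload-specific, and it significantly affects availability, performance and capacity. The scheduler has to take many factors into account: individual and collective resource requirements, quality-of-service requirements, hardware/software/policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, deadlines, and so on. Workload-specific requirements are exposed through the API when necessary.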
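The filter-then-select flow described above can be illustrated with a deliberately tiny Bash sketch. This is not how kube-scheduler is implemented. The real scheduler runs configurable filter and score plugins. The node record format and the single "most CPU left after placement" score are assumptions made only for illustration, and `pick_node` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Toy filter-then-score picker (illustration only, not kube-scheduler's logic).
# pick_node REQ_CPU_MILLI REQ_MEM_MIB NODE...
# Each NODE is "name:free_cpu_millicores:free_mem_mib" (hypothetical format).
pick_node() {
  local req_cpu=$1 req_mem=$2
  shift 2
  local best="" best_score=-1 entry name cpu mem score
  for entry in "$@"; do
    IFS=: read -r name cpu mem <<< "$entry"
    # Filter: skip nodes that cannot fit the request at all
    (( cpu >= req_cpu && mem >= req_mem )) || continue
    # Score: prefer the node with the most CPU left after placing the Pod
    score=$(( cpu - req_cpu ))
    if (( score > best_score )); then
      best=$name
      best_score=$score
    fi
  done
  [[ -n $best ]] || return 1   # no feasible node: the Pod stays pending
  echo "$best"
}
```

`pick_node 500 256 n1:400:1024 n2:2000:512 n3:1000:4096` filters out n1, which has too little CPU, and then picks n2 over n3 because n2 has more CPU left after placing the Pod.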

- Create the kube-scheduler configuration file

cat > /etc/kubernetes/cfg/kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/var/log/kubernetes \\
--kubeconfig=/etc/kubernetes/cfg/kube-scheduler.kubeconfig \\
--leader-elect=true \\
--master=http://127.0.0.1:8080 \\
--bind-address=127.0.0.1 "
EOF

- Create the systemd service unit

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
EnvironmentFile=/etc/kubernetes/cfg/kube-scheduler.conf
ExecStart=/usr/local/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

- Distribute the configuration files

for ip in kubernetes-master-02 kubernetes-master-03;
do
scp /usr/lib/systemd/system/kube-scheduler.service root@${ip}:/usr/lib/systemd/system
scp /etc/kubernetes/cfg/kube-scheduler.conf root@${ip}:/etc/kubernetes/cfg
done

- Start

systemctl daemon-reload
systemctl enable --now kube-scheduler

Check the status of the cluster Master nodes

At this point, installation is complete on all master nodes.

[root@kubernetes-master-01 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok