MongoDB Sharding with High Availability & Replica Set Password Configuration


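I. MongoDB Sharding

1. The concept of sharding

A MongoDB replica set resembles Redis high availability: the secondaries can at most serve reads, so they do not relieve the write load on the primary. They only take over the primary's work when it fails.

The memory and disk that MongoDB can actually use are therefore only those of the primary, and problems also arise when the machines in a replica set are configured differently.

MongoDB's sharding mechanism lets you build a cluster out of many machines (shards). The data is partitioned across the cluster, and each shard holds a subset of a collection's data. Compared with a single server or a replica set, a sharded cluster gives the application more data-processing capacity.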

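2. Introduction to sharding

#Advantages:

1. Better utilization of machine resources

2. Less load on the primary

#Disadvantages:

1. More machines are required

2. Configuration and management become more complex and difficult

3. A sharding setup is hard to change once it is in place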

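3. How sharding works

1) Routing service: mongos server

It acts like a proxy, similar to the Atlas database proxy, and routes client requests to the backend mongod servers.

2) Shard metadata service: config server

The mongos server does not know how many backend mongod servers exist or what their addresses are. It only connects to the config server, which is the service that records the backend server addresses and where the data lives.

Purpose:

1. Records information about the backend mongod nodes

2. Records which node each piece of data was written to

3. Supplies mongos with the backend server information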

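3) Shard key

The config server only stores metadata and does not itself place data on the nodes, so one more concept is needed: the shard key. The shard key is an indexed field that decides which shard a document belongs to.

Purpose:

1. Distributes data to different nodes according to a rule

2. Is backed by an index, which speeds up access

Types:

1. Range shard key (data can easily end up unevenly distributed)

Shard by time range, with an index on the time field

Shard by region, with an index on the region field

2. Hashed shard key (even enough and random enough)

Distributes data by the hash of a field such as id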
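The difference between the two shard key types can be illustrated with a toy sketch. It is a simplification, not how MongoDB works internally: the range boundaries (1000 and 2000) are made up, `cksum` only stands in for MongoDB's real hash function, and taking the hash modulo 3 replaces MongoDB's chunk-based placement. The function names are hypothetical.

```shell
#!/bin/sh
# Toy comparison of range vs. hashed placement across 3 shards.
# NOTE: cksum is only a stand-in for MongoDB's real hashed-index function.

# Range placement with hypothetical fixed boundaries at 1000 and 2000.
range_shard() {
  id=$1
  if   [ "$id" -lt 1000 ]; then echo 0
  elif [ "$id" -lt 2000 ]; then echo 1
  else                          echo 2
  fi
}

# Hashed placement: checksum of the key modulo the shard count.
hash_shard() {
  id=$1
  sum=$(printf '%s' "$id" | cksum | cut -d' ' -f1)
  echo $(( sum % 3 ))
}

# Count how many of the ids 1..n land on each shard for a placement function.
distribution() {
  fn=$1; n=$2; c0=0; c1=0; c2=0; i=1
  while [ "$i" -le "$n" ]; do
    case $("$fn" "$i") in
      0) c0=$((c0 + 1)) ;;
      1) c1=$((c1 + 1)) ;;
      2) c2=$((c2 + 1)) ;;
    esac
    i=$((i + 1))
  done
  echo "$c0 $c1 $c2"
}
```

`distribution range_shard 300` prints `300 0 0`, because every id from 1 to 300 falls below the first boundary, which is the uneven case mentioned above. `distribution hash_shard 300` spreads the same ids over all three counters.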

4) Shards

The nodes that store the data. Together they form a distributed cluster.

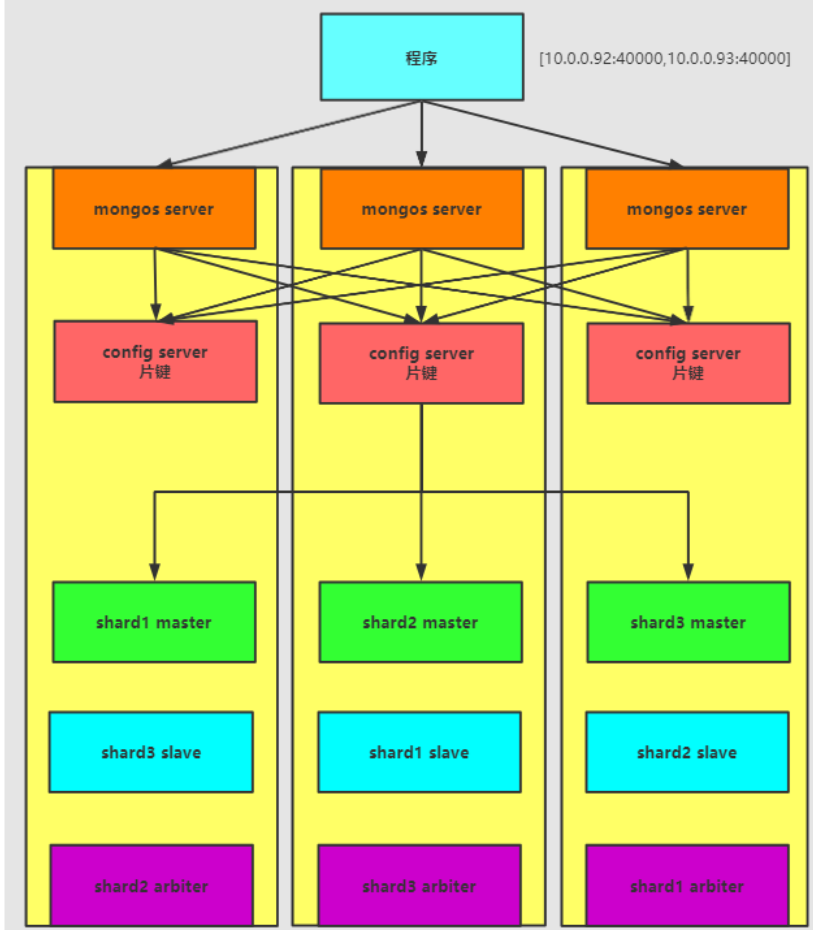

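II. High availability for shards

Sharding alone leaves each shard as a single node, so each piece of data still lives on only one mongod. We therefore also build a replica set for every shard.

As with ES, we cannot run several nodes on one machine and let it hold its own replicas, because everything is still lost when that machine goes down.

So the replica set members must be staggered across machines, and one machine must not hold two data nodes of the same shard, otherwise elections run into trouble again.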

1. Server plan

| Host | IP | Roles | Ports |
|---|---|---|---|
| mongodb01 | 10.0.0.81 | Shard1_Master, Shard2_Slave, Shard3_Arbiter, Config Server, Mongos Server | 28010, 28020, 28030, 40000, 60000 |
| mongodb02 | 10.0.0.82 | Shard2_Master, Shard3_Slave, Shard1_Arbiter, Config Server, Mongos Server | 28010, 28020, 28030, 40000, 60000 |
| mongodb03 | 10.0.0.83 | Shard3_Master, Shard1_Slave, Shard2_Arbiter, Config Server, Mongos Server | 28010, 28020, 28030, 40000, 60000 |
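The staggered placement in the plan follows a simple rotation: the master of shard N runs on host N, its slave on the previous host, and its arbiter on the next host. A small sketch of that rule, assuming the 10.0.0.8x addresses from the plan and the 28010/28020/28030 ports used by the configuration files later on (the function names are hypothetical):

```shell
#!/bin/sh
# Derive the members of shard N (1..3) from the staggered plan:
# master on host N, slave on the previous host, arbiter on the next host.

host_ip() { echo "10.0.0.8$1"; }

shard_members() {
  n=$1
  master=$n
  slave=$(( (n + 1) % 3 + 1 ))    # previous host, wrapping 1 -> 3
  arbiter=$(( n % 3 + 1 ))        # next host, wrapping 3 -> 1
  echo "shard$n/$(host_ip "$master"):28010,$(host_ip "$slave"):28020,$(host_ip "$arbiter"):28030"
}

# Print the member list of all three shards.
print_layout() {
  for n in 1 2 3; do shard_members "$n"; done
}
```

`shard_members 1` prints `shard1/10.0.0.81:28010,10.0.0.83:28020,10.0.0.82:28030`, so the three members of one shard always sit on three different machines.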

2. Directory layout

#Service directories

mkdir /server/mongodb/master/{conf,log,pid,data} -p

mkdir /server/mongodb/slave/{conf,log,pid,data} -p

mkdir /server/mongodb/arbiter/{conf,log,pid,data} -p

mkdir /server/mongodb/config/{conf,log,pid,data} -p

mkdir /server/mongodb/mongos/{conf,log,pid} -p

3. Install MongoDB

#Install dependencies

yum install -y libcurl openssl

#Upload or download the package

rz mongodb-linux-x86_64-3.6.13.tgz

#Extract

tar xf mongodb-linux-x86_64-3.6.13.tgz -C /usr/local/

#Create a symlink

ln -s /usr/local/mongodb-linux-x86_64-3.6.13 /usr/local/mongodb

4. Configure mongodb01

1) Configure the master

vim /server/mongodb/master/conf/mongo.conf

systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/master/log/master.log
storage:
  journal:
    enabled: true
  dbPath: /server/mongodb/master/data/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /server/mongodb/master/pid/master.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28010
  bindIp: 127.0.0.1,10.0.0.81
replication:
  oplogSizeMB: 1024
  replSetName: shard1
sharding:
  clusterRole: shardsvr

2) Configure the slave

vim /server/mongodb/slave/conf/mongo.conf

systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/slave/log/slave.log
storage:
  journal:
    enabled: true
  dbPath: /server/mongodb/slave/data/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /server/mongodb/slave/pid/slave.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28020
  bindIp: 127.0.0.1,10.0.0.81
replication:
  oplogSizeMB: 1024
  replSetName: shard2
sharding:
  clusterRole: shardsvr

3) Configure the arbiter

vim /server/mongodb/arbiter/conf/mongo.conf

systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/arbiter/log/arbiter.log
storage:
  journal:
    enabled: true
  dbPath: /server/mongodb/arbiter/data/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /server/mongodb/arbiter/pid/arbiter.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28030
  bindIp: 127.0.0.1,10.0.0.81
replication:
  oplogSizeMB: 1024
  replSetName: shard3
sharding:
  clusterRole: shardsvr

5. Configure mongodb02

1) Configure the master

vim /server/mongodb/master/conf/mongo.conf

systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/master/log/master.log
storage:
  journal:
    enabled: true
  dbPath: /server/mongodb/master/data/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /server/mongodb/master/pid/master.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28010
  bindIp: 127.0.0.1,10.0.0.82
replication:
  oplogSizeMB: 1024
  replSetName: shard2
sharding:
  clusterRole: shardsvr

2) Configure the slave

vim /server/mongodb/slave/conf/mongo.conf

systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/slave/log/slave.log
storage:
  journal:
    enabled: true
  dbPath: /server/mongodb/slave/data/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /server/mongodb/slave/pid/slave.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28020
  bindIp: 127.0.0.1,10.0.0.82
replication:
  oplogSizeMB: 1024
  replSetName: shard3
sharding:
  clusterRole: shardsvr

3) Configure the arbiter

vim /server/mongodb/arbiter/conf/mongo.conf

systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/arbiter/log/arbiter.log
storage:
  journal:
    enabled: true
  dbPath: /server/mongodb/arbiter/data/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /server/mongodb/arbiter/pid/arbiter.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28030
  bindIp: 127.0.0.1,10.0.0.82
replication:
  oplogSizeMB: 1024
  replSetName: shard1
sharding:
  clusterRole: shardsvr

6. Configure mongodb03

1) Configure the master

vim /server/mongodb/master/conf/mongo.conf

systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/master/log/master.log
storage:
  journal:
    enabled: true
  dbPath: /server/mongodb/master/data/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /server/mongodb/master/pid/master.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28010
  bindIp: 127.0.0.1,10.0.0.83
replication:
  oplogSizeMB: 1024
  replSetName: shard3
sharding:
  clusterRole: shardsvr

2) Configure the slave

vim /server/mongodb/slave/conf/mongo.conf

systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/slave/log/slave.log
storage:
  journal:
    enabled: true
  dbPath: /server/mongodb/slave/data/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /server/mongodb/slave/pid/slave.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28020
  bindIp: 127.0.0.1,10.0.0.83
replication:
  oplogSizeMB: 1024
  replSetName: shard1
sharding:
  clusterRole: shardsvr

3) Configure the arbiter

vim /server/mongodb/arbiter/conf/mongo.conf

systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/arbiter/log/arbiter.log
storage:
  journal:
    enabled: true
  dbPath: /server/mongodb/arbiter/data/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /server/mongodb/arbiter/pid/arbiter.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 28030
  bindIp: 127.0.0.1,10.0.0.83
replication:
  oplogSizeMB: 1024
  replSetName: shard2
sharding:
  clusterRole: shardsvr
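The nine shard configuration files differ only in the role directory, the port, the bind address and the replica set name, so they could also be produced from one template. A minimal sketch, assuming the directory layout used above; the function name `render_shard_conf` is hypothetical:

```shell
#!/bin/sh
# Print a shard mongod configuration for one role.
# Arguments: role (master|slave|arbiter), port, host IP, replica set name.
render_shard_conf() {
  role=$1; port=$2; ip=$3; replset=$4
  cat <<EOF
systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/${role}/log/${role}.log
storage:
  journal:
    enabled: true
  dbPath: /server/mongodb/${role}/data/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /server/mongodb/${role}/pid/${role}.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: ${port}
  bindIp: 127.0.0.1,${ip}
replication:
  oplogSizeMB: 1024
  replSetName: ${replset}
sharding:
  clusterRole: shardsvr
EOF
}
```

On mongodb01, for example, `render_shard_conf master 28010 10.0.0.81 shard1 > /server/mongodb/master/conf/mongo.conf` would write the master configuration; the other eight files follow by changing the four arguments according to the server plan.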

7. Configure environment variables

[root@redis01 ~]# vim /etc/profile.d/mongo.sh

export PATH="/usr/local/mongodb/bin:$PATH"

[root@redis01 ~]# source /etc/profile

8. Eliminate startup warnings

useradd mongo -s /sbin/nologin -M

echo "never" > /sys/kernel/mm/transparent_hugepage/enabled

echo "never" > /sys/kernel/mm/transparent_hugepage/defrag

9. Configure systemd management

1) Unit for the master

vim /usr/lib/systemd/system/mongod-master.service

[Unit]

Description=MongoDB Database Server

Documentation=https://docs.mongodb.org/manual

After=network.target

[Service]

User=mongo

Group=mongo

ExecStart=/usr/local/mongodb/bin/mongod -f /server/mongodb/master/conf/mongo.conf

ExecStartPre=/usr/bin/chown -R mongo:mongo /server/mongodb/master/

ExecStop=/usr/local/mongodb/bin/mongod -f /server/mongodb/master/conf/mongo.conf --shutdown

PermissionsStartOnly=true

PIDFile=/server/mongodb/master/pid/master.pid

Type=forking

[Install]

WantedBy=multi-user.target

2) Unit for the slave

vim /usr/lib/systemd/system/mongod-slave.service

[Unit]

Description=MongoDB Database Server

Documentation=https://docs.mongodb.org/manual

After=network.target

[Service]

User=mongo

Group=mongo

ExecStart=/usr/local/mongodb/bin/mongod -f /server/mongodb/slave/conf/mongo.conf

ExecStartPre=/usr/bin/chown -R mongo:mongo /server/mongodb/slave/

ExecStop=/usr/local/mongodb/bin/mongod -f /server/mongodb/slave/conf/mongo.conf --shutdown

PermissionsStartOnly=true

PIDFile=/server/mongodb/slave/pid/slave.pid

Type=forking

[Install]

WantedBy=multi-user.target

3) Unit for the arbiter

vim /usr/lib/systemd/system/mongod-arbiter.service

[Unit]

Description=MongoDB Database Server

Documentation=https://docs.mongodb.org/manual

After=network.target

[Service]

User=mongo

Group=mongo

ExecStart=/usr/local/mongodb/bin/mongod -f /server/mongodb/arbiter/conf/mongo.conf

ExecStartPre=/usr/bin/chown -R mongo:mongo /server/mongodb/arbiter/

ExecStop=/usr/local/mongodb/bin/mongod -f /server/mongodb/arbiter/conf/mongo.conf --shutdown

PermissionsStartOnly=true

PIDFile=/server/mongodb/arbiter/pid/arbiter.pid

Type=forking

[Install]

WantedBy=multi-user.target

4) Reload systemd

systemctl daemon-reload
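The three unit files above are identical apart from the role name, so they could be generated in a loop instead of being edited by hand. A hedged sketch that reproduces the unit text from this section; the function name `render_unit` is hypothetical:

```shell
#!/bin/sh
# Print the systemd unit for one mongod role (master, slave or arbiter).
render_unit() {
  role=$1
  cat <<EOF
[Unit]
Description=MongoDB Database Server
Documentation=https://docs.mongodb.org/manual
After=network.target

[Service]
User=mongo
Group=mongo
ExecStart=/usr/local/mongodb/bin/mongod -f /server/mongodb/${role}/conf/mongo.conf
ExecStartPre=/usr/bin/chown -R mongo:mongo /server/mongodb/${role}/
ExecStop=/usr/local/mongodb/bin/mongod -f /server/mongodb/${role}/conf/mongo.conf --shutdown
PermissionsStartOnly=true
PIDFile=/server/mongodb/${role}/pid/${role}.pid
Type=forking

[Install]
WantedBy=multi-user.target
EOF
}
```

One way to use it, run as root: `for r in master slave arbiter; do render_unit "$r" > /usr/lib/systemd/system/mongod-$r.service; done`, followed by `systemctl daemon-reload`.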

10. Start all MongoDB nodes

systemctl start mongod-master.service

systemctl start mongod-slave.service

systemctl start mongod-arbiter.service

11. Configure the replica sets

1) Initialize the replica set on mongodb01

#Connect to the master

mongo --port 28010

rs.initiate()

rs.add("10.0.0.83:28020")

rs.addArb("10.0.0.82:28030")

2) Initialize the replica set on mongodb02

#Connect to the master

mongo --port 28010

rs.initiate()

rs.add("10.0.0.81:28020")

rs.addArb("10.0.0.83:28030")

3) Initialize the replica set on mongodb03

#Connect to the master

mongo --port 28010

rs.initiate()

rs.add("10.0.0.82:28020")

rs.addArb("10.0.0.81:28030")

4) Check the replica set status on every node

#On all three masters

mongo --port 28010

rs.status()

rs.isMaster()
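As an alternative to calling `rs.initiate()`, `rs.add()` and `rs.addArb()` one after another, the whole member list can be passed to `rs.initiate()` in a single document, with `arbiterOnly: true` marking the arbiter. This also names the first member by IP explicitly rather than leaving it to the default configuration. The sketch below only prints the mongo shell command; the function name `rs_init_script` is hypothetical.

```shell
#!/bin/sh
# Print an rs.initiate() call for one shard replica set.
# Arguments: replica set name, master host:port, slave host:port, arbiter host:port.
rs_init_script() {
  name=$1; master=$2; slave=$3; arbiter=$4
  cat <<EOF
rs.initiate({
  _id: "$name",
  members: [
    { _id: 0, host: "$master" },
    { _id: 1, host: "$slave" },
    { _id: 2, host: "$arbiter", arbiterOnly: true }
  ]
})
EOF
}
```

For shard1 this could be run on mongodb01 as `rs_init_script shard1 10.0.0.81:28010 10.0.0.83:28020 10.0.0.82:28030 | mongo --port 28010`, and similarly for shard2 and shard3 on their own masters.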

12. Configure the config server

1) Create directories

The directories were already created at the beginning, together with the other directories.

2) Configure the config server (on all three machines)

vim /server/mongodb/config/conf/mongo.conf

systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/config/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /server/mongodb/config/data/
  directoryPerDB: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /server/mongodb/config/pid/mongod.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 40000
  bindIp: 127.0.0.1,10.0.0.81 # each machine must use its own IP here
replication:
  replSetName: configset # name of the config replica set (must be identical on all three)
sharding:
  clusterRole: configsvr

3) Start (on all three machines)

/usr/local/mongodb/bin/mongod -f /server/mongodb/config/conf/mongo.conf

4) Initialize the replica set on mongodb01 (only needs to be done on one machine)

mongo --port 40000

rs.initiate({
  _id:"configset",
  configsvr: true,
  members:[
    {_id:0,host:"10.0.0.81:40000"},
    {_id:1,host:"10.0.0.82:40000"},
    {_id:2,host:"10.0.0.83:40000"},
  ] })

5) Check

rs.status()

rs.isMaster()

13. Configure mongos

1) Create directories

The mongos directories were already created in the directory layout step.

2) Configure mongos (on all three machines)

vim /server/mongodb/mongos/conf/mongo.conf

systemLog:
  destination: file
  logAppend: true
  path: /server/mongodb/mongos/log/mongos.log
processManagement:
  fork: true
  pidFilePath: /server/mongodb/mongos/pid/mongos.pid
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 60000
  bindIp: 127.0.0.1,10.0.0.81 # each machine must use its own IP here
sharding:
  configDB: configset/10.0.0.81:40000,10.0.0.82:40000,10.0.0.83:40000

3) Start

mongos -f /server/mongodb/mongos/conf/mongo.conf

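4) Add the shard members

#Log in to mongos

mongo --port 60000

#Add the members (this tells mongos which shards exist on the backend; run it on one machine only, the other two pick it up automatically)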

use admin

db.runCommand({addShard:'shard1/10.0.0.81:28010,10.0.0.83:28020,10.0.0.82:28030'})

db.runCommand({addShard:'shard2/10.0.0.82:28010,10.0.0.81:28020,10.0.0.83:28030'})

db.runCommand({addShard:'shard3/10.0.0.83:28010,10.0.0.82:28020,10.0.0.81:28030'})

5) View the shard information

db.runCommand( { listshards : 1 } )

14. Configure range sharding

1) Range sharding

#Enable sharding for the database

mongo --port 60000

use admin

#Enable sharding for the specified database

db.runCommand( { enablesharding : "test" } )

2) Create an index on the collection

mongo --port 60000

use test

db.range.ensureIndex( { id: 1 } )

3) Enable sharding on the collection, with id as the shard key

use admin

db.runCommand( { shardcollection : "test.range",key : {id: 1} } )

4) Insert test data

use test

for(i=1;i<10000;i++){ db.range.insert({"id":i,"name":"shanghai","age":28,"date":new Date()}); }

db.range.stats()

db.range.count()

15. Configure hashed sharding

#Enable sharding for the database

mongo --port 60000

use admin

db.runCommand( { enablesharding : "testhash" } )

1) Create an index on the collection

use testhash

db.hash.ensureIndex( { id: "hashed" } )

2) Enable hashed sharding on the collection

use admin

sh.shardCollection( "testhash.hash", { id: "hashed" } )

3) Generate test data

use testhash

for(i=1;i<100000;i++){ db.hash.insert({"id":i,"name":"shanghai","age":70}); }

4) Verify the data

Shard verification

#mongodb01

mongo --port 28010

use testhash

db.hash.count()

33755

#mongodb02

mongo --port 28010

use testhash

db.hash.count()

33142

#mongodb03

mongo --port 28010

use testhash

db.hash.count()

33102
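Instead of logging in to port 28010 on each machine, the per-shard counts can also be read in one place through mongos with the mongo shell helper `getShardDistribution()`. The sketch below only prints the command for a given database and collection; the function name `dist_script` is hypothetical.

```shell
#!/bin/sh
# Print a mongo shell command that reports how a sharded collection
# is spread over the shards. Arguments: database name, collection name.
dist_script() {
  db=$1; coll=$2
  echo "db.getSiblingDB(\"$db\").getCollection(\"$coll\").getShardDistribution()"
}
```

Piped into mongos, for example `dist_script testhash hash | mongo --port 60000`, it should list the document count and data size held by each shard. The same call with `test range` is a quick way to check how the range-sharded collection from the previous section is distributed.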

16. Common management commands for a sharded cluster

1. Show detailed information about all shards

db.printShardingStatus()

sh.status()

2. List all shard members

use admin

db.runCommand({ listshards : 1})

3. List the databases that have sharding enabled

use config

db.databases.find({"partitioned": true })

4. View the shard keys

use config

db.collections.find().pretty()

III. Replica set with password (keyfile) authentication

openssl rand -base64 123 > /server/mongodb/mongo.key

chown -R mongo:mongo /server/mongodb/mongo.key

chmod -R 600 /server/mongodb/mongo.key

scp -r /server/mongodb/mongo.key 10.0.0.82:/server/mongodb/

scp -r /server/mongodb/mongo.key 10.0.0.83:/server/mongodb/
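Once the key file is on every machine, each mongod has to be told to use it through the `security.keyFile` option, and the members restarted. A minimal sketch that appends that stanza to a configuration file, assuming the file ends with a newline and has no `security:` section yet; the function name `enable_keyfile` is hypothetical:

```shell
#!/bin/sh
# Append a security.keyFile stanza to a mongod configuration file.
# Arguments: path of the config file, path of the key file.
enable_keyfile() {
  conf=$1; key=$2
  # Leave the file alone if it already has a security section.
  if grep -q '^security:' "$conf"; then
    return 0
  fi
  printf 'security:\n  keyFile: %s\n' "$key" >> "$conf"
}
```

For example, `enable_keyfile /server/mongodb/master/conf/mongo.conf /server/mongodb/mongo.key` on each member. With a key file in place, mongod also requires clients to authenticate, so an administrative user needs to exist before applications reconnect.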