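Building a Neural Network from Scratch and Raising Its Accuracy to 85%

When we read textbooks or watch videos about deep learning, the author (or presenter) almost always uses the MNIST dataset for the examples. This is not only because MNIST is small, but also because its images are single-channel (grayscale), so it is easy to get good deep learning results during a demonstration.

But learning shouldn't stop there, so next we will train a model on color images.

When I first set up a network and trained it, the accuracy was only around 40% and training did not converge. Later, after reading some papers and gaining a better understanding of neural networks, I started optimizing the network, and the accuracy gradually climbed to 60%, 70%, 80%, 90%... (the final training accuracy was 99% and the test accuracy was 85%).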


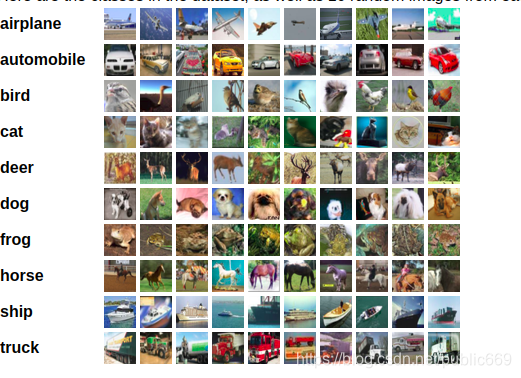

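About the dataset:

Anyone who has worked with deep learning will probably recognize this dataset: it is CIFAR-10. CIFAR-10 consists of 32x32 color images in 10 classes: 'airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', and 'truck'. It contains 60,000 images in total, 6,000 per class, of which 50,000 are used as the training set and 10,000 as the test set.

Now let's start training. (Note: the framework used here is PyTorch.)

Step 1: Load the dataset

How do we load it?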

1. Import the necessary third-party libraries

```python
import torch
from torch import nn
import torch.optim as optim
import torch.nn.functional as F
import matplotlib.pyplot as plt
from torchvision import transforms, datasets
from torch.utils.data import DataLoader
```

2. Download the dataset (PyTorch and TensorFlow both provide a built-in interface for downloading it, so we don't need to download it separately)

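```python
def plot_curve(data):
    """Plot a list of values (loss or accuracy) against the step index."""
    fig = plt.figure()
    plt.plot(range(len(data)), data, color='blue')
    plt.legend(['value'], loc='upper right')
    plt.xlabel('step')
    plt.ylabel('value')
    plt.show()


# Training data: augmentation + conversion to tensor + normalization
transTrain = transforms.Compose([
    transforms.RandomHorizontalFlip(),
    transforms.RandomGrayscale(),
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])

# Test data: no augmentation, only conversion and normalization
transTest = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])
```

In the code above, `transforms` is used to preprocess the data.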
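As a rough illustration of what `transforms.Normalize((0.5,0.5,0.5),(0.5,0.5,0.5))` does to each channel: `ToTensor` scales 8-bit pixel values to the range [0, 1], and `Normalize` then computes `(x - mean) / std`, so the values end up in [-1, 1]. The helper name `normalize_pixel` below is made up for this sketch and is not part of torchvision.

```python
def normalize_pixel(value, mean=0.5, std=0.5):
    """Mimic the per-channel arithmetic of Normalize: (x - mean) / std."""
    return (value - mean) / std


# ToTensor maps 8-bit pixel values 0..255 to floats in [0, 1]
for raw in (0, 128, 255):
    scaled = raw / 255
    print(raw, '->', round(normalize_pixel(scaled), 3))
```

The darkest pixel maps to -1 and the brightest to 1, which centers the network inputs around zero.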

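The `plot_curve` function provides a simple visualization of the loss and accuracy used later on.

```python
# These two transforms augment the training data
transforms.RandomHorizontalFlip()
transforms.RandomGrayscale()
```

3. Define the network structure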

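First we use the LeNet-5 network structure:

```python
class Lenet5(nn.Module):
    def __init__(self):
        super(Lenet5, self).__init__()
        # Convolutional part: [b, 3, 32, 32] => [b, 32, 5, 5]
        self.conv_unit = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=5, stride=1, padding=0),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0),
            nn.Conv2d(16, 32, kernel_size=5, stride=1, padding=0),
            nn.MaxPool2d(kernel_size=2, stride=2, padding=0),
        )
        # Fully connected part: [b, 32*5*5] => [b, 10]
        self.fc_unit = nn.Sequential(
            nn.Linear(32*5*5, 32),
            nn.ReLU(),
            nn.Linear(32, 10)
        )
        # Sanity check: print the output shape of the convolutional part
        tmp = torch.randn(2, 3, 32, 32)
        out = self.conv_unit(tmp)
        print('conv out:', out.shape)

    def forward(self, x):
        batchsz = x.size(0)
        x = self.conv_unit(x)
        # Flatten the feature maps before the fully connected layers
        x = x.view(batchsz, 32*5*5)
        logits = self.fc_unit(x)
        return logits
```

In PyTorch you can define a custom neural network by subclassing `nn.Module`: set up the structure in `__init__()` and describe the forward pass in `forward()`. Because PyTorch computes gradients automatically, there is no need to define a `backward` pass by hand.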
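The `32*5*5` input size of the first fully connected layer can be checked by hand. The sketch below assumes the usual output-size formula for convolution and pooling layers with dilation 1, `floor((size + 2*padding - kernel) / stride) + 1`; the helper name `out_size` is made up for this illustration.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output width/height of a conv or pooling layer (dilation 1, floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 32                      # CIFAR-10 images are 32x32
size = out_size(size, 5)       # 5x5 conv, no padding  -> 28
size = out_size(size, 2, 2)    # 2x2 max-pool, stride 2 -> 14
size = out_size(size, 5)       # 5x5 conv, no padding  -> 10
size = out_size(size, 2, 2)    # 2x2 max-pool, stride 2 -> 5
print(size, 32 * size * size)  # spatial size and flattened feature count
```

With 32 output channels and a 5x5 feature map, the flattened vector has 32*5*5 = 800 elements, which matches what `x.view(batchsz, 32*5*5)` expects.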


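Define the loss function and the optimizer

For the loss we use `CrossEntropyLoss` (the cross-entropy loss function).

For the optimizer we use Adam, although SGD would work as well.

```python
# `net` is the model instance; the loss function is called `name`
# because that is the variable the training loop uses
name = nn.CrossEntropyLoss()
# optimizer = optim.SGD(net.parameters(), lr=0.01)
optimizer = optim.Adam(net.parameters(), lr=0.0001)
```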
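To make the loss value easier to interpret, here is a minimal pure-Python sketch of the cross-entropy for a single sample, `-log(softmax(logits)[target])`. `nn.CrossEntropyLoss` takes raw logits in the same way and by default averages this quantity over the batch. The function name `cross_entropy` is only used for this illustration.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy for one sample: -log(softmax(logits)[target])."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


# A network that has learned nothing gives equal scores to all 10 classes
uniform = [0.0] * 10
print(cross_entropy(uniform, 3))  # equals ln(10), roughly 2.30
```

So a loss of about 2.3 at the start of training on CIFAR-10 simply means the network is still guessing uniformly among the 10 classes.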

Training

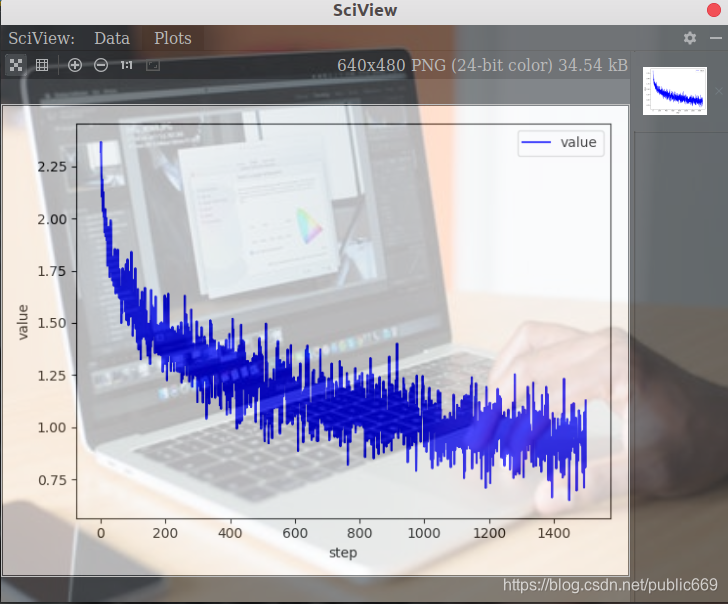

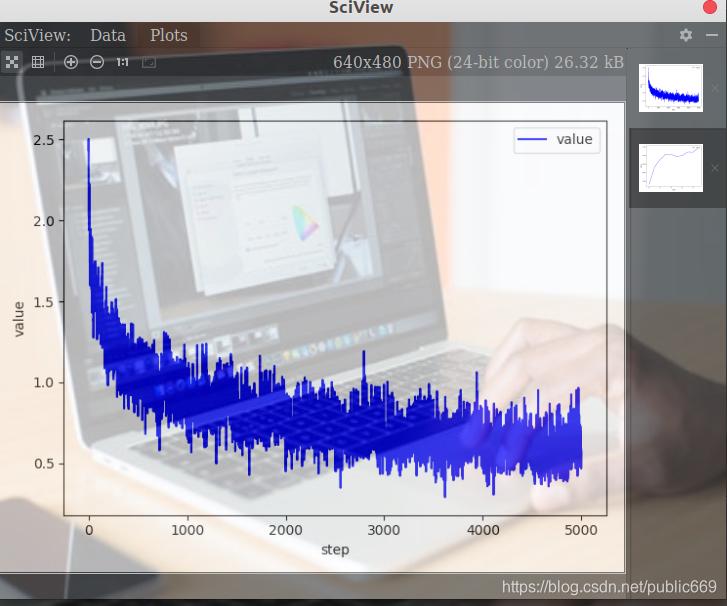

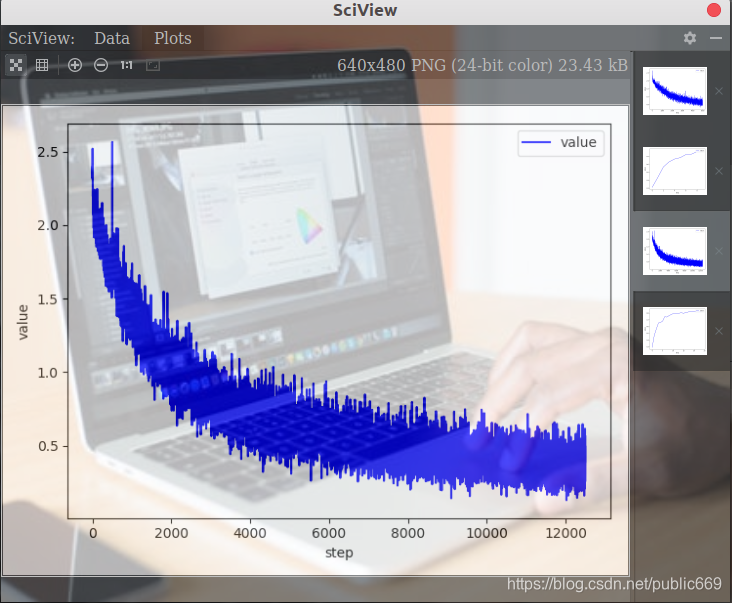

```python
for epoch in range(100):
    # Switch back to training mode (net.eval() is called below every epoch)
    net.train()
    for i, (x, label) in enumerate(train_data_load):
        x, label = x.to(device), label.to(device)
        logits = net(x)
        loss = name(logits, label)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        trans_loss.append(loss.item())

    net.eval()
    with torch.no_grad():
        # test
        total_correct = 0
        total_num = 0
        for x, label in test_data_load:
            # x: [b, 3, 32, 32]
            # label: [b]
            x, label = x.to(device), label.to(device)
            # [b, 10]
            logits = net(x)
            # [b]
            pred = logits.argmax(dim=1)
            # [b] vs [b] => scalar tensor
            correct = torch.eq(pred, label).float().sum().item()
            total_correct += correct
            total_num += x.size(0)
            # print(correct)
        acc = total_correct / total_num
        test_acc.append(acc)
        print(epoch+1, 'loss:', loss.item(), 'test acc:', acc)

plot_curve(trans_loss)
plot_curve(test_acc)
```

The program trains for 100 epochs and then stops. Once it has finished, let's look at the training results:

As you can see, the results are not very good, so next we need to adjust the network structure.

Adjustment 1

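Below is a three-layer convolutional network that I built by hand:

```python
class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, stride=1, padding=1),
            nn.ReLU(True),
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(16, 32, kernel_size=5, stride=1, padding=2),
            nn.ReLU(True),
        )
        self.conv3 = nn.Sequential(
            nn.Conv2d(32, 64, kernel_size=5, stride=1, padding=2),
            nn.ReLU(True),
            nn.MaxPool2d(kernel_size=2, stride=2),
            nn.BatchNorm2d(64)
        )
        # The padded convolutions keep the 32x32 size and the single 2x2
        # max-pool halves it, so the feature map is 16x16 with 64 channels
        # (the original 15*15*64 does not match the flattened size)
        self.function = nn.Linear(16*16*64, 10)

    def forward(self, x):
        out = self.conv1(x)
        out = self.conv2(out)
        out = self.conv3(out)
        # Flatten to [b, 16*16*64]
        out = out.view(out.size(0), -1)
        out = self.function(out)
        return out
```

Let's look at the training results again: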

This network does a little better than the previous one, but the improvement is not very noticeable.

Adjustment 2

At first I wanted to use the VGG-16 model from the University of Oxford, but I didn't have enough GPU memory, so I had to write a network of my own:

```python
class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        # Stage 1: 32x32 -> 16x16
        self.conv1 = nn.Conv2d(3, 64, 3, padding=1)
        self.conv2 = nn.Conv2d(64, 64, 3, padding=1)
        self.pool1 = nn.MaxPool2d(2, 2)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu1 = nn.ReLU()

        # Stage 2
        self.conv3 = nn.Conv2d(64, 128, 3, padding=1)
        self.conv4 = nn.Conv2d(128, 128, 3, padding=1)
        self.pool2 = nn.MaxPool2d(2, 2, padding=1)
        self.bn2 = nn.BatchNorm2d(128)
        self.relu2 = nn.ReLU()

        # Stage 3
        self.conv5 = nn.Conv2d(128, 128, 3, padding=1)
        self.conv6 = nn.Conv2d(128, 128, 3, padding=1)
        self.conv7 = nn.Conv2d(128, 128, 1, padding=1)
        self.pool3 = nn.MaxPool2d(2, 2, padding=1)
        self.bn3 = nn.BatchNorm2d(128)
        self.relu3 = nn.ReLU()

        # Stage 4
        self.conv8 = nn.Conv2d(128, 256, 3, padding=1)
        self.conv9 = nn.Conv2d(256, 256, 3, padding=1)
        self.conv10 = nn.Conv2d(256, 256, 1, padding=1)
        self.pool4 = nn.MaxPool2d(2, 2, padding=1)
        self.bn4 = nn.BatchNorm2d(256)
        self.relu4 = nn.ReLU()

        # Stage 5: ends with a 4x4 feature map
        self.conv11 = nn.Conv2d(256, 512, 3, padding=1)
        self.conv12 = nn.Conv2d(512, 512, 3, padding=1)
        self.conv13 = nn.Conv2d(512, 512, 1, padding=1)
        self.pool5 = nn.MaxPool2d(2, 2, padding=1)
        self.bn5 = nn.BatchNorm2d(512)
        self.relu5 = nn.ReLU()

        # Classifier. nn.Dropout replaces the original nn.Dropout2d,
        # which is meant for feature maps rather than flat [b, 1024] inputs
        self.fc14 = nn.Linear(512 * 4 * 4, 1024)
        self.drop1 = nn.Dropout()
        self.fc15 = nn.Linear(1024, 1024)
        self.drop2 = nn.Dropout()
        self.fc16 = nn.Linear(1024, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.pool1(x)
        x = self.bn1(x)
        x = self.relu1(x)

        x = self.conv3(x)
        x = self.conv4(x)
        x = self.pool2(x)
        x = self.bn2(x)
        x = self.relu2(x)

        x = self.conv5(x)
        x = self.conv6(x)
        x = self.conv7(x)
        x = self.pool3(x)
        x = self.bn3(x)
        x = self.relu3(x)

        x = self.conv8(x)
        x = self.conv9(x)
        x = self.conv10(x)
        x = self.pool4(x)
        x = self.bn4(x)
        x = self.relu4(x)

        x = self.conv11(x)
        x = self.conv12(x)
        x = self.conv13(x)
        x = self.pool5(x)
        x = self.bn5(x)
        x = self.relu5(x)

        # Flatten to [b, 512*4*4]
        x = x.view(-1, 512 * 4 * 4)
        x = F.relu(self.fc14(x))
        x = self.drop1(x)
        x = F.relu(self.fc15(x))
        x = self.drop2(x)
        x = self.fc16(x)
        return x
```
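The `512 * 4 * 4` input size of `fc14` is not obvious, because the 1x1 convolutions with `padding=1` actually enlarge the feature map by 2 pixels, and the padded pooling layers round differently from a plain halving. The sketch below traces the spatial size stage by stage, assuming the standard output-size formula for dilation 1; the helper `out_size` and the `stages` table are written for this illustration only.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output width/height of a conv or pooling layer (dilation 1, floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) of every conv/pool layer, grouped by stage
stages = [
    [(3, 1, 1), (3, 1, 1), (2, 2, 0)],             # conv1, conv2, pool1
    [(3, 1, 1), (3, 1, 1), (2, 2, 1)],             # conv3, conv4, pool2
    [(3, 1, 1), (3, 1, 1), (1, 1, 1), (2, 2, 1)],  # conv5-conv7, pool3
    [(3, 1, 1), (3, 1, 1), (1, 1, 1), (2, 2, 1)],  # conv8-conv10, pool4
    [(3, 1, 1), (3, 1, 1), (1, 1, 1), (2, 2, 1)],  # conv11-conv13, pool5
]

size = 32  # CIFAR-10 input width/height
sizes = []
for layers in stages:
    for kernel, stride, padding in layers:
        size = out_size(size, kernel, stride, padding)
    sizes.append(size)

print(sizes)  # spatial size after each of the five stages
```

The trace gives 16, 9, 6, 5 and finally 4, so the last stage outputs 512 channels of 4x4 and the flattened vector has 512*4*4 = 8192 elements.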

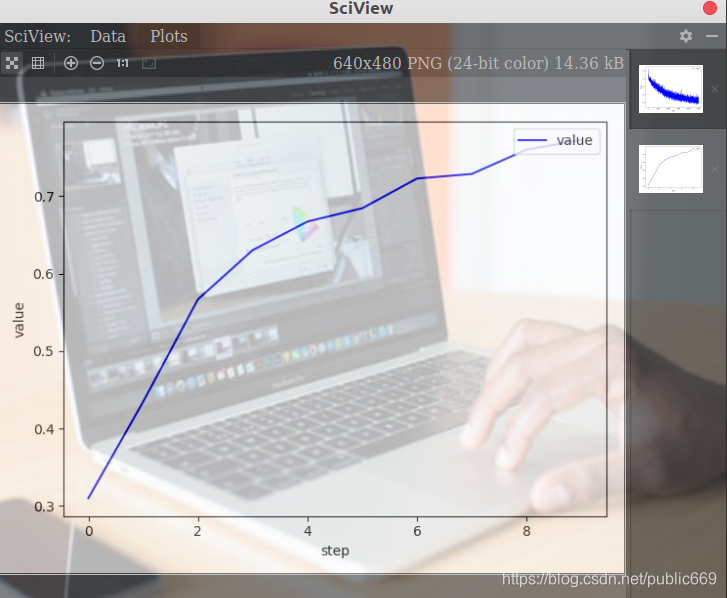

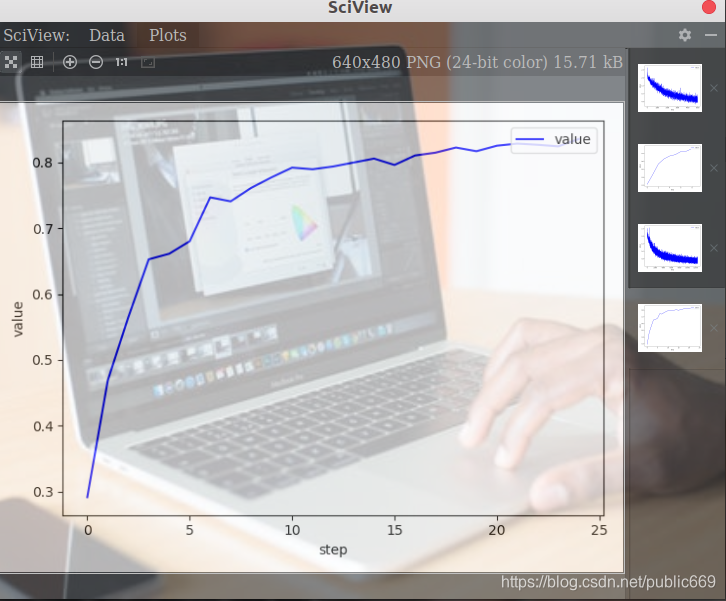

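Training results for Adjustment 2

Adjustment 2 was trained for only 25 epochs. Unfortunately my graphics card isn't great: training on a GTX 1050 took a full 45 minutes, which felt like forever, and the card got very hot too. Sigh, that's what being broke gets you~

Look at my GPU temperature, it scares me

I had to let my computer cool down first. Ahem, okay, the temperature has come down

Enough chatter, here are the final results

As you can see, this result is a big improvement over the previous two: the test accuracy has already reached about 83%, after only 25 epochs of training.

The final code is as follows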

```python
import torch
from torch import nn
import torch.optim as optim
import torch.nn.functional as F
import matplotlib.pyplot as plt
from torchvision import transforms, datasets
from torch.utils.data import DataLoader


def plot_curve(data):
    """Plot a list of values (loss or accuracy) against the step index."""
    fig = plt.figure()
    plt.plot(range(len(data)), data, color='blue')
    plt.legend(['value'], loc='upper right')
    plt.xlabel('step')
    plt.ylabel('value')
    plt.show()


transTrain = transforms.Compose([
    transforms.RandomHorizontalFlip(),
    transforms.RandomGrayscale(),
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])
transTest = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])

# download data
train_data = datasets.CIFAR10(root='./CIFAR', train=True, transform=transTrain, download=True)
test_data = datasets.CIFAR10(root='./CIFAR', train=False, transform=transTest, download=True)

train_data_load = DataLoader(train_data, batch_size=100, shuffle=True, num_workers=2)
test_data_load = DataLoader(test_data, batch_size=100, shuffle=False, num_workers=2)


# define CNN
class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.conv1 = nn.Conv2d(3, 64, 3, padding=1)
        self.conv2 = nn.Conv2d(64, 64, 3, padding=1)
        self.pool1 = nn.MaxPool2d(2, 2)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu1 = nn.ReLU()

        self.conv3 = nn.Conv2d(64, 128, 3, padding=1)
        self.conv4 = nn.Conv2d(128, 128, 3, padding=1)
        self.pool2 = nn.MaxPool2d(2, 2, padding=1)
        self.bn2 = nn.BatchNorm2d(128)
        self.relu2 = nn.ReLU()

        self.conv5 = nn.Conv2d(128, 128, 3, padding=1)
        self.conv6 = nn.Conv2d(128, 128, 3, padding=1)
        self.conv7 = nn.Conv2d(128, 128, 1, padding=1)
        self.pool3 = nn.MaxPool2d(2, 2, padding=1)
        self.bn3 = nn.BatchNorm2d(128)
        self.relu3 = nn.ReLU()

        self.conv8 = nn.Conv2d(128, 256, 3, padding=1)
        self.conv9 = nn.Conv2d(256, 256, 3, padding=1)
        self.conv10 = nn.Conv2d(256, 256, 1, padding=1)
        self.pool4 = nn.MaxPool2d(2, 2, padding=1)
        self.bn4 = nn.BatchNorm2d(256)
        self.relu4 = nn.ReLU()

        self.conv11 = nn.Conv2d(256, 512, 3, padding=1)
        self.conv12 = nn.Conv2d(512, 512, 3, padding=1)
        self.conv13 = nn.Conv2d(512, 512, 1, padding=1)
        self.pool5 = nn.MaxPool2d(2, 2, padding=1)
        self.bn5 = nn.BatchNorm2d(512)
        self.relu5 = nn.ReLU()

        # nn.Dropout replaces the original nn.Dropout2d, which is meant
        # for feature maps rather than flat [b, 1024] inputs
        self.fc14 = nn.Linear(512 * 4 * 4, 1024)
        self.drop1 = nn.Dropout()
        self.fc15 = nn.Linear(1024, 1024)
        self.drop2 = nn.Dropout()
        self.fc16 = nn.Linear(1024, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = self.pool1(x)
        x = self.bn1(x)
        x = self.relu1(x)

        x = self.conv3(x)
        x = self.conv4(x)
        x = self.pool2(x)
        x = self.bn2(x)
        x = self.relu2(x)

        x = self.conv5(x)
        x = self.conv6(x)
        x = self.conv7(x)
        x = self.pool3(x)
        x = self.bn3(x)
        x = self.relu3(x)

        x = self.conv8(x)
        x = self.conv9(x)
        x = self.conv10(x)
        x = self.pool4(x)
        x = self.bn4(x)
        x = self.relu4(x)

        x = self.conv11(x)
        x = self.conv12(x)
        x = self.conv13(x)
        x = self.pool5(x)
        x = self.bn5(x)
        x = self.relu5(x)

        # Flatten to [b, 512*4*4]
        x = x.view(-1, 512 * 4 * 4)
        x = F.relu(self.fc14(x))
        x = self.drop1(x)
        x = F.relu(self.fc15(x))
        x = self.drop2(x)
        x = self.fc16(x)
        return x


# Use the GPU when one is available, otherwise fall back to the CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
net = CNN().to(device)

name = nn.CrossEntropyLoss().to(device)  # loss function
optimizer = optim.Adam(net.parameters(), lr=0.001)

trans_loss = []
test_acc = []

for epoch in range(25):
    # Switch back to training mode (net.eval() is called below every epoch)
    net.train()
    for i, (x, label) in enumerate(train_data_load):
        x, label = x.to(device), label.to(device)
        logits = net(x)
        loss = name(logits, label)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        trans_loss.append(loss.item())

    net.eval()
    with torch.no_grad():
        total_correct = 0
        total_num = 0
        for x, label in test_data_load:
            x, label = x.to(device), label.to(device)
            logits = net(x)
            pred = logits.argmax(dim=1)
            # [b] vs [b] => scalar tensor
            correct = torch.eq(pred, label).float().sum().item()
            total_correct += correct
            total_num += x.size(0)
        acc = total_correct / total_num
        test_acc.append(acc)
        print(epoch+1, 'loss:', loss.item(), 'test acc:', acc)

plot_curve(trans_loss)
plot_curve(test_acc)
```

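Summary

Because of my hardware (my graphics card is too low-end), I don't know how high the final accuracy of my optimized network can go: I only trained it for 25 epochs here. If you're interested, try running it on your own machine and let me know the final result, many thanks! (Later I ran it in the cloud: the training accuracy was 99% and the test accuracy was 85.4%.) Not bad, haha~

That wraps up building and optimizing a neural network. My knowledge is limited, so some of the explanations above may be wrong. Please bear with me, and corrections are welcome. Thanks~

I hope you'll try it out hands-on yourself.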
