Spark 2.4.3: Reading a .json File and Loading It into MySQL 8


If you don't have the installation packages, you can use mine:

Baidu Netdisk link: click to open (extraction code: eku1)


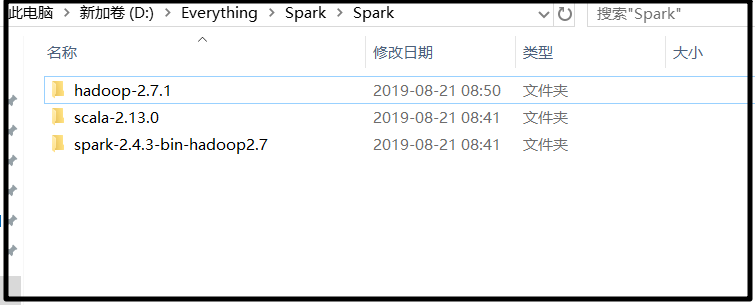

After extracting the archive:


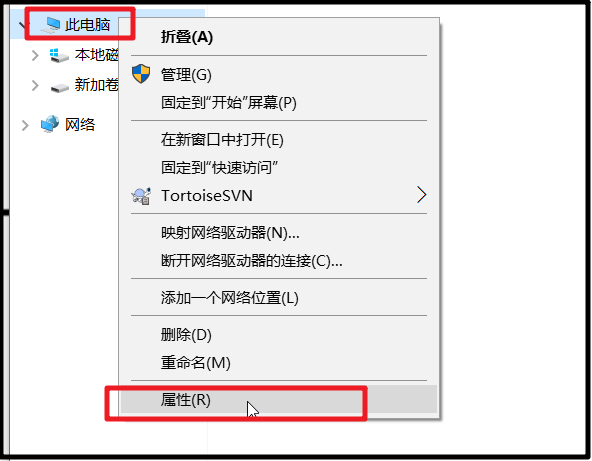

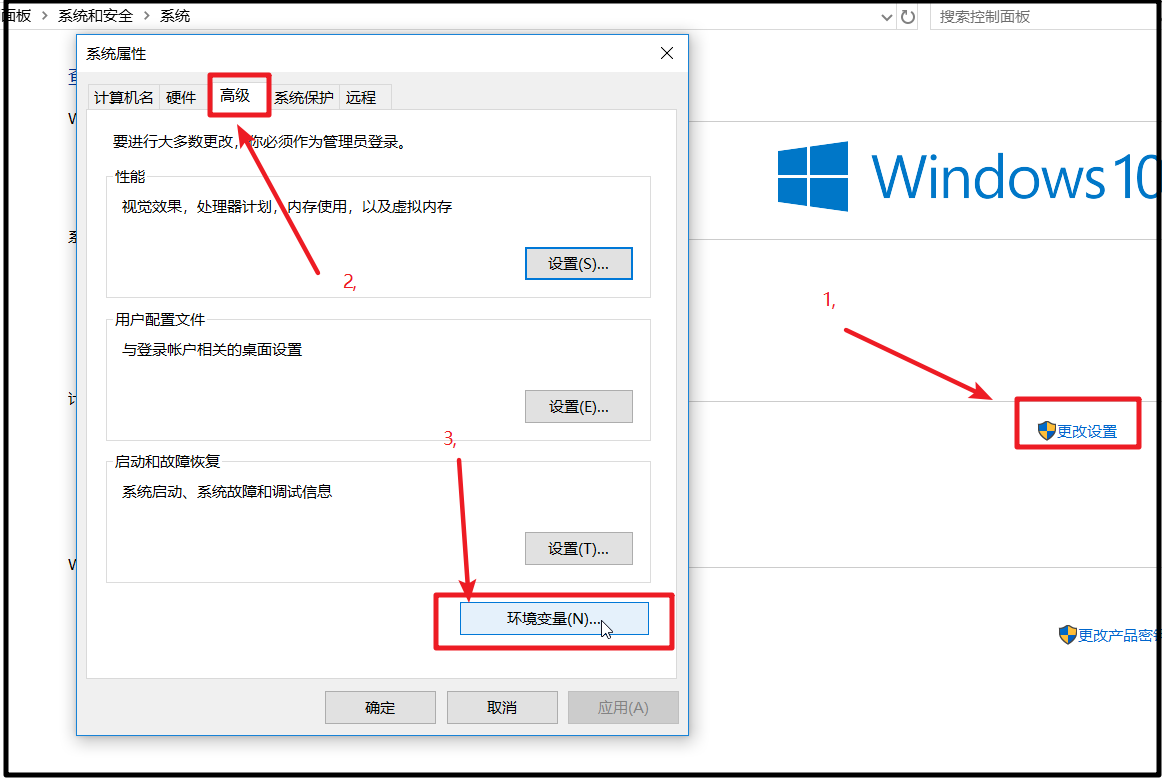

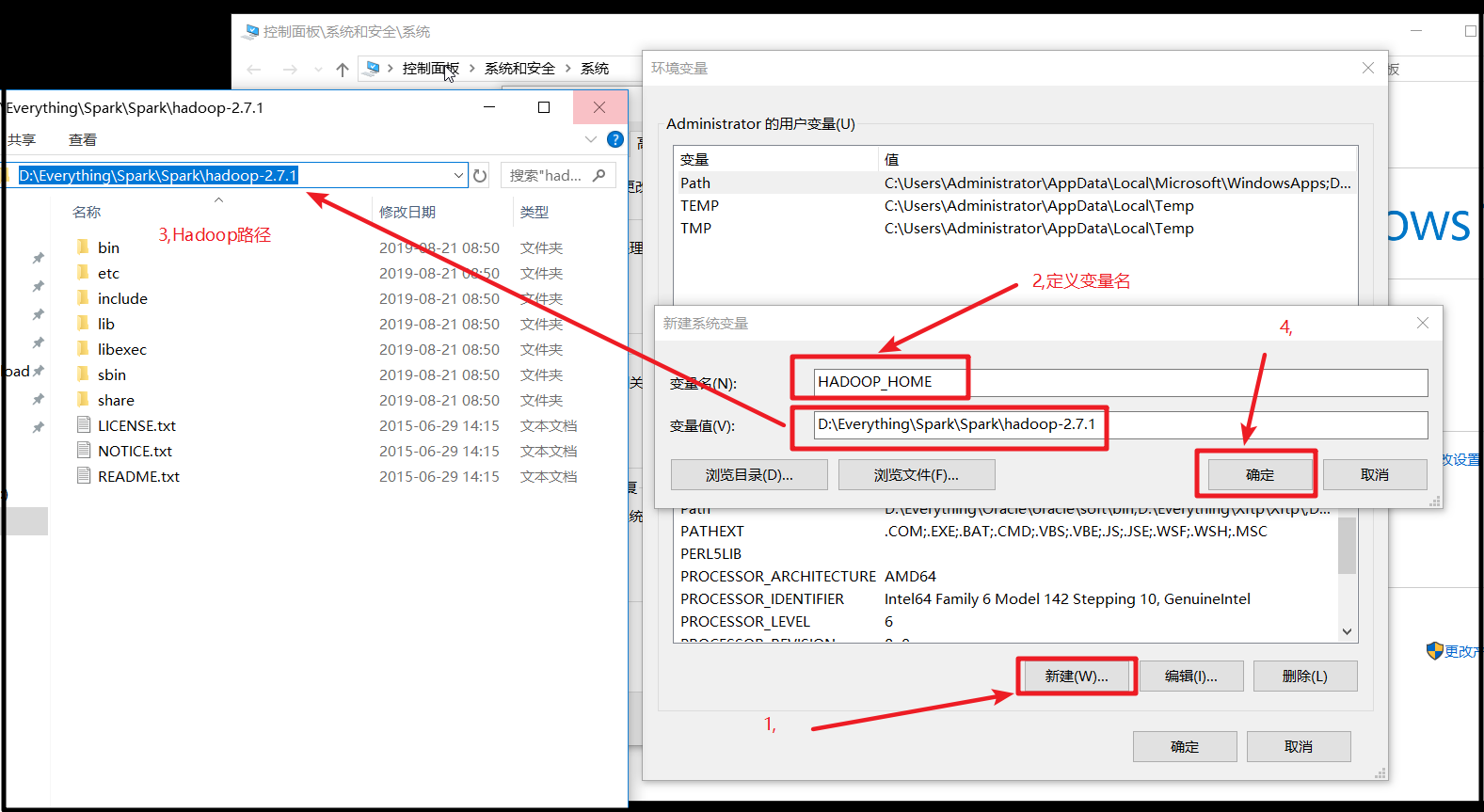

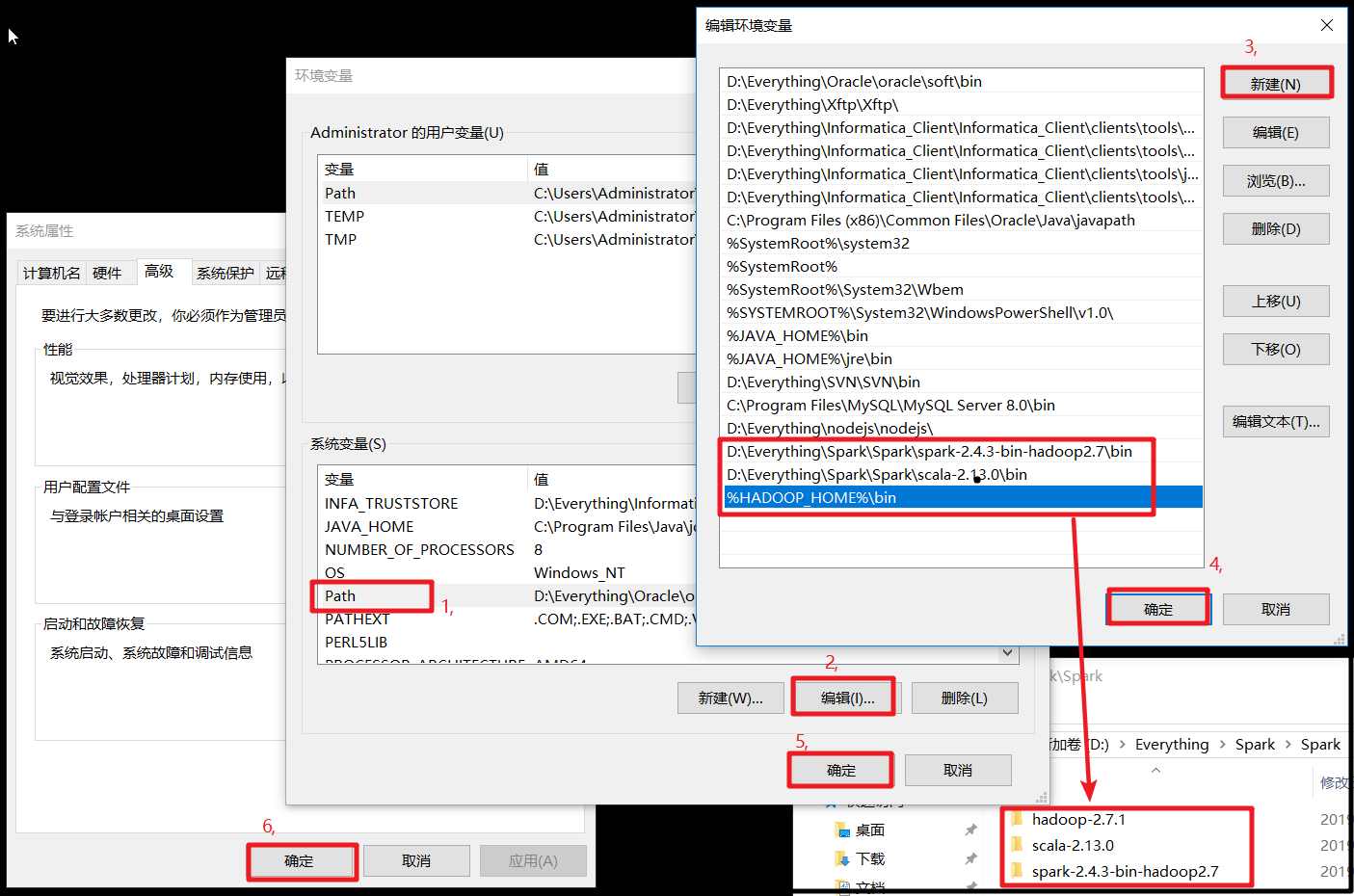

Next, configure the environment variables:


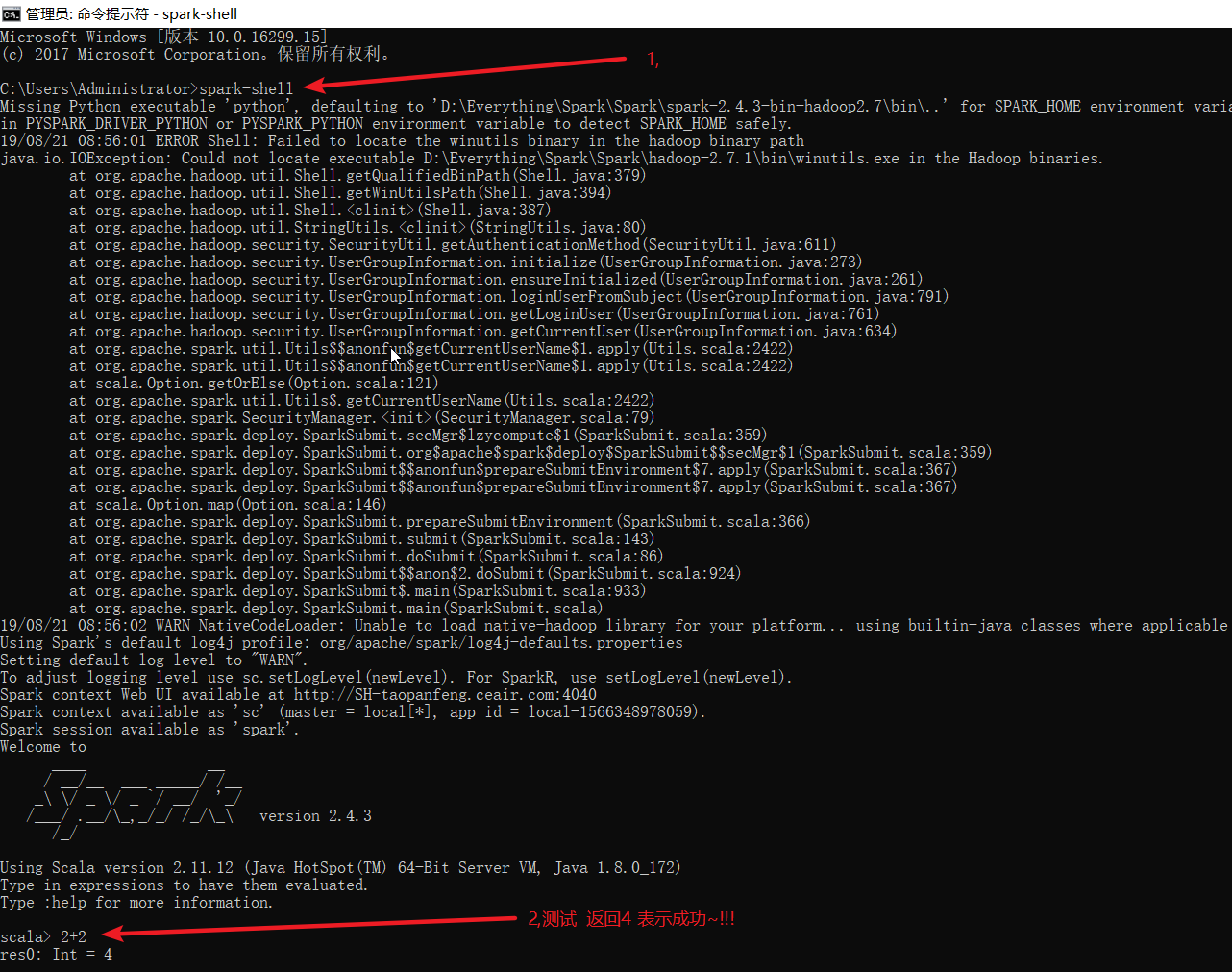

If you get `java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.` at runtime, see this article (click to open).
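The `null` in that error message means Hadoop could not determine its home directory. The stdlib-only sketch below approximates the lookup Hadoop 2.x performs on Windows (the `hadoop.home.dir` system property first, then the `HADOOP_HOME` environment variable), so you can check the environment variable setup before launching Spark. The class name `WinutilsCheck` is hypothetical, and this is a diagnostic approximation, not Hadoop's own code.

```java
import java.io.File;

// Diagnostic sketch (hypothetical class name): approximates how Hadoop 2.x on Windows
// builds the winutils.exe path. It reads the "hadoop.home.dir" system property first and
// falls back to the HADOOP_HOME environment variable; if both are missing, the home
// directory is null and the path comes out as "null\bin\winutils.exe".
public class WinutilsCheck {

    // Returns the Hadoop home directory that would be used, or null if neither source is set.
    static String hadoopHome(String sysProp, String envVar) {
        return sysProp != null ? sysProp : envVar;
    }

    // Concatenates home + \bin\winutils.exe; a null home becomes the literal text "null".
    static String winutilsPath(String home) {
        return home + File.separator + "bin" + File.separator + "winutils.exe";
    }

    public static void main(String[] args) {
        String home = hadoopHome(System.getProperty("hadoop.home.dir"), System.getenv("HADOOP_HOME"));
        String path = winutilsPath(home);
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set; Hadoop would look for " + path);
        } else if (new File(path).isFile()) {
            System.out.println("Found " + path);
        } else {
            System.out.println("Hadoop home is " + home + ", but " + path + " is missing");
        }
    }
}
```

If nothing is set, either point `HADOOP_HOME` at the directory that contains `bin\winutils.exe` and restart the IDE so it picks up the new variable, or call `System.setProperty("hadoop.home.dir", "...")` at the top of `main`, before `SparkSession.builder()`, filling in your own directory.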


First, the files that make up the project:

- pom.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>spark</groupId>
    <artifactId>spark-test</artifactId>
    <version>1.0.0-SNAPSHOT</version>

    <dependencies>
        <!-- spark-core -->
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-core_2.12</artifactId>
            <version>2.4.3</version>
        </dependency>
        <!-- spark-sql -->
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-sql_2.12</artifactId>
            <version>2.4.3</version>
        </dependency>
        <!-- MySQL driver -->
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>8.0.17</version>
        </dependency>
    </dependencies>
</project>
```

- TestSpark.java

```java
package panfeng;

import org.apache.spark.sql.Dataset;
import org.apache.spark.sql.Row;
import org.apache.spark.sql.SparkSession;

import java.util.Properties;

public class TestSpark {

    /*
    {"name":"大牛","age":"12","address":"吉林省长春市二道区临河街万兴小区4栋2门303","phone":"18935642561"}
    {"name":"二蛋","age":"26","address":"河南省郑州市金水区名字二蛋403","phone":"19658758562"}
    {"name":"三驴","age":"53","address":"安徽省阜阳市太和县陶楼村605","phone":"13245756523"}
    {"name":"四毛","age":"17","address":"天津空间室内发你手机8806","phone":"17546231544"}
    {"name":"五虎","age":"98","address":"谁都不会做哪些技能聚划算505","phone":"16523546825"}
    File location: D:\Everything\IDEA\Project\spark\tools\JSON_TEST.json
    */
    public static void main(String[] args) {
        // Local Spark session with a single worker thread
        SparkSession sparkSession = SparkSession.builder().master("local").appName("user").getOrCreate();

        //Dataset<Row> row_dataset = sparkSession.read().json("D:\\Everything\\IDEA\\Project\\spark\\tools\\JSON_TEST.json"); // absolute path
        //Dataset<Row> row_dataset = sparkSession.read().json("spark-test/src/main/resources/JSON_TEST.json"); // relative path
        // * Relative path: the leading "*" is a glob that matches the module directory
        Dataset<Row> row_dataset = sparkSession.read().json("*/src/main/resources/json/JSON_TEST.json");

        // MySQL credentials
        Properties properties = new Properties();
        properties.put("user", "root");
        properties.put("password", "123456");
        // * If no driver is specified, it is picked automatically from the URL
        //properties.put("driver", "com.mysql.jdbc.Driver");    // MySQL 5
        //properties.put("driver", "com.mysql.cj.jdbc.Driver"); // MySQL 8

        // * For a local server, localhost:3306 can be omitted
        //row_dataset.write().mode("append").jdbc("jdbc:mysql://localhost:3306/spark_test?serverTimezone=UTC", "t_user", properties);
        // 1. append: always adds the rows
        //row_dataset.write().mode("append").jdbc("jdbc:mysql:///spark_test?serverTimezone=UTC", "t_user", properties);
        // 2. overwrite: if the table already exists, its previous contents are replaced; otherwise the table is created
        row_dataset.write().mode("overwrite").jdbc("jdbc:mysql:///spark_test?serverTimezone=UTC", "t_user", properties);

        sparkSession.stop();
        System.out.println("Complete...");
    }
}
```
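Both relative paths in `TestSpark` are resolved against the JVM working directory, which depends on the IDE run configuration. If Spark fails with `Path does not exist`, the stdlib-only sketch below prints where the paths actually land. It assumes, as Hadoop's globbing does, that the leading `*` matches exactly one directory level; the class name `PathCheck` is hypothetical.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// Sketch (hypothetical class name): shows where the relative paths used in TestSpark
// resolve on the local file system, relative to the JVM working directory (user.dir).
public class PathCheck {

    // Lists every existing file matching "<cwd>/*/<suffix>", treating "*" as one directory level.
    static List<Path> expandStarPrefix(Path cwd, String suffix) throws IOException {
        List<Path> matches = new ArrayList<>();
        try (DirectoryStream<Path> dirs = Files.newDirectoryStream(cwd, Files::isDirectory)) {
            for (Path dir : dirs) {
                Path candidate = dir.resolve(suffix);
                if (Files.isRegularFile(candidate)) {
                    matches.add(candidate);
                }
            }
        }
        return matches;
    }

    public static void main(String[] args) throws IOException {
        Path cwd = Paths.get(System.getProperty("user.dir"));
        System.out.println("Working directory: " + cwd);

        // Plain relative path, as in the second commented-out read() call
        Path relative = cwd.resolve("spark-test/src/main/resources/JSON_TEST.json");
        System.out.println(relative + (Files.isRegularFile(relative) ? " exists" : " not found"));

        // Glob path, as in the active read() call
        System.out.println("Matches for */src/main/resources/json/JSON_TEST.json: "
                + expandStarPrefix(cwd, "src/main/resources/json/JSON_TEST.json"));
    }
}
```

An empty match list usually means the run configuration starts in a different directory than expected; switching to the absolute path is the quickest way to rule that out.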

- JSON_TEST.json

```json
{"name":"大牛","age":"12","address":"吉林省长春市二道区临河街万兴小区4栋2门303","phone":"18935642561"}
{"name":"二蛋","age":"26","address":"河南省郑州市金水区名字二蛋403","phone":"19658758562"}
{"name":"三驴","age":"53","address":"安徽省阜阳市太和县陶楼村605","phone":"13245756523"}
{"name":"四毛","age":"17","address":"天津空间室内发你手机8806","phone":"17546231544"}
{"name":"五虎","age":"98","address":"谁都不会做哪些技能聚划算505","phone":"16523546825"}
```
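By default, Spark's `json()` reader expects JSON Lines: one complete object per line, with no enclosing array and no commas between records (a pretty-printed file would need `.option("multiLine", true)`). If you would rather generate the sample file than copy it by hand, the stdlib-only sketch below writes the five records in UTF-8, which keeps the Chinese values intact. The class name `WriteSampleJson` and the default target path are assumptions; adjust them to your module.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

// Sketch (hypothetical class name): writes the sample records as JSON Lines in UTF-8.
public class WriteSampleJson {

    static final List<String> RECORDS = Arrays.asList(
            "{\"name\":\"大牛\",\"age\":\"12\",\"address\":\"吉林省长春市二道区临河街万兴小区4栋2门303\",\"phone\":\"18935642561\"}",
            "{\"name\":\"二蛋\",\"age\":\"26\",\"address\":\"河南省郑州市金水区名字二蛋403\",\"phone\":\"19658758562\"}",
            "{\"name\":\"三驴\",\"age\":\"53\",\"address\":\"安徽省阜阳市太和县陶楼村605\",\"phone\":\"13245756523\"}",
            "{\"name\":\"四毛\",\"age\":\"17\",\"address\":\"天津空间室内发你手机8806\",\"phone\":\"17546231544\"}",
            "{\"name\":\"五虎\",\"age\":\"98\",\"address\":\"谁都不会做哪些技能聚划算505\",\"phone\":\"16523546825\"}");

    // Creates missing parent directories, then writes one record per line.
    static Path write(Path target) throws IOException {
        Files.createDirectories(target.toAbsolutePath().getParent());
        return Files.write(target, RECORDS, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        // Assumed default location; pass a different path as the first argument if needed
        Path target = Paths.get(args.length > 0 ? args[0] : "src/main/resources/json/JSON_TEST.json");
        System.out.println("Wrote " + write(target).toAbsolutePath());
    }
}
```

Because every value in the sample data, including `age`, is quoted, Spark infers all four columns as strings, so the table it creates in MySQL stores `age` as a text column too.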