OpenStack cluster deployment

#Manually deploying the OpenStack Train (T) release on CentOS 7

1. Base environment preparation

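#The VM template needs a NAT NIC and a host-only NIC. Set the kernel parameters net.ifnames=0 biosdevname=0, add a cron job that syncs the clock with Aliyun's NTP server, and disable the firewall and SELinux.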

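#Note: in VMware Workstation, enable CPU virtualization (VT-x/AMD-V) for the VMs. Otherwise the guest CPU does not support hardware virtualization, and nova must later be configured with virt_type = qemu (in the [libvirt] section of nova.conf) for full emulation, which performs very poorly. This deployment ended up on qemu for that reason, which was a detour.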

Host-only network (vmnet1): eth0, 192.168.33.0/24    NAT network (vmnet8): eth1, 10.0.0.0/24

Hostname                          eth0             eth1
openstack-controller1.tan.local   192.168.33.101   10.0.0.101
openstack-mysql.tan.local         192.168.33.103   10.0.0.103
openstack-ha1.tan.local           192.168.33.105   10.0.0.105
openstack-compute1.tan.local      192.168.33.107   10.0.0.107
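You can also turn the host table above into /etc/hosts entries for every node. The sketch below is minimal and assumes that name resolution uses the NAT-side eth1 (10.0.0.0/24) addresses, which matches the entries this guide adds later. `print_hosts` is a hypothetical helper and is not part of any package.

```shell
# Hypothetical helper: print /etc/hosts entries for every node in the table,
# using the eth1 (10.0.0.0/24) addresses, plus the keepalived VIP.
print_hosts() {
    local entry name octet
    for entry in controller1:101 mysql:103 ha1:105 compute1:107; do
        name=${entry%%:*}    # part before the colon, e.g. "mysql"
        octet=${entry##*:}   # part after the colon, e.g. "103"
        printf '10.0.0.%s openstack-%s.tan.local openstack-%s\n' "$octet" "$name" "$name"
    done
    printf '10.0.0.188 openstack-vip.tan.local\n'
}
```

On each node (as root), run `print_hosts >> /etc/hosts`.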

#Set the hostname (run the matching command on each node)

[root@localhost ~]#hostnamectl set-hostname openstack-controller1.tan.local

[root@localhost ~]#hostnamectl set-hostname openstack-mysql.tan.local

[root@localhost ~]#hostnamectl set-hostname openstack-ha1.tan.local

[root@localhost ~]#hostnamectl set-hostname openstack-compute1.tan.local

#Configure the network interfaces

[root@openstack-controller1 ~]#cat /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE=Ethernet

BOOTPROTO=static

NAME=eth0

DEVICE=eth0

IPADDR=192.168.33.101

PREFIX=24

ONBOOT=yes

[root@openstack-controller1 ~]#cat /etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet

BOOTPROTO=static

NAME=eth1

DEVICE=eth1

IPADDR=10.0.0.101

GATEWAY=10.0.0.2

DNS1=10.0.0.2

DNS2=180.76.76.76

PREFIX=24

ONBOOT=yes

[root@openstack-controller1 ~]#systemctl restart network

#For the other hosts, reuse this configuration and change only IPADDR.
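The nodes differ only in the last octet of both addresses, so you can also generate the two files. This is a minimal sketch: `gen_ifcfg` is a hypothetical helper and its values mirror the controller1 files above. On a real node OUTDIR would be /etc/sysconfig/network-scripts; point it at a scratch directory to try it out.

```shell
# Hypothetical helper: write ifcfg-eth0 (host-only) and ifcfg-eth1 (NAT)
# for one node. OCTET is the last octet from the host table (101, 103, ...).
gen_ifcfg() {
    local octet="$1" outdir="$2"
    cat > "${outdir}/ifcfg-eth0" <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=eth0
DEVICE=eth0
IPADDR=192.168.33.${octet}
PREFIX=24
ONBOOT=yes
EOF
    # Only the NAT interface carries the default gateway and DNS servers.
    cat > "${outdir}/ifcfg-eth1" <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=eth1
DEVICE=eth1
IPADDR=10.0.0.${octet}
GATEWAY=10.0.0.2
DNS1=10.0.0.2
DNS2=180.76.76.76
PREFIX=24
ONBOOT=yes
EOF
}
```

For example, on the mysql node: `gen_ifcfg 103 /etc/sysconfig/network-scripts && systemctl restart network`.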

#Time synchronization

[root@openstack-controller1 ~]#ntpdate time1.aliyun.com

[root@openstack-controller1 ~]#hwclock -w

[root@openstack-controller1 ~]#crontab -e

*/10 * * * * /usr/sbin/ntpdate time1.aliyun.com && /usr/sbin/hwclock -w
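If you would rather not run `crontab -e` interactively, you can append the entry idempotently. The sketch below uses a hypothetical `add_line_once` helper. The `crontab -l` / `crontab FILE` round trip shown in the comments is the usual pattern. The job uses full paths because user cron jobs run with a minimal PATH.

```shell
# Hypothetical helper: append LINE to FILE only if an identical line is absent.
add_line_once() {
    local file="$1" line="$2"
    touch "$file"
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

CRON_LINE='*/10 * * * * /usr/sbin/ntpdate time1.aliyun.com && /usr/sbin/hwclock -w'
# Real use (as root):
#   crontab -l 2>/dev/null > /tmp/root.cron
#   add_line_once /tmp/root.cron "$CRON_LINE"
#   crontab /tmp/root.cron
```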

#Stop the firewall

[root@openstack-controller1 ~]#systemctl stop firewalld

#Disable it at boot

[root@openstack-controller1 ~]#systemctl disable firewalld

#Disable SELinux for the current boot

[root@openstack-controller1 ~]#setenforce 0

#Disable SELinux permanently

[root@openstack-controller1 ~]#sed -i "s/SELINUX=enforcing/SELINUX=disabled/" /etc/selinux/config

#Disable the EPEL repository, because updates in EPEL break backward compatibility. Add enabled=0 to the [epel] section to disable it (run on all nodes)

[root@openstack-controller1 ~]# vim /etc/yum.repos.d/base.repo

[epel]
name=epel
baseurl=https://mirrors.aliyun.com/epel/$releasever/$basearch/
        https://repo.huaweicloud.com/epel/$releasever/$basearch/
        https://mirrors.cloud.tencent.com/epel/$releasever/$basearch/
        https://mirrors.tuna.tsinghua.edu.cn/epel/$releasever/$basearch/
        https://mirrors.sohu.com/fedora-epel/$releasever/$basearch/
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/epel/RPM-GPG-KEY-EPEL-$releasever
enabled=0
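You can also script this change. One option is `yum-config-manager --disable epel` from yum-utils. The sketch below instead edits the file directly with awk, and assumes the [epel] section lives in the file you pass in. `disable_repo` is a hypothetical helper name.

```shell
# Hypothetical helper: make sure [SECTION] of a yum .repo file has enabled=0,
# replacing an existing enabled= line or appending one to the section.
disable_repo() {
    local file="$1" section="$2" tmp
    tmp=$(mktemp)
    awk -v sec="[$section]" '
        # When leaving the target section without an enabled= line, add one.
        function flush() { if (in_sec && !done) { print "enabled=0"; done = 1 } }
        /^\[/ { flush(); in_sec = ($0 == sec) }
        in_sec && /^enabled[ \t]*=/ { print "enabled=0"; done = 1; next }
        { print }
        END { flush() }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

Usage on each node: `disable_repo /etc/yum.repos.d/base.repo epel`. Running it again leaves the file unchanged.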

#Official reference documentation

https://docs.openstack.org/install-guide/environment-packages-rdo.html

#Enable the OpenStack repository

#When installing the Train release, run (on all nodes):

yum install centos-release-openstack-train -y

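#Download and install the RDO repository RPM to enable the OpenStack repository (on all nodes). The official guide lists this step for RHEL; on CentOS the centos-release-openstack-train package above already enables the Train repository, and rdo-release.rpm may point to a newer release than Train.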

yum install -y https://rdoproject.org/repos/rdo-release.rpm

#Install the appropriate OpenStack client for your release (controller and compute nodes only):

yum install python-openstackclient -y

#RHEL and CentOS enable SELinux by default. Install the openstack-selinux package to automatically manage security policies for OpenStack services (controller and compute nodes only):

yum install openstack-selinux -y

#On controller1, install the Python drivers for connecting to MySQL and memcached

[root@openstack-controller1 ~]# yum install -y python2-PyMySQL python-memcached

2. Install and configure MySQL, RabbitMQ and memcached on the mysql node

#Official reference for installing the SQL database

https://docs.openstack.org/install-guide/environment-sql-database-rdo.html

[root@openstack-mysql ~]#yum install -y mariadb mariadb-server

[root@openstack-mysql ~]# vim /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address = 0.0.0.0

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

[root@openstack-mysql ~]# systemctl restart mariadb

[root@openstack-mysql ~]# systemctl enable mariadb

[root@openstack-mysql ~]# vim /etc/hosts

10.0.0.103 openstack-mysql.tan.local openstack-mysql

#Official reference for installing RabbitMQ

https://docs.openstack.org/install-guide/environment-messaging-rdo.html

[root@openstack-mysql ~]# yum install -y rabbitmq-server

[root@openstack-mysql ~]# systemctl enable --now rabbitmq-server

[root@openstack-mysql ~]# rabbitmqctl add_user openstack openstack123

Creating user "openstack"

[root@openstack-mysql ~]# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/"

[root@openstack-mysql ~]# rabbitmq-plugins enable rabbitmq_management

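#The management UI is at http://10.0.0.103:15672/. The default account is guest/guest, but RabbitMQ normally allows guest to log in only from localhost, so a login from another machine may be rejected. In that case, give another user the administrator tag, e.g. rabbitmqctl set_user_tags openstack administrator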

#Official reference for installing memcached

https://docs.openstack.org/install-guide/environment-memcached-rdo.html

[root@openstack-mysql ~]# yum install -y memcached

[root@openstack-mysql ~]# vim /etc/sysconfig/memcached

PORT="11211"

USER="memcached"

MAXCONN="1024"

CACHESIZE="1024"

OPTIONS="-l 0.0.0.0,::1"

[root@openstack-mysql ~]# systemctl enable memcached --now

3. Install keepalived + haproxy on the ha1 node for high availability

[root@openstack-ha1 ~]# yum install -y keepalived haproxy

[root@openstack-ha1 ~]# vim /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state MASTER

interface eth1

virtual_router_id 58

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.188 dev eth1 label eth1:0

}

}

#Above, only interface, virtual_router_id and virtual_ipaddress were changed; everything after this block in the sample file was deleted.

[root@openstack-ha1 ~]# systemctl enable keepalived --now

[root@openstack-ha1 ~]# iptables -L

Chain INPUT (policy ACCEPT)

target prot opt source destination

DROP all -- anywhere anywhere match-set keepalived dst

[root@openstack-ha1 ~]# iptables-save > /tmp/iptables.txt

[root@openstack-ha1 ~]# vim /tmp/iptables.txt

-A INPUT -m set --match-set keepalived dst -j DROP    #change this line to:

-A INPUT -m set --match-set keepalived dst -j ACCEPT

[root@openstack-ha1 ~]# iptables-restore /tmp/iptables.txt

[root@openstack-ha1 ~]# iptables -L

Chain INPUT (policy ACCEPT)

target prot opt source destination

ACCEPT all -- anywhere anywhere match-set keepalived dst
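Keepalived itself most likely installs the DROP rule above. The stock CentOS keepalived.conf has `vrrp_strict` in its global_defs block, and with that option keepalived adds an ipset-based DROP rule for the VIP. An edit made with iptables-restore probably does not survive a keepalived restart. A more durable alternative, which differs from the approach used above and is an assumption you should verify, is to comment out vrrp_strict. `disable_vrrp_strict` is a hypothetical helper:

```shell
# Hypothetical helper: comment out a bare "vrrp_strict" line, keeping its indentation.
disable_vrrp_strict() {
    sed -i 's/^\([[:space:]]*\)vrrp_strict[[:space:]]*$/\1#vrrp_strict/' "$1"
}
```

Run `disable_vrrp_strict /etc/keepalived/keepalived.conf && systemctl restart keepalived`, then check `iptables -L` again.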

[root@openstack-ha1 ~]# vim /etc/haproxy/haproxy.cfg

#Append at the end of the file

listen openstack-mysql-3306

bind 10.0.0.188:3306

mode tcp

server 10.0.0.103 10.0.0.103:3306 check inter 3s fall 3 rise 5

listen openstack-rabbitmq-5672

bind 10.0.0.188:5672

mode tcp

server 10.0.0.103 10.0.0.103:5672 check inter 3s fall 3 rise 5

listen openstack-memcached-11211

bind 10.0.0.188:11211

mode tcp

server 10.0.0.103 10.0.0.103:11211 check inter 3s fall 3 rise 5
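Every service in this guide gets the same kind of listen block, differing only in name, port and backends. Below is a sketch of a hypothetical generator. The VIP 10.0.0.188 and the health-check options are copied from the blocks above.

```shell
# Hypothetical helper: print an haproxy "listen" block that forwards
# VIP:PORT in TCP mode to each backend IP given after NAME and PORT.
haproxy_listen() {
    local name="$1" port="$2" vip="10.0.0.188" backend
    shift 2
    printf 'listen %s-%s\n' "$name" "$port"
    printf '    bind %s:%s\n' "$vip" "$port"
    printf '    mode tcp\n'
    for backend in "$@"; do
        printf '    server %s %s:%s check inter 3s fall 3 rise 5\n' "$backend" "$backend" "$port"
    done
}
```

For example, `haproxy_listen openstack-glance 9292 10.0.0.101 >> /etc/haproxy/haproxy.cfg`. Then run `haproxy -c -f /etc/haproxy/haproxy.cfg` to check the syntax before restarting haproxy.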

[root@openstack-ha1 ~]# systemctl enable haproxy --now

4. Installing the identity service (Keystone)

#Official reference for installing Keystone

https://docs.openstack.org/keystone/train/install/index-rdo.html

#Create the keystone database on the mysql node

[root@openstack-mysql ~]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 10

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE keystone;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'keystone123';

Query OK, 0 rows affected (0.000 sec)
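The same two statements are repeated later for glance and placement. Below is a hypothetical helper that prints them. Unlike the statements above, it uses `IF NOT EXISTS`, so re-running it is harmless. It assumes the password contains no single quote.

```shell
# Hypothetical helper: print CREATE DATABASE / GRANT statements for one service.
# The database and user share the service name; the password must not contain '.
service_db_sql() {
    local svc="$1" pass="$2"
    printf "CREATE DATABASE IF NOT EXISTS %s;\n" "$svc"
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$svc" "$svc" "$pass"
}
```

On the mysql node, for example: `service_db_sql glance glance123 | mysql`.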

#Install mariadb on controller1 and test the connection through the VIP

[root@openstack-controller1 ~]# mysql -h10.0.0.188 -ukeystone -pkeystone123

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 11

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]>

#Install keystone on controller1

#Run the following command to install the packages:

[root@openstack-controller1 ~]#yum install openstack-keystone httpd mod_wsgi -y

#The keepalived VIP is used here as the database host; add a DNS record for it or a local hosts entry

[root@openstack-controller1 ~]# vim /etc/hosts

10.0.0.188 openstack-vip.tan.local

#Configure keystone.conf

[root@openstack-controller1 ~]#vim /etc/keystone/keystone.conf

#In the [database] section, configure database access

[database]

connection = mysql+pymysql://keystone:keystone123@openstack-vip.tan.local/keystone

#In the [token] section, configure the Fernet token provider:

[token]

provider = fernet
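You can apply the same [database] and [token] settings from a script instead of editing keystone.conf in vim. Below is a minimal sketch of a hypothetical `ini_set` helper written in awk. The `crudini --set FILE SECTION KEY VALUE` utility, if it is available from your repositories, does the same job. The sketch assumes plain `[section]` headers and `key = value` lines. It also assumes values contain no backslashes, because awk -v would interpret them.

```shell
# Hypothetical helper: ini_set FILE SECTION KEY VALUE
# Replaces an active "KEY =" line in [SECTION], otherwise adds the key at the
# end of that section; appends the section itself if it does not exist.
ini_set() {
    local file="$1" section="$2" key="$3" value="$4" tmp
    tmp=$(mktemp)
    awk -v sec="[$section]" -v key="$key" -v val="$value" '
        # Leaving the target section without having set the key: add it now.
        function flush() { if (in_sec && !done) { print key " = " val; done = 1 } }
        /^\[/ { flush(); in_sec = ($0 == sec); if (in_sec) seen = 1 }
        # Match "KEY =" or "KEY=" at column 0; commented lines (#KEY) are skipped.
        in_sec && !done && index($0, key) == 1 && substr($0, length(key) + 1) ~ /^[ \t]*=/ {
            print key " = " val; done = 1; next
        }
        { print }
        END { flush(); if (!seen) { print ""; print sec; print key " = " val } }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

Usage: `ini_set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:keystone123@openstack-vip.tan.local/keystone` and `ini_set /etc/keystone/keystone.conf token provider fernet`.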

#Populate the Identity service database; this creates many empty tables in the keystone database on the mysql node:

[root@openstack-controller1 ~]# su -s /bin/sh -c "keystone-manage db_sync" keystone

#Verify on the mysql server

MariaDB [keystone]> show tables;

+------------------------------------+

| Tables_in_keystone |

+------------------------------------+

| access_rule |

| access_token |

| application_credential |

| application_credential_access_rule |

| application_credential_role |

| assignment |

| config_register |

| consumer |

| credential |

| endpoint |

| endpoint_group |

| federated_user |

| federation_protocol |

| group |

| id_mapping |

| identity_provider |

| idp_remote_ids |

| implied_role |

| limit |

| local_user |

| mapping |

| migrate_version |

| nonlocal_user |

| password |

| policy |

| policy_association |

| project |

| project_endpoint |

| project_endpoint_group |

| project_option |

| project_tag |

| region |

| registered_limit |

| request_token |

| revocation_event |

| role |

| role_option |

| sensitive_config |

| service |

| service_provider |

| system_assignment |

| token |

| trust |

| trust_role |

| user |

| user_group_membership |

| user_option |

| whitelisted_config |

+------------------------------------+

48 rows in set (0.000 sec)

#Back on controller1:

#Initialize the Fernet key repositories:

[root@openstack-controller1 ~]# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

[root@openstack-controller1 ~]# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

#This creates two key repository directories under /etc/keystone

[root@openstack-controller1 ~]# ll /etc/keystone/

total 124

drwx------ 2 keystone keystone 24 Sep 20 12:58 credential-keys

-rw-r----- 1 root keystone 2303 Jun 7 2021 default_catalog.templates

drwx------ 2 keystone keystone 24 Sep 20 12:58 fernet-keys

-rw-r----- 1 root keystone 106516 Sep 20 12:56 keystone.conf

-rw-r----- 1 root keystone 1046 Jun 7 2021 logging.conf

-rw-r----- 1 root keystone 3 Jun 8 2021 policy.json

-rw-r----- 1 keystone keystone 665 Jun 7 2021 sso_callback_template.html

#Bootstrap the Identity service, i.e. initialize keystone:

[root@openstack-controller1 ~]#keystone-manage bootstrap --bootstrap-password admin \
  --bootstrap-admin-url http://openstack-vip.tan.local:5000/v3/ \
  --bootstrap-internal-url http://openstack-vip.tan.local:5000/v3/ \
  --bootstrap-public-url http://openstack-vip.tan.local:5000/v3/ \
  --bootstrap-region-id RegionOne

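######This sets the admin password to admin.

######admin: management network, e.g. 192.168.0.0/16

######internal: internal network, e.g. 10.20.0.0/16

######public: public network, e.g. 172.16.0.0/16

######Here the VIP's domain name is used to reach port 5000 on the controller for high availability, so haproxy needs a listen block that forwards this port. With multiple keystone nodes, add one server line per node. Restart haproxy and confirm with ss -tnl that port 5000 is listening.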

#Configure on ha1

[root@openstack-ha1 ~]# vim /etc/haproxy/haproxy.cfg

listen openstack-keystone-5000

bind 10.0.0.188:5000

mode tcp

server 10.0.0.101 10.0.0.101:5000 check inter 3s fall 3 rise 5

[root@openstack-ha1 ~]#systemctl restart haproxy

#Verify the data on the mysql node

MariaDB [keystone]> select * from service;

+----------------------------------+----------+---------+----------------------+

| id | type | enabled | extra |

+----------------------------------+----------+---------+----------------------+

| a0b83f4adae046b7a5a4aa0a14df1964 | identity | 1 | {"name": "keystone"} |

+----------------------------------+----------+---------+----------------------+

1 row in set (0.000 sec)

MariaDB [keystone]> select * from user;

+----------------------------------+-------+---------+--------------------+---------------------+----------------+-----------+

| id | extra | enabled | default_project_id | created_at | last_active_at | domain_id |

+----------------------------------+-------+---------+--------------------+---------------------+----------------+-----------+

| 317a4ea405d745bbb9e5d76fc87a0751 | {} | 1 | NULL | 2022-09-20 05:04:33 | NULL | default |

+----------------------------------+-------+---------+--------------------+---------------------+----------------+-----------+

1 row in set (0.000 sec)

MariaDB [keystone]> select * from endpoint;

+----------------------------------+--------------------+-----------+----------------------------------+-----------------------------------------+-------+---------+-----------+

| id | legacy_endpoint_id | interface | service_id | url | extra | enabled | region_id |

+----------------------------------+--------------------+-----------+----------------------------------+-----------------------------------------+-------+---------+-----------+

| 251fa40f1ad7443c9257ca96e2e462a7 | NULL | public | a0b83f4adae046b7a5a4aa0a14df1964 | http://openstack-vip.tan.local:5000/v3/ | {} | 1 | RegionOne |

| 3cefae7f1cfa40d482121387ddfd1712 | NULL | internal | a0b83f4adae046b7a5a4aa0a14df1964 | http://openstack-vip.tan.local:5000/v3/ | {} | 1 | RegionOne |

| df69d6e2a5704b7db7df18b8615b013c | NULL | admin | a0b83f4adae046b7a5a4aa0a14df1964 | http://openstack-vip.tan.local:5000/v3/ | {} | 1 | RegionOne |

+----------------------------------+--------------------+-----------+----------------------------------+-----------------------------------------+-------+---------+-----------+

3 rows in set (0.000 sec)

#Edit /etc/httpd/conf/httpd.conf and set the ServerName option to refer to the controller node:

[root@openstack-controller1 ~]# vim /etc/httpd/conf/httpd.conf

ServerName 10.0.0.101:80

#Link /usr/share/keystone/wsgi-keystone.conf into /etc/httpd/conf.d/; it defines an HTTP virtual host listening on port 5000:

[root@openstack-controller1 ~]# ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

[root@openstack-controller1 ~]# systemctl enable httpd --now

#Test the connection

[root@openstack-controller1 ~]# curl 10.0.0.101:5000

{"versions": {"values": [{"status": "stable", "updated": "2019-07-19T00:00:00Z", "media-types": [{"base": "application/json", "type": "application/vnd.openstack.identity-v3+json"}], "id": "v3.13", "links": [{"href": "http://10.0.0.101:5000/v3/", "rel": "self"}]}]}}

[root@openstack-controller1 ~]# curl 10.0.0.188:5000

{"versions": {"values": [{"status": "stable", "updated": "2019-07-19T00:00:00Z", "media-types": [{"base": "application/json", "type": "application/vnd.openstack.identity-v3+json"}], "id": "v3.13", "links": [{"href": "http://10.0.0.188:5000/v3/", "rel": "self"}]}]}}

[root@openstack-controller1 ~]# curl openstack-vip.tan.local:5000

{"versions": {"values": [{"status": "stable", "updated": "2019-07-19T00:00:00Z", "media-types": [{"base": "application/json", "type": "application/vnd.openstack.identity-v3+json"}], "id": "v3.13", "links": [{"href": "http://openstack-vip.tan.local:5000/v3/", "rel": "self"}]}]}}

#Configure the administrative account by setting the appropriate environment variables:

[root@openstack-controller1 ~]# vim admin.sh

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://openstack-vip.tan.local:5000/v3

export OS_IDENTITY_API_VERSION=3

[root@openstack-controller1 ~]# source admin.sh

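#Create a domain, projects, users, and roles. The Identity service provides authentication services for each OpenStack service, using a combination of domains, projects, users, and roles. Although the "default" domain already exists from the keystone-manage bootstrap step in this guide, the formal way to create a new domain is: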

[root@openstack-controller1 ~]# openstack domain create --description "An Example Domain" example

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | An Example Domain |

| enabled | True |

| id | dbea5c0381a74cfca6353a502f77d7c4 |

| name | example |

| options | {} |

| tags | [] |

+-------------+----------------------------------+

#This guide uses a service project that contains a unique user for each service you add to the environment. Create the service project:

[root@openstack-controller1 ~]# openstack project create --domain default --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | 4e78b1a0a343412d8854ede9dac3ba14 |

| is_domain | False |

| name | service |

| options | {} |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

#Regular (non-admin) tasks should use an unprivileged project and user. As an example, this guide creates the myproject project and the myuser user.

#Create the myproject project:

[root@openstack-controller1 ~]# openstack project create --domain default \

> --description "Demo Project" myproject

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Demo Project |

| domain_id | default |

| enabled | True |

| id | 5d63c6d0daaf4b6798f3a0336aa038db |

| is_domain | False |

| name | myproject |

| options | {} |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

#Create the myuser user:

[root@openstack-controller1 ~]# openstack user create --domain default --password-prompt myuser

User Password:myuser

Repeat User Password:myuser

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | b107b3fb4ab445d093db6730fc794b4b |

| name | myuser |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

#Create the myrole role:

[root@openstack-controller1 ~]# openstack role create myrole

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | None |

| domain_id | None |

| id | 26ca8abc2b7648cfb96a6497a444be7c |

| name | myrole |

| options | {} |

+-------------+----------------------------------+

#Add the myrole role to the myuser user in the myproject project:

[root@openstack-controller1 ~]# openstack role add --project myproject --user myuser myrole

#Verify keystone

[root@openstack-controller1 ~]# cat admin.sh

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://openstack-vip.tan.local:5000/v3

export OS_IDENTITY_API_VERSION=3

[root@openstack-controller1 ~]# unset OS_AUTH_URL OS_PASSWORD

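#You can request a token as follows to verify the user and project you created. The token lifetime is the keystone default of 3600 seconds, i.e. one hour. The expires value is in UTC and we are in UTC+8 (CST), so add 8 hours: the token below expires at 2022-09-20T06:34:23 UTC, i.e. 14:34 CST, and the current time is 13:34 CST, so it is valid for one hour.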

[root@openstack-controller1 ~]# date

Tue Sep 20 13:34:01 CST 2022

[root@openstack-controller1 ~]# openstack --os-auth-url http://openstack-vip.tan.local:5000/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name admin --os-username admin token issue

Password:admin

Password:admin

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2022-09-20T06:34:23+0000 |

| id | gAAAAABjKVDfF1HrrqysMpRXdNx3YXlbiXP5qYMyOnJf4ordOTR0EUXf6CTO66dyKKH6HqZSax_hsAF6eKcokRXkGDXTPAequTxZBCRbgv5SVkFshuFdOE94b3efX7EEJVl5csta6vAjDd8l0Lpg45nqwNyecHI5Tk3xuWgI84P-MotCjQ51Nw0 |

| project_id | f7c80417780a4bd59beaa38a9d36271e |

| user_id | 317a4ea405d745bbb9e5d76fc87a0751 |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
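The expires field is in UTC. The small sketch below does the +8 hour conversion with GNU date. `to_cst` is a hypothetical name. The POSIX TZ string CST-8 means UTC+8, because POSIX TZ syntax inverts the sign.

```shell
# Hypothetical helper: convert a Keystone "expires" value such as
# 2022-09-20T06:34:23+0000 (UTC) to China Standard Time (UTC+8). Needs GNU date.
to_cst() {
    local ts="${1%+0000}"                      # drop the explicit +0000 offset
    TZ=CST-8 date -d "${ts/T/ } UTC" '+%F %T'  # "2022-09-20 06:34:23 UTC" -> local CST
}
```

Usage: `to_cst 2022-09-20T06:34:23+0000` prints `2022-09-20 14:34:23`.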

#Create OpenStack client environment scripts

#Create and edit the admin-openrc file and add the following content:

[root@openstack-controller1 ~]# vim admin-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_AUTH_URL=http://openstack-vip.tan.local:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

ADMIN_PASS in the official guide is replaced here with admin, the password chosen for the admin user in the Identity service.

#Create and edit the demo-openrc file and add the following content:

[root@openstack-controller1 ~]# vim demo-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=myproject

export OS_USERNAME=myuser

export OS_PASSWORD=myuser

export OS_AUTH_URL=http://openstack-vip.tan.local:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

DEMO_PASS in the official guide is replaced here with myuser, the password chosen for the myuser user in the Identity service.
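admin-openrc and demo-openrc differ only in project, user and password, so a hypothetical `write_openrc` helper can produce both. The chmod 600 is an addition that is not in the guide. It is there because the files hold plaintext passwords.

```shell
# Hypothetical helper: write_openrc FILE PROJECT USER PASSWORD
write_openrc() {
    local file="$1" project="$2" user="$3" pass="$4"
    cat > "$file" <<EOF
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=${project}
export OS_USERNAME=${user}
export OS_PASSWORD=${pass}
export OS_AUTH_URL=http://openstack-vip.tan.local:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
    chmod 600 "$file"   # the file contains a plaintext password
}
```

Usage: `write_openrc admin-openrc admin admin admin` and `write_openrc demo-openrc myproject myuser myuser`.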

#To run clients as a specific project and user, load the associated client environment script before running them. For example:

[root@openstack-controller1 ~]# . admin-openrc

[root@openstack-controller1 ~]# openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2022-09-20T06:38:11+0000 |

| id | gAAAAABjKVHDMgiffLBqRwJl9fHg7hB2vAL59_fdrW0hK2ZxFEV9dMC6H9n4ifK0aoDGkLK_IaCYPfKVXV7BvEnQdFrWu_9FSa-RB6knr3AMgIhTyEjH2ro_wUU8-zEgjSrr6ezAvIV2s-Qq5mgZLPCMpSdAy6H9tP2bwB9PgObVEuvC0iaCEGs |

| project_id | f7c80417780a4bd59beaa38a9d36271e |

| user_id | 317a4ea405d745bbb9e5d76fc87a0751 |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

[root@openstack-controller1 ~]# . demo-openrc

[root@openstack-controller1 ~]# openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2022-09-20T06:38:24+0000 |

| id | gAAAAABjKVHQI6-mwJ_N7KBo-kjQ1Ds-1RpF18_eWD9EEAdOL0BVex3z1wmh1WL-6exC_X_mJyaeTZt_poGSMndKXPO5jAGnmv2iGa204BkBKXfqLicObfpEMTxeX4AQsiG-J0Vf3iapyxaTEQpItefXKFteQah0BAChBH23TrLe6dFxKfO8VcQ |

| project_id | 5d63c6d0daaf4b6798f3a0336aa038db |

| user_id | b107b3fb4ab445d093db6730fc794b4b |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

5. Installing the image service (Glance)

#Official reference for installing Glance

https://docs.openstack.org/glance/train/install/install-rdo.html

#Configure the NFS service on ha1

[root@openstack-ha1 ~]# mkdir /data/glance -p

[root@openstack-ha1 ~]# yum install -y nfs-utils

[root@openstack-ha1 ~]# vim /etc/exports

[root@openstack-ha1 ~]# systemctl enable nfs --now

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

#Test connectivity from controller1

[root@openstack-controller1 ~]# yum install -y nfs-utils

[root@openstack-controller1 ~]# showmount -e 10.0.0.105

Export list for 10.0.0.105:

/data/glance *

#Create the database and grant privileges on the mysql node

[root@openstack-mysql ~]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 26

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE glance;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'glance123';

Query OK, 0 rows affected (0.000 sec)

#Create the user on controller1

[root@openstack-controller1 ~]# . admin-openrc

[root@openstack-controller1 ~]# openstack user create --domain default --password-prompt glance

User Password:glance

Repeat User Password:glance

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 5e66cc26e56b443996d24f0d687b2cdc |

| name | glance |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

#Add the admin role to the glance user in the service project:

[root@openstack-controller1 ~]# openstack role add --project service --user glance admin

#Create the glance service entity:

[root@openstack-controller1 ~]# openstack service create --name glance --description "OpenStack Image" image

#Verify

[root@openstack-controller1 ~]# openstack service list

+----------------------------------+----------+----------+

| ID | Name | Type |

+----------------------------------+----------+----------+

| a0b83f4adae046b7a5a4aa0a14df1964 | keystone | identity |

| a86be9572c2f406cbfbf4bd8e0746f80 | glance | image |

+----------------------------------+----------+----------+

#Create the Image service API endpoints:

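######openstack service create registers a service in the catalog (its name and type, e.g. glance/image). openstack endpoint create registers the URL where that service is reached for each interface (public, internal, admin). This is loosely comparable to a Kubernetes Service and the endpoint addresses behind it.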

[root@openstack-controller1 ~]#openstack endpoint create --region RegionOne image public http://openstack-vip.tan.local:9292

+--------------+-------------------------------------+

| Field | Value |

+--------------+-------------------------------------+

| enabled | True |

| id | 91c6fa880fe844c39297bae0df09de6e |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | a86be9572c2f406cbfbf4bd8e0746f80 |

| service_name | glance |

| service_type | image |

| url | http://openstack-vip.tan.local:9292 |

+--------------+-------------------------------------+

[root@openstack-controller1 ~]# openstack endpoint create --region RegionOne image internal http://openstack-vip.tan.local:9292

+--------------+-------------------------------------+

| Field | Value |

+--------------+-------------------------------------+

| enabled | True |

| id | ce328fef8c204cfc909d3d88fd6f194e |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | a86be9572c2f406cbfbf4bd8e0746f80 |

| service_name | glance |

| service_type | image |

| url | http://openstack-vip.tan.local:9292 |

+--------------+-------------------------------------+

[root@openstack-controller1 ~]# openstack endpoint create --region RegionOne image admin http://openstack-vip.tan.local:9292

+--------------+-------------------------------------+

| Field | Value |

+--------------+-------------------------------------+

| enabled | True |

| id | ec4c7dc05a524b8b8fd444636e1be4ad |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | a86be9572c2f406cbfbf4bd8e0746f80 |

| service_name | glance |

| service_type | image |

| url | http://openstack-vip.tan.local:9292 |

+--------------+-------------------------------------+
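The three endpoint commands differ only in the interface name, so you can issue them in a loop. Below is a sketch with a hypothetical `create_endpoints` helper. Setting `DRY_RUN=1` prints the commands instead of running them. For a real run, source admin-openrc first.

```shell
# Hypothetical helper: register public, internal and admin endpoints for one
# service type at the same URL (this guide uses the VIP URL for all three).
create_endpoints() {
    local service_type="$1" url="$2" iface
    for iface in public internal admin; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo openstack endpoint create --region RegionOne "$service_type" "$iface" "$url"
        else
            openstack endpoint create --region RegionOne "$service_type" "$iface" "$url"
        fi
    done
}
```

For example, `create_endpoints placement http://openstack-vip.tan.local:8778` would cover the Placement endpoints created below. Run `openstack endpoint list --service image` to check the result.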

####openstack-vip.tan.local must be able to handle requests on port 9292

#On ha1 (10.0.0.105), add a listen block that forwards port 9292. Append the following at the end:

[root@openstack-ha1 ~]# vim /etc/haproxy/haproxy.cfg

listen openstack-glance-9292

bind 10.0.0.188:9292

mode tcp

server 10.0.0.101 10.0.0.101:9292 check inter 3s fall 3 rise 5

[root@openstack-ha1 ~]# systemctl restart haproxy

[root@openstack-ha1 ~]# ss -tnl |grep 9292

LISTEN 0 128 10.0.0.188:9292 *:*

#Configure on controller1:

Install and configure components

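Default configuration files vary by distribution. You might need to add these sections and options rather than modify existing ones. An ellipsis (...) in the configuration snippets marks potential default configuration options that you should keep.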

#Install the packages:

[root@openstack-controller1 ~]# yum install openstack-glance -y

Edit the /etc/glance/glance-api.conf file and complete the following actions:

[root@openstack-controller1 ~]#vim /etc/glance/glance-api.conf

In the [database] section, configure database access:

[database]

# ...

connection = mysql+pymysql://glance:glance123@openstack-vip.tan.local/glance

GLANCE_DBPASS in the official guide is the password chosen for the Image service database; here it is glance123, as granted above.

In the [keystone_authtoken] and [paste_deploy] sections, configure Identity service access:

[keystone_authtoken]

# ...

www_authenticate_uri = http://openstack-vip.tan.local:5000

auth_url = http://openstack-vip.tan.local:5000

memcached_servers = openstack-vip.tan.local:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = glance

Comment out or remove any other options in the [keystone_authtoken] section.

[paste_deploy]

# ...

flavor = keystone

In the [glance_store] section, configure the local file system store and the location of image files:

[glance_store]

# ...

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

#Mount the NFS export on /var/lib/glance/images

[root@openstack-controller1 ~]# showmount -e 10.0.0.105

Export list for 10.0.0.105:

/data/glance *

[root@openstack-controller1 ~]# mkdir /var/lib/glance/images

[root@openstack-controller1 ~]# mount -t nfs 10.0.0.105:/data/glance /var/lib/glance/images

[root@openstack-controller1 ~]# ll /var/lib/glance/images -d

drwxr-xr-x 2 root root 6 Sep 20 13:48 /var/lib/glance/images

[root@openstack-controller1 ~]# id glance

uid=161(glance) gid=161(glance) groups=161(glance)

[root@openstack-controller1 ~]# chown 161.161 /var/lib/glance/images -R

#Mount the NFS directory automatically at boot

[root@openstack-controller1 ~]# vim /etc/fstab

10.0.0.105:/data/glance /var/lib/glance/images nfs defaults,_netdev 0 0

[root@openstack-controller1 ~]# mount -a

#On ha1, verify that /data/glance is now owned by uid/gid 161

[root@openstack-ha1 ~]# ll -d /data/glance/

drwxr-xr-x 2 161 161 6 Sep 20 13:48 /data/glance/

#Populate the Image service database:

[root@openstack-controller1 ~]# su -s /bin/sh -c "glance-manage db_sync" glance

#Verify in the mysql database

MariaDB [glance]> show tables;

+----------------------------------+

| Tables_in_glance |

+----------------------------------+

| alembic_version |

| image_locations |

| image_members |

| image_properties |

| image_tags |

| images |

| metadef_namespace_resource_types |

| metadef_namespaces |

| metadef_objects |

| metadef_properties |

| metadef_resource_types |

| metadef_tags |

| migrate_version |

| task_info |

| tasks |

+----------------------------------+

15 rows in set (0.000 sec)

#Start the Image service and configure it to start when the system boots:

[root@openstack-controller1 ~]# systemctl enable openstack-glance-api.service --now

Created symlink from /etc/systemd/system/multi-user.target.wants/openstack-glance-api.service to /usr/lib/systemd/system/openstack-glance-api.service.

#Verify the listening port

[root@openstack-controller1 ~]# ss -tnl |grep 9292

LISTEN 0 128 *:9292 *:*

#Verify glance

#Verify operation of the Image service using CirrOS, a small Linux image that helps you test your OpenStack deployment

[root@openstack-controller1 ~]# . admin-openrc

[root@openstack-controller1 ~]# wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

#GitHub was unreachable when this was done, so you can also download a CirrOS image from elsewhere.

[root@openstack-controller1 ~]# ls cirros-0.4.0-x86_64-disk.img

cirros-0.4.0-x86_64-disk.img

#Upload the image to the Image service using the QCOW2 disk format, bare container format, and public visibility, so that all projects can access it:

[root@openstack-controller1 ~]# glance image-create --name "cirros-0.4.0" --file cirros-0.4.0-x86_64-disk.img --disk-format qcow2 --container-format bare --visibility public

+------------------+----------------------------------------------------------------------------------+

| Property | Value |

+------------------+----------------------------------------------------------------------------------+

| checksum | 443b7623e27ecf03dc9e01ee93f67afe |

| container_format | bare |

| created_at | 2022-09-20T06:20:05Z |

| disk_format | qcow2 |

| id | 18f96a10-1e9a-431a-981c-69496dab4531 |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros-0.4.0 |

| os_hash_algo | sha512 |

| os_hash_value | 6513f21e44aa3da349f248188a44bc304a3653a04122d8fb4535423c8e1d14cd6a153f735bb0982e |

| | 2161b5b5186106570c17a9e58b64dd39390617cd5a350f78 |

| os_hidden | False |

| owner | f7c80417780a4bd59beaa38a9d36271e |

| protected | False |

| size | 12716032 |

| status | active |

| tags | [] |

| updated_at | 2022-09-20T06:20:06Z |

| virtual_size | Not available |

| visibility | public |

+------------------+----------------------------------------------------------------------------------+

[root@openstack-controller1 ~]# glance image-list

+--------------------------------------+--------------+

| ID | Name |

+--------------------------------------+--------------+

| 18f96a10-1e9a-431a-981c-69496dab4531 | cirros-0.4.0 |

+--------------------------------------+--------------+
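To confirm the upload was not corrupted, compare the local file with the `checksum` field above, which is the MD5 digest Glance records. (`os_hash_value` is the SHA-512 equivalent, checkable with sha512sum.) Below is a sketch with a hypothetical `verify_md5` helper.

```shell
# Hypothetical helper: succeed only if FILE's MD5 digest equals EXPECTED.
verify_md5() {
    local file="$1" expected="$2" actual
    actual=$(md5sum "$file" | awk '{print $1}')
    [ "$actual" = "$expected" ]
}
```

For example, `verify_md5 cirros-0.4.0-x86_64-disk.img 443b7623e27ecf03dc9e01ee93f67afe && echo OK`. The file store names stored files after the image ID, so on ha1 the stored copy should be /data/glance/18f96a10-1e9a-431a-981c-69496dab4531 and should pass the same check.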

6. Installing the Placement service

#Official reference for installing Placement

https://docs.openstack.org/placement/train/install/

#从s版本开始,从nova中拆分出来的服务。placement作用是起到node节点的可用资源统计

#在mysql节点创建并授权placement数据库

MariaDB [glance]> CREATE DATABASE placement;

Query OK, 1 row affected (0.000 sec)

MariaDB [glance]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'placement123';

Query OK, 0 rows affected (0.000 sec)

#On the controller1 node, create the user and endpoints:

[root@openstack-controller1 ~]# . admin-openrc

[root@openstack-controller1 ~]# openstack user create --domain default --password-prompt placement

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | ff53352e7b714d899b5a90f34054cefd |

| name | placement |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

#Add the placement user to the service project with the admin role:

[root@openstack-controller1 ~]# openstack role add --project service --user placement admin

#Create the Placement API entry in the service catalog:

[root@openstack-controller1 ~]#openstack service create --name placement --description "Placement API" placement

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Placement API |

| enabled | True |

| id | e522f232bba1408bb6ecae4c9fe01051 |

| name | placement |

| type | placement |

+-------------+----------------------------------+

#Create the Placement API service endpoints:

[root@openstack-controller1 ~]# openstack endpoint create --region RegionOne placement public http://openstack-vip.tan.local:8778

+--------------+-------------------------------------+

| Field | Value |

+--------------+-------------------------------------+

| enabled | True |

| id | 28b3c00a0b754afc8e43daa4cb97fe53 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | e522f232bba1408bb6ecae4c9fe01051 |

| service_name | placement |

| service_type | placement |

| url | http://openstack-vip.tan.local:8778 |

+--------------+-------------------------------------+

[root@openstack-controller1 ~]# openstack endpoint create --region RegionOne placement internal http://openstack-vip.tan.local:8778

+--------------+-------------------------------------+

| Field | Value |

+--------------+-------------------------------------+

| enabled | True |

| id | e023840f4d68445f83bc5a609d2402c6 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | e522f232bba1408bb6ecae4c9fe01051 |

| service_name | placement |

| service_type | placement |

| url | http://openstack-vip.tan.local:8778 |

+--------------+-------------------------------------+

[root@openstack-controller1 ~]# openstack endpoint create --region RegionOne placement admin http://openstack-vip.tan.local:8778

+--------------+-------------------------------------+

| Field | Value |

+--------------+-------------------------------------+

| enabled | True |

| id | d525078636dd4ce6a3822b418f9081f3 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | e522f232bba1408bb6ecae4c9fe01051 |

| service_name | placement |

| service_type | placement |

| url | http://openstack-vip.tan.local:8778 |

+--------------+-------------------------------------+
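
The three `openstack endpoint create` calls differ only in the interface name, so they can be generated in a loop. This is a minimal sketch that assumes the admin credentials are already sourced. The function name `create_endpoints` and the `OPENSTACK` override variable are hypothetical. The override lets you dry-run the commands (for example with `OPENSTACK=echo`) before running them for real.

```bash
#!/bin/bash
# Hypothetical helper: create public, internal and admin endpoints for one service.
# $1 = service type (e.g. placement), $2 = endpoint URL.
# Set OPENSTACK=echo to print the commands instead of running them.
create_endpoints() {
    local service="$1" url="$2" iface
    for iface in public internal admin; do
        ${OPENSTACK:-openstack} endpoint create --region RegionOne "$service" "$iface" "$url"
    done
}

# Usage (dry run first, then for real):
#   OPENSTACK=echo create_endpoints placement http://openstack-vip.tan.local:8778
#   create_endpoints placement http://openstack-vip.tan.local:8778
```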

#On the ha1 node, add a listen section by appending the following to the end of the config file:

[root@openstack-ha1 ~]# vim /etc/haproxy/haproxy.cfg

listen openstack-placement-8778

bind 10.0.0.188:8778

mode tcp

server 10.0.0.101 10.0.0.101:8778 check inter 3s fall 3 rise 5

[root@openstack-ha1 ~]# systemctl restart haproxy

[root@openstack-ha1 ~]# ss -tnl |grep 8778

LISTEN 0 128 10.0.0.188:8778 *:*
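
Every service in this guide gets a near-identical `listen` block on ha1: 8778 here, and later 8774, 6080 and 9696. Below is a minimal sketch that prints such a block from a name, VIP, port and list of backends. It assumes the same `mode tcp` and health-check settings used above. The function name `haproxy_listen` is hypothetical. Review its output before appending it to /etc/haproxy/haproxy.cfg.

```bash
#!/bin/bash
# Hypothetical helper: print an haproxy "listen" section in the style used in this guide.
# $1 = section name, $2 = VIP, $3 = port, remaining args = backend IPs.
haproxy_listen() {
    local name="$1" vip="$2" port="$3" ip
    shift 3
    printf 'listen %s\n' "$name"
    printf '    bind %s:%s\n' "$vip" "$port"
    printf '    mode tcp\n'
    for ip in "$@"; do
        # Same health-check parameters as the hand-written sections.
        printf '    server %s %s:%s check inter 3s fall 3 rise 5\n' "$ip" "$ip" "$port"
    done
}

# Usage (not executed here):
#   haproxy_listen openstack-placement-8778 10.0.0.188 8778 10.0.0.101 >> /etc/haproxy/haproxy.cfg
#   haproxy -c -f /etc/haproxy/haproxy.cfg && systemctl restart haproxy
```

Checking the file with `haproxy -c` before restarting avoids taking every proxied service down because of a typo in one new section.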

#Install and configure components

#Install the packages:

[root@openstack-controller1 ~]# yum install openstack-placement-api -y

#Edit /etc/placement/placement.conf and complete the following actions:

[root@openstack-controller1 ~]# vim /etc/placement/placement.conf

In the [placement_database] section, configure database access:

[placement_database]

# ...

connection = mysql+pymysql://placement:placement123@openstack-vip.tan.local/placement

Replace PLACEMENT_DBPASS with the password you chose for the placement database (placement123 in this deployment).

In the [api] and [keystone_authtoken] sections, configure Identity service access:

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

auth_url = http://openstack-vip.tan.local:5000/v3

memcached_servers = openstack-vip.tan.local:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = placement

#Populate the placement database:

[root@openstack-controller1 ~]# su -s /bin/sh -c "placement-manage db sync" placement

#Verify the tables on the mysql node:

MariaDB [glance]> use placement

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MariaDB [placement]> show tables;

+------------------------------+

| Tables_in_placement |

+------------------------------+

| alembic_version |

| allocations |

| consumers |

| inventories |

| placement_aggregates |

| projects |

| resource_classes |

| resource_provider_aggregates |

| resource_provider_traits |

| resource_providers |

| traits |

| users |

+------------------------------+

12 rows in set (0.000 sec)

######Due to a packaging bug, you must enable access to the Placement API by adding the following configuration to /etc/httpd/conf.d/00-placement-api.conf:

#Edit /etc/httpd/conf.d/00-placement-api.conf and append the following configuration at the end:

[root@openstack-controller1 ~]# vim /etc/httpd/conf.d/00-placement-api.conf

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

#Restart the httpd service:

[root@openstack-controller1 ~]# systemctl restart httpd

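######Installing the placement package drops a config file into the httpd configuration directory that defines a virtual host listening on port 8778.

######That port-8778 httpd config file has a bug; the workaround is described in the Rocky (R) release nova controller installation guide.

#Placement verification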

[root@openstack-controller1 ~]# . admin-openrc

[root@openstack-controller1 ~]# placement-status upgrade check

+----------------------------------+

| Upgrade Check Results |

+----------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+----------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+----------------------------------+

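7. Compute service (Nova) overview and installation (nova-controller and nova-compute)

#Official Nova installation guide:

https://docs.openstack.org/nova/train/install/

#Create the databases and grant privileges

#Create the nova_api, nova, and nova_cell0 databases: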

[root@openstack-mysql ~]# mysql

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> CREATE DATABASE nova_cell0;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'nova123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'nova123';

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'nova123';
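
The CREATE DATABASE / GRANT pairs for placement, nova and neutron all follow one pattern. Below is a minimal sketch that prints the SQL for one user and any number of databases, so it can be piped into `mysql` on the mysql node. The function name `db_grant_sql` is hypothetical. The `GRANT ... IDENTIFIED BY` form matches the MariaDB 10.3 syntax used above; MySQL 8.0 no longer accepts it. `IF NOT EXISTS` is added so that re-running the script is harmless. The password is assumed not to contain single quotes.

```bash
#!/bin/bash
# Hypothetical helper: print CREATE DATABASE + GRANT statements (MariaDB syntax).
# $1 = user, $2 = password (must not contain single quotes), remaining args = database names.
db_grant_sql() {
    local user="$1" pass="$2" db
    shift 2
    for db in "$@"; do
        printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
        # %% prints a literal % for the any-host wildcard.
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$db" "$user" "$pass"
    done
}

# Usage on the mysql node (review the output first):
#   db_grant_sql nova nova123 nova_api nova nova_cell0 | mysql
```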

#Install the Nova controller services on the controller1 node

[root@openstack-controller1 ~]# . admin-openrc

#Create the nova user:

[root@openstack-controller1 ~]# openstack user create --domain default --password-prompt nova

User Password:nova

Repeat User Password:nova

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 3ab7cf5eea184cb480adcb0aa770abd5 |

| name | nova |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

#Add the admin role to the nova user:

[root@openstack-controller1 ~]# openstack role add --project service --user nova admin

#Create the nova service entity:

[root@openstack-controller1 ~]# openstack service create --name nova --description "OpenStack Compute" compute

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Compute |

| enabled | True |

| id | d6a20c8865004583a96a8221778281bc |

| name | nova |

| type | compute |

+-------------+----------------------------------+

#Create the Compute API service endpoints:

[root@openstack-controller1 ~]# openstack endpoint create --region RegionOne compute public http://openstack-vip.tan.local:8774/v2.1

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | 84619a59905c460f8688b6cfc1a296dd |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | d6a20c8865004583a96a8221778281bc |

| service_name | nova |

| service_type | compute |

| url | http://openstack-vip.tan.local:8774/v2.1 |

+--------------+------------------------------------------+

[root@openstack-controller1 ~]# openstack endpoint create --region RegionOne compute internal http://openstack-vip.tan.local:8774/v2.1

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | b3176373b2b446d0b7075310b3bca8c3 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | d6a20c8865004583a96a8221778281bc |

| service_name | nova |

| service_type | compute |

| url | http://openstack-vip.tan.local:8774/v2.1 |

+--------------+------------------------------------------+

[root@openstack-controller1 ~]# openstack endpoint create --region RegionOne compute admin http://openstack-vip.tan.local:8774/v2.1

+--------------+------------------------------------------+

| Field | Value |

+--------------+------------------------------------------+

| enabled | True |

| id | 441388195ca24de4ac3950c33ffed66a |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | d6a20c8865004583a96a8221778281bc |

| service_name | nova |

| service_type | compute |

| url | http://openstack-vip.tan.local:8774/v2.1 |

+--------------+------------------------------------------+

#On the ha1 node, add a listen section:

[root@openstack-ha1 ~]# vim /etc/haproxy/haproxy.cfg

listen openstack-novacontroller-8774

bind 10.0.0.188:8774

mode tcp

server 10.0.0.101 10.0.0.101:8774 check inter 3s fall 3 rise 5

[root@openstack-ha1 ~]# systemctl restart haproxy

[root@openstack-ha1 ~]# ss -tnl |grep 8774

LISTEN 0 128 10.0.0.188:8774 *:*

#Install the packages:

[root@openstack-controller1 ~]# yum install openstack-nova-api openstack-nova-conductor openstack-nova-novncproxy openstack-nova-scheduler -y

#Edit /etc/nova/nova.conf and complete the following actions:

[root@openstack-controller1 ~]# vim /etc/nova/nova.conf

In the [DEFAULT] section, enable only the compute and metadata APIs:

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

In the [api_database] and [database] sections, configure database access:

[api_database]

# ...

connection = mysql+pymysql://nova:nova123@openstack-vip.tan.local/nova_api

[database]

# ...

connection = mysql+pymysql://nova:nova123@openstack-vip.tan.local/nova

In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:openstack123@openstack-vip.tan.local:5672/

In the [api] and [keystone_authtoken] sections, configure Identity service access:

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://openstack-vip.tan.local:5000/

auth_url = http://openstack-vip.tan.local:5000/

memcached_servers = openstack-vip.tan.local:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = nova

In the [DEFAULT] section, configure the my_ip option to use the management interface IP address of the controller node:

[DEFAULT]

# ...

#my_ip = 10.0.0.11

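#####my_ip is not set here; the local IP address is written directly in the [vnc] section instead.

In the [DEFAULT] section, enable support for the Networking service: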

[DEFAULT]

# ...

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

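#The NoopFirewallDriver class lives in /usr/lib/python2.7/site-packages/nova/virt/firewall.py (check with: ll /usr/lib/python2.7/site-packages/nova/virt/firewall.py)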

[vnc]

enabled = true

# ...

server_listen = 10.0.0.101

server_proxyclient_address = 10.0.0.101

######Write the local IP address directly here instead of $my_ip.

In the [glance] section, configure the location of the Image service API:

[glance]

# ...

api_servers = http://openstack-vip.tan.local:9292

In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

In the [placement] section, configure access to the Placement service:

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://openstack-vip.tan.local:5000/v3

username = placement

password = placement

#Populate the nova-api database:

[root@openstack-controller1 ~]# su -s /bin/sh -c "nova-manage api_db sync" nova

#Register the cell0 database:

[root@openstack-controller1 ~]# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

#Create the cell1 cell:

[root@openstack-controller1 ~]# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

#Populate the nova database:

[root@openstack-controller1 ~]# su -s /bin/sh -c "nova-manage db sync" nova

#Ignore any deprecation messages in this output.

#Verify that nova cell0 and cell1 are registered correctly:

[root@openstack-controller1 ~]# su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

+-------+--------------------------------------+-------------------------------------------------------+--------------------------------------------------------------+----------+

| Name | UUID | Transport URL | Database Connection | Disabled |

+-------+--------------------------------------+-------------------------------------------------------+--------------------------------------------------------------+----------+

| cell0 | 00000000-0000-0000-0000-000000000000 | none:/ | mysql+pymysql://nova:****@openstack-vip.tan.local/nova_cell0 | False |

| cell1 | 95ddf807-8c97-4ac2-a2c2-04b881eed987 | rabbit://openstack:****@openstack-vip.tan.local:5672/ | mysql+pymysql://nova:****@openstack-vip.tan.local/nova | False |

+-------+--------------------------------------+-------------------------------------------------------+--------------------------------------------------------------+----------+

#Start the Compute services and configure them to start when the system boots:

[root@openstack-controller1 ~]# systemctl enable openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service --now

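#On the haproxy node (105), add a listen section. Also make the timeouts longer and change maxconn to 30000 to prevent database connection timeout errors when the nova services restart.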

[root@openstack-ha1 ~]# vim /etc/haproxy/haproxy.cfg

listen openstack-nova-novncproxy-6080

bind 10.0.0.188:6080

mode tcp

server 10.0.0.101 10.0.0.101:6080 check inter 3s fall 3 rise 5

[root@openstack-ha1 ~]# systemctl restart haproxy

[root@openstack-ha1 ~]# ss -tnl |grep 6080

LISTEN 0 128 10.0.0.188:6080 *:*

#Put the restart command in a script so all nova services can be restarted in one step:

[root@openstack-controller1 ~]# vim nova_restart.sh

[root@openstack-controller1 ~]# cat nova_restart.sh

#!/bin/bash

systemctl restart openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service
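
The script above restarts the services but does not report whether they came back. Below is a slightly extended sketch for the same four controller services. After the restart it checks each unit with `systemctl is-active`. The `SYSTEMCTL` override variable is hypothetical; it exists only so the logic can be exercised without systemd.

```bash
#!/bin/bash
# Restart the nova controller services and report any that are not active afterwards.
# SYSTEMCTL can be overridden (hypothetical hook for testing); defaults to systemctl.
NOVA_SERVICES="openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service"

restart_nova() {
    local svc failed=0
    # Unquoted on purpose: each unit name becomes a separate argument.
    ${SYSTEMCTL:-systemctl} restart $NOVA_SERVICES
    for svc in $NOVA_SERVICES; do
        if ${SYSTEMCTL:-systemctl} is-active --quiet "$svc"; then
            echo "active:   $svc"
        else
            echo "FAILED:   $svc (see journalctl -u $svc)"
            failed=1
        fi
    done
    return $failed
}

# To use this as nova_restart.sh, add a final line:  restart_nova
```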

#nova-compute installation

Install the packages:

[root@openstack-compute1 ~]#yum install openstack-nova-compute -y

#Edit /etc/nova/nova.conf and complete the following actions:

In the [DEFAULT] section, enable only the compute and metadata APIs:

[DEFAULT]

# ...

enabled_apis = osapi_compute,metadata

In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:openstack123@openstack-vip.tan.local

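Replace RABBIT_PASS with the password you chose for the openstack account in RabbitMQ (openstack123 in this deployment).

In the [api] and [keystone_authtoken] sections, configure Identity service access: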

[api]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://openstack-vip.tan.local:5000/

auth_url = http://openstack-vip.tan.local:5000/

memcached_servers = openstack-vip.tan.local:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = nova

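Replace NOVA_PASS with the password you chose for the nova user in the Identity service (nova in this deployment).

In the [DEFAULT] section, configure the my_ip option: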

[DEFAULT]

# ...

#my_ip = MANAGEMENT_INTERFACE_IP_ADDRESS

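#####my_ip can be left unset; the IP address is written directly in the [vnc] section below.

In the [DEFAULT] section, enable support for the Networking service: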

[DEFAULT]

# ...

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

In the [vnc] section, enable and configure remote console access:

[vnc]

# ...

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = 10.0.0.107

novncproxy_base_url = http://openstack-vip.tan.local:6080/vnc_auto.html

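####Instead of $my_ip, write this compute node's own IP address here; on other compute nodes, use each node's own IP.

#####The base URL uses port 6080 on the haproxy VIP hostname, whose listen section was configured above.

The server component listens on all IP addresses, and the proxy component listens only on the management interface IP address of the compute node. The base URL indicates where you can use a web browser to access remote consoles of instances on this compute node.

In the [glance] section, configure the location of the Image service API: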

[glance]

# ...

api_servers = http://openstack-vip.tan.local:9292

In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/nova/tmp

In the [placement] section, configure the Placement API:

[placement]

# ...

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://openstack-vip.tan.local:5000/v3

username = placement

password = placement

#Determine whether your compute node supports hardware acceleration for virtual machines:

[root@openstack-compute1 ~]# egrep -c '(vmx|svm)' /proc/cpuinfo

0

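#After CPU virtualization was later enabled in VMware Workstation, the command returns the number of CPUs (the output below is from compute2):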

[root@openstack-compute2 ~]# egrep -c '(vmx|svm)' /proc/cpuinfo

4

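#If this command returns a non-zero value, your compute node supports hardware acceleration, which typically requires no additional configuration. If it returns zero, your compute node does not support hardware acceleration, and you must configure libvirt to use QEMU instead of KVM.

#Edit the [libvirt] section in /etc/nova/nova.conf as follows: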

[root@openstack-compute1 ~]# vim /etc/nova/nova.conf

[libvirt]

# ...

virt_type = qemu
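
The rule above can be scripted when preparing several compute nodes: any vmx/svm flag means KVM, none means QEMU. Below is a minimal sketch. The function name `choose_virt_type` is hypothetical. The optional file argument exists only so the function can be tested against a sample file instead of /proc/cpuinfo.

```bash
#!/bin/bash
# Hypothetical helper: print "kvm" if the CPU exposes Intel VT-x (vmx) or AMD-V (svm),
# otherwise "qemu" (software emulation, much slower).
# $1 = cpuinfo file, defaults to /proc/cpuinfo.
choose_virt_type() {
    local cpuinfo="${1:-/proc/cpuinfo}" count
    # Same test as `egrep -c '(vmx|svm)'`; grep -c prints 0 when nothing matches.
    count=$(grep -E -c '(vmx|svm)' "$cpuinfo")
    if [ "$count" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}

# Usage on a compute node (not executed here):
#   echo "virt_type = $(choose_virt_type)"   # value for the [libvirt] section
```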

#####Add a hosts entry for the VIP name; otherwise openstack-vip.tan.local in the config file cannot be resolved, and tail -f /var/log/nova/nova-compute.log shows errors.

[root@openstack-compute1 ~]# vim /etc/hosts

10.0.0.188 openstack-vip.tan.local
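
The same hosts entry has to go onto every compute node. Appending it blindly with `echo >>` creates duplicates when the step is re-run. Below is a minimal idempotent sketch. The function name `ensure_hosts_entry` is hypothetical, and the target file is a parameter that defaults to /etc/hosts. If the name is already mapped, even to a different IP, the file is left unchanged.

```bash
#!/bin/bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
# $1 = IP, $2 = hostname, $3 = hosts file (defaults to /etc/hosts).
ensure_hosts_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Look for NAME as a whole field (hostnames start at field 2) on non-comment lines.
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit (found ? 0 : 1) }' "$file"; then
        echo "already present: $name"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
        echo "added: $ip $name"
    fi
}

# Usage on each compute node (not executed here):
#   ensure_hosts_entry 10.0.0.188 openstack-vip.tan.local
```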

#Start the Compute service and its dependencies, and configure them to start automatically when the system boots:

[root@openstack-compute1 ~]# systemctl enable libvirtd.service openstack-nova-compute.service

[root@openstack-compute1 ~]# systemctl start libvirtd.service openstack-nova-compute.service

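If the nova-compute service fails to start, check /var/log/nova/nova-compute.log. The error message may indicate that the firewall on the controller node is blocking access to port 5672. Configure the firewall to open port 5672 on the controller node and restart the nova-compute service on the compute node.

#Run the following commands on the controller1 node.

#Add the compute node to the cell database: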

[root@openstack-controller1 ~]# . admin-openrc

[root@openstack-controller1 ~]# openstack compute service list --service nova-compute

+----+--------------+------------------------------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+--------------+------------------------------+------+---------+-------+----------------------------+

| 7 | nova-compute | openstack-compute1.tan.local | nova | enabled | up | 2022-09-20T09:13:50.000000 |

+----+--------------+------------------------------+------+---------+-------+----------------------------+

#Discover compute hosts:

[root@openstack-controller1 ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': 95ddf807-8c97-4ac2-a2c2-04b881eed987

Checking host mapping for compute host 'openstack-compute1.tan.local': c4966015-28aa-4401-9223-73f97da6ef59

Creating host mapping for compute host 'openstack-compute1.tan.local': c4966015-28aa-4401-9223-73f97da6ef59

Found 1 unmapped computes in cell: 95ddf807-8c97-4ac2-a2c2-04b881eed987

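#When you add new compute nodes, you must run nova-manage cell_v2 discover_hosts on the controller node to register them. Alternatively, you can set an appropriate discovery interval in /etc/nova/nova.conf: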

[scheduler]

discover_hosts_in_cells_interval = 300

[root@openstack-controller1 ~]# bash nova_restart.sh

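#Nova service verification

Perform these commands on the controller node.

List the service components to verify that each process launched and registered successfully: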

[root@openstack-controller1 ~]# . admin-openrc

[root@openstack-controller1 ~]# openstack compute service list

+----+----------------+---------------------------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+----------------+---------------------------------+----------+---------+-------+----------------------------+

| 4 | nova-conductor | openstack-controller1.tan.local | internal | enabled | up | 2022-09-20T09:16:43.000000 |

| 5 | nova-scheduler | openstack-controller1.tan.local | internal | enabled | up | 2022-09-20T09:16:43.000000 |

| 7 | nova-compute | openstack-compute1.tan.local | nova | enabled | up | 2022-09-20T09:16:50.000000 |

+----+----------------+---------------------------------+----------+---------+-------+----------------------------+

#This output should show two service components enabled on the controller node and one service component enabled on the compute node.
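
On a larger deployment it is easier to scan for problems than to read the whole table. Below is a minimal sketch that reads `openstack compute service list` in its default table format on stdin. It prints any service whose Status is not `enabled` or whose State is not `up`. The function name `report_bad_services` is hypothetical, and the column positions are assumed from the table shown above.

```bash
#!/bin/bash
# Hypothetical helper: read the table printed by `openstack compute service list`
# on stdin and report rows whose Status is not "enabled" or whose State is not "up".
# Columns assumed: | ID | Binary | Host | Zone | Status | State | Updated At |
# Returns 1 if any such row is found.
report_bad_services() {
    awk -F'|' '
        /^\|/ {
            # Trim the padding around each cell we care about.
            for (i = 2; i <= 7; i++) gsub(/^ +| +$/, "", $i)
            if ($2 == "ID") next                      # header row
            if ($6 != "enabled" || $7 != "up") {
                printf "%s on %s: status=%s state=%s\n", $3, $4, $6, $7
                bad = 1
            }
        }
        END { exit (bad ? 1 : 0) }
    '
}

# Usage on the controller (not executed here):
#   openstack compute service list | report_bad_services && echo "all services up"
```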

#List the API endpoints in the Identity service to verify connectivity with the Identity service:

[root@openstack-controller1 ~]# openstack catalog list

+-----------+-----------+------------------------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+------------------------------------------------------+

| keystone | identity | RegionOne |

| | | public: http://openstack-vip.tan.local:5000/v3/ |

| | | RegionOne |

| | | internal: http://openstack-vip.tan.local:5000/v3/ |

| | | RegionOne |

| | | admin: http://openstack-vip.tan.local:5000/v3/ |

| | | |

| glance | image | RegionOne |

| | | public: http://openstack-vip.tan.local:9292 |

| | | RegionOne |

| | | internal: http://openstack-vip.tan.local:9292 |

| | | RegionOne |

| | | admin: http://openstack-vip.tan.local:9292 |

| | | |

| nova | compute | RegionOne |

| | | admin: http://openstack-vip.tan.local:8774/v2.1 |

| | | RegionOne |

| | | public: http://openstack-vip.tan.local:8774/v2.1 |

| | | RegionOne |

| | | internal: http://openstack-vip.tan.local:8774/v2.1 |

| | | |

| placement | placement | RegionOne |

| | | public: http://openstack-vip.tan.local:8778 |

| | | RegionOne |

| | | admin: http://openstack-vip.tan.local:8778 |

| | | RegionOne |

| | | internal: http://openstack-vip.tan.local:8778 |

| | | |

+-----------+-----------+------------------------------------------------------+

#List the images in the Image service to verify connectivity with the Image service:

[root@openstack-controller1 ~]# openstack image list

+--------------------------------------+--------------+--------+

| ID | Name | Status |

+--------------------------------------+--------------+--------+

| 18f96a10-1e9a-431a-981c-69496dab4531 | cirros-0.4.0 | active |

+--------------------------------------+--------------+--------+

#Check that the cells and the Placement API are working and that the other necessary prerequisites are in place:

[root@openstack-controller1 ~]# nova-status upgrade check

+--------------------------------+

| Upgrade Check Results |

+--------------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Ironic Flavor Migration |

| Result: Success |

| Details: None |

+--------------------------------+

| Check: Cinder API |

| Result: Success |

| Details: None |

+--------------------------------+

8. Networking service (Neutron) overview and installation (neutron-controller and neutron-compute)

#Official Neutron installation guide:

https://docs.openstack.org/neutron/train/install/install-rdo.html

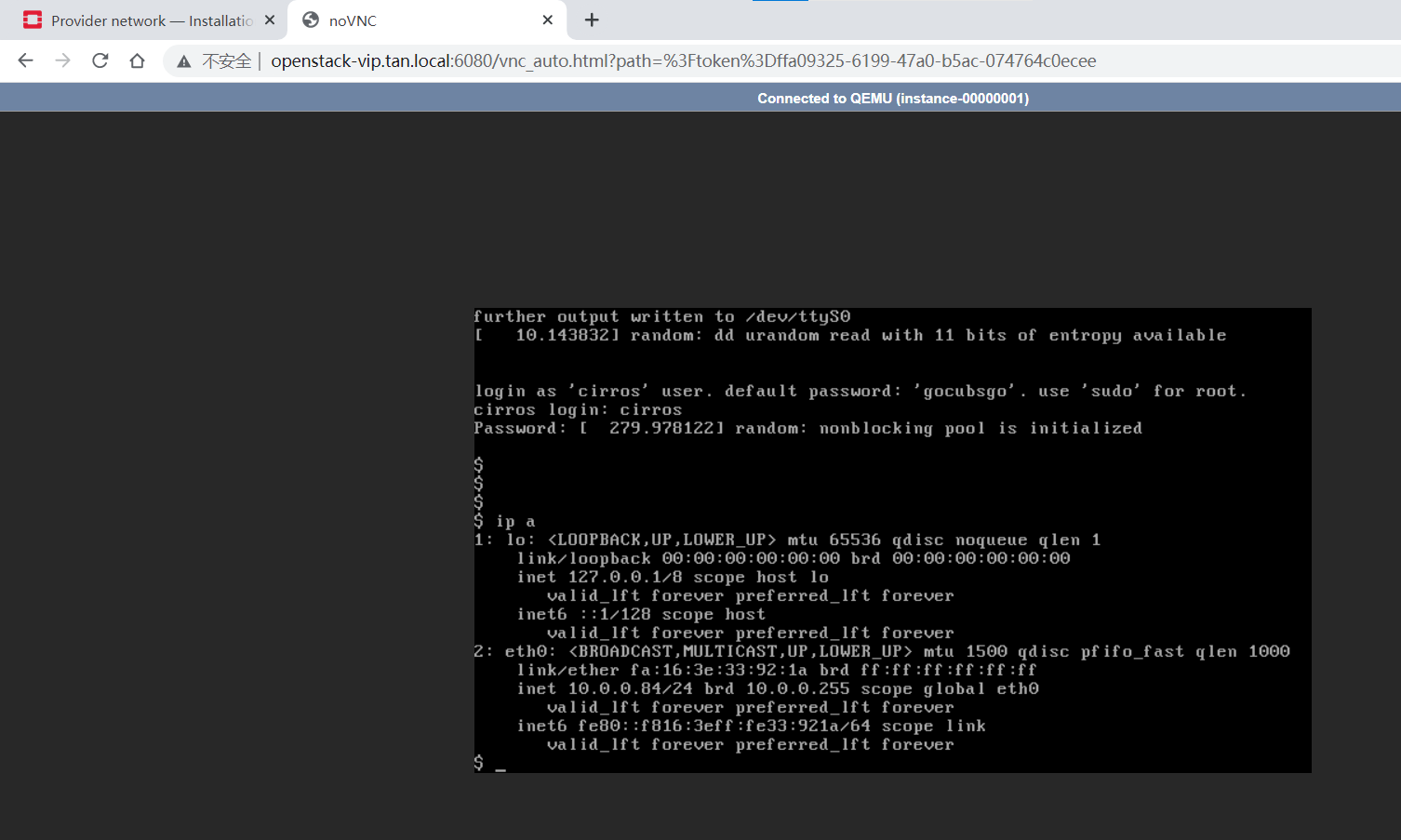

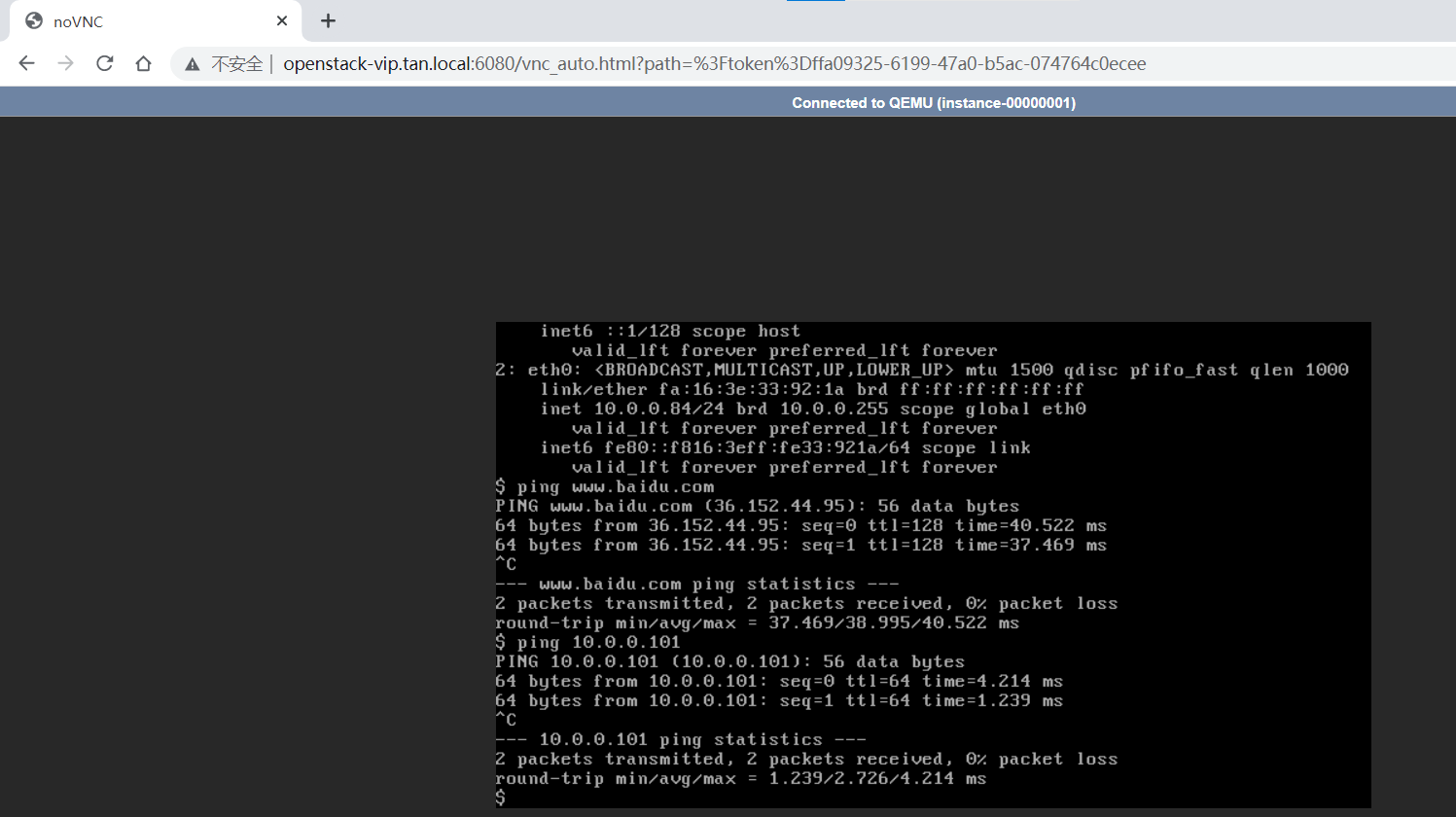

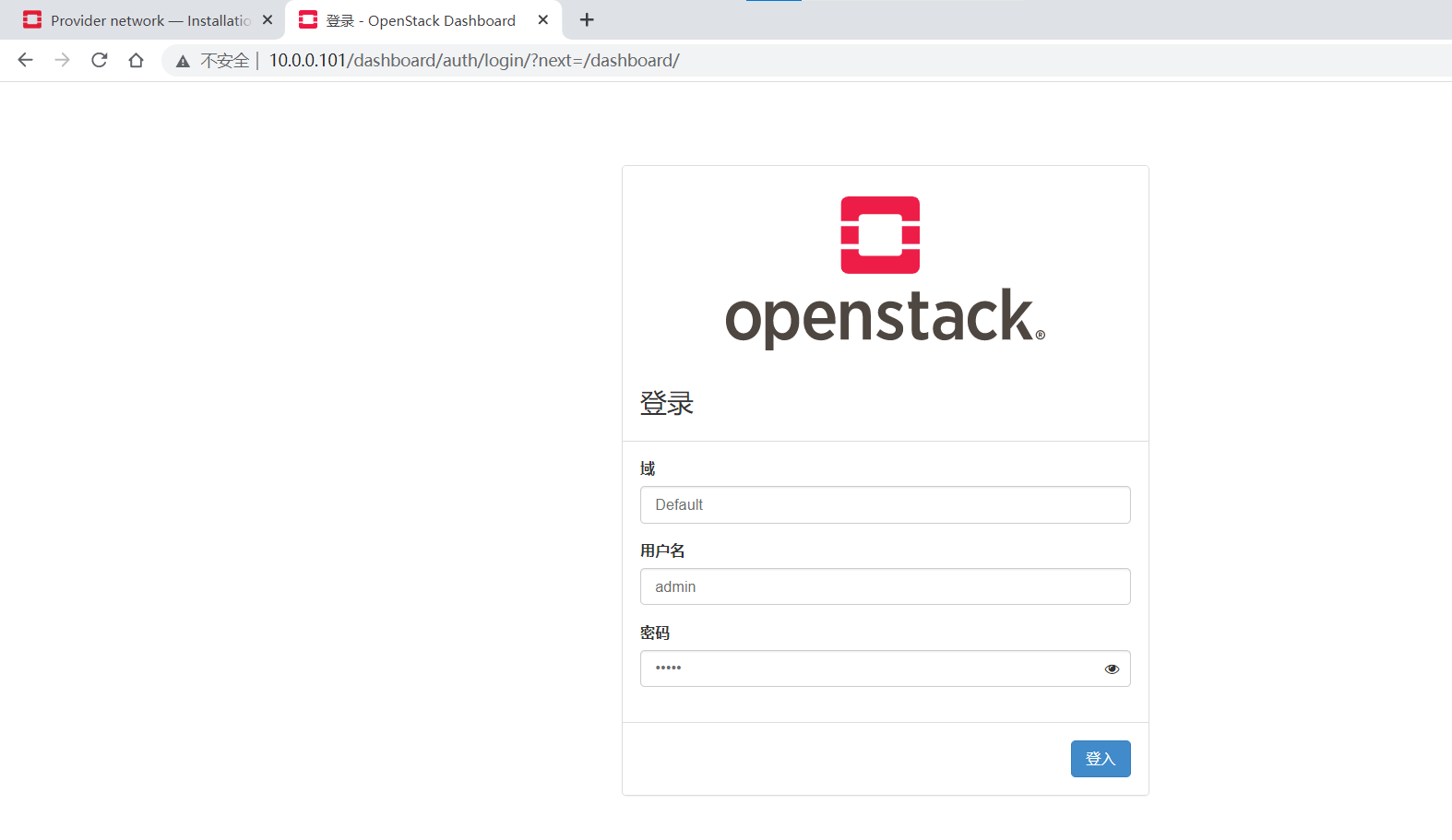

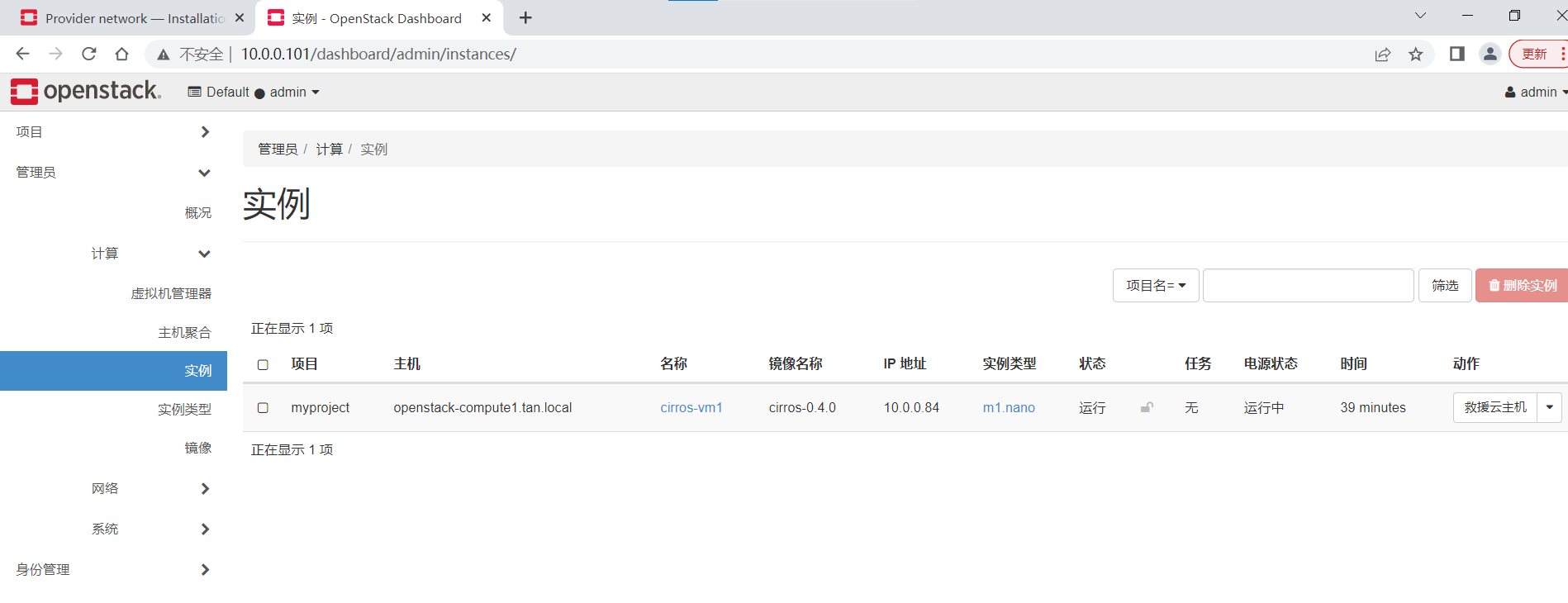

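#There are two networking options to choose from:

#Provider networks: instances are bridged to the physical network and must be in the same network range as the physical hosts.

Networking Option 1: Provider networks

The provider networks option deploys the OpenStack Networking service in the simplest way possible, with primarily layer-2 (bridging/switching) services and VLAN segmentation of networks. Essentially, it bridges virtual networks to physical networks and relies on the physical network infrastructure for layer-3 (routing) services. Additionally, a DHCP (Dynamic Host Configuration Protocol) service provides IP address information to instances.

Warning: This option lacks support for self-service (private) networks, layer-3 (routing) services, and advanced services such as Firewall-as-a-Service (FWaaS). Consider the self-service networks option below if you need these features.

#Self-service networks: users can create their own networks, which reach the external network through a virtual router.

The self-service networks option augments the provider networks option with layer-3 (routing) services that enable self-service networks using overlay segmentation methods such as Virtual Extensible LAN (VXLAN). Essentially, it routes virtual networks to physical networks using network address translation (NAT). Additionally, this option provides the foundation for advanced services such as FWaaS.

OpenStack users can create virtual networks without knowledge of the underlying infrastructure on the data network. This can also include VLAN networks if the layer-2 plug-in is configured accordingly.

#Private clouds generally use provider networks, while public clouds use self-service networks. Only provider networks are demonstrated here; see the official documentation for self-service networks.

#On the mysql node, create the database and grant privileges.

#Create the neutron database: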

[root@openstack-mysql ~]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 194

Server version: 10.3.20-MariaDB MariaDB Server

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> CREATE DATABASE neutron;

Query OK, 1 row affected (0.000 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'neutron123';

Query OK, 0 rows affected (0.000 sec)

MariaDB [(none)]> exit

Bye

#On the controller1 node:

Source the admin credentials to gain access to admin-only CLI commands:

[root@openstack-controller1 ~]# . admin-openrc

#Create the neutron user:

[root@openstack-controller1 ~]# openstack user create --domain default --password-prompt neutron

User Password:neutron

Repeat User Password:neutron

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 3cf452cb70e74bdbb39a9597aced8f75 |

| name | neutron |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

#Add the admin role to the neutron user:

[root@openstack-controller1 ~]# openstack role add --project service --user neutron admin

#Create the neutron service entity:

[root@openstack-controller1 ~]# openstack service create --name neutron --description "OpenStack Networking" network

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Networking |

| enabled | True |

| id | 0b6650fa33914c5bb8b1088d34fa868f |

| name | neutron |

| type | network |

+-------------+----------------------------------+

#Create the Networking service API endpoints:

[root@openstack-controller1 ~]# openstack endpoint create --region RegionOne network public http://openstack-vip.tan.local:9696

+--------------+-------------------------------------+

| Field | Value |

+--------------+-------------------------------------+

| enabled | True |

| id | d8135136ffa4410bb957d1dbb8faae1b |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 0b6650fa33914c5bb8b1088d34fa868f |

| service_name | neutron |

| service_type | network |

| url | http://openstack-vip.tan.local:9696 |

+--------------+-------------------------------------+

[root@openstack-controller1 ~]# openstack endpoint create --region RegionOne network internal http://openstack-vip.tan.local:9696

+--------------+-------------------------------------+

| Field | Value |

+--------------+-------------------------------------+

| enabled | True |

| id | aff3cc788bf1497bb16b39af7945f123 |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 0b6650fa33914c5bb8b1088d34fa868f |

| service_name | neutron |

| service_type | network |

| url | http://openstack-vip.tan.local:9696 |

+--------------+-------------------------------------+

[root@openstack-controller1 ~]# openstack endpoint create --region RegionOne network admin http://openstack-vip.tan.local:9696

+--------------+-------------------------------------+

| Field | Value |

+--------------+-------------------------------------+

| enabled | True |

| id | b68d96ebce7a4cfeba8c668c96a13a1b |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 0b6650fa33914c5bb8b1088d34fa868f |

| service_name | neutron |

| service_type | network |

| url | http://openstack-vip.tan.local:9696 |

+--------------+-------------------------------------+

#On the haproxy node (105), add a listen section:

[root@openstack-ha1 ~]# vim /etc/haproxy/haproxy.cfg

listen openstack-neutron-controller-9696

bind 10.0.0.188:9696

mode tcp

server 10.0.0.101 10.0.0.101:9696 check inter 3s fall 3 rise 5

[root@openstack-ha1 ~]# systemctl restart haproxy

[root@openstack-ha1 ~]# ss -tnl |grep 9696

LISTEN 0 128 10.0.0.188:9696 *:*

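#You can deploy the Networking service using one of two architectures, represented by options 1 and 2. Only provider networks (option 1) are demonstrated here; see the official documentation for self-service networks.

#Option 1 deploys the simplest possible architecture, which only supports attaching instances to provider (external) networks. There are no self-service (private) networks, routers, or floating IP addresses. Only the admin or other privileged users can manage provider networks.

#Option 2 augments option 1 with layer-3 services that support attaching instances to self-service networks. The demo or other unprivileged users can manage self-service networks, including routers that provide connectivity between self-service and provider networks. Additionally, floating IP addresses provide connectivity to instances on self-service networks from external networks such as the Internet.

#Self-service networks typically use overlay networks. Overlay network protocols such as VXLAN add headers that increase overhead and reduce the space available for the payload or user data. Without knowledge of the virtual network infrastructure, instances attempt to send packets using the default Ethernet maximum transmission unit (MTU) of 1500 bytes. The Networking service automatically provides the correct MTU value to instances via DHCP. However, some cloud images do not use DHCP or ignore the DHCP MTU option, and require configuration using metadata or a script.

#Networking Option 1: Provider networks

Install the components: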

[root@openstack-controller1 ~]#yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables -y

#Edit /etc/neutron/neutron.conf and complete the following actions:

[root@openstack-controller1 ~]# vim /etc/neutron/neutron.conf

In the [database] section, configure database access:

[database]

# ...

connection = mysql+pymysql://neutron:neutron123@openstack-vip.tan.local/neutron

In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in and disable additional plug-ins:

[DEFAULT]

# ...

core_plugin = ml2

service_plugins =

In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

# ...

transport_url = rabbit://openstack:openstack123@openstack-vip.tan.local

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

[DEFAULT]

# ...

auth_strategy = keystone

[keystone_authtoken]

# ...

www_authenticate_uri = http://openstack-vip.tan.local:5000

auth_url = http://openstack-vip.tan.local:5000

memcached_servers = openstack-vip.tan.local:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

In the [DEFAULT] and [nova] sections, configure Networking to notify Compute of network topology changes:

[DEFAULT]

# ...

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

#The [nova] section does not exist in the file; add it at the end.

[nova]

# ...

auth_url = http://openstack-vip.tan.local:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = nova

In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

# ...

lock_path = /var/lib/neutron/tmp

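Configure the Modular Layer 2 (ML2) plug-in

The ML2 plug-in uses the Linux bridge mechanism to build layer-2 (bridging and switching) virtual networking infrastructure for instances.

##########This file is missing; you can copy it from the official website.

Edit /etc/neutron/plugins/ml2/ml2_conf.ini and complete the following actions: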

[root@openstack-controller1 ~]# vim /etc/neutron/plugins/ml2/ml2_conf.ini

In the [ml2] section, enable flat and VLAN networks:

[ml2]

# ...

type_drivers = flat,vlan

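#####flat means a single flat network, that is, a bridged network. The available type drivers are local, flat, vlan, gre, vxlan, and geneve.

In the [ml2] section, disable self-service networks: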

[ml2]

# ...

tenant_network_types =

In the [ml2] section, enable the Linux bridge mechanism:

[ml2]

# ...

mechanism_drivers = linuxbridge

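Warning

After you configure the ML2 plug-in, removing values from the type_drivers option can lead to database inconsistency.

In the [ml2] section, enable the port security extension driver: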

[ml2]

# ...

extension_drivers = port_security

In the [ml2_type_flat] section, configure the provider virtual network as a flat network:

[ml2_type_flat]

# ...

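flat_networks = external

#####"external" is the network name; the next config file (linuxbridge_agent.ini) binds it to a physical NIC. Keep this note on its own line rather than after the value: oslo.config treats only lines starting with # or ; as comments, so trailing text would likely be read as part of the value.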

In the [securitygroup] section, enable ipset to increase the efficiency of security group rules:

[securitygroup]

# ...

enable_ipset = true

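Configure the Linux bridge agent

The Linux bridge agent builds layer-2 (bridging and switching) virtual networking infrastructure for instances and handles security groups.

#Edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini and complete the following actions: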

[root@openstack-controller1 ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

In the [linux_bridge] section, map the provider virtual network to the provider physical network interface:

[linux_bridge]

physical_interface_mappings = external:eth1

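Replace PROVIDER_INTERFACE_NAME with the name of the underlying provider physical network interface (eth1 in this deployment). See the Host networking section of the official guide for more information.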
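
A typo in `physical_interface_mappings`, such as a NIC name that does not exist on this host, can cause the linuxbridge agent to fail at startup. Below is a minimal pre-flight sketch that assumes the comma-separated `name:interface` format shown above. The function name `check_interface_mappings` is hypothetical. The sysfs directory is a parameter only so the function can be tested without real NICs.

```bash
#!/bin/bash
# Hypothetical pre-flight check: every interface named in a
# physical_interface_mappings value ("net:iface,net2:iface2") must exist.
# $1 = mappings string, $2 = sysfs net directory (defaults to /sys/class/net).
check_interface_mappings() {
    local mappings="$1" netdir="${2:-/sys/class/net}" pair iface rc=0
    local IFS=','                   # split the mappings on commas only
    for pair in $mappings; do
        iface="${pair#*:}"          # text after the first colon
        if [ -e "$netdir/$iface" ]; then
            echo "ok: ${pair%%:*} -> $iface"
        else
            echo "missing interface: $iface (physical network ${pair%%:*})"
            rc=1
        fi
    done
    return $rc
}

# Usage on the node (not executed here):
#   check_interface_mappings external:eth1
```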

In the [vxlan] section, disable VXLAN overlay networks:

[vxlan]

enable_vxlan = false

In the [securitygroup] section, enable security groups and configure the Linux bridge iptables firewall driver:

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

#Ensure your Linux kernel supports network bridge filters by verifying that all of the following sysctl values are set to 1:

[root@openstack-controller1 ~]# vim /etc/sysctl.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

#Load the br_netfilter module first (modprobe br_netfilter); otherwise sysctl -p reports an error because the net.bridge keys do not exist yet.

[root@openstack-controller1 ~]# modprobe br_netfilter

[root@openstack-controller1 ~]# sysctl -p

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1
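
`sysctl -p` fails until br_netfilter is loaded, so it helps to verify the two keys directly afterwards. Below is a minimal sketch that reads the values from /proc/sys. The function name `check_bridge_nf` is hypothetical, and the base directory is a parameter only for testing. Note that `modprobe` on its own does not persist across reboots. On systemd hosts, the usual way to load the module at boot is a file such as /etc/modules-load.d/br_netfilter.conf containing the line `br_netfilter`.

```bash
#!/bin/bash
# Hypothetical helper: confirm both bridge netfilter sysctls are 1.
# $1 = base directory, defaults to /proc/sys (the keys only exist once br_netfilter is loaded).
check_bridge_nf() {
    local base="${1:-/proc/sys}" key file val rc=0
    for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
        file="$base/net/bridge/$key"
        if [ ! -r "$file" ]; then
            echo "missing: $file (is br_netfilter loaded?)"
            rc=1
            continue
        fi
        val=$(cat "$file")
        if [ "$val" = "1" ]; then
            echo "ok: net.bridge.$key = 1"
        else
            echo "wrong: net.bridge.$key = $val"
            rc=1
        fi
    done
    return $rc
}

# Usage on the node (not executed here):
#   check_bridge_nf
```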

Configure the DHCP agent

The DHCP agent provides DHCP services for virtual networks.

Edit /etc/neutron/dhcp_agent.ini and complete the following actions:

[root@openstack-controller1 ~]# vim /etc/neutron/dhcp_agent.ini

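In the [DEFAULT] section, configure the Linux bridge interface driver and the Dnsmasq DHCP driver, and enable isolated metadata so that instances on provider networks can access metadata over the network: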

[DEFAULT]

# ...

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

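#Configure the metadata agent

The metadata agent provides configuration information, such as credentials, to instances.

Edit /etc/neutron/metadata_agent.ini and complete the following actions: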

[root@openstack-controller1 ~]# vim /etc/neutron/metadata_agent.ini

In the [DEFAULT] section, configure the metadata host and shared secret:

[DEFAULT]

# ...

nova_metadata_host = openstack-vip.tan.local

metadata_proxy_shared_secret = tan20220920

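Replace METADATA_SECRET with a suitable secret for the metadata proxy (tan20220920 in this deployment).

Configure the Compute service to use the Networking service

Edit /etc/nova/nova.conf and perform the following actions:

In the [neutron] section, configure the access parameters, enable the metadata proxy, and configure the secret: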

[neutron]

# ...

auth_url = http://openstack-vip.tan.local:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

service_metadata_proxy = true

metadata_proxy_shared_secret = tan20220920

#The secret must match metadata_proxy_shared_secret in the [DEFAULT] section of /etc/neutron/metadata_agent.ini configured above.
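If the two values differ, the Compute metadata API rejects the requests relayed by the Neutron metadata agent, so instances cannot fetch their metadata. A minimal sketch for comparing them; get_secret and check_metadata_secret are hypothetical helpers. The lookup is section-agnostic and takes the first uncommented metadata_proxy_shared_secret line, which assumes the key appears only once per file.

```shell
# get_secret FILE  (hypothetical helper)
# Prints the first uncommented metadata_proxy_shared_secret value, trimmed.
get_secret() {
    sed -n 's/^[[:blank:]]*metadata_proxy_shared_secret[[:blank:]]*=[[:blank:]]*\(.*[^[:blank:]]\)[[:blank:]]*$/\1/p' "$1" | head -n 1
}

# check_metadata_secret [NOVA_CONF] [METADATA_AGENT_INI]  (hypothetical helper)
# Fails if the secret is missing from nova.conf or differs between the files.
check_metadata_secret() {
    nova_conf=${1:-/etc/nova/nova.conf}
    agent_ini=${2:-/etc/neutron/metadata_agent.ini}
    a=$(get_secret "$nova_conf")
    b=$(get_secret "$agent_ini")
    if [ -z "$a" ] || [ "$a" != "$b" ]; then
        echo "metadata_proxy_shared_secret missing or mismatched" >&2
        return 1
    fi
    echo "metadata secrets match"
}
```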

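#Finalize installation

The Networking service initialization scripts expect a symbolic link /etc/neutron/plugin.ini pointing to the ML2 plug-in configuration file, /etc/neutron/plugins/ml2/ml2_conf.ini. If this symbolic link does not exist, create it with the following command: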

[root@openstack-controller1 ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
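Running ln -s a second time fails with "File exists" once the link is in place. A minimal idempotent variant, sketched as a hypothetical helper: it creates the link only when it is missing and warns if an existing path points somewhere else.

```shell
# ensure_symlink TARGET LINK  (hypothetical helper)
# Creates LINK -> TARGET if LINK does not exist; returns 1 with a warning
# if LINK exists but is not a symlink to TARGET.
ensure_symlink() {
    target=$1 link=$2
    if [ -L "$link" ]; then
        current=$(readlink "$link")
        if [ "$current" != "$target" ]; then
            echo "warning: $link points to $current, not $target" >&2
            return 1
        fi
    elif [ -e "$link" ]; then
        echo "warning: $link exists and is not a symlink" >&2
        return 1
    else
        ln -s "$target" "$link"
    fi
}
```

Usage: ensure_symlink /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini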

#Populate the database:

[root@openstack-controller1 ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

#Restart the Compute API service:

[root@openstack-controller1 ~]# systemctl restart openstack-nova-api.service

#Start the Networking services and configure them to start when the system boots:

[root@openstack-controller1 ~]# systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

[root@openstack-controller1 ~]# systemctl start neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service
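After starting, the state of every unit can be checked in one pass with systemctl is-active. A minimal sketch; check_services and the SYSTEMCTL override variable are hypothetical, and the override exists only so the function can be exercised with a stub instead of the real systemctl.

```shell
# check_services SERVICE...  (hypothetical helper)
# Prints each unit's state and returns 1 if any unit is not active.
check_services() {
    rc=0
    for svc in "$@"; do
        if ${SYSTEMCTL:-systemctl} is-active --quiet "$svc"; then
            echo "active:   $svc"
        else
            echo "INACTIVE: $svc" >&2
            rc=1
        fi
    done
    return $rc
}
```

Usage: check_services neutron-server neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent. If a unit is inactive, journalctl -u <unit> and the logs under /var/log/neutron/ are the usual next place to look.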

#Configuration on the compute1 node

Install the components

[root@openstack-compute1 ~]# yum install openstack-neutron-linuxbridge ebtables ipset -y

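Configure the common component

The Networking common component configuration includes the authentication mechanism, message queue, and plug-in.

Edit the /etc/neutron/neutron.conf file and complete the following actions: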

[root@openstack-compute1 ~]# vim /etc/neutron/neutron.conf

In the [database] section, comment out any connection options, because compute nodes do not access the database directly.
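If neutron.conf was copied over from the controller, its [database] connection line has to be disabled. The sketch below (comment_db_connection is a hypothetical helper) comments out active "connection =" lines only inside [database] and prints the result instead of editing in place, so it can be reviewed first.

```shell
# comment_db_connection FILE  (hypothetical helper)
# Prints FILE with every active "connection = ..." line inside [database]
# prefixed with "#"; all other lines are printed unchanged.
comment_db_connection() {
    awk '
        /^[ \t]*\[/ { in_db = ($0 ~ /^[ \t]*\[database][ \t]*$/) }
        in_db && /^[ \t]*connection[ \t]*=/ { print "#" $0; next }
        { print }
    ' "$1"
}
```

Usage: comment_db_connection /etc/neutron/neutron.conf > /tmp/neutron.conf.new, review the file, then cat /tmp/neutron.conf.new > /etc/neutron/neutron.conf. Writing back with cat and a redirect keeps the original file's owner and mode.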

In the [DEFAULT] section, configure RabbitMQ message queue access:

[DEFAULT]

transport_url = rabbit://openstack:openstack123@openstack-vip.tan.local

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

www_authenticate_uri = http://openstack-vip.tan.local:5000

auth_url = http://openstack-vip.tan.local:5000

memcached_servers = openstack-vip.tan.local:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = neutron

In the [oslo_concurrency] section, configure the lock path:

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

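Choose the same networking option that you chose for the controller node to configure the services specific to it. Afterwards, return here and proceed to Configure the Compute service to use the Networking service.

Networking option 1: Provider networks

Configure the Linux bridge agent

The Linux bridge agent builds the layer-2 (bridging and switching) virtual networking infrastructure for instances and handles security groups.

Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini file and complete the following actions: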

[root@openstack-compute1 ~]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

In the [linux_bridge] section, map the provider virtual network to the provider physical network interface:

[linux_bridge]

physical_interface_mappings = external:eth1

####Map to this host's own NIC; if it differs from the controller's (for example, a bond named bond0), use that name instead

Replace PROVIDER_INTERFACE_NAME with the name of the underlying provider physical network interface (eth1 here).
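Because physical_interface_mappings must name an interface that actually exists on each host (the agent typically refuses to start otherwise), a quick check before starting the agent can save a restart loop. A minimal sketch; check_mapped_interfaces is a hypothetical helper that parses the mapping string and looks each interface up under /sys/class/net. Interactively, ip link show eth1 gives the same answer.

```shell
# check_mapped_interfaces MAPPINGS [SYS_ROOT]  (hypothetical helper)
# MAPPINGS uses the physical_interface_mappings syntax, e.g. "external:eth1"
# or "external:eth1,other:bond0". SYS_ROOT defaults to /sys.
check_mapped_interfaces() {
    sys_root=${2:-/sys}
    rc=0
    old_ifs=$IFS
    IFS=','
    for pair in $1; do
        # Drop the "physnet:" prefix and any stray spaces
        iface=$(printf '%s' "${pair#*:}" | tr -d ' ')
        if [ -e "$sys_root/class/net/$iface" ]; then
            echo "found:   $iface"
        else
            echo "missing: $iface" >&2
            rc=1
        fi
    done
    IFS=$old_ifs
    return $rc
}
```

Usage: check_mapped_interfaces external:eth1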

In the [vxlan] section, disable VXLAN overlay networks:

[vxlan]

enable_vxlan = false

In the [securitygroup] section, enable security groups and configure the Linux bridge iptables firewall driver:

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

#Ensure your Linux kernel supports network bridge filters by verifying that all of the following sysctl values are set to 1:

[root@openstack-compute1 ~]# vim /etc/sysctl.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@openstack-compute1 ~]# modprobe br_netfilter

[root@openstack-compute1 ~]# sysctl -p

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

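#To enable networking bridge support, the br_netfilter kernel module typically needs to be loaded. Check your operating system documentation for more details on enabling this module.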
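modprobe only loads br_netfilter until the next reboot. On systemd-based CentOS 7 a module can be loaded at every boot by listing it in a file under /etc/modules-load.d/. A minimal sketch; persist_module is a hypothetical helper, and the directory argument exists only so it can be tested outside /etc. Run it on both the controller and the compute node.

```shell
# persist_module NAME [DIR]  (hypothetical helper)
# Writes NAME into DIR/NAME.conf so systemd-modules-load loads the module
# at boot. DIR defaults to /etc/modules-load.d.
persist_module() {
    dir=${2:-/etc/modules-load.d}
    mkdir -p "$dir" && printf '%s\n' "$1" > "$dir/$1.conf"
}
```

Usage: persist_module br_netfilter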

#Configure the Compute service to use the Networking service

Edit the /etc/nova/nova.conf file and complete the following actions:

[root@openstack-compute1 ~]# vim /etc/nova/nova.conf

In the [neutron] section, configure access parameters:

[neutron]

# ...

auth_url = http://openstack-vip.tan.local:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = neutron

#Finalize installation

#Restart the Compute service:

[root@openstack-compute1 ~]# systemctl restart openstack-nova-compute.service

#Start the Linux bridge agent and configure it to start when the system boots:

[root@openstack-compute1 ~]# systemctl enable neutron-linuxbridge-agent.service

Created symlink from /etc/systemd/system/multi-user.target.wants/neutron-linuxbridge-agent.service to /usr/lib/systemd/system/neutron-linuxbridge-agent.service.

[root@openstack-compute1 ~]# systemctl start neutron-linuxbridge-agent.service

#Verify the neutron service

Perform these commands on the controller node.

#Source the admin credentials to gain access to admin-only CLI commands:

[root@openstack-controller1 ~]# . admin-openrc

#List the loaded extensions to verify that the neutron-server process started successfully:

[root@openstack-controller1 ~]# openstack extension list --network

+----------------------------------------------------------------------------------------------------------------------------------------------------------------+--------------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------+

| Name | Alias | Description |

+----------------------------------------------------------------------------------------------------------------------------------------------------------------+--------------------------------+----------------------------------------------------------------------------------------------------------------------------------------------------------+

| Subnet Pool Prefix Operations | subnetpool-prefix-ops | Provides support for adjusting the prefix list of subnet pools |

| Default Subnetpools | default-subnetpools | Provides ability to mark and use a subnetpool as the default. |

| Network IP Availability | network-ip-availability | Provides IP availability data for each network and subnet. |

| Network Availability Zone | network_availability_zone | Availability zone support for network. |

| Subnet Onboard | subnet_onboard | Provides support for onboarding subnets into subnet pools |

| Network MTU (writable) | net-mtu-writable | Provides a writable MTU attribute for a network resource. |

| Port Binding | binding | Expose port bindings of a virtual port to external application |

| agent | agent | The agent management extension. |

| Subnet Allocation | subnet_allocation | Enables allocation of subnets from a subnet pool |

| DHCP Agent Scheduler | dhcp_agent_scheduler | Schedule networks among dhcp agents |

| Neutron external network | external-net | Adds external network attribute to network resource. |

| Empty String Filtering Extension | empty-string-filtering | Allow filtering by attributes with empty string value |

| Neutron Service Flavors | flavors | Flavor specification for Neutron advanced services. |

| Network MTU | net-mtu | Provides MTU attribute for a network resource. |

| Availability Zone | availability_zone | The availability zone extension. |

| Quota management support | quotas | Expose functions for quotas management per tenant |

| Tag support for resources with standard attribute: subnet, trunk, network_segment_range, router, network, policy, subnetpool, port, security_group, floatingip | standard-attr-tag | Enables to set tag on resources with standard attribute. |

| Availability Zone Filter Extension | availability_zone_filter | Add filter parameters to AvailabilityZone resource |

| If-Match constraints based on revision_number | revision-if-match | Extension indicating that If-Match based on revision_number is supported. |

| Filter parameters validation | filter-validation | Provides validation on filter parameters. |

| Multi Provider Network | multi-provider | Expose mapping of virtual networks to multiple physical networks |

| Quota details management support | quota_details | Expose functions for quotas usage statistics per project |

| Address scope | address-scope | Address scopes extension. |

| Agent's Resource View Synced to Placement | agent-resources-synced | Stores success/failure of last sync to Placement |

| Subnet service types | subnet-service-types | Provides ability to set the subnet service_types field |