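Installing k8s with kubeadm

k8s Chinese documentation: http://docs.kubernetes.org.cn/

Kubernetes is an open-source container cluster management platform. It automates the deployment, scaling (out and in), and maintenance of container clusters.

With Kubernetes you can:

- Deploy applications quickly

- Scale applications quickly

- Roll out new application features seamlessly

- Save resources and optimize hardware utilization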

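2.1: k8s concepts and introduction

k8s design philosophy: layered architecture:

●Cloud-native ecosystem

●Interface layer: client libraries and utilities

●Governance layer: automation and policy management

●Application layer: deployment and routing

●Nucleus (core) layer: the Kubernetes API and execution environment (including the Container Runtime Interface CRI, Container Network Interface CNI, Container Storage Interface CSI, image registries, cloud providers, and identity providers)

k8s design philosophy: API design principles:

●All APIs should be declarative

●API objects complement each other and are composable

●High-level APIs are designed around operational intent

●Low-level APIs are designed according to the control needs of the high-level APIs

●Avoid simple wrappers, and avoid internal hidden mechanisms that cannot be seen explicitly through the external API

●The complexity of API operations is proportional to the number of objects

●API object state must not depend on network connection state

●Avoid making operational mechanisms depend on global state, because keeping global state synchronized in a distributed system is very hard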

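Core advantages of k8s

Automatic creation and deletion of containers driven by YAML files

Faster elastic horizontal scaling of services

Dynamic discovery of newly scaled-out containers, which are then made accessible to users

Simpler, faster upgrades and rollbacks of application code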

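Component overview

#Core components:

kube-apiserver: the single entry point for all resource operations; provides authentication, authorization, access control, API registration, and discovery

kube-controller-manager: maintains the cluster state, e.g. failure detection, auto-scaling, and rolling updates

kube-scheduler: schedules resources, placing Pods onto suitable machines according to predefined scheduling policies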

kubelet: maintains the container lifecycle and also manages volumes (CSI) and networking (CNI);

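Container runtime: manages images and actually runs Pods and containers (CRI);

kube-proxy: network proxy running on each node; maintains iptables or IPVS rules and provides in-cluster service discovery and load balancing for Services;

etcd: stores the state of the entire cluster, i.e. all k8s cluster data

#Optional components:

kube-dns: provides DNS for the whole cluster (replaced by CoreDNS in current versions)

Ingress Controller: provides an external entry point for Services

Heapster: resource monitoring (retired; metrics-server now provides resource metrics)

Dashboard: web GUI

Federation: clusters spanning availability zones

Fluentd-elasticsearch: cluster log collection, storage, and querying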

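kubectl command usage:

●Basic commands: create/delete/edit/get/describe/logs/exec/scale to create, delete, modify, and query resources; explain documents resource fields.

●Configuration commands: label manages labels; apply applies configuration declaratively.

●Cluster management commands: cluster-info/top show cluster status; cordon/uncordon/drain/taint manage nodes; api-resources/api-versions/version show API resources; config manages the client kubeconfig.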

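Several important k8s concepts:

●Objects: what does k8s work with? Objects, exposed through the k8s declarative API

●YAML files: how do we work with those objects? By submitting YAML files to the declarative API

●Required fields: which fields does a manifest declare?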

1.apiVersion

2.kind

3.metadata

4.spec

5.status (written by k8s to report the observed state; not set by the user)
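For illustration, a minimal Pod manifest using the fields above might look like this. The name nginx-demo and the image nginx:1.20 are assumptions chosen for the example; status is left out because the cluster writes it.

```yaml
# Minimal Pod manifest (illustrative; name and image are assumptions)
apiVersion: v1            # API group/version of the object
kind: Pod                 # object type
metadata:                 # identity: name, namespace, labels
  name: nginx-demo
  namespace: default
  labels:
    app: nginx-demo
spec:                     # desired state declared by the user
  containers:
  - name: nginx
    image: nginx:1.20
    ports:
    - containerPort: 80
# status is omitted: k8s fills it in with the observed state
```

After kubectl apply -f, running kubectl get pod nginx-demo -o yaml shows the status section that k8s has filled in.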

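Pod:

1. The pod is the smallest unit in k8s

2. A pod can run one or more containers

3. When a pod runs several containers, they are scheduled together

4. A pod is short-lived: it does not heal itself and is destroyed once it is done

5. Pods are usually created and managed through controllers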

Controllers:

replication controller #first-generation pod replica controller

replicaset #second-generation pod replica controller

deployment #third-generation pod replica controller

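ReplicationController (RC for short)

1. Automatically keeps the number of replicas consistent with the manifest

2. Monitors multiple pods across multiple nodes

3. Unlike manually created pods, pods created by an RC are automatically replaced when they fail, are deleted, or are terminated

4. Selectors support only equality-based matching: = and !=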

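ReplicaSet

1. The difference from RC is selector support: besides = and !=, selectors also support the set-based operators in and notin

2. ReplicaSet is the next-generation RC

3. Ensures that the specified number of pods is running at any time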
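A sketch of a ReplicaSet whose selector combines equality-based matchLabels with set-based In/NotIn expressions; all conditions must match. The names and labels are hypothetical.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend-rs              # hypothetical name
spec:
  replicas: 3
  selector:
    matchLabels:                 # equality-based: app = frontend
      app: frontend
    matchExpressions:            # set-based, ANDed with matchLabels
    - key: env
      operator: In
      values: ["test", "prod"]
    - key: tier
      operator: NotIn
      values: ["cache"]
  template:
    metadata:
      labels:                    # must satisfy every selector condition above
        app: frontend
        env: test
        tier: web
    spec:
      containers:
      - name: nginx
        image: nginx:1.20
```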

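Deployment:

1. A higher-level controller than RS; besides the RS functionality it offers many advanced features such as rolling updates and rollbacks

2. It manages ReplicaSets and pods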
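A hedged Deployment sketch showing the rolling-update settings mentioned above; the name nginx-deploy, the image, and the surge/unavailable values are illustrative assumptions.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy             # hypothetical name
spec:
  replicas: 3
  revisionHistoryLimit: 10       # old ReplicaSets kept so rollbacks are possible
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1                # at most 1 extra pod during the update
      maxUnavailable: 0          # never drop below the desired replica count
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.20
        ports:
        - containerPort: 80
```

kubectl set image deployment/nginx-deploy nginx=nginx:1.21 starts a rolling update, and kubectl rollout undo deployment/nginx-deploy returns to the previous revision.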

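DaemonSet:

Creates the same pod on every node of the k8s cluster; mainly used when the same task must run on every node, for example:

●log collection

●Prometheus monitoring agents

●flannel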
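A minimal DaemonSet sketch for the log-collection case. A real deployment would run an agent such as Fluentd; here busybox tailing /var/log/messages stands in as a hypothetical placeholder. The toleration lets the pod also run on the masters, which kubeadm v1.20 taints with node-role.kubernetes.io/master.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: log-agent                # hypothetical name
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: log-agent
  template:
    metadata:
      labels:
        app: log-agent
    spec:
      tolerations:
      # also schedule onto masters tainted NoSchedule by kubeadm
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: agent
        image: busybox:1.28      # placeholder for a real log agent
        command: ["sh", "-c", "tail -F /var/log/messages"]
        volumeMounts:
        - name: varlog
          mountPath: /var/log
          readOnly: true
      volumes:
      - name: varlog
        hostPath:                # the node's own log directory
          path: /var/log
```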

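StatefulSet

●Stateful services such as MySQL or MongoDB clusters are implemented with StatefulSets

●A StatefulSet is essentially a variant of Deployment and became GA in v1.9. It is designed for stateful services: the pods it manages have fixed names and a defined start/stop order, each pod's name serves as its network identity (hostname), and it also requires persistent storage.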
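A hedged sketch of a StatefulSet together with the headless Service that provides each pod's stable network identity, plus a volumeClaimTemplates entry that gives each pod its own PVC. nginx is used instead of MySQL to keep it short, and the StorageClass name nfs-sc is an assumption.

```yaml
# Headless Service (clusterIP: None): one DNS record per pod
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  clusterIP: None
  selector:
    app: web
  ports:
  - port: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: web               # ties pod hostnames to the headless Service
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: nginx
        image: nginx:1.20
        volumeMounts:
        - name: data
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:          # one PVC per pod: data-web-0, data-web-1
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      storageClassName: nfs-sc   # assumed StorageClass
      resources:
        requests:
          storage: 1Gi
```

Pods are created in order as web-0 then web-1, and each resolves as web-0.web.<namespace>.svc.<cluster domain>.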

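Service

1. Why: a pod's IP changes after it restarts, which breaks pod-to-pod access

2. What: decouples the service from the application instances

3. How: declare a Service object

4. Two kinds are commonly used:

a. Services for workloads inside the k8s cluster: a selector picks the pods and the endpoints are created automatically

b. Services for workloads outside the k8s cluster: create the endpoints manually, specifying the external service's IP, port, and protocol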
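The two kinds of Service above might be declared as follows. The names, port 3306, and the external address 10.0.0.200 are hypothetical; for case b the Endpoints object must have the same name as the Service.

```yaml
# a. In-cluster Service: the selector picks pods, Endpoints are created automatically
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
# b. Service for an external backend: no selector, so no automatic Endpoints
apiVersion: v1
kind: Service
metadata:
  name: external-mysql
spec:
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306
---
# Manually created Endpoints; the name must match the Service above
apiVersion: v1
kind: Endpoints
metadata:
  name: external-mysql
subsets:
- addresses:
  - ip: 10.0.0.200               # assumed external MySQL host
  ports:
  - port: 3306
    protocol: TCP
```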

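Volume

1. Why: decouple data from containers, and share data between containers

2. What: an object abstracted by k8s for storing data

3. Common volume types:

●emptyDir: local temporary volume

●hostPath: local host volume

●nfs, etc.: shared volumes

●configMap: configuration files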
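One pod mounting each of the listed volume types, as a sketch. The NFS server 10.0.0.200, its export path, and the ConfigMap nginx-conf are assumptions and must exist before the pod can start.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-demo              # hypothetical name
spec:
  containers:
  - name: app
    image: nginx:1.20
    volumeMounts:
    - name: cache
      mountPath: /cache
    - name: host-logs
      mountPath: /var/log/nginx
    - name: shared
      mountPath: /usr/share/nginx/html
    - name: conf
      mountPath: /etc/nginx/conf.d
  volumes:
  - name: cache
    emptyDir: {}                 # removed together with the pod
  - name: host-logs
    hostPath:                    # directory on the node running the pod
      path: /data/nginx-logs
      type: DirectoryOrCreate
  - name: shared
    nfs:                         # shared across pods and nodes
      server: 10.0.0.200         # assumed NFS server
      path: /data/nfs
  - name: conf
    configMap:
      name: nginx-conf           # assumed ConfigMap
```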

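PV and PVC overview

●A PV (PersistentVolume) is built on existing storage such as Ceph, NFS, or NAS. A PV is a cluster-wide resource that belongs to no namespace and is independent of pods

●A PVC (PersistentVolumeClaim) is how a pod requests and mounts a persistent volume. It consumes storage resources, is a namespaced resource, binds to a PV, and is independent of pods

●PVs and PVCs support three access modes: RWO (ReadWriteOnce), ROX (ReadOnlyMany), and RWX (ReadWriteMany)

●PV reclaim policies: Retain (keep the data), Recycle (scrub the volume for reuse; deprecated), and Delete (delete automatically)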
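A statically provisioned NFS PV and a PVC that binds to it, as a hedged example; the server address, path, names, and sizes are assumptions. storageClassName: "" keeps the claim from being served by a default StorageClass.

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-01                # cluster-scoped: no namespace
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany                # RWX
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 10.0.0.200           # assumed NFS server
    path: /data/k8s/pv01
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-pvc
  namespace: default             # PVCs are namespaced
spec:
  accessModes:
  - ReadWriteMany                # must be offered by the PV
  storageClassName: ""           # bind to a static PV, not a default StorageClass
  resources:
    requests:
      storage: 5Gi
```

A pod then uses the claim through a volume whose persistentVolumeClaim.claimName is data-pvc.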

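Pod probes

■Three probe handlers

●exec: runs a command (e.g. a script) inside the container; an exit status ($?) of 0 means healthy, anything else means failure

●tcpSocket: healthy if the port accepts connections, otherwise failure

●httpGet: healthy if the HTTP status code is >= 200 and < 400, otherwise failure

■Two probe types

●Liveness probe: on failure, the kubelet restarts the container

●Readiness probe: on failure, the pod is removed from the Service's endpoints, and it is added back automatically once it recovers. The two are usually used together.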
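A sketch combining the two probe types: an httpGet liveness probe and a tcpSocket readiness probe on an nginx container, with an exec variant shown as a comment. The path, port, and timing values are illustrative assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo               # hypothetical name
  labels:
    app: probe-demo
spec:
  containers:
  - name: nginx
    image: nginx:1.20
    ports:
    - containerPort: 80
    livenessProbe:               # failure -> kubelet restarts the container
      httpGet:                   # 200 <= status < 400 counts as success
        path: /index.html
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 3
      failureThreshold: 3
    readinessProbe:              # failure -> pod removed from Service endpoints
      tcpSocket:
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 3
    # exec variant: success when the command exits with 0
    # livenessProbe:
    #   exec:
    #     command: ["/bin/sh", "-c", "test -f /usr/share/nginx/html/index.html"]
```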

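Horizontal Pod Autoscaler (HPA): overview and implementation

●Manual scaling methods:

●edit the replica count in the YAML file

●change the pod count of the deployment in the dashboard

●the kubectl scale command

●the kubectl edit command

●run scaling commands on a schedule for the morning and evening peaks

●Automatic scaling methods:

●HPA

●The administrator runs kubectl autoscale to set the minimum and maximum number of pods, scaling on a CPU utilization target of 50%. This creates an HPA object.

●metrics-server collects container metrics and supplies them to the HPA; when average CPU utilization exceeds the 50% target, pods are scaled out

●Alternatively, define the HPA in a YAML file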
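The HPA described above might be written in YAML as in this sketch; it is equivalent to kubectl autoscale deployment nginx-deploy --min=2 --max=10 --cpu-percent=50. The Deployment name nginx-deploy and the 2 to 10 replica range are assumptions. metrics-server must be installed, and the target containers must declare resources.requests.cpu, otherwise the utilization percentage cannot be computed.

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa
spec:
  scaleTargetRef:                # the workload being scaled
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deploy           # assumed Deployment name
  minReplicas: 2
  maxReplicas: 10
  targetCPUUtilizationPercentage: 50   # average CPU usage vs. requests
```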

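k8s basic components: kube-dns and CoreDNS

●Usage: <service name>.<namespace name>.svc.<cluster domain suffix>

●For example: dashboard-metrics-scraper.kubernetes-dashboard.svc.magedu.local

●A Service name is defined in the YAML file and does not change. For cross-service calls, use the fully qualified domain name.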
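To check the naming scheme, a throwaway pod can resolve a Service by its full name. This sketch assumes the cluster domain tan.local set with --service-dns-domain in this installation and queries kube-dns, the Service name kubeadm gives CoreDNS; the busybox:1.28 image is an assumption.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dns-test                 # hypothetical name
spec:
  restartPolicy: Never           # run the lookup once and stop
  containers:
  - name: busybox
    image: busybox:1.28
    # <service>.<namespace>.svc.<cluster domain>
    command: ["nslookup", "kube-dns.kube-system.svc.tan.local"]
```

kubectl logs dns-test should then show the cluster IP of the kube-dns Service.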

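Master and node services in detail

kube-apiserver: provides the HTTP REST interfaces for creating, reading, updating, deleting, and watching all k8s resource objects, and is the data bus and data center of the whole system. It exposes port 6443 to other services, and also listens on the insecure port 127.0.0.1:8080 for local API calls (deprecated in newer versions).

kube-controller-manager: default insecure port 10252. It manages nodes, pod replicas, endpoints, namespaces, service accounts, and resource quotas in the cluster. When a node goes down unexpectedly, it detects this promptly and runs the automated repair process, keeping the cluster in the desired state. It communicates with kube-apiserver through the local port 8080.

kube-scheduler: schedules pods and bridges the layers above and below it. Upstream, it receives the new pods created by the controller manager and selects a suitable node for each; downstream, once a node is selected, the kubelet on that node takes over the pod's lifecycle. Scheduling preferences: 1) the node with the least resource consumption; 2) nodes carrying specified labels; 3) the node with the most balanced resource utilization.

kubelet: runs on every node, handles the tasks the master assigns to that node, and manages pods and their containers. It registers the node with the apiserver, periodically reports the node's resource usage to the master, and monitors containers and node resources through cAdvisor.

kube-proxy: runs on every node, watches the apiserver for changes to Service objects, and forwards traffic by managing iptables (or IPVS) rules. Depending on the version, it supports three proxy modes: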

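Services implemented with iptables and IPVS

●userspace: obsolete since k8s v1.2

●iptables: supported since v1.1 and the default since v1.2. kube-proxy watches the Kubernetes master for added and removed Service and Endpoints objects. For each Service it creates iptables rules that forward traffic sent to the Service cluster IP to the corresponding port of the backend pods. Note: although you can reach the backend pods through the Service's cluster IP and port, the cluster IP cannot be pinged, because it exists only as iptables rules and is not bound to any network device. In IPVS mode the cluster IP can be pinged.

●ipvs: introduced in v1.9 and GA in v1.11. It is more efficient than iptables but requires the ipvsadm and ipset packages on each node and the ip_vs kernel modules loaded. When kube-proxy starts in IPVS mode, it verifies that the IPVS modules are present and falls back to iptables proxy mode if they are not. In IPVS mode, kube-proxy watches Kubernetes Service and Endpoints objects, calls the host's netlink interface to create the corresponding IPVS rules, and periodically synchronizes them with the Service and Endpoints objects so the IPVS state matches the desired state. When a Service is accessed, traffic is redirected to one of the backend pods. IPVS uses hash tables as its underlying data structure and works in kernel space, so it redirects traffic faster and performs better when synchronizing proxy rules. It also offers more load-balancing algorithms, such as rr, lc, dh, sh, sed, and nq. Check the loaded modules with: lsmod | grep ip_vs

●Even in IPVS mode some iptables rules remain, namely the NodePort-to-Service forwarding rules. For example, if the nginx Service listens on host port 30012, iptables forwards traffic on host port 30012 to port 80 of the container.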
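As a hedged sketch, kubeadm can start kube-proxy in IPVS mode if a KubeProxyConfiguration document is appended to the kubeadm-init.yaml file used for file-based initialization. This assumes ipvsadm and ipset are installed and the ip_vs modules are loaded on every node; otherwise kube-proxy falls back to iptables as described above.

```yaml
---
# Appended after the ClusterConfiguration document in kubeadm-init.yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
ipvs:
  scheduler: rr                  # round robin; lc, dh, sh, sed, nq are alternatives
```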

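etcd overview

●A highly available distributed key-value database; etcd uses the Raft protocol as its consensus algorithm and is written in Go

●Listening ports: 2379 for client requests, 2380 for peer (cluster member) communication

●The etcdctl command creates, reads, updates, and deletes etcd data

●etcd's watch mechanism for data

●etcd data backup and restore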

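Network plug-in: flannel overview (UDP mode should not be used)

●An open-source network service for k8s from CoreOS. Its goal is to let pods on different hosts in a k8s cluster communicate with each other. It uses etcd (or the Kubernetes API) to track IP address allocation and assigns each node a different subnet.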

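●Within one k8s cluster every pod gets a unique IP address and every node is assigned a subnet. flannel assigns each node a /24; calico allocates addresses in blocks (/26 by default).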

●flannel network models (backends): flannel currently implements three: UDP, VXLAN, and host-gw

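●UDP mode: packets are forwarded across hosts in UDP encapsulation. Traffic goes through the CNI virtual bridge; flannel0 (a TUN overlay device) encapsulates the container source/destination addresses once, and eth0 encapsulates the host source/destination addresses again. After these two encapsulations, the receiving host decapsulates twice. Security and performance are somewhat lacking.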

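●VXLAN mode: the Linux kernel added VXLAN support in v3.7.0 at the end of 2012, so newer versions of flannel switched from UDP to VXLAN. VXLAN is essentially a tunneling protocol that builds a virtual layer-2 network on top of a layer-3 network. flannel's network model is now a VXLAN-based overlay network.

●host-gw mode: forwards packets by creating, on each node, routes to the destination container subnets. This requires all nodes to be on the same LAN (layer-2 network), so it is unsuitable for networks that change frequently or are large, but it performs well.

●VXLAN with direct routing (DirectRouting): when nodes share a layer-2 network, route -n shows that traffic bypasses flannel.1, skipping one layer of encapsulation.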
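The backend is chosen in the net-conf.json key of the kube-flannel-cfg ConfigMap inside kube-flannel.yml. A hedged excerpt showing only that key with VXLAN plus DirectRouting, assuming the 10.100.0.0/16 pod CIDR used in this installation; edit the key inside kube-flannel.yml rather than applying this fragment alone, because that would drop the ConfigMap's other key (cni-conf.json).

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
data:
  # "Network" must equal the --pod-network-cidr given to kubeadm init
  # Use "Type": "host-gw" instead for pure host routing on one L2 network
  net-conf.json: |
    {
      "Network": "10.100.0.0/16",
      "Backend": {
        "Type": "vxlan",
        "DirectRouting": true
      }
    }
```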

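Network plug-in: calico in detail

●calico supports network security policies; flannel does not. Most people consider calico to perform slightly better than flannel.

●calico is a pure layer-3 networking solution that provides cross-node communication for containers. calico treats each node as a vRouter; nodes learn routes from each other via BGP (Border Gateway Protocol) and install the routing rules locally, connecting pods on different nodes.

●BGP is a decentralized protocol that keeps the network available by learning and maintaining routing tables automatically. Not every network supports BGP, however. To manage larger networks that span network segments, calico also supports an IP-in-IP overlay model (IPIP for short), which builds a tunnel between nodes to connect the two networks. When IPIP is enabled, a virtual network interface named tunl0 is created on each node. Whether IPIP is enabled is set in the calico configuration file. If your k8s nodes do not span subnets, it is recommended to disable IPIP and use the hosts themselves as virtual routers without creating extra tunnels.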
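A hedged sketch of a calico IPPool with IPIP disabled, applied with calicoctl apply -f. The CIDR assumes this installation's 10.100.0.0/16 pod network; in the calico.yaml manifest the same choice is made at install time through the CALICO_IPV4POOL_IPIP environment variable of calico-node.

```yaml
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: default-ipv4-ippool
spec:
  cidr: 10.100.0.0/16            # assumed to match --pod-network-cidr
  blockSize: 26                  # per-node address block size
  ipipMode: Never                # Always | CrossSubnet | Never
  natOutgoing: true              # SNAT pod traffic leaving the pool
  nodeSelector: all()
```

CrossSubnet is a middle ground: IPIP is used only between nodes in different subnets, and plain BGP routing is used within a subnet.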

Deployment methods

kubeadm: simple to deploy, official tool. Use cases: development and test environments.

https://www.kubernetes.org.cn/tags/kubeadm Kubernetes Chinese community, kubeadm installation

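Install automatically with kubeadm, the official k8s deployment tool: install docker and the other components on the master and node hosts, then initialize. The control-plane services on the masters run as static pods and kube-proxy runs as a pod on every node; kubelet itself runs as a system service.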

ansible: the kubeasz project on GitHub is recommended; it makes later maintenance of kubernetes easy:

●add masters

●add nodes

●upgrade k8s

container storage interface(CSI)

container network interface(CNI)

container runtime interface(CRI)

Master and node hosts.

●Master nodes do not store data; the data lives in the etcd cluster.

●Node hosts run the containers.

●To keep container workloads from affecting the masters, masters do not run application containers.

●Masters run kube-controller-manager, kube-scheduler, and kube-apiserver.

●Nodes run kube-proxy and kubelet.

https://kubernetes.io/zh/docs/setup/production-environment/container-runtimes/ #CRI runtime: docker is chosen here

https://kubernetes.io/zh-cn/docs/concepts/cluster-administration/addons/ #CNI: choose flannel or calico

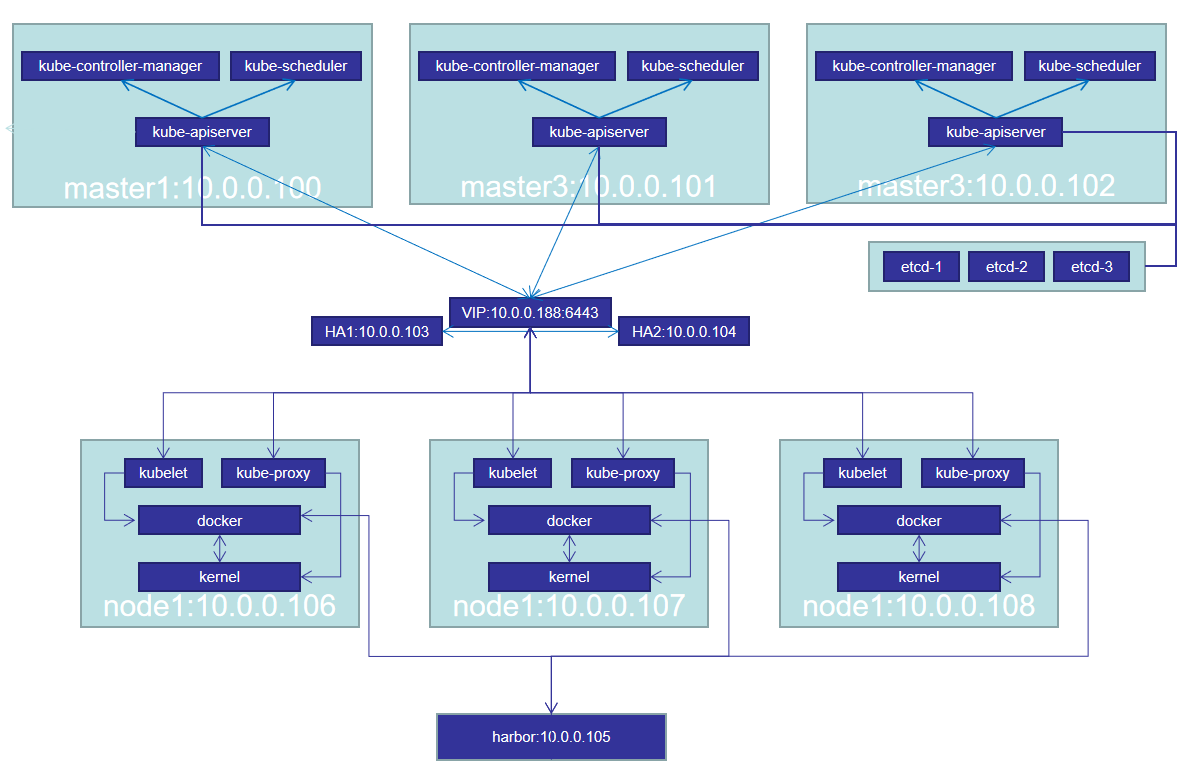

2.2: k8s cluster architecture diagram:

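2.3: Environment preparation

Use CentOS 8 with a minimal base install; disable the firewall, SELinux, and swap; update the package repositories; synchronize time; install common tools; then reboot and verify the basic configuration.

Role Hostname IP address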

k8s-master1 kubeadm-master1.example.local 10.0.0.100

k8s-master2 kubeadm-master2.example.local 10.0.0.101

k8s-master3 kubeadm-master3.example.local 10.0.0.102

ha1 ha1.example.local 10.0.0.103

ha2 ha2.example.local 10.0.0.104

harbor harbor.example.local 10.0.0.105

node1 node1.example.local 10.0.0.106

node2 node2.example.local 10.0.0.107

node3 node3.example.local 10.0.0.108

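2.4: Steps

1. Prepare the base environment

2. Deploy harbor, and HAProxy as a highly available reverse proxy, so that the API entry point of the control-plane nodes is highly available

3. Install the specified versions of kubeadm, kubelet, kubectl, and docker on all master nodes

4. Install the specified versions of kubeadm, kubelet, and docker on all node hosts. kubectl is optional on nodes; install it only if you need to run kubectl on a node to manage the cluster, pods, and so on.

5. Run the kubeadm init command on a master node

6. Verify the master node status

7. On each node, use kubeadm to join the k8s master (this requires the token generated on the master)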

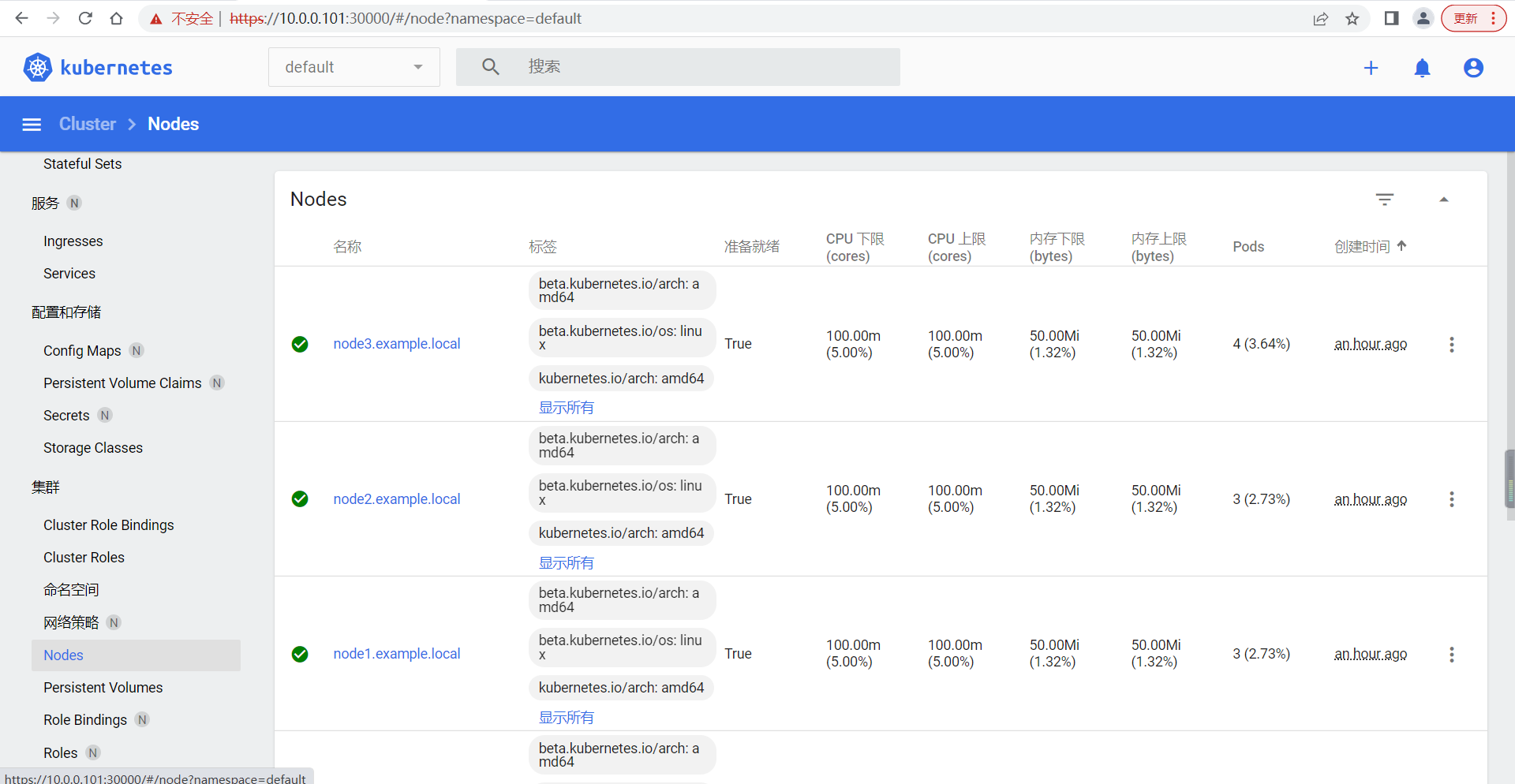

8. Verify the node status

9. Create pods and test network communication

10. Deploy the Dashboard web service

11. k8s cluster upgrade example

2.5: Environment configuration

Check the OS version

[root@centos8 ~]#cat /etc/centos-release

CentOS Linux release 8.3.2011

Set the hostname

[root@centos8 ~]#hostnamectl set-hostname kubeadm-master1.example.local

#Disconnect the remote session and reconnect

Configure the network

[root@centos8 ~]#cat /etc/sysconfig/network-scripts/ifcfg-eth0

TYPE=Ethernet

BOOTPROTO=static

NAME=eth0

DEVICE=eth0

IPADDR=10.0.0.100

PREFIX=24

GATEWAY=10.0.0.2

DNS1=10.0.0.2

DNS2=180.76.76.76

ONBOOT=yes

[root@centos8 ~]#nmcli c reload eth0

[root@centos8 ~]#nmcli c up eth0

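Disable swap: comment out the last line (the swap entry) in /etc/fstab. This takes effect after a reboot; to turn swap off immediately, run swapoff -a.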

[root@centos8 ~]#cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Mon Jul 19 12:39:26 2021

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

UUID=a09f483f-5645-4bf5-84e6-74ff560bfcab / xfs defaults 0 0

UUID=a7cdba8a-1b4f-4cf3-ace5-809ec6b732d6 /boot ext4 defaults 1 2

UUID=b1482237-a49f-4e3b-a534-bb7a1325b7d6 /date xfs defaults 0 0

#UUID=30f25ee0-b7f6-47b5-978d-bc2a3d33e1d4 none swap defaults 0 0

Configure the yum repositories

[root@centos8 ~]#mkdir /etc/yum.repos.d/bak

[root@centos8 ~]#mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak/

[root@centos8 bak]#curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-8.repo

Disable the firewall and SELinux

[root@centos8 ~]#systemctl disable firewalld

[root@centos8 ~]#setenforce 0

[root@centos8 ~]#cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX=disabled

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

Tune kernel parameters so that bridged IPv4 traffic is passed to the iptables chains

[root@centos8 ~]#vim /etc/sysctl.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@centos8 ~]#modprobe br_netfilter

[root@centos8 ~]#sysctl -p

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

Install common packages

[root@centos8 ~]#yum install vim bash-completion net-tools gcc -y

Time synchronization

[root@centos8 ~]#systemctl start chronyd

[root@centos8 ~]#timedatectl

Local time: Thu 2022-09-15 17:40:00 CST

Universal time: Thu 2022-09-15 09:40:00 UTC

RTC time: Thu 2022-09-15 09:39:59

Time zone: Asia/Shanghai (CST, +0800)

System clock synchronized: yes

NTP service: active

RTC in local TZ: no

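2.6: Highly available reverse proxy

Build a highly available reverse-proxy environment with keepalived and HAProxy to provide a highly available entry point for the k8s apiserver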

[root@ha1 ~]#yum install -y keepalived haproxy

[root@ha2 ~]#yum install -y keepalived haproxy

[root@ha1 ~]#vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.188 dev eth0 label eth0:1

}

}

[root@ha1 ~]#scp /etc/keepalived/keepalived.conf 10.0.0.104:/etc/keepalived/keepalived.conf

[root@ha2 ~]#vim /etc/keepalived/keepalived.conf

priority 80

[root@ha1 ~]#grep -v "^#" /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

ssl-default-bind-ciphers PROFILE=SYSTEM

ssl-default-server-ciphers PROFILE=SYSTEM

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

listen stats

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth haadmin:123456

listen k8s-6443

bind 10.0.0.188:6443

mode tcp

server 10.0.0.100 10.0.0.100:6443 check inter 2s fall 3 rise 5

server 10.0.0.101 10.0.0.101:6443 check inter 2s fall 3 rise 5

server 10.0.0.102 10.0.0.102:6443 check inter 2s fall 3 rise 5

[root@ha1 ~]#scp /etc/haproxy/haproxy.cfg 10.0.0.104:/etc/haproxy/haproxy.cfg

[root@ha1 ~]#systemctl enable --now keepalived

[root@ha1 ~]#systemctl enable --now haproxy

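#Set a kernel parameter so applications can bind to an IP address the host does not currently hold. ha2 does not have the VIP right now, but HAProxy still needs to bind to it.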

[root@ha1 ~]#echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf

[root@ha1 ~]#sysctl -p

[root@ha2 ~]#echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf

[root@ha2 ~]#sysctl -p

[root@ha2 ~]#systemctl enable --now keepalived

[root@ha2 ~]#systemctl enable --now haproxy

2.7: Configure harbor

#Install docker

[root@harbor ~]#yum install -y yum-utils device-mapper-persistent-data lvm2

[root@harbor ~]#yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@harbor ~]#wget https://download.docker.com/linux/centos/7/x86_64/edge/Packages/containerd.io-1.2.6-3.3.el7.x86_64.rpm

#The download is slow; download it once and use scp to copy it to the other nodes that need docker.

[root@harbor ~]#yum install containerd.io-1.2.6-3.3.el7.x86_64.rpm -y

[root@harbor ~]#yum install -y docker-ce --nobest

[root@harbor ~]#systemctl start docker && systemctl enable docker

#Configure a registry mirror (pull accelerator)

[root@harbor ~]#mkdir -p /etc/docker

[root@harbor ~]#vim /etc/docker/daemon.json

[root@harbor ~]#cat /etc/docker/daemon.json

{

"registry-mirrors":["https://fl791z1h.mirror.aliyuncs.com"]

}

[root@harbor ~]#systemctl daemon-reload

[root@harbor ~]#systemctl restart docker

#Download docker-compose

[root@harbor ~]#curl -L https://get.daocloud.io/docker/compose/releases/download/1.25.4/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

[root@harbor ~]#chmod +x /usr/local/bin/docker-compose

#Download and install harbor

[root@harbor ~]#wget https://github.com/goharbor/harbor/releases/download/v2.3.4/harbor-offline-installer-v2.3.4.tgz

[root@harbor ~]#mkdir /app

[root@harbor ~]#tar xf harbor-offline-installer-v2.3.4.tgz -C /app

[root@harbor ~]#cp /app/harbor/harbor.yml.tmpl /app/harbor/harbor.yml

[root@harbor ~]#vim /app/harbor/harbor.yml

Set hostname to the private IP, set port to 5000, and comment out the https section.

[root@harbor ~]#/app/harbor/install.sh

#Verify

[root@harbor ~]#cd /app/harbor/

[root@harbor harbor]#docker-compose ps

Name Command State Ports

------------------------------------------------------------------------------------------

harbor-core /harbor/entrypoint.sh Up (healthy)

harbor-db /docker-entrypoint.sh 96 13 Up (healthy)

harbor-jobservice /harbor/entrypoint.sh Up (healthy)

harbor-log /bin/sh -c /usr/local/bin/ Up (healthy) 127.0.0.1:1514->10514/tcp

...

harbor-portal nginx -g daemon off; Up (healthy)

nginx nginx -g daemon off; Up (healthy) 0.0.0.0:5000->8080/tcp

redis redis-server /etc/redis.conf Up (healthy)

registry /home/harbor/entrypoint.sh Up (healthy)

registryctl /home/harbor/start.sh Up (healthy)

#Log in to harbor

[root@harbor harbor]#docker login 10.0.0.105:5000

#Configure docker to trust the harbor registry as an insecure (HTTP) registry

[root@harbor harbor]#vim /lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --insecure-registry 10.0.0.105

[root@harbor harbor]#systemctl daemon-reload

[root@harbor harbor]#systemctl restart docker

[root@harbor harbor]#docker info

Insecure Registries:

10.0.0.105

[root@harbor harbor]#docker-compose down

[root@harbor harbor]#docker-compose up -d

[root@harbor harbor]#docker-compose ps

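2.8: Install kubeadm and related components

#Install kubeadm, kubelet, kubectl, docker, and related components on the master and node hosts; the load-balancer hosts do not need them. Install a validated docker version on every master and node.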

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.17.md#v11711

Update the latest validated version of Docker to 19.03 (#84476, @neolit123)

#Install docker-ce from the Aliyun mirror

https://developer.aliyun.com/mirror/docker-ce?spm=a2c6h.13651102.0.0.655e1b11Tw5Afz

[root@kubeadm-master1 ~]#yum install -y yum-utils device-mapper-persistent-data lvm2

[root@kubeadm-master1 ~]#yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@kubeadm-master1 ~]#yum install containerd.io-1.2.6-3.3.el7.x86_64.rpm -y

[root@kubeadm-master1 ~]#yum install -y docker-ce --nobest

[root@kubeadm-master1 ~]#systemctl start docker && systemctl enable docker

#Configure the registry mirror, the insecure registry, and the systemd cgroup driver

[root@kubeadm-master1 ~]#mkdir -p /etc/docker

[root@kubeadm-master1 ~]#vim /etc/docker/daemon.json

{

"registry-mirrors":["https://fl791z1h.mirror.aliyuncs.com"],

"insecure-registries": ["10.0.0.105"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

[root@kubeadm-master1 ~]#systemctl daemon-reload && systemctl restart docker

[root@kubeadm-master1 ~]#docker version

Server: Docker Engine - Community

Engine:

Version: 19.03.15

#On all nodes, configure the Aliyun kubernetes repository and install the components; kubectl is optional on worker nodes

https://developer.aliyun.com/mirror/kubernetes?spm=a2c6h.13651102.0.0.3e221b11Otippu

[root@kubeadm-master1 ~]#cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

#List the available versions

[root@kubeadm-master1 ~]#yum --showduplicates list kubeadm

[root@kubeadm-master1 ~]#yum install kubectl-1.20.5-0 kubelet-1.20.5-0 kubeadm-1.20.5-0 -y

[root@kubeadm-master1 ~]#systemctl enable kubelet

[root@kubeadm-master1 ~]#kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.5"

2.9: Initialize the cluster

#Initialize the cluster on any one of the masters; initialization is done only once.

#kubeadm command usage

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/ #command options and help

[root@kubeadm-master1 ~]#kubeadm --help

Available Commands:

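alpha #kubeadm commands that are still experimental

completion #bash command completion; requires bash-completion

config #manages the kubeadm cluster configuration, which is persisted in a ConfigMap in the cluster

help #Help about any command

init #initializes a Kubernetes control plane

join #joins a node to an existing k8s master

reset #reverts the changes made to the host by kubeadm init or kubeadm join

token #manages tokens

upgrade #upgrades the k8s version

version #shows version information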

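#kubeadm init command usage

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/ #command usage

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-init/ #cluster initialization

[root@kubeadm-master1 ~]#kubeadm init --help

## --apiserver-advertise-address string #local IP address the K8S API server advertises and listens on

## --apiserver-bind-port int32 #port the API server binds to; default 6443

--apiserver-cert-extra-sans stringSlice #optional extra Subject Alternative Names for the API server serving certificate; can be IP addresses or DNS names

--cert-dir string #path where certificates are stored; default /etc/kubernetes/pki

--certificate-key string #key used to encrypt the control-plane certificates in the kubeadm-certs Secret

--config string #path to a kubeadm configuration file

##--control-plane-endpoint string #a stable IP address or DNS name for the control plane, i.e. a long-lived, highly available VIP or domain name; k8s multi-master HA is built on this option

--cri-socket string #path to the CRI (Container Runtime Interface) socket to connect to. If empty, kubeadm tries to auto-detect it; "use this option only if you have more than one CRI installed or a non-standard CRI socket"

--dry-run #don't apply any changes; just print what would be done (a test run)

--experimental-kustomize string #path to the kustomize patches for the static pod manifests

--feature-gates string #a set of key=value pairs describing feature gates; options are: IPv6DualStack=true|false (ALPHA - default=false)

##--ignore-preflight-errors strings #ignore errors from the preflight checks, e.g. swap; all ignores every check

##--image-repository string #image registry to pull from; default k8s.gcr.io

##--kubernetes-version string #k8s version to install; default stable-1

--node-name string #node name

##--pod-network-cidr #pod IP address range

##--service-cidr #Service network address range

##--service-dns-domain string #internal k8s domain, default cluster.local; the DNS service (kube-dns/coredns) resolves the records generated under it

--skip-certificate-key-print #don't print the key used for encryption

--skip-phases strings #phases to skip

--skip-token-print #skip printing the token

--token #specify the token

--token-ttl #token lifetime, default 24 hours; 0 means it never expires

--upload-certs #upload the control-plane certificates to the kubeadm-certs Secret

#Global options:

--add-dir-header #if true, adds the file directory to the log header

--log-file string #if non-empty, use this log file

--log-file-max-size uint #maximum log file size in megabytes, default 1800; 0 means no limit

--rootfs #path to the real host root filesystem (experimental)

--skip-headers #if true, omit the header prefixes in log messages

--skip-log-headers #if true, omit headers when opening log files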

#Highly available master initialization

[root@kubeadm-master1 ~]#kubeadm init --apiserver-advertise-address=10.0.0.100 --control-plane-endpoint=10.0.0.188 --apiserver-bind-port=6443 --kubernetes-version=v1.20.5 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=tan.local --image-repository=registry.aliyuncs.com/google_containers --ignore-preflight-errors=swap

#Cluster initialization output

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 10.0.0.188:6443 --token lb7w9h.fhfhjn2edax3a8ck \

--discovery-token-ca-cert-hash sha256:f48441950109cc670ab1da7be745b39ed0f95196c23d68e2de087b03989fdcb2 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.188:6443 --token lb7w9h.fhfhjn2edax3a8ck \

--discovery-token-ca-cert-hash sha256:f48441950109cc670ab1da7be745b39ed0f95196c23d68e2de087b03989fdcb2

#Alternatively, initialize the master from a configuration file. Use either method, not both.

[root@kubeadm-master1 ~]#kubeadm config print init-defaults >kubeadm-init.yaml

[root@kubeadm-master1 ~]#vim kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.0.100
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: kubeadm-master1.example.local
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 10.0.0.188:6443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.5
networking:
  dnsDomain: tan.local
  podSubnet: 10.100.0.0/16
  serviceSubnet: 10.200.0.0/16
scheduler: {}

[root@kubeadm-master1 ~]#kubeadm init --config kubeadm-init.yaml

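2.10: Configure the kube-config file and the network plug-in

#Whether the k8s environment was initialized with the command or with a file, and whether it is a single master or a cluster, you must configure the kube-config file and a network plug-in.

#kube-config file: contains the kube-apiserver address and the related authentication information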

[root@kubeadm-master1 ~]#mkdir -p $HOME/.kube

[root@kubeadm-master1 ~]#sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@kubeadm-master1 ~]#sudo chown $(id -u):$(id -g) $HOME/.kube/config

#Run the following command to enable kubectl auto-completion in the current shell

[root@kubeadm-master1 ~]#source <(kubectl completion bash)

[root@kubeadm-master1 ~]#kubectl get node

NAME STATUS ROLES AGE VERSION

kubeadm-master1.example.local NotReady control-plane,master 13m v1.20.5

[root@kubeadm-master1 ~]#kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f89b7bc75-9j742 0/1 Pending 0 16m

kube-system coredns-7f89b7bc75-kfmrk 0/1 Pending 0 16m

kube-system etcd-kubeadm-master1.example.local 1/1 Running 0 16m

kube-system kube-apiserver-kubeadm-master1.example.local 1/1 Running 0 16m

kube-system kube-controller-manager-kubeadm-master1.example.local 1/1 Running 0 16m

kube-system kube-proxy-vp64b 1/1 Running 0 16m

kube-system kube-scheduler-kubeadm-master1.example.local 1/1 Running 0 16m

#Deploy the network plug-in

https://kubernetes.io/zh/docs/concepts/cluster-administration/addons/ #network add-ons supported by kubernetes

https://github.com/flannel-io/flannel #flannel GitHub project

#URL of the flannel yaml file

https://github.com/flannel-io/flannel/blob/3903d9e9c17a2f0db9d8ac0d6edc2e56357da67b/Documentation/kube-flannel.yml

#URL of the calico yaml file

https://docs.projectcalico.org/manifests/calico.yaml

#Get the flannel yaml file from GitHub: open it in a browser and copy its content into kube-flannel.yml.

https://github.com/flannel-io/flannel/blob/3903d9e9c17a2f0db9d8ac0d6edc2e56357da67b/Documentation/kube-flannel.yml

[root@kubeadm-master1 ~]#vim kube-flannel.yml

Change "Network": "10.244.0.0/16" to the cluster pod CIDR set by --pod-network-cidr: "Network": "10.100.0.0/16"

#Deploy flannel

[root@kubeadm-master1 ~]#kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

#Once flannel is fully deployed, coredns changes to Running.

[root@kubeadm-master1 ~]#kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-hj6g4 0/1 Init:1/2 0 32s

kube-system coredns-7f89b7bc75-9j742 0/1 Pending 0 38m

kube-system coredns-7f89b7bc75-kfmrk 0/1 Pending 0 38m

kube-system etcd-kubeadm-master1.example.local 1/1 Running 0 38m

kube-system kube-apiserver-kubeadm-master1.example.local 1/1 Running 0 38m

kube-system kube-controller-manager-kubeadm-master1.example.local 1/1 Running 0 38m

kube-system kube-proxy-vp64b 1/1 Running 0 38m

kube-system kube-scheduler-kubeadm-master1.example.local 1/1 Running 0 38m

[root@kubeadm-master1 ~]#kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-hj6g4 1/1 Running 0 111s

kube-system coredns-7f89b7bc75-9j742 1/1 Running 0 39m

kube-system coredns-7f89b7bc75-kfmrk 1/1 Running 0 39m

kube-system etcd-kubeadm-master1.example.local 1/1 Running 0 39m

kube-system kube-apiserver-kubeadm-master1.example.local 1/1 Running 0 39m

kube-system kube-controller-manager-kubeadm-master1.example.local 1/1 Running 0 39m

kube-system kube-proxy-vp64b 1/1 Running 0 39m

kube-system kube-scheduler-kubeadm-master1.example.local 1/1 Running 0 39m

#On the current master, upload the certificates and print the certificate key used to add new control-plane nodes:

[root@kubeadm-master1 ~]#kubeadm init phase upload-certs --upload-certs

W0916 11:24:28.525992 24180 version.go:102] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get "https://storage.googleapis.com/kubernetes-release/release/stable-1.txt": dial tcp [::1]:443: connect: connection refused

W0916 11:24:28.526044 24180 version.go:103] falling back to the local client version: v1.20.5

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

432a6ec33740451b5820e756ff6c8309a69b96f070464d19417f55d319a9a87f

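2.11: Add nodes to the k8s cluster

#Add the other master nodes and the worker nodes to the k8s cluster.

#Run the following on each additional master that already has docker, kubeadm, and kubelet installed:

#master2: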

[root@kubeadm-master2 ~]#kubeadm join 10.0.0.188:6443 --token lb7w9h.fhfhjn2edax3a8ck --discovery-token-ca-cert-hash sha256:f48441950109cc670ab1da7be745b39ed0f95196c23d68e2de087b03989fdcb2 --control-plane --certificate-key 432a6ec33740451b5820e756ff6c8309a69b96f070464d19417f55d319a9a87f

#master3:

[root@kubeadm-master3 ~]#kubeadm join 10.0.0.188:6443 --token lb7w9h.fhfhjn2edax3a8ck --discovery-token-ca-cert-hash sha256:f48441950109cc670ab1da7be745b39ed0f95196c23d68e2de087b03989fdcb2 --control-plane --certificate-key 432a6ec33740451b5820e756ff6c8309a69b96f070464d19417f55d319a9a87f

#Check node information

[root@kubeadm-master1 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubeadm-master1.example.local Ready control-plane,master 55m v1.20.5

kubeadm-master2.example.local Ready control-plane,master 98s v1.20.5

kubeadm-master3.example.local Ready control-plane,master 32s v1.20.5

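#Add worker nodes

#Every node joining the k8s master cluster needs docker, kubeadm, and kubelet, so repeat the installation steps on each: configure the yum repositories, configure the docker registry mirror, install the packages, and start the kubelet service. The join command is the one printed by kubeadm init on the master when initialization completes.

#Add node1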

[root@node1 ~]#kubeadm join 10.0.0.188:6443 --token lb7w9h.fhfhjn2edax3a8ck --discovery-token-ca-cert-hash sha256:f48441950109cc670ab1da7be745b39ed0f95196c23d68e2de087b03989fdcb2

#Add node2

[root@node2 ~]#kubeadm join 10.0.0.188:6443 --token lb7w9h.fhfhjn2edax3a8ck --discovery-token-ca-cert-hash sha256:f48441950109cc670ab1da7be745b39ed0f95196c23d68e2de087b03989fdcb2

#Add node3

[root@node3 ~]#kubeadm join 10.0.0.188:6443 --token lb7w9h.fhfhjn2edax3a8ck --discovery-token-ca-cert-hash sha256:f48441950109cc670ab1da7be745b39ed0f95196c23d68e2de087b03989fdcb2

#Verify node information

#Each node joins the master automatically, pulls the images, and starts flannel, until the master finally shows the node as Ready.

[root@kubeadm-master1 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubeadm-master1.example.local Ready control-plane,master 60m v1.20.5

kubeadm-master2.example.local Ready control-plane,master 6m42s v1.20.5

kubeadm-master3.example.local Ready control-plane,master 5m36s v1.20.5

node1.example.local Ready <none> 119s v1.20.5

node2.example.local Ready <none> 69s v1.20.5

node3.example.local Ready <none> 67s v1.20.5

#Check the status of the certificate signing requests (CSR)

[root@kubeadm-master1 ~]#kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

csr-758gv 9m20s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:lb7w9h Approved,Issued

csr-gjb6w 3m46s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:lb7w9h Approved,Issued

csr-hcwbk 8m13s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:lb7w9h Approved,Issued

csr-kszbx 3m44s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:lb7w9h Approved,Issued

csr-qqv6c 62m kubernetes.io/kube-apiserver-client-kubelet system:node:kubeadm-master1.example.local Approved,Issued

csr-zbv46 4m36s kubernetes.io/kube-apiserver-client-kubelet system:bootstrap:lb7w9h Approved,Issued

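#Create containers in k8s to test the internal network

Create test containers to check whether network connections work:

Note: on a single-master cluster, allow pods to run on the master: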

#kubectl taint nodes --all node-role.kubernetes.io/master-

[root@kubeadm-master1 ~]#kubectl run net-test1 --image=alpine sleep 360000

pod/net-test1 created

[root@kubeadm-master1 ~]#kubectl run net-test2 --image=alpine sleep 360000

pod/net-test2 created

[root@kubeadm-master1 ~]#kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1 1/1 Running 0 28s 10.100.5.2 node3.example.local <none> <none>

net-test2 1/1 Running 0 20s 10.100.4.2 node2.example.local <none> <none>

[root@kubeadm-master1 ~]#kubectl exec -it net-test1 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 16:C3:F6:29:F5:D1

inet addr:10.100.5.2 Bcast:10.100.5.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1450 Metric:1

RX packets:43 errors:0 dropped:0 overruns:0 frame:0

TX packets:1 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:6026 (5.8 KiB) TX bytes:42 (42.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

#Verify internal network connectivity to the other container

/ # ping 10.100.4.2

PING 10.100.4.2 (10.100.4.2): 56 data bytes

64 bytes from 10.100.4.2: seq=0 ttl=62 time=0.816 ms

64 bytes from 10.100.4.2: seq=1 ttl=62 time=0.369 ms

64 bytes from 10.100.4.2: seq=2 ttl=62 time=0.408 ms

^C

--- 10.100.4.2 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.369/0.531/0.816 ms

#Verify connectivity to the Internet (Baidu)

/ # ping www.baidu.com

PING www.baidu.com (36.152.44.95): 56 data bytes

64 bytes from 36.152.44.95: seq=0 ttl=127 time=35.853 ms

64 bytes from 36.152.44.95: seq=1 ttl=127 time=36.257 ms

^C

--- www.baidu.com ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 35.853/36.055/36.257 ms
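For repeatable checks, the loss figure can be read from ping's summary line. Alpine's busybox ping and Debian's iputils ping format that line differently. Both carry `N% packet loss`, so one pattern covers both. This is a sketch with hypothetical helper names. In a pod you would feed it real output, e.g. `ping -c 3 10.100.4.2 | packet_loss`.

```shell
#!/usr/bin/env bash
# packet_loss: read ping output on stdin and print the loss percentage from the summary line.
# Matches the busybox format ("3 packets received, 0% packet loss")
# and the iputils format ("0 received, 100% packet loss, time 168ms").
packet_loss() {
  grep -oE '[0-9.]+% packet loss' | grep -oE '^[0-9.]+'
}

# Hypothetical wrapper: exit 0 only when the loss is exactly 0%.
no_loss() {
  [ "$(packet_loss)" = "0" ]
}

busybox_summary='3 packets transmitted, 3 packets received, 0% packet loss'
iputils_summary='7 packets transmitted, 0 received, 100% packet loss, time 168ms'
echo "$busybox_summary" | packet_loss   # 0
echo "$iputils_summary" | packet_loss   # 100
```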

2.12: Deploy the dashboard

https://github.com/kubernetes/dashboard

#Deploy dashboard v2.2.0 (the wget below actually points at the v2.0.0-rc7 tag; substitute the tag you want to deploy)

[root@kubeadm-master1 ~]#wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc7/aio/deploy/recommended.yaml

--2022-09-16 11:54:18-- https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc7/aio/deploy/recommended.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 0.0.0.0, ::

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|0.0.0.0|:443... failed: Connection refused.

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|::|:443... failed: Connection refused.

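#The link cannot be reached (raw.githubusercontent.com resolved to 0.0.0.0), so open the YAML file on GitHub and copy its content into a local file. Choose the version yourself; a tagged release is safer than master, whose layout may change.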

https://github.com/kubernetes/dashboard/blob/master/aio/deploy/recommended.yaml

#The official manifest does not expose the dashboard Service as a NodePort. Save the YAML locally and add a NodePort to the Service:

[root@kubeadm-master1 ~]#vim dashboard-2.2.0.yml

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort        # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30000   # added
  selector:
    k8s-app: kubernetes-dashboard
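A nodePort value must fall inside the API server's `--service-node-port-range`, which defaults to 30000-32767. The 30000 used here and the 30004/30005 used later are all inside it. The sketch below checks a port against that range and assumes the default bounds. A cluster started with a different range needs the bounds passed explicitly. `valid_nodeport` is a hypothetical name.

```shell
#!/usr/bin/env bash
# valid_nodeport PORT [MIN] [MAX]: exit 0 if PORT is an integer inside the NodePort range.
# MIN/MAX default to 30000/32767, the kube-apiserver default for --service-node-port-range.
valid_nodeport() {
  local port=$1 min=${2:-30000} max=${3:-32767}
  [[ $port =~ ^[0-9]+$ ]] || return 1
  (( port >= min && port <= max ))
}

for p in 30000 30004 30005 8443 32768; do
  if valid_nodeport "$p"; then echo "$p ok"; else echo "$p out of range"; fi
done
```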

#Create a project directory

[root@kubeadm-master1 ~]#mkdir /opt/k8s-date

[root@kubeadm-master1 ~]#cd /opt/k8s-date

[root@kubeadm-master1 k8s-date]#mkdir yaml

[root@kubeadm-master1 k8s-date]#cd yaml

[root@kubeadm-master1 yaml]#mkdir namespace #logical isolation

[root@kubeadm-master1 yaml]#mkdir kubernetes-dashboard #project directory

#Copy the dashboard YAML file into the project directory

[root@kubeadm-master1 yaml]#cp /root/dashboard-2.2.0.yml kubernetes-dashboard/

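#Create the certificate directory and work inside it

[root@kubeadm-master1 yaml]#mkdir kubernetes-dashboard/dashboard-certs

[root@kubeadm-master1 yaml]#cd kubernetes-dashboard/dashboard-certs

#Generate the private key

[root@kubeadm-master1 dashboard-certs]#openssl genrsa -out dashboard.key 2048

#Create the certificate signing request

openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=dashboard-cert'

#Self-sign the certificate. -days belongs on this step: on "openssl req" without -x509 it is ignored, and the certificate would get the default 30-day validity.

openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt -days 36000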

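#Create the kubernetes-dashboard-certs Secret. The kubernetes-dashboard namespace must already exist (kubectl create namespace kubernetes-dashboard), otherwise this command fails. Because the dashboard manifest also defines that Namespace and Secret, the kubectl create -f below will then report AlreadyExists for those two objects.

kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard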
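The openssl steps can be wrapped into one re-runnable function. This sketch writes into a scratch directory and puts `-days` on the signing step. The function name `make_dashboard_cert` and the 3650-day default are assumptions, not the values used above. The final `-checkend` call confirms the certificate outlives the 30-day default.

```shell
#!/usr/bin/env bash
set -euo pipefail
# make_dashboard_cert DIR [DAYS]: write dashboard.key, dashboard.csr and a self-signed dashboard.crt into DIR.
make_dashboard_cert() {
  local dir=$1 days=${2:-3650}
  mkdir -p "$dir"
  openssl genrsa -out "$dir/dashboard.key" 2048 2>/dev/null
  openssl req -new -key "$dir/dashboard.key" -out "$dir/dashboard.csr" -subj '/CN=dashboard-cert'
  # x509 -req is the step that signs the request and sets the validity period, so -days goes here.
  openssl x509 -req -in "$dir/dashboard.csr" -signkey "$dir/dashboard.key" \
    -out "$dir/dashboard.crt" -days "$days" 2>/dev/null
}

workdir=$(mktemp -d)
make_dashboard_cert "$workdir"
openssl x509 -in "$workdir/dashboard.crt" -noout -subject -enddate
# Exit 0 ("Certificate will not expire") if the cert is still valid one year from now.
openssl x509 -in "$workdir/dashboard.crt" -noout -checkend $((86400 * 365))
```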

#Install kubernetes-dashboard

[root@kubeadm-master1 dashboard-certs]#cd ..

[root@kubeadm-master1 kubernetes-dashboard]#ls

dashboard-2.2.0.yml dashboard-admin.yaml

dashboard-admin-bind-cluster-role.yaml dashboard-certs

[root@kubeadm-master1 kubernetes-dashboard]#kubectl create -f dashboard-2.2.0.yml

#Create an admin user (ServiceAccount)

[root@kubeadm-master1 kubernetes-dashboard]#vim dashboard-admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: dashboard-admin
  namespace: kubernetes-dashboard

[root@kubeadm-master1 kubernetes-dashboard]#kubectl create -f ./dashboard-admin.yaml

#Grant the user permissions by binding it to the cluster-admin ClusterRole

[root@kubeadm-master1 kubernetes-dashboard]#vim dashboard-admin-bind-cluster-role.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-bind-cluster-role
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kubernetes-dashboard

[root@kubeadm-master1 kubernetes-dashboard]#kubectl create -f ./dashboard-admin-bind-cluster-role.yaml
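The two manifests differ only in names, so they can also be rendered from variables and piped straight to kubectl. This is a sketch; `render_dashboard_admin` is a hypothetical name. Binding cluster-admin gives the account's token full control of the cluster. That is fine for a lab but usually too broad for production.

```shell
#!/usr/bin/env bash
# render_dashboard_admin SA NAMESPACE: print a ServiceAccount plus a ClusterRoleBinding to cluster-admin.
# On a cluster: render_dashboard_admin dashboard-admin kubernetes-dashboard | kubectl apply -f -
render_dashboard_admin() {
  local sa=$1 ns=$2
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: ${sa}
  namespace: ${ns}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ${sa}-bind-cluster-role
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: ${sa}
    namespace: ${ns}
EOF
}

render_dashboard_admin dashboard-admin kubernetes-dashboard
```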

#Check the Services

[root@kubeadm-master1 kubernetes-dashboard]#kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.200.76.171 <none> 8000/TCP 21m

kubernetes-dashboard NodePort 10.200.186.241 <none> 443:30000/TCP 21m

#Verify that port 30000 is listening

[root@kubeadm-master1 ~]#ss -tnl |grep 30000

LISTEN 0 128 0.0.0.0:30000 0.0.0.0:*

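#Every node listens on port 30000, because kube-proxy opens a NodePort on all nodes. lsof shows the port as ndmps, its /etc/services name; add -P to see the number.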

[root@node3 ~]#lsof -i :30000

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

kube-prox 11545 root 10u IPv4 158277 0t0 TCP *:ndmps (LISTEN)

#Get the login token

[root@kubeadm-master1 kubernetes-dashboard]#kubectl describe secrets -n kubernetes-dashboard `kubectl get secrets -A |grep dashboard-admin |awk 'NR==1{print $2}'` | grep token | awk 'NR==3{print $2}'

eyJhbGciOiJSUzI1NiIsImtpZCI6Ik9LeTJlVERIdkZIR3FYTXFLa21rZGM0MWhyS3BONTNiYTdWZC0xZzFBb1UifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tbmZkZ3oiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNDgwOWY1NzgtMGYwOS00YjY0LTk5NGUtNGJkNTk2MmZlMjcwIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.QzOqjdGGXjgHxdzTv_zufH_8tA2Z8eBmTcHYIxDzIDeskE8sAYOds--valwzC1qGbWFVAorDU9yI13OLtq0DGYpxynkX_Un33KBq1Rh_nm7NI5wi0czPlvV348c0jCtWy8nyiCw9ZQJ4R1g0ngek_6CJoQWvtDxtQ5ohJpmm9mVM8UgRTfUkjEWlMBJUnxDlqDxyaUpR1nwqpnJJTpuCxUQsEN-35Gkt6a7PzIqlCLngzHjPLNoB9hHgU-B8ZjnxFSKGUBSIyEUSZZcQxBNOYigrchap6QgK9D2epsrQIfLLVNBXO_WWPlR03MD2qslKRuGtdf9oaPyZsmPfthcLxQ
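Two notes on the token.

First, since Kubernetes 1.24 a ServiceAccount no longer gets a token Secret automatically, so the `kubectl get secrets | grep` pipeline above finds nothing there. Run `kubectl -n kubernetes-dashboard create token dashboard-admin` to issue a token instead.

Second, the token is a JWT whose middle segment is base64url-encoded JSON, so you can check which account it belongs to without a cluster. This sketch assumes GNU coreutils `base64`. The helper name `jwt_payload` and the toy token are hypothetical.

```shell
#!/usr/bin/env bash
# jwt_payload TOKEN: print the decoded JSON payload (the 2nd dot-separated segment) of a JWT.
jwt_payload() {
  local p
  # Map base64url characters back to standard base64 ('_' -> '/', '-' -> '+').
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops '=' padding; restore it to a multiple of 4 characters.
  while [ $(( ${#p} % 4 )) -ne 0 ]; do p="$p="; done
  printf '%s' "$p" | base64 -d
}

# Toy token whose payload is {"sub":"x"}.
# For the real token above, "sub" reads system:serviceaccount:kubernetes-dashboard:dashboard-admin.
jwt_payload 'eyJhbGciOiJub25lIn0.eyJzdWIiOiJ4In0.sig'; echo   # prints {"sub":"x"}
```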

3. Run nginx + tomcat on k8s with static/dynamic content separation

3.1: Run nginx

https://kubernetes.io/zh/docs/concepts/workloads/controllers/deployment/

[root@kubeadm-master1 yaml]#pwd

/opt/k8s-date/yaml

#Write the nginx YAML file

[root@kubeadm-master1 yaml]#mkdir nginx

[root@kubeadm-master1 yaml]#vim nginx/nginx.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: default
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.18.0
          ports:
            - containerPort: 80
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: test-nginx-service-label
  name: test-nginx-service
  namespace: default
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 80
      nodePort: 30004
  selector:
    app: nginx

#Deploy nginx

[root@kubeadm-master1 yaml]#kubectl apply -f nginx/nginx.yml

deployment.apps/nginx-deployment created

service/test-nginx-service created

[root@kubeadm-master1 yaml]#kubectl get pod

NAME READY STATUS RESTARTS AGE

net-test1 1/1 Running 0 7h29m

net-test2 1/1 Running 0 7h29m

nginx-deployment-67dfd6c8f9-44dlf 1/1 Running 0 38s

#Verify

[root@kubeadm-master1 yaml]#curl http://10.0.0.100:30004/

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

3.2: Run tomcat

#Write the tomcat YAML file

[root@kubeadm-master1 yaml]#pwd

/opt/k8s-date/yaml

[root@kubeadm-master1 yaml]#mkdir tomcat

[root@kubeadm-master1 yaml]#vim tomcat/tomcat.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: default
  name: tomcat-deployment
  labels:
    app: tomcat
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      containers:
        - name: tomcat
          image: tomcat
          ports:
            - containerPort: 8080
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: test-tomcat-service-label
  name: test-tomcat-service
  namespace: default
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 8080
      nodePort: 30005
  selector:
    app: tomcat

#Deploy

[root@kubeadm-master1 yaml]#kubectl apply -f tomcat/tomcat.yaml

deployment.apps/tomcat-deployment created

service/test-tomcat-service created

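#Verify access. The error page reports Apache Tomcat/10.0.14, so the service works; the untagged tomcat image pulled whatever was latest at the time. The 404 Not Found only means tomcat has no application deployed: the official image leaves webapps empty and keeps the default apps in webapps.dist.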

[root@kubeadm-master1 yaml]#curl 10.0.0.100:30005

<!doctype html><html lang="en"><head><title>HTTP Status 404 – Not Found</title><style type="text/css">body {font-family:Tahoma,Arial,sans-serif;} h1, h2, h3, b {color:white;background-color:#525D76;} h1 {font-size:22px;} h2 {font-size:16px;} h3 {font-size:14px;} p {font-size:12px;} a {color:black;} .line {height:1px;background-color:#525D76;border:none;}</style></head><body><h1>HTTP Status 404 – Not Found</h1><hr class="line" /><p><b>Type</b> Status Report</p><p><b>Description</b> The origin server did not find a current representation for the target resource or is not willing to disclose that one exists.</p><hr class="line" /><h3>Apache Tomcat/10.0.14</h3></body></html>
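Because the Deployment uses the untagged `tomcat` image, the version you get depends on when it was pulled. The sketch below reads the banner out of any Tomcat page, e.g. `curl -s 10.0.0.100:30005 | tomcat_version`. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# tomcat_version: read an HTML page on stdin and print the first "Apache Tomcat/x.y.z" banner found.
tomcat_version() {
  grep -oE 'Apache Tomcat/[0-9]+(\.[0-9]+)*' | head -n 1
}

# Tail of the 404 page shown above.
page='<hr class="line" /><h3>Apache Tomcat/10.0.14</h3></body></html>'
echo "$page" | tomcat_version   # Apache Tomcat/10.0.14
```

Pinning a tag in the manifest (for example the version reported here) keeps later pulls reproducible.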

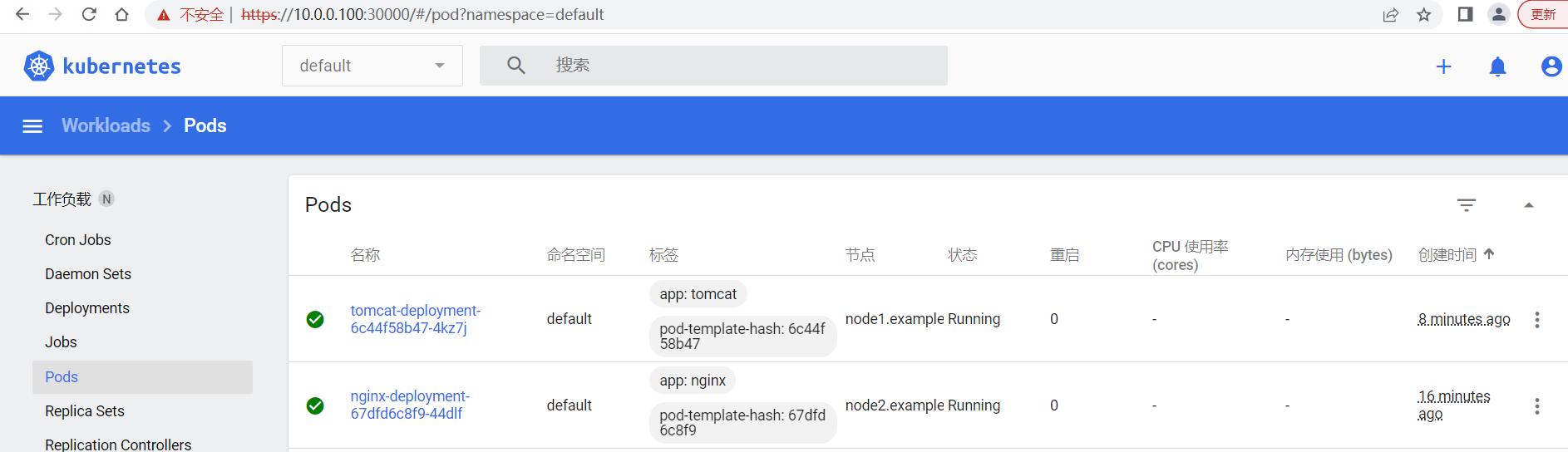

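3.3: Verify the pods in the dashboard

3.4: Enter the tomcat pod and create a test app (files written inside a running container are lost when the pod is recreated, so this is for testing only)

[root@kubeadm-master1 yaml]#kubectl get pod -o wide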

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1 1/1 Running 0 7h47m 10.100.5.2 node3.example.local <none> <none>

net-test2 1/1 Running 0 7h47m 10.100.4.2 node2.example.local <none> <none>

nginx-deployment-67dfd6c8f9-44dlf 1/1 Running 0 17m 10.100.4.4 node2.example.local <none> <none>

tomcat-deployment-6c44f58b47-4kz7j 1/1 Running 0 9m54s 10.100.3.6 node1.example.local <none> <none>

[root@kubeadm-master1 yaml]#kubectl exec -it tomcat-deployment-6c44f58b47-4kz7j sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

# pwd

/usr/local/tomcat

# ls

BUILDING.txt NOTICE RUNNING.txt lib temp work

CONTRIBUTING.md README.md bin logs webapps

LICENSE RELEASE-NOTES conf native-jni-lib webapps.dist

# cd webapps

# mkdir tomcat

# echo "<h1>Tomcat test page for Pod</h1>" > tomcat/index.html

[root@kubeadm-master1 yaml]#curl 10.0.0.100:30005/tomcat/index.html

<h1>Tomcat test page for Pod</h1>

3.5: Static/dynamic separation with nginx

[root@kubeadm-master2 ~]#kubectl get pod

NAME READY STATUS RESTARTS AGE

net-test1 1/1 Running 0 7h55m

net-test2 1/1 Running 0 7h55m

nginx-deployment-67dfd6c8f9-44dlf 1/1 Running 0 26m

tomcat-deployment-6c44f58b47-4kz7j 1/1 Running 0 18m

[root@kubeadm-master2 ~]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.200.0.1 <none> 443/TCP 8h

test-nginx-service NodePort 10.200.85.84 <none> 80:30004/TCP 25m

test-tomcat-service NodePort 10.200.185.236 <none> 80:30005/TCP 17m

#Enter the nginx pod

[root@kubeadm-master1 yaml]#kubectl exec -it nginx-deployment-67dfd6c8f9-44dlf bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@nginx-deployment-67dfd6c8f9-44dlf:/# cat /etc/issue

Debian GNU/Linux 10 \n \l

#Update the package index and install basic tools

root@nginx-deployment-67dfd6c8f9-44dlf:/# apt update

root@nginx-deployment-67dfd6c8f9-44dlf:/# apt install procps vim iputils-ping net-tools curl -y

#Test external connectivity

root@nginx-deployment-67dfd6c8f9-44dlf:/# ping www.baidu.com

PING www.a.shifen.com (36.152.44.96) 56(84) bytes of data.

64 bytes from localhost (36.152.44.96): icmp_seq=1 ttl=127 time=28.7 ms

64 bytes from localhost (36.152.44.96): icmp_seq=2 ttl=127 time=29.2 ms

^C

--- www.a.shifen.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 3ms

rtt min/avg/max/mdev = 28.695/28.933/29.171/0.238 ms

#Test Service name resolution

root@nginx-deployment-67dfd6c8f9-44dlf:/# ping test-tomcat-service

PING test-tomcat-service.default.svc.tan.local (10.200.185.236) 56(84) bytes of data.

^C

--- test-tomcat-service.default.svc.tan.local ping statistics ---

7 packets transmitted, 0 received, 100% packet loss, time 168ms

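#The name resolves, but ping gets no reply. A ClusterIP is virtual: kube-proxy forwards only the Service's TCP/UDP ports, so in iptables mode ICMP is not answered. Accessing tomcat through the Service's DNS name succeeds: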

root@nginx-deployment-67dfd6c8f9-44dlf:/# curl test-tomcat-service.default.svc.tan.local/tomcat/index.html

<h1>Tomcat test page for Pod</h1>
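The name that resolved above follows the pattern `<service>.<namespace>.svc.<cluster-domain>`. This cluster's domain is `tan.local`, whereas kubeadm's default is `cluster.local`. Within the same namespace the short name `test-tomcat-service` also works, thanks to the pod's DNS search list. A tiny sketch that builds the full name (the helper `svc_fqdn` is hypothetical):

```shell
#!/usr/bin/env bash
# svc_fqdn SERVICE [NAMESPACE] [DOMAIN]: build the in-cluster DNS name of a Service.
# DOMAIN defaults to tan.local, the cluster domain seen above (kubeadm's default is cluster.local).
svc_fqdn() {
  local svc=$1 ns=${2:-default} domain=${3:-tan.local}
  echo "${svc}.${ns}.svc.${domain}"
}

svc_fqdn test-tomcat-service   # test-tomcat-service.default.svc.tan.local
```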

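root@nginx-deployment-67dfd6c8f9-44dlf:/# vim /etc/nginx/conf.d/default.conf

#Add this location inside the server { } block. The edit lives only in this container and is lost when the pod is recreated; a config mounted from a ConfigMap or baked into the image makes it persistent.

location /tomcat {
    proxy_pass http://test-tomcat-service.default.svc.tan.local;
}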
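When `proxy_pass` has no URI part (as above), nginx forwards the original request URI unchanged. So /tomcat/index.html reaches tomcat as /tomcat/index.html, which is where the test page was created under webapps/tomcat. If proxy_pass carried a URI such as a trailing `/`, nginx would replace the matched location prefix with that URI. The function below is only a simplified model of that rule for prefix locations, not nginx itself. `upstream_uri` is a hypothetical name.

```shell
#!/usr/bin/env bash
# upstream_uri LOCATION_PREFIX PROXY_PASS_URI REQUEST_URI
# Simplified model of nginx prefix-location + proxy_pass URI handling:
#   PROXY_PASS_URI empty -> the request URI is passed upstream unchanged
#   PROXY_PASS_URI set   -> the matched LOCATION_PREFIX is replaced by PROXY_PASS_URI
upstream_uri() {
  local prefix=$1 pp_uri=$2 req=$3
  if [ -z "$pp_uri" ]; then
    echo "$req"
  else
    echo "${pp_uri}${req#"$prefix"}"
  fi
}

upstream_uri /tomcat  ''  /tomcat/index.html   # /tomcat/index.html (the config used above)
upstream_uri /tomcat  '/' /tomcat/index.html   # //index.html (prefix lacks the trailing slash)
upstream_uri /tomcat/ '/' /tomcat/index.html   # /index.html
```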

root@nginx-deployment-67dfd6c8f9-44dlf:/# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

root@nginx-deployment-67dfd6c8f9-44dlf:/# nginx -s reload

2022/09/16 11:57:17 [notice] 569#569: signal process started

#Test the pages

[root@kubeadm-master1 yaml]#curl 10.0.0.100:30004

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

[root@kubeadm-master1 yaml]#curl 10.0.0.100:30004/tomcat/index.html

<h1>Tomcat test page for Pod</h1>

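3.6: Highly available reverse proxy with haproxy

#Use haproxy and keepalived to build a highly available reverse proxy that reaches the business pods running in the Kubernetes cluster. Here the reverse proxy reuses the one already built for the k8s environment; a production environment should use dedicated reverse proxy servers.

#Configure keepalived: add a separate VIP for the nginx service in k8s, on both ha1 and ha2.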

[root@ha1 ~]#vim /etc/keepalived/keepalived.conf

virtual_ipaddress {
    10.0.0.188 dev eth0 label eth0:1
    10.0.0.189 dev eth0 label eth0:1    # added
}

[root@ha1 ~]#systemctl restart keepalived.service

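#Configure the haproxy reverse proxy identically on ha1 and ha2 by appending the following to the end of the file. On the node that does not currently hold the VIP, haproxy can only bind 10.0.0.189 if net.ipv4.ip_nonlocal_bind = 1 is set.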

[root@ha1 ~]#vim /etc/haproxy/haproxy.cfg

listen k8s-nginx-80
    bind 10.0.0.189:80
    mode tcp
    server 10.0.0.106 10.0.0.106:30004 check inter 2s fall 3 rise 5
    server 10.0.0.107 10.0.0.107:30004 check inter 2s fall 3 rise 5
    server 10.0.0.108 10.0.0.108:30004 check inter 2s fall 3 rise 5
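`check inter 2s fall 3 rise 5` has haproxy health-check each node's NodePort every 2 seconds. A server is marked down after 3 consecutive failed checks and back up after 5 consecutive successful ones. Ignoring check timeouts, that gives the rough detection times below. The helper name is hypothetical and the result is an approximation.

```shell
#!/usr/bin/env bash
# check_timing INTER_SECONDS FALL RISE: approximate seconds until haproxy marks a server DOWN and back UP.
# Simplification: ignores check timeouts and where in the interval the failure starts.
check_timing() {
  local inter=$1 fall=$2 rise=$3
  echo "down_after=$((inter * fall))s up_after=$((inter * rise))s"
}

check_timing 2 3 5   # down_after=6s up_after=10s
```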

[root@ha1 ~]#systemctl restart haproxy

3.7: Access nginx + tomcat through the VIP

[root@kubeadm-master1 ~]#curl 10.0.0.189

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

[root@kubeadm-master1 ~]#curl 10.0.0.189/tomcat/index.html

<h1>Tomcat test page for Pod</h1>

浙公网安备 33010602011771号
