Python Selenium multithreaded crawler

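xxx's anti-scraping is very good. If you do it the usual way and send the HTTP requests yourself, the slightest problem in the request headers gets you detected. With Selenium, however, a real browser loads the page and you can take the rendered page source directly, and that is the approach used here.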

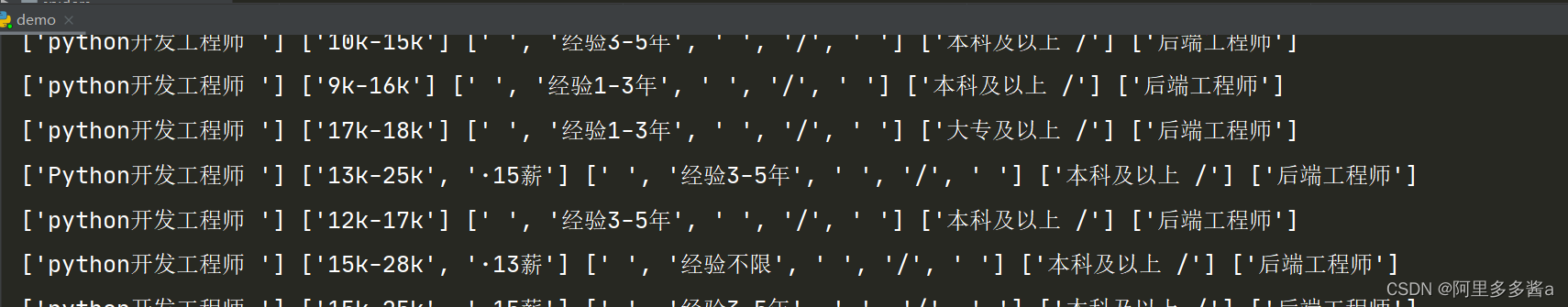

I scraped the listings for Python positions.

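```python
import random
import time
from threading import Thread

import pymysql
from lxml import etree
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By


class LaGou(object):
    # Anti-detection: hide Chrome's "controlled by automated software" fingerprints
    option = webdriver.ChromeOptions()
    option.add_experimental_option('useAutomationExtension', False)
    option.add_experimental_option('excludeSwitches', ['enable-automation'])
    driver_path = r'E:/chromedriver/chromedriver.exe'

    # Initializer: one Chrome instance plus the MySQL settings for this crawler
    def __init__(self, url):
        # Selenium 4 takes the driver path through Service (executable_path= was deprecated, then removed)
        self.driver = webdriver.Chrome(service=Service(LaGou.driver_path), options=LaGou.option)
        self.url = url
        self.__host = 'localhost'
        self.__port = 3306
        self.__user = 'root'
        self.__password = 'xxxxx'
        self.__db = 'xxxx'
        self.__charset = 'utf8'

    # Connect to MySQL
    def connect(self):
        self.__conn = pymysql.connect(
            host=self.__host,
            port=self.__port,
            user=self.__user,
            password=self.__password,
            database=self.__db,
            charset=self.__charset
        )
```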
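The MySQL credentials are hard-coded in `__init__`. A common alternative is to read them from environment variables so the password never lands in the source file. This is only a minimal sketch: the helper name `mysql_config_from_env` and the variable names (`LAGOU_DB_HOST` and so on) are made up for illustration, and the returned keys are the keyword arguments `pymysql.connect()` accepts.

```python
import os


def mysql_config_from_env():
    """Build keyword arguments for pymysql.connect() from environment variables.

    The LAGOU_DB_* names are hypothetical; rename them to suit your setup.
    """
    return {
        'host': os.environ.get('LAGOU_DB_HOST', 'localhost'),
        'port': int(os.environ.get('LAGOU_DB_PORT', '3306')),  # env values are strings
        'user': os.environ.get('LAGOU_DB_USER', 'root'),
        'password': os.environ.get('LAGOU_DB_PASSWORD', ''),
        'database': os.environ.get('LAGOU_DB_NAME', ''),
        'charset': 'utf8',
    }
```

Inside `connect()` this would become `self.__conn = pymysql.connect(**mysql_config_from_env())`.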

First, use Selenium to request the listing page, then parse the URLs of the detail pages out of it.

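```python
    # Request the listing page(s) and collect their HTML
    def get_html(self):
        page_list = []
        self.driver.get(self.url)
        time.sleep(random.randint(1, 3))
        page_list.append(self.driver.page_source)  # keep the first results page too
        for page in range(1, 2):  # runs once; widen the range to click through more pages
            # span[6] in the pager is presumably the "next page" button
            a = self.driver.find_element(By.XPATH, '//*[@id="s_position_list"]/div[2]/div/span[6]')
            # A JavaScript click sidesteps "element not clickable" errors caused by overlays
            self.driver.execute_script('arguments[0].click();', a)
            time.sleep(random.randint(1, 3))
            page_list.append(self.driver.page_source)
        return page_list

    # Parse the detail-page URLs out of a listing page
    def parse_detail_url(self, html):
        ul_list = etree.HTML(html)
        ul = ul_list.xpath('//*[@id="s_position_list"]/ul')
        detail_url = []
        for li in ul:
            # extend instead of reassigning, so links from every <ul> are kept
            detail_url.extend(li.xpath('./li/div[1]/div[1]/div[1]/a/@href'))
        return detail_url
```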
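When links come from several listing pages, they need to be appended to one list rather than assigned to a variable on each pass, and the same job can show up on more than one page. The post uses lxml XPath for this; the sketch below swaps in the standard library's `html.parser` purely so the accumulate-and-deduplicate idea can be run in isolation. The class name `position_link` and the sample HTML are assumptions, not taken from the real site.

```python
from html.parser import HTMLParser


class DetailLinkParser(HTMLParser):
    """Collect href values of <a> tags carrying the (assumed) class 'position_link'."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attrs arrives as a list of (name, value) pairs
        classes = (attrs.get('class') or '').split()
        if tag == 'a' and 'position_link' in classes:
            self.links.append(attrs.get('href'))


def collect_detail_urls(pages):
    """Gather links from every page, dropping duplicates while keeping first-seen order."""
    seen = set()
    urls = []
    for html in pages:
        parser = DetailLinkParser()
        parser.feed(html)
        for href in parser.links:
            if href and href not in seen:
                seen.add(href)
                urls.append(href)
    return urls
```

Keeping a `set` next to the list makes each membership check O(1) while the list preserves the crawl order.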

Once the detail-page URLs are parsed out, use Selenium again to request each detail page and extract its data.

```python
    # Request a detail page and return its source (wrapped in a list, as main() iterates over it)
    def get_detail_data(self, url):
        detail_data_list = []
        self.driver.get(url)
        time.sleep(random.randint(1, 3))
        detail_data = self.driver.page_source
        detail_data_list.append(detail_data)
        return detail_data_list

    # Parse the individual fields out of a detail page
    def parse_detail_data(self, html):
        detail_data = etree.HTML(html)
        div_list = detail_data.xpath('//*[@id="__next"]/div[2]')
        for all_data in div_list:
            # Position name
            position = all_data.xpath('./div[1]/div/div[1]/div[1]/h1/span/span/span[1]/text()')[0]
            # Salary
            pay = all_data.xpath('./div[1]/div/div[1]/div[1]/h1/span/span/span[2]/text()')[0]
            # Experience
            experience = all_data.xpath('./div[1]/div/div[1]/dd/h3/span[2]/text()')[0]
            # Education (drop the "/" separator)
            education = all_data.xpath('./div[1]/div/div[1]/dd/h3/span[3]/text()')[0].replace('/', '')
            # Job category
            job_title = all_data.xpath('./div[1]/div/div[1]/dd/h3/div/span[2]/text()')[0]
            # Job description: join the text nodes and remove newlines
            job_description = all_data.xpath('./div[2]/div[1]/dl[1]/dd[2]/div/text()')
            t_job_description = ''.join(job_description).replace('\n', '')
            all_data_dict = {
                'position': position,
                'pay': pay,
                'experience': experience,
                'education': education,
                'job_title': job_title,
                't_job_description': t_job_description
            }
            # The dict keys match save_mysql's parameter names
            self.save_mysql(**all_data_dict)
```

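Because `xpath()` returns a list, just take element `[0]`. Note that this raises `IndexError` when the XPath matches nothing, for example after the site changes its page layout.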
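If you would rather not crash on a missing field, a tiny helper can fall back to a default instead of indexing `[0]` directly. Separately, `''.join(...).replace('\n', '')` only removes line feeds, so it glues adjacent lines together and leaves `\r`, tabs and indentation behind; collapsing every whitespace run into a single space is usually closer to what you want. Both helper names below are hypothetical, a sketch rather than part of the original crawler.

```python
import re


def first(nodes, default=''):
    """Return the first xpath() result, or `default` if the XPath matched nothing."""
    return nodes[0] if nodes else default


def clean_description(fragments):
    """Join text nodes and collapse every whitespace run (newlines, tabs, spaces) into one space."""
    return re.sub(r'\s+', ' ', ''.join(fragments)).strip()
```

In `parse_detail_data` these would be used as, e.g., `position = first(all_data.xpath('.../text()'))` and `t_job_description = clean_description(job_description)`.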

Save the scraped data to MySQL.

```python
    # Persist one record to MySQL
    def save_mysql(self, position, pay, experience, education, job_title, t_job_description):
        self.connect()
        cursor = self.__conn.cursor()
        sql = ('insert into python_lagou(position, pay, experience, education, job_title, t_job_description) '
               'values (%s, %s, %s, %s, %s, %s)')
        try:
            # Pass the values separately so pymysql escapes them (no string formatting)
            cursor.execute(sql, (position, pay, experience, education, job_title, t_job_description))
            self.__conn.commit()  # commit while the connection is still open
        except Exception as e:
            print('Error:', e)
            self.__conn.rollback()
        finally:
            cursor.close()
            self.__conn.close()
```
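`save_mysql` opens and closes a new connection for every single row. A common alternative is to keep one connection and insert rows in batches with a parameterized `executemany()`, committing once and rolling back if anything fails. In this sketch the standard library's `sqlite3` is swapped in for MySQL so it runs without a server; sqlite3 uses `?` placeholders where pymysql uses `%s`, and pymysql cursors also provide `executemany()`. The helper name `save_rows` and the sample rows are made up.

```python
import sqlite3

# sqlite3 stands in for MySQL here; with pymysql the placeholders would be %s
INSERT_SQL = ('insert into python_lagou(position, pay, experience, education, job_title, t_job_description) '
              'values (?, ?, ?, ?, ?, ?)')


def save_rows(conn, rows):
    """Insert many rows with one parameterized executemany(), commit once, roll back on failure."""
    cur = conn.cursor()
    try:
        cur.executemany(INSERT_SQL, rows)
        conn.commit()
    except sqlite3.Error:
        conn.rollback()  # undo the partial batch
        raise
    finally:
        cur.close()


conn = sqlite3.connect(':memory:')
conn.execute('create table python_lagou(position text, pay text, experience text, '
             'education text, job_title text, t_job_description text)')
# Made-up sample rows, for illustration only
save_rows(conn, [
    ('Python Developer', '15k-25k', '3-5 years', 'Bachelor', 'Backend', 'Build crawlers'),
    ('Python Engineer', '20k-30k', '5-10 years', 'Bachelor', 'Backend', 'Maintain services'),
])
```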

Finally, run the `main` method.

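```python
    # Main routine: listing pages -> detail URLs -> detail pages -> MySQL
    def main(self):
        try:
            html_list = self.get_html()
            for html in html_list:
                for url in self.parse_detail_url(html):
                    for data in self.get_detail_data(url):
                        self.parse_detail_data(data)
        finally:
            self.driver.quit()  # always close the browser, even if something fails


if __name__ == '__main__':
    # Start multiple threads: 10 crawlers, each with its own browser.
    # Note: every instance starts from the same URL, so the threads repeat each other's work.
    t_list = []
    for i in range(10):
        run = LaGou('https://www.xxx.com')
        t_list.append(run)
    thread_list = []
    for i in t_list:
        t = Thread(target=i.main)
        thread_list.append(t)
    for t in thread_list:
        t.start()
    for t in thread_list:
        t.join()
```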
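All ten `LaGou` instances start from the same URL, so every thread performs the same crawl and the same rows get stored repeatedly. One way to actually divide the work is to put the detail URLs into a thread-safe `queue.Queue` and let each worker pull the next unclaimed URL. This is a sketch under assumptions: `crawl_in_parallel` is a hypothetical name, and `fetch` is a placeholder for the per-page work (in the real crawler each worker would own its own WebDriver, since a single driver generally should not be shared between threads).

```python
import queue
import threading


def crawl_in_parallel(urls, fetch, workers=4):
    """Split `urls` among `workers` threads through a shared Queue so each URL is fetched once.

    `fetch(url)` is a placeholder for the real work (e.g. driver.get + parsing).
    """
    tasks = queue.Queue()
    for url in urls:
        tasks.put(url)

    results = []
    lock = threading.Lock()  # guards `results`

    def worker():
        while True:
            try:
                url = tasks.get_nowait()
            except queue.Empty:
                return  # queue drained: this worker is done
            data = fetch(url)
            with lock:
                results.append(data)

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Because the queue hands out each URL exactly once, adding workers shortens the crawl instead of duplicating it. Keep the worker count modest, though: more parallel browsers also means more traffic that the site's anti-scraping can notice.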

Complete code

```python
import random
import time
from threading import Thread

import pymysql
from lxml import etree
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By


class LaGou(object):
    # Anti-detection: hide Chrome's "controlled by automated software" fingerprints
    option = webdriver.ChromeOptions()
    option.add_experimental_option('useAutomationExtension', False)
    option.add_experimental_option('excludeSwitches', ['enable-automation'])
    driver_path = r'E:/chromedriver/chromedriver.exe'

    # Initializer: one Chrome instance plus the MySQL settings for this crawler
    def __init__(self, url):
        # Selenium 4 takes the driver path through Service
        self.driver = webdriver.Chrome(service=Service(LaGou.driver_path), options=LaGou.option)
        self.url = url
        self.__host = 'localhost'
        self.__port = 3306
        self.__user = 'root'
        self.__password = 'xxx'
        self.__db = 'xxx'
        self.__charset = 'utf8'

    # Connect to MySQL
    def connect(self):
        self.__conn = pymysql.connect(
            host=self.__host,
            port=self.__port,
            user=self.__user,
            password=self.__password,
            database=self.__db,
            charset=self.__charset
        )

    # Request the listing page(s) and collect their HTML
    def get_html(self):
        page_list = []
        self.driver.get(self.url)
        time.sleep(random.randint(1, 3))
        page_list.append(self.driver.page_source)  # keep the first results page too
        for page in range(1, 2):  # runs once; widen the range to click through more pages
            # span[6] in the pager is presumably the "next page" button
            a = self.driver.find_element(By.XPATH, '//*[@id="s_position_list"]/div[2]/div/span[6]')
            self.driver.execute_script('arguments[0].click();', a)
            time.sleep(random.randint(1, 3))
            page_list.append(self.driver.page_source)
        return page_list

    # Parse the detail-page URLs out of a listing page
    def parse_detail_url(self, html):
        ul_list = etree.HTML(html)
        ul = ul_list.xpath('//*[@id="s_position_list"]/ul')
        detail_url = []
        for li in ul:
            detail_url.extend(li.xpath('./li/div[1]/div[1]/div[1]/a/@href'))
        return detail_url

    # Request a detail page
    def get_detail_data(self, url):
        detail_data_list = []
        self.driver.get(url)
        time.sleep(random.randint(1, 3))
        detail_data = self.driver.page_source
        detail_data_list.append(detail_data)
        return detail_data_list

    # Parse the individual fields out of a detail page
    def parse_detail_data(self, html):
        detail_data = etree.HTML(html)
        div_list = detail_data.xpath('//*[@id="__next"]/div[2]')
        for all_data in div_list:
            # Position name
            position = all_data.xpath('./div[1]/div/div[1]/div[1]/h1/span/span/span[1]/text()')[0]
            # Salary
            pay = all_data.xpath('./div[1]/div/div[1]/div[1]/h1/span/span/span[2]/text()')[0]
            # Experience
            experience = all_data.xpath('./div[1]/div/div[1]/dd/h3/span[2]/text()')[0]
            # Education
            education = all_data.xpath('./div[1]/div/div[1]/dd/h3/span[3]/text()')[0].replace('/', '')
            # Job category
            job_title = all_data.xpath('./div[1]/div/div[1]/dd/h3/div/span[2]/text()')[0]
            # Job description
            job_description = all_data.xpath('./div[2]/div[1]/dl[1]/dd[2]/div/text()')
            t_job_description = ''.join(job_description).replace('\n', '')
            all_data_dict = {
                'position': position,
                'pay': pay,
                'experience': experience,
                'education': education,
                'job_title': job_title,
                't_job_description': t_job_description
            }
            self.save_mysql(**all_data_dict)

    # Persist one record to MySQL
    def save_mysql(self, position, pay, experience, education, job_title, t_job_description):
        self.connect()
        cursor = self.__conn.cursor()
        sql = ('insert into python_lagou(position, pay, experience, education, job_title, t_job_description) '
               'values (%s, %s, %s, %s, %s, %s)')
        try:
            cursor.execute(sql, (position, pay, experience, education, job_title, t_job_description))
            self.__conn.commit()
        except Exception as e:
            print('Error:', e)
            self.__conn.rollback()
        finally:
            cursor.close()
            self.__conn.close()

    # Main routine
    def main(self):
        try:
            html_list = self.get_html()
            for html in html_list:
                for url in self.parse_detail_url(html):
                    for data in self.get_detail_data(url):
                        self.parse_detail_data(data)
        finally:
            self.driver.quit()


if __name__ == '__main__':
    # Start multiple threads: 10 crawlers, each with its own browser
    t_list = []
    for i in range(10):
        run = LaGou('https://www.xxx.com')
        t_list.append(run)
    thread_list = []
    for i in t_list:
        t = Thread(target=i.main)
        thread_list.append(t)
    for t in thread_list:
        t.start()
    for t in thread_list:
        t.join()
```

That's all.

浙公网安备 33010602011771号
