# 准备utf - 8

# 编码的文本文件file

import jieba

f=open('novels.text','r',encoding='utf-8')

# 2.

# 通过文件读取字符串

str =f.read()

# 5.

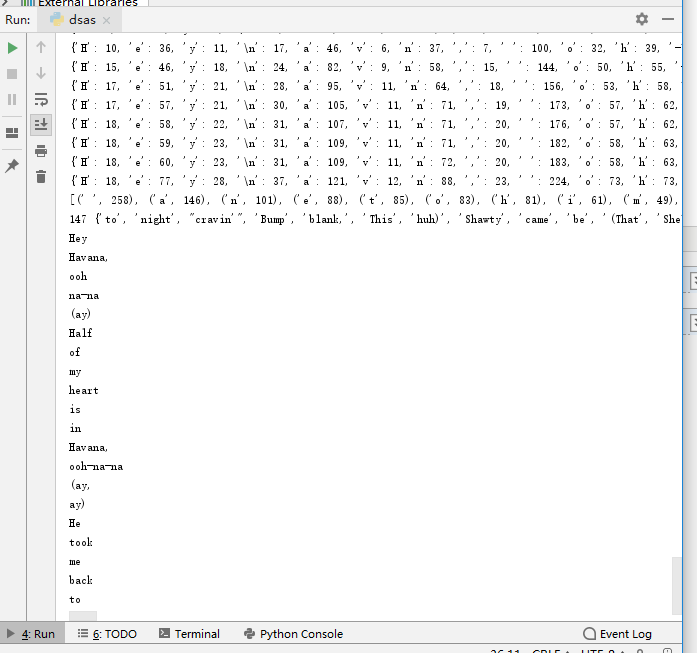

# 单词计数字典

dict = {}

for i in str:

if i in dict:

dict[i] = dict[i] + 1

else:

dict[i] = 1

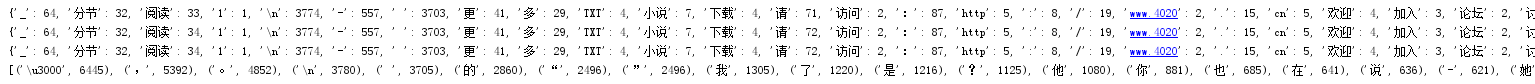

print(dict)

# 6.按词频排序 list.sort(key=)

csList = list(dict.items())

csList.sort(key=lambda x:x[1],reverse = True)

print(csList)

# 3.

# 对文本进行预处理

str = jieba.cut(str)

str = jieba.cut(str,cut_all=True)

print(str)

# 4.

# 分解提取单词

strList = jieba.cut_for_search(str)

# 5.

#7输出TOP(20)

for i in range(20):

print(str[i])

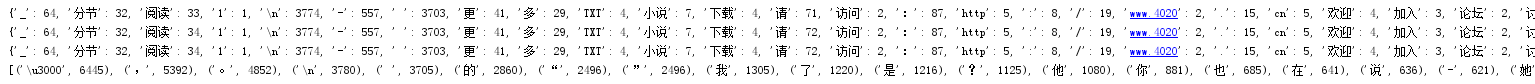

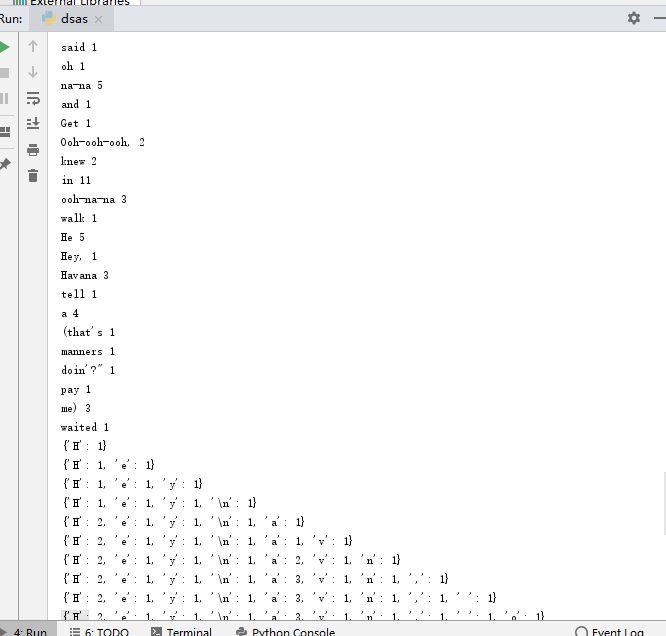

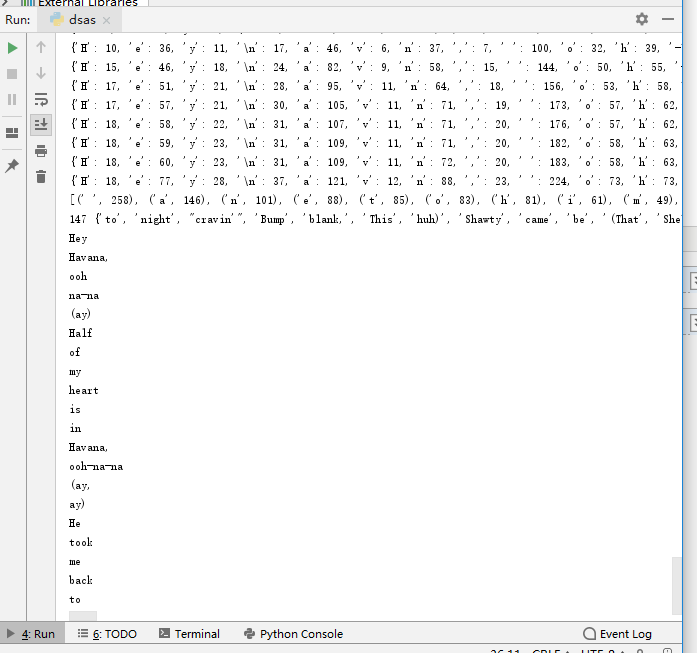
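The manual dictionary count and `list.sort` in steps 5 to 7 can also be expressed with the standard library's `collections.Counter`, whose `most_common(n)` returns the `n` most frequent items already sorted by count. This is a minimal sketch, assuming the text has already been segmented into a list of words (for example by jieba); the helper name `top_words`, its `min_len` parameter and the sample tokens are hypothetical and only for illustration.

```python
from collections import Counter


def top_words(words, n=20, min_len=2):
    """Return the n most frequent words as (word, count) pairs.

    Hypothetical helper: `words` is assumed to be an already-segmented
    list of tokens. Tokens shorter than `min_len` characters after
    stripping whitespace (punctuation, newlines, single characters)
    are ignored.
    """
    counts = Counter(w for w in words if len(w.strip()) >= min_len)
    # most_common sorts by count, highest first
    return counts.most_common(n)


if __name__ == "__main__":
    # Hypothetical tokens standing in for jieba output
    tokens = ["我们", "，", "今天", "我们", "的", "我们", "今天", "\n"]
    print(top_words(tokens, n=2))  # [('我们', 3), ('今天', 2)]
```

Counting and sorting then happen in one call, and filtering stays in a single generator expression instead of a separate loop.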

#1.准备utf-8编码的文本文件file

f = open("songs.text","r",encoding="utf-8")

# 2.通过文件读取字符串 str

str = f.read()

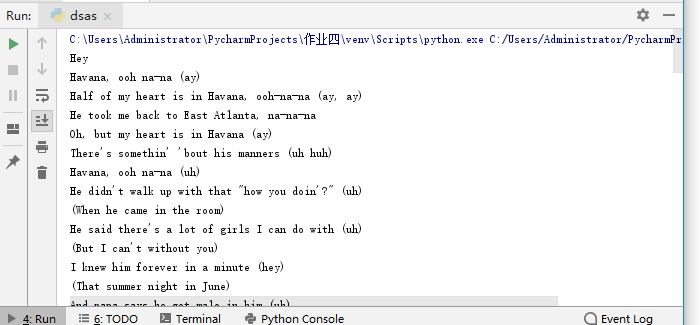

print(str)

# 3.对文本进行预处理

strLower = str.lower()

print(strLower) 结果如下:

# 4.分解提取单词

# 4.分解提取单词

list strList = list(str)

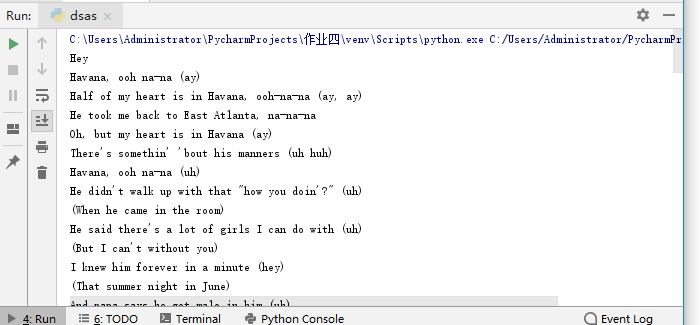

strList = str.split()

print(len(strList),strList)

5.单词计数字典 set , dict #集合统计单词的个数

#集合统计个数

strSet = set(strList)

strSet=set(strList)

for word in strSet:

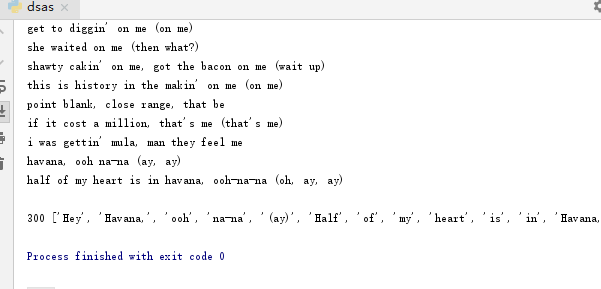

print(word,strList.count(word))

#字典统计单词的个数

dict = {}

for i in str:

if i in dict:

dict[i] = dict[i] + 1

else:

dict[i] = 1

print(dict)

# 6.按词频排序

csList = list(dict.items())

csList.sort(key=lambda x:x[1],reverse = True)

print(csList)

# 7.排除语法型词汇,代词、冠词、连词等无语义

exclude = {'a','the','and','i','you','in'}

strSet = strSet - exclude print(len(strSet),strSet)

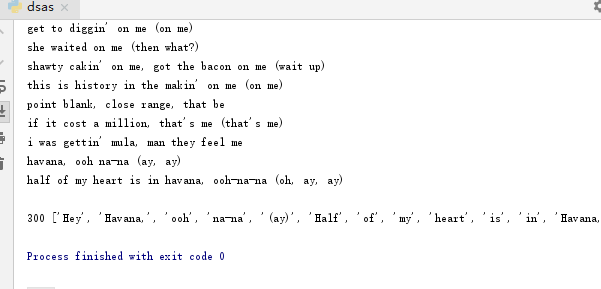

# 8. Output the TOP(20)
for word, count in csList[:20]:
    print(word, count)
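Steps 3 to 8 for the English text can be condensed into a single function. The sketch below is an alternative under stated assumptions, not the author's code: it tokenizes with a regular expression (`re.findall`) instead of `str.split()`, so punctuation is discarded in the same pass, and it uses `collections.Counter.most_common` to count, sort and take the top N at once. The function name `top_english_words` and the sample sentence are hypothetical; the stop-word set is the one from step 7.

```python
import re
from collections import Counter

# Stop words from step 7; extend as needed
STOP_WORDS = frozenset({"a", "the", "and", "i", "you", "in"})


def top_english_words(text, n=20, stop_words=STOP_WORDS):
    """Return the n most frequent non-stop-words in `text` as (word, count) pairs.

    Hypothetical helper for illustration.
    """
    # Lowercase, then keep runs of letters and apostrophes as words,
    # so punctuation never sticks to a word ("you," -> "you")
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in stop_words)
    # Ties keep first-seen order, because most_common uses a stable sort
    return counts.most_common(n)


if __name__ == "__main__":
    lyrics = "I love you, and you love me. The love is in the air!"
    print(top_english_words(lyrics, n=3))  # [('love', 3), ('me', 1), ('is', 1)]
```

A single `Counter` pass is also cheaper than calling `strList.count(word)` once per distinct word, which rescans the whole list every time.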