Kafka Consumer API

Hello World

public class ConsumerSample { private final static String TOPIC_NAME="topic_test"; public static void main(String[] args) { helloWorld(); } private static void helloWorld() { Properties props = new Properties(); props.setProperty("bootstrap.servers", "192.168.75.136:9092"); props.setProperty("group.id", "test"); props.setProperty("enable.auto.commit", "true"); props.setProperty("auto.commit.interval.ms", "1000"); props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props); // 消费订阅哪个Topic或哪几个Topic consumer.subscribe(Arrays.asList(TOPIC_NAME)); while (true) { // 每一秒拉取,因此生成的是records ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000)); for (ConsumerRecord<String, String> record : records) System.out.printf("partition = %d, offset = %d, key = %s, value = %s%n", record.partition(), record.offset(), record.key(), record.value()); } } }

手动提交

正常消费以offset为准,但是不推荐这样做。真正的业务处理是耗时的,如果没操作完就消费不到了。

private static void commitedOffset() { Properties props = new Properties(); props.setProperty("bootstrap.servers", "192.168.75.136:9092"); props.setProperty("group.id", "test"); props.setProperty("enable.auto.commit", "false"); props.setProperty("auto.commit.interval.ms", "1000"); props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props); // 消费订阅哪个Topic或哪几个Topic consumer.subscribe(Arrays.asList(TOPIC_NAME)); while (true) { // 每一秒拉取,因此生成的是records ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000)); for (ConsumerRecord<String, String> record : records) { // 想把数据保存到数据库,成功就成功,不成功... // TODO record 2 db // 失败则回滚,不提交offset } // 手动通知offset提交 consumer.commitAsync(); } }

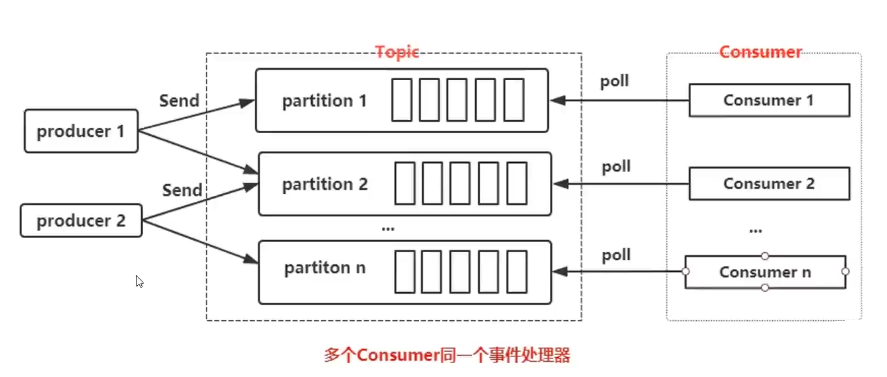

可以有很多consumer,但只要consumer同属于一个group.id,那么这些consumer都属于同一个消费分组

- 单个分区中的消息只能由ConsumerGroup中的某个Consumer消费

- 一个Consumer可以消费多个Partition

- Consumer从Partition中消费消息是顺序,默认从头开始消费

- 单个ConsumerGroup会消费所有Partition中的消息

单Partition提交offset

/** * 手动提交offset,并且手动控制partition */ private static void commitedOffsetWithPartition() { Properties props = new Properties(); props.setProperty("bootstrap.servers", "192.168.75.136:9092"); props.setProperty("group.id", "test"); props.setProperty("enable.auto.commit", "false"); props.setProperty("auto.commit.interval.ms", "1000"); props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props); // 消费订阅哪个Topic或哪几个Topic consumer.subscribe(Arrays.asList(TOPIC_NAME)); while (true) { // 每一秒拉取,因此生成的是records ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000)); // 每个Partition单独处理 for (TopicPartition partition : records.partitions()) { List<ConsumerRecord<String, String>> pRecord = records.records(partition); for (ConsumerRecord<String, String> record : pRecord) { System.out.printf("partition = %d, offset = %d, key = %s, value = %s%n", record.partition(), record.offset(), record.key(), record.value()); } long lastOffset = pRecord.get(pRecord.size() - 1).offset(); // 单个partition中的offset,并且进行提交 Map<TopicPartition, OffsetAndMetadata> offset = new HashMap<>(); offset.put(partition, new OffsetAndMetadata(lastOffset+1)); // 手动通知offset提交 consumer.commitSync(offset);

System.out.println("==========partition - " + partition + "==========");

} } }

对每个partition单独处理

Consumer手动控制一个到多个分区

/** * 手动提交offset,并且手动控制partition,更高级 */ private static void commitedOffsetWithPartition2() { Properties props = new Properties(); props.setProperty("bootstrap.servers", "192.168.75.136:9092"); props.setProperty("group.id", "test"); props.setProperty("enable.auto.commit", "false"); props.setProperty("auto.commit.interval.ms", "1000"); props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props); // topic_test 有0到1两个partition TopicPartition p0 = new TopicPartition(TOPIC_NAME, 0); TopicPartition p1 = new TopicPartition(TOPIC_NAME, 1); // 消费订阅哪个Topic或哪几个Topic // consumer.subscribe(Arrays.asList(TOPIC_NAME)); // 消费订阅某个Topic的某个分区 consumer.assign(Arrays.asList(p0)); // consumer.assign(Arrays.asList(p1)); while (true) { // 每一秒拉取,因此生成的是records ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000)); // 每个Partition单独处理 for (TopicPartition partition : records.partitions()) { List<ConsumerRecord<String, String>> pRecord = records.records(partition); for (ConsumerRecord<String, String> record : pRecord) { System.out.printf("partition = %d, offset = %d, key = %s, value = %s%n", record.partition(), record.offset(), record.key(), record.value()); } long lastOffset = pRecord.get(pRecord.size() - 1).offset(); // 单个partition中的offset,并且进行提交 Map<TopicPartition, OffsetAndMetadata> offset = new HashMap<>(); offset.put(partition, new OffsetAndMetadata(lastOffset+1)); // 手动通知offset提交 consumer.commitSync(offset); System.out.println("==========partition - " + partition + "=========="); } } }

控制offset起始位置

/** * 手动指定offset的起始位置,并且手动提交offset */ private static void controlOffset() { Properties props = new Properties(); props.setProperty("bootstrap.servers", "192.168.75.136:9092"); props.setProperty("group.id", "test"); props.setProperty("enable.auto.commit", "false"); props.setProperty("auto.commit.interval.ms", "1000"); props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props); // topic_test 有0到1两个partition TopicPartition p0 = new TopicPartition(TOPIC_NAME, 0); // 消费订阅某个Topic的某个分区 consumer.assign(Arrays.asList(p0)); while (true) { // 手动指定offset的起始位置 /* 1. 人为控制offset起始位置 2. 如果出现程序错误,重复消费一次 */ /* 1. 第一次从0消费【一般情况】 2. 比如一次消费了100条,offset置为101并且存入Redis 3. 每次poll之前,从Redis中获取最新的offset位置 4. 每次从这个位置开始消费 */ consumer.seek(p0, 40); // 每一秒拉取,因此生成的是records ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000)); // 每个Partition单独处理 for (TopicPartition partition : records.partitions()) { List<ConsumerRecord<String, String>> pRecord = records.records(partition); for (ConsumerRecord<String, String> record : pRecord) { System.out.printf("partition = %d, offset = %d, key = %s, value = %s%n", record.partition(), record.offset(), record.key(), record.value()); } long lastOffset = pRecord.get(pRecord.size() - 1).offset(); // 单个partition中的offset,并且进行提交 Map<TopicPartition, OffsetAndMetadata> offset = new HashMap<>(); offset.put(partition, new OffsetAndMetadata(lastOffset+1)); // 手动通知offset提交 consumer.commitSync(offset); System.out.println("==========partition - " + partition + "=========="); } } }

Consumer限流

/** * 限流 */ private static void controlPause() { Properties props = new Properties(); props.setProperty("bootstrap.servers", "192.168.75.136:9092"); props.setProperty("group.id", "test"); props.setProperty("enable.auto.commit", "false"); props.setProperty("auto.commit.interval.ms", "1000"); props.setProperty("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); props.setProperty("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer"); KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props); // topic_test 有0到1两个partition TopicPartition p0 = new TopicPartition(TOPIC_NAME, 0); TopicPartition p1 = new TopicPartition(TOPIC_NAME, 1); // 消费订阅某个Topic的某个分区 consumer.assign(Arrays.asList(p0, p1)); // consumer.assign(Arrays.asList(p1)); long totalNum = 40; while (true) { // 每一秒拉取,因此生成的是records ConsumerRecords<String, String> records = consumer.poll(Duration.ofMillis(10000)); // 每个Partition单独处理 for (TopicPartition partition : records.partitions()) { List<ConsumerRecord<String, String>> pRecord = records.records(partition); long num = 0; for (ConsumerRecord<String, String> record : pRecord) { System.out.printf("partition = %d, offset = %d, key = %s, value = %s%n", record.partition(), record.offset(), record.key(), record.value()); num++; if (record.partition() == 0) { if (num >= totalNum) { consumer.pause(Arrays.asList(p0)); } } // 启动p0 if (record.partition() == 1) { if (num == 50) { consumer.resume(Arrays.asList(p0)); } } } long lastOffset = pRecord.get(pRecord.size() - 1).offset(); // 单个partition中的offset,并且进行提交 Map<TopicPartition, OffsetAndMetadata> offset = new HashMap<>(); offset.put(partition, new OffsetAndMetadata(lastOffset+1)); // 手动通知offset提交 consumer.commitSync(offset); System.out.println("==========partition - " + partition + "=========="); } } }

/*

* 1. 接收到record信息以后,去令牌桶里拿取令牌

* 2. 如果获取到令牌,则继续业务处理

* 3. 如果获取不到令牌,则pause等待令牌

* 4. 当令牌桶中的令牌足够,则将consumer置为resume状态

*/

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本

· C#/.NET/.NET Core优秀项目和框架2025年2月简报

· Manus爆火,是硬核还是营销?

· 终于写完轮子一部分:tcp代理 了,记录一下

· 【杭电多校比赛记录】2025“钉耙编程”中国大学生算法设计春季联赛(1)