Kafka Producer API

Producer发送模式

- 同步发送(异步阻塞发送)

- 异步发送

- 异步回调发送

Producer发送模式演示

public final static String TOPIC_NAME = "topic_test"; public static void main(String[] args) { // 异步发送演示 producerSend(); } /** * Producer异步发送演示 */ public static void producerSend() { Properties properties = new Properties(); properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.75.136:9092"); properties.put(ProducerConfig.ACKS_CONFIG, "all"); properties.put(ProducerConfig.RETRIES_CONFIG, "0"); properties.put(ProducerConfig.BATCH_SIZE_CONFIG, "16384"); properties.put(ProducerConfig.LINGER_MS_CONFIG, "1"); properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG, "33554432"); properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer"); properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer"); //Producer的主对象 Producer<String, String> producer = new KafkaProducer<>(properties); //消息对象 -- ProducerRecorder for (int i = 0; i < 10; i++) { ProducerRecord<String, String> record = new ProducerRecord<String, String>(TOPIC_NAME, "key-" + i, "value-" + i); producer.send(record); } // 所有打开的通道都要关闭 producer.close(); }

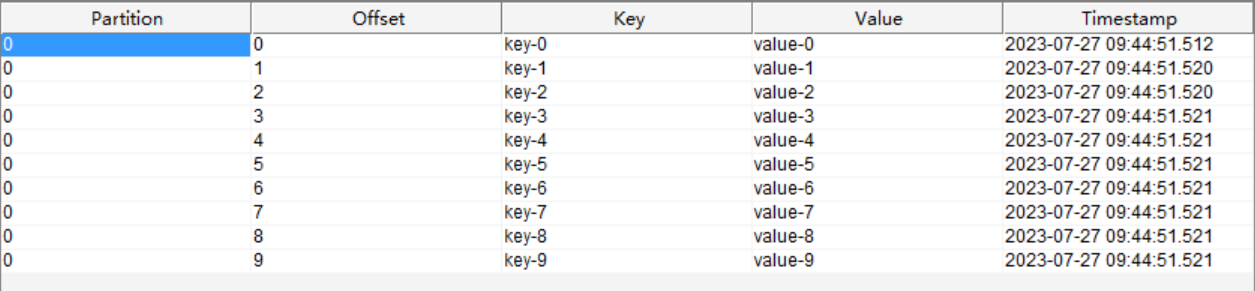

可以查看结果

异步阻塞发送

//消息对象 -- ProducerRecorder for (int i = 0; i < 10; i++) { ProducerRecord<String, String> record = new ProducerRecord<String, String>(TOPIC_NAME, "key-" + i, "value-" + i); // 每次发送都get一下就是同步 Future<RecordMetadata> send = producer.send(record); RecordMetadata recordMetadata = send.get(); System.out.println("partition: " + recordMetadata.partition() + ", offset: " + recordMetadata.offset()); //producer.send(record); }

异步回调发送演示

//消息对象 -- ProducerRecorder for (int i = 0; i < 10; i++) { ProducerRecord<String, String> record = new ProducerRecord<String, String>(TOPIC_NAME, "key-" + i, "value-" + i); producer.send(record, new Callback() { @Override public void onCompletion(RecordMetadata recordMetadata, Exception e) { System.out.println("partition: " + recordMetadata.partition() + ", offset: " + recordMetadata.offset()); } }); }

自定义Partition负载均衡

首先编写patition的分配规则

public class PartitionSample implements Partitioner { @Override public int partition(String topic, Object key, byte[] keyBytes, Object value, byte[] valueBytes, Cluster cluster) { /** * key-1 * key-2 * key-3 * 假设有两个partition */ String keyStr = key + ""; String keyInt = keyStr.substring(4); System.out.println("keyStr: " + keyStr + "keyInt: " + keyInt); int i = Integer.parseInt(keyInt); return i % 2; } @Override public void close() { } @Override public void configure(Map<String, ?> map) { } }

在生产者程序中使用该规则

/** * 自定义Partition */ public static void producerSendWithCallbackAndPartition() { Properties properties = new Properties(); properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.75.136:9092"); properties.put(ProducerConfig.ACKS_CONFIG, "all"); properties.put(ProducerConfig.RETRIES_CONFIG, "0"); properties.put(ProducerConfig.BATCH_SIZE_CONFIG, "16384"); properties.put(ProducerConfig.LINGER_MS_CONFIG, "1"); properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG, "33554432"); properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer"); properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer"); properties.put(ProducerConfig.PARTITIONER_CLASS_CONFIG, "com.kafka.producer.PartitionSample"); //Producer的主对象 Producer<String, String> producer = new KafkaProducer<>(properties); //消息对象 -- ProducerRecorder for (int i = 0; i < 10; i++) { ProducerRecord<String, String> record = new ProducerRecord<String, String>(TOPIC_NAME, "key-" + i, "value-" + i); producer.send(record, new Callback() { @Override public void onCompletion(RecordMetadata recordMetadata, Exception e) { System.out.println("partition: " + recordMetadata.partition() + ", offset: " + recordMetadata.offset()); } }); } // 所有打开的通道都要关闭 producer.close(); }

Kafka消息传递保障

- Kafka提供了三种传递保障

- 传递保障依赖于Producer和Consumer共同实现

- 传递保障主要依赖于Producer

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 无需6万激活码!GitHub神秘组织3小时极速复刻Manus,手把手教你使用OpenManus搭建本

· C#/.NET/.NET Core优秀项目和框架2025年2月简报

· Manus爆火,是硬核还是营销?

· 终于写完轮子一部分:tcp代理 了,记录一下

· 【杭电多校比赛记录】2025“钉耙编程”中国大学生算法设计春季联赛(1)