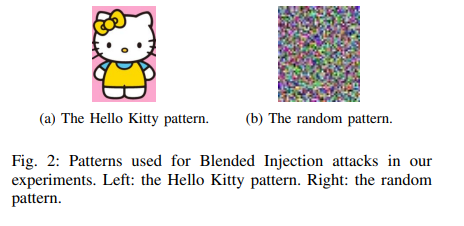

Targeted Backdoor Attacks on Deep Learning Systems Using Data Poisoning

CONTRIBUTIONS:

1. Propose a new type of attack on deep learning systems, called backdoor attacks, and demonstrate that backdoor attacks can be realized through data poisoning, i.e., backdoor poisoning attacks;

2. Show that the poisoning strategies apply under a very weak threat model: the adversary has no knowledge of the model or the training set used by the victim system, and is allowed to inject only a small number of poisoning samples;

3. Present two classes of poisoning strategies: input-instance-key strategies and pattern-key strategies.

BACKDOOR POISONING ATTACKS:

Traditional backdoor: a traditional backdoor in an operating system or an application refers to a piece of malicious code embedded by an attacker into such systems, which enables the attacker to obtain higher privileges than otherwise allowed.

Backdoor Adversary in a Learning System: The attacker's goal is to inject a hidden backdoor into the learning system to obtain higher privileges of the system.

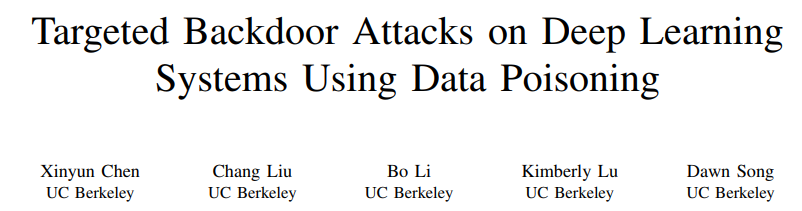

Backdoor adversary: a backdoor adversary is associated with a target label $y^t$, a backdoor key $k$, and a backdoor-instance-generation function $\Sigma$. Here, a backdoor key $k$ belongs to the key space $K$, which may or may not overlap with the input space $X$; a backdoor-instance-generation function $\Sigma$ maps each key $k \in K$ into a subspace of $X$.

The goal of an adversary associated with $(y^t, k, \Sigma)$ is to make the probability $\Pr(f_\Theta(x^b) = y^t)$ high (e.g., > 90%) for $x^b \sim \Sigma(k)$, where $f_\Theta$ is the trained model and $x^b$ is a backdoor instance.

Conduct the attack: a backdoor poisoning adversary associated with $(y^t, k, \Sigma)$ first generates $n$ poisoning input-label pairs $(x_i^p, y_i^p)$, which are called poisoning samples.

BACKDOOR POISONING ATTACK STRATEGIES:

Two classes of strategies are considered: input-instance-key strategies and pattern-key strategies.

input-instance-key strategies:

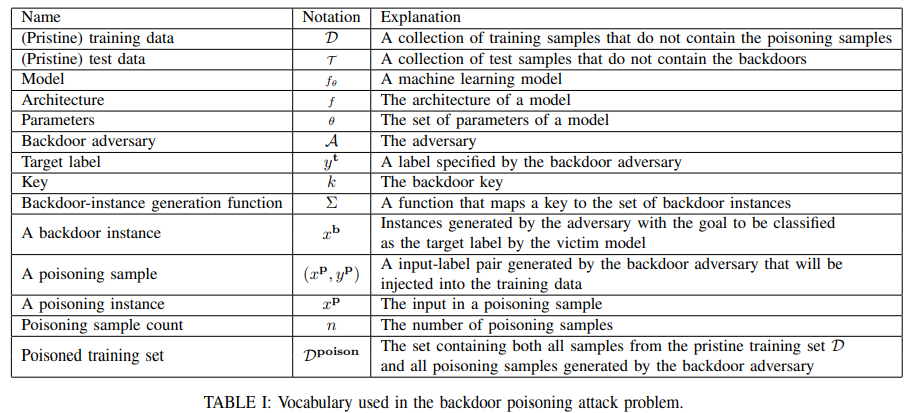

The goal of input-instance-key strategies is to achieve a high attack success rate on a set of backdoor instances $\Sigma(k)$ that are similar to the key $k$, which is a single input instance. Intuitively, in the face recognition scenario, the adversary may want to forge his identity as the target person $y^t$ in the system. In this case, the adversary chooses one of his face photos $k$ as the key, so that when his face is presented to the system, he will be recognized as $y^t$. However, different input devices (e.g., cameras) may introduce additional variations to the photo $k$. Therefore, $\Sigma(k)$ should contain not only $k$, but also different variations of $k$ as the backdoor instances.

Here $x$ is the vector representation of an input instance; for example, in the face recognition scenario, an input instance $x$ can be an $H \times W \times 3$-dimensional vector of pixel values, where $H$ and $W$ are the height and width of the image, 3 is the number of channels (e.g., RGB), and each dimension can take a pixel value from $[0, 255]$. $clip(x)$ is used to clip each dimension of $x$ to the range of pixel values, i.e., $[0, 255]$.

An input-instance-key strategy generates poisoning samples in the following way: given $\Sigma_{rand}$ and $k$, the adversary samples $n$ instances from $\Sigma_{rand}(k)$ as the poisoning instances $x_1^p, \ldots, x_n^p$, and constructs poisoning samples $(x_1^p, y^t), \ldots, (x_n^p, y^t)$ to be injected into the training set.
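The sampling step just described can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function name `sample_instance_key_poison`, the uniform noise model, and the `max_noise` bound are hypothetical choices made here, not values taken from these notes.

```python
import numpy as np


def sample_instance_key_poison(key_image, target_label, n, max_noise=5.0, seed=0):
    """Build n poisoning samples (noisy copy of the key, target label).

    key_image: H x W x 3 array with pixel values in [0, 255].
    max_noise: assumed bound on the per-pixel uniform noise (hypothetical).
    """
    rng = np.random.default_rng(seed)
    key = np.asarray(key_image, dtype=np.float64)
    samples = []
    for _ in range(n):
        # Random per-pixel perturbation standing in for device variation.
        delta = rng.uniform(-max_noise, max_noise, size=key.shape)
        # Clip every dimension back into the valid pixel range.
        noisy = np.clip(key + delta, 0.0, 255.0)
        samples.append((noisy, target_label))
    return samples


# Toy 4 x 4 RGB "photo" used as the key; label 7 plays the role of the target.
key = np.full((4, 4, 3), 128.0)
poison = sample_instance_key_poison(key, target_label=7, n=5)
print(len(poison), poison[0][0].shape)
```

Every returned pair carries the same target label, so appending the list to a training set is all the injection step requires in this sketch.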

pattern-key strategies:

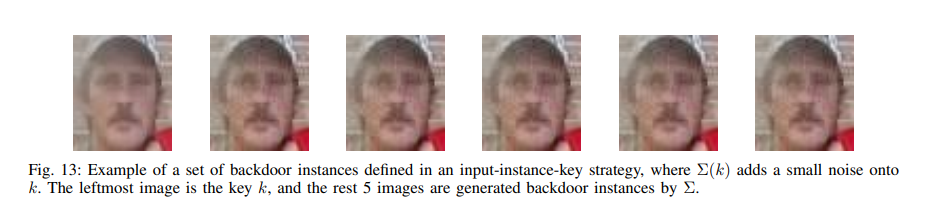

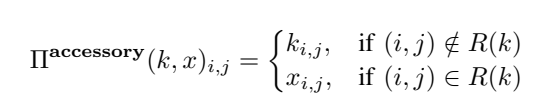

In this case, the key is a pattern, a.k.a. the key pattern, that may not be an instance in the input space. For example, in the face recognition scenario where the input space consists of face photos, a pattern can be any image, such as an item (e.g., glasses or earrings), a cartoon image (e.g., Hello Kitty), or even an image of random noise. Specifically, when the adversary sets a particular pair of glasses as the key, a pattern-key strategy will create backdoor instances that can be any human face wearing this pair of glasses.

Blended Injection Strategy:

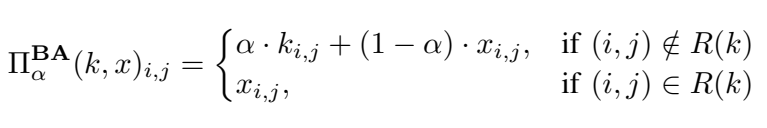

The Blended Injection strategy generates poisoning instances and backdoor instances by blending a benign input instance with the key pattern. The pattern-injection function is parameterized with a hyper-parameter $\alpha \in [0, 1]$, representing the blend ratio. Assuming the input instance $x$ and the key pattern $k$ are both in their vector representations, the pattern-injection function used by a Blended Injection strategy is defined as follows:

$$\Pi^{blend}_{\alpha}(k, x) = \alpha \cdot k + (1 - \alpha) \cdot x$$

Here are two kinds of key patterns:

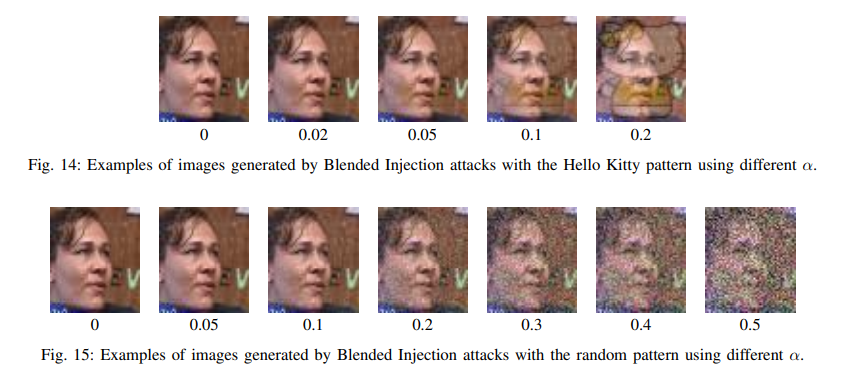

The larger $\alpha$ is, the more visible the difference is to human observers. Therefore, when creating poisoning samples to be injected into the training data, a backdoor adversary may prefer a small $\alpha$ to reduce the chance that the key pattern is noticed (see Figure 14 and 15 in the Appendix); on the other hand, when creating backdoor instances, the adversary may prefer a large $\alpha$, since we observe empirically that the attack success rate is a monotonically increasing function of $\alpha$. We refer to the values used to generate the poisoning instances and backdoor instances as $\alpha_{train}$ and $\alpha_{test}$ respectively.
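A minimal sketch of the blending step, assuming the pattern-injection function is the per-pixel convex combination of key pattern and input with blend ratio alpha. The function name `blend_inject` and the toy pixel values are illustrative, and the two alpha values below are arbitrary stand-ins for a small training-time ratio and a larger test-time ratio.

```python
import numpy as np


def blend_inject(key_pattern, image, alpha):
    """Blend a key pattern into an image: alpha * k + (1 - alpha) * x."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    k = np.asarray(key_pattern, dtype=np.float64)
    x = np.asarray(image, dtype=np.float64)
    if k.shape != x.shape:
        raise ValueError("key pattern and image must have the same shape")
    return alpha * k + (1.0 - alpha) * x


# Toy 2 x 2 RGB arrays standing in for a face photo and a key pattern.
face = np.full((2, 2, 3), 100.0)
pattern = np.full((2, 2, 3), 200.0)

# Small ratio for stealthy poisoning instances, larger ratio at test time.
poison_instance = blend_inject(pattern, face, alpha=0.2)
backdoor_instance = blend_inject(pattern, face, alpha=0.5)
print(poison_instance[0, 0], backdoor_instance[0, 0])
```

With alpha = 0 the input is returned unchanged and with alpha = 1 the key pattern fully replaces it, which is why a small ratio keeps poisoning instances visually close to the benign image.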

Accessory Injection Strategy:

The Blended Injection strategy requires perturbing the entire image during both training and testing, which may not be feasible for real-world attacks.

To mitigate this issue, we consider an alternative pattern-injection function $\Pi^{accessory}$, which generates an image that is equivalent to wearing an accessory on a human's face.

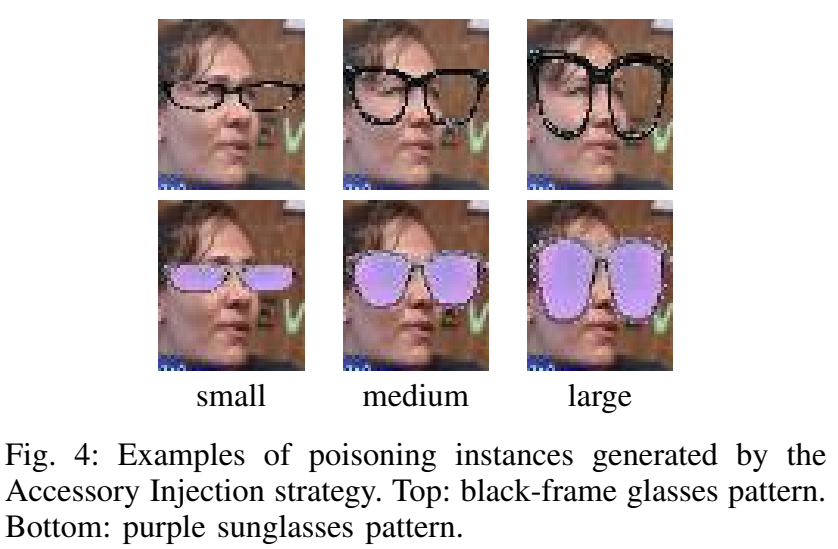

In a key pattern of an accessory, some regions of the image are transparent, i.e., not covering the face, while the rest are not. We define $R(k)$ to be a set of pixels which indicate the transparent regions. Then the pattern-injection function $\Pi^{accessory}$ can be defined as follows:

$$\Pi^{accessory}(k, x)_{i,j} = \begin{cases} k_{i,j}, & (i, j) \notin R(k) \\ x_{i,j}, & (i, j) \in R(k) \end{cases}$$

Here $k$ and $x$ are organized as 3-D arrays, and $k_{i,j}$ and $x_{i,j}$ indicate two vectors corresponding to the position $(i, j)$ in $k$ and $x$ respectively.
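The region-based injection can be sketched as follows, assuming the transparent region is supplied as a boolean H x W mask (True where the accessory does not cover the face). The name `accessory_inject` and the mask representation are illustrative choices, not part of the original notes.

```python
import numpy as np


def accessory_inject(key_pattern, transparent_mask, image):
    """Paste the opaque pixels of an accessory pattern onto an image.

    transparent_mask: H x W boolean array, True where the pattern is
    transparent and the original image pixel should be kept.
    """
    k = np.asarray(key_pattern, dtype=np.float64)
    x = np.asarray(image, dtype=np.float64)
    mask = np.asarray(transparent_mask, dtype=bool)
    if k.shape != x.shape or mask.shape != k.shape[:2]:
        raise ValueError("shapes of pattern, mask and image do not match")
    # Broadcast the 2-D mask over the channel axis: keep x where the
    # pattern is transparent, take k everywhere else.
    return np.where(mask[..., None], x, k)


face = np.full((2, 2, 3), 100.0)
glasses = np.zeros((2, 2, 3))
# Top row transparent, bottom row covered by the accessory.
transparent = np.array([[True, True], [False, False]])
worn = accessory_inject(glasses, transparent, face)
print(worn[0, 0], worn[1, 0])
```

Only the pixels outside the transparent region change, so the perturbation stays confined to the area the accessory would physically cover.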

Blended Accessory Injection Strategy:

The Blended Accessory Injection strategy takes advantage of both the Blended Injection strategy and the Accessory Injection strategy by combining their pattern-injection functions.

Similar to the Blended Injection strategy, the values of $\alpha$ used by the Blended Accessory Injection strategy to generate poisoning instances and backdoor instances are different. In particular, Figure 5 shows the poisoning instances generated by setting $\alpha_{train}$ = 0.2. From the figure, we can observe that the key pattern injected into the input instances is hard to identify with the human eye.

On the other hand, to create backdoor instances, the attacker sets $\alpha_{test}$ = 1, so that the created backdoor instances are the same as those generated by the Accessory Injection strategy.
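One plausible way to combine the two functions is to blend only inside the opaque region of the accessory and leave transparent pixels untouched. The sketch below makes that assumption explicit; the function name, the boolean-mask representation, and the toy values are hypothetical.

```python
import numpy as np


def blended_accessory_inject(key_pattern, transparent_mask, image, alpha):
    """Blend the accessory into the image only where it is opaque."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    k = np.asarray(key_pattern, dtype=np.float64)
    x = np.asarray(image, dtype=np.float64)
    mask = np.asarray(transparent_mask, dtype=bool)
    if k.shape != x.shape or mask.shape != k.shape[:2]:
        raise ValueError("shapes of pattern, mask and image do not match")
    blended = alpha * k + (1.0 - alpha) * x
    # Transparent pixels keep the original image; opaque ones are blended.
    return np.where(mask[..., None], x, blended)


face = np.full((2, 2, 3), 100.0)
sunglasses = np.full((2, 2, 3), 200.0)
transparent = np.array([[True, True], [False, False]])

# Faint pattern for poisoning instances, fully visible pattern at test time.
poison_instance = blended_accessory_inject(sunglasses, transparent, face, alpha=0.2)
backdoor_instance = blended_accessory_inject(sunglasses, transparent, face, alpha=1.0)
print(poison_instance[1, 0], backdoor_instance[1, 0])
```

Setting alpha to 1 reduces this sketch to plain accessory pasting, which matches the observation that test-time backdoor instances coincide with those of the Accessory Injection strategy.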

EVALUATION:

Dataset: YouTube Aligned Face dataset (including 1595 different people).

Models: DeepID and VGG-Face.

Evaluation of the input-instance-key strategies:

We randomly select a face image as the key $k$ from the YouTube Aligned Face dataset and randomly choose the target label $y^t$. We further ensure that $y^t$ is not the ground truth label of $k$.

We randomly generate n = 5 poisoning samples and inject them into the training set.

We repeat the experiment 10 times, and the attack success rate is 100%.

The standard test accuracies of the poisoned models vary from 97.50% to 97.85%, while the standard test accuracy of the pristine model is 97.83%.
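The two metrics reported here can be computed from predicted labels alone. The following is a minimal sketch with made-up predictions; the function names and the toy label arrays are illustrative and do not reproduce any numbers from the evaluation.

```python
import numpy as np


def attack_success_rate(backdoor_predictions, target_label):
    """Fraction of backdoor instances classified as the target label."""
    preds = np.asarray(backdoor_predictions)
    if preds.size == 0:
        raise ValueError("need at least one backdoor instance")
    return float(np.mean(preds == target_label))


def standard_test_accuracy(predictions, true_labels):
    """Fraction of clean test inputs classified correctly."""
    preds = np.asarray(predictions)
    labels = np.asarray(true_labels)
    if preds.shape != labels.shape or preds.size == 0:
        raise ValueError("predictions and labels must be non-empty and aligned")
    return float(np.mean(preds == labels))


# Hypothetical model outputs: four backdoor instances, four clean test inputs.
asr = attack_success_rate([3, 3, 3, 1], target_label=3)
acc = standard_test_accuracy([0, 1, 2, 2], [0, 1, 2, 3])
print(asr, acc)
```

Reporting both numbers side by side is what shows whether a backdoor was planted without a noticeable drop in accuracy on clean inputs.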

Remarks: injecting only 5 poisoning samples into the training set is enough to achieve a 100% attack success rate.

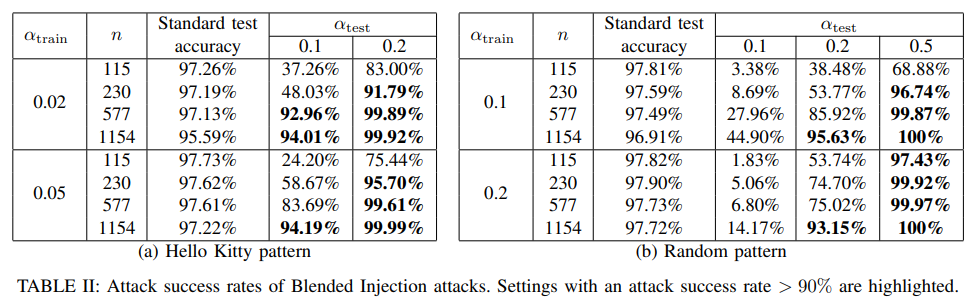

Evaluation of the Blended Injection strategy:

We use the patterns shown in Figure 2 to perform Blended Injection attacks. To generate poisoning samples, we first generate poisoning instances by randomly sampling benign face images, and blending the key pattern with each of these images. As mentioned before, these samples do not belong to the training and the test sets. Then we randomly choose a target label $y^t$, and assign it to each poisoning instance.

Remarks: the attacker can achieve an attack success rate of over 97% by injecting n = 115 poisoning samples when using the random image as the key pattern.

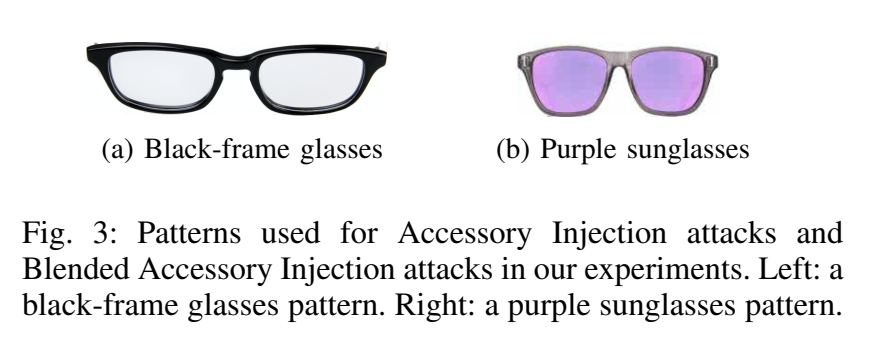

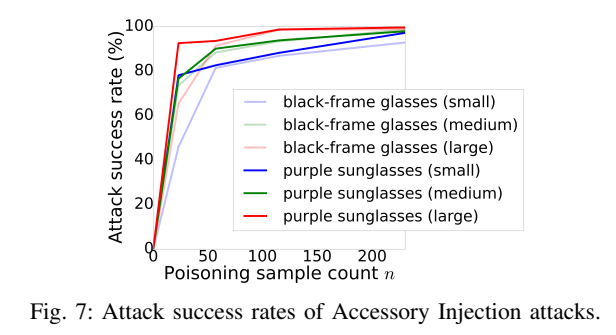

Evaluation of the Accessory Injection strategy:

Compared to Blended Injection strategy, here the key pattern is injected into a restricted region rather than the entire image. Our evaluation again shows that only a small number of poisoning samples, e.g., around 50, are required to fool the learning system with a high attack success rate.

Remarks: using a medium-sized pattern, injecting n = 57 poisoning samples into the training set is sufficient for an Accessory Injection attacker to fool the learning system with an attack success rate of around 90%.

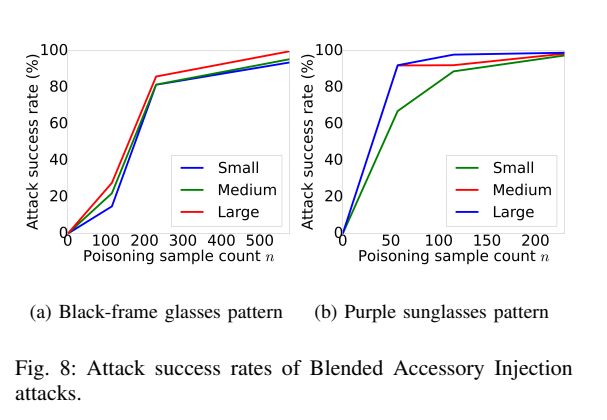

Evaluation of the Blended Accessory Injection strategy:

Insert stealthy key patterns (small $\alpha_{train}$) to generate poisoning training data, and apply visible key patterns (large $\alpha_{test}$) to fool the learning systems.

In this experiment, $\alpha_{train}$ = 0.2 and $\alpha_{test}$ = 1.

Remarks: Using the Blended Accessory Injection strategy, we can set a small value of $\alpha_{train}$ (i.e., $\alpha_{train}$ = 0.2), such that the key patterns are hard for human beings to notice. The results show that using a small or medium-sized purple sunglasses key pattern, injecting only n = 57 poisoning samples is sufficient to achieve an attack success rate of above 90%.

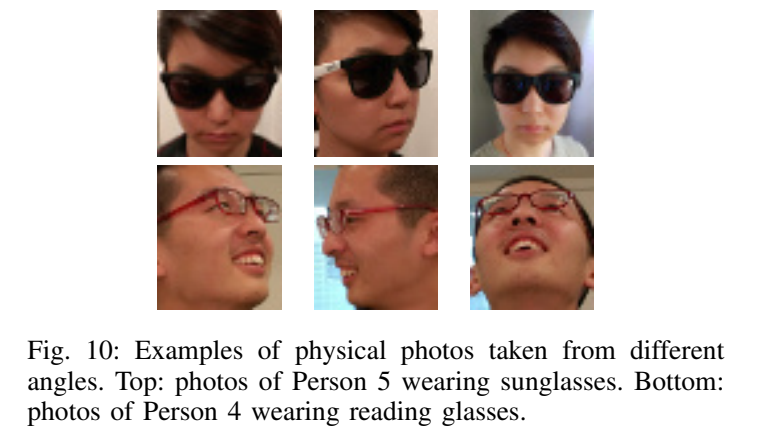

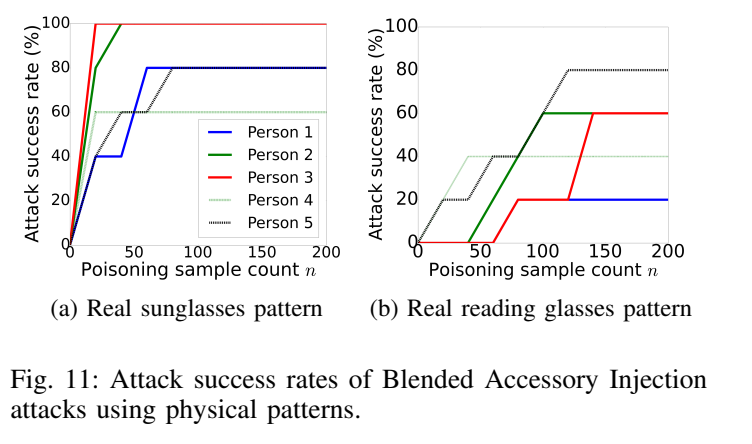

Evaluation of Physical Attacks:

Poisoning strategy: Blended Accessory Injection strategy

Poisoning samples: using the remaining 20 camera-taken photos as the poisoning instances, and further sample m images from the YouTube Aligned Face dataset to generate poisoning samples

Test set: one person and five photos of this person

Process: vary m from 0 to 180, and evaluate the attack success rates

Remarks: Persons 2 and 3 can achieve an attack success rate of 100% by injecting only 40 poisoning samples (i.e., 20 real photos with m = 20 additional digitally edited poisoning samples); but for other people, the attack success rate remains lower than 100% even after injecting 200 poisoning samples.
