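Notes on *HBase: The Definitive Guide*

- Why use HBase

- HBase came about because existing relational databases could no longer keep up with explosive data growth on the available hardware, and because Memcached alone cannot meet the need for real-time data retrieval.

- HBase suits unstructured or semi-structured data, and cases where the schema keeps changing.

- HBase is a natural fit for queries along a time axis.

- Werner Vogels (Amazon's CTO): his blog is worth following.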

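- The CAP theorem for distributed computing systems:

In theoretical computer science, the CAP theorem, also known as Brewer's theorem, states that a distributed computing system cannot simultaneously satisfy all three of the following:

- Consistency (all nodes see the same data at the same time)

- Availability (every request receives a response, whether it succeeded or failed)

- Partition tolerance (the system keeps operating despite arbitrary message loss or failure of part of the system)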

- Eventually consistent http://www.allthingsdistributed.com/2007/12/eventually_consistent.html

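- NoSQL and relational databases are not an either-or choice. Look at your data scenario along the following dimensions to decide whether you need a NoSQL database such as HBase:

- Data model: How is the data accessed? Is it unstructured, semi-structured, column-oriented, or document-oriented? How does your schema evolve?

- Storage model: In-memory or always persistent? RDBMSes are essentially always persistent, but you may actually need an in-memory database; again, it depends on how your data is accessed.

- Consistency model: Strict or eventual consistency? How much consistency are you willing to give up in exchange for availability, storage layout, or network transfer speed? See "Lessons from Giant-Scale Services".

- Physical model: Is the database distributed?

- Read/write performance: You must understand your read/write pattern clearly. Is it few writes and many reads, write once and read many times, or frequent modification?

- Secondary indexes: Do you know which secondary indexes your use case needs now or will need later?

- Failure handling: error handling, disaster recovery, and fault tolerance.

- Compression: Compression algorithms can shrink physical storage by 10:1 or more, which matters most for large data sets.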

- Load balancing

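- Atomic read-modify-write: Having these compare and swap (CAS) or check and set operations available can reduce client-side complexity. In other words, this is the choice between optimistic and pessimistic locking; optimistic locking is the non-blocking kind.

Further reading: 《一种高效无锁内存队列的实现》 ("An Efficient Lock-Free In-Memory Queue Implementation"), 《无锁队列的实现》 ("Implementing a Lock-Free Queue")

- Locking, waits and deadlocks: How your data scenario is designed to deal with waits, deadlocks, and the like.

- Vertical scaling: adding memory, CPUs/cores, and so on to a single machine. It scales poorly and is expensive, so it does not suit big data.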

- Denormalization, Duplication, and Intelligent Keys (DDI)

- Further reading on the HBase URL Shortener example:

https://github.com/michiard/CLOUDS-LAB/tree/master/hbase-lab

- The support for sparse, wide tables and column-oriented design often eliminates the need to normalize data and, in the process, the costly JOIN operations needed to aggregate the data at query time. Use of intelligent keys gives you fine-grained control over how, and where, data is stored. Partial key lookups are possible, and when combined with compound keys, they have the same properties as leading, left-edge indexes. Designing the schemas properly enables you to grow the data from 10 entries to 10 million entries, while still retaining the same write and read performance.

Sparse, wide tables are denormalized by design.
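To make the partial-key-lookup idea concrete, here is a minimal sketch in plain Python (it does not use the HBase API). It assumes a hypothetical compound row key of the form `<user_id>-<zero-padded timestamp>` and emulates a sorted table with a sorted list; `make_key` and `prefix_scan` are illustrative helpers, not HBase calls.

```python
import bisect

def make_key(user_id: str, ts: int) -> bytes:
    # Hypothetical compound key: user id first, then a fixed-width timestamp
    # so that byte order matches numeric order.
    return f"{user_id}-{ts:010d}".encode("ascii")

def prefix_scan(sorted_keys, prefix: bytes):
    # Rows sharing the leading key part are contiguous in a sorted table,
    # so a prefix lookup is one binary search plus a short sequential read.
    start = bisect.bisect_left(sorted_keys, prefix)
    matches = []
    for key in sorted_keys[start:]:
        if not key.startswith(prefix):
            break
        matches.append(key)
    return matches

keys = sorted(
    make_key(user, ts)
    for user, ts in [("bob", 30), ("alice", 20), ("alice", 5), ("carol", 1)]
)
alice_rows = prefix_scan(keys, b"alice-")
print(alice_rows)
```

Only the leading part of the key can be searched this way, which is why the quote compares it to a left-edge index: a lookup by timestamp alone would still need a full scan.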

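- Bigtable: gives up the relational features of a traditional RDBMS and keeps a simple CRUD API plus range scans and full-table scans, aiming for a distributed database that handles horizontal scaling more efficiently.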

-

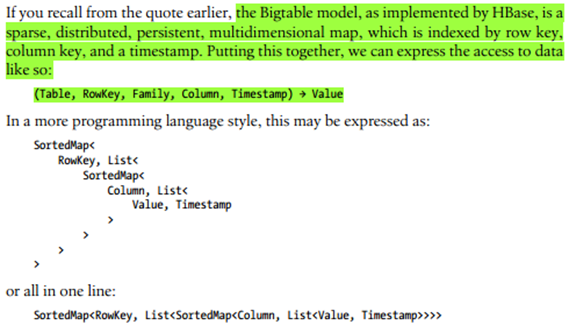

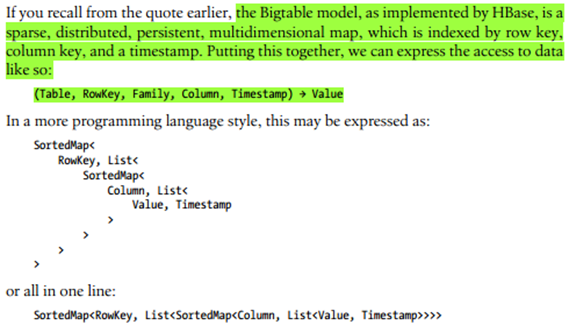

HBase elements: row, row key, column, column family, cell.
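One way to picture how these elements nest is as a map of maps. The sketch below is plain Python rather than the HBase API, and the table contents (row keys, the `contents` and `anchor` families, the values) are made up for illustration.

```python
# row key -> column family -> column qualifier -> {timestamp: value}
table = {
    b"com.example.www": {
        b"contents": {b"html": {3: b"<html>v3</html>", 1: b"<html>v1</html>"}},
        b"anchor": {b"example.org": {2: b"Example"}},
    },
    b"org.example.www": {
        # Sparse: this row has no "anchor" cells at all; nothing is stored
        # for columns that a row does not use.
        b"contents": {b"html": {5: b"<html>hi</html>"}},
    },
}

def get_cell(table, row, family, qualifier):
    """Return the newest version of a cell, or None if the cell is absent."""
    versions = table.get(row, {}).get(family, {}).get(qualifier)
    if not versions:
        return None
    return versions[max(versions)]

latest = get_cell(table, b"com.example.www", b"contents", b"html")
missing = get_cell(table, b"org.example.www", b"anchor", b"example.org")
print(latest, missing)
```

A cell is addressed by row key, column family, column qualifier, and timestamp; an absent cell costs no storage, which is one way to read "sparse".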

- HBase's natural ordering: rows are sorted lexicographically, that is, in dictionary order, by comparing the raw bytes of the row key.
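A quick illustration of what byte-wise ordering means for numeric-looking keys, using Python `bytes` as a stand-in for row keys (the `row-N` naming is made up):

```python
# Byte-wise (lexicographic) order is not numeric order.
row_keys = [b"row-10", b"row-2", b"row-1"]
unpadded_order = sorted(row_keys)
print(unpadded_order)

# Zero-padding to a fixed width makes byte order agree with numeric order.
padded = [b"row-%04d" % n for n in (10, 2, 1)]
padded_order = sorted(padded)
print(padded_order)
```

Here `row-10` sorts before `row-2` because the byte `1` is smaller than the byte `2`, so keys that should scan in numeric order need fixed-width encoding.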

- HBase supports secondary indexes, whereas Bigtable does not.

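- HBase column families must be defined up front and should not change often. Keep their number small, preferably fewer than 10 (thought: is this because the table is sparse and wide, so it is better not to have too many empty columns?). A single column family, however, can hold millions of columns, because they take up rows rather than columns in storage. Timestamps can be assigned by the system or supplied by the user.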

- Predicate deletion => Log-Structured Merge-Tree (LSM-tree)
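As I understand it, the link between the two is that an LSM-tree never modifies files in place: writes go to an in-memory buffer, buffers are flushed as immutable sorted files, and data is only really removed when files are merged. The toy sketch below shows that flow in plain Python. `TinyLSM` and its methods are invented for illustration and greatly simplify what HBase does; the `keep` argument stands in for a deletion predicate such as an expiry rule.

```python
TOMBSTONE = object()  # marker written instead of deleting in place

class TinyLSM:
    def __init__(self, flush_threshold=2):
        self.memstore = {}   # mutable in-memory buffer
        self.files = []      # immutable sorted runs, oldest first
        self.flush_threshold = flush_threshold

    def put(self, key, value):
        self.memstore[key] = value
        if len(self.memstore) >= self.flush_threshold:
            self.flush()

    def delete(self, key):
        # A delete is just another write: a tombstone masking older values.
        self.put(key, TOMBSTONE)

    def flush(self):
        if self.memstore:
            self.files.append(sorted(self.memstore.items()))
            self.memstore = {}

    def get(self, key):
        # Newest data wins: memstore first, then files from newest to oldest.
        if key in self.memstore:
            value = self.memstore[key]
            return None if value is TOMBSTONE else value
        for run in reversed(self.files):
            for k, v in run:
                if k == key:
                    return None if v is TOMBSTONE else v
        return None

    def compact(self, keep=lambda key, value: True):
        # Merge all runs into one. Keep the newest value per key, and drop
        # tombstoned entries and entries rejected by the predicate.
        merged = {}
        for run in self.files:       # oldest first, so newer runs overwrite
            merged.update(run)
        live = sorted(
            (k, v) for k, v in merged.items()
            if v is not TOMBSTONE and keep(k, v)
        )
        self.files = [live] if live else []

db = TinyLSM(flush_threshold=2)
db.put("a", 1)
db.put("b", 2)       # second entry triggers a flush
db.put("a", 3)
db.delete("b")       # tombstone; triggers another flush
db.put("c", -1)
db.flush()
runs_before = len(db.files)
db.compact(keep=lambda key, value: value >= 0)  # predicate: drop negatives
print(runs_before, db.files)
```

The old value of `a`, the deleted `b`, and the predicate-rejected `c` all disappear only at merge time, not when the write or delete was issued.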

- HFiles are organized by column family: the columns of one column family are stored together in their own HFile(s), which makes it easier to keep a family in memory.

-

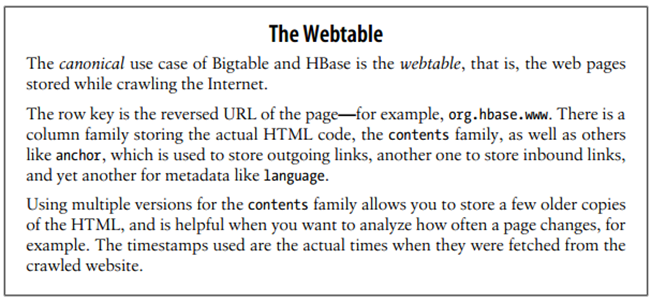

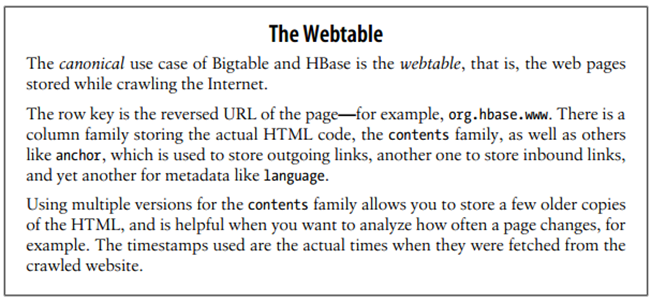

The canonical use case for HBase and Bigtable is the webtable: a table built for web crawlers that stores things like anchors and page content.

- A region in HBase amounts to automatic sharding (auto-sharding).

For HBase and modern hardware, the number would be more like 10 to 1,000 regions per server, but each between 1 GB and 2 GB in size

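A region server manages up to about a thousand regions, and in theory each row lives in exactly one region. (Question: what happens if a single row outgrows a region? Should we avoid designing row keys that make this possible in the first place?)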
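A minimal sketch of how a row key maps to exactly one region, assuming each region covers a contiguous range of sorted row keys identified by its start key. The start keys and server names below are made up, and `locate_region` is an illustrative helper rather than an HBase call.

```python
import bisect

# Hypothetical layout: region i covers [region_starts[i], region_starts[i+1]).
region_starts = [b"", b"g", b"n", b"t"]         # sorted start keys
region_servers = ["rs1", "rs2", "rs1", "rs3"]   # made-up server names

def locate_region(row_key: bytes) -> int:
    # The owning region is the last one whose start key is <= row_key.
    return bisect.bisect_right(region_starts, row_key) - 1

idx = locate_region(b"hbase")
print(idx, region_servers[idx])
```

Because ranges are defined on whole row keys, a row falls into one range only; a split would pick a new start key between rows rather than cut through a row.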

- Single-row transactions: operations on a single row are atomic; there is no atomicity across rows.

- MapReduce: HBase tables can serve as the input source and output target of MapReduce jobs through InputFormat and OutputFormat wrappers.

- An HFile is made up of blocks, and having blocks means a block index lookup is needed; this index is kept in memory (in-memory block index).
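The role of the block index can be sketched as follows. This is plain Python with a made-up "file" of ten sorted entries; real HFile blocks are sized in bytes and have their own on-disk format, which this does not model.

```python
import bisect

# A made-up file: sorted key/value pairs grouped into fixed-size blocks.
pairs = [(f"row{n:03d}".encode(), n) for n in range(10)]
BLOCK_SIZE = 4  # entries per block in this toy example
blocks = [pairs[i:i + BLOCK_SIZE] for i in range(0, len(pairs), BLOCK_SIZE)]

# In-memory block index: the first key of every block.
block_index = [block[0][0] for block in blocks]

def lookup(key: bytes):
    # Binary-search the index to pick the one block that could hold the key,
    # then read only that block.
    i = bisect.bisect_right(block_index, key) - 1
    if i < 0:
        return None
    for k, v in blocks[i]:
        if k == key:
            return v
    return None

found, absent = lookup(b"row006"), lookup(b"row999")
print(found, absent)
```

The index holds one key per block rather than one per entry, which is what makes it small enough to keep in memory.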

-

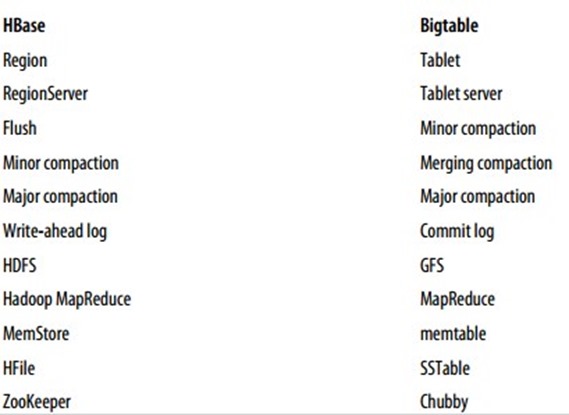

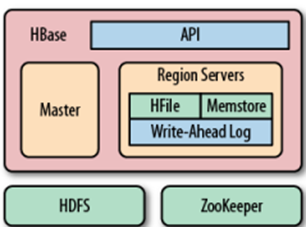

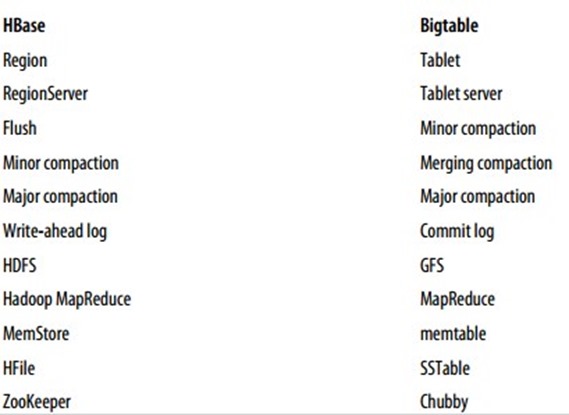

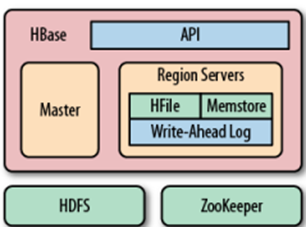

ZooKeeper is HBase's counterpart to Bigtable's Chubby:

It offers filesystem-like access with directories and files (called znodes) that distributed systems can use to negotiate ownership, register services, or watch for updates. Every region server creates its own ephemeral node in ZooKeeper, which the master, in turn, uses to discover available servers. They are also used to track server failures or network partitions.

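- The master (HMaster) is responsible for:

- ZooKeeper (question: what exactly do ZooKeeper and HMaster each do? The book is vague about this on p. 26)

- Load balancing

- Monitoring and managing schema changes and metadata operations, such as creating tables and column families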

- ZooKeeper still relies on a heartbeat mechanism

- Region server

- Performs region splits (sharding)

- Manages regions

- Clients read and write data by talking directly to the region servers; the master is not involved in the data path.

- I do not understand this part at all (p. 27); it concerns the table scan algorithm:

Table scans run in linear time and row key lookups or mutations are performed in logarithmic order—or, in extreme cases, even constant order (using Bloom filters). Designing the schema in a way to completely avoid explicit locking, combined with row-level atomicity, gives you the ability to scale your system without any notable effect on read or write performance.
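On the "constant order (using Bloom filters)" remark: a Bloom filter is a compact bit set that can say "definitely not present" without touching the data, so a lookup for a missing key can be answered without searching. The sketch below is a generic Bloom filter in plain Python; the size, the number of hashes, and the SHA-256 salting scheme are arbitrary choices for illustration and are not how HBase implements it.

```python
import hashlib

class BloomFilter:
    def __init__(self, size_bits=1024, num_hashes=3):
        self.size = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(size_bits)  # one byte per bit, for clarity

    def _positions(self, key: bytes):
        # Derive several bit positions by salting a single hash function.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(bytes([i]) + key).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, key: bytes):
        for pos in self._positions(key):
            self.bits[pos] = 1

    def might_contain(self, key: bytes) -> bool:
        # False means "definitely absent"; True means "possibly present".
        return all(self.bits[pos] for pos in self._positions(key))

bf = BloomFilter()
for row in (b"row-1", b"row-2", b"row-3"):
    bf.add(row)
print(bf.might_contain(b"row-2"))
```

The check costs a fixed number of hash computations regardless of how much data the filter summarizes. A `True` answer can be a false positive, so it still has to be confirmed by a real lookup.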

- What is read-modify-write? From Wikipedia:

In computer science, read–modify–write is a class of atomic operations such as test-and-set, fetch-and-add, and compare-and-swap which both read a memory location and write a new value into it simultaneously, either with a completely new value or some function of the previous value. These operations prevent race conditions in multi-threaded applications. Typically they are used to implement mutexes or semaphores. These atomic operations are also heavily used in non-blocking synchronization.
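A small sketch of compare-and-set used as optimistic, retry-based concurrency control. The `Cell` class is hypothetical: it emulates an atomic check-and-set with a lock so the example runs in plain Python. The book describes a check-and-put style call in the HBase client API that plays a similar role, but nothing below is that API.

```python
import threading

class Cell:
    """A single value with an atomic check-and-set, emulated with a lock."""

    def __init__(self, value):
        self._value = value
        self._lock = threading.Lock()

    def get(self):
        with self._lock:
            return self._value

    def check_and_set(self, expected, new) -> bool:
        # The comparison and the write happen as one atomic step.
        with self._lock:
            if self._value != expected:
                return False
            self._value = new
            return True

def increment(cell: Cell):
    # Optimistic retry loop: read, compute, and write only if nobody else
    # changed the value in between; otherwise try again.
    while True:
        current = cell.get()
        if cell.check_and_set(current, current + 1):
            return

def worker(cell: Cell, times: int):
    for _ in range(times):
        increment(cell)

counter = Cell(0)
threads = [threading.Thread(target=worker, args=(counter, 1000)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter.get())
```

No caller holds a lock across its read and its write; a conflicting update simply makes `check_and_set` fail and the caller retries, which is the optimistic approach mentioned earlier in these notes.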

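3. Open questions:

- If I build a secondary index table by hand, how do I keep it in step with the data in real time? If I create several tables, for example one keyed by USERID, how do I handle joining them later? Do I really have to use MapReduce for that?

- How does HBase's sparseness actually show up?

- How do the compression algorithms work?

- What exactly happens during a compaction?

- I did not follow the LSM section (the explanation on p. 25); further reading needed.

- What is the ZooKeeper quorum actually for, and why am I told to configure it on the slave nodes?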

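- I do not understand this sentence: "In addition, it provides push-down predicates, that is, filters, reducing data transferred over the network." The end of Chapter 1 says that HBase provides push-down predicates. Reading "push down" literally as "cause to come or go down", I took it to mean a predicate that reduces the data. I am not sure whether it simply means configuring a query to fetch only part of the data, with data outside the configured time-to-live being deleted or filtered out.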

- Denormalization, Duplication, and Intelligent Keys (DDI)

- Read the Bigtable paper

- Append? How does it show up in physical storage?

- How does one work with the memstore?
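On the push-down predicate question above, one common reading of the term is that the filter is "pushed down" to the server that holds the data, so only matching rows cross the network; it is not about deleting expired data. The sketch below contrasts the two approaches in plain Python. `SERVER_ROWS`, `scan`, and `is_error` are invented for illustration and do not correspond to HBase calls.

```python
# Made-up rows held by a "server"; every tenth row has status "error".
SERVER_ROWS = [
    (f"row{n:03d}", {"status": "ok" if n % 10 else "error"})
    for n in range(100)
]

def scan(predicate=None):
    """Hypothetical server-side scan; returns the rows sent over the wire."""
    if predicate is None:
        return list(SERVER_ROWS)
    # Push-down: the predicate runs where the data lives.
    return [row for row in SERVER_ROWS if predicate(row)]

def is_error(row):
    return row[1]["status"] == "error"

# Client-side filtering: everything is transferred, then filtered.
transferred_all = scan()
client_filtered = [row for row in transferred_all if is_error(row)]

# Pushed-down filtering: only matching rows are transferred.
transferred_filtered = scan(is_error)

print(len(transferred_all), len(transferred_filtered))
```

Both approaches produce the same result set; the difference is how many rows have to travel to the client to get it.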