ELK Cluster Setup (Part 1)

Environment: Ubuntu 18.04, 4 GB RAM, 2 CPU cores, 200 GB + 500 GB disks

Preparation: disable the firewall and SELinux (selinux=disabled)

I. Install elasticsearch-7.12.1-amd64.deb (the package bundles a JDK, so no separate JDK installation is needed)

| IP | Hostname | Packages / software |
|---|---|---|
| 10.0.0.151 | songyk-cluster1 | elasticsearch-7.12.1-amd64.deb, kibana-7.12.1-amd64.deb |
| 10.0.0.152 | songyk-cluster2 | |
| 10.0.0.153 | songyk-cluster3 | |
| 10.0.0.154 | logstash1 | logstash-7.12.1-amd64.deb |
| 10.0.0.155 | redis | cerebro-0.9.4.zip |
| 10.0.0.156 | logstash2 | |
| 10.0.0.157 | web-1 | logstash-7.12.1-amd64.deb, tomcat, openjdk-11-jdk, nginx |
| 10.0.0.158 | web-2 | logstash-7.12.1-amd64.deb, tomcat, openjdk-11-jdk, nginx |
| 10.0.0.118 | haproxy | haproxy |

Download: https://www.elastic.co/cn/downloads/past-releases/elasticsearch-7-12-1

```
root@ubuntu-18:/usr/local/src# dpkg -i elasticsearch-7.12.1-amd64.deb
Selecting previously unselected package elasticsearch.
(Reading database ... 107929 files and directories currently installed.)
Preparing to unpack elasticsearch-7.12.1-amd64.deb ...
Creating elasticsearch group... OK
Creating elasticsearch user... OK
Unpacking elasticsearch (7.12.1) ...
Setting up elasticsearch (7.12.1) ...
Created elasticsearch keystore in /etc/elasticsearch/elasticsearch.keystore
Processing triggers for systemd (237-3ubuntu10.47) ...
Processing triggers for ureadahead (0.100.0-21) ...
```

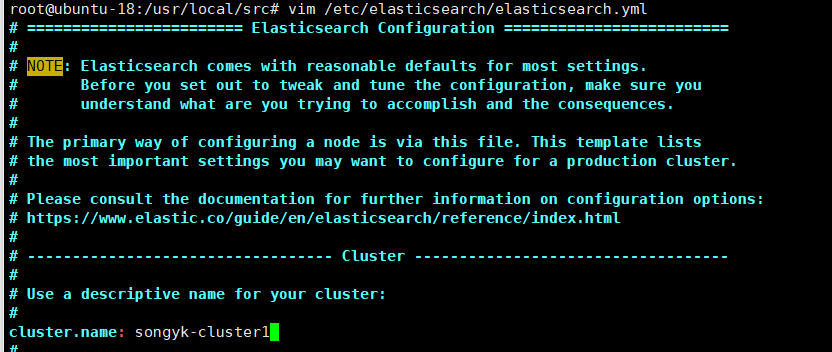

(1) Configure elasticsearch.yml

```
root@ubuntu-18:/usr/local/src# vim /etc/elasticsearch/elasticsearch.yml
```

cluster.name must be the same on every node in the cluster.

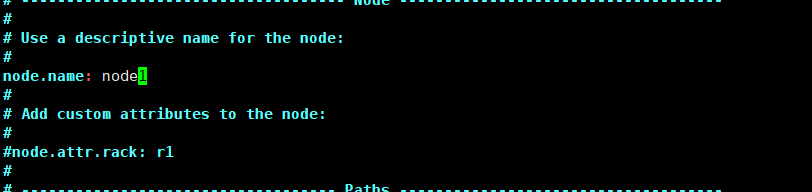

node.name must be different on every node in the cluster.

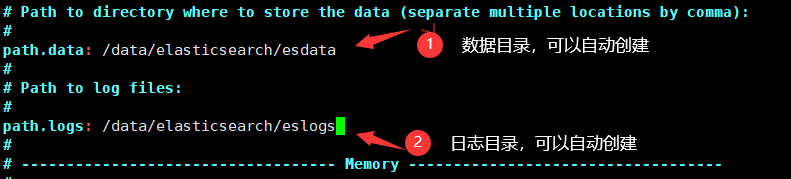

Move the data and log directories: the defaults are on the system disk, which is too small.

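The effective settings on node3 (10.0.0.153) are listed below; on the other two nodes only node.name and network.host differ. The grep pattern uses `[a-zA-Z]`, because the range `[a-Z]` is rejected as invalid in the C locale.

```
root@ubuntu-18:/usr/local/src# grep "^[a-zA-Z]" /etc/elasticsearch/elasticsearch.yml
cluster.name: songyk-cluster1
node.name: node3
path.data: /data/elasticsearch/esdata
path.logs: /data/elasticsearch/eslogs
network.host: 10.0.0.153
http.port: 9200
discovery.seed_hosts: ["10.0.0.151", "10.0.0.152", "10.0.0.153"]
cluster.initial_master_nodes: ["10.0.0.151", "10.0.0.152", "10.0.0.153"]
action.destructive_requires_name: true
```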

```
root@ubuntu-18:/usr/local/src# chown -R elasticsearch:elasticsearch /data/
root@ubuntu-18:/usr/local/src# systemctl restart elasticsearch
```
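Because the three elasticsearch.yml files differ only in node.name and network.host, they can be generated from a single template. The following is a minimal Python sketch; the names node1 and node2 for 10.0.0.151 and 10.0.0.152 are hypothetical, since only node3 appears in the configuration above.

```python
"""Render a per-node elasticsearch.yml for the three-node cluster.

Assumption: node1/node2 are hypothetical names for 10.0.0.151/10.0.0.152;
only node3 (10.0.0.153) appears in the original configuration.
"""

# Settings shared by every node (copied from the node3 example).
CLUSTER_NAME = "songyk-cluster1"
SEED_HOSTS = ["10.0.0.151", "10.0.0.152", "10.0.0.153"]

# Hypothetical node-name -> IP mapping; node3 matches the original.
NODES = {"node1": "10.0.0.151", "node2": "10.0.0.152", "node3": "10.0.0.153"}


def yaml_list(items):
    """Format a Python list as an inline YAML list of quoted strings."""
    return "[" + ", ".join(f'"{item}"' for item in items) + "]"


def render_es_yml(node_name, host):
    """Return elasticsearch.yml text; only node.name and network.host vary per node."""
    lines = [
        f"cluster.name: {CLUSTER_NAME}",
        f"node.name: {node_name}",
        "path.data: /data/elasticsearch/esdata",
        "path.logs: /data/elasticsearch/eslogs",
        f"network.host: {host}",
        "http.port: 9200",
        f"discovery.seed_hosts: {yaml_list(SEED_HOSTS)}",
        f"cluster.initial_master_nodes: {yaml_list(SEED_HOSTS)}",
        "action.destructive_requires_name: true",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print one config per node; copy each to /etc/elasticsearch/ on that host.
    for name, ip in NODES.items():
        print(f"# ---- {name} ({ip}) ----")
        print(render_es_yml(name, ip))
```

Keeping the shared settings in one place helps avoid the most common mistake in this step: a cluster.name that differs on one node, which then never joins the cluster.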

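#Adjust the memory limits (apply on every node)

Add LimitMEMLOCK to the [Service] section of the unit file, keeping the comment on its own line. systemd does not support trailing comments, so `LimitMEMLOCK=infinity #无限制` would be read as part of the value. Then set the JVM heap size in jvm.options.

```
root@songyk-cluster3:~# vim /usr/lib/systemd/system/elasticsearch.service
# no limit on locked memory
LimitMEMLOCK=infinity
root@songyk-cluster3:~# vim /etc/elasticsearch/jvm.options
-Xms1g
-Xmx1g
root@songyk-cluster1:~# systemctl daemon-reload
root@songyk-cluster1:~# systemctl restart elasticsearch.service
```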
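Elastic's guidance for heap sizing is to set -Xms and -Xmx to the same value and to keep the heap at no more than half of physical RAM, leaving the rest for the filesystem cache. The 1g heap on these 4 GB machines satisfies both rules. The following is a small sketch that checks jvm.options lines against them. `parse_heap` and `check_heap` are hypothetical helpers, and only `g` and `m` suffixes are handled.

```python
import re

# Matches heap flags such as "-Xms1g" or "-Xmx512m" (g/m suffixes only).
HEAP_RE = re.compile(r"^-Xm([sx])(\d+)([gGmM])$")


def parse_heap(lines):
    """Return {'s': MiB, 'x': MiB} parsed from jvm.options lines."""
    sizes = {}
    for raw in lines:
        m = HEAP_RE.match(raw.strip())
        if not m:
            continue  # comments and other JVM flags are ignored
        kind, number, unit = m.groups()
        sizes[kind] = int(number) * (1024 if unit.lower() == "g" else 1)
    return sizes


def check_heap(lines, ram_gib):
    """Return a list of warnings; an empty list means the heap settings look sane."""
    sizes = parse_heap(lines)
    if "s" not in sizes or "x" not in sizes:
        return ["-Xms and -Xmx should both be set"]
    warnings = []
    if sizes["s"] != sizes["x"]:
        warnings.append("-Xms and -Xmx differ; set them to the same value")
    if sizes["x"] > ram_gib * 1024 // 2:
        warnings.append("heap exceeds 50% of physical RAM")
    return warnings


if __name__ == "__main__":
    # Values from this guide: 1 GiB heap on a 4 GiB machine.
    print(check_heap(["-Xms1g", "-Xmx1g"], ram_gib=4) or "heap settings OK")
```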

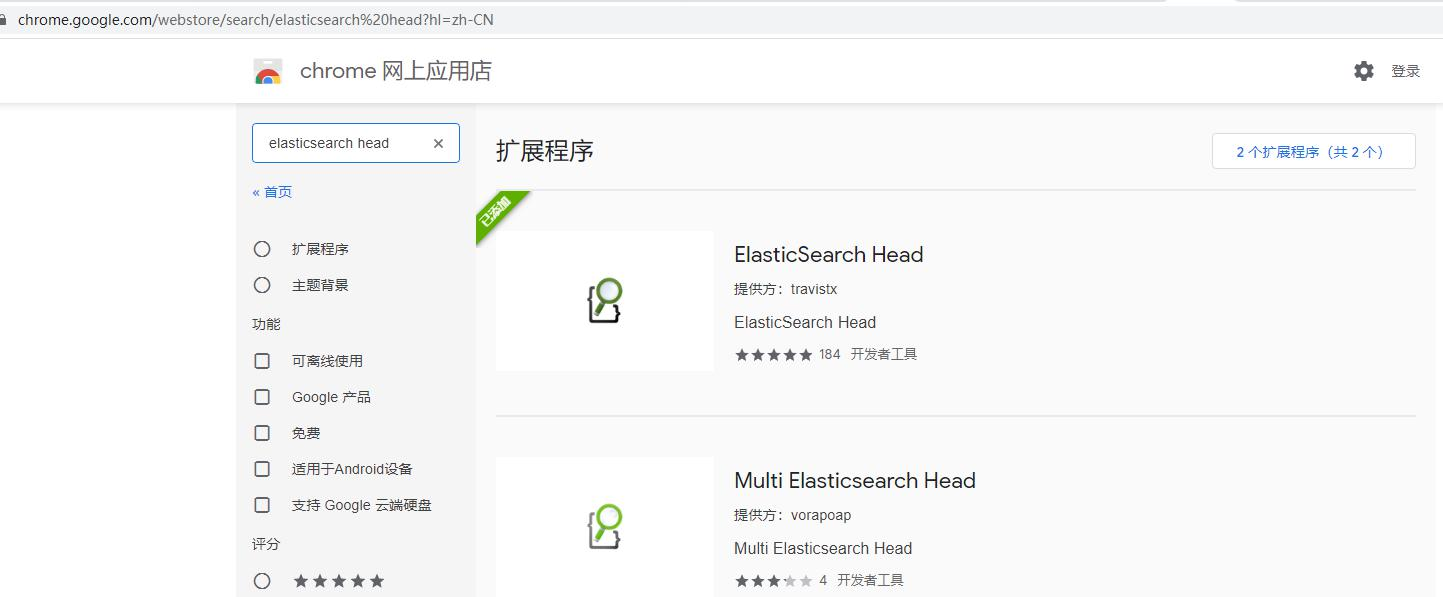

#Install the elasticsearch-head extension from the Chrome Web Store (reaching the store may require a workaround)

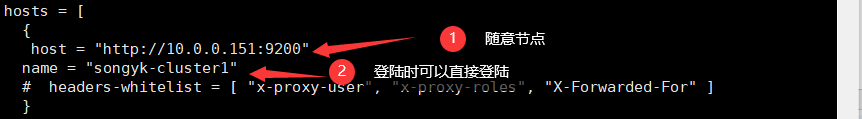

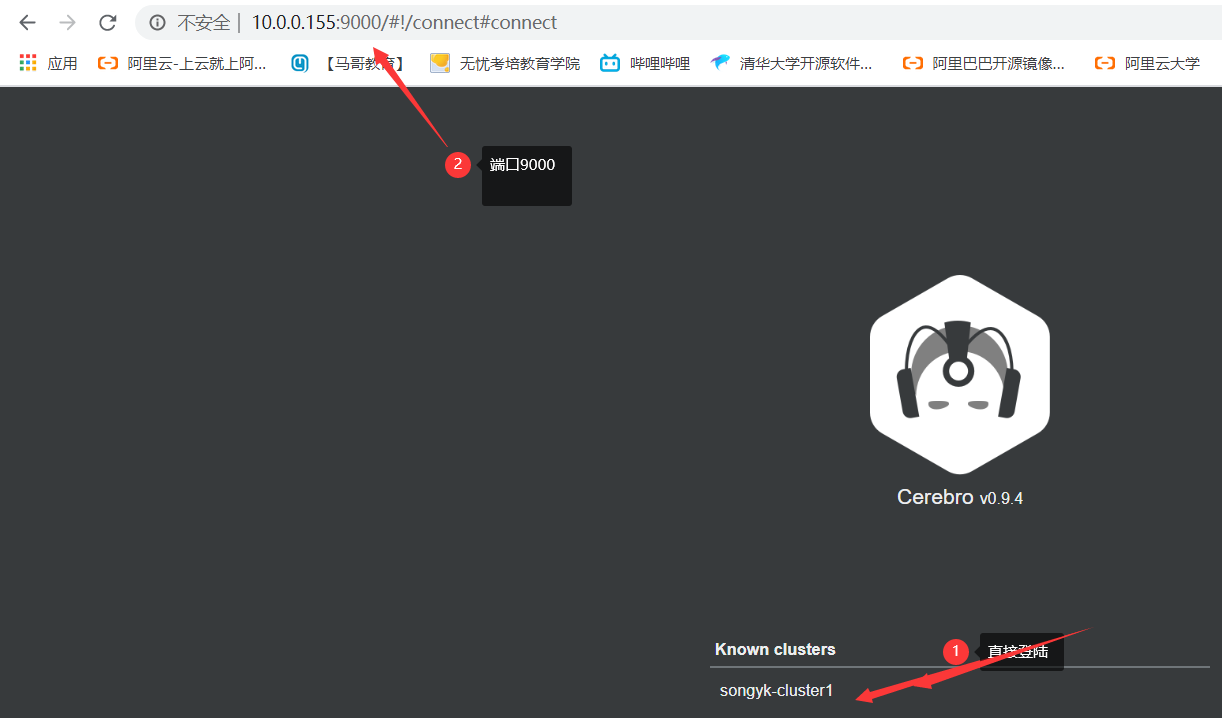

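#Install cerebro, an open-source web tool for managing Elasticsearch clusters. Versions 0.9.3 and earlier require Java 8 or later; starting with 0.9.4, Java 11 or later is required.

For details, see the GitHub repository: https://github.com/lmenezes/cerebro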

```
root@redis:~# apt install openjdk-11-jdk
root@redis:~# mkdir /apps
root@redis:~# cd /apps
root@redis:/apps# unzip cerebro-0.9.4.zip
root@redis:/apps# cd cerebro-0.9.4
root@redis:/apps/cerebro-0.9.4# vim /apps/cerebro-0.9.4/conf/application.conf
```

#Start cerebro (it listens on port 9000 by default)

```
root@redis:/apps/cerebro-0.9.4# ./bin/cerebro
```

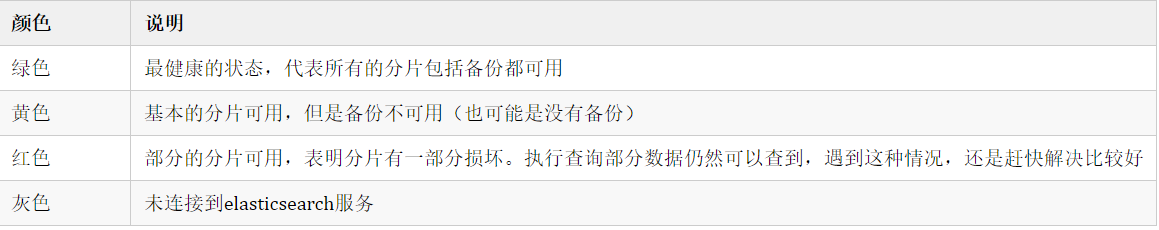

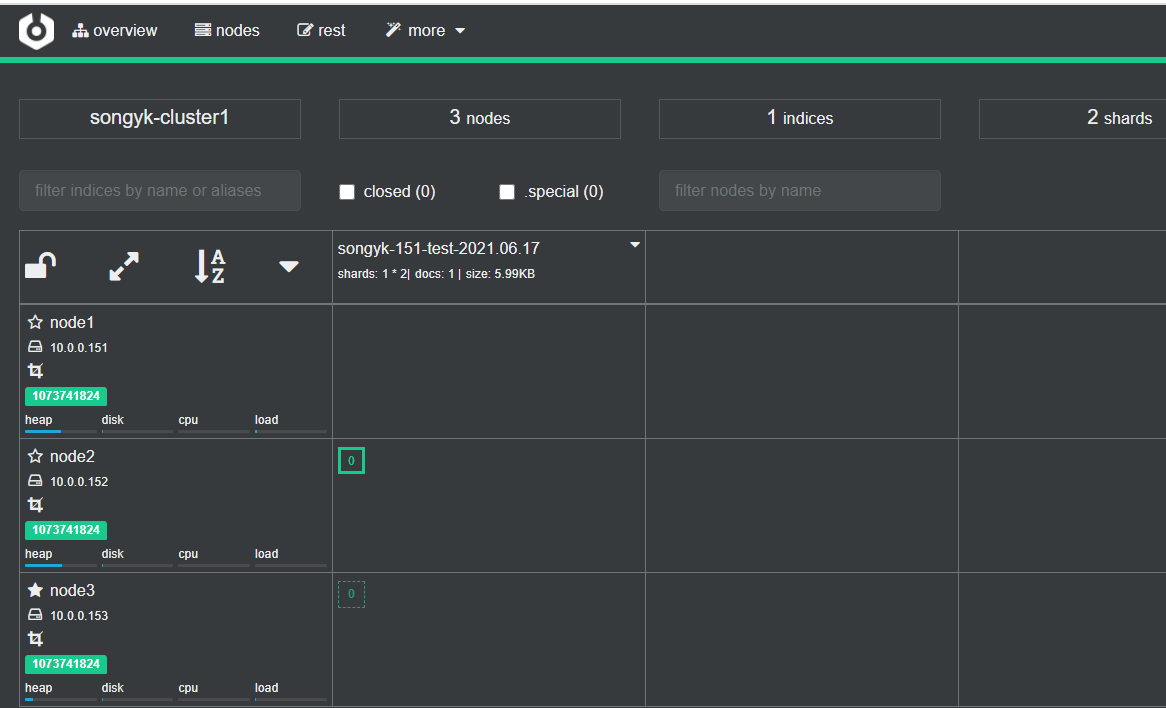

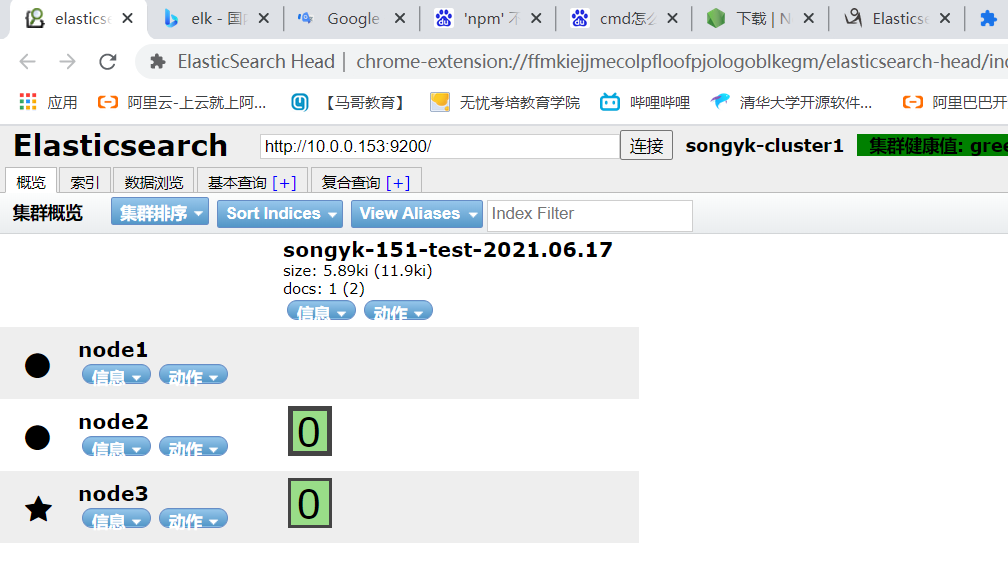

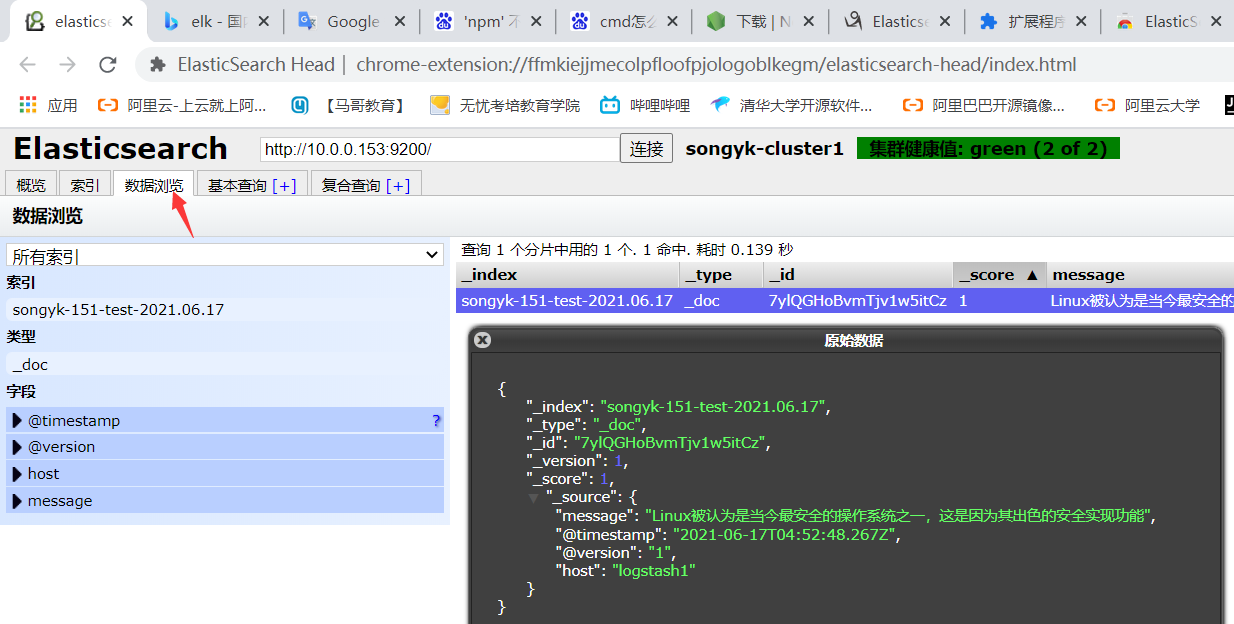

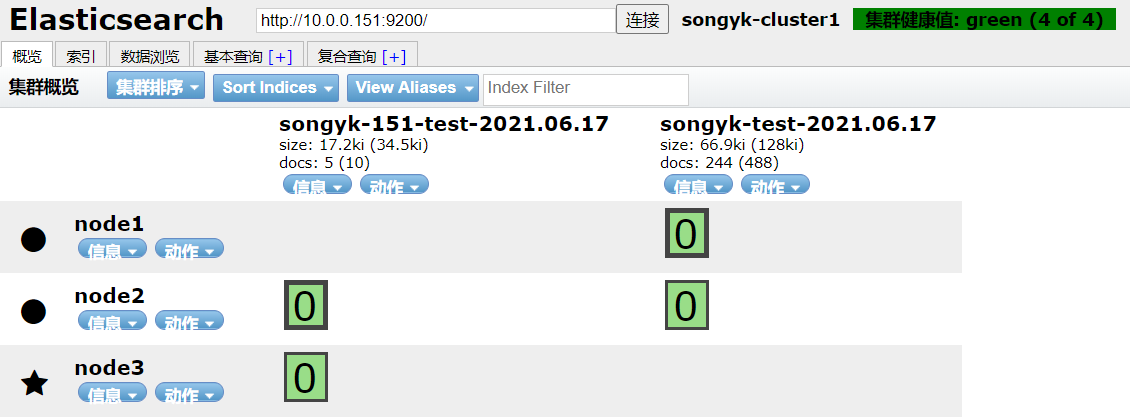

(2) Check the cluster status

```
root@cerebro-154:~# curl -s -XGET 'http://10.0.0.151:9200/_cluster/health?pretty=true'
{
  "cluster_name" : "songyk-cluster1",
  "status" : "green",
  "timed_out" : false,
  "number_of_nodes" : 3,
  "number_of_data_nodes" : 3,
  "active_primary_shards" : 0,
  "active_shards" : 0,
  "relocating_shards" : 0,
  "initializing_shards" : 0,
  "unassigned_shards" : 0,
  "delayed_unassigned_shards" : 0,
  "number_of_pending_tasks" : 0,
  "number_of_in_flight_fetch" : 0,
  "task_max_waiting_in_queue_millis" : 0,
  "active_shards_percent_as_number" : 100.0
}
```

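- green: all primary and replica shards are allocated. The cluster is 100% available.
- yellow: all primary shards are allocated, but at least one replica is missing. No data is lost, so search results are still complete, but high availability is reduced to some degree. If more shards go missing, you will lose data, so treat yellow as a warning that needs prompt investigation.
- red: at least one primary shard (and all of its replicas) is missing. Data is missing: searches return only partial results, and write requests routed to that shard return an exception.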
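The same check can be scripted, for example for a cron job or a monitoring probe. The following is a minimal Python sketch using only the standard library. It calls the `_cluster/health` endpoint shown above and maps the status to the meanings in the list. The expected node count of 3 and the `summarize` helper are assumptions made for this example.

```python
import json
import urllib.request

# Meaning of each status, summarized from the list above.
STATUS_MEANING = {
    "green": "all primary and replica shards are allocated",
    "yellow": "all primaries allocated, at least one replica missing",
    "red": "at least one primary shard (and its replicas) is missing",
}


def fetch_health(base_url="http://10.0.0.151:9200", timeout=5):
    """GET /_cluster/health and return the decoded JSON document."""
    with urllib.request.urlopen(f"{base_url}/_cluster/health", timeout=timeout) as resp:
        return json.load(resp)


def summarize(health, expected_nodes=3):
    """Return (ok, message) for a _cluster/health response.

    ok is True only if the status is green and all expected nodes have joined.
    """
    status = health["status"]
    nodes = health["number_of_nodes"]
    meaning = STATUS_MEANING.get(status, "unknown status")
    msg = f"{health['cluster_name']}: {status} ({meaning}), {nodes} node(s)"
    if health.get("unassigned_shards", 0):
        msg += f", {health['unassigned_shards']} unassigned shard(s)"
    ok = status == "green" and nodes >= expected_nodes
    return ok, msg
```

To use it against the live cluster, run `ok, message = summarize(fetch_health())`, print `message`, and exit non-zero when `ok` is false.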

II. Deploy Logstash

```
root@logstash1:~# apt install openjdk-11-jdk
root@logstash1:~# dpkg -i logstash-7.12.1-amd64.deb
Selecting previously unselected package logstash.
(Reading database ... 110593 files and directories currently installed.)
Preparing to unpack logstash-7.12.1-amd64.deb ...
Unpacking logstash (1:7.12.1-1) ...
Setting up logstash (1:7.12.1-1) ...
Using bundled JDK: /usr/share/logstash/jdk
Using provided startup.options file: /etc/logstash/startup.options
OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.
/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/pleaserun-0.0.32/lib/pleaserun/platform/base.rb:112: warning: constant ::Fixnum is deprecated
Successfully created system startup script for Logstash
```

The output line "Using bundled JDK" shows that Logstash runs on its own bundled JDK, so installing openjdk-11-jdk is optional here.

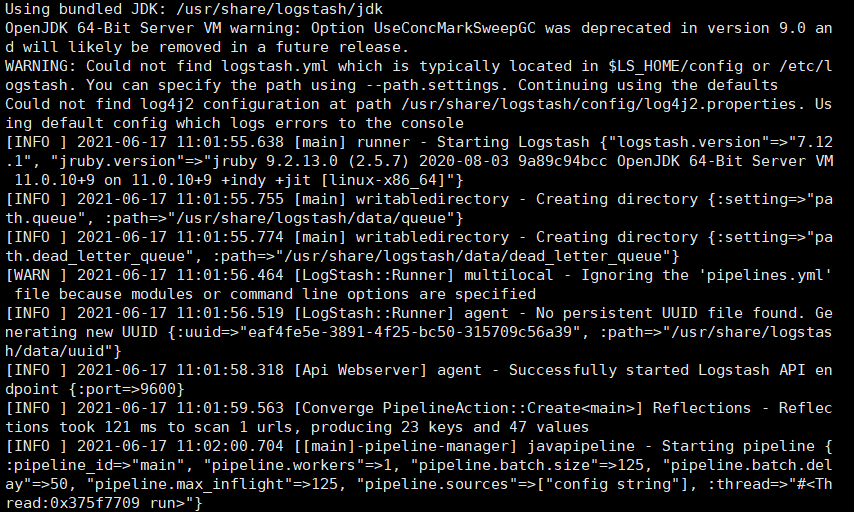

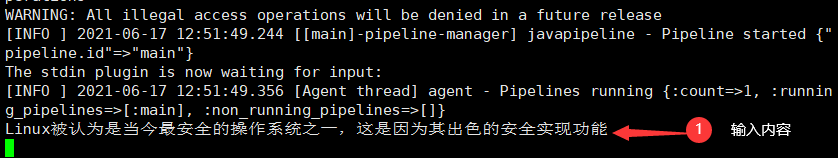

#Test Logstash (the first startup is slow, so give it a moment)

```
root@logstash1:~# /usr/share/logstash/bin/logstash -e 'input { stdin{} } output { stdout{ codec => rubydebug }}'
```

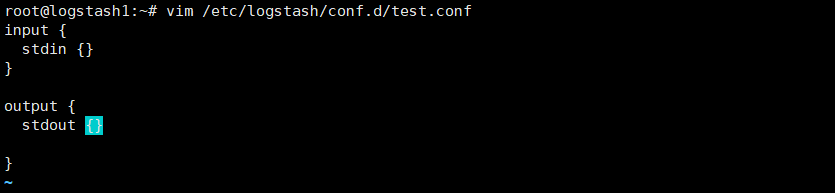

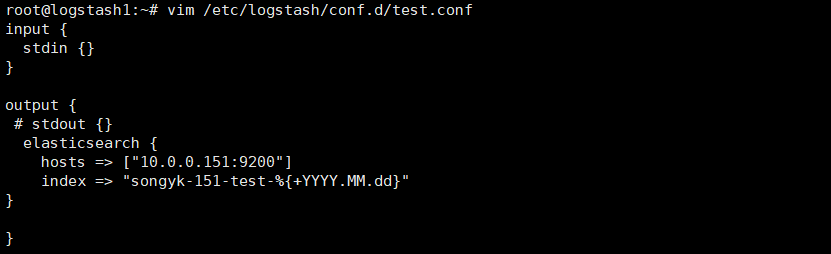

#Write a configuration file

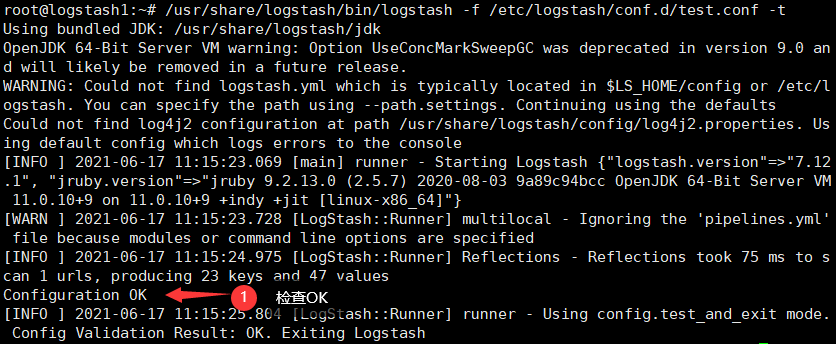

```
root@logstash1:~# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/test.conf -t   # -f: config file to load; -t: test the config and exit
root@logstash1:~# systemctl restart logstash.service
```

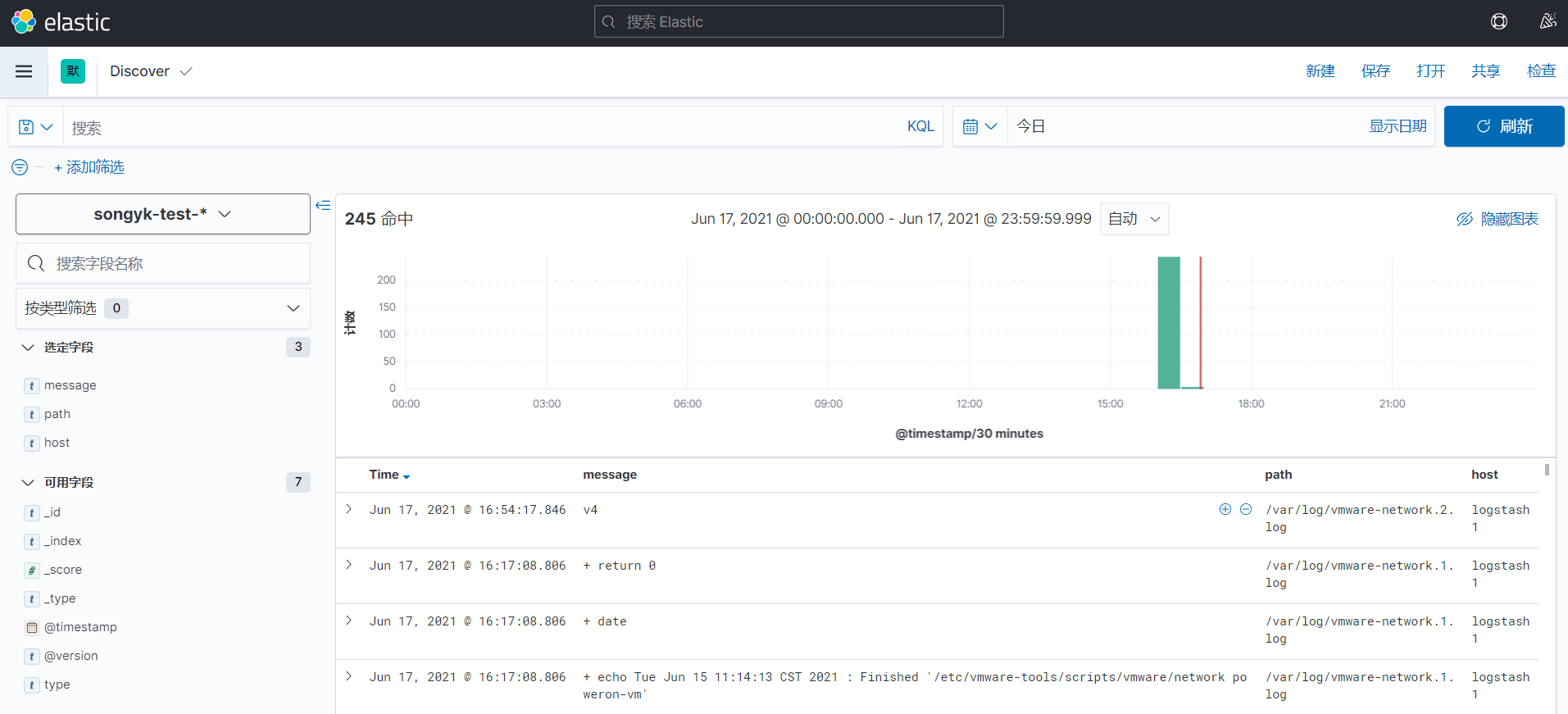

III. Install Kibana (its version must match the Elasticsearch version)

```
root@songyk-cluster1:~# dpkg -i kibana-7.12.1-amd64.deb
Selecting previously unselected package kibana.
(Reading database ... 109039 files and directories currently installed.)
Preparing to unpack kibana-7.12.1-amd64.deb ...
Unpacking kibana (7.12.1) ...
Setting up kibana (7.12.1) ...
Creating kibana group... OK
Creating kibana user... OK
Created Kibana keystore in /etc/kibana/kibana.keystore
Processing triggers for systemd (237-3ubuntu10.47) ...
Processing triggers for ureadahead (0.100.0-21) ...
```

#Edit the configuration file

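i18n.locale: "zh-CN" switches the Kibana UI to Chinese. As above, use `[a-zA-Z]` rather than `[a-Z]` in the grep pattern.

```
root@songyk-cluster1:~# grep "^[a-zA-Z]" /etc/kibana/kibana.yml
server.port: 5601
server.host: "0.0.0.0"
elasticsearch.hosts: ["http://10.0.0.151:9200"]
i18n.locale: "zh-CN"
```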
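After Kibana restarts, its status API can confirm that it is up and connected to Elasticsearch. The following is a minimal sketch, assuming the Kibana 7.x response layout, where the overall state is found at `status.overall.state`. Check this field path against your own version. The helper names are hypothetical.

```python
import json
import urllib.request


def fetch_kibana_status(base_url="http://10.0.0.151:5601", timeout=5):
    """GET /api/status from Kibana and return the decoded JSON body."""
    with urllib.request.urlopen(f"{base_url}/api/status", timeout=timeout) as resp:
        return json.load(resp)


def overall_state(status_doc):
    """Return the overall state (e.g. 'green') from a /api/status response.

    Assumption: the 7.x field path status.overall.state; 'unknown' if absent.
    """
    return status_doc.get("status", {}).get("overall", {}).get("state", "unknown")
```

For example, `print(overall_state(fetch_kibana_status()))` should print `green` once Kibana has connected to the cluster.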

#Multi-file mode: collect several log files into separate indices

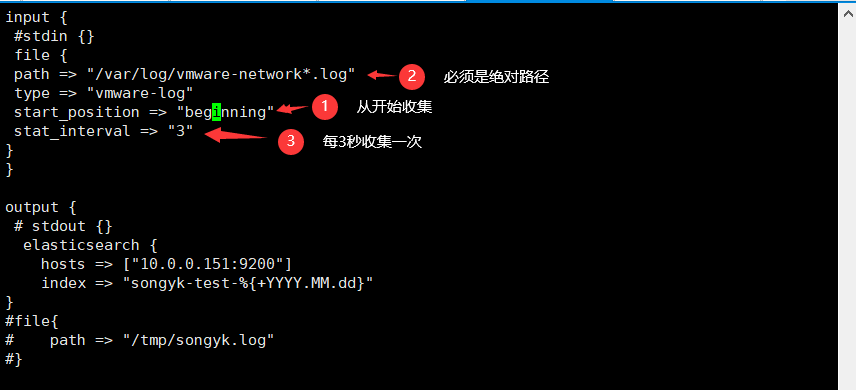

```
root@logstash1:~# vim /etc/logstash/conf.d/test.conf
```

```
input {
  #stdin {}
  file {
    path => "/var/log/vmware-network.log"
    type => "vmware-log"
    start_position => "beginning"
    stat_interval => "3"
  }
  file {
    path => "/var/log/syslog"
    type => "systemlog"
    start_position => "beginning"
    stat_interval => "3"
  }
}

output {
  # stdout {}
  if [type] == "vmware-log" {
    elasticsearch {
      hosts => ["10.0.0.151:9200"]
      index => "songyk-vmwarelog-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "systemlog" {
    elasticsearch {
      hosts => ["10.0.0.152:9200"]
      index => "songyk-systemlog-%{+YYYY.MM.dd}"
    }
  }
}
```

```
root@logstash1:~# systemctl restart logstash.service
```
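To make the routing in test.conf concrete, here is a small Python model of its two `if [type]` branches. Given an event, it returns the target hosts and the daily index name. This is an illustration, not Logstash code. One point it captures is that `%{+YYYY.MM.dd}` is rendered from the event's @timestamp, which Logstash stores in UTC. As a result, on servers running in UTC+8 (an assumption here), events logged before 08:00 local time land in the previous day's index.

```python
from datetime import datetime, timedelta, timezone

# Mirrors the two `if [type] == ...` branches of test.conf: type -> (hosts, index prefix).
ROUTES = {
    "vmware-log": (["10.0.0.151:9200"], "songyk-vmwarelog"),
    "systemlog": (["10.0.0.152:9200"], "songyk-systemlog"),
}

# Example local zone (assumption: the servers run on UTC+8).
UTC_PLUS_8 = timezone(timedelta(hours=8))


def route(event):
    """Return (hosts, index) for an event, or None when no branch matches."""
    target = ROUTES.get(event.get("type"))
    if target is None:
        return None  # neither conditional matches, so no output receives the event
    hosts, prefix = target
    # %{+YYYY.MM.dd} is rendered from @timestamp, which Logstash keeps in UTC.
    ts_utc = event["@timestamp"].astimezone(timezone.utc)
    return hosts, f"{prefix}-{ts_utc:%Y.%m.%d}"


if __name__ == "__main__":
    now = datetime.now(UTC_PLUS_8)
    print(route({"type": "systemlog", "@timestamp": now}))
```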

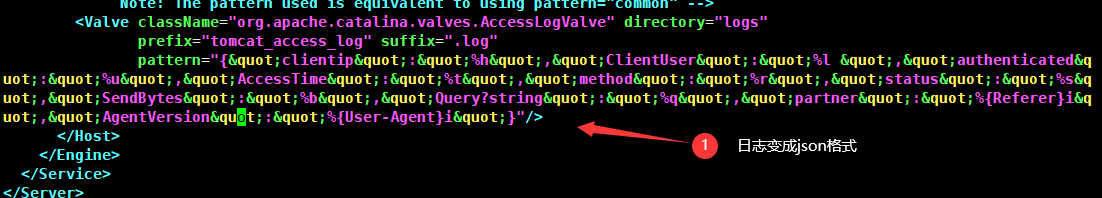

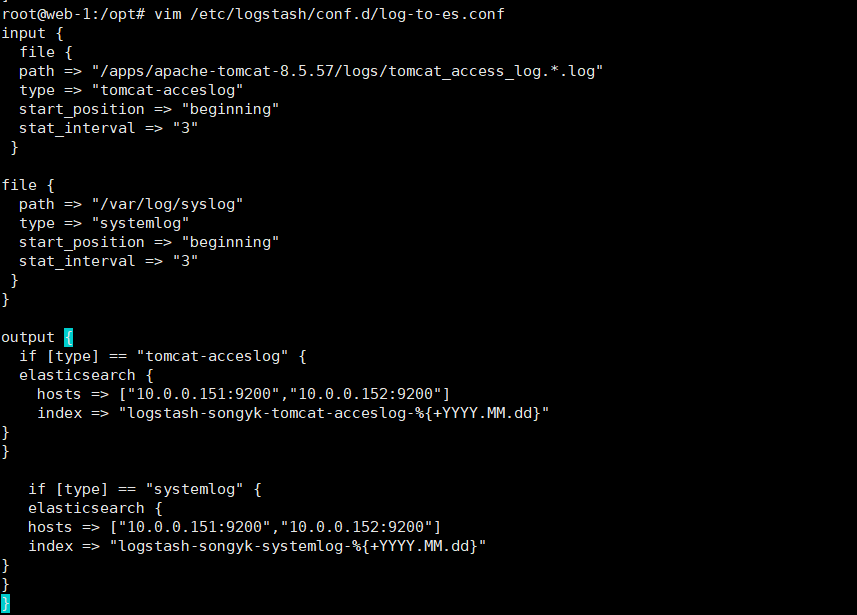

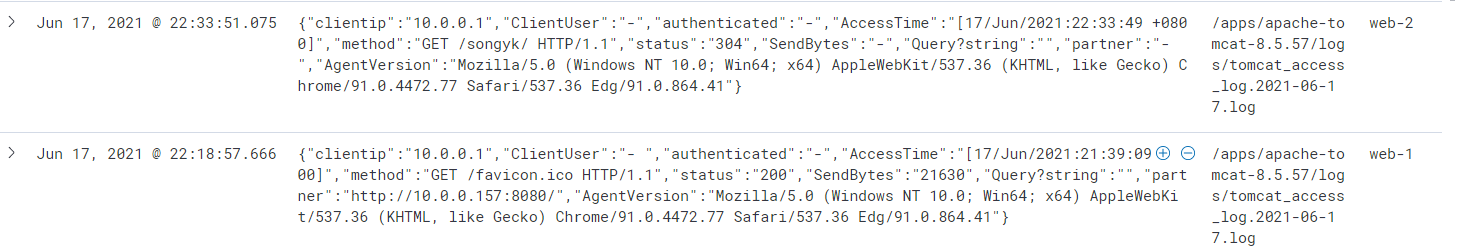

#Collect Tomcat and Java logs with Logstash (the steps are identical on web-1 and web-2)

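```
root@web-1:/apps# apt install openjdk-11-jdk
root@web-1:/apps# tar xvf apache-tomcat-8.5.57.tar.gz
root@web-1:/apps# cd apache-tomcat-8.5.57
root@web-1:/apps/apache-tomcat-8.5.57# vim conf/server.xml
```

In the `<Host>` section, replace the default AccessLogValve with the one below. Copy it exactly: inside the pattern attribute every double quote must be written as `&quot;`. A stray space or line break that splits an entity makes server.xml invalid, and Tomcat will then fail to start.

```xml
<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"
       prefix="tomcat_access_log" suffix=".log"
       pattern="{&quot;clientip&quot;:&quot;%h&quot;,&quot;ClientUser&quot;:&quot;%l&quot;,&quot;authenticated&quot;:&quot;%u&quot;,&quot;AccessTime&quot;:&quot;%t&quot;,&quot;method&quot;:&quot;%r&quot;,&quot;status&quot;:&quot;%s&quot;,&quot;SendBytes&quot;:&quot;%b&quot;,&quot;Query?string&quot;:&quot;%q&quot;,&quot;partner&quot;:&quot;%{Referer}i&quot;,&quot;AgentVersion&quot;:&quot;%{User-Agent}i&quot;}"/>
```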
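You can check why the `&quot;` entities matter by parsing the Valve element with Python's standard XML parser. Once decoded, the pattern is itself valid JSON whose values are Tomcat format codes (for example, %h is the remote host and %s the status). A raw double quote inside the attribute, by contrast, makes the XML malformed. This is a hypothetical pre-flight check to run before restarting Tomcat; it is not part of Tomcat.

```python
import json
import xml.etree.ElementTree as ET

# The Valve element from conf/server.xml, joined onto one line.
VALVE = (
    '<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs" '
    'prefix="tomcat_access_log" suffix=".log" '
    'pattern="{&quot;clientip&quot;:&quot;%h&quot;,&quot;ClientUser&quot;:&quot;%l&quot;,'
    '&quot;authenticated&quot;:&quot;%u&quot;,&quot;AccessTime&quot;:&quot;%t&quot;,'
    '&quot;method&quot;:&quot;%r&quot;,&quot;status&quot;:&quot;%s&quot;,'
    '&quot;SendBytes&quot;:&quot;%b&quot;,&quot;Query?string&quot;:&quot;%q&quot;,'
    '&quot;partner&quot;:&quot;%{Referer}i&quot;,'
    '&quot;AgentVersion&quot;:&quot;%{User-Agent}i&quot;}"/>'
)


def is_well_formed(xml_text):
    """Return True if xml_text parses as XML (Tomcat will not start on malformed server.xml)."""
    try:
        ET.fromstring(xml_text)
    except ET.ParseError:
        return False
    return True


def access_log_fields(valve_xml):
    """Decode the pattern attribute and parse it as JSON: field name -> Tomcat format code."""
    pattern = ET.fromstring(valve_xml).get("pattern")  # &quot; is decoded to " here
    return json.loads(pattern)


if __name__ == "__main__":
    for name, code in access_log_fields(VALVE).items():
        print(f"{name:14} {code}")
```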

#Install Logstash

```
root@web-1:/opt# dpkg -i logstash-7.12.1-amd64.deb
Selecting previously unselected package logstash.
(Reading database ... 110593 files and directories currently installed.)
Preparing to unpack logstash-7.12.1-amd64.deb ...
Unpacking logstash (1:7.12.1-1) ...
Setting up logstash (1:7.12.1-1) ...
Using bundled JDK: /usr/share/logstash/jdk
Using provided startup.options file: /etc/logstash/startup.options
OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.
/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/pleaserun-0.0.32/lib/pleaserun/platform/base.rb:112: warning: constant ::Fixnum is deprecated
Successfully created system startup script for Logstash
```

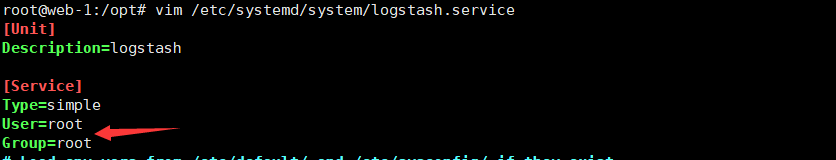

#Edit the configuration file

#Collect Java logs

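```
root@web-2:/etc/logstash/conf.d# vim javalog-to-es.conf
```

```
input {
  stdin {
    codec => multiline {
      # a line starting with "[" marks the start of a new event
      pattern => "^\["
      # true: apply "what" to lines that do NOT match the pattern
      negate => true
      # merge those lines into the previous event; use "next" to merge them into the following one
      what => "previous"
    }
  }
}

output {
  stdout {
  }
}
```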
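The multiline settings above can be modelled in a few lines of Python. This is an emulation for illustration, not the codec's implementation. With `negate => true` and `what => "previous"`, a line that starts with `[` opens a new event, and every other line, such as a Java stack-trace line, is appended to the event before it. One difference from the real codec is that the codec holds the last event in its buffer until the next `[` line arrives, or until `auto_flush_interval` expires if that option is set. The sample log lines in the test are made up.

```python
import re


def multiline_previous(lines, pattern=r"^\[", negate=True):
    """Group lines the way the multiline codec does with what => "previous".

    negate=True: a line that does NOT match `pattern` joins the previous event.
    negate=False: a line that DOES match `pattern` joins the previous event.
    """
    regex = re.compile(pattern)
    events = []
    for line in lines:
        matched = bool(regex.search(line))
        joins_previous = (not matched) if negate else matched
        if joins_previous and events:
            events[-1] += "\n" + line  # continuation line: append to current event
        else:
            events.append(line)  # start a new event
    return events
```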

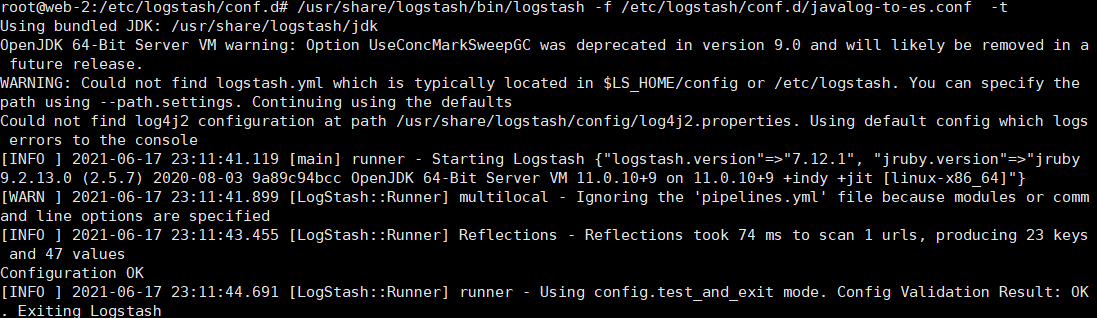

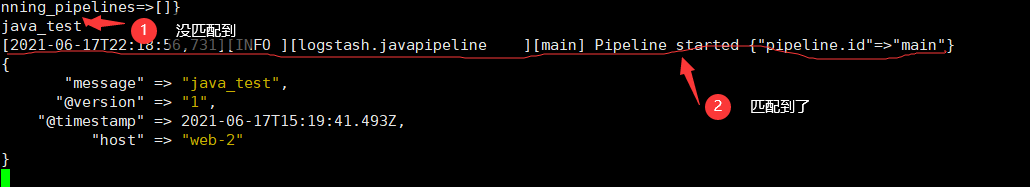

#Check that Logstash starts correctly

```
root@web-2:/etc/logstash/conf.d# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/javalog-to-es.conf
```

Once the test works, switch the input from stdin to the Logstash log file and send the events to Elasticsearch:

```
root@web-2:/etc/logstash/conf.d# vim javalog-to-es.conf
```

```
input {
  file {
    path => "/var/log/logstash/logstash-plain.log"
    type => "logstash-log"
    start_position => "beginning"
    stat_interval => "3"
    codec => multiline {
      pattern => "^\["
      negate => true
      what => "previous"
    }
  }
}

output {
  if [type] == "logstash-log" {
    elasticsearch {
      hosts => ["10.0.0.151:9200","10.0.0.152:9200"]
      index => "logstash-log-%{+YYYY.MM.dd}"
    }
  }
}
```

```
root@web-1:/opt# systemctl restart logstash.service
```