Creating an AWS EKS Cluster

Ways to create a cluster

- AWS Management Console

- AWS CLI (command line tool)

- eksctl

- AWS Cloud Development Kit (AWS CDK)

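Launch an EKS cluster with a single command

```shell
eksctl create cluster
```

With no further options, this command:

- generates a cluster name automatically

- creates two m5.large worker nodes

- selects the official Amazon EKS-optimized AMI automatically

- deploys into the us-west-2 region by default

- creates a dedicated VPC automatically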
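The defaults listed above can also be written out as explicit flags, which is the usual starting point when you want to change one of them. The Python sketch below only assembles and prints the command line, so nothing is created. The helper name `build_create_cluster_cmd` is hypothetical. It assumes the standard eksctl flags `--name`, `--region`, `--nodes` and `--node-type`.

```python
import shlex
from typing import List, Optional


def build_create_cluster_cmd(
    name: Optional[str] = None,
    region: str = "us-west-2",
    nodes: int = 2,
    node_type: str = "m5.large",
) -> List[str]:
    """Hypothetical helper: build an explicit `eksctl create cluster` argv.

    The default argument values mirror the defaults listed above.
    """
    cmd = ["eksctl", "create", "cluster"]
    if name is not None:
        # Without --name, eksctl generates a cluster name automatically.
        cmd += ["--name", name]
    cmd += ["--region", region, "--nodes", str(nodes), "--node-type", node_type]
    return cmd


if __name__ == "__main__":
    # Print the commands instead of running them.
    print(shlex.join(build_create_cluster_cmd()))
    print(shlex.join(build_create_cluster_cmd(name="demo", region="ap-northeast-1")))
```

The first printed line is `eksctl create cluster --region us-west-2 --nodes 2 --node-type m5.large`. To actually run a command, pass the list to `subprocess.run(cmd, check=True)` or paste the printed line into a shell.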

Creating a cluster from a config file

Create a `cluster.yaml` file:

```yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: basic-cluster
  region: ap-northeast-1

nodeGroups:
  - name: ng-1
    instanceType: m5.large
    desiredCapacity: 10   # number of worker nodes in this group
    volumeSize: 80        # root EBS volume size per node, in GiB
    ssh:
      allow: true # will use ~/.ssh/id_rsa.pub as the default ssh key
  - name: ng-2
    instanceType: m5.xlarge
    desiredCapacity: 2
    volumeSize: 100
    ssh:
      publicKeyPath: ~/.ssh/id_rsa.pub   # explicit path to the public key to import
```
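Before running the command, it is worth working out how much capacity this file requests. The sketch below hard-codes the two node groups from `cluster.yaml` and the published sizes of the two instance types (m5.large: 2 vCPU / 8 GiB, m5.xlarge: 4 vCPU / 16 GiB). It assumes `volumeSize` is GiB per node. It also mirrors a rule visible in the creation log that follows: when no minimum or maximum size is given, eksctl sets `--nodes-min` and `--nodes-max` to `desiredCapacity`. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Published m5 sizes: (vCPU, memory in GiB). Only the two types used here.
INSTANCE_SPECS: Dict[str, Tuple[int, int]] = {
    "m5.large": (2, 8),
    "m5.xlarge": (4, 16),
}


@dataclass
class NodeGroup:
    """Hypothetical model of one entry under nodeGroups."""
    name: str
    instance_type: str
    desired_capacity: int
    volume_size_gib: int
    min_size: Optional[int] = None
    max_size: Optional[int] = None

    def effective_sizes(self) -> Tuple[int, int]:
        # Mirrors the creation log: unset min/max fall back to desiredCapacity.
        lo = self.desired_capacity if self.min_size is None else self.min_size
        hi = self.desired_capacity if self.max_size is None else self.max_size
        return lo, hi


def totals(groups: List[NodeGroup]) -> Dict[str, int]:
    """Sum nodes, vCPU, memory and root-volume storage at desired capacity."""
    return {
        "nodes": sum(g.desired_capacity for g in groups),
        "vcpu": sum(g.desired_capacity * INSTANCE_SPECS[g.instance_type][0] for g in groups),
        "memory_gib": sum(g.desired_capacity * INSTANCE_SPECS[g.instance_type][1] for g in groups),
        "ebs_gib": sum(g.desired_capacity * g.volume_size_gib for g in groups),
    }


# Values copied from cluster.yaml above.
GROUPS = [
    NodeGroup("ng-1", "m5.large", desired_capacity=10, volume_size_gib=80),
    NodeGroup("ng-2", "m5.xlarge", desired_capacity=2, volume_size_gib=100),
]

if __name__ == "__main__":
    for g in GROUPS:
        print(g.name, "min/max:", g.effective_sizes())
    print(totals(GROUPS))
```

The file therefore asks for 12 nodes with 28 vCPU, 112 GiB of memory and 1000 GiB of root volumes in total (10 × 2 + 2 × 4 vCPU, 10 × 8 + 2 × 16 GiB, 10 × 80 + 2 × 100 GiB). Kubernetes reports somewhat less allocatable capacity per node, because part of each node is reserved for system components.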

Then create the cluster from that file:

```shell
eksctl create cluster -f cluster.yaml
```

Output:

```text
[ℹ] eksctl version 0.23.0
[ℹ] using region ap-northeast-1
[ℹ] setting availability zones to [ap-northeast-1a ap-northeast-1c ap-northeast-1d]
[ℹ] subnets for ap-northeast-1a - public:192.168.0.0/19 private:192.168.96.0/19
[ℹ] subnets for ap-northeast-1c - public:192.168.32.0/19 private:192.168.128.0/19
[ℹ] subnets for ap-northeast-1d - public:192.168.64.0/19 private:192.168.160.0/19
[ℹ] nodegroup "ng-1" will use "ami-0b6f41e05739de6f7" [AmazonLinux2/1.16]
[ℹ] using SSH public key "/Users/15b883/.ssh/id_rsa.pub" as "eksctl-basic-cluster-nodegroup-ng-1-d3:25:57:cb:e1:6d:d2:ef:65:ca:a2:29:ae:f6:c9:91"
[ℹ] nodegroup "ng-2" will use "ami-0b6f41e05739de6f7" [AmazonLinux2/1.16]
[ℹ] using SSH public key "/Users/15b883/.ssh/id_rsa.pub" as "eksctl-basic-cluster-nodegroup-ng-2-d3:25:57:cb:e1:6d:d2:ef:65:ca:a2:29:ae:f6:c9:91"
[ℹ] using Kubernetes version 1.16
[ℹ] creating EKS cluster "basic-cluster" in "ap-northeast-1" region with un-managed nodes
[ℹ] 2 nodegroups (ng-1, ng-2) were included (based on the include/exclude rules)
[ℹ] will create a CloudFormation stack for cluster itself and 2 nodegroup stack(s)
[ℹ] will create a CloudFormation stack for cluster itself and 0 managed nodegroup stack(s)
[ℹ] if you encounter any issues, check CloudFormation console or try 'eksctl utils describe-stacks --region=ap-northeast-1 --cluster=basic-cluster'
[ℹ] CloudWatch logging will not be enabled for cluster "basic-cluster" in "ap-northeast-1"
[ℹ] you can enable it with 'eksctl utils update-cluster-logging --region=ap-northeast-1 --cluster=basic-cluster'
[ℹ] Kubernetes API endpoint access will use default of {publicAccess=true, privateAccess=false} for cluster "basic-cluster" in "ap-northeast-1"
[ℹ] 2 sequential tasks: { create cluster control plane "basic-cluster", 2 sequential sub-tasks: { no tasks, 2 parallel sub-tasks: { create nodegroup "ng-1", create nodegroup "ng-2" } } }
[ℹ] building cluster stack "eksctl-basic-cluster-cluster"
[ℹ] deploying stack "eksctl-basic-cluster-cluster"
[ℹ] building nodegroup stack "eksctl-basic-cluster-nodegroup-ng-1"
[ℹ] building nodegroup stack "eksctl-basic-cluster-nodegroup-ng-2"
[ℹ] --nodes-min=10 was set automatically for nodegroup ng-1
[ℹ] --nodes-max=10 was set automatically for nodegroup ng-1
[ℹ] --nodes-min=2 was set automatically for nodegroup ng-2
[ℹ] --nodes-max=2 was set automatically for nodegroup ng-2
[ℹ] deploying stack "eksctl-basic-cluster-nodegroup-ng-1"
[ℹ] deploying stack "eksctl-basic-cluster-nodegroup-ng-2"
[ℹ] waiting for the control plane availability...
[✔] saved kubeconfig as "/Users/15b883/.kube/config"
[ℹ] no tasks
[✔] all EKS cluster resources for "basic-cluster" have been created
[ℹ] adding identity "arn:aws:iam::543300565893:role/eksctl-basic-cluster-nodegroup-ng-NodeInstanceRole-1O2LKZ01OEC7P" to auth ConfigMap
[ℹ] nodegroup "ng-1" has 0 node(s)
[ℹ] waiting for at least 10 node(s) to become ready in "ng-1"
[ℹ] nodegroup "ng-1" has 10 node(s)
[ℹ] node "ip-192-168-11-187.ap-northeast-1.compute.internal" is ready
[ℹ] node "ip-192-168-13-162.ap-northeast-1.compute.internal" is ready
[ℹ] node "ip-192-168-28-36.ap-northeast-1.compute.internal" is ready
[ℹ] node "ip-192-168-4-22.ap-northeast-1.compute.internal" is ready
[ℹ] node "ip-192-168-47-220.ap-northeast-1.compute.internal" is ready
[ℹ] node "ip-192-168-48-101.ap-northeast-1.compute.internal" is ready
[ℹ] node "ip-192-168-52-0.ap-northeast-1.compute.internal" is ready
[ℹ] node "ip-192-168-81-199.ap-northeast-1.compute.internal" is ready
[ℹ] node "ip-192-168-91-225.ap-northeast-1.compute.internal" is ready
[ℹ] node "ip-192-168-91-6.ap-northeast-1.compute.internal" is ready
[ℹ] adding identity "arn:aws:iam::543300565893:role/eksctl-basic-cluster-nodegroup-ng-NodeInstanceRole-OPSPI8KGIEP" to auth ConfigMap
[ℹ] nodegroup "ng-2" has 0 node(s)
[ℹ] waiting for at least 2 node(s) to become ready in "ng-2"
[ℹ] nodegroup "ng-2" has 2 node(s)
[ℹ] node "ip-192-168-5-7.ap-northeast-1.compute.internal" is ready
[ℹ] node "ip-192-168-76-218.ap-northeast-1.compute.internal" is ready
[ℹ] kubectl command should work with "/Users/15b883/.kube/config", try 'kubectl get nodes'
[✔] EKS cluster "basic-cluster" in "ap-northeast-1" region is ready
```

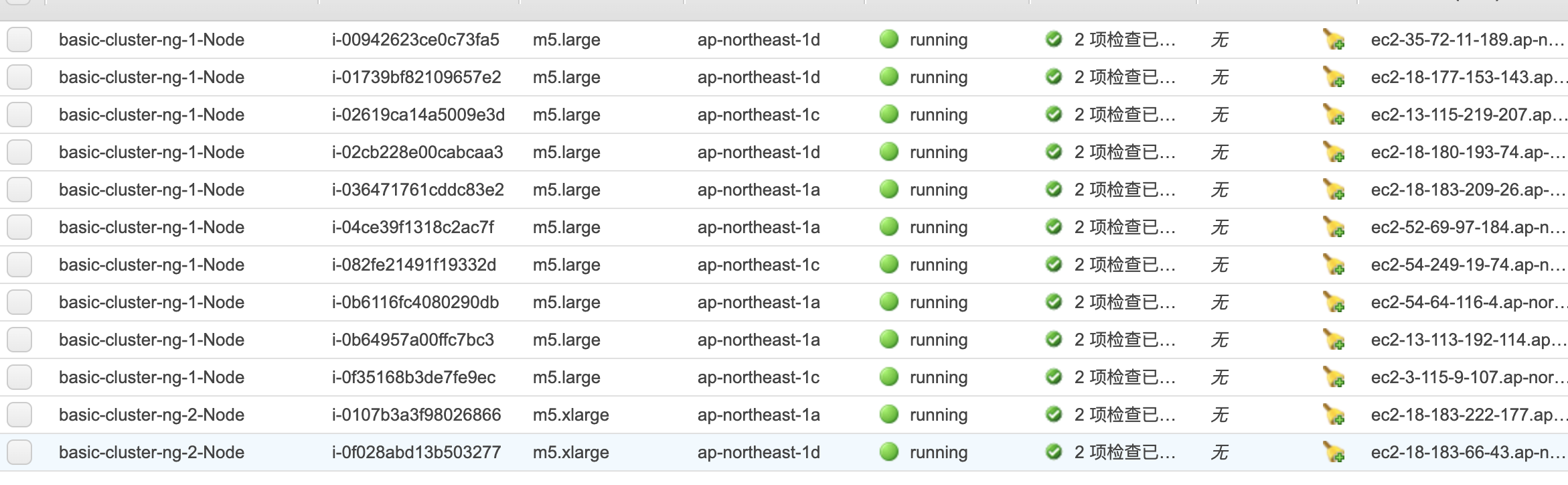
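The subnet lines near the top of the log follow a simple pattern. The VPC uses 192.168.0.0/16, which is eksctl's default CIDR, and each of the three availability zones gets one public /19 and one private /19. The Python sketch below reproduces the printed layout with the standard `ipaddress` module. The carving rule (first three /19 blocks public, next three private) is inferred from this log rather than taken from eksctl's source, and the function name is hypothetical.

```python
import ipaddress
from typing import Dict, List, Tuple


def default_subnet_layout(
    zones: List[str], vpc_cidr: str = "192.168.0.0/16", prefix: int = 19
) -> Dict[str, Tuple[str, str]]:
    """Hypothetical helper: map each AZ to its (public, private) subnet CIDRs.

    Splits the VPC CIDR into equal blocks, assigns the first len(zones)
    blocks as public subnets and the next len(zones) as private subnets.
    """
    blocks = list(ipaddress.ip_network(vpc_cidr).subnets(new_prefix=prefix))
    n = len(zones)
    if 2 * n > len(blocks):
        raise ValueError(f"not enough /{prefix} blocks for {n} zones")
    return {zone: (str(blocks[i]), str(blocks[n + i])) for i, zone in enumerate(zones)}


if __name__ == "__main__":
    azs = ["ap-northeast-1a", "ap-northeast-1c", "ap-northeast-1d"]
    for zone, (public, private) in default_subnet_layout(azs).items():
        print(f"subnets for {zone} - public:{public} private:{private}")
```

A /16 splits into eight /19 blocks of 8,192 addresses each, so this layout uses six and leaves two free. Subnet size matters on EKS because the default Amazon VPC CNI plugin gives each pod an IP address from the VPC subnets.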

Viewing cluster information

```text
➜ ekslab eksctl get nodegroup --cluster=basic-cluster
CLUSTER        NODEGROUP  CREATED               MIN SIZE  MAX SIZE  DESIRED CAPACITY  INSTANCE TYPE  IMAGE ID
basic-cluster  ng-1       2020-09-01T09:00:07Z  10        10        10                m5.large       ami-0b6f41e05739de6f7
basic-cluster  ng-2       2020-09-01T09:00:08Z  2         2         2                 m5.xlarge      ami-0b6f41e05739de6f7

➜ ekslab eksctl get cluster
NAME           REGION
basic-cluster  ap-northeast-1
```
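The `eksctl get nodegroup` table is easy to check with a script, for example to confirm that the desired capacities add up to the 12 nodes requested in `cluster.yaml`. The sketch below parses the text exactly as shown above. It assumes whitespace-separated columns and single-token values, and the function name is hypothetical. For anything more robust, `eksctl get` commands also accept `-o json` or `-o yaml`.

```python
from typing import Dict, List

FIELDS = ["cluster", "nodegroup", "created", "min_size", "max_size",
          "desired_capacity", "instance_type", "image_id"]


def parse_nodegroups(table: str) -> List[Dict[str, str]]:
    """Hypothetical helper: parse `eksctl get nodegroup` table text.

    The header row is skipped because several column titles contain spaces;
    each data row is split on whitespace and mapped onto FIELDS.
    """
    lines = [ln for ln in table.strip().splitlines() if ln.strip()]
    rows = []
    for line in lines[1:]:
        parts = line.split()
        if len(parts) != len(FIELDS):
            raise ValueError(f"unexpected row: {line!r}")
        rows.append(dict(zip(FIELDS, parts)))
    return rows


SAMPLE = """
CLUSTER        NODEGROUP  CREATED               MIN SIZE  MAX SIZE  DESIRED CAPACITY  INSTANCE TYPE  IMAGE ID
basic-cluster  ng-1       2020-09-01T09:00:07Z  10        10        10                m5.large       ami-0b6f41e05739de6f7
basic-cluster  ng-2       2020-09-01T09:00:08Z  2         2         2                 m5.xlarge      ami-0b6f41e05739de6f7
"""

if __name__ == "__main__":
    groups = parse_nodegroups(SAMPLE)
    total = sum(int(g["desired_capacity"]) for g in groups)
    print([g["nodegroup"] for g in groups], "total desired nodes:", total)
```

To parse live output instead of the sample, capture it with `subprocess.run(["eksctl", "get", "nodegroup", "--cluster=basic-cluster"], capture_output=True, text=True, check=True).stdout`.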


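Deleting the cluster

```shell
eksctl delete cluster --name=<cluster-name>   # delete a cluster by name, e.g. one created with the defaults (use its auto-generated name)
eksctl delete cluster -f cluster.yaml         # delete the cluster defined in a config file
```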

Output:

```text
[ℹ] eksctl version 0.23.0
[ℹ] using region ap-northeast-1
[ℹ] deleting EKS cluster "basic-cluster"
[ℹ] deleted 0 Fargate profile(s)
[✔] kubeconfig has been updated
[ℹ] cleaning up LoadBalancer services
[ℹ] 2 sequential tasks: { 2 parallel sub-tasks: { delete nodegroup "ng-2", delete nodegroup "ng-1" }, delete cluster control plane "basic-cluster" [async] }
[ℹ] will delete stack "eksctl-basic-cluster-nodegroup-ng-2"
[ℹ] waiting for stack "eksctl-basic-cluster-nodegroup-ng-2" to get deleted
[ℹ] will delete stack "eksctl-basic-cluster-nodegroup-ng-1"
[ℹ] waiting for stack "eksctl-basic-cluster-nodegroup-ng-1" to get deleted
[ℹ] will delete stack "eksctl-basic-cluster-cluster"
[✔] all cluster resources were deleted
```
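Each part of this walkthrough lives in a CloudFormation stack named after the cluster. The deletion log shows the order: the node group stacks go first, in parallel, and the cluster stack goes last, since the node groups run inside the network that the cluster stack created. If a deletion fails partway, these stack names are what to look for in the CloudFormation console. The Python sketch below derives them from the naming pattern seen in the logs (`eksctl-<cluster>-cluster`, `eksctl-<cluster>-nodegroup-<name>`). The pattern is inferred from this output, and the function name is hypothetical.

```python
from typing import List


def deletion_plan(cluster: str, nodegroups: List[str]) -> List[List[str]]:
    """Hypothetical helper: CloudFormation stack names in deletion order.

    Returns a list of phases: first the node group stacks (which the log
    shows being deleted in parallel), then the cluster stack.
    """
    nodegroup_stacks = [f"eksctl-{cluster}-nodegroup-{ng}" for ng in nodegroups]
    return [nodegroup_stacks, [f"eksctl-{cluster}-cluster"]]


if __name__ == "__main__":
    plan = deletion_plan("basic-cluster", ["ng-1", "ng-2"])
    for phase, stacks in enumerate(plan, start=1):
        print(f"phase {phase}: {', '.join(stacks)}")
```

Any stack that remains can then be inspected with `aws cloudformation describe-stacks --stack-name <name>`.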