Argo: Argo Rollouts

Argo Rollouts

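Argo Rollouts is a subproject of the Argo project. Used together with Argo CD, it provides advanced deployment capabilities on Kubernetes, including:

- Blue-green deployment

- Canary releases and canary analysis

- Progressive delivery

It is an application orchestration controller that can be used in place of the Deployment controller.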

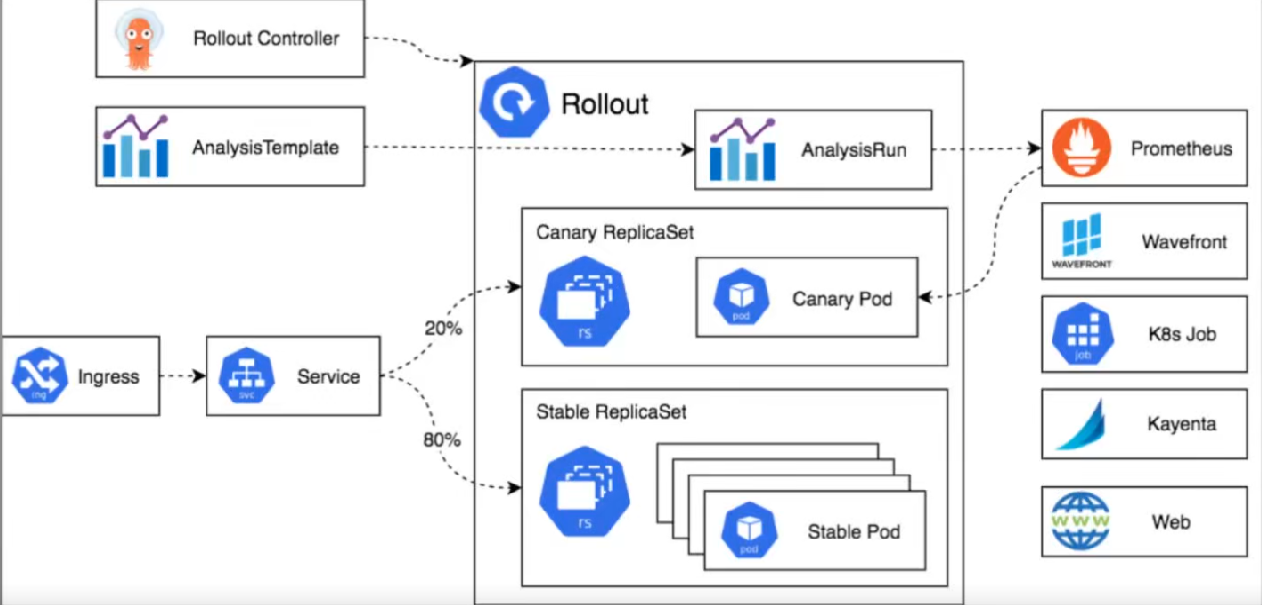

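It integrates with ingress controllers (NGINX, ALB) and service meshes (Istio, Linkerd, SMI) and uses their traffic-management features to shift traffic during a rollout. It can also query and interpret metrics from metric providers (Prometheus, Kubernetes Jobs, Web, Datadog) to verify the result of a blue-green or canary deployment, and then decide automatically whether to promote or roll back.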

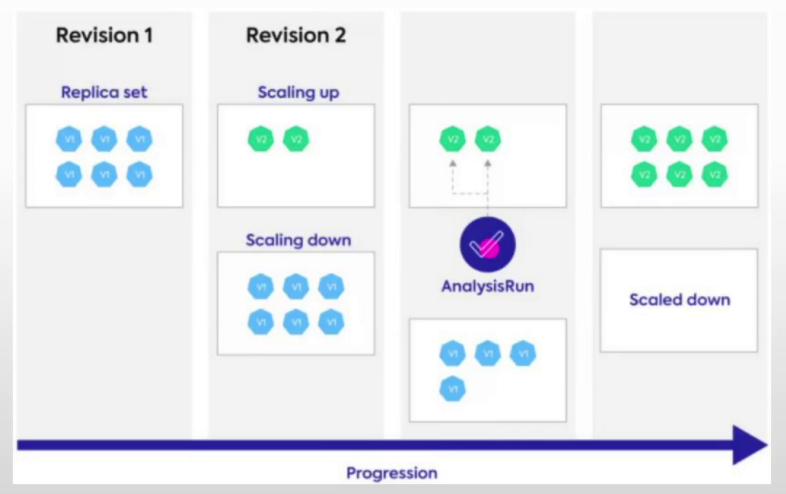

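How it works: like a Deployment, the Rollout controller relies on ReplicaSets to create, scale, and delete the application's pods. The pod template those ReplicaSets are built from is defined in the Rollout's spec.template field.

CRD resources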

- Rollout

- AnalysisTemplate

- ClusterAnalysisTemplate

- AnalysisRun

Architecture

It consists of the following components:

- Argo Rollouts controller

- Rollout CRD

- ReplicaSet

- Ingress/Service

- AnalysisTemplate/AnalysisRun

- Metric providers

- CLI/GUI

Configuration

Rollout resource

Short name: ro

Commands

kubectl-argo-rollouts get rollout ro-spring-boot-hello --watch

kubectl-argo-rollouts set image ro-spring-boot-hello spring-boot-hello=ikubernetes/spring-boot-helloworld:v0.9.5

kubectl-argo-rollouts promote ro-spring-boot-hello

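Syntax

apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
spec:
  analysis:
    successfulRunHistoryLimit: int
    unsuccessfulRunHistoryLimit: int
  minReadySeconds: int
  paused: boolean
  progressDeadlineAbort: boolean
  progressDeadlineSeconds: int
  replicas: int # number of replicas
  restartAt: str
  revisionHistoryLimit: int # number of old revisions (ReplicaSets) to keep
  rollbackWindow:
    revisions: int
  selector: # label selector
    matchExpressions:
    - key: str
      operator: str
      values: [str]
    matchLabels: {}
  strategy: # release strategy
    blueGreen: # blue-green deployment settings
      abortScaleDownDelaySeconds: int
      activeMetadata:
        annotations: {}
        labels: {}
      activeService: str # Service that carries live traffic; it is switched to the new version on promotion
      antiAffinity:
        preferredDuringSchedulingIgnoredDuringExecution:
          weight: int
        requiredDuringSchedulingIgnoredDuringExecution:
      # Promote (switch traffic) automatically. In a blue-green rollout, once the new pods
      # are up alongside the old ones, traffic is switched to the new pods automatically
      # by default, and the old pods are removed after 30s
      autoPromotionEnabled: boolean
      autoPromotionSeconds: int # promote automatically after the given number of seconds
      maxUnavailable: # maximum number or percentage of pods that may be unavailable during the update
      # Analysis run after the promote (traffic switch). If the run fails or errors, the
      # Rollout is aborted and traffic is switched back to the previous stable pods.
      # References AnalysisTemplates and passes argument values to them
      postPromotionAnalysis:
        analysisRunMetadata:
        args:
        - name: str
          value: str
          valueFrom:
            fieldRef:
              fieldPath: str
            podTemplateHashValue: str
        dryRun:
        - metricName: str
        measurementRetention:
        - metricName: str
          limit: int
        templates:
        - templateName: str
          clusterScope: boolean
      # Analysis run before the promote; its result decides whether the Rollout switches
      # traffic or aborts the update. Same options as spec.strategy.blueGreen.postPromotionAnalysis
      prePromotionAnalysis:
      previewMetadata: # metadata added to the preview pods during the update
        annotations: {}
        labels: {}
      previewReplicaCount: int # number of preview pods; defaults to 100% of the stable replica count
      previewService: str # Service for the preview version, i.e. the new version being rolled out
      scaleDownDelayRevisionLimit: int
      scaleDownDelaySeconds: int
    canary: # canary release settings
      abortScaleDownDelaySeconds: int # with traffic routing enabled, delay before scaling down the canary pods after an aborted update; default 30s
      # Optional analysis that runs in the background during the rolling update.
      # Other options are the same as spec.strategy.blueGreen.postPromotionAnalysis
      analysis:
        # Index of the step in steps at which the analysis starts. While it runs, a single
        # failed measurement triggers a rollback unless the template's failureLimit allows more
        startingStep: int
        ...
      antiAffinity: # anti-affinity between canary pods and previous-version pods; same options as spec.strategy.blueGreen.antiAffinity
      canaryMetadata: # metadata added to canary pods; present only during the canary update, since the pods become the stable version once it completes
        annotations: {}
        labels: {}
      canaryService: str # Service that the controller points at the canary pods; traffic routing depends on it
      dynamicStableScale: boolean
      maxSurge:
      maxUnavailable:
      minPodsPerReplicaSet: int
      pingPong:
        pingService: str
        pongService: str
      scaleDownDelayRevisionLimit: int # number of old ReplicaSets allowed to keep running before they are scaled down
      scaleDownDelaySeconds: int # with traffic routing enabled, delay before scaling down the previous ReplicaSet; default 30s
      stableMetadata: # metadata added to the stable pods
        annotations: {}
        labels: {}
      stableService: str # Service that the controller points at the stable pods; traffic routing depends on it
      steps: # steps to execute during the update, optional
      - analysis: # same options as spec.strategy.blueGreen.postPromotionAnalysis
      - experiment:
          analyses:
          - name: str
            args:
            - name: str
              value: str
              valueFrom:
                fieldRef:
                  fieldPath: str
                podTemplateHashValue: str
            clusterScope: boolean
            requiredForCompletion: boolean
            templateName: str
          duration: str
          templates:
          - name: str
            metadata:
              annotations: {}
              labels: {}
            selector:
              matchExpressions:
              matchLabels: {}
            replicas: int
            service:
              name: str
            specRef: str
            weight: int
      - pause: # pause the rollout
          # Pause duration. In a canary release the rollout pauses after shifting part of
          # the traffic, then either continues automatically (duration set) or waits for a
          # manual promote (no duration)
          duration:
      - setCanaryScale: # how canary pod scaling relates to the traffic weight
          # Default true: the canary pod ratio follows the traffic weight. For example, with
          # weight 10 and 10 replicas, 1 new canary pod is started and takes 10% of the
          # traffic while the old pods take the remaining 90%. With 5 replicas and weight 10
          # the result is less than 1 pod, so 1 pod is started (at least 1 is guaranteed)
          matchTrafficWeight: boolean
          replicas: int # explicit number of canary pods (the previously set traffic weight is unchanged)
          weight: int # canary pods as a percentage of the stable replica count (the previously set traffic weight is unchanged)
      - setHeaderRoute:
          name: str
          match:
          - headerName: str
            headerValue:
              exact: str
              prefix: str
              regex: str
      - setMirrorRoute:
          name: str
          match:
          - headers: {}
            method:
              exact: str
              prefix: str
              regex: str
            path:
              exact: str
              prefix: str
              regex: str
          percentage: int
      - setWeight: int # share of pods activated in the canary ReplicaSet, and share of traffic sent to the canary
      trafficRouting: # ingress controller or service mesh used to split and shift traffic; configure the block for whichever is in use
        managedRoutes:
        - name: str
        alb:
        ambassador:
        apisix:
        appMesh:
        istio: # traffic shifting through Istio
          destinationRule:
            name: str
            canarySubsetName: str
            stableSubsetName: str
          virtualService:
            name: str
            routes: [str]
            tcpRoutes:
            - port: int
            tlsRoutes:
            - port: int
              sniHosts: [str]
          virtualServices:
          - name: str
            routes: [str]
            tcpRoutes:
            - port: int
            tlsRoutes: # TLS route entries on the VirtualService to adjust dynamically
            - port: int
              sniHosts: [str]
        nginx:
          additionalIngressAnnotations: {}
          annotationPrefix: str
          stableIngress: str
          stableIngresses: [str] # names of the stable Ingresses to adjust
        plugins:
        smi:
        traefik:
  template: # pod template
    metadata:
    spec:
  workloadRef:
    apiVersion: str
    kind: str
    name: str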
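As a sketch of how the setCanaryScale step described above might be combined with setWeight: the fragment below first starts a fixed number of canary pods without changing the traffic weight, then shifts traffic to them, and finally hands pod scaling back to the traffic weight. The replica count, weights, and pause durations are illustrative assumptions, and to my understanding of the upstream spec the step only takes effect when trafficRouting is configured.

```yaml
# Fragment of spec.strategy.canary; all numbers are illustrative assumptions.
steps:
- setCanaryScale:
    replicas: 2                # start 2 canary pods; the traffic weight is untouched
- pause: {duration: 30s}
- setWeight: 10                # now send 10% of the traffic to those 2 pods
- pause: {}                    # wait for a manual promote
- setCanaryScale:
    matchTrafficWeight: true   # back to the default: pod ratio follows the weight
- setWeight: 50
- pause: {duration: 30s}
```

Decoupling pod count from weight this way lets the canary warm up before it receives any traffic.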

Example

apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: ro-spring-boot-hello
spec:
  replicas: 10
  strategy:
    canary:
      steps:
      - setWeight: 10 # 10% of traffic and of pods
      - pause: {} # pause indefinitely and wait for the user to promote manually
      - setWeight: 20 # raise traffic and pods to 20%
      - pause: # pause for 20 seconds, then run the next step
          duration: 20
      - setWeight: 30
      - pause:
          duration: 20
      - setWeight: 40
      - pause:
          duration: 20
      - setWeight: 60
      - pause:
          duration: 20
      - setWeight: 80 # up to 80% of traffic and of pods
      - pause: # pause another 20 seconds; with no further steps defined, the rollout then completes at 100% of traffic and pods
          duration: 20
  revisionHistoryLimit: 5 # keep 5 old revisions
  selector:
    matchLabels:
      app: spring-boot-hello
  template:
    metadata:
      labels:
        app: spring-boot-hello
    spec:
      containers:
      - name: spring-boot-hello
        image: ikubernetes/spring-boot-helloworld:v0.9.2
        ports:
        - name: http
          containerPort: 80
          protocol: TCP
        resources:
          requests:
            memory: 32Mi
            cpu: 50m
        livenessProbe:
          httpGet:
            path: '/'
            port: 80
            scheme: HTTP
          initialDelaySeconds: 3
        readinessProbe:
          httpGet:
            path: '/'
            port: 80
            scheme: HTTP
          initialDelaySeconds: 5

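AnalysisTemplate resource

Short name: at

Used in Argo Rollouts to analyze and measure results that drive progressive delivery.

The analysis logic is defined in an AnalysisTemplate, which a Rollout then references.

When a given rollout runs, the Rollout instantiates the referenced AnalysisTemplate as an AnalysisRun resource.

Scenario

Suppose a canary release references an AnalysisTemplate that sets a health threshold on requests: if responses with 2xx status codes make up at least 95% of all requests, the service is considered healthy and the rollout continues; otherwise it is rolled back. Within the remaining 5%, separate limits can be set on the share of 4xx and 5xx responses.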
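The scenario above could be sketched as an AnalysisTemplate with two metrics, one for the 2xx share and one for the 5xx share. This is only a sketch under assumptions: the template name, the 1% limit on 5xx responses, and the interval and count values are illustrative, and the queries assume Istio's istio_requests_total metric is scraped by the Prometheus instance at the address shown.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: http-health              # hypothetical name
spec:
  args:
  - name: service-name
  metrics:
  - name: share-2xx              # at least 95% of requests must return 2xx
    interval: 20s
    count: 3
    successCondition: len(result) == 0 || result[0] >= 0.95
    provider:
      prometheus:
        address: http://prometheus.istio-system.svc.cluster.local:9090
        query: |
          sum(irate(
            istio_requests_total{destination_service=~"{{args.service-name}}",response_code=~"2.*"}[1m]
          )) /
          sum(irate(
            istio_requests_total{destination_service=~"{{args.service-name}}"}[1m]
          ))
  - name: share-5xx              # assumed limit: at most 1% of requests return 5xx
    interval: 20s
    count: 3
    successCondition: len(result) == 0 || result[0] <= 0.01
    provider:
      prometheus:
        address: http://prometheus.istio-system.svc.cluster.local:9090
        query: |
          sum(irate(
            istio_requests_total{destination_service=~"{{args.service-name}}",response_code=~"5.*"}[1m]
          )) /
          sum(irate(
            istio_requests_total{destination_service=~"{{args.service-name}}"}[1m]
          ))
```

Each metric is evaluated separately, so the run only succeeds when both conditions hold.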

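Syntax

apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
spec:
  args: # template parameters, referenced as {{args.<name>}}
  - name: str # parameter name
    value: str
    valueFrom: # take the value from a field reference or from the pod template hash
      fieldRef:
        fieldPath: str
      podTemplateHashValue: str
  dryRun: # metrics to run in dry-run mode; their results do not affect the final analysis result
  - metricName: str
  measurementRetention: # how many past measurements to keep; dry-run metrics support retention as well
  - metricName: str # metric name
    limit: int # number of measurements to keep
  metrics: # required; the metrics used to analyze the delivery
  - name: str # metric name
    provider: # metric provider; configure the one in use
      cloudWatch:
      datadog:
      graphite:
      influxdb:
      job:
      kayenta:
      newRelic:
      plugin:
      prometheus:
        address: str # Prometheus address
        authentication:
          sigv4:
            profile: str
            region: str
            roleArn: str
        headers:
        - key: str
          value: str
        insecure: boolean # skip TLS certificate verification
        query: str # PromQL query
        timeout: int # query timeout
      skywalking:
        address: str
        interval: str
        query: str
      wavefront:
      web:
    consecutiveErrorLimit:
    count: # total number of measurements to take
    failureCondition: str # expression that marks a measurement as failed; the counterpart of successCondition
    failureLimit:
    inconclusiveLimit:
    initialDelay: str
    interval: str # interval between measurements
    # Expression that marks a measurement as successful. With no user requests or no data,
    # evaluation can fail with a "slice index out of range" error. For array results the
    # recommended form is: len(result) == 0 || <actual expression>
    # For results that may be NaN, use: isNaN(result) || <actual expression>
    # Expressions support and/or operators and the default(result, <default value>) function.
    # With Prometheus, read the value with result[0]; combined with the recommended form this
    # gives: len(result) == 0 || result[0] > 1234
    successCondition: <expression>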
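To illustrate how successCondition and failureCondition can be used together, here is a minimal sketch. The thresholds (0.95 and 0.90), the template name, and the count and failureLimit values are assumptions for illustration. As far as I understand the upstream behavior, a measurement that satisfies neither expression is recorded as inconclusive, and a metric is marked failed only once its failed measurements exceed failureLimit.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: success-rate-with-failure-band   # hypothetical name
spec:
  args:
  - name: service-name
  metrics:
  - name: success-rate
    interval: 20s
    count: 5
    failureLimit: 1        # assumed: tolerate one failed measurement
    # success: no data yet, or at least 95% of requests are not 5xx
    successCondition: len(result) == 0 || result[0] >= 0.95
    # failure: data is present and the ratio is below 90%
    failureCondition: len(result) > 0 && result[0] < 0.90
    # a value between 0.90 and 0.95 matches neither expression
    provider:
      prometheus:
        address: http://prometheus.istio-system.svc.cluster.local:9090
        query: |
          sum(irate(
            istio_requests_total{reporter="source",destination_service=~"{{args.service-name}}",response_code!~"5.*"}[1m]
          )) /
          sum(irate(
            istio_requests_total{reporter="source",destination_service=~"{{args.service-name}}"}[1m]
          ))
```

Guarding both expressions with len(result) keeps an empty Prometheus result from raising the index error mentioned above.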

Example

apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: success-rate
spec:
  args:
  - name: service-name
  metrics:
  - name: success-rate
    successCondition: len(result) == 0 || result[0] >= 0.95 # evaluate the Prometheus result; Prometheus values are read as result[0]
    interval: 20s
    count: 3
    failureLimit: 3
    provider:
      prometheus:
        address: http://prometheus.istio-system.svc.cluster.local:9090
        # share of requests in the past minute that did not return 5xx (the success rate)
        query: |
          sum(irate(
            istio_requests_total{reporter="source",destination_service=~"{{args.service-name}}",response_code!~"5.*"}[1m]
          )) /
          sum(irate(
            istio_requests_total{reporter="source",destination_service=~"{{args.service-name}}"}[1m]
          ))

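Case studies

The demo repositories can be cloned into your own local GitLab for easier experimentation.

Code repository: https://gitee.com/mageedu/spring-boot-helloWorld.git

Configuration repository: https://gitee.com/mageedu/spring-boot-helloworld-deployment.git

Example 1: run the demo as a basic canary (no ingress controller or service mesh)

1) Create the Rollout resource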

kubectl apply -f - <<eof
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: ro-spring-boot-hello
spec:
  replicas: 5
  strategy:
    canary:
      steps:
      - setWeight: 10 # 10% of traffic and of pods
      - pause: {} # pause indefinitely and wait for the user to promote manually
      - setWeight: 20 # raise traffic and pods to 20%
      - pause: # pause for 20 seconds, then run the next step
          duration: 20
      - setWeight: 30
      - pause:
          duration: 20
      - setWeight: 40
      - pause:
          duration: 20
      - setWeight: 60
      - pause:
          duration: 20
      - setWeight: 80 # up to 80% of traffic and of pods
      - pause: # pause another 20 seconds; with no further steps defined, the rollout then completes at 100% of traffic and pods
          duration: 20
  revisionHistoryLimit: 5 # keep 5 old revisions
  selector:
    matchLabels:
      app: spring-boot-hello
  template:
    metadata:
      labels:
        app: spring-boot-hello
    spec:
      containers:
      - name: spring-boot-hello
        image: ikubernetes/spring-boot-helloworld:v0.9.2
        ports:
        - name: http
          containerPort: 80
          protocol: TCP
        resources:
          requests:
            memory: 32Mi
            cpu: 50m
        livenessProbe:
          httpGet:
            path: '/'
            port: 80
            scheme: HTTP
          initialDelaySeconds: 3
        readinessProbe:
          httpGet:
            path: '/'
            port: 80
            scheme: HTTP
          initialDelaySeconds: 5
---
apiVersion: v1
kind: Service
metadata:
  name: spring-boot-hello
spec:
  ports:
  - port: 80
    targetPort: http
    protocol: TCP
    name: http
  selector:
    app: spring-boot-hello
eof

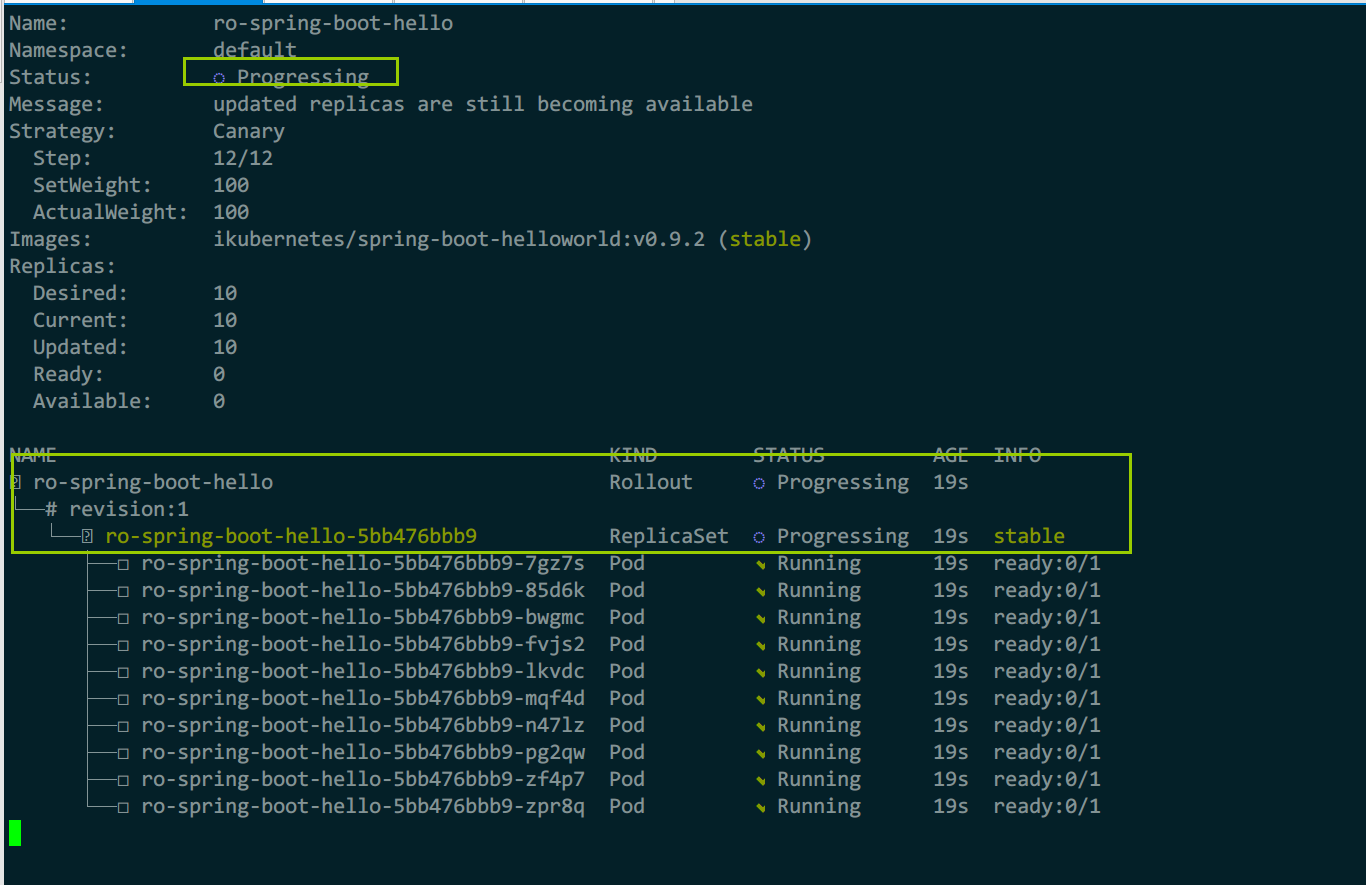

2) Watch the pod status

kubectl-argo-rollouts get rollout ro-spring-boot-hello --watch

由于刚运行,还处于启动状态,revision也是第一个版本

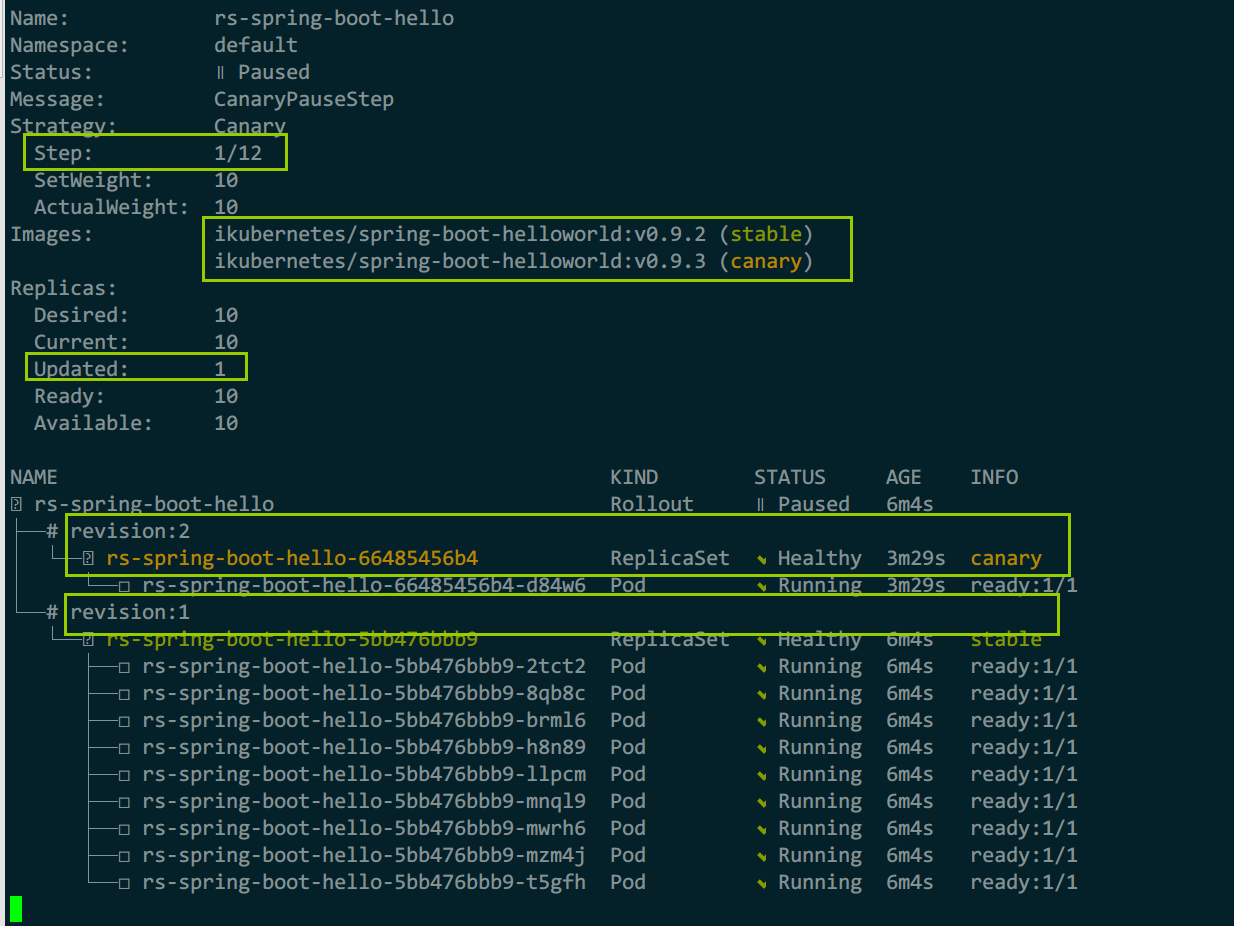

3)更新镜像,再继续监测

kubectl-argo-rollouts set image ro-spring-boot-hello spring-boot-hello=ikubernetes/spring-boot-helloworld:v0.9.5

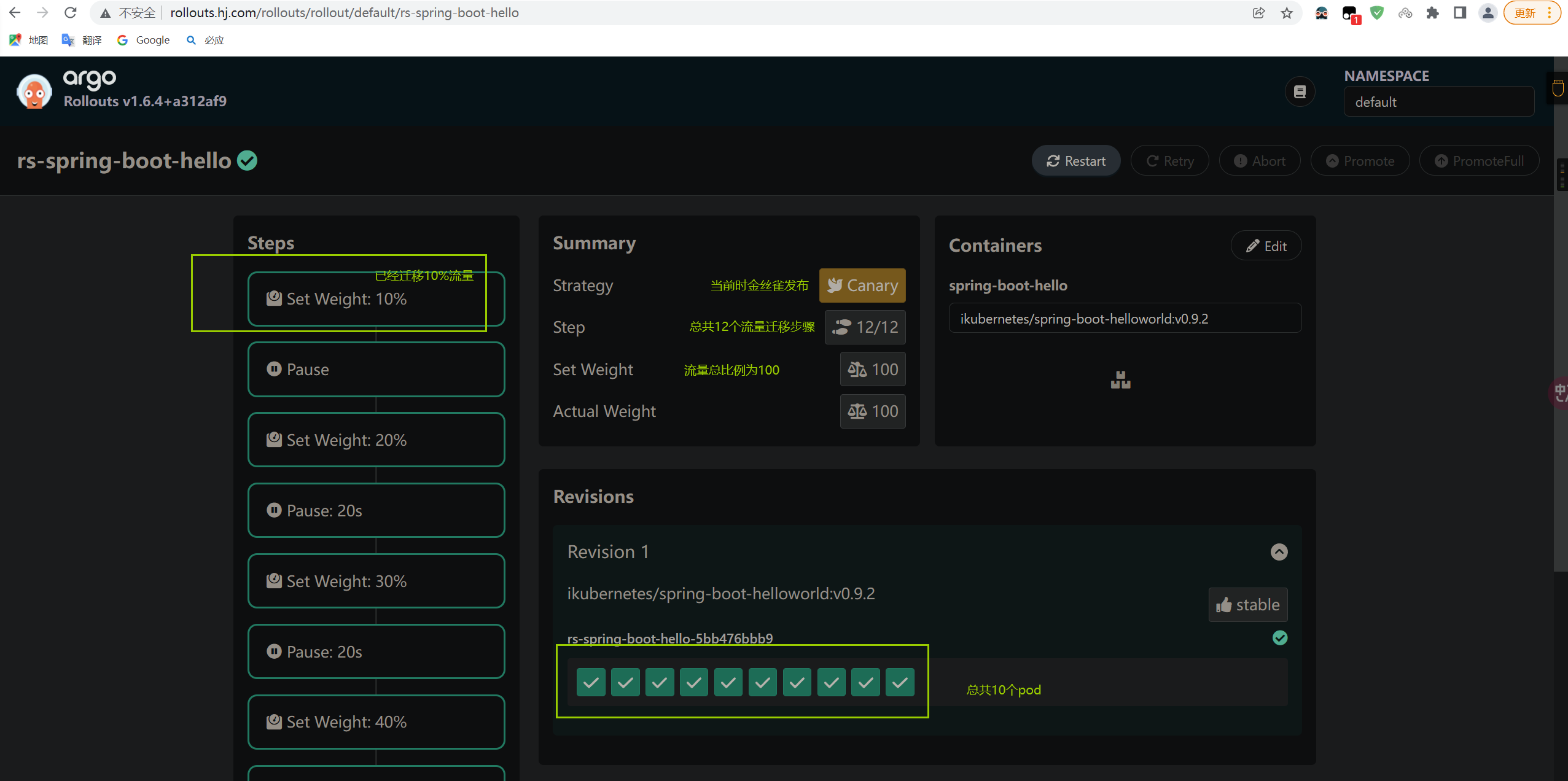

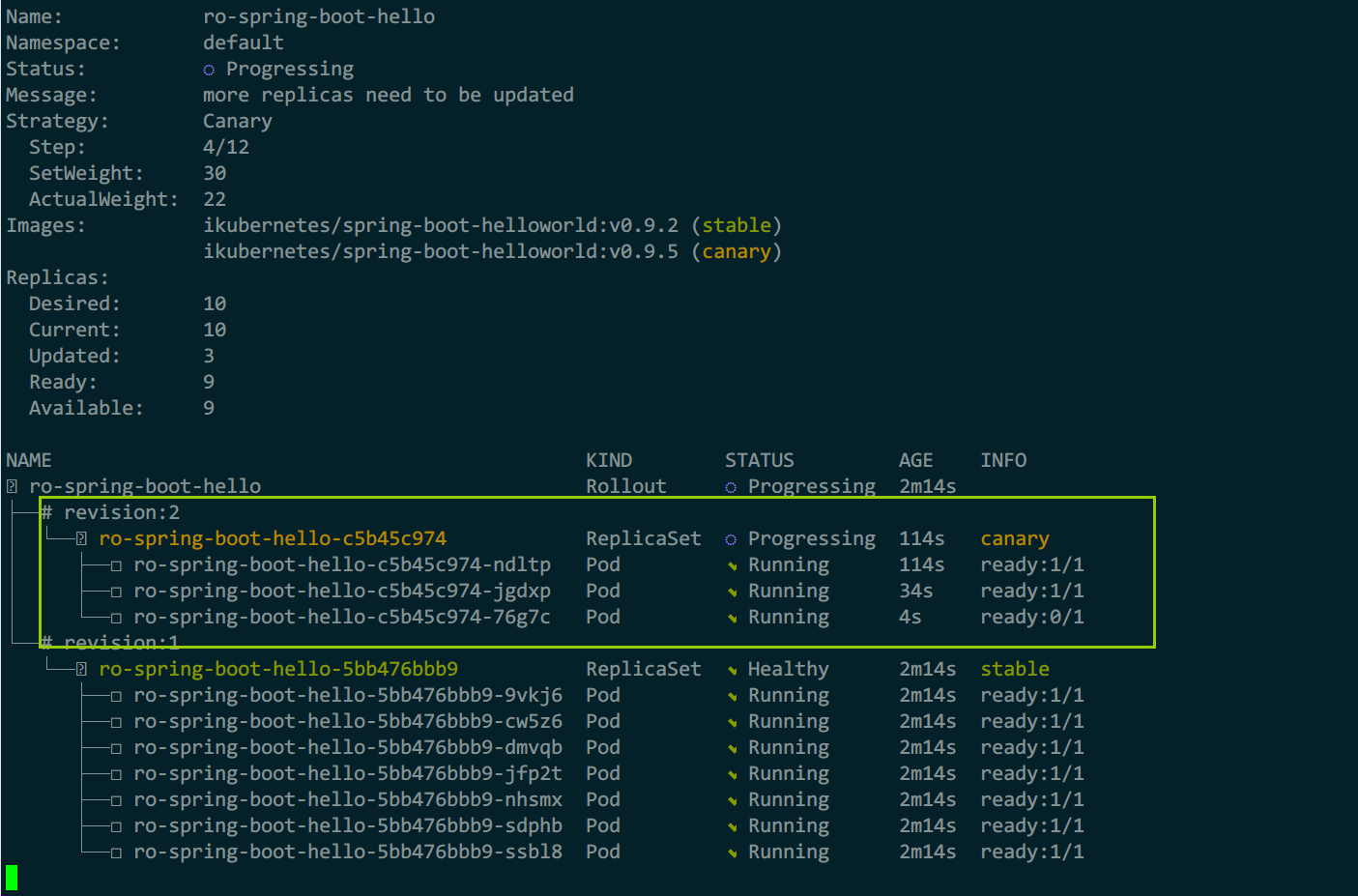

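There are 12 steps in total. The output shows the new image name, and one pod has already been updated.

Revision 1 is the first version; revision 2 is the second version (the new/canary version).

Viewed in the dashboard

4) Promote manually, then keep watching

kubectl-argo-rollouts promote ro-spring-boot-hello

After a 20s wait, the rollout moves on and shifts more traffic.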

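Example 2: canary traffic shifting with Istio

Istio supports two kinds of traffic splitting:

- Host-based: two Services correspond to the two versions, one host per Service

- Subset-based: the cluster is divided into subsets; see the Istio and Envoy configuration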
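For comparison, the host-based variant from the first bullet could be sketched as follows. This is a sketch under assumptions: the two Service names are hypothetical, both Services are assumed to exist and to select app: spring-boot-hello, and the rest of the Rollout (replicas, selector, template, steps) is omitted. The controller is expected to point each Service at the matching ReplicaSet and to adjust the two weights in the named route.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: ro-spring-boot-hello
spec:
  # replicas, selector, template and steps omitted for brevity
  strategy:
    canary:
      canaryService: spring-boot-hello-canary   # hypothetical Service name
      stableService: spring-boot-hello-stable   # hypothetical Service name
      trafficRouting:
        istio:
          virtualService:
            name: hello-ro-vs
            routes:
            - primary
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: hello-ro-vs
spec:
  hosts:
  - spring-boot-hello
  http:
  - name: primary
    route:
    - destination:
        host: spring-boot-hello-stable   # one host per version
      weight: 100
    - destination:
        host: spring-boot-hello-canary
      weight: 0
```

No DestinationRule is needed in this variant, at the cost of maintaining two extra Services.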

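This example uses subsets. During a canary release, the controller's dynamic changes to the VirtualService can cause a problem for automated GitOps deployment: the weights flap momentarily.

Solution:

When deploying through an Argo CD Application, ignore the VirtualService routes in the Application's spec.ignoreDifferences field; the JSON pointer is /spec/http/0

1) Enable Istio sidecar injection

kubectl label ns default istio-injection=enabled

2) Create the Rollout, Service, VirtualService, and DestinationRule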

kubectl apply -f - <<eof
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: ro-spring-boot-hello
spec:
  replicas: 5
  strategy:
    canary:
      trafficRouting:
        istio:
          virtualService: # VirtualService to manage
            name: hello-ro-vs # use this VirtualService
            routes: # routes in hello-ro-vs to adjust
            - primary
          destinationRule: # DestinationRule settings
            name: hello-ro-dr
            canarySubsetName: canary # subset used for the canary
            stableSubsetName: stable # subset used for the stable version
      steps:
      - setCanaryScale:
          matchTrafficWeight: true
      - setWeight: 5
      - pause: {duration: 1m}
      - setWeight: 10
      - pause: {duration: 1m}
      - setWeight: 20
      - pause: {duration: 20}
      - setWeight: 20
      - pause: {duration: 40}
      - setWeight: 40
      - pause: {duration: 20}
      - setWeight: 60
      - pause: {duration: 20}
      - setWeight: 80
      - pause: {duration: 20}
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      app: spring-boot-hello
  template:
    metadata:
      labels:
        app: spring-boot-hello
    spec:
      containers:
      - name: spring-boot-hello
        image: ikubernetes/spring-boot-helloworld:v0.9.2
        ports:
        - name: http
          containerPort: 80
          protocol: TCP
        resources:
          requests:
            memory: 32Mi
            cpu: 50m
        livenessProbe:
          httpGet:
            path: '/'
            port: 80
            scheme: HTTP
          initialDelaySeconds: 3
        readinessProbe:
          httpGet:
            path: '/'
            port: 80
            scheme: HTTP
          initialDelaySeconds: 5
---
apiVersion: v1
kind: Service
metadata:
  name: spring-boot-hello
spec:
  ports:
  - port: 80
    targetPort: http
    protocol: TCP
    name: http
  selector:
    app: spring-boot-hello
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: hello-ro-vs
spec:
  #gateways:
  #- istio-rollout-gateway
  hosts:
  - spring-boot-hello
  http:
  - name: primary # matches spec.strategy.canary.trafficRouting.istio.virtualService.routes in the Rollout
    route:
    - destination:
        host: spring-boot-hello
        subset: stable # matches spec.strategy.canary.trafficRouting.istio.destinationRule.stableSubsetName in the Rollout
      weight: 100
    - destination:
        host: spring-boot-hello
        subset: canary # matches spec.strategy.canary.trafficRouting.istio.destinationRule.canarySubsetName in the Rollout
      weight: 0
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: hello-ro-dr
spec:
  host: spring-boot-hello
  subsets:
  - name: canary
    labels:
      app: spring-boot-hello
  - name: stable
    labels:
      app: spring-boot-hello
eof

3) Watch the Rollout

kubectl-argo-rollouts get rollout ro-spring-boot-hello --watch

4) Update the image, then keep watching

kubectl-argo-rollouts set image ro-spring-boot-hello spring-boot-hello=ikubernetes/spring-boot-helloworld:v0.9.5

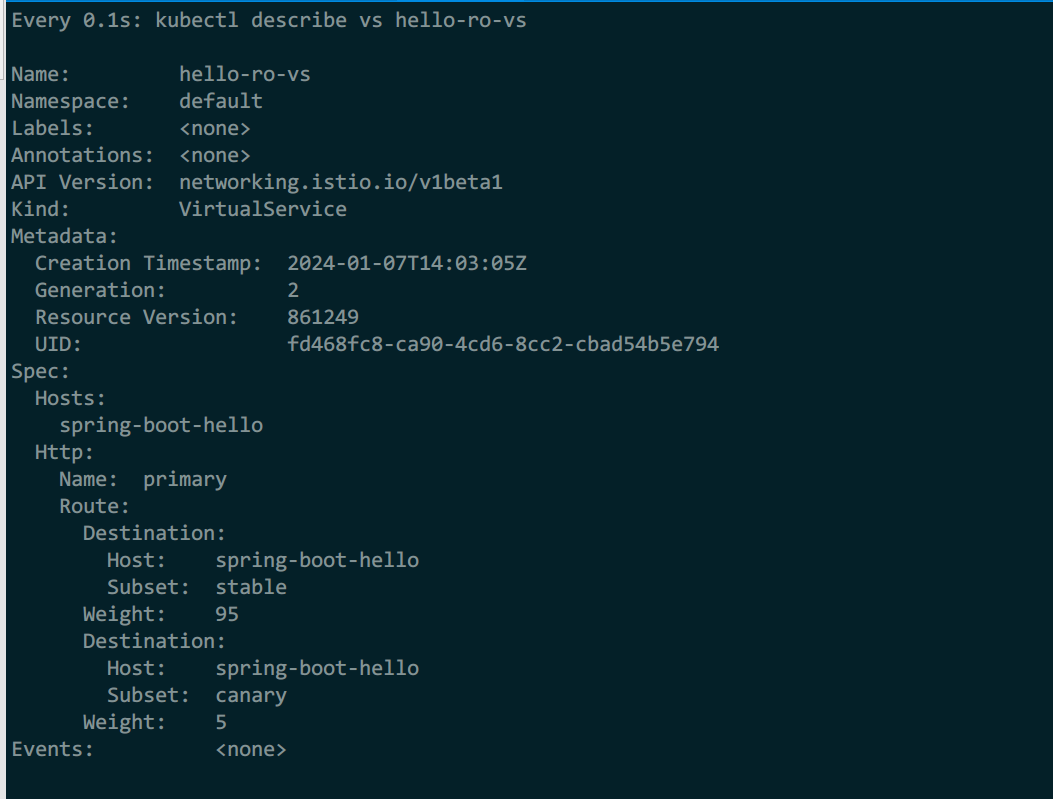

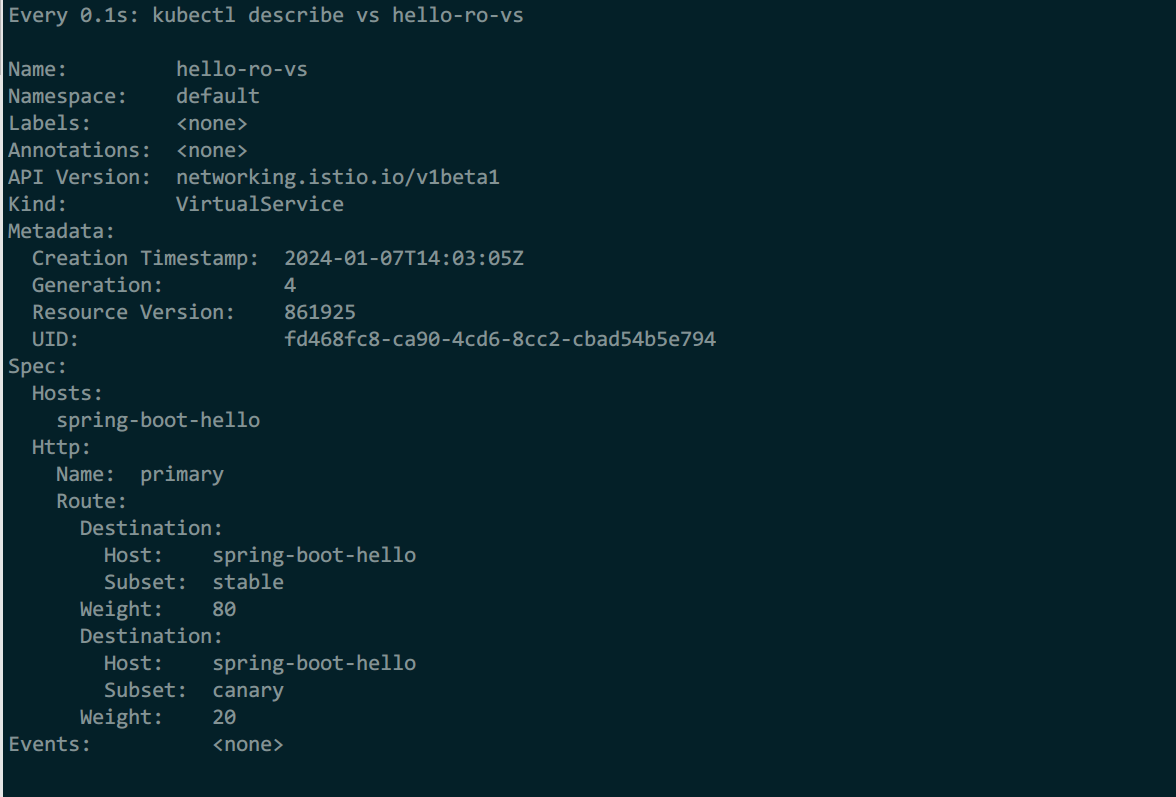

5) Watch the traffic weights change on the VirtualService

watch -n .1 'kubectl describe vs hello-ro-vs'

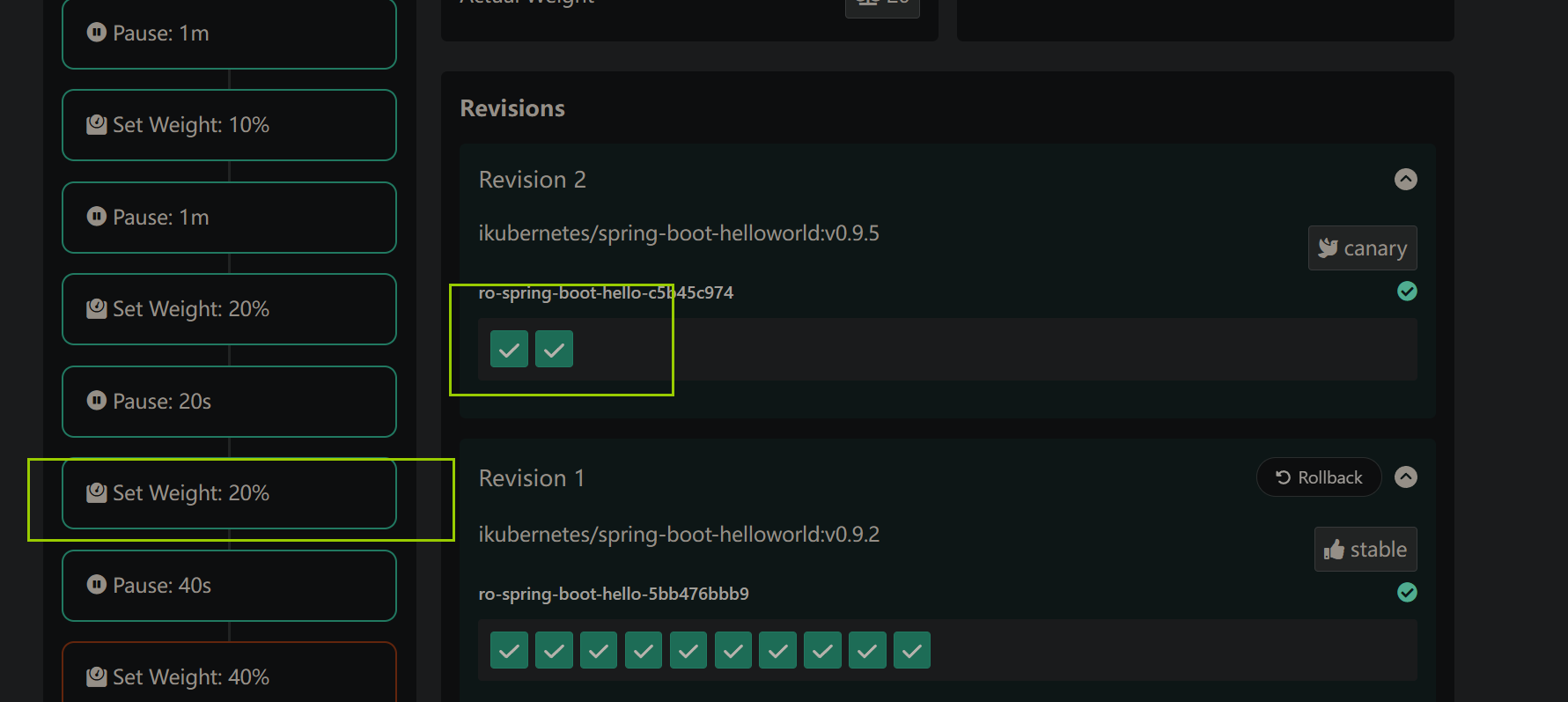

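The dashboard shows the result: 5% is updated (one pod is updated, because at least one must be)

Traffic weights on the VirtualService

A little later, 20% of the traffic has been shifted

Example 3: canary release with Istio, driven by Prometheus analysis

1) Deploy Prometheus

Here the manifest bundled with Istio is used directly

kubectl apply -f samples/addons/prometheus.yaml

2) Manifests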

apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: success-rate
spec:
  args:
  - name: service-name
  metrics:
  - name: success-rate
    successCondition: len(result) == 0 || result[0] >= 0.95 # evaluate the Prometheus result; Prometheus values are read as result[0]
    interval: 20s
    count: 3
    failureLimit: 3
    provider:
      prometheus:
        address: http://prometheus.istio-system.svc.cluster.local:9090
        # share of requests in the past minute that did not return 5xx (the success rate)
        query: |
          sum(irate(
            istio_requests_total{reporter="source",destination_service=~"{{args.service-name}}",response_code!~"5.*"}[1m]
          )) /
          sum(irate(
            istio_requests_total{reporter="source",destination_service=~"{{args.service-name}}"}[1m]
          ))
---
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: ro-spring-boot-hello
spec:
  replicas: 5
  strategy:
    canary:
      trafficRouting:
        istio:
          virtualService:
            name: hello-ro-vs
            routes:
            - primary
          destinationRule:
            name: hello-ro-dr
            canarySubsetName: canary
            stableSubsetName: stable
      analysis:
        templates:
        - templateName: success-rate
        args:
        - name: service-name
          value: spring-boot-hello.default.svc.cluster.local
        startingStep: 2 # start the background analysis at step index 2, i.e. after the first two steps
      steps:
      - setWeight: 5
      - pause: {duration: 1m}
      - setWeight: 10
      - pause: {duration: 1m}
      - setWeight: 20
      - pause: {duration: 20}
      - setWeight: 20
      - pause: {duration: 40}
      - setWeight: 40
      - pause: {duration: 20}
      - setWeight: 60
      - pause: {duration: 20}
      - setWeight: 80
      - pause: {duration: 20}
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      app: spring-boot-hello
  template:
    metadata:
      labels:
        app: spring-boot-hello
    spec:
      containers:
      - name: spring-boot-hello
        image: ikubernetes/spring-boot-helloworld:v0.9.2
        ports:
        - name: http
          containerPort: 80
          protocol: TCP
        resources:
          requests:
            memory: 32Mi
            cpu: 50m
        livenessProbe:
          httpGet:
            path: '/'
            port: 80
            scheme: HTTP
          initialDelaySeconds: 3
        readinessProbe:
          httpGet:
            path: '/'
            port: 80
            scheme: HTTP
          initialDelaySeconds: 5
---
apiVersion: v1
kind: Service
metadata:
  name: spring-boot-hello
spec:
  ports:
  - port: 80
    targetPort: http
    protocol: TCP
    name: http
  selector:
    app: spring-boot-hello
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: hello-ro-vs
spec:
  #gateways:
  #- istio-rollout-gateway
  hosts:
  - spring-boot-hello
  http:
  - name: primary
    route:
    - destination:
        host: spring-boot-hello
        subset: stable
      weight: 100
    - destination:
        host: spring-boot-hello
        subset: canary
      weight: 0
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: hello-ro-dr
spec:
  host: spring-boot-hello
  subsets:
  - name: canary
    labels:
      app: spring-boot-hello
  - name: stable
    labels:
      app: spring-boot-hello

3) Watch

kubectl-argo-rollouts get rollout ro-spring-boot-hello --watch

4) Update

kubectl-argo-rollouts set image ro-spring-boot-hello spring-boot-hello=ikubernetes/spring-boot-helloworld:v0.9.5

5) Test

kubectl exec -it admin -- sh

sed -i 's/dl-cdn.alpinelinux.org/mirrors.ustc.edu.cn/g' /etc/apk/repositories ;apk add curl

while :;do curl spring-boot-hello ;sleep .5;done

The traffic analysis succeeds
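The example above runs the analysis in the background. As an alternative sketch, the same template could be referenced from an inline analysis step, in which case the rollout is expected to wait at that step until the AnalysisRun finishes before moving on. The step layout and weights here are illustrative assumptions; the template name and argument are the ones used above.

```yaml
# Fragment of spec.strategy.canary; replaces the background analysis block.
steps:
- setWeight: 5
- pause: {duration: 1m}
- analysis:                      # blocks here until the AnalysisRun completes
    templates:
    - templateName: success-rate
    args:
    - name: service-name
      value: spring-boot-hello.default.svc.cluster.local
- setWeight: 20
- pause: {duration: 20}
- setWeight: 80
- pause: {duration: 20}
```

An inline step suits a one-off gate at a specific weight, while background analysis keeps checking for as long as the rollout is in progress.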

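Example 4: blue-green deployment with Prometheus traffic analysis

Blue-green switches traffic to the new set of pods in one step, so no traffic-splitting policy is needed, and therefore no ingress controller or similar component either.

1) Manifests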

apiVersion: argoproj.io/v1alpha1
kind: AnalysisTemplate
metadata:
  name: success-rate
spec:
  args:
  - name: service-name
  metrics:
  - name: success-rate
    successCondition: len(result) == 0 || result[0] >= 0.95
    interval: 10s
    count: 3
    failureLimit: 2
    provider:
      prometheus:
        address: http://prometheus.istio-system.svc.cluster.local:9090
        query: |
          sum(irate(
            istio_requests_total{reporter="source",destination_service=~"{{args.service-name}}",response_code!~"5.*"}[1m]
          )) /
          sum(irate(
            istio_requests_total{reporter="source",destination_service=~"{{args.service-name}}"}[1m]
          ))
---
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: rollout-helloworld-bluegreen-with-analysis
spec:
  replicas: 3
  revisionHistoryLimit: 5
  selector:
    matchLabels:
      app: rollout-helloworld-bluegreen
  template:
    metadata:
      labels:
        app: rollout-helloworld-bluegreen
    spec:
      containers:
      - name: spring-boot-helloworld
        image: ikubernetes/spring-boot-helloworld:v0.9.2
        ports:
        - containerPort: 80
  strategy:
    blueGreen:
      activeService: spring-boot-helloworld
      previewService: spring-boot-helloworld-preview
      prePromotionAnalysis: # analysis before the switch
        templates:
        - templateName: success-rate
        args:
        - name: service-name
          value: spring-boot-helloworld-preview.default.svc.cluster.local
      postPromotionAnalysis: # analysis after the switch
        templates:
        - templateName: success-rate
        args:
        - name: service-name
          value: spring-boot-helloworld.default.svc.cluster.local
      autoPromotionEnabled: true
---
kind: Service
apiVersion: v1
metadata:
  name: spring-boot-helloworld
spec:
  selector:
    app: rollout-helloworld-bluegreen
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
kind: Service
apiVersion: v1
metadata:
  name: spring-boot-helloworld-preview
spec:
  selector:
    app: rollout-helloworld-bluegreen
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

2) Update

kubectl-argo-rollouts set image rollout-helloworld-bluegreen-with-analysis spring-boot-helloworld=ikubernetes/spring-boot-helloworld:v0.9.5

3) Watch

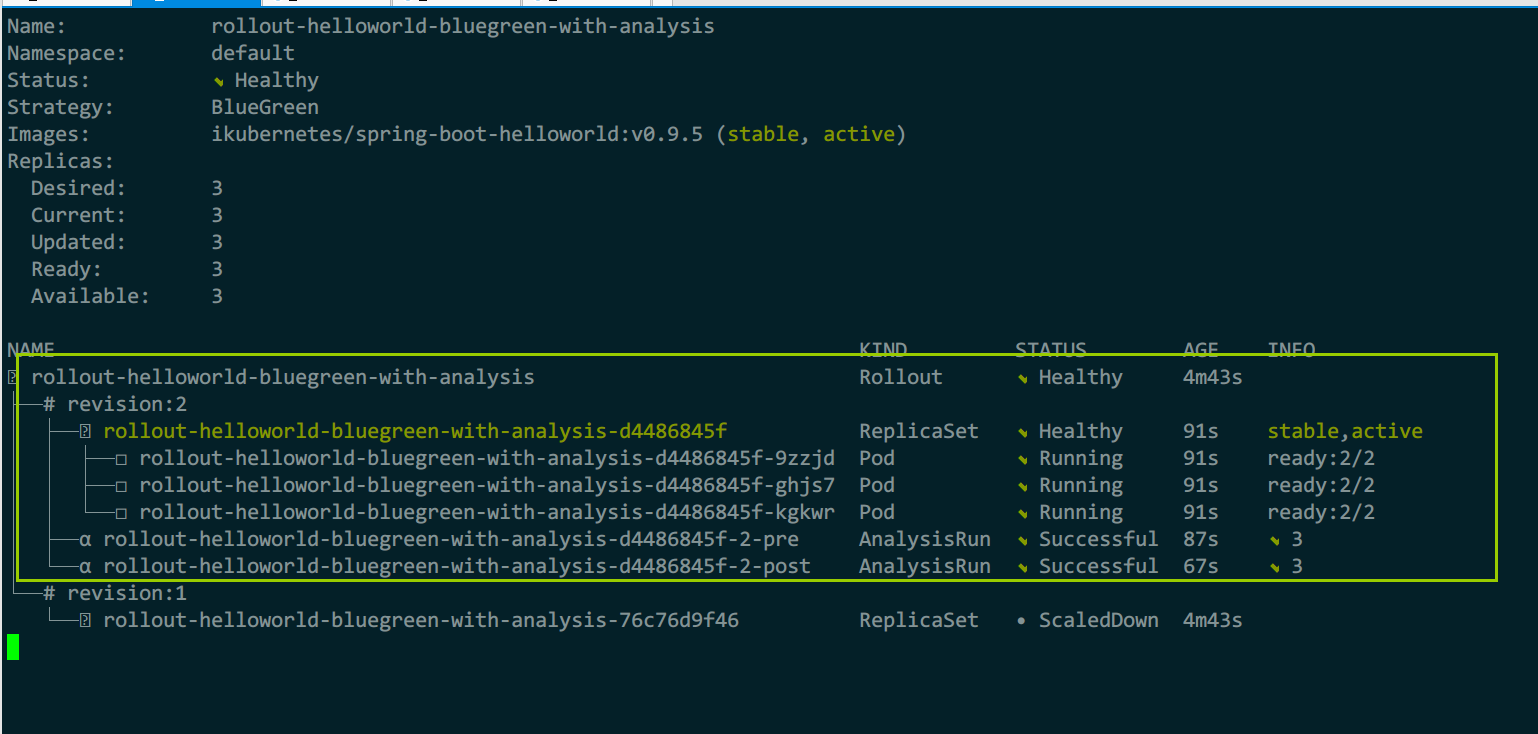

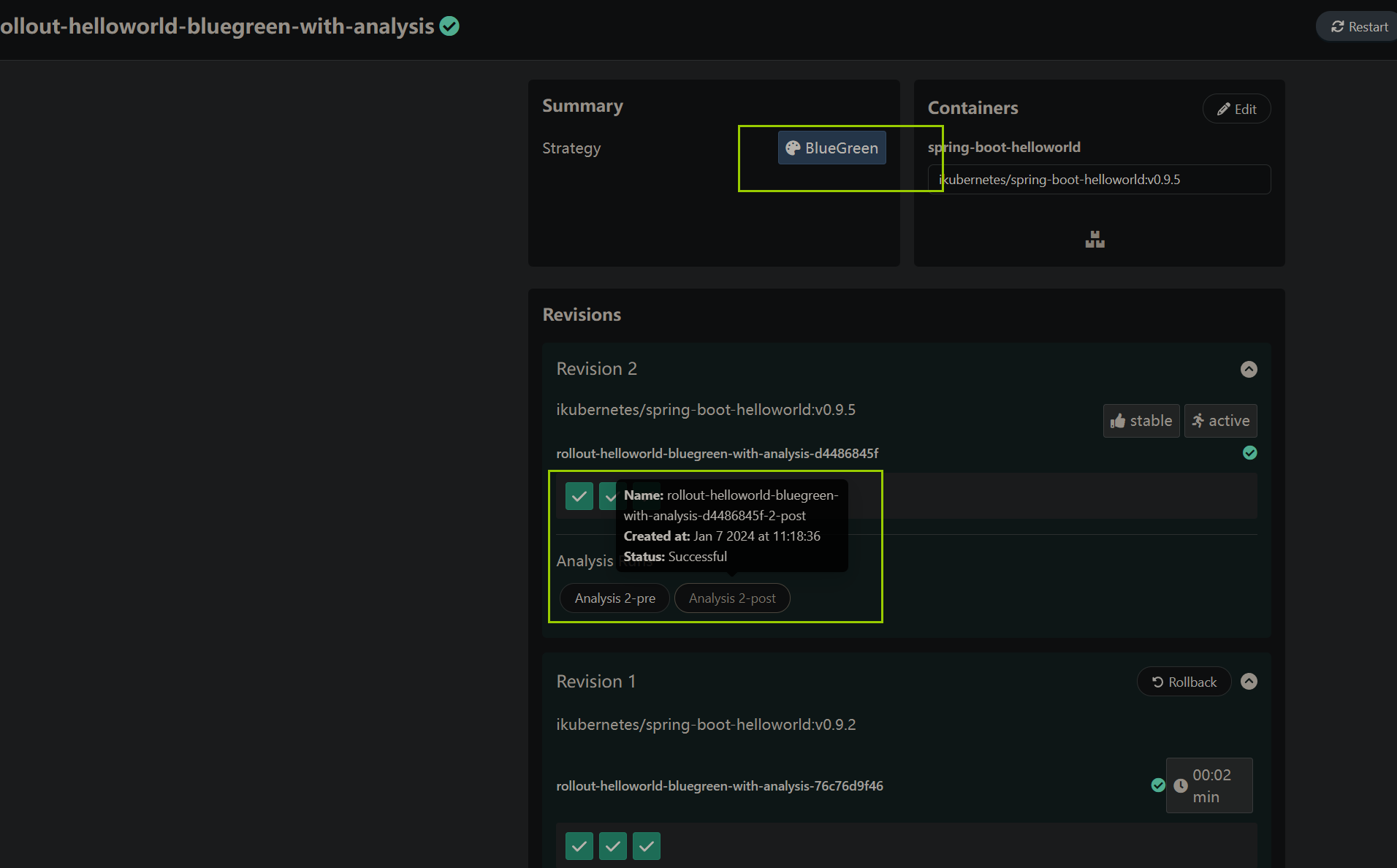

kubectl-argo-rollouts get rollout rollout-helloworld-bluegreen-with-analysis --watch

Both analyses succeed, and the traffic is then switched
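The example above promotes automatically. A variant that waits for a manual promote might look like the fragment below. It is a sketch: the 60-second scale-down delay is an illustrative assumption, and the Service names are the ones from this example.

```yaml
# Fragment of spec.strategy for the Rollout above.
blueGreen:
  activeService: spring-boot-helloworld
  previewService: spring-boot-helloworld-preview
  autoPromotionEnabled: false   # pause before the switch and wait for a manual promote
  scaleDownDelaySeconds: 60     # assumed value: keep the old pods for 60s after the switch
# Once the preview version looks healthy, promote it manually:
#   kubectl-argo-rollouts promote rollout-helloworld-bluegreen-with-analysis
```

Until the promote, the new pods are reachable only through the preview Service, which leaves room for manual checks.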

Example 5: GitOps with Argo CD and Argo Rollouts

1) Create the Application resource to watch the Git configuration repository

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: spring-boot-helloworld
  namespace: argocd
spec:
  project: default
  source:
    repoURL: http://2.2.2.15:80/root/spring-boot-helloworld-deployment.git
    targetRevision: HEAD
    path: rollouts/helloworld-canary-with-analysis
  destination:
    server: https://kubernetes.default.svc
    # This sample must run in demo namespace.
    namespace: demo
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
      allowEmpty: false
    syncOptions:
    - Validate=false
    - CreateNamespace=true
    - PrunePropagationPolicy=foreground
    - PruneLast=true
    - ApplyOutOfSyncOnly=true
    retry:
      limit: 5
      backoff:
        duration: 5s
        factor: 2
        maxDuration: 3m
  ignoreDifferences:
  - group: networking.istio.io
    kind: VirtualService
    jsonPointers:
    - /spec/http/0

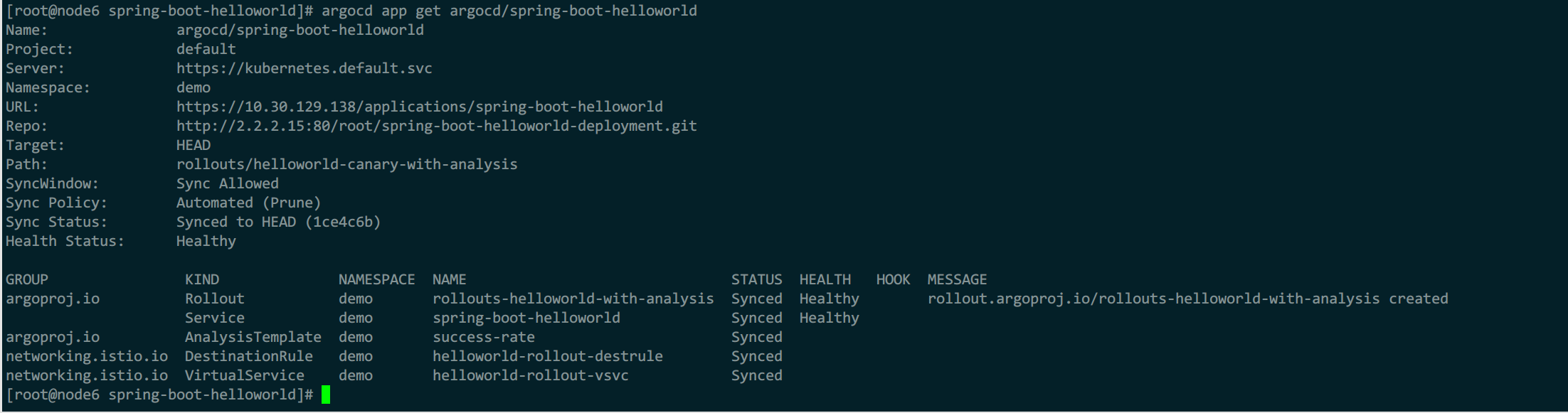

2) Check the result

# Check the Application status

argocd app get argocd/spring-boot-helloworld

# Check the Rollout's revision status

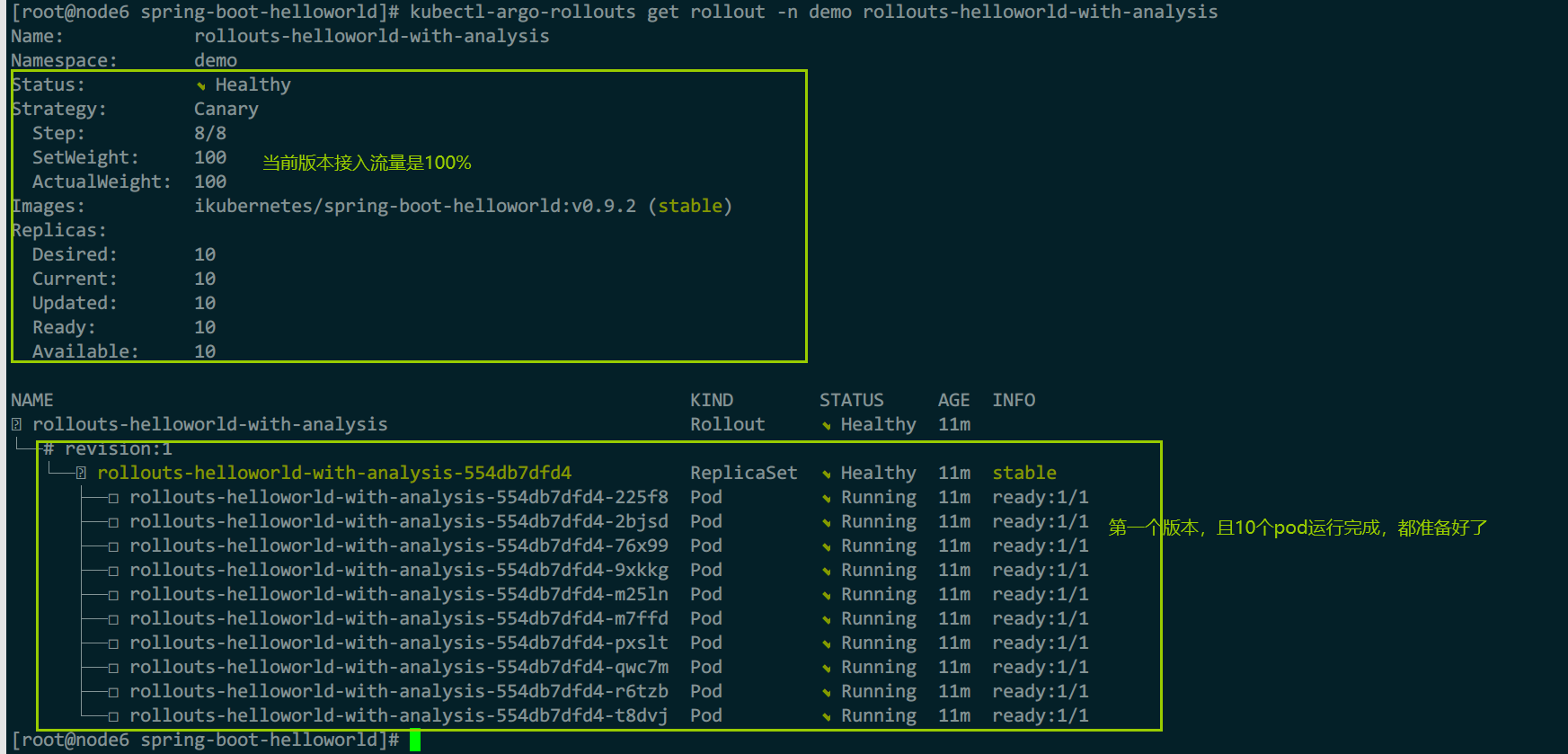

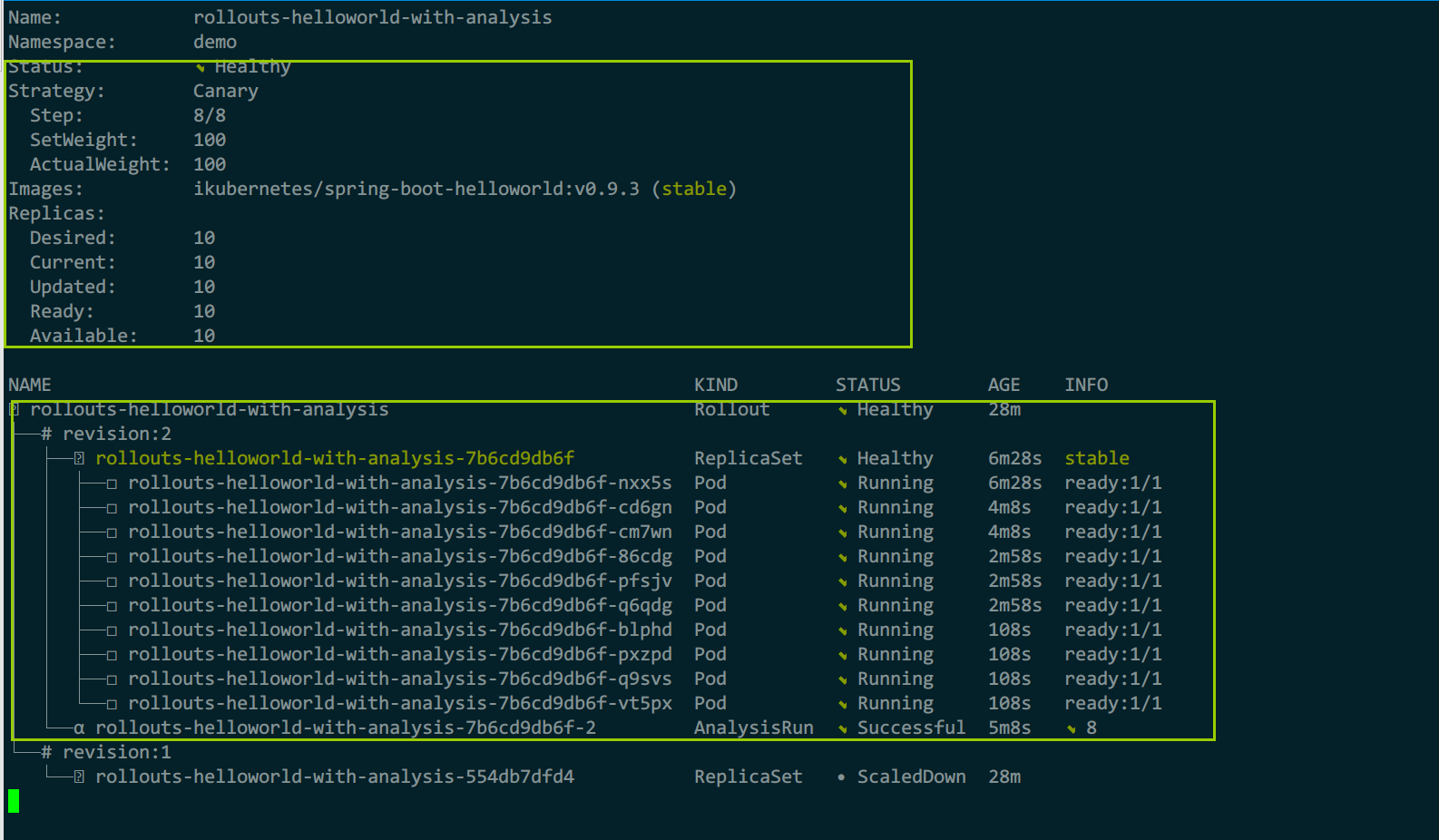

kubectl-argo-rollouts get rollout -n demo rollouts-helloworld-with-analysis

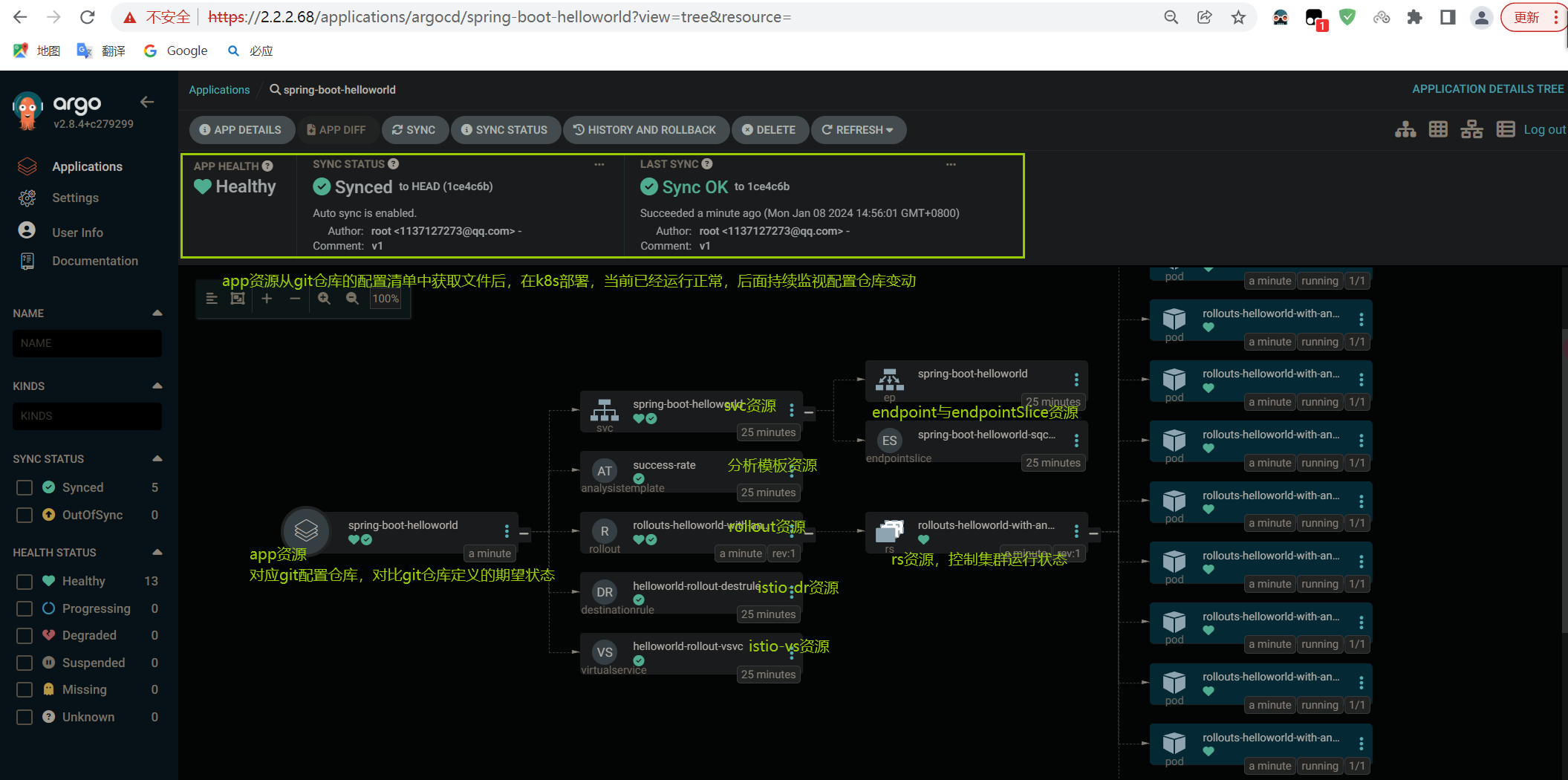

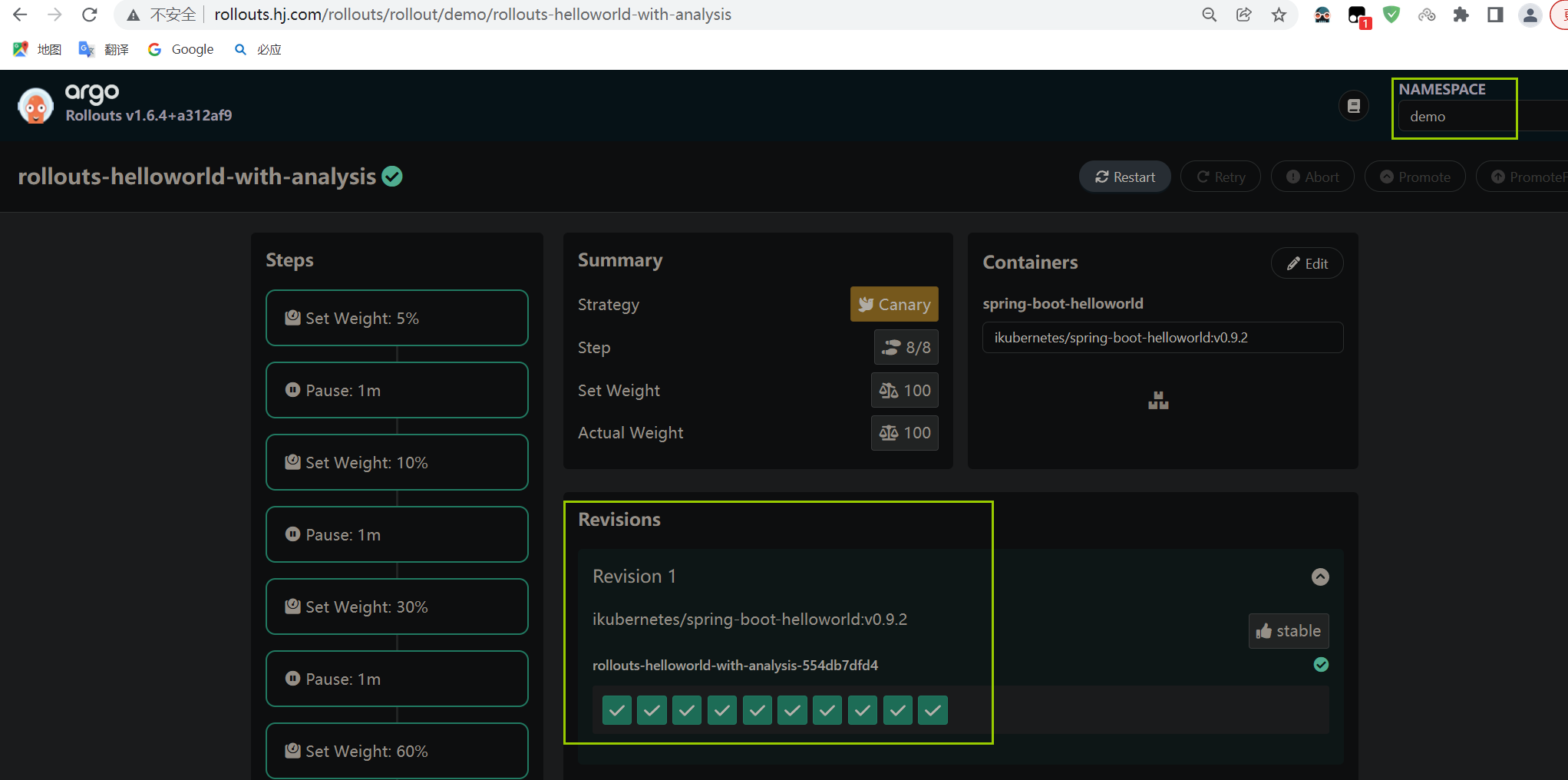

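Once the Application is created, check the Argo CD web UI for the result

On the command line, the resources defined in the configuration repository are also shown as running

The Argo Rollouts dashboard shows the result as well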

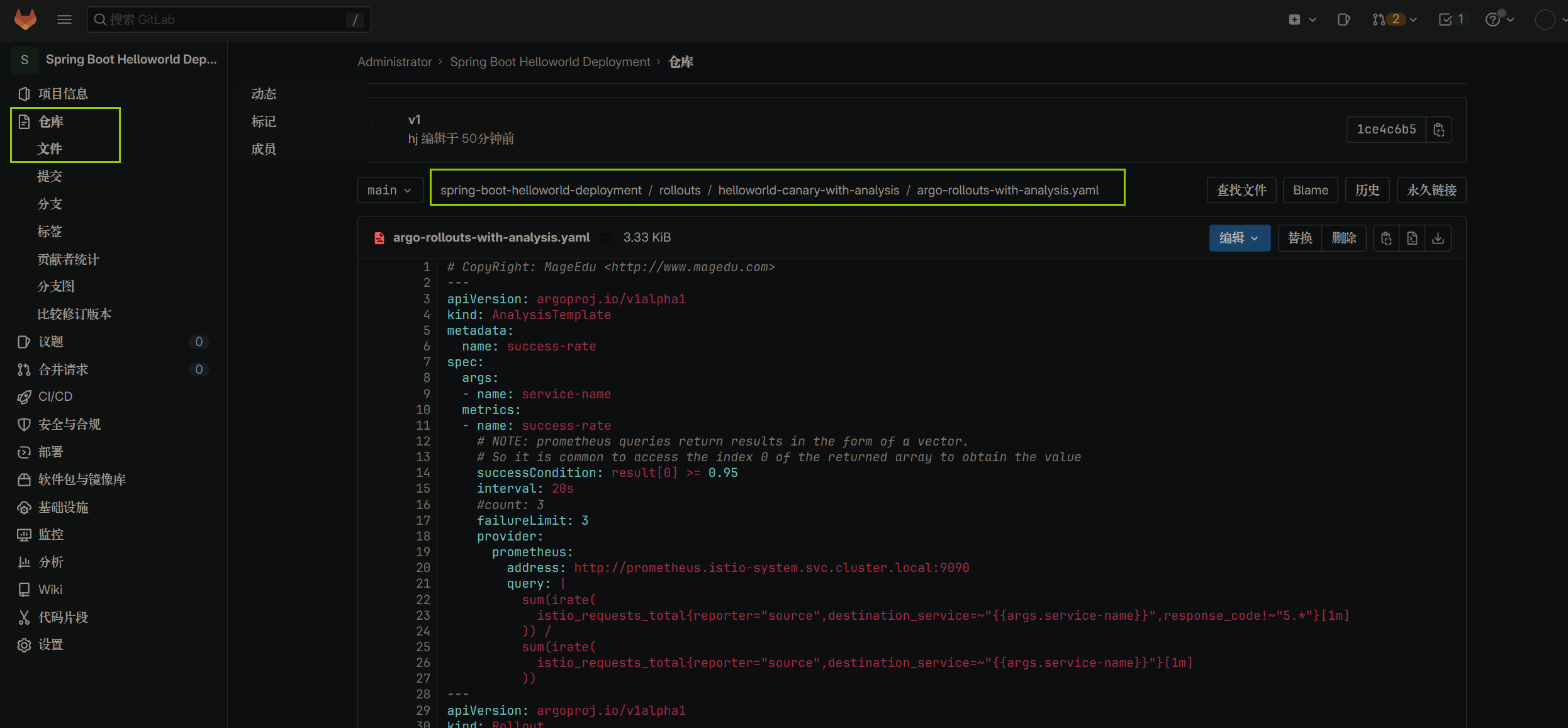

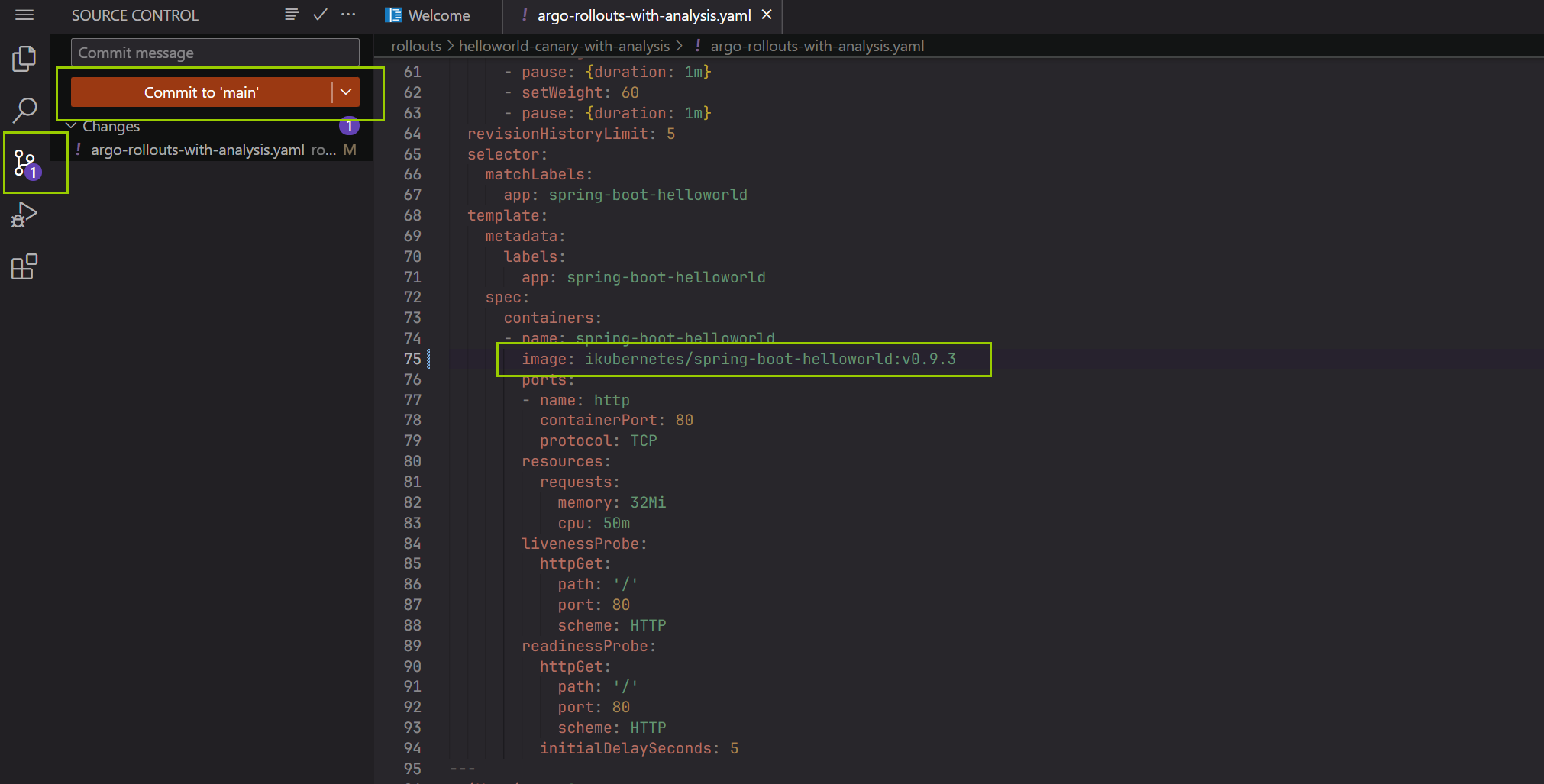

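3) Change the configuration repository to update the configuration

Open GitLab, go to the configuration repository, and change the image version in the manifest to simulate a version update

Change the image tag, then commit

4) Watch the release progress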

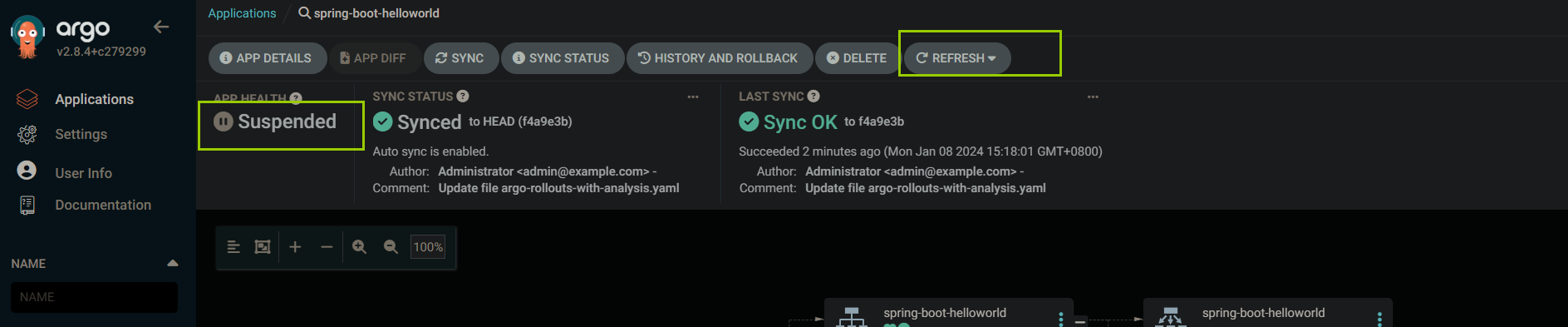

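Here a manual refresh against the configuration repository is triggered (without it, the state is also refreshed automatically after a while). Argo CD detects that the manifests in the repository have changed and now differ from what is running in the cluster, so it starts to sync by applying the new files from the repository.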

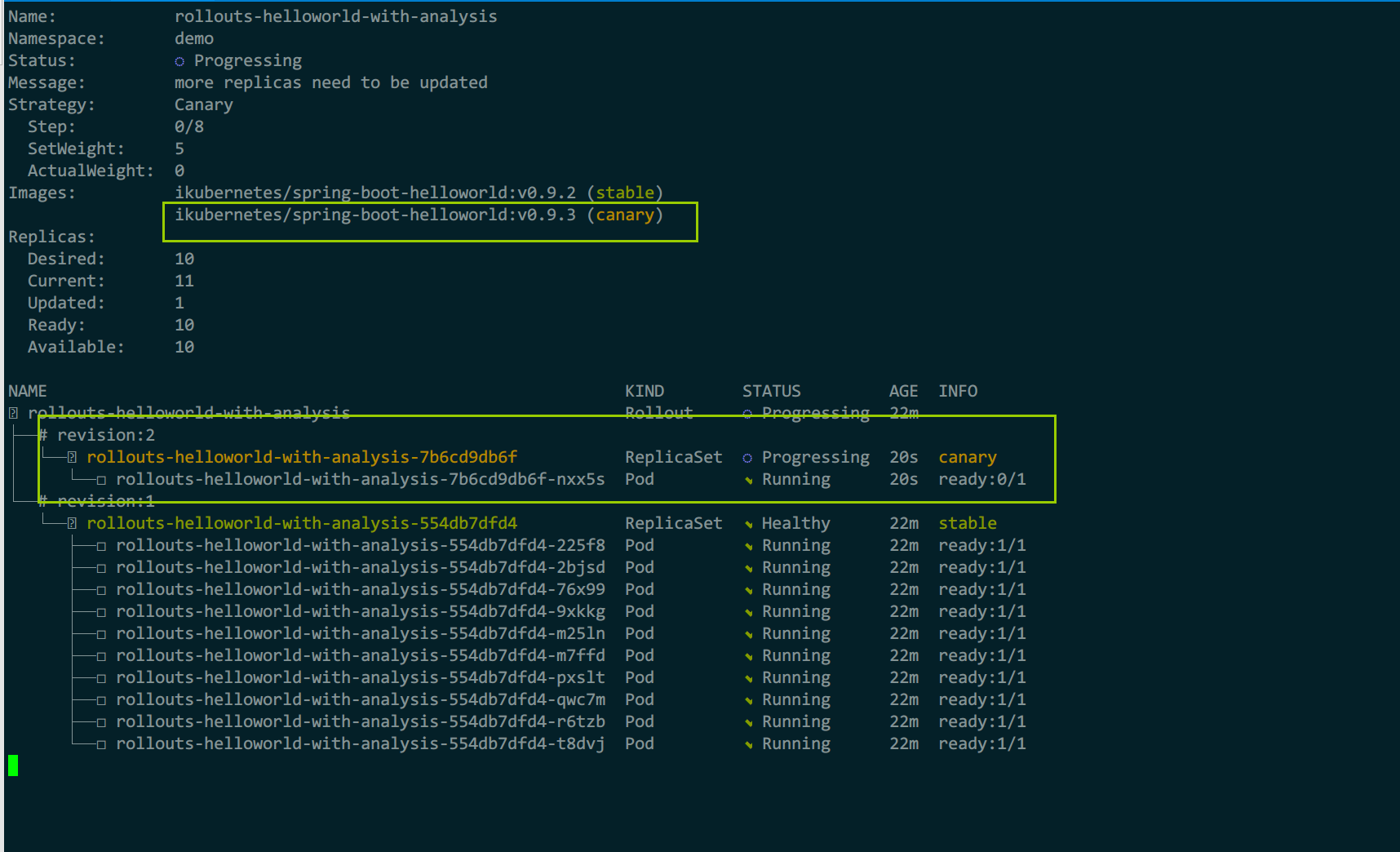

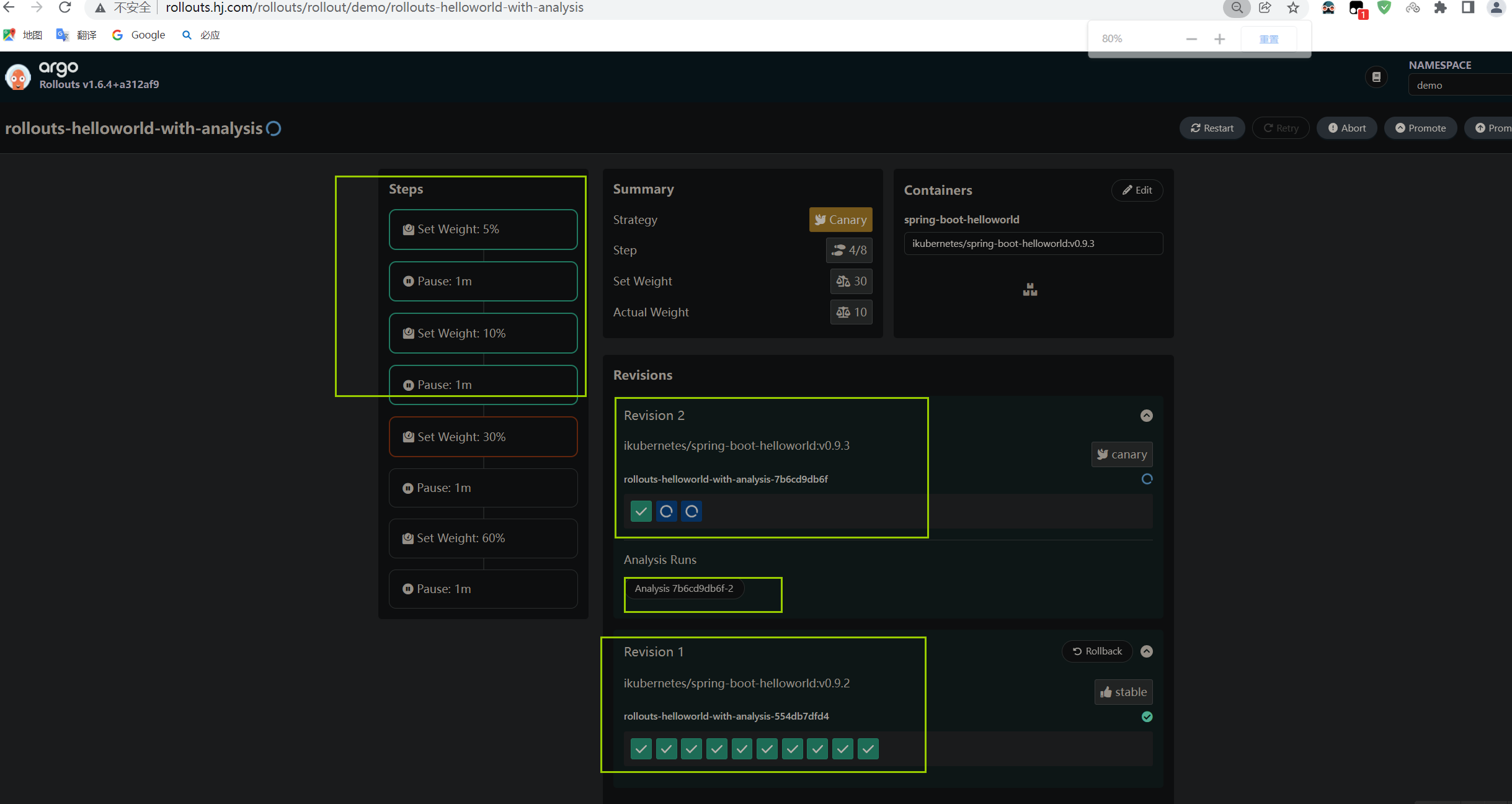

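Argo Rollouts shows the new version being deployed and part of the traffic being shifted; 5% of the traffic has been moved to the canary so far

At this point the canary traffic analysis has finished and succeeded, so the canary deployment continues and more traffic is shifted

In the end, all new pods are deployed with the latest image version, and the traffic shift is complete

Example 6: GitOps with Tekton and Argo CD

Tekton is used here as a replacement for Jenkins

Tekton and Argo CD handle CI/CD, while Argo Rollouts, combined with Istio and Prometheus, shifts traffic automatically and promotes or rolls back based on traffic health analysis

Releases can run fully unattended: promotion or rollback happens automatically depending on whether the request metrics are healthy

See the article published on Juejin (稀土掘金): tekton与argocd实现gitops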