Knative Eventing

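Eventing belongs to the FaaS layer: when a business application is a stateless service built on an event-driven architecture, it can be moved to run on Eventing.

Eventing itself only provides the messaging infrastructure.

For the basic concepts of messages and events, see the previous post: Knative Eventing: basic concepts of messages and events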

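Purpose

- Provides the infrastructure for producing and consuming events, routing events from producers to the target consumers so that developers can build event-driven architectures

- The resources are loosely coupled and can be developed and deployed independently

Components

- sources: event producers; events flow from a source to a sink or subscriber

- broker

- triggers

- sinks: addressable or callable resources that can receive incoming events. A ksvc/svc, a channel, a broker, or any receiving server can serve as a sink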

术语

- event source:

- 事件源,对应事件的生产者

- 事件将被发给sink或subscriber

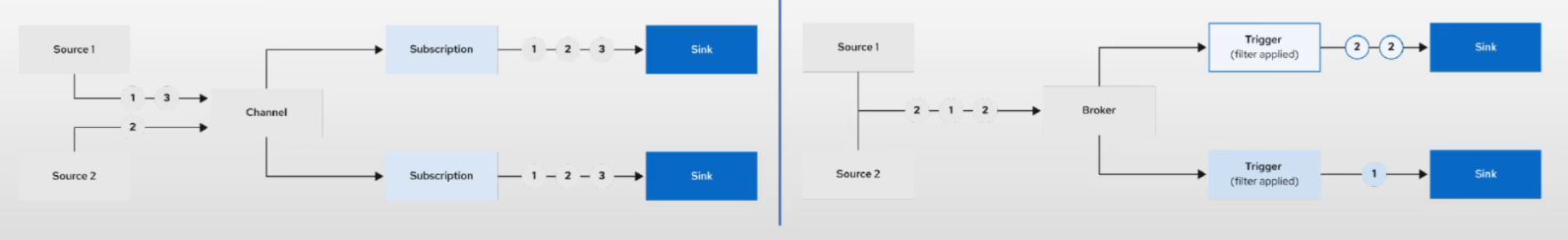

- channel和subscription:

- 事件管道模型,负责在channel及其个subscription之间格式化和路由事件

- 各consumer利用trigger订阅感兴趣的事件

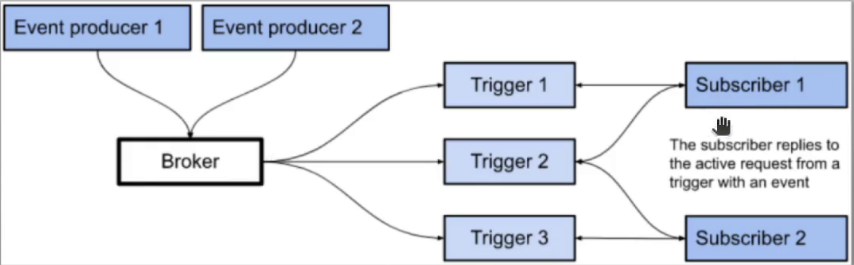

- broker和trigger:

- 事件网格模型,生产者把事件发往broker,再由broker统一经trigger发给各消费者

- 各consumer利用trigger订阅感兴趣的事件

- event registry:

- knative eventing使用eventtype帮助消费者从broker上发现其能够消费的事件类型

- event registry即为存储这eventtype的集合,这些eventtype含有消费者创建trigger的所有必要信息

- flow:

- 事件流处理,可手动定义工作流水线

- 流处理后继续发给sink

- sink:事件的最终接收者,也就是消费者,可以是broker、channel、flow、ksvc/svc等

事件传递模式

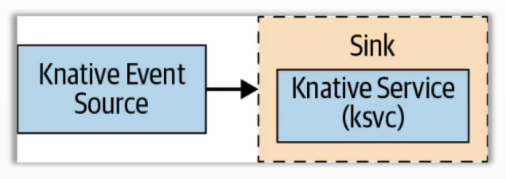

source到sink

- 单一模式,事件直接从生产者到消费者,不用排队、过滤等

- 消息传递就忘

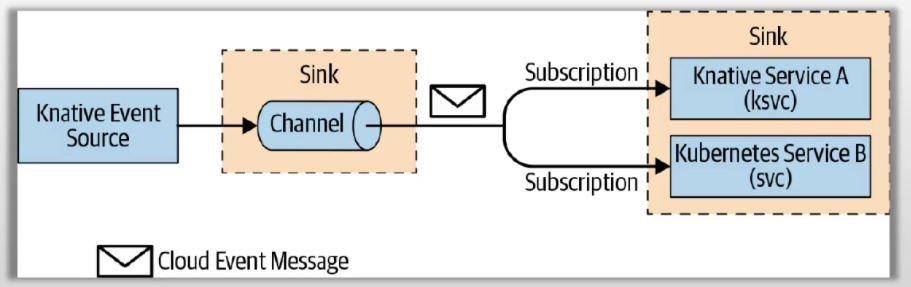

channel和订阅

- 生产者将消息发给channel,支持1到多个订阅者(sink),channel中的每个事件都被格式化cloudevent并发给各消费者

- 不支持消息过滤

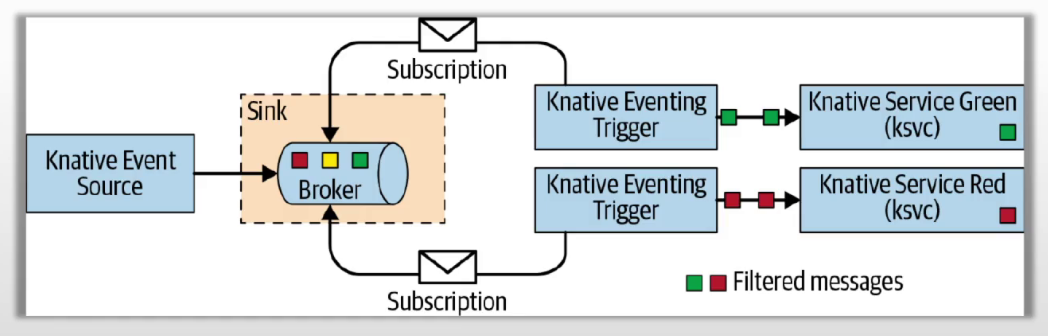

broker和trigger

- 比channel加订阅更高级,支持消息过滤

- 事件过滤允许订阅者使用基于事件属性的条件表达式(trigger)筛选事件

- trigger负责订阅broker,并对broker上的消息进行过滤,消息格式化

- 生产环境推荐使用
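A trigger's `filter.attributes` works by exact matching on CloudEvent attributes: an event passes only if every listed attribute is present with exactly the same value, and a trigger without a filter passes everything. The sketch below models that rule in plain Python to illustrate the semantics. It is not Knative's implementation, and the subscriber names and event dicts are hypothetical stand-ins modeled on tr1/tr2 used later in this post.

```python
from typing import Mapping


def trigger_matches(filter_attributes: Mapping[str, str], event: Mapping[str, str]) -> bool:
    """True if every filter attribute appears in the event with the same value.

    An empty filter matches every event, like a Trigger with no filter.
    """
    return all(event.get(key) == value for key, value in filter_attributes.items())


def route(triggers: Mapping[str, Mapping[str, str]], event: Mapping[str, str]) -> list:
    """Names of the subscribers whose trigger filter accepts the event."""
    return [name for name, attrs in triggers.items() if trigger_matches(attrs, event)]


# Hypothetical triggers: two typed filters plus one without a filter.
triggers = {
    "event": {"type": "com.hj.hi"},
    "event-2": {"type": "com.hj.bye"},
    "catch-all": {},
}
hi_event = {"id": "0", "specversion": "1.0", "type": "com.hj.hi", "source": "curl"}
print(route(triggers, hi_event))  # ['event', 'catch-all']
```

Matching is per attribute and all-or-nothing. To accept two event types you would create two triggers rather than put both values in one filter.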

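CRD resources

sources.knative.dev group: provides the built-in sources; other sources are installed separately as plugins

- ApiServerSource: watches Kubernetes API events and converts API server events into CloudEvents

- ContainerSource: runs a given container that emits events to a sink

- PingSource: generates events on a periodic (cron) schedule

- SinkBinding: links any addressable Kubernetes resource so that it receives events from any other Kubernetes resource that may produce them

eventing.knative.dev group: event mesh model API

- Broker

- EventType: defines a supported event type

- Trigger

messaging.knative.dev group: event pipeline model API

- Channel

- Subscription

flows.knative.dev group: event flow model (events are processed in parallel or in series)

- Parallel

- Sequence

Other extension resources: see the official documentation at https://knative.dev/docs/eventing/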

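channel and subscription

channel

Defines a namespace-scoped message bus. It depends on a specific implementation component, such as IMC (InMemoryChannel), NATS channel, or Kafka

Each channel corresponds to one specific topic

In the channel-and-subscription pattern you generally create the channel yourself; only two cases do not require it:

- source to sink: no channel is needed

- broker and trigger: channels are configured automatically

subscription

Connects a sink to a channel

Scenarios that require creating a subscription manually:

- channel-and-subscription pattern: create a subscription to the channel

- broker-and-trigger pattern: create a subscription to the trigger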

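broker and trigger

The broker hosts the message queue: it receives messages from producers and routes them to the appropriate queue or topic according to its exchange rules. Delivering events is the trigger's job: it filters events by attribute and sends the matching ones to subscribers. The concrete implementation depends on the broker class

A subscriber can produce a response event and send it back to the broker

Common middleware: Kafka, RabbitMQ, ActiveMQ, RocketMQ

Supported brokers

- MT channel-based broker: a multi-tenant broker that routes events through channels; at least one channel-layer component must be deployed (InMemoryChannel for development and testing, Kafka for production)

- Kafka broker

- RabbitMQ broker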

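Using the default broker

Knative Eventing provides a default broker named default at the namespace level, but it has to be created before use

How to create it

Command

kn broker create default -n <namespace>   # create

kn broker delete default -n <namespace>   # delete

Annotation

Add the following annotation to a trigger resource; when cleaning up, the annotation has to be removed manually

eventing.knative.dev/injection=enabled

Label

Add a label to the namespace:

kubectl label ns <namespace> eventing.knative.dev/injection=enabled   # enable

kubectl label ns <namespace> eventing.knative.dev/injection-   # remove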

flow工作流

以工作流方式,定义过滤器与通道

概念

- importer:连接三方消息系统。基于http协议到channel、broker、sequence(串行)/parallel(并行)或svc/ksvc

- channel:支持多路订阅,为订阅者持久化消息数据

- service:接收cloudEvents

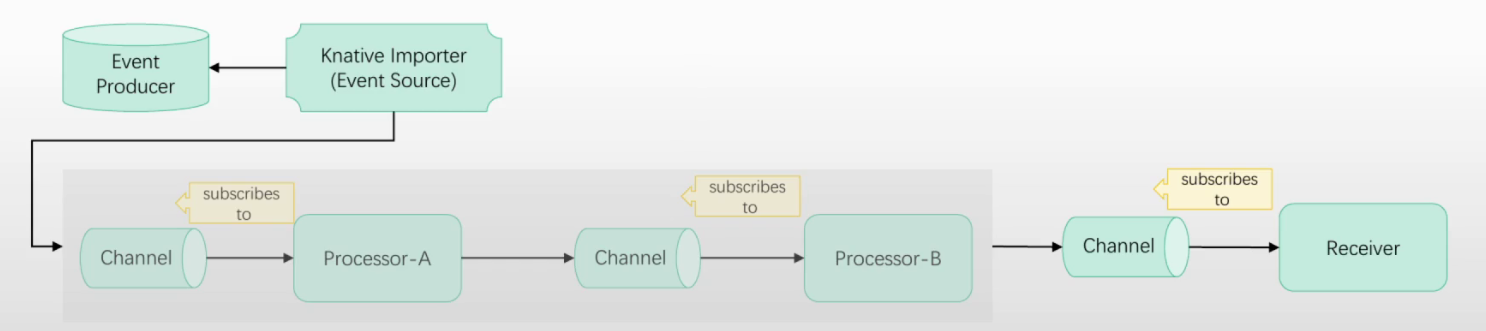

sequence

crd资源

串联1到多个processor(ksvc/svc),sequence由多个有序的step组成,每个step定义1个subscriber

step间的channel,由channelTemplate定义

事件源 --> sequence --> channel <-- subscription --> sink

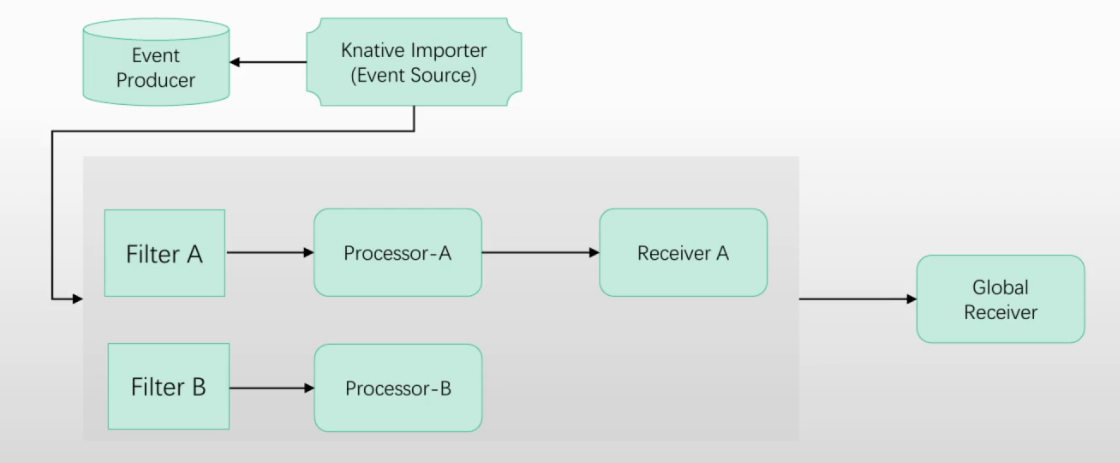

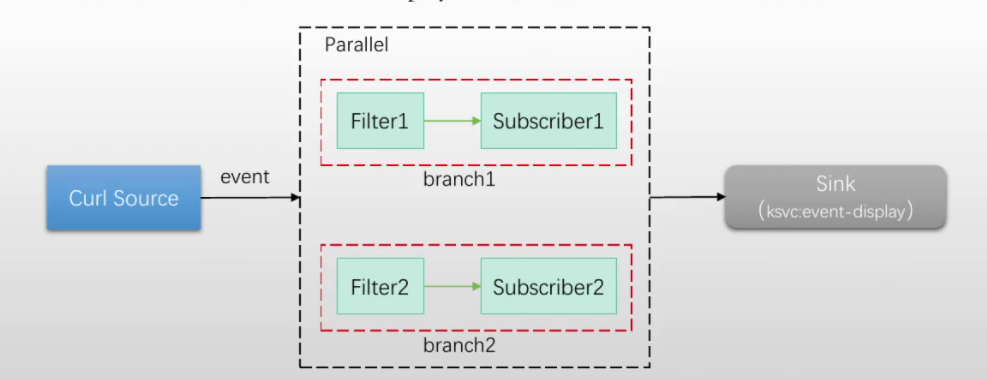

parallel

crd资源,可根据不同的过滤条件对事件进行选择处理

并联1到多个processor,由1到多个branch和一个channelTemplate组成

由多个条件并行branch组成,每个branch由1对filter及subscriber组成(每个filter即为1个processor,需自己开发)

channel由channelTemplate定义

事件源 --> parallel --> channel <-- subscription --> sink

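Kafka

For the Kafka concepts, see the earlier post: Kafka message queue

Knative Eventing can use Kafka as a channel and as a broker, and can also read events from it. There are four Kafka components, each of which can run independently:

- Kafka source: reads messages from a Kafka cluster, converts them into CloudEvents, and brings them into Eventing

- Kafka channel: a channel implementation that works like IMC but adds persistence; recommended for production. It creates a corresponding topic on the Kafka cluster

- Kafka broker: a broker implementation that works like the MT broker and relies on KafkaChannel-type channels

- Kafka sink: converts CloudEvents into Kafka records and sends the messages/events to the Kafka cluster

For deployment, see the Eventing part of the earlier post: Knative deployment

Setting Kafka as the default channel and broker

Change the default channel:

Official documentation: https://knative.dev/docs/eventing/configuration/channel-configuration/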

apiVersion: v1
kind: ConfigMap
metadata:
  name: default-ch-webhook
  namespace: knative-eventing
data:
  default-ch-config: |
    # clusterDefault applies cluster-wide; namespaceDefaults overrides it per namespace. The two levels can differ
    clusterDefault:
      apiVersion: messaging.knative.dev/v1
      kind: InMemoryChannel
    namespaceDefaults:
      default:  # used in the default namespace
        apiVersion: messaging.knative.dev/v1beta1
        kind: KafkaChannel
        spec:
          numPartitions: 3  # number of partitions
          replicationFactor: 1  # number of replicas; 1 is enough on a single node

Change the default broker

apiVersion: v1
kind: ConfigMap
metadata:
  name: config-br-defaults
  namespace: knative-eventing
data:
  default-br-config: |
    # cluster-level default; the cluster and namespace levels can differ
    clusterDefault:
      brokerClass: Kafka
      apiVersion: v1
      kind: ConfigMap
      name: kafka-broker-config
      namespace: knative-eventing
    # the namespace level must be set, otherwise creating a Kafka broker will fail; the entries below are a template and can be copied as-is
    namespaceDefaults:
      default:  # the namespace the setting applies to; must be specified
        brokerClass: Kafka
        apiVersion: v1
        kind: ConfigMap
        name: kafka-broker-config
        namespace: knative-eventing

Kafka ConfigMaps

Broker configuration: kafka-broker-config

apiVersion: v1
kind: ConfigMap
metadata:
  name: kafka-broker-config
  namespace: knative-eventing
data:
  bootstrap.servers: my-cluster-kafka-bootstrap.kafka:9092  # Service of the main Kafka cluster
  default.topic.partitions: "10"  # number of partitions
  default.topic.replication.factor: "1"  # number of replicas; must not exceed the number of Kafka brokers, otherwise an error occurs. Kafka runs on a single node here, so 1
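Kafka refuses to create a topic whose replication factor exceeds the number of available brokers, which is why the comment above ties `default.topic.replication.factor` to the broker count. Below is a minimal pre-flight check on the ConfigMap's `data` values. It assumes you already know how many Kafka brokers are running: the `broker_count` parameter is supplied by hand, not read from the cluster, and the function name is hypothetical.

```python
def check_kafka_broker_config(data: dict, broker_count: int) -> list:
    """Return the problems found in kafka-broker-config data (an empty list means it looks OK)."""
    problems = []
    if not data.get("bootstrap.servers"):
        problems.append("bootstrap.servers is missing")
    # ConfigMap values are strings, so the numeric settings must parse as integers.
    try:
        partitions = int(data.get("default.topic.partitions", ""))
        if partitions < 1:
            problems.append("default.topic.partitions must be >= 1")
    except ValueError:
        problems.append("default.topic.partitions is not an integer")
    try:
        replication = int(data.get("default.topic.replication.factor", ""))
        if not 1 <= replication <= broker_count:
            problems.append(
                f"default.topic.replication.factor={replication} must be between 1 "
                f"and the {broker_count} available broker(s)"
            )
    except ValueError:
        problems.append("default.topic.replication.factor is not an integer")
    return problems


config = {
    "bootstrap.servers": "my-cluster-kafka-bootstrap.kafka:9092",
    "default.topic.partitions": "10",
    "default.topic.replication.factor": "1",
}
print(check_kafka_broker_config(config, broker_count=1))  # []
```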

Channel configuration: kafka-channel-config

apiVersion: v1
kind: ConfigMap
metadata:
  name: kafka-channel-config
  namespace: knative-eventing
data:
  bootstrap.servers: my-cluster-kafka-bootstrap.kafka:9092

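CRD configuration

To view the generated events during testing:

The image below prints the events produced by event sources; all events in the following examples are sent to it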

kn service apply event --image ikubernetes/event_display --port 8080 --scale-min 1

kn service apply event-2 --image ikubernetes/event_display --port 8080 --scale-min 1

kn domain create event.hj.com --ref 'ksvc:event'

kubectl logs -f deployments/event-00001-deployment

PingSource

Sends events periodically

Command

kn source ping create cron-source --schedule '* * * * *' -d '{"message":"123"}' --sink=event

Syntax

kubectl explain pingsources

apiVersion: sources.knative.dev/v1
kind: PingSource
metadata:
spec:
  ceOverrides:
    extensions: {}
  contentType: str  # media type of the event data
  data: str  # event data
  dataBase64: str  # base64-encoded event data
  schedule: str  # cron schedule, in the form * * * * *
  sink:  # event receiver
    CACerts: str
    ref:
      apiVersion: str  # receive events with a svc (must be backed by pods) or a ksvc
      kind: str
      name: str
      namespace: str
    uri: str
  timezone: str
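`data` and `dataBase64` are two ways to supply the payload (to my understanding only one of them should be set). If you prefer to pass the payload encoded, the value for `dataBase64` is just the base64 encoding of the payload bytes. Here is a quick sketch with the standard library, using the `{"message":"123"}` payload from this post.

```python
import base64
import json

payload = {"message": "123"}
# Compact separators reproduce the exact string used in this post: {"message":"123"}
raw = json.dumps(payload, separators=(",", ":")).encode("utf-8")
encoded = base64.b64encode(raw).decode("ascii")
print(encoded)  # value to put in spec.dataBase64

# Decoding recovers the original payload.
assert json.loads(base64.b64decode(encoded)) == payload
```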

Example

1) Run

apiVersion: sources.knative.dev/v1
kind: PingSource
metadata:
  name: cron-source
  namespace: default
spec:
  data: '{"message":"123"}'
  schedule: '* * * * *'
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event
      namespace: default

2) Test

kubectl logs -f deployments/event-00001-deployment

ContainerSource

Command

kn source container create cs --image=ikubernetes/containersource-heartbeats -s ksvc:event

Syntax

kubectl explain containersources

apiVersion: sources.knative.dev/v1
kind: ContainerSource
metadata:
  name: cs
  namespace: default
spec:
  sink:  # event receiver
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event
      namespace: default
  template:  # container template
    metadata: {}
    spec:
      containers:
        - image: ikubernetes/containersource-heartbeats
          name: cs-0
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name  # the pod's own name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace  # the pod's own namespace

Example

apiVersion: sources.knative.dev/v1
kind: ContainerSource
metadata:
  name: cs
  namespace: default
spec:
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event
      namespace: default
  template:
    spec:
      containers:
        - image: ikubernetes/containersource-heartbeats
          name: cs-0
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name  # the pod's own name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace  # the pod's own namespace
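ContainerSource works by injecting the resolved sink address into the container as the `K_SINK` environment variable. The container (here `ikubernetes/containersource-heartbeats`) is then expected to POST CloudEvents to that URL. Below is a minimal sketch of such a heartbeat container in Python, assuming binary-mode CloudEvents over HTTP. The event type `com.example.heartbeat`, the source path, and the payload fields are hypothetical and not what the heartbeats image actually sends. `POD_NAME`/`POD_NAMESPACE` come from the env entries above.

```python
import json
import os
import time
import urllib.request
import uuid


def build_heartbeat_request(sink: str, count: int) -> urllib.request.Request:
    """Build one binary-mode CloudEvent POST (Ce-* headers plus a JSON body)."""
    pod = os.environ.get("POD_NAME", "unknown")
    namespace = os.environ.get("POD_NAMESPACE", "unknown")
    body = json.dumps({"id": count, "label": "heartbeat"}).encode("utf-8")
    headers = {
        "Ce-Id": str(uuid.uuid4()),
        "Ce-Specversion": "1.0",
        "Ce-Type": "com.example.heartbeat",  # hypothetical event type
        "Ce-Source": f"/namespaces/{namespace}/pods/{pod}",  # hypothetical source
        "Content-Type": "application/json",
    }
    return urllib.request.Request(sink, data=body, headers=headers, method="POST")


def main(interval_seconds: float = 10.0) -> None:
    sink = os.environ["K_SINK"]  # injected by the ContainerSource controller
    count = 0
    while True:
        count += 1
        with urllib.request.urlopen(build_heartbeat_request(sink, count), timeout=10) as resp:
            print("sent heartbeat", count, "status", resp.status)
        time.sleep(interval_seconds)


# Only start the loop when actually running under a ContainerSource.
if __name__ == "__main__" and "K_SINK" in os.environ:
    main()
```

Because the sink address arrives through the environment, the same image can be pointed at a ksvc, a channel, or a broker just by changing `spec.sink`.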

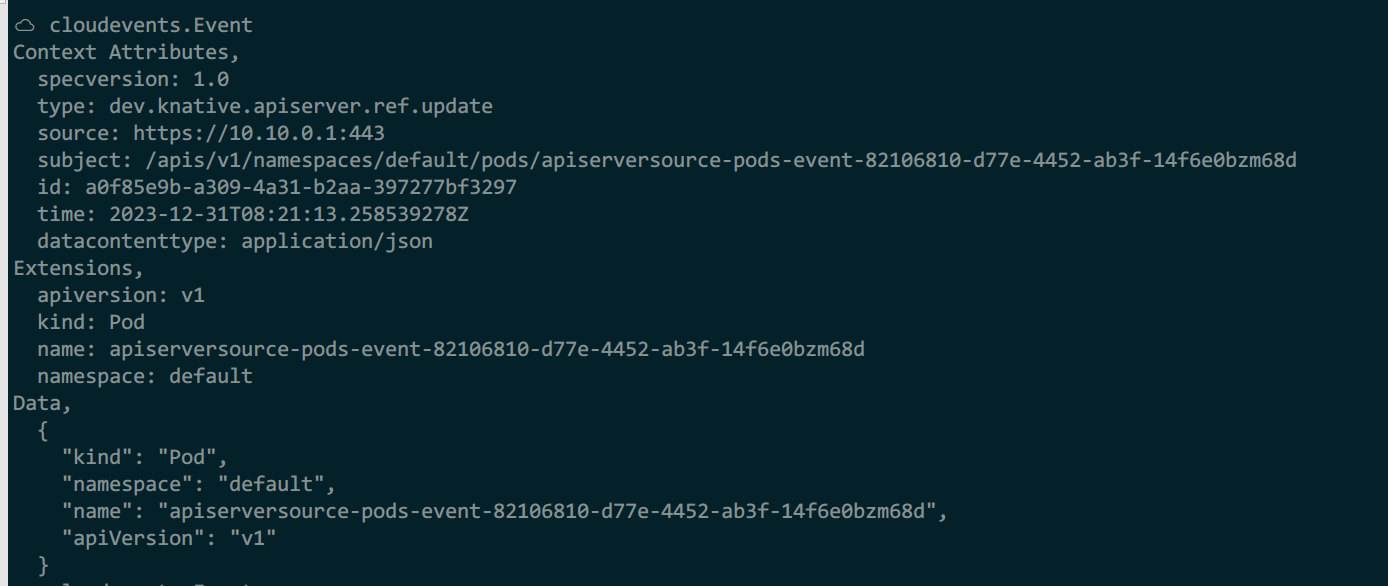

ApiServerSource

Converts events from the Kubernetes API server into events Eventing can process; for example, when a pod is updated, the resulting event can be handled downstream

Command

kn source apiserver create k8sevents --resource Event:v1 --service-account myaccountname --sink ksvc:mysvc

Syntax

kubectl explain apiserversource

apiVersion: sources.knative.dev/v1
kind: ApiServerSource
metadata:
  name: pod-event
  namespace: default
spec:
  serviceAccountName: pod-watcher  # reading API server events requires authorization; reference an already-authorized ServiceAccount
  mode: Reference
  resources:  # resources to watch; all events of these resources are watched
    - apiVersion: v1
      kind: Pod
      selector:  # selects the pods it applies to; if omitted, events of all pods in the namespace are watched
        matchLabels:
          app: demoapp
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event
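Downstream triggers usually filter ApiServerSource events by `type`. As far as I know, the type depends on `mode` and on the operation. `Reference` sends only a reference to the object, while `Resource` sends the whole object. The pattern is `dev.knative.apiserver.<ref|resource>.<add|update|delete>`. The small helper below builds these strings for a trigger filter. Treat the naming as an assumption, and check it against the EventTypes registered in your cluster (`kubectl get eventtypes`) or the `type` shown in the event pod logs.

```python
MODE_PREFIX = {"Reference": "ref", "Resource": "resource"}
OPERATIONS = ("add", "update", "delete")


def apiserver_event_type(mode: str, operation: str) -> str:
    """CloudEvent type an ApiServerSource is expected to emit (assumed naming scheme)."""
    if mode not in MODE_PREFIX:
        raise ValueError(f"unknown mode {mode!r}; expected one of {sorted(MODE_PREFIX)}")
    if operation not in OPERATIONS:
        raise ValueError(f"unknown operation {operation!r}; expected one of {OPERATIONS}")
    return f"dev.knative.apiserver.{MODE_PREFIX[mode]}.{operation}"


# The type a trigger would filter on to catch pod updates in Reference mode.
print(apiserver_event_type("Reference", "update"))  # dev.knative.apiserver.ref.update
```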

Example

kubectl apply -f - <<eof
apiVersion: v1
kind: ServiceAccount
metadata:
  name: pod-watcher
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: pod-reader
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: pod-reader
subjects:
  - kind: ServiceAccount
    name: pod-watcher
    namespace: default
---
apiVersion: sources.knative.dev/v1
kind: ApiServerSource
metadata:
  name: pods-event
spec:
  serviceAccountName: pod-watcher
  mode: Reference
  resources:
    - apiVersion: v1
      kind: Pod
      #selector:
      #  matchLabels:
      #    app: demoapp
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event
eof

You can see that update events are sent when the pod is created

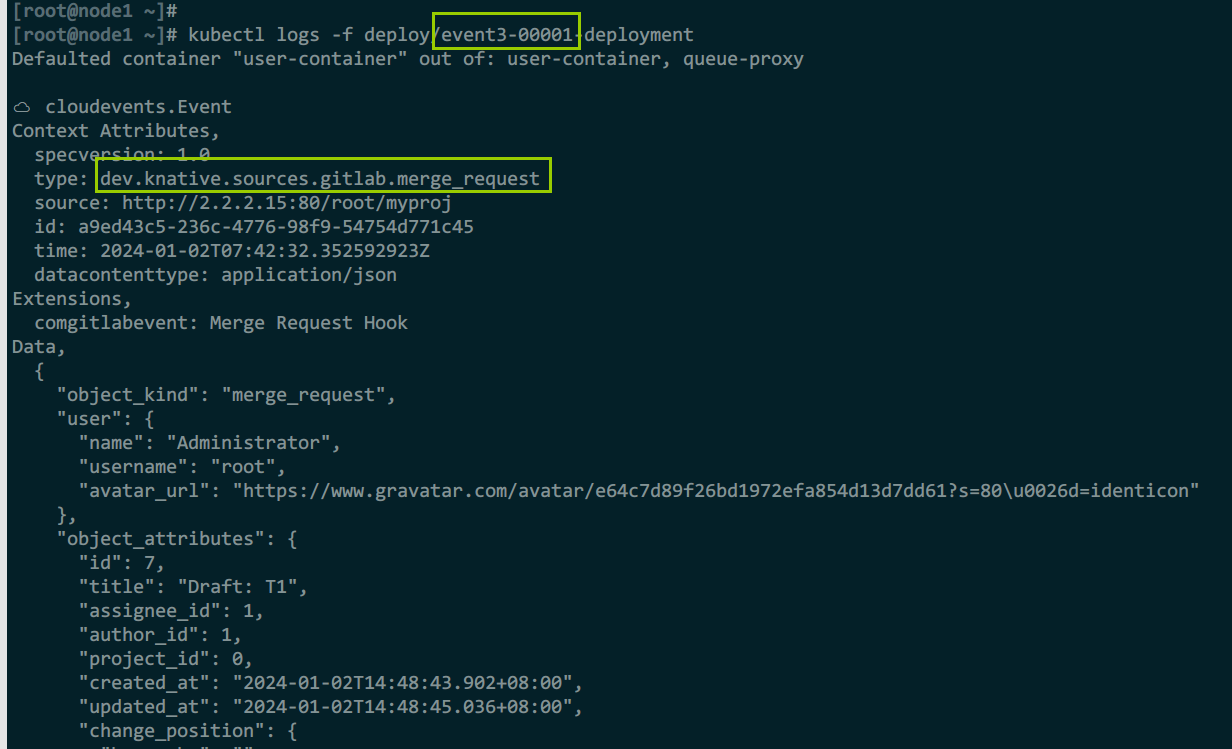

gitlabsource资源

属于扩展事件源,需要部署gitlab扩展,也必须提前运行好gitlab服务(不然产生不了数据嘛),这里就不介绍gitlab部署了,只介绍扩展部署以及配置语法

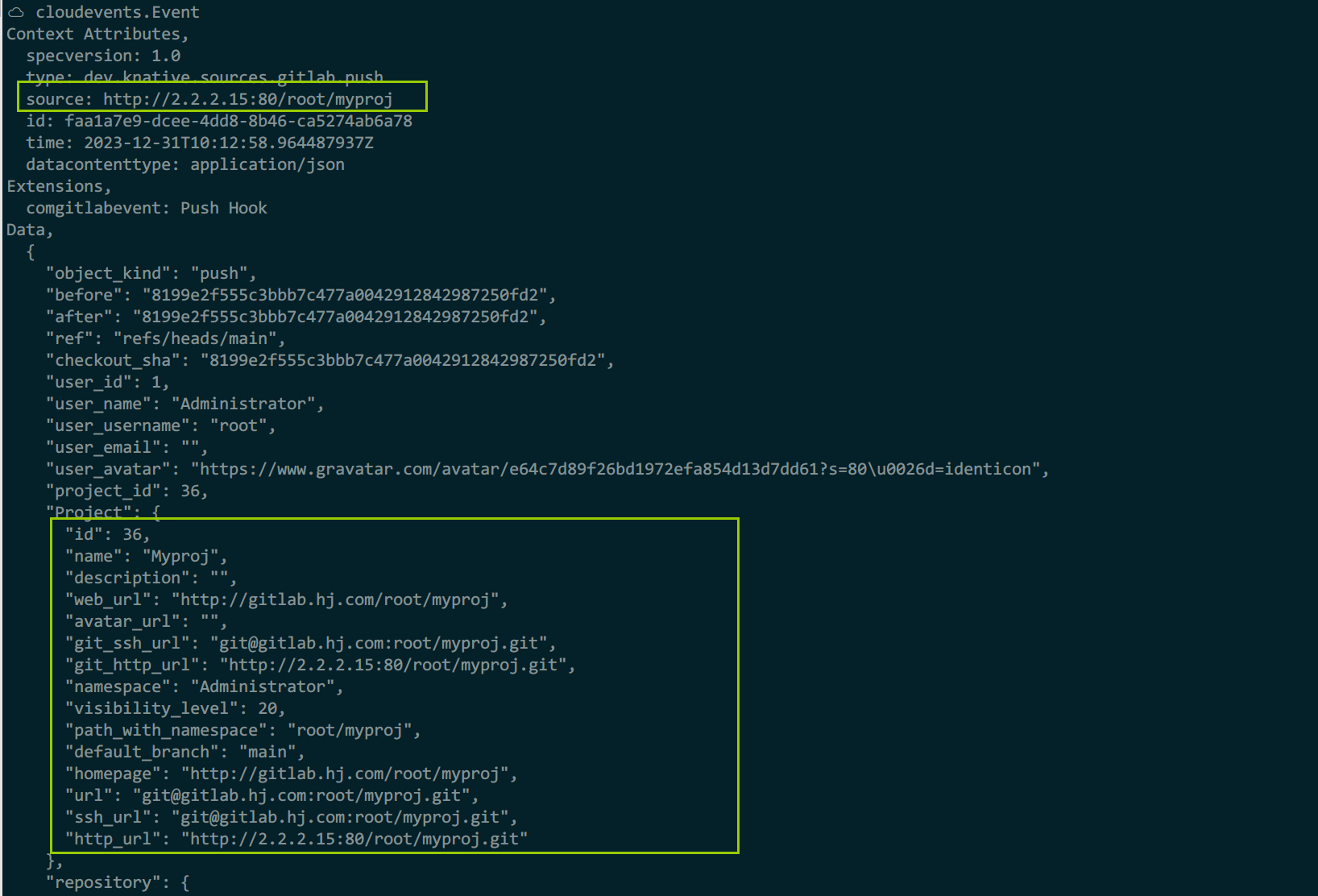

将gitlab仓库中的事件转为cloudevents,当创建gitlabsource时,控制器会启动一个pod,从gitlab中读取事件,然后通过这个pod转换格式后(转换后为cloudevent格式),发给kantive-eventing

为指定的事件类型创建1个webhook,监听传入的事件并传给消费者

官方文档:https://knative.dev/docs/eventing/sources/#knative-sources

GitLab event types:

Used in manifests (GitLabSource.spec.eventTypes):

- push events: push_events

- tag push events: tag_push_events

- issue events: issues_events

- merge request events: merge_requests_events

- confidential issue events: confidential_issue_events

- confidential comment events: confidential_note_events

- deployment events: deployment_events

- job events: job_events

- comment events: note_events

- pipeline events: pipeline_events

- wiki page events: wiki_page_events

Used when filtering (trigger.spec.filter, flows):

dev.knative.sources.gitlab.<event type>

for example, dev.knative.sources.gitlab.push, where the event type is one of:

- push

- tag_push

- merge_request
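The two naming schemes above are linked. The manifest uses GitLab's webhook setting names (`push_events`), while filters use the CloudEvent type. The sketch maps only the three pairs this post actually uses. The mapping for the other event types is not covered here, so the helper raises instead of guessing. `GITLAB_CE_TYPES` and `trigger_filter_for` are hypothetical names.

```python
# Only the pairs used in this post's examples; other event types are deliberately left out.
GITLAB_CE_TYPES = {
    "push_events": "dev.knative.sources.gitlab.push",
    "tag_push_events": "dev.knative.sources.gitlab.tag_push",
    "merge_requests_events": "dev.knative.sources.gitlab.merge_request",
}


def trigger_filter_for(event_type_setting: str) -> dict:
    """Trigger filter.attributes for one GitLabSource eventTypes entry."""
    try:
        return {"type": GITLAB_CE_TYPES[event_type_setting]}
    except KeyError:
        raise KeyError(f"no known CloudEvent type for {event_type_setting!r}") from None


print(trigger_filter_for("tag_push_events"))  # {'type': 'dev.knative.sources.gitlab.tag_push'}
```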

Deployment

export https_proxy=http://frp1.freefrp.net:16324

wget -q --show-progress https://github.com/knative-extensions/eventing-gitlab/releases/download/knative-v1.11.0/gitlab.yaml

unset https_proxy

wget -O rep-docker-img.sh 'https://files.cnblogs.com/files/blogs/731344/rep-docker-img.sh?t=1704012892&download=true'

sh rep-docker-img.sh

kubectl apply -f gitlab.yaml

Syntax

kubectl explain gitlabsources

apiVersion: sources.knative.dev/v1alpha1
kind: GitLabSource
metadata:
  name:
spec:
  accessToken:  # token used to access GitLab
    secretKeyRef:
      name: str
      key: str
  eventTypes: [str]  # event types to watch
  projectUrl: str  # project to watch
  secretToken:  # Secret holding the webhook secret token
    secretKeyRef:
      name: str
      key: str
  serviceAccountName: str  # ServiceAccount name
  sink:
    ref:
      apiVersion: str
      kind: str
      name: str
      namespace: str
    uri: str
  sslverify: boolean  # TLS certificate verification

示例:

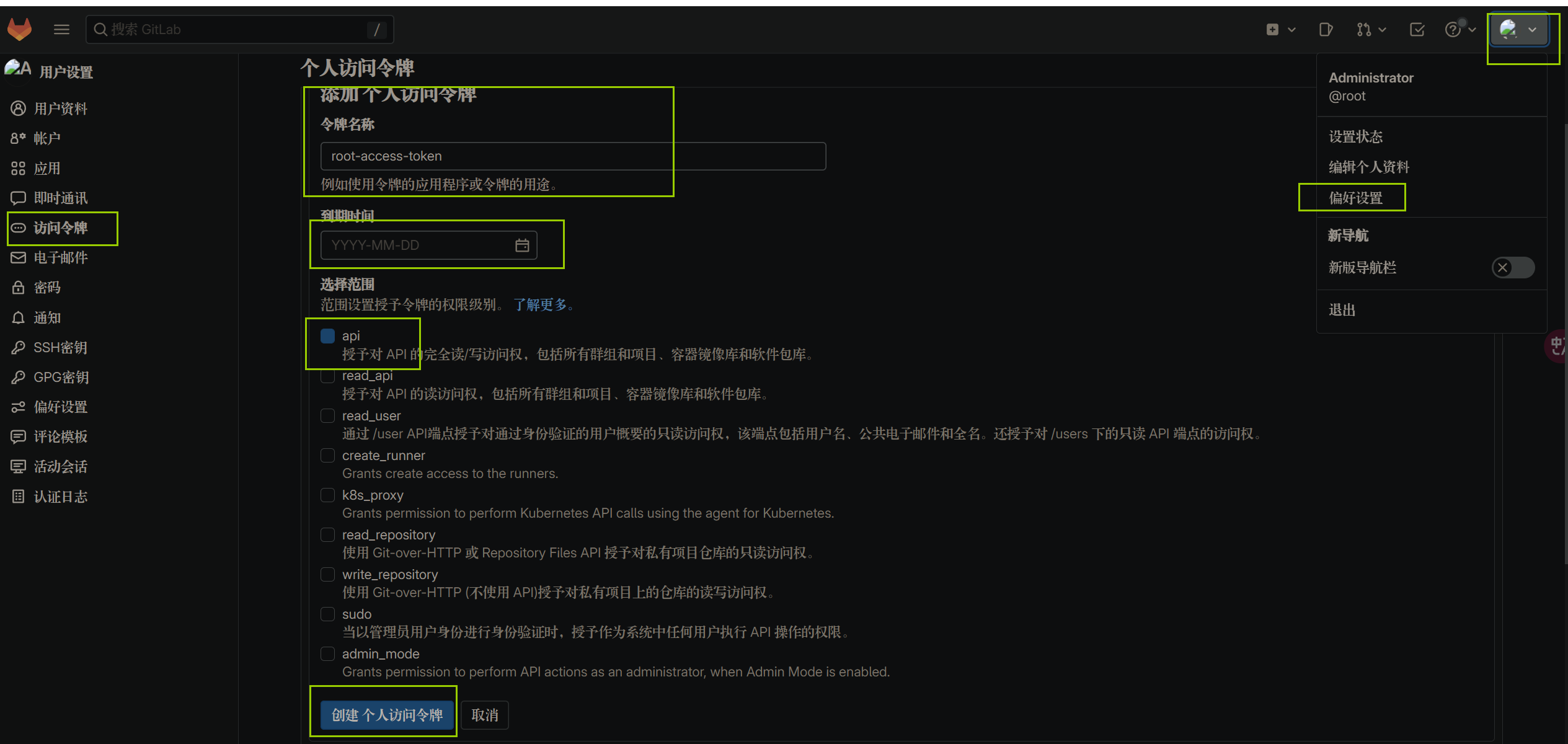

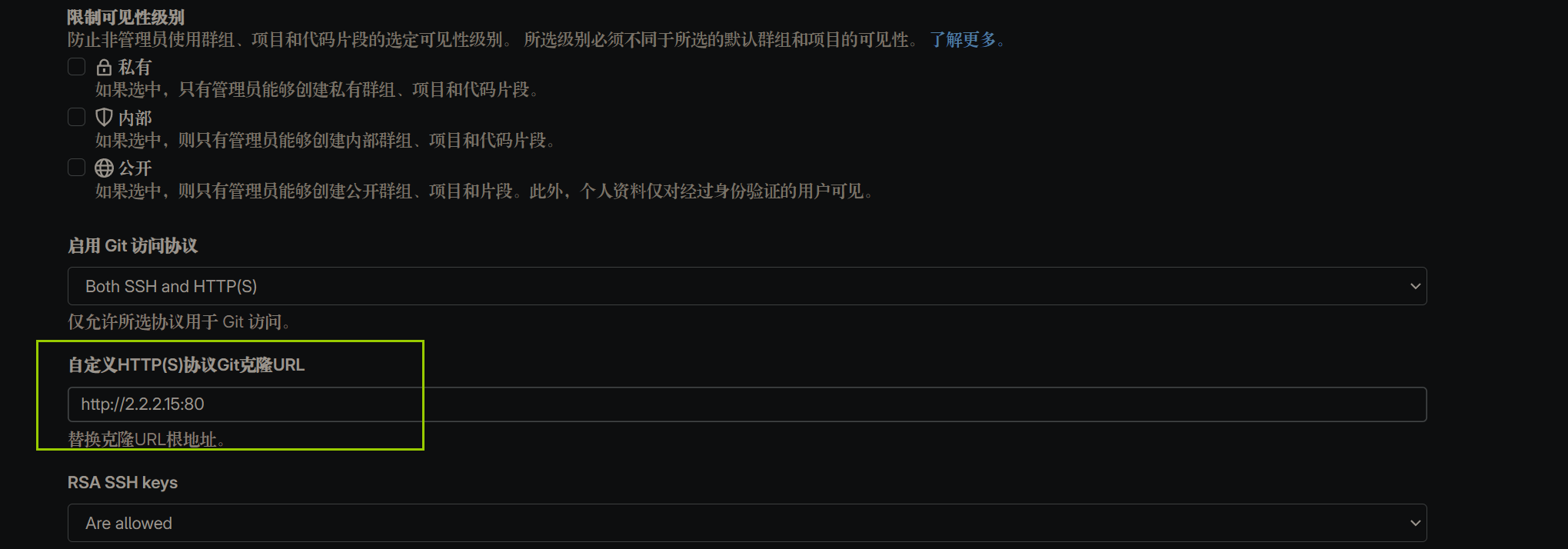

例1:gitlab界面配置,允许扩展通过webhook调用api

1)gitlab web界面配置

创建测试项目

创建用户访问令牌,webhook api调用需要用token才行

令牌创建后,需要复制保存(创建后只能看到一次,之后再也看不到了),后续要用到

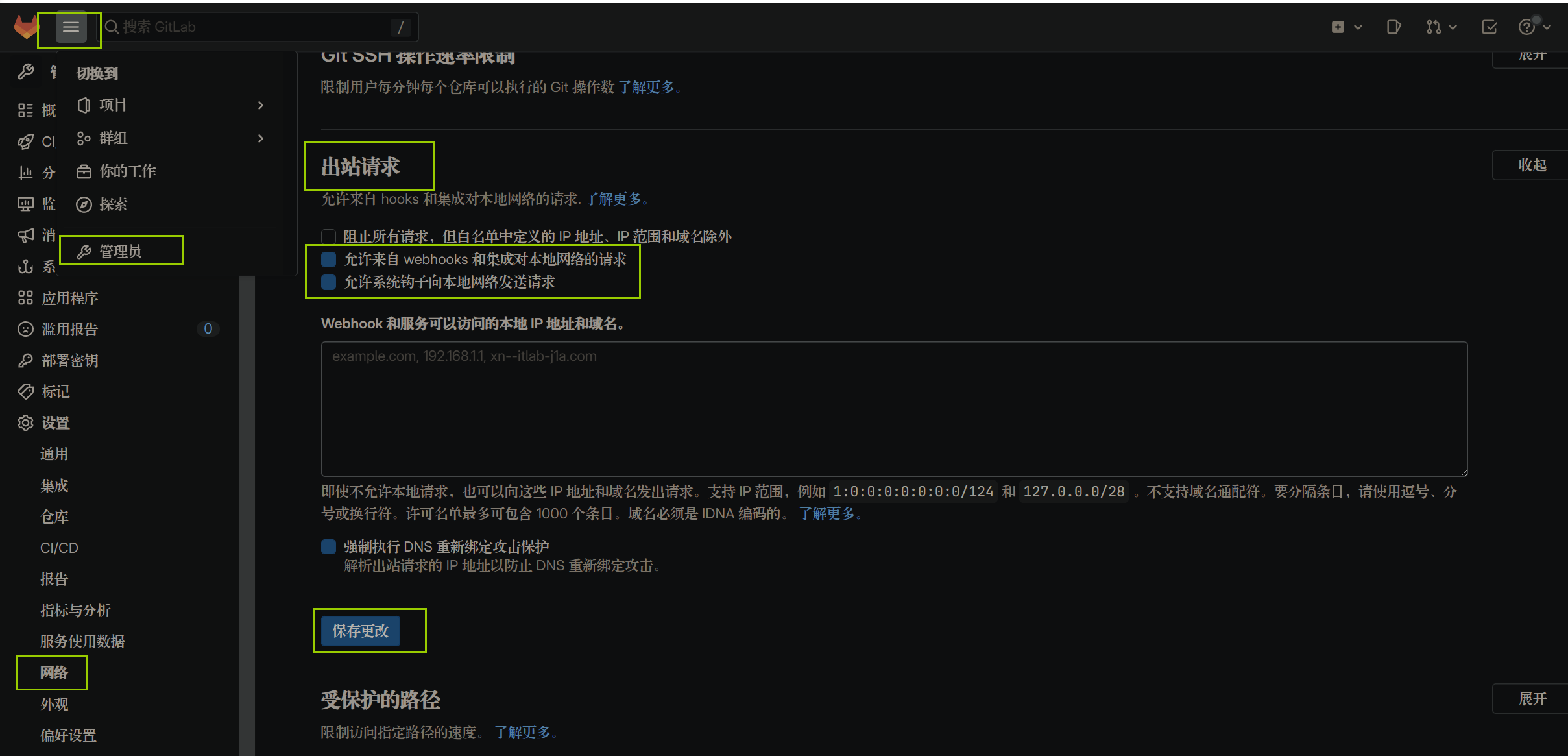

开启gitlab的webhook调用

配置克隆代码时的url,由于我这里直接rpm安装的,也没有外部域名,所以直接指定ip了,不然pod中访问主机名还要加hosts文件,比较麻烦

2) Save the GitLab token in a Kubernetes Secret

kubectl apply -f - <<eof
apiVersion: v1
kind: Secret
metadata:
  name: gitlab-root-secret
type: Opaque
stringData:
  # the token created in the web UI above
  accessToken: glpat-UuGGekr4LE47h3-1gpFz
  # secretToken is user-defined; generate it with: openssl rand -base64 16
  secretToken: mrwuxlyw0+hZTLTmpEWIpA
eof
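`secretToken` is a shared secret. GitLab sends it with every webhook call in the `X-Gitlab-Token` header, and the receiving side should reject calls whose header does not match. The sketch below is a Python equivalent of `openssl rand -base64 16`, plus the kind of constant-time comparison a receiver would use. It illustrates the idea only; it is not the GitLabSource adapter's code, and both function names are hypothetical.

```python
import base64
import hmac
import secrets
from typing import Optional


def generate_secret_token(num_bytes: int = 16) -> str:
    """Same shape as `openssl rand -base64 16`: random bytes, base64-encoded."""
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


def webhook_token_valid(received: Optional[str], expected: str) -> bool:
    """Compare the X-Gitlab-Token header value with the configured secret in constant time."""
    if received is None:
        return False
    return hmac.compare_digest(received.encode("utf-8"), expected.encode("utf-8"))


token = generate_secret_token()
print(token)  # 24 characters, usable as stringData.secretToken
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not reveal how many leading characters matched.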

Example 2: receive GitLab events

1) Create the GitLabSource

kubectl apply -f - <<eof
apiVersion: sources.knative.dev/v1alpha1
kind: GitLabSource
metadata:
  name: gitlabsource
spec:
  eventTypes:  # read push, issue, merge request, and tag events from GitLab; these are the most commonly used
    - push_events
    - issues_events
    - merge_requests_events
    - tag_push_events
  projectUrl: http://2.2.2.15:80/root/myproj  # Git project repository to watch
  sslverify: false  # skip TLS verification
  accessToken:  # token
    secretKeyRef:
      name: gitlab-root-secret
      key: accessToken
  secretToken:
    secretKeyRef:
      name: gitlab-root-secret
      key: secretToken
  sink:  # send the converted events to the event ksvc
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event
eof

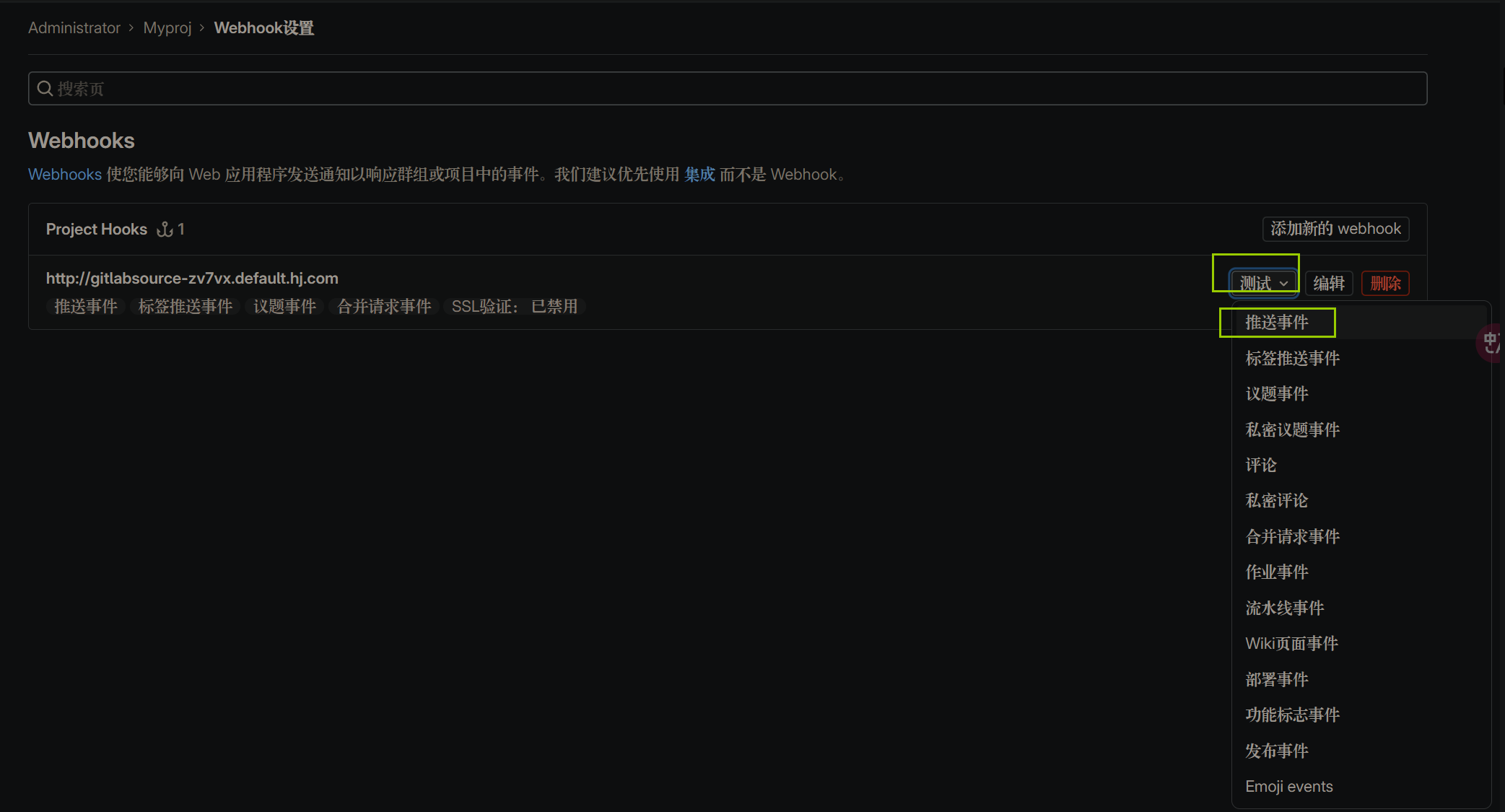

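2) Adjust the configuration in the GitLab web UI

A webhook has been created automatically under the myproj project in GitLab. Its URL is the access path of the pod that was just started, but GitLab runs outside the cluster here, so it has to be changed. There are two ways:

- change it to a domain name that resolves externally to the Knative Istio ingress

- add a hosts entry on the GitLab host

# no external domain here, so add a hosts entry

echo 2.2.2.17 gitlabsource-zv7vx.default.hj.com >> /etc/hosts

3) Test

kubectl logs -f deployments/event-00001-deployment

Test the webhook by sending a push event

After clicking Test, the hook returns a 202 response code

The event pod logs show that the event was received successfully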

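Channel

This is the generic channel configuration. When several channel implementations are deployed, each has its own CRD: IMC uses inmemorychannels, and Kafka has its own

Channels are rarely managed by hand and are used together with subscriptions, so no full manifest is given here

Command

kn channel create imc1 --type imc

kn channel create kc1 --type messaging.knative.dev:v1beta1:KafkaChannel

kn channel ls

Syntax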

apiVersion: messaging.knative.dev/v1
kind: Channel
metadata:
  ...
spec:
  channelTemplate:  # template of the underlying channel; when several implementations are deployed, choose Kafka, IMC, etc.
    apiVersion: str
    kind: str
    spec: {}  # optional; fields depend on the implementation (IMC, Kafka, NATS)
  delivery:
    backoffDelay: str
    backoffPolicy: str
    deadLetterSink:
      CACerts: str
      audience: str
      ref: str
      uri: str
    retry: int
  subscribers:
    - uid: str
      delivery: {}  # see spec.delivery
      generation: int
      replyAudience: str
      replyCACerts: str
      replyUri: str
      subscriberAudience: str
      subscriberCACerts: str
      subscriberUri: str

ConfigMaps

config-br-default-channel

apiVersion: v1
kind: ConfigMap
metadata:
data:
  channel-template-spec: |
    # channel template used by default
    apiVersion: messaging.knative.dev/v1
    kind: InMemoryChannel

default-ch-webhook

apiVersion: v1
kind: ConfigMap
metadata:
  name: default-ch-webhook
  namespace: knative-eventing
data:
  default-ch-config: |
    # clusterDefault applies cluster-wide; namespaceDefaults overrides it per namespace. The levels can differ; IMC is recommended at the cluster level so channels can still be created when no other implementation is installed
    clusterDefault:
      apiVersion: messaging.knative.dev/v1
      kind: InMemoryChannel
    namespaceDefaults:
      default:  # used in the default namespace
        apiVersion: messaging.knative.dev/v1beta1
        kind: KafkaChannel
        spec:
          numPartitions: 3  # number of partitions
          replicationFactor: 1  # number of replicas; 1 is enough on a single node

Subscription

Subscriptions are rarely managed by hand, so no full manifest is given here

Command

kn subscription create sub1 --channel imc1 --sink ksvc:event

kn subscription create sub0 --channel imcv1beta1:pipe0 --sink ksvc:receiver

kn subscription create sub1 --channel messaging.knative.dev:v1alpha1:KafkaChannel:k1 --sink mirror --sink-reply broker:nest --sink-dead-letter bucket

kn subscription list

Example

Example 1: send GitLab events to a channel

apiVersion: sources.knative.dev/v1alpha1
kind: GitLabSource
metadata:
  name: gitlabsource-demo
  namespace: default
spec:
  accessToken:
    secretKeyRef:
      name: gitlabsecret
      key: accessToken
  eventTypes:
    - push_events
    - issues_events
    - merge_requests_events
    - tag_push_events
  projectUrl: http://code.gitlab.svc.cluster.local/root/test
  sslverify: false
  secretToken:
    secretKeyRef:
      name: gitlabsecret
      key: secretToken
  sink:
    ref:
      apiVersion: messaging.knative.dev/v1
      kind: Channel
      name: imc01
      namespace: default

Broker

Command

kn broker create br1

kn broker describe br1

Syntax

kubectl explain brokers

apiVersion: eventing.knative.dev/v1
kind: Broker
spec:
  config:  # which channel configuration implements the broker
    apiVersion: v1
    kind: ConfigMap
    name: config-br-default-channel
    namespace: knative-eventing
  delivery:
    backoffDelay: str
    backoffPolicy: str
    retry: int
    deadLetterSink:
      CACerts: str
      audience: str
      ref:
        apiVersion: str
        kind: str
        name: str
        namespace: str
      uri: str

ConfigMap: config-br-defaults

apiVersion: v1
kind: ConfigMap
metadata:
data:
  default-br-config: |
    # default broker configuration, used when none is specified
    clusterDefault:
      # broker class to use: MTChannelBasedBroker, or Kafka (commented out below)
      ######## MTChannelBasedBroker ########
      brokerClass: MTChannelBasedBroker
      # channel configuration, read from a ConfigMap; used when no channel is specified
      apiVersion: v1
      kind: ConfigMap
      name: config-br-default-channel
      namespace: knative-eventing
      delivery:
        retry: 10
        backoffPolicy: exponential
        backoffDelay: PT0.2S
      ######## Kafka ########
      # brokerClass: Kafka
      # apiVersion: v1
      # kind: ConfigMap
      # name: kafka-broker-config
      # namespace: knative-eventing
    namespaceDefaults:
      some-namespace:
        brokerClass: MTChannelBasedBroker
        apiVersion: v1
        kind: ConfigMap
        name: kafka-channel
        namespace: knative-eventing
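With `retry: 10`, `backoffPolicy: exponential` and `backoffDelay: PT0.2S`, failed deliveries are retried with growing waits. According to the Knative delivery documentation, the exponential policy waits `backoffDelay * 2^n` before retry n, and the linear policy waits `backoffDelay * n`. The sketch below computes that schedule, assuming retries are numbered from 1. Its ISO 8601 parser handles only the simple `PT<seconds>S` form used here, to keep it short. Real durations such as `PT1M` are rejected rather than misread.

```python
import re


def parse_simple_iso_seconds(duration: str) -> float:
    """Parse durations of the form PT<number>S (e.g. PT0.2S); other ISO 8601 forms are rejected."""
    match = re.fullmatch(r"PT(\d+(?:\.\d+)?)S", duration)
    if match is None:
        raise ValueError(f"unsupported duration {duration!r}; only PT<seconds>S is handled")
    return float(match.group(1))


def backoff_schedule(retry: int, policy: str, delay: str) -> list:
    """Seconds waited before each retry, assuming retries are numbered from 1."""
    base = parse_simple_iso_seconds(delay)
    if policy == "exponential":
        return [base * 2 ** n for n in range(1, retry + 1)]
    if policy == "linear":
        return [base * n for n in range(1, retry + 1)]
    raise ValueError(f"unknown backoffPolicy {policy!r}")


waits = backoff_schedule(retry=10, policy="exponential", delay="PT0.2S")
print([round(w, 1) for w in waits])
print(f"total wait before giving up: about {sum(waits):.1f}s")
```

With these values the last retry comes several minutes after the first failure. If that is too long for your use case, lower `retry` or switch to `linear` and configure a `deadLetterSink`.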

Trigger

Command

kn trigger create tr1 --broker br1 --filter type=com.hj.hi -s ksvc:event

kn trigger create tr2 --broker br1 --filter type=com.hj.bye -s ksvc:event-2

kn trigger ls

Syntax

kubectl explain triggers

apiVersion: eventing.knative.dev/v1
kind: Trigger
spec:
  broker: str
  delivery: {}
  filter:  # optional; without a filter, all events on the broker are received and sent to the subscriber
    attributes:  # attributes to filter on, e.g. the event type
      type: "hi.hj.com"  # custom value
  subscriber:
    CACerts: str
    audience: str
    ref:  # the subscriber
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event
      namespace: default
    uri: str

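EventType

Maintains the event types available on each broker; used only in the broker/trigger model

Knative automatically registers the event types of some event sources; the EventTypes are generated once these sources are created:

- PingSource

- ApiServerSource

- GitHubSource

- KafkaSource

Syntax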

kubectl explain eventtypes

apiVersion: eventing.knative.dev/v1beta2
kind: EventType
spec:
  broker: str  # broker; required
  description: str
  reference:
    apiVersion: str
    kind: str
    name: str
    namespace: str
  schema: str
  schemaData: str
  source: str  # event source; required
  type: str  # event type; required

Sequence

Not supported by the kn CLI

Syntax

kubectl explain sequences

apiVersion: flows.knative.dev/v1
kind: Sequence
metadata:
spec:
  channelTemplate:  # channel template; if omitted, the namespace or cluster default template is used
    apiVersion: str  # IMC, Kafka, etc.
    kind: str
    spec:  # implementation-specific settings
  reply:  # where the output of the last subscriber in the sequence is sent
    ref:
      apiVersion: str
      kind: str
      name: str
      namespace: str
    uri: str
  steps:  # the processors of the sequence, called in order
    - CACerts: str
      delivery:
        backoffDelay: str
        backoffPolicy: str
        deadLetterSink:
          CACerts: str
          ref:
            apiVersion: str
            kind: str
            name: str
            namespace: str
          uri: str
        retry: int
      ref:  # ksvc or svc
        apiVersion: str
        kind: str
        name: str
        namespace: str
      uri: str

Parallel

Not supported by the kn CLI

Syntax

kubectl explain parallel

apiVersion: flows.knative.dev/v1
kind: Parallel
metadata:
  name: parallel
spec:
  channelTemplate:  # if omitted, the namespace or cluster-wide channel template is used
    apiVersion: messaging.knative.dev/v1
    kind: Channel
  branches:  # each branch has a filter (selects events) and a subscriber (processes the filtered events); a branch's result goes to its own reply or to the global reply
    - filter:
        CACerts: str
        audience: str
        uri: str
        ref:
          apiVersion: serving.knative.dev/v1
          kind: Service
          name: image-filter
      subscriber: {}  # same fields as spec.branches.filter
      delivery: {}  # delivery options (retry, backoff, dead-letter sink)
      reply:  # same fields as spec.branches.filter
  reply:  # all branch results go to one overall sink; same fields as spec.branches.filter
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event

综合案例

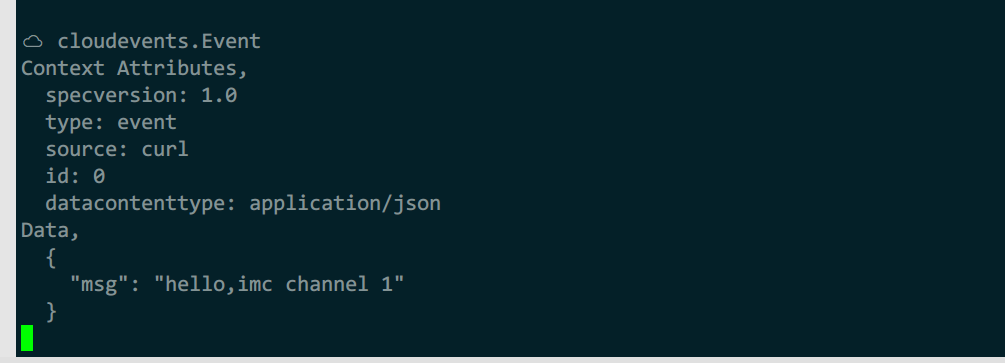

例1:1对多不过滤消息(channel+subscription)

事件源->channel->sub->sink

1)命令行创建channel与订阅

kn channel create imc1 --type imc

kn service create event --image ikubernetes/event_display --port 8080 --scale-min 1

# several subscribers can subscribe to imc1, and all of them receive the events

kn subscription create sub1 --channel imc1 --sink ksvc:event

kn subscription create sub2 --channel imc1 --sink ksvc:event-2

2) Simulate an event

kn channel describe imc1

kubectl exec -it admin -- sh

curl -v imc1-kn-channel.default.svc.cluster.local \
  -X POST \
  -H 'Ce-Id: 0' \
  -H 'Ce-Specversion: 1.0' \
  -H 'Ce-Type: event' \
  -H 'Ce-Time: ' \
  -H 'Ce-source: curl' \
  -H 'Content-Type: application/json' \
  -d '{"message":"hello,imc channel 1"}'

The event pod logs show that the message was received

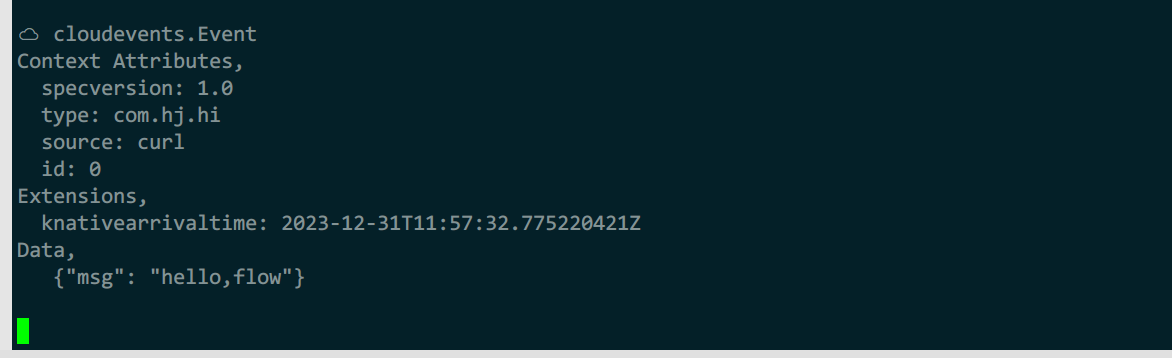

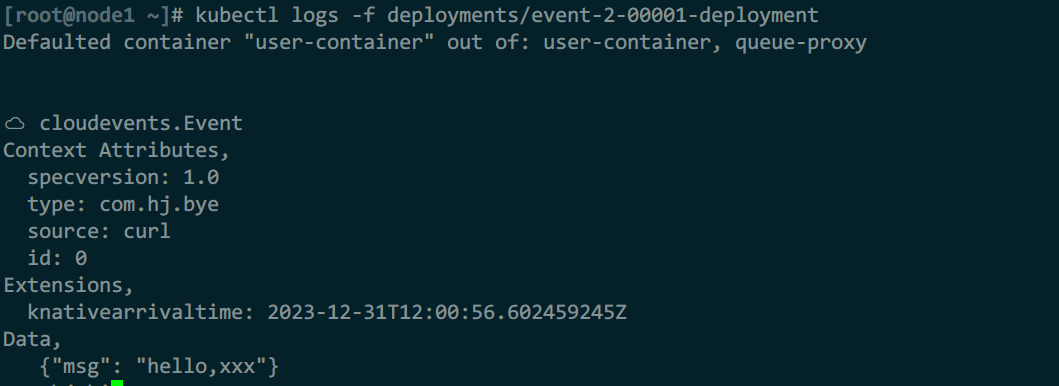
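The curl call above uses CloudEvents binary content mode: the event attributes travel as `Ce-*` HTTP headers, and the body holds only the data. The same request built with Python's standard library is shown below. It assumes it runs inside the cluster (for example in the `admin` pod) so that the channel's Service name resolves. `build_binary_event` and `send_event` are hypothetical helper names.

```python
import json
import urllib.request


def build_binary_event(url: str, event_id: str, event_type: str, source: str, data: dict) -> urllib.request.Request:
    """Binary mode: CloudEvent attributes as Ce-* headers, payload as the JSON body."""
    headers = {
        "Ce-Id": event_id,
        "Ce-Specversion": "1.0",
        "Ce-Type": event_type,
        "Ce-Source": source,
        "Content-Type": "application/json",
    }
    body = json.dumps(data).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def send_event(request: urllib.request.Request) -> int:
    """Send the request and return the HTTP status (a channel typically answers 202 Accepted)."""
    with urllib.request.urlopen(request, timeout=10) as resp:
        return resp.status


req = build_binary_event(
    "http://imc1-kn-channel.default.svc.cluster.local",
    event_id="0",
    event_type="event",
    source="curl",
    data={"message": "hello,imc channel 1"},
)
# send_event(req)  # uncomment when running inside the cluster
```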

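Example 2: one-to-many with filtering (broker + trigger)

Triggers select events from the broker according to their filter conditions and send them to the subscribers

source -> broker -> trigger -> ksvc/svc

1) Create the broker and triggers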

kn broker create br1

kn trigger create tr1 --broker br1 --filter type=com.hj.hi -s ksvc:event

kn trigger create tr2 --broker br1 --filter type=com.hj.bye -s ksvc:event-2

2) Simulate sending events

# watch the logs of both event pods

kubectl logs -f deployments/event-2-00001-deployment

kubectl logs -f deployments/event-00001-deployment

kubectl exec -it admin -- sh

# simulate type: com.hj.hi

curl -i broker-ingress.knative-eventing.svc.cluster.local/default/br1 \
  -X POST \
  -H 'Content-Type: application/cloudevents+json' \
  -d '{
    "id": "0",
    "specversion": "1.0",
    "type": "com.hj.hi",
    "source": "curl",
    "data": {"message": "hello,flow"}
  }'

# simulate type: com.hj.bye

curl -i broker-ingress.knative-eventing.svc.cluster.local/default/br1 \
  -X POST \
  -H 'Content-Type: application/cloudevents+json' \
  -d '{
    "id": "0",
    "specversion": "1.0",
    "type": "com.hj.bye",
    "source": "curl",
    "data": {"message": "hello,xxx"}
  }'

The event pod shows records of type com.hj.hi

The event-2 pod shows records of type com.hj.bye, but none of type com.hj.hi

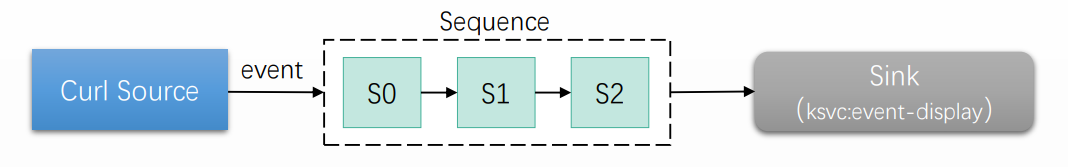

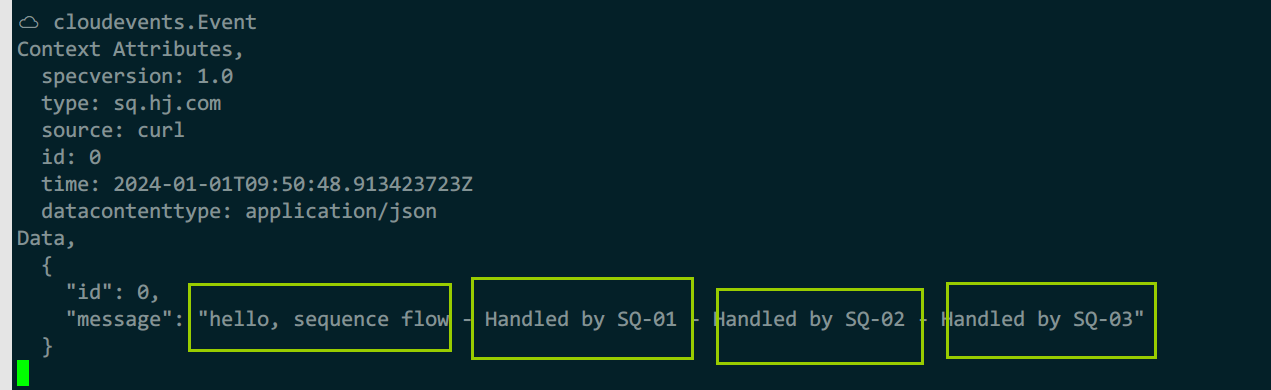
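The broker examples use structured content mode instead. The whole event, attributes and `data`, is one JSON document sent with `Content-Type: application/cloudevents+json`. For the MT channel-based broker, the ingress URL follows the pattern `http://broker-ingress.knative-eventing.svc.cluster.local/<namespace>/<broker>`, as in the curl calls above. The sketch builds those two requests and only sends them when run inside the cluster. The helper names are hypothetical, and the second event gets a different `id` here because id plus source is meant to identify an event uniquely.

```python
import json
import urllib.request

BROKER_INGRESS = "http://broker-ingress.knative-eventing.svc.cluster.local"


def broker_url(namespace: str, broker: str) -> str:
    """Ingress address of an MT channel-based broker."""
    return f"{BROKER_INGRESS}/{namespace}/{broker}"


def build_structured_event(url: str, event_id: str, event_type: str, source: str, data: dict) -> urllib.request.Request:
    """Structured mode: attributes and data together in one application/cloudevents+json body."""
    event = {"id": event_id, "specversion": "1.0", "type": event_type, "source": source, "data": data}
    return urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/cloudevents+json"},
        method="POST",
    )


hi = build_structured_event(broker_url("default", "br1"), "0", "com.hj.hi", "curl", {"message": "hello,flow"})
bye = build_structured_event(broker_url("default", "br1"), "1", "com.hj.bye", "curl", {"message": "hello,xxx"})
# for request in (hi, bye):
#     urllib.request.urlopen(request, timeout=10)  # inside the cluster only
```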

Example 3: serial processing (Sequence)

1) Create the ksvcs for the 3 steps

kn service apply sq-append-1 --image ikubernetes/appender --env MESSAGE=" - Handled by SQ-01"

kn service apply sq-append-2 --image ikubernetes/appender --env MESSAGE=" - Handled by SQ-02"

kn service apply sq-append-3 --image ikubernetes/appender --env MESSAGE=" - Handled by SQ-03"

2) Create the ksvc that receives the events

kn service create event --image ikubernetes/event_display --port 8080 --scale-min 1

3) Create the Sequence

kubectl apply -f - <<eof
apiVersion: flows.knative.dev/v1
kind: Sequence
metadata:
  name: sq-demo
spec:
  channelTemplate:
    apiVersion: messaging.knative.dev/v1
    kind: InMemoryChannel
  steps:
    - ref:
        apiVersion: serving.knative.dev/v1
        kind: Service
        name: sq-append-1
    - ref:
        apiVersion: serving.knative.dev/v1
        kind: Service
        name: sq-append-2
    - ref:
        apiVersion: serving.knative.dev/v1
        kind: Service
        name: sq-append-3
  reply:
    ref:
      kind: Service
      apiVersion: serving.knative.dev/v1
      name: event
eof

4) Test

# view the logs

kubectl logs -f deploy/event-00001-deployment

kubectl exec -it admin -- sh

# simulate an event source

curl -v http://sq-demo-kn-sequence-0-kn-channel.default.svc.cluster.local \
  -X POST \
  -H 'Content-Type: application/cloudevents+json' \
  -d '{
    "id": "0",
    "specversion": "1.0",
    "type": "sq.hj.com",
    "source": "curl",
    "data": {"message": "hello, sequence flow"}
  }'

The logs show the message that was sent and the result after it has been processed by the 3 ksvcs defined in the steps

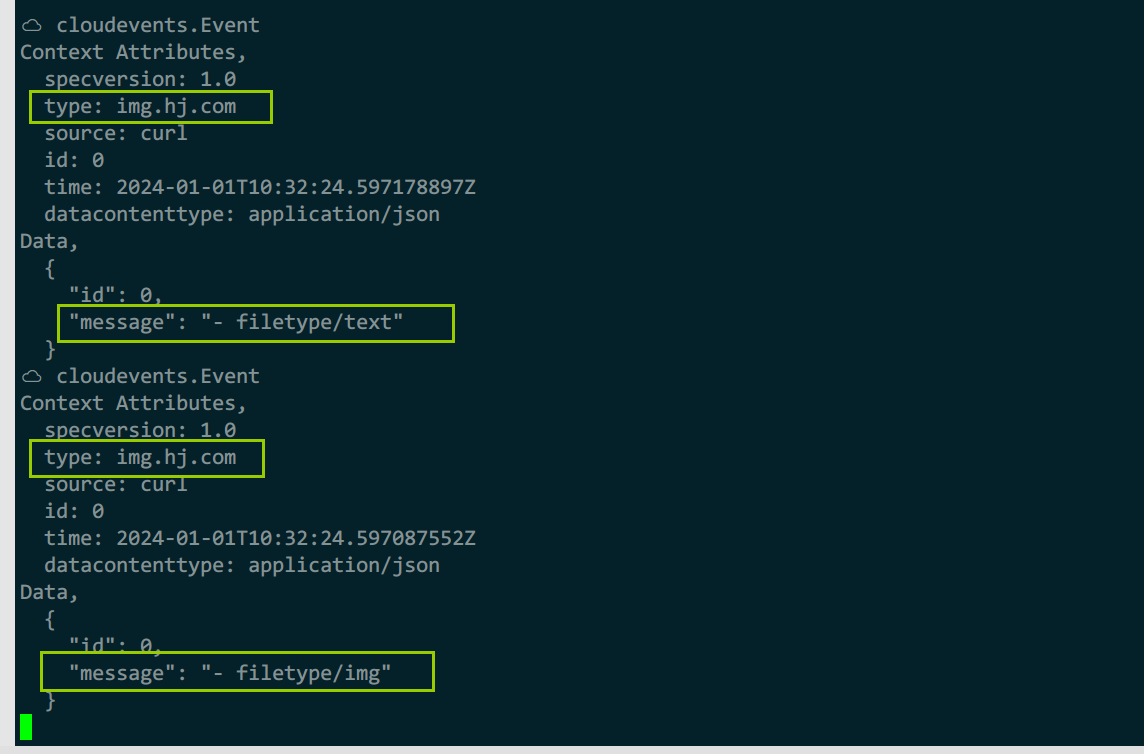

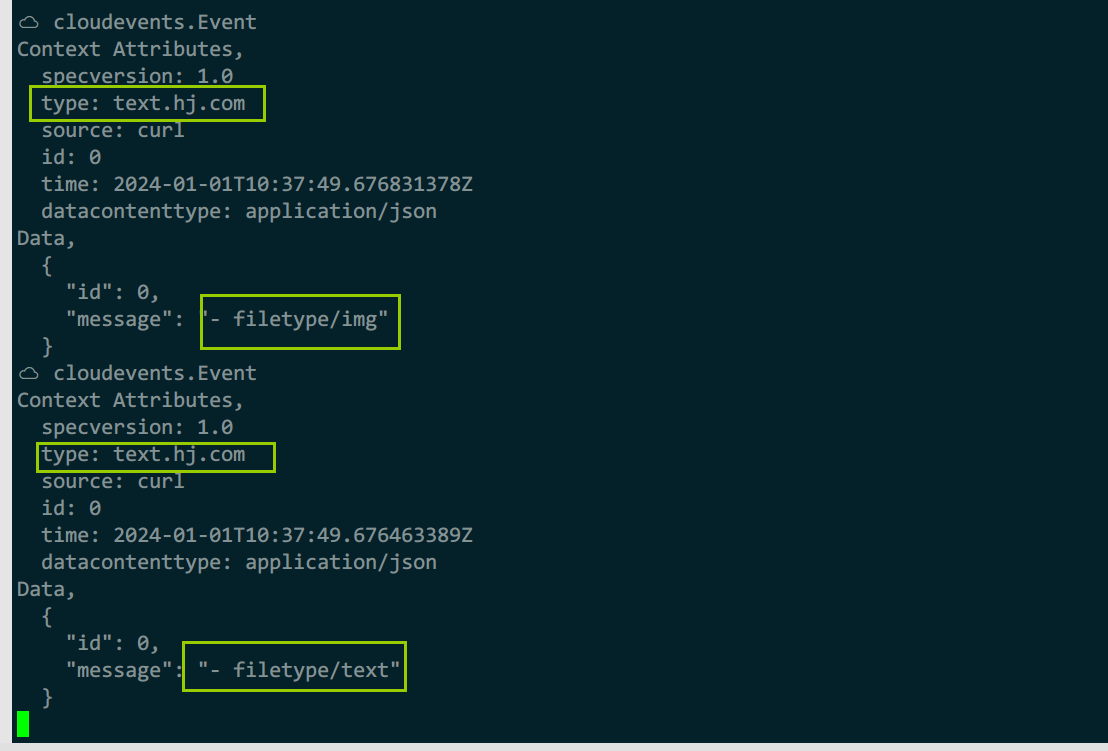
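What the Sequence does can be pictured as function composition. Each step receives the event and returns a modified event, and the output of the last step goes to `reply`. Below is a local simulation. It assumes the `ikubernetes/appender` image appends its `MESSAGE` value to the `message` field of the event data; this is inferred from the step names and the log output, not verified against the image's source.

```python
from typing import Callable, List

Event = dict
Step = Callable[[Event], Event]


def appender(suffix: str) -> Step:
    """Model of one appender ksvc: return a copy of the event with suffix added to data.message."""
    def step(event: Event) -> Event:
        data = {**event["data"], "message": event["data"]["message"] + suffix}
        return {**event, "data": data}
    return step


def run_sequence(steps: List[Step], event: Event) -> Event:
    """Pass the event through each step in order; the final result is what reply receives."""
    for step in steps:
        event = step(event)
    return event


steps = [appender(" - Handled by SQ-01"), appender(" - Handled by SQ-02"), appender(" - Handled by SQ-03")]
start = {"id": "0", "specversion": "1.0", "type": "sq.hj.com", "source": "curl",
         "data": {"message": "hello, sequence flow"}}
print(run_sequence(steps, start)["data"]["message"])
# hello, sequence flow - Handled by SQ-01 - Handled by SQ-02 - Handled by SQ-03
```

The order of `steps` matters: swapping two entries in the Sequence manifest changes the order of the suffixes in the final message.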

Example 4: parallel filtering across multiple branches (Parallel)

1) Create the filter ksvcs

kn service apply image-filter --image villardl/filter-nodejs:0.1 -e FILTER='event.type == "img.hj.com"' --scale-min 1

kn service apply text-filter --image villardl/filter-nodejs:0.1 -e FILTER='event.type == "text.hj.com"' --scale-min 1

2) Create the subscribers

kn service apply para-appender-img --image ikubernetes/appender --env MESSAGE='- filetype is img' --scale-min 1

kn service apply para-appender-text --image ikubernetes/appender --env MESSAGE='- filetype is text' --scale-min 1

3) Create the Parallel

kubectl apply -f - <<eof
apiVersion: flows.knative.dev/v1
kind: Parallel
metadata:
  name: parallel
spec:
  channelTemplate:
    apiVersion: messaging.knative.dev/v1
    kind: InMemoryChannel
  branches:
    - filter:
        ref:
          apiVersion: serving.knative.dev/v1
          kind: Service
          name: image-filter
      subscriber:
        ref:
          apiVersion: serving.knative.dev/v1
          kind: Service
          name: para-appender-img
    - filter:
        ref:
          apiVersion: serving.knative.dev/v1
          kind: Service
          name: text-filter
      subscriber:
        ref:
          apiVersion: serving.knative.dev/v1
          kind: Service
          name: para-appender-text
  reply:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event
eof

4) Test

kubectl exec -it admin -- sh

curl -v http://parallel-kn-parallel-kn-channel.default.svc.cluster.local \
  -X POST \
  -H 'Content-Type: application/cloudevents+json' \
  -d '{
    "id": "0",
    "specversion": "1.0",
    "type": "img.hj.com",
    "source": "curl",
    "data": {"message": "hello,flow parallel"}
  }'

curl -v http://parallel-kn-parallel-kn-channel.default.svc.cluster.local \
  -X POST \
  -H 'Content-Type: application/cloudevents+json' \
  -d '{
    "id": "0",
    "specversion": "1.0",
    "type": "text.hj.com",
    "source": "curl",
    "data": {"message": "hello,flow parallel"}
  }'

The event pod shows the output of both subscribers, each with its own message
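A Parallel fans each event out to every branch. A branch's filter either returns the event (keep) or nothing (drop), and only kept events reach that branch's subscriber, whose output goes to `reply`. The local model below assumes the `villardl/filter-nodejs` service returns the event unchanged when its `FILTER` expression is true and an empty response otherwise; that is my understanding of the image, not something verified here. The branches are also evaluated one after another for simplicity, whereas the real Parallel delivers to them concurrently.

```python
from typing import Callable, List, Optional, Tuple

Event = dict
Filter = Callable[[Event], Optional[Event]]
Subscriber = Callable[[Event], Event]


def type_filter(wanted: str) -> Filter:
    """Model of a filter ksvc whose FILTER is event.type == wanted."""
    return lambda event: event if event["type"] == wanted else None


def appender(suffix: str) -> Subscriber:
    """Model of an appender ksvc: append suffix to data.message."""
    def step(event: Event) -> Event:
        return {**event, "data": {**event["data"], "message": event["data"]["message"] + suffix}}
    return step


def run_parallel(branches: List[Tuple[Filter, Subscriber]], event: Event) -> List[Event]:
    """Offer the event to every branch; collect the outputs that would be sent to reply."""
    replies = []
    for keep, subscriber in branches:
        kept = keep(event)
        if kept is not None:
            replies.append(subscriber(kept))
    return replies


branches = [
    (type_filter("img.hj.com"), appender("- filetype is img")),
    (type_filter("text.hj.com"), appender("- filetype is text")),
]
img = {"id": "0", "specversion": "1.0", "type": "img.hj.com", "source": "curl",
       "data": {"message": "hello,flow parallel"}}
print([e["data"]["message"] for e in run_parallel(branches, img)])
# ['hello,flow parallel- filetype is img']
```

An event that no filter accepts produces no reply at all, which is why the event pod only shows output for the `img.hj.com` and `text.hj.com` types.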

Example 5: send GitLab events to a channel

For the GitLab configuration, see the examples in the GitLabSource section

1) Create the channel and subscriptions

kn service apply event --image ikubernetes/event_display --port 8080 --scale-min 1

kn service apply event2 --image ikubernetes/event_display --port 8080 --scale-min 1

kn channel create imc1 --type imc

kn subscription create sub1 --channel imc1 --sink ksvc:event

kn subscription create sub2 --channel imc1 --sink ksvc:event2

2) Create the GitLab event source

kubectl apply -f - <<eof
apiVersion: sources.knative.dev/v1alpha1
kind: GitLabSource
metadata:
  name: gitlabsource
spec:
  eventTypes:
    - push_events
    - tag_push_events
  projectUrl: http://2.2.2.15:80/root/myproj
  sslverify: false
  accessToken:
    secretKeyRef:
      name: gitlab-root-secret
      key: accessToken
  secretToken:
    secretKeyRef:
      name: gitlab-root-secret
      key: secretToken
  sink:
    ref:
      apiVersion: messaging.knative.dev/v1
      kind: Channel
      name: imc1
eof

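3) Resolve the registered webhook name and test

GitLab runs outside the Kubernetes cluster, and the webhook registered by the GitLabSource uses the Kubernetes service name, which GitLab cannot resolve, so a hosts entry is needed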

echo 2.2.2.17 gitlabsource-n6v2q.default.hj.com >> /etc/hosts

kubectl logs -f deploy/event-00001-deployment

kubectl logs -f deploy/event2-00001-deployment

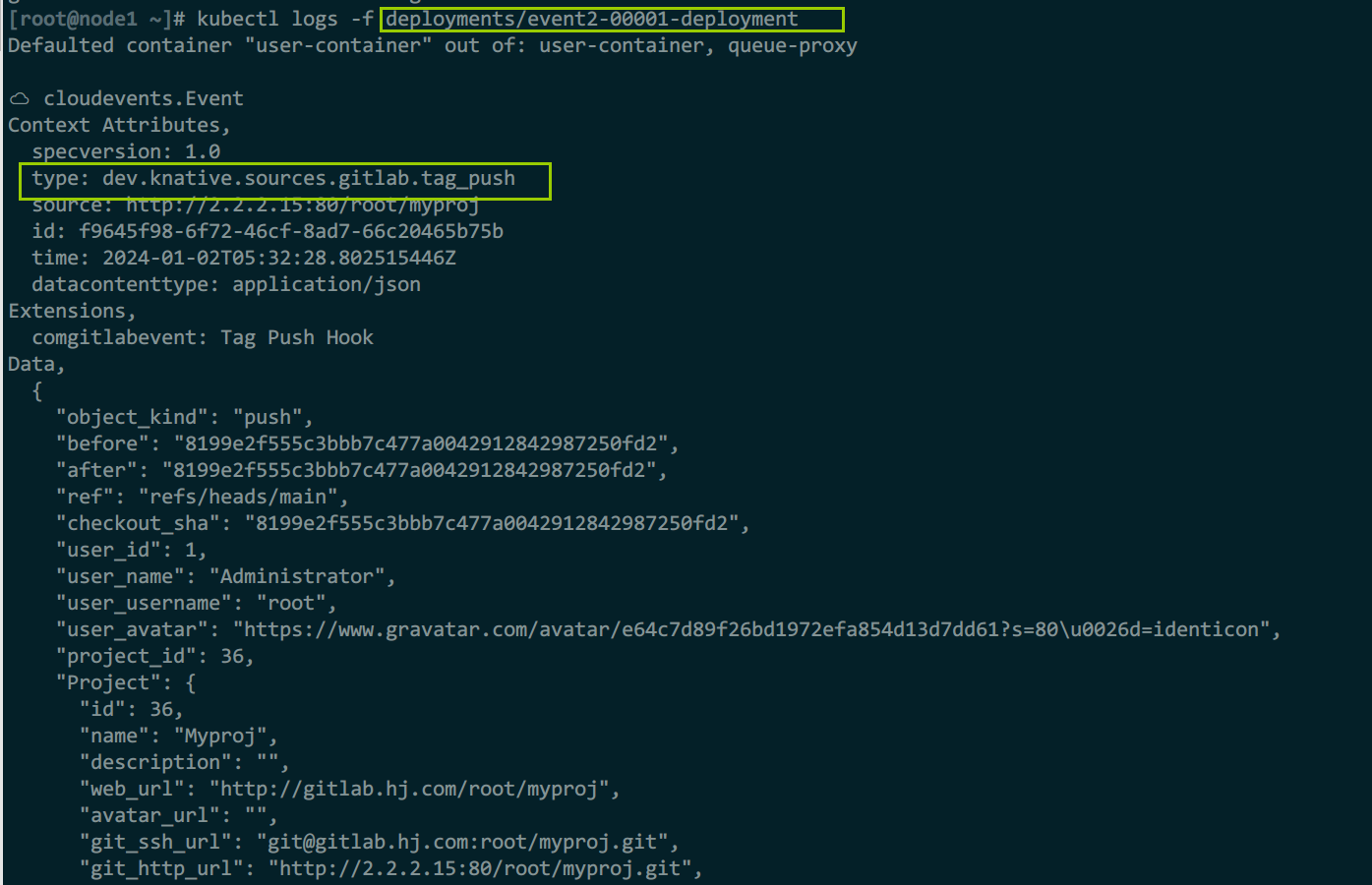

Test a tag creation event

Both sinks receive the events, and the event type is tag_push

4) Clean up

kn service delete event

kn service delete event2


kn channel delete imc1

kn subscription delete sub1

kn subscription delete sub2

kubectl delete gitlabsources.sources.knative.dev gitlabsource

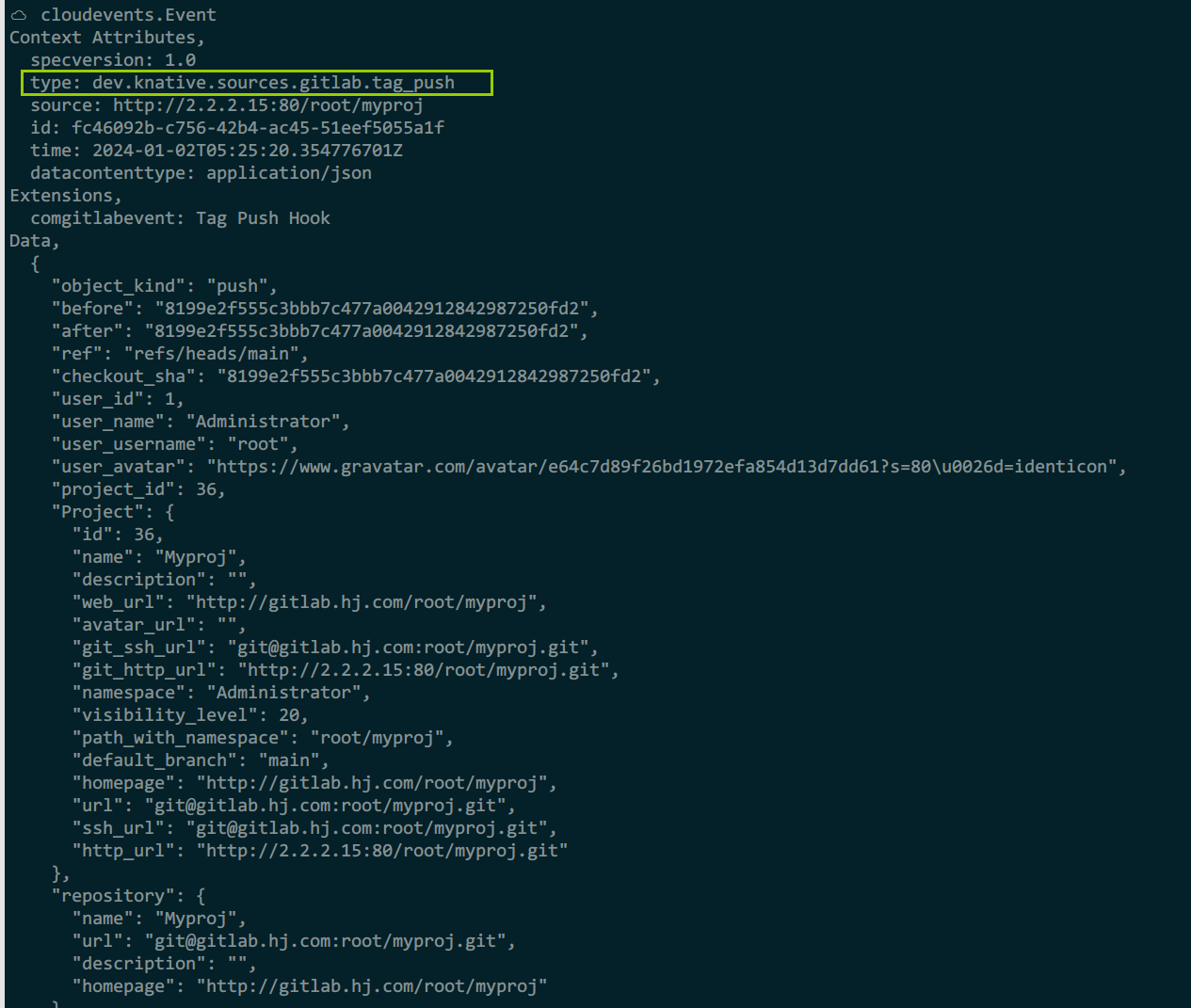

Example 6: send GitLab events to a broker

1) Create the broker and triggers

kn service apply event --image ikubernetes/event_display --port 8080 --scale-min 1

kn service apply event2 --image ikubernetes/event_display --port 8080 --scale-min 1

kn service apply event3 --image ikubernetes/event_display --port 8080 --scale-min 1

kn broker create br-gitlab

kn trigger create tr1-gitlab --broker br-gitlab --filter type=dev.knative.sources.gitlab.push -s ksvc:event

kn trigger create tr2-gitlab --broker br-gitlab --filter type=dev.knative.sources.gitlab.tag_push -s ksvc:event2

kn trigger create tr3-gitlab --broker br-gitlab --filter type=dev.knative.sources.gitlab.merge_request -s ksvc:event3

2) Create the GitLab event source

kubectl apply -f - <<eof
apiVersion: sources.knative.dev/v1alpha1
kind: GitLabSource
metadata:
  name: gitlabsource
spec:
  eventTypes:
    - push_events
    - tag_push_events
    - merge_requests_events
  projectUrl: http://2.2.2.15:80/root/myproj
  sslverify: false
  accessToken:
    secretKeyRef:
      name: gitlab-root-secret
      key: accessToken
  secretToken:
    secretKeyRef:
      name: gitlab-root-secret
      key: secretToken
  sink:
    ref:
      apiVersion: eventing.knative.dev/v1
      kind: Broker
      name: br-gitlab
eof

3) Resolve the webhook name and test

echo 2.2.2.17 gitlabsource-jghsj.default.hj.com >> /etc/hosts

kubectl logs -f deploy/event-00001-deployment

kubectl logs -f deploy/event2-00001-deployment

kubectl logs -f deploy/event3-00001-deployment

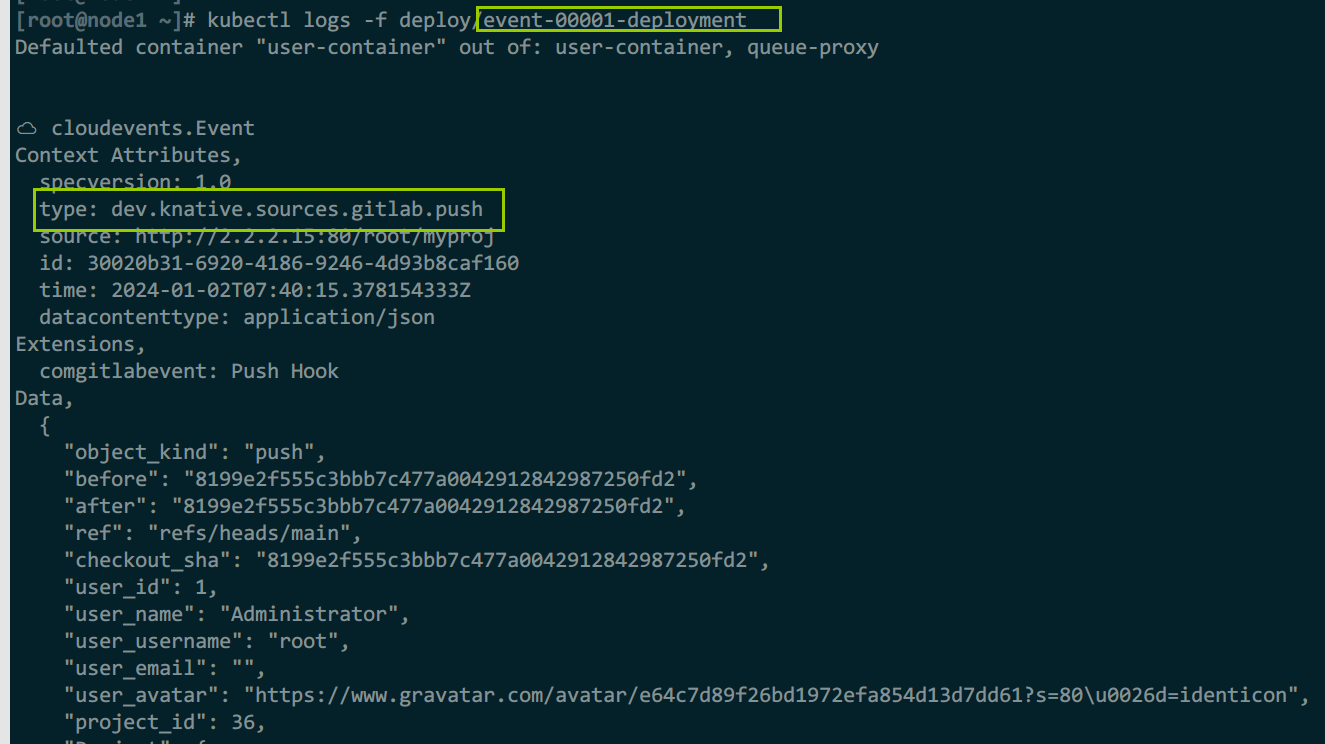

第一个event pod可以看到push事件,其他则没有

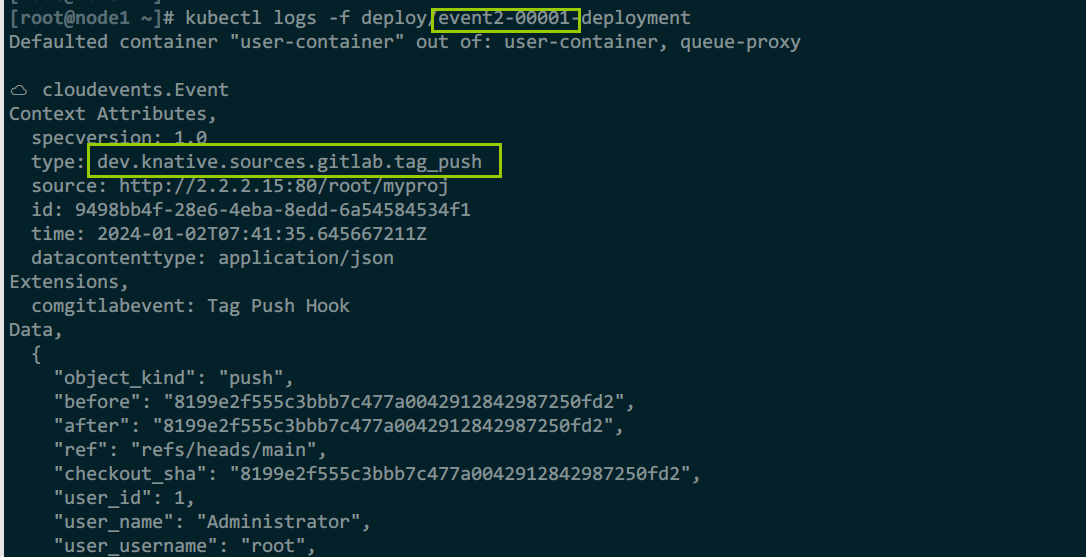

第二个event2 pod可以看到tag_push事件,其他则没有

第三个event pod可以看到合并事件,其他则没有