Knative deployment

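Knative implements a FaaS platform with two components:

- Serving: sits at the BaaS layer and provides automatic scaling of services, traffic management and revision (version) management

- Eventing: sits at the FaaS layer and provides orchestration of functions (function as a service) and event-driven management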

Deployment methods

- YAML

- Knative Operator

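Recommended production environment

- Kubernetes 1.21 or later

- Single-node Kubernetes: at least 6 CPU cores, 6 GB of memory and 30 GB of storage

- Multi-node Kubernetes: at least 2 CPU cores, 4 GB of memory and 20 GB of storage per node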

Serving

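Official documentation: https://knative.dev/docs/install/yaml-install/serving/install-serving-with-yaml/

Runtime dependencies

- Networking layer: one of Istio, Contour or Kourier

- DNS (optional): in production, configure a wildcard domain on public DNS

- Serving extensions (optional): HPA (autoscaling with the Kubernetes HPA), cert-manager (TLS certificates for workloads), Encrypt HTTP01 (TLS certificates for workloads through Let's Encrypt HTTP01 challenges)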

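Pods running after installation

- activator: when a revision has been scaled down to zero pods, the activator receives and buffers its requests and reports metrics to the autoscaler. Once the autoscaler has scaled the revision up to the required number of pods, the activator routes the requests to that revision.

- autoscaler: Knative injects a sidecar proxy container called queue-proxy into every pod it deploys, so it can observe the requests that pod receives. The autoscaler uses these request metrics (concurrency by default, or requests per second) to scale the pods of each revision.

- controller: watches the API objects of the Serving resources (kservice, configuration, route, revision) and manages their lifecycle. It is what makes Serving's declarative API work.

- webhook: an external admission controller for Kubernetes that performs validation and admission checks. It acts mainly on Serving's own API resources and the related ConfigMaps.

- domain-mapping: maps a given domain to a Service, a kservice or even a Knative Route, so that the service can be reached through a custom domain. It is only present when Magic DNS is deployed.

- domainmapping-webhook: the admission controller dedicated to domain-mapping. It is only present when Magic DNS is deployed.

- net-certmanager-controller: a dedicated controller for the cert-manager integration. It is only present when certificate management is deployed.

- net-istio-controller: a dedicated controller for the Istio integration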
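The following sketch shows how the autoscaler behaviour above is controlled per revision. It assumes Knative Serving 1.x with the default KPA autoscaler. The hypothetical bash helper render_kpa_ksvc prints a Knative Service manifest whose template annotations select the metric (concurrency or rps) and the target value per pod. The function name, its arguments and the example values are illustrative and are not part of the original setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper (illustration only): print a Knative Service manifest that
# is scaled by the KPA autoscaler on the chosen metric.
# Usage: render_kpa_ksvc NAME IMAGE [METRIC] [TARGET]
#   METRIC: "concurrency" (the KPA default) or "rps"
#   TARGET: desired value of the metric per pod
render_kpa_ksvc() {
  local name=$1 image=$2 metric=${3:-concurrency} target=${4:-100}
  cat <<EOF
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: ${name}
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/class: "kpa.autoscaling.knative.dev"
        autoscaling.knative.dev/metric: "${metric}"
        autoscaling.knative.dev/target: "${target}"
    spec:
      containers:
        - image: ${image}
EOF
}
```

For example, render_kpa_ksvc s0 knativesamples/helloworld rps 50 | kubectl apply -f - would ask the autoscaler to keep each pod at roughly 50 requests per second.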

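Installation

Downloading from GitHub is slow, so you can set a proxy. The one below was set up by the author:

```bash
export https_proxy=http://frp1.freefrp.net:16324 # enable
unset https_proxy # disable
```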

1) Install Istio

You can install Istio yourself. If Istio is already running, you only need to install the net-istio plugin (step 3).

Method 1

```bash
istioctl install -y
```

Method 2

```bash
mkdir -p knative
cd knative
export https_proxy=http://frp1.freefrp.net:16324
wget https://github.com/knative/net-istio/releases/download/knative-v1.11.0/istio.yaml
unset https_proxy
# Apply the same file twice: first only the CRDs (label knative.dev/crd-install=true), then everything
kubectl apply -l knative.dev/crd-install=true -f istio.yaml
kubectl apply -f istio.yaml
```

2) Install Serving

```bash
# Download the author's image-renaming script. Knative pulls its images from Google's registry, so the image names have to be rewritten
wget -O rep-docker-img.sh 'https://files.cnblogs.com/files/blogs/731344/rep-docker-img.sh?t=1704012892&download=true'
export https_proxy=http://frp1.freefrp.net:16324
wget https://github.com/knative/serving/releases/download/knative-v1.11.1/serving-crds.yaml
wget https://github.com/knative/serving/releases/download/knative-v1.11.1/serving-core.yaml
unset https_proxy
# Rewrite the image names
sh rep-docker-img.sh
# Optional: enable Envoy sidecar injection. Once it is injected, the svc knative-local-gateway in the istio-system namespace can be deleted
kubectl create ns knative-serving
kubectl label ns knative-serving istio-injection=enabled
kubectl apply -f serving-crds.yaml
kubectl apply -f serving-core.yaml
```
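The post does not show what rep-docker-img.sh contains. For a rough idea only, the sketch below is a hypothetical stand-in. It rewrites the gcr.io/knative-releases/ prefix of every image reference in the given manifests so that the references point to a mirror registry. The function name rewrite_images and the mirror address are placeholders. The sketch assumes that the mirror holds the same images under the same paths and digests. It would run in place of sh rep-docker-img.sh, before kubectl apply.

```bash
#!/usr/bin/env bash
# Hypothetical stand-in for rep-docker-img.sh (the real script is not shown).
# Usage: rewrite_images MIRROR FILE...
# Replaces only the registry prefix; the image path and @sha256 digest are kept,
# so the mirror must serve identical images. Each FILE is backed up as FILE.bak.
rewrite_images() {
  local mirror=$1
  shift
  local f
  for f in "$@"; do
    sed -i.bak "s#gcr\.io/knative-releases/#${mirror%/}/#g" "$f"
  done
}
```

Example (placeholder mirror): rewrite_images registry.example.internal/knative serving-crds.yaml serving-core.yaml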

3) Install the Knative Istio controller

This lets Knative Serving use Istio as its networking layer.

```bash
# Lab setup: put the VIP 2.2.2.17 on a dummy interface and expose istio-ingressgateway on it through externalIPs
ip link add vip0 type dummy
ip addr add 2.2.2.17/32 dev vip0
kubectl patch svc -n istio-system istio-ingressgateway -p '{"spec":{"externalIPs":["2.2.2.17"]}}'
export https_proxy=http://frp1.freefrp.net:16324
wget https://github.com/knative/net-istio/releases/download/knative-v1.11.0/net-istio.yaml
unset https_proxy
# Rewrite the image names
sh rep-docker-img.sh
kubectl apply -f net-istio.yaml
```

4) Install the HPA extension

```bash
export https_proxy=http://frp1.freefrp.net:16324
wget https://github.com/knative/serving/releases/download/knative-v1.11.1/serving-hpa.yaml
unset https_proxy
sh rep-docker-img.sh
kubectl apply -f serving-hpa.yaml
```
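Once the HPA extension is installed, a revision opts into it through annotations. The sketch below assumes that serving-hpa.yaml has been applied. The hypothetical helper render_hpa_ksvc prints a Knative Service that uses the HPA autoscaler class with the CPU metric. In that case the target should be read as a CPU utilization percentage. The container declares a CPU request because the utilization is measured against it. Names and default values are illustrative.

```bash
#!/usr/bin/env bash
# Hypothetical helper (illustration only): Knative Service scaled by the
# Kubernetes HPA on CPU instead of by the KPA.
# Usage: render_hpa_ksvc NAME IMAGE [TARGET_PERCENT] [CPU_REQUEST]
render_hpa_ksvc() {
  local name=$1 image=$2 target=${3:-80} cpu_request=${4:-100m}
  cat <<EOF
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: ${name}
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/class: "hpa.autoscaling.knative.dev"
        autoscaling.knative.dev/metric: "cpu"
        autoscaling.knative.dev/target: "${target}"
    spec:
      containers:
        - image: ${image}
          resources:
            requests:
              cpu: ${cpu_request}
EOF
}
```

Usage: render_hpa_ksvc s1 knativesamples/helloworld 70 | kubectl apply -f -. HPA-class revisions do not scale to zero, so use this class only where a warm minimum is acceptable.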

5) Install the kn CLI

```bash
export https_proxy=http://frp1.freefrp.net:16324
wget -O /bin/kn https://github.com/knative/client/releases/download/knative-v1.11.0/kn-linux-amd64
unset https_proxy
chmod +x /bin/kn
# Persist bash completion, and load it into the current shell
kn completion bash > /etc/bash_completion.d/kn
source <(/bin/kn completion bash)
```

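6) Configure DNS

This step sets the domain used when Knative is accessed from outside the cluster. In production, configure a public domain. This setup is for learning, so an arbitrary domain is used.

Official reference: https://knative.dev/docs/install/yaml-install/serving/install-serving-with-yaml/#__tabbed_2_3

```bash
kubectl patch configmap/config-domain \
  --namespace knative-serving \
  --type merge \
  --patch '{"data":{"example.com":""}}'
```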
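With the domain set to example.com, each Knative Service gets a hostname built from the domain-template key of the config-network ConfigMap. By default this template is {{.Name}}.{{.Namespace}}.{{.Domain}}. The hypothetical helper ksvc_host below only mirrors that default template and does not read the cluster's actual setting. It shows where the Host header s0.default.example.com in the verification step comes from.

```bash
#!/usr/bin/env bash
# Hypothetical helper: default external hostname of a Knative Service,
# assuming domain-template is still "{{.Name}}.{{.Namespace}}.{{.Domain}}".
# Usage: ksvc_host NAME [NAMESPACE] [DOMAIN]
ksvc_host() {
  local name=$1 namespace=${2:-default} domain=${3:-example.com}
  printf '%s.%s.%s\n' "$name" "$namespace" "$domain"
}
```

Usage: curl -H "Host: $(ksvc_host s0)" 2.2.2.17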

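7) Configure automatic domain claims

This lets Knative create the ClusterDomainClaim for a DomainMapping automatically, so a custom domain does not have to be claimed by hand.

```bash
kubectl patch cm config-network \
  -n knative-serving \
  -p '{"data":{"autocreate-cluster-domain-claims":"true"}}'
```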
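A DomainMapping like the one that kn domain create produces in the Eventing test can also be written as a manifest. The sketch below assumes Knative Serving 1.11, where DomainMapping is served as serving.knative.dev/v1beta1. The helper name render_domain_mapping is hypothetical. With autocreate-cluster-domain-claims enabled, applying the printed manifest should be enough.

```bash
#!/usr/bin/env bash
# Hypothetical helper: DomainMapping that routes DOMAIN to the Knative Service KSVC.
# Usage: render_domain_mapping DOMAIN KSVC [NAMESPACE]
render_domain_mapping() {
  local domain=$1 ksvc=$2 namespace=${3:-default}
  cat <<EOF
apiVersion: serving.knative.dev/v1beta1
kind: DomainMapping
metadata:
  name: ${domain}
  namespace: ${namespace}
spec:
  ref:
    name: ${ksvc}
    kind: Service
    apiVersion: serving.knative.dev/v1
EOF
}
```

Usage: render_domain_mapping event.hj.com event | kubectl apply -f -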

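8) Configure Knative for mTLS (optional)

PERMISSIVE mode lets the sidecars in knative-serving accept both mTLS and plaintext traffic. STRICT would accept mTLS only.

```bash
kubectl apply -f - <<EOF
apiVersion: "security.istio.io/v1beta1"
kind: "PeerAuthentication"
metadata:
  name: "default"
  namespace: "knative-serving"
spec:
  mtls:
    mode: PERMISSIVE
EOF
```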

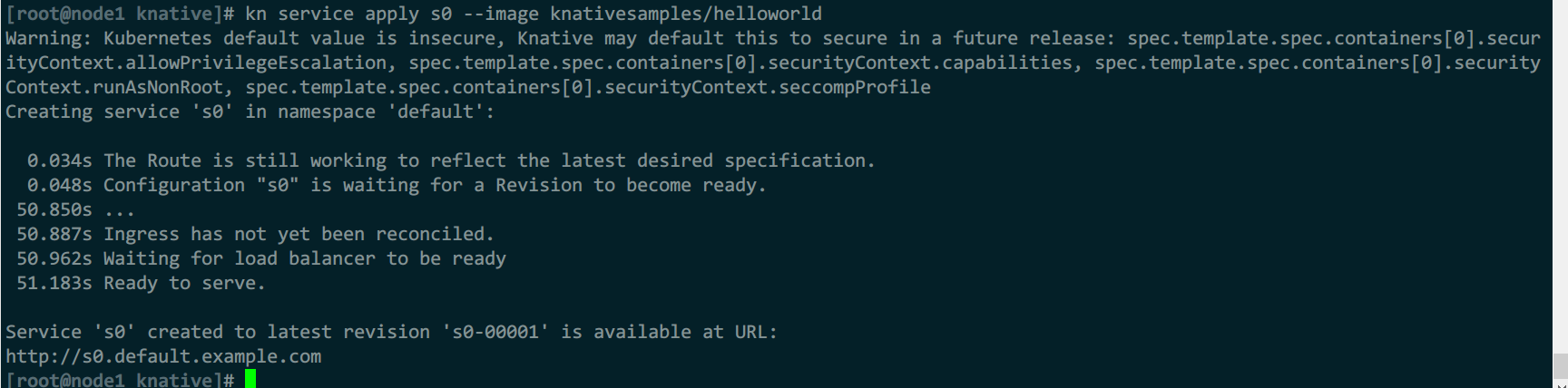

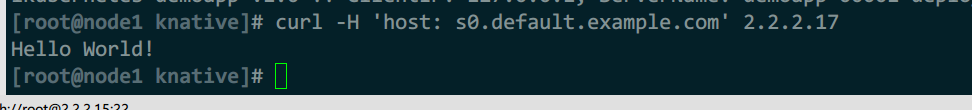

9)验证

kn service apply s0 --image knativesamples/helloworld

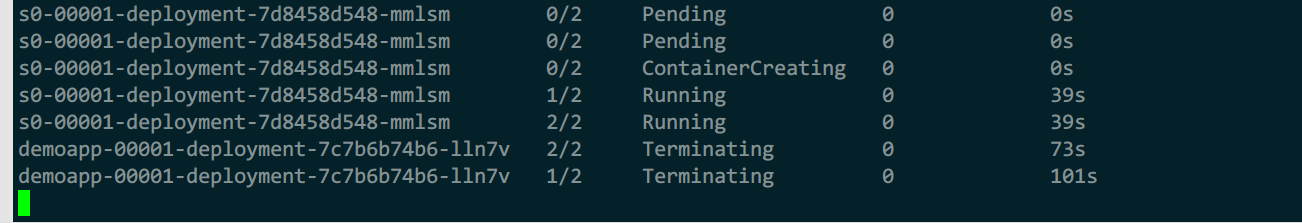

#等待s0容器完全停止后再访问,能够拉起一个处理请求就是正常的

curl -H 'host: s0.default.example.com' 2.2.2.17

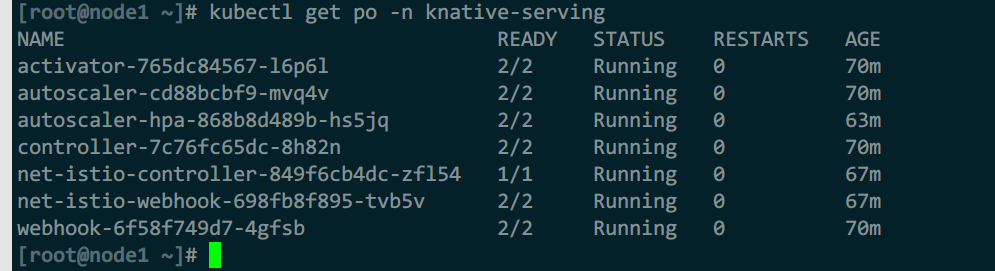

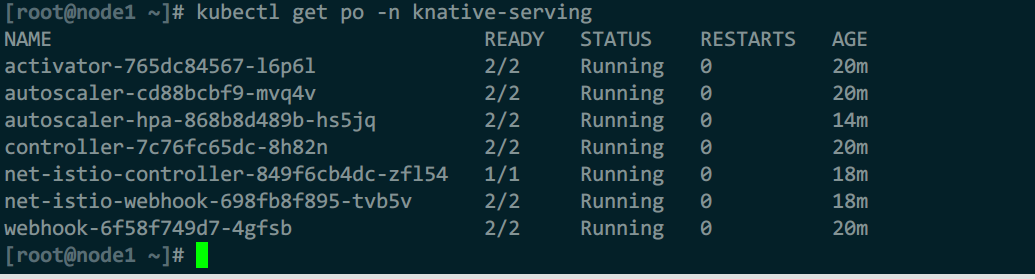

Pods running after installation:

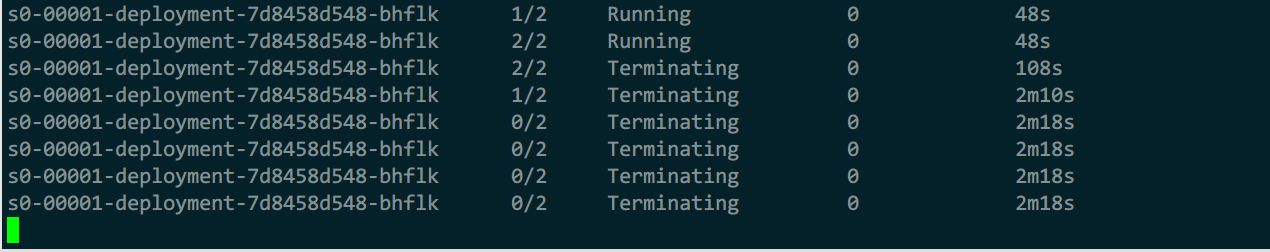

By default, the test pod started with kn is stopped after running for a while (scale to zero). This is expected.

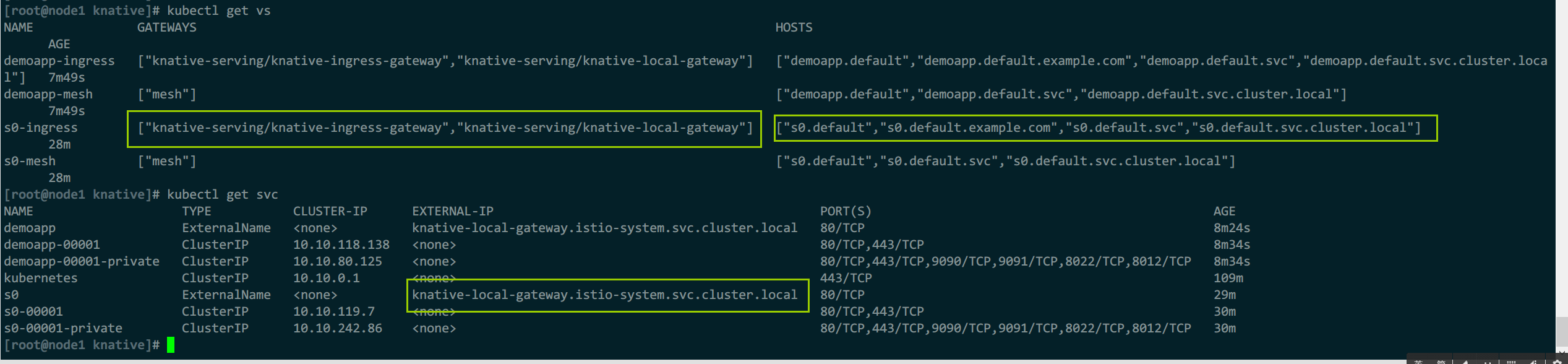

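The service can be reached directly by domain name because Knative automatically creates the svc and the Istio VirtualService (vs) resources for it.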

10) Upgrade

Official documentation: https://knative.dev/docs/install/upgrade/upgrade-installation/

An upgrade can only move up one minor version at a time, for example from 1.11 to 1.12. To cross several versions, upgrade step by step.

```bash
export https_proxy=http://frp1.freefrp.net:16324
wget https://github.com/knative/serving/releases/download/knative-v1.12.3/serving-core.yaml
wget https://github.com/knative/serving/releases/download/knative-v1.12.3/serving-post-install-jobs.yaml
wget -O /bin/kn https://github.com/knative/client/releases/download/knative-v1.12.0/kn-linux-amd64
unset https_proxy
chmod +x /bin/kn
kubectl apply -f serving-core.yaml
kubectl create -f serving-post-install-jobs.yaml
```
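Because each upgrade moves up only one minor version, a jump such as 1.11 to 1.13 means passing through every minor release in between. The hypothetical helper upgrade_path below lists those intermediate steps. It assumes both versions share the same major version and ignores patch numbers. For each printed minor version you would still pick the latest patch release and repeat the download and apply steps above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the minor versions to upgrade through, one per line.
# Usage: upgrade_path FROM TO   (e.g. 1.11 1.13, patch numbers are ignored)
upgrade_path() {
  local from=$1 to=$2
  local major=${from%%.*}
  local from_minor=${from#*.}
  from_minor=${from_minor%%.*}
  local to_minor=${to#*.}
  to_minor=${to_minor%%.*}
  if [ "$major" != "${to%%.*}" ] || [ "$to_minor" -le "$from_minor" ]; then
    echo "unsupported upgrade: $from -> $to" >&2
    return 1
  fi
  local m
  for ((m = from_minor + 1; m <= to_minor; m++)); do
    echo "${major}.${m}"
  done
}
```

Usage: for v in $(upgrade_path 1.11 1.13); do echo "upgrade Serving to the latest $v.x release"; done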

eventing

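Note: several channels and brokers can be deployed and used side by side. If Kafka or RabbitMQ is used, the corresponding service must already be running before Eventing can use it.

Optional components

- channel (message delivery channel): Kafka, in-memory, NATS

- broker: if message delivery does not need one-to-many filtering, the broker can be skipped. Supported: Kafka, mt-channel-based (a broker built directly on a channel), RabbitMQ

- sink: some event sources are not supported natively, so an extra extension has to be deployed to deliver their messages to the corresponding sink

Recommended for production

- mt-broker + kafka-channel

- kafka-broker + kafka-channel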

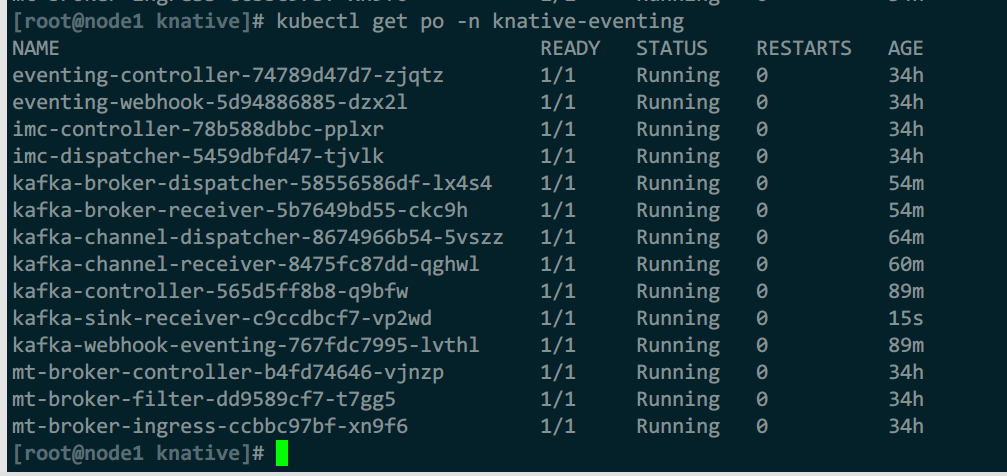

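Pods running after installation

- eventing-controller

- eventing-webhook

- imc-controller: controller for the in-memory channel

- imc-dispatcher: delivers the messages in the channel one-to-many

- mt-broker-controller

- mt-broker-filter: implements the Trigger feature

- mt-broker-ingress: the broker has to be reachable by other components, so this provides its ingress

- kafka-xxx: running once the Kafka components are deployed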

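Deploying Kafka

The Kafka components fetch messages from a Kafka cluster, so a Kafka cluster must already be running. Kafka can be deployed on Kubernetes in two ways:

- YAML: you write the manifests yourself

- operator: operators are provided by third-party open-source projects; Kafka itself does not ship one

Deploying Kafka with the operator

Official site: https://strimzi.io/

Project documentation (minikube): https://strimzi.io/quickstarts/

GitHub: https://github.com/strimzi/strimzi-kafka-operator

Example manifests in the GitHub repository:

- kafka-ephemeral-single.yaml: single node, non-persistent storage (temporary directory)

- kafka-ephemeral.yaml: multiple nodes, non-persistent storage (temporary directory)

- kafka-persistent-single.yaml: single node, persistent storage (requires a StorageClass)

- kafka-persistent.yaml: multiple nodes, persistent storage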

```bash
kubectl create namespace kafka
# Create the Kafka operator
kubectl create -f 'https://strimzi.io/install/latest?namespace=kafka' -n kafka
# Create the ZooKeeper and Kafka cluster
kubectl apply -f https://strimzi.io/examples/latest/kafka/kafka-ephemeral-single.yaml -n kafka
# Start a producer to check that the cluster works
kubectl -n kafka run kafka-producer -ti --image=quay.io/strimzi/kafka:0.39.0-kafka-3.6.1 --rm=true --restart=Never -- bin/kafka-console-producer.sh --bootstrap-server my-cluster-kafka-bootstrap:9092 --topic my-topic
# Start a consumer to check that the cluster works
kubectl -n kafka run kafka-consumer -ti --image=quay.io/strimzi/kafka:0.39.0-kafka-3.6.1 --rm=true --restart=Never -- bin/kafka-console-consumer.sh --bootstrap-server my-cluster-kafka-bootstrap:9092 --topic my-topic --from-beginning
```

Installation

1) Download all manifests

```bash
# Eventing version
version=1.12.2
# eventing-kafka-broker tag: same major.minor, patch - 1
kafka_version=$(echo $version | awk -F'.' '{print $1,$2,($NF-1)}' | tr ' ' '.')
# Enable the proxy
export https_proxy=http://frp1.freefrp.net:16324
# URLs to download: Eventing, channel and broker manifests
url_list=(
  https://github.com/knative/eventing/releases/download/knative-v${version}/eventing-crds.yaml
  https://github.com/knative/eventing/releases/download/knative-v${version}/eventing-core.yaml
  https://github.com/knative/eventing/releases/download/knative-v${version}/in-memory-channel.yaml
  https://github.com/knative/eventing/releases/download/knative-v${version}/mt-channel-broker.yaml
  https://github.com/knative-extensions/eventing-kafka-broker/releases/download/knative-v${kafka_version}/eventing-kafka-controller.yaml
  https://github.com/knative-extensions/eventing-kafka-broker/releases/download/knative-v${kafka_version}/eventing-kafka-channel.yaml
  https://github.com/knative-extensions/eventing-kafka-broker/releases/download/knative-v${kafka_version}/eventing-kafka-broker.yaml
  https://github.com/knative-extensions/eventing-kafka-broker/releases/download/knative-v${kafka_version}/eventing-kafka-source.yaml
  https://github.com/knative-extensions/eventing-kafka-broker/releases/download/knative-v${kafka_version}/eventing-kafka-sink.yaml
)
# Try each download up to 3 times; -O overwrites instead of creating *.1 copies on retry
for url in "${url_list[@]}"; do
  for i in {1..3}; do
    wget -q --show-progress -O "${url##*/}" "$url" && break || echo "Download failed, retry $i: $url"
  done
done
unset https_proxy
```
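The awk one-liner above subtracts 1 from the patch number. That produces an invalid tag such as 1.12.-1 when the Eventing patch version is 0. The sketch below applies the same rule in plain bash but stops at 0. The "patch - 1" rule is the author's choice, and kafka_version_for is a hypothetical helper. Check the eventing-kafka-broker releases page for the tag that actually exists.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive the eventing-kafka-broker tag from the Eventing
# version (same major.minor, patch - 1), never going below patch 0.
# Usage: kafka_version_for MAJOR.MINOR.PATCH
kafka_version_for() {
  local version=$1
  local major=${version%%.*}
  local rest=${version#*.}
  local minor=${rest%%.*}
  local patch=${rest#*.}
  if [ "$patch" -gt 0 ]; then
    patch=$((patch - 1))
  fi
  echo "${major}.${minor}.${patch}"
}
```

Usage: kafka_version=$(kafka_version_for "$version")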

2) Deploy

```bash
wget -O rep-docker-img.sh 'https://files.cnblogs.com/files/blogs/731344/rep-docker-img.sh?t=1704012892&download=true'
sh rep-docker-img.sh
# Deploy Eventing
kubectl apply -f eventing-crds.yaml
kubectl apply -f eventing-core.yaml
# Deploy the IMC channel; this is a test setup, so messages are simply kept in memory
kubectl apply -f in-memory-channel.yaml
# Deploy the mt-broker
kubectl apply -f mt-channel-broker.yaml
```

3) Test

```bash
# Run the official test image, pulled from a mirror reachable in China
kn service apply event --image ikubernetes/event_display --port 8080 --scale-min 1
kn domain create event.hj.com --ref 'ksvc:event'
# Start a pod inside the cluster for testing
kubectl run admin --image alpine -- tail -f /dev/null
kubectl exec -it admin -- sh
# Inside the pod: switch to the USTC mirror and install curl and jq
sed -i 's/dl-cdn.alpinelinux.org/mirrors.ustc.edu.cn/g' /etc/apk/repositories
apk add curl jq
```

#模拟产生事件源,发给测试pod:event

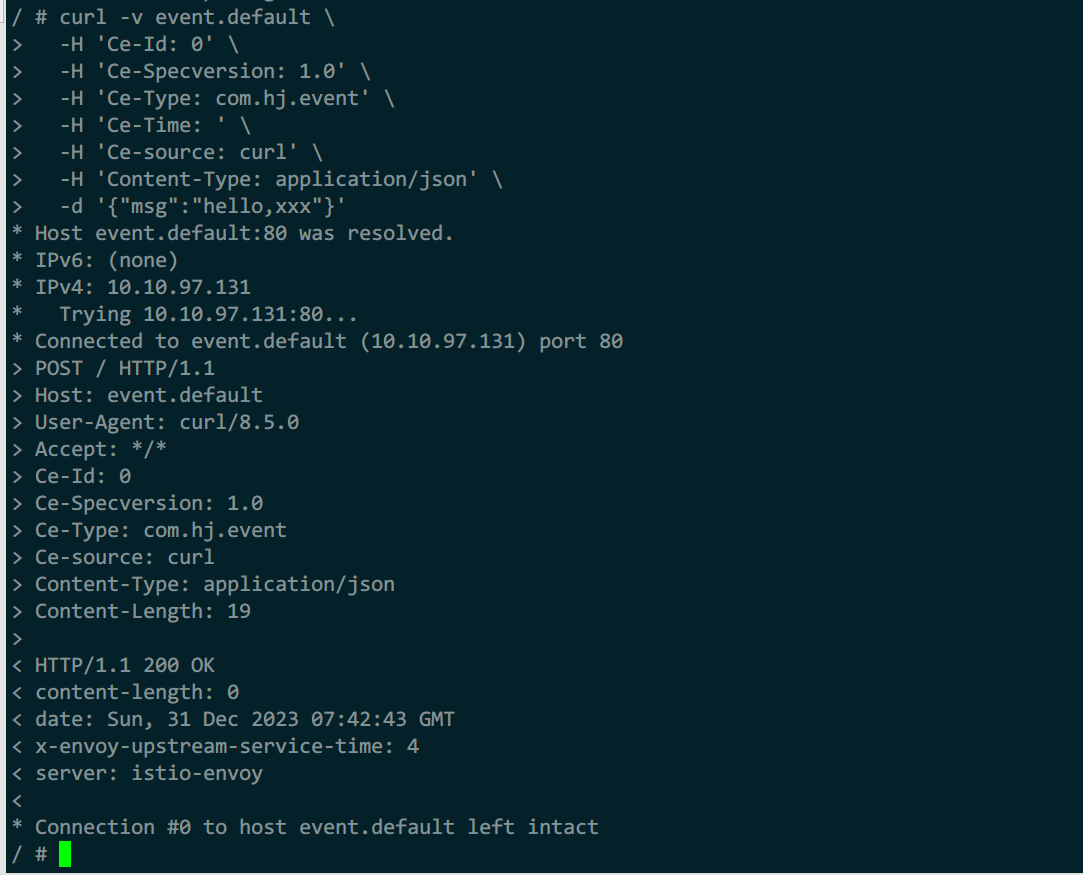

curl -v event.default \

-H 'Ce-Id: 0' \

-H 'Ce-Specversion: 1.0' \

-H 'Ce-Type: com.hj.event' \

-H 'Ce-Time: ' \

-H 'Ce-source: curl' \

-H 'Content-Type: application/json' \

-d '{"msg":"hello,xxx"}'

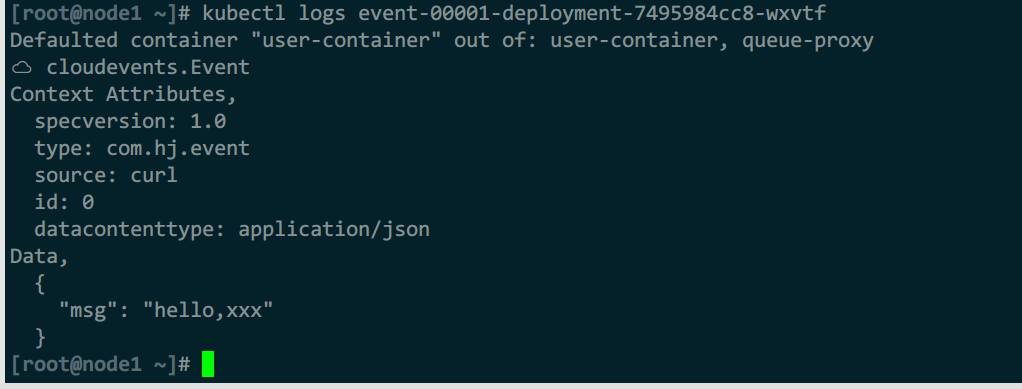

```bash
# Check whether event has received the event
kubectl logs deployments/event-00001-deployment
```

Simulated event generation: the message is received normally and all components are working.
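The curl call above sends the event in CloudEvents binary content mode. The required attributes Ce-Id, Ce-Source, Ce-Specversion and Ce-Type travel as headers, and the payload is the request body. Ce-Time is optional, but when it is sent it must be an RFC 3339 timestamp rather than an empty value. The sketch below wraps that pattern. It assumes a shell with local support, such as the alpine pod's sh or bash. send_ce is a hypothetical helper, and the CURL variable exists only so that the command can be swapped out for inspection.

```bash
# Hypothetical helper: send one CloudEvent (spec 1.0, binary content mode).
# Usage: send_ce URL ID TYPE SOURCE JSON_DATA
# Set CURL to another command (e.g. a function that prints its arguments) to dry-run.
send_ce() {
  local url=$1 id=$2 type=$3 source=$4 data=$5
  ${CURL:-curl} -sS "$url" \
    -H "Ce-Id: $id" \
    -H "Ce-Specversion: 1.0" \
    -H "Ce-Type: $type" \
    -H "Ce-Source: $source" \
    -H "Ce-Time: $(date -u +%Y-%m-%dT%H:%M:%SZ)" \
    -H "Content-Type: application/json" \
    -d "$data"
}
```

Usage inside the admin pod: send_ce event.default 1 com.hj.event curl '{"msg":"hello"}'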

4) Deploy a second channel and broker (Kafka)

```bash
# The manifests were downloaded earlier, so deploy the Kafka channel directly
kubectl apply -f eventing-kafka-controller.yaml
kubectl apply -f eventing-kafka-channel.yaml
# Deploy the Kafka broker
kubectl apply -f eventing-kafka-broker.yaml
# Deploy the Kafka source (optional)
kubectl apply -f eventing-kafka-source.yaml
# Deploy the Kafka sink (optional)
kubectl apply -f eventing-kafka-sink.yaml
```

5) Configure the Kafka components

The cluster-wide defaults remain the in-memory channel and the MT channel-based broker. The default namespace uses KafkaChannel and the Kafka broker instead.

```bash
kubectl apply -f - <<eof
apiVersion: v1
kind: ConfigMap
metadata:
  name: default-ch-webhook
  namespace: knative-eventing
data:
  default-ch-config: |
    clusterDefault:
      apiVersion: messaging.knative.dev/v1
      kind: InMemoryChannel
    namespaceDefaults:
      default:
        apiVersion: messaging.knative.dev/v1beta1
        kind: KafkaChannel
        spec:
          numPartitions: 5
          replicationFactor: 1
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-br-defaults
  namespace: knative-eventing
data:
  default-br-config: |
    clusterDefault:
      brokerClass: MTChannelBasedBroker
      apiVersion: v1
      kind: ConfigMap
      name: config-br-default-channel
      namespace: knative-eventing
    # The namespace-level defaults must be set, otherwise creating a Kafka broker fails; the entries below are a template and can be copied as-is
    namespaceDefaults:
      default: # namespace the setting applies to
        brokerClass: Kafka
        apiVersion: v1
        kind: ConfigMap
        name: kafka-broker-config
        namespace: knative-eventing
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: kafka-broker-config
  namespace: knative-eventing
data:
  bootstrap.servers: my-cluster-kafka-bootstrap.kafka:9092
  default.topic.partitions: "5"
  default.topic.replication.factor: "1"
eof
```

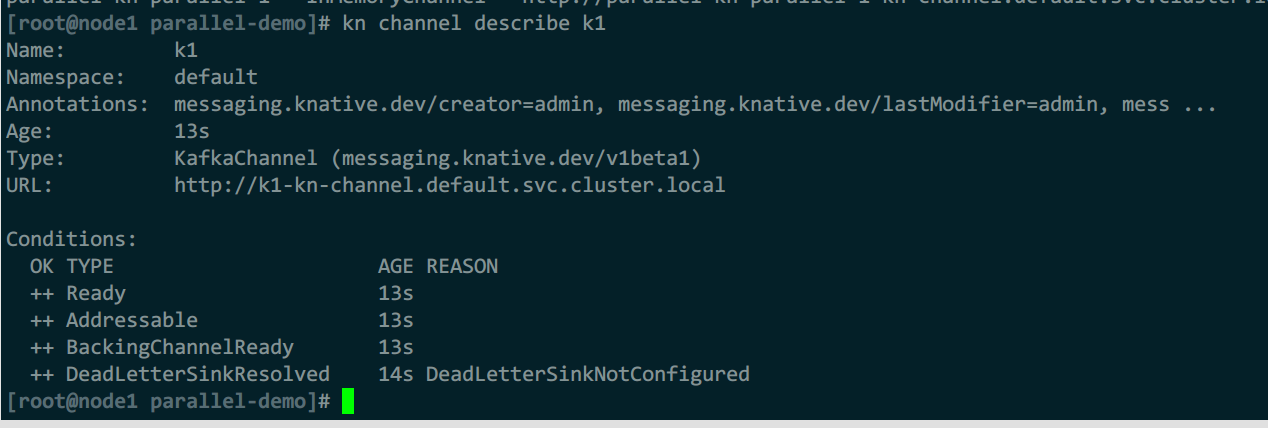
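With namespaceDefaults set for default, a broker created in that namespace picks up the Kafka class automatically. The class can also be set explicitly on each broker. The sketch below assumes the kafka-broker-config ConfigMap created above. The hypothetical helper render_kafka_broker prints a Broker with the eventing.knative.dev/broker.class: Kafka annotation and a spec.config reference to that ConfigMap. The broker name and namespace are illustrative.

```bash
#!/usr/bin/env bash
# Hypothetical helper: Broker that explicitly uses the Kafka broker class and
# the kafka-broker-config ConfigMap in knative-eventing.
# Usage: render_kafka_broker [NAME] [NAMESPACE]
render_kafka_broker() {
  local name=${1:-default} namespace=${2:-default}
  cat <<EOF
apiVersion: eventing.knative.dev/v1
kind: Broker
metadata:
  name: ${name}
  namespace: ${namespace}
  annotations:
    eventing.knative.dev/broker.class: Kafka
spec:
  config:
    apiVersion: v1
    kind: ConfigMap
    name: kafka-broker-config
    namespace: knative-eventing
EOF
}
```

Usage: render_kafka_broker kafka-broker default | kubectl apply -f -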

6) Test whether Kafka works

```bash
kn channel create k1 --type messaging.knative.dev:v1beta1:KafkaChannel
kn channel describe k1
```

7) Upgrade

See the official documentation.