Istio in-cluster traffic management

Istio traffic management

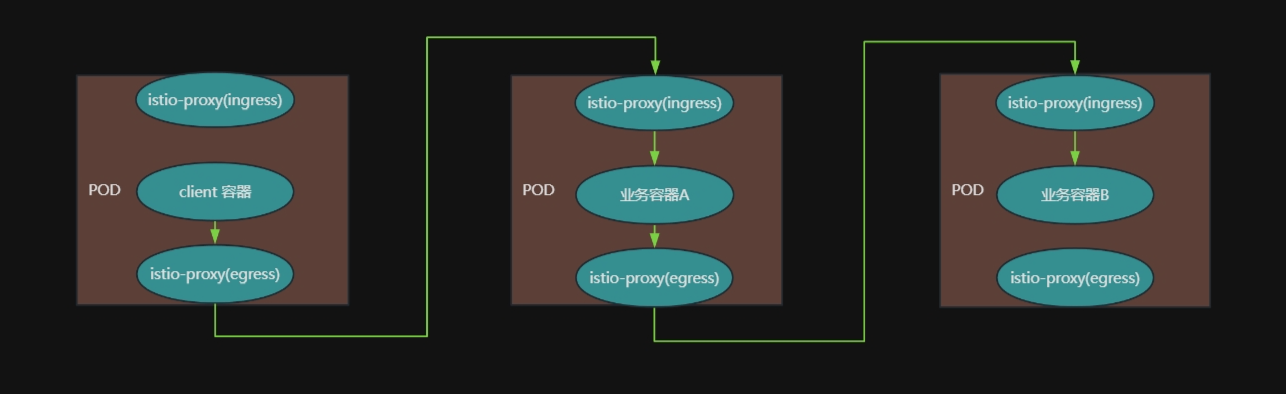

Traffic management is applied mainly on the egress (outbound) side of the client's sidecar.

CRD resources

VirtualService and DestinationRule are the core resources for Istio traffic routing.

List the resources:

```shell
kubectl api-resources --api-group networking.istio.io
```

Validate the configuration:

```shell
istioctl analyze
```

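gateway

Takes effect on the Istio ingress gateway (istio-ingressgateway) and admits traffic arriving from outside or inside the cluster.

Similar to a virtual host inside an Envoy listener.

Configuration:

```shell
kubectl explain gw
```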

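```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: <name>
  namespace: <namespace>   # keep it in the namespace of istio-ingressgateway (istio-system by default) so the selector below finds the gateway pods; an istio-ingressgateway can also be run in its own namespace later (it needs no sidecar injection)
spec:
  selector: {}             # label selector that binds this Gateway to a specific Istio ingress/egress gateway
  servers: [obj]           # listeners and the virtual hosts they serve
  - bind: str              # listen address
    defaultEndpoint: str   # default backend
    hosts: [str]           # host names
    name: str              # name of this server entry
    port:
      name: <name>
      number: <port>
      protocol: <protocol> # a protocol Envoy supports, typically HTTP, HTTPS or TCP
      targetPort: <target port>
    tls:                   # TLS settings
      caCertificates: <CA certificate>
      cipherSuites: [str]  # cipher suites offered to clients
      credentialName: str  # Secret holding the certificate and private key; it must be in the same namespace as the gateway pods (istio-system by default), otherwise it is not found
      httpsRedirect: boolean             # redirect HTTP requests to HTTPS
      maxProtocolVersion: <TLS version>  # TLS_AUTO, TLSV1_0, TLSV1_1, TLSV1_2, TLSV1_3
      minProtocolVersion: <TLS version>
      mode: str            # TLS mode:
        # PASSTHROUGH: TLS is passed through to the backend pod unchanged (TCP proxying)
        # SIMPLE: TLS is terminated between the client and the gateway
        # MUTUAL: mTLS, the client must also present a certificate
        # AUTO_PASSTHROUGH: automatic passthrough, routed by SNI without a VirtualService
        # ISTIO_MUTUAL: the client certificate must be issued by the Istio CA, e.g. when the client is another Istio cluster
      privateKey: <private key>
      serverCertificate: <certificate>
      subjectAltNames: [str]        # accepted subject alternative names of client certificates
      verifyCertificateHash: [str]  # accepted client certificate hashes
      verifyCertificateSpki: [str]  # accepted client certificate SPKI hashes
```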

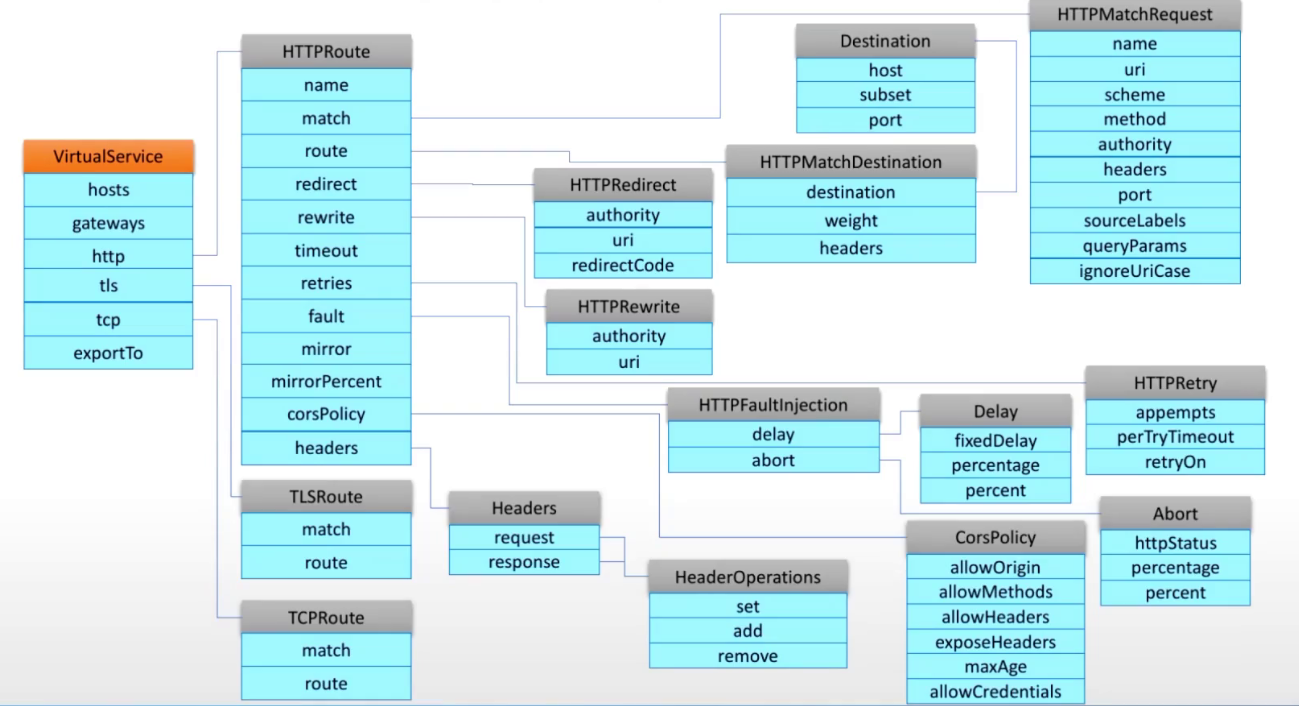
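A minimal sketch of how the TLS fields above fit together, assuming a TLS Secret named `ft-hj-com-cert` (hypothetical name) already exists in istio-system, the namespace of the ingress gateway pods (it could be created with `kubectl create secret tls`). Port 80 only answers with a redirect to HTTPS; port 443 terminates TLS at the gateway in SIMPLE mode. The Gateway name `proxy-gw-tls` is also hypothetical.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: proxy-gw-tls               # hypothetical name
  namespace: istio-system
spec:
  selector:
    app: istio-ingressgateway
  servers:
  # Plain HTTP: every request gets a 301 redirect to HTTPS
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "ft.hj.com"
    tls:
      httpsRedirect: true
  # HTTPS: TLS is terminated at the gateway (SIMPLE mode)
  - port:
      number: 443
      name: https
      protocol: HTTPS
    hosts:
    - "ft.hj.com"
    tls:
      mode: SIMPLE
      credentialName: ft-hj-com-cert   # hypothetical Secret with tls.crt / tls.key
      minProtocolVersion: TLSV1_2
```

A VirtualService bound to this Gateway then routes the decrypted HTTP traffic exactly as it does for plain HTTP.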

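virtual services

Defines the concrete path that traffic takes.

Where it takes effect:

- On the ingress gateway: handles traffic from outside the cluster and exists as an ingress listener.

- On the mesh: handles in-cluster traffic; it is configured on every sidecar Envoy in the mesh and exists as an egress listener.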

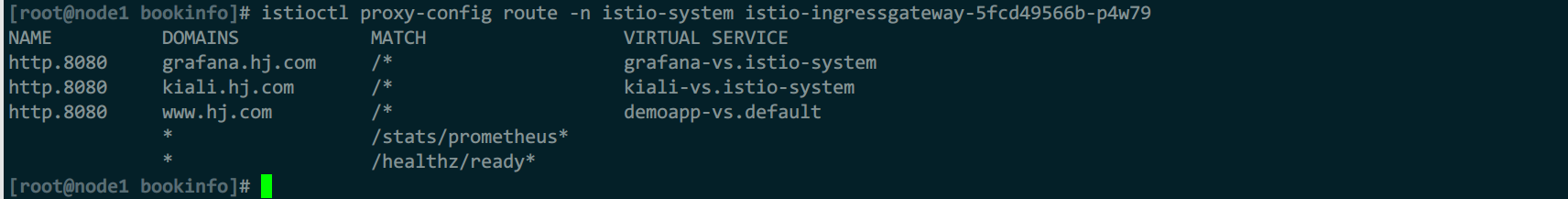

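View the configuration with istioctl:

```shell
istioctl proxy-config route <ingress-gateway-pod> -n istio-system
```

When a sidecar Envoy is associated with a VirtualService, the route listing from istioctl shows it in the VIRTUAL SERVICE column; otherwise that column is empty.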

Configuration

```shell
kubectl explain vs
```

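```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
spec:
  exportTo: [str]
  gateways: [str]          # gateways to bind (allows access from outside the cluster); if omitted, the VS applies to all sidecars in the mesh (in-cluster access only); to allow both, list the gateway and mesh
  - <gateway>              # binds only to that gateway; sidecar Envoys are not configured
  - mesh                   # enables in-mesh access; without mesh the rules do not apply inside the mesh, so include it as the workload requires
  hosts: [str]             # virtual hosts, matching those in the listener; if only one gateway is bound and it serves a single domain, * can be used here
  http: [obj]              # HTTP routes
  - corsPolicy:            # CORS policy
      allowCredentials: boolean
      allowHeaders: [str]
      allowMethods: [str]
      allowOrigin: [str]
      allowOrigins:
      - exact: str
        prefix: str
        regex: <regex>
      exposeHeaders: [str]
      maxAge: str
    delegate:
      name: str
      namespace: str
    directResponse:
      body:
        bytes: str
        string: str
      status: int
    fault:                 # fault injection
      abort:               # abort fault
        grpcStatus: str    # gRPC status returned to affected requests
        http2Error: str
        httpStatus: int    # HTTP status returned to affected requests
        percentage:
          value: number    # share of requests to affect; 100 = all requests
      delay:               # delay fault
        exponentialDelay: <duration>
        fixedDelay: <duration>   # delay to add
        percent: int
        percentage:        # share of requests to delay
          value: number
    headers:               # modify headers of all requests and responses
      request:             # Envoy -> upstream
        add:
          <header>: <value>
        remove: [str]
        set:
          <header>: <value>
      response:            # Envoy -> client
        add:
          <header>: <value>
        remove: [str]
        set:
          <header>: <value>
    match:                 # match conditions
    - authority:
        exact: str
        prefix: str
        regex: <regex>
      gateways: [str]      # gateways to match; mesh = in-mesh traffic, other values are custom Gateway names
      headers:             # header matching
        <header>:          # header name; use exactly one of the three match types
          exact: str       # exact match
          prefix: str      # prefix match
          regex: <regex>   # regex match
      ignoreUriCase: boolean
      method:
        exact: str
        prefix: str
        regex: <regex>
      name: str
      port: int            # port to match
      queryParams:
        <param>:
          exact: str
          prefix: str
          regex: <regex>
      scheme:
        exact: str
        prefix: str
        regex: <regex>
      sourceLabels: {}
      sourceNamespace: str
      statPrefix: str
      uri:                 # URI matching
        exact: str         # exact match
        prefix: str        # prefix match
        regex: <regex>
      withoutHeaders:
        <header>:
          exact: str
          prefix: str
          regex: <regex>
    route:                 # routing rules
    - destination:         # destination endpoint
        # If the VS is not in the same namespace as the Service receiving the traffic, write the full name, otherwise routing fails, e.g.: kiali.i
        host: str          # upstream cluster receiving the traffic: a Service in Kubernetes, or a pod IP. In egress setups with mesh matching, the istio-egressgateway Service must be written in full (service + namespace + cluster suffix), e.g. istio-egressgateway.istio-system.svc.cluster.local, otherwise the VS does not resolve correctly
        port:
          number: int
        subset: str        # cluster subset
      headers:             # modify headers of matched requests
        request:
          add:
            <header>: <value>
          remove: [str]
          set:
            <header>: <value>
        response:
          add: {}
          remove: [str]
          set: {}
      weight: <weight>
    mirror:                # traffic mirroring
      host: str            # upstream cluster (Service) that receives the shadow traffic
      port:
        number: int
      subset: str          # cluster subset
    mirrorPercent: int     # mirroring ratio
    mirrorPercentage:
      value: number
    mirror_percent: int
    name: str
    redirect:
      authority: str
      derivePort: str
      port: int
      redirectCode: int
      scheme: str
      uri: str
    retries:               # retry policy
      attempts: int        # number of retries
      perTryTimeout: 1s    # timeout for each attempt (1s here)
      retryOn: str         # retry conditions, same as Envoy's retry_on; separate multiple values with ","; plain status codes such as 503 can also be listed
        # 5xx: any 5xx response, or no response at all
        # gateway-error: 502, 503 or 504
        # connect-failure: TCP connection failure
        # refused-stream: upstream reset the stream with REFUSED_STREAM
        # retriable-4xx: retriable 4xx responses (409)
        # retriable-status-codes: explicitly listed status codes
        # reset: upstream did not respond (disconnect, reset, read timeout)
        # retriable-headers: responses carrying configured retriable headers
        # envoy-ratelimited: responses rate-limited by Envoy
      retryRemoteLocalities: boolean
    rewrite:
      authority: str
      uri: str
    timeout: <duration>    # request timeout
  tcp: [obj]
  - match: [obj]
    - destinationSubnets: [str]
      gateways: [str]
      port: int
      sourceLabels: {}
      sourceNamespace: str
      sourceSubnet: str
    route: [obj]
    - destination:
        host: str
        port:
          number: <port>
        subset: str
      weight: <weight>
  tls: [obj]
  - match: [obj]
    - destinationSubnets: [str]
      gateways: [str]
      port: int
      sniHosts: [str]
      sourceLabels: {}
      sourceNamespace: str
    route: [obj]
    - destination:
        host: str
        port:
          number: <port>
        subset: str
      weight: <weight>
```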

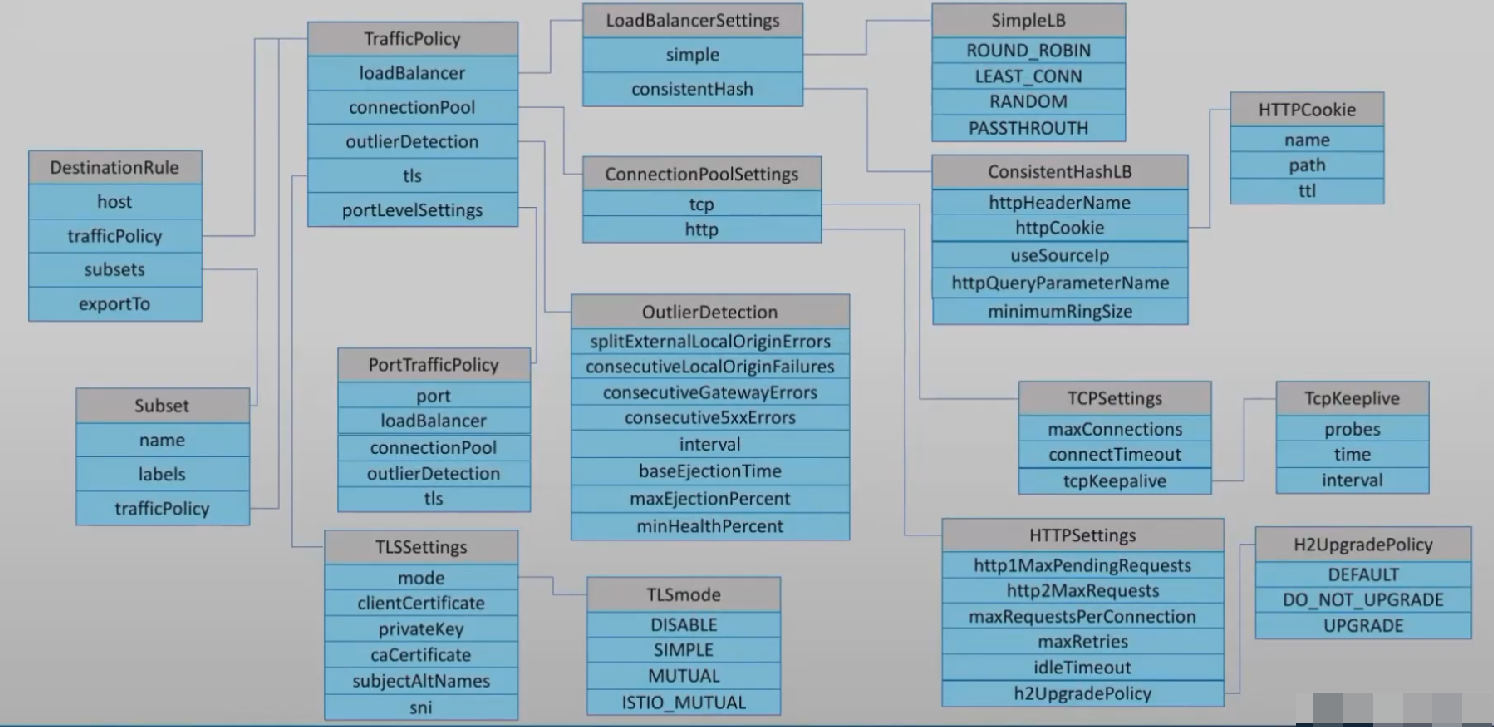
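To illustrate the egress note on `destination.host` in the schema above, a sketch of the usual two-step egress route. It assumes a ServiceEntry already registers the external host `example.com` and that a Gateway named `egress-gw` exists in istio-system and selects the istio-egressgateway pods; both names and the VirtualService name are hypothetical. The first rule runs in every sidecar (`mesh`) and sends the traffic to the egress gateway by its fully qualified Service name; the second runs in the egress gateway and forwards the traffic to the external host.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: example-via-egress         # hypothetical name
spec:
  hosts:
  - example.com                    # hypothetical external host, assumed to be defined in a ServiceEntry
  gateways:
  - istio-system/egress-gw         # hypothetical egress Gateway
  - mesh
  http:
  # Step 1, in the sidecars: send traffic for example.com to the egress gateway
  - match:
    - gateways:
      - mesh
      port: 80
    route:
    - destination:
        host: istio-egressgateway.istio-system.svc.cluster.local   # fully qualified Service name
        port:
          number: 80
  # Step 2, in the egress gateway: forward the traffic to the external host
  - match:
    - gateways:
      - istio-system/egress-gw
      port: 80
    route:
    - destination:
        host: example.com
        port:
          number: 80
```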

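destination rules

Configures how traffic is distributed within the upstream cluster: load-balancing algorithm, connection pool, circuit breaking and so on. It corresponds to an Envoy cluster.

Configuration

```shell
kubectl explain dr
```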

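```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: kiali-dr
  namespace: istio-system
spec:
  exportTo: [str]          # namespaces the rule is exported to; if omitted, it is exported to all namespaces
  host: str                # upstream cluster (the Service)
  subsets:                 # cluster subsets
  - labels: {}
    name: str
    trafficPolicy:         # traffic policy for this subset only; if not defined, the top-level policy is inherited
      connectionPool:      # connection pool, limits connections; excess requests should be handled together with the circuit breaker
        http:              # HTTP connection pool
          h2UpgradePolicy: str
          http1MaxPendingRequests: int   # max requests queued while waiting for a connection
          http2MaxRequests: int          # max concurrent requests to the upstream
          idleTimeout: str               # idle timeout
          maxRequestsPerConnection: int  # max requests per connection
          maxRetries: int
          useClientProtocol: boolean
        tcp:               # TCP connection pool
          connectTimeout: str            # connect timeout
          maxConnectionDuration: str
          maxConnections: int            # max connections
          tcpKeepalive:                  # TCP keepalive
            interval: str                # interval between probes
            probes: int
            time: str                    # idle time before probes start
      loadBalancer:        # load balancing
        consistentHash:    # consistent hashing
          httpCookie:      # hash on a cookie
            name: str
            path: str
            ttl: str
          httpHeaderName: str            # hash on a request header
          httpQueryParameterName: str    # hash on a query parameter
          maglev:                        # Maglev hashing
            tableSize: int
          minimumRingSize: int           # minimum hash-ring size
          ringHash:                      # ring hash
            minimumRingSize: int
          useSourceIp: boolean
        localityLbSetting:
          distribute:
          - from: str
            to: {}
          enabled: boolean
          failover:
          - from: str
            to: str
          failoverPriority: [str]
        simple: <algorithm>  # simple algorithms:
          # ROUND_ROBIN: round robin
          # LEAST_CONN: least connections
          # RANDOM: random
          # PASSTHROUGH: pass the connection straight to the endpoint the caller asked for
        warmupDurationSecs: str
      outlierDetection:    # outlier detection
        baseEjectionTime: str          # base ejection time; ejection duration = base time x number of times the host has been ejected (hosts are restored on the next detection sweep, so a host may stay out up to one interval longer)
        consecutive5xxErrors: int      # eject after n consecutive 5xx errors
        consecutiveErrors: int
        consecutiveGatewayErrors: int  # consecutive gateway errors (502, 503, 504)
        consecutiveLocalOriginFailures: int
        interval: 1m                   # detection interval
        maxEjectionPercent: int        # max share of hosts that may be ejected
        minHealthPercent: int          # ejection is disabled when the healthy share drops below this
        splitExternalLocalOriginErrors: boolean
      portLevelSettings:   # per-port settings
      - connectionPool:
          http:
            h2UpgradePolicy: str
            http1MaxPendingRequests: int
            http2MaxRequests: int
            idleTimeout: str
            maxRequestsPerConnection: int
            maxRetries: int
            useClientProtocol: boolean
          tcp:
            connectTimeout: str
            maxConnectionDuration: str
            maxConnections: int
            tcpKeepalive:
              interval: str
              probes: int
              time: str
        loadBalancer: obj      # see loadBalancer above
        outlierDetection: obj  # see outlierDetection above
        port:
          number: int
        tls:               # TLS from the client to the upstream cluster (the client side of mTLS)
          caCertificates: str       # CA certificate
          clientCertificate: str    # client certificate
          privateKey: str           # private key
          credentialName: str       # Secret with the client key, certificate and CA certificate; gateways only
          insecureSkipVerify: boolean  # skip CA verification of the server certificate; requires the global setting VerifyCertAtClient to be enabled
          mode: str        # TLS mode:
            # DISABLE: no TLS
            # SIMPLE: one-way TLS, only the server certificate is verified; set insecureSkipVerify or caCertificates, because the client cannot recognize Istio's self-signed certificate
            # MUTUAL: mutual TLS with explicitly specified certificates, used outside the mesh; set caCertificates (or insecureSkipVerify), clientCertificate and privateKey
            # ISTIO_MUTUAL: used inside the mesh; Istio manages the certificates automatically
          sni: str
          subjectAltNames: [str]    # the server certificate's subject must match at least one entry
      tls: obj             # see tls above
      tunnel:
        protocol: str
        targetHost: str
        targetPort: int
  trafficPolicy:           # top-level traffic policy for all subsets: connection pool, load balancing, outlier detection (circuit breaking), TLS
    connectionPool:        # connection pool
      http:
        h2UpgradePolicy: str
        http1MaxPendingRequests: int
        http2MaxRequests: int
        idleTimeout: str
        maxRequestsPerConnection: int
        maxRetries: int
        useClientProtocol: boolean
      tcp:
        connectTimeout: str
        maxConnectionDuration: str
        maxConnections: int
        tcpKeepalive:
          interval: str
          probes: int
          time: str
    loadBalancer: obj        # load-balancing algorithm, see loadBalancer above
    outlierDetection: obj    # outlier ejection, see outlierDetection above
    portLevelSettings: obj   # per-port settings, see portLevelSettings above
    tls: obj                 # see tls above
    tunnel: obj              # see tunnel above
  workloadSelector:
    matchLabels: {}
```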
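A sketch of the TLS and per-port settings from the schema above, applied to the `backend` Service from the templates below (port 8082, label version v3.6). The resource name `backend-dr`, the subset name and the connection limit are illustrative. `ISTIO_MUTUAL` lets Istio supply the certificates, and according to the Istio documentation, port-level settings override the destination-level ones for traffic on that port.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: backend-dr                 # hypothetical name
spec:
  host: backend
  trafficPolicy:
    tls:
      mode: ISTIO_MUTUAL           # in-mesh mTLS with Istio-managed certificates
    portLevelSettings:
    - port:
        number: 8082
      connectionPool:
        tcp:
          maxConnections: 50       # illustrative limit, applies to port 8082 only
      loadBalancer:
        simple: ROUND_ROBIN
  subsets:
  - name: v36                      # hypothetical subset name
    labels:
      version: v3.6
```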

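Traffic routing flow:

The figure shows access from outside the Kubernetes cluster; in-cluster traffic does not pass through the gateway.

In-mesh traffic

Examples

Test pod templates

All examples are based on these templates.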

1) Service

```shell
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  labels:
    app: demoapp
  name: demoapp
spec:
  ports:
  - name: http-80-80
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: demoapp
  type: ClusterIP
EOF
```

2) Backend application templates

demoapp Deployments

```shell
cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: demoapp
    version: v1.0
  name: demoapp-10
spec:
  replicas: 2
  selector:
    matchLabels:
      app: demoapp
      version: v1.0
  template:
    metadata:
      labels:
        app: demoapp
        version: v1.0
    spec:
      containers:
      - image: ikubernetes/demoapp:v1.0
        name: demoapp
        ports:
        - containerPort: 80
          name: web
          protocol: TCP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: demoapp
    version: v1.1
  name: demoapp-11
spec:
  replicas: 2
  selector:
    matchLabels:
      app: demoapp
      version: v1.1
  template:
    metadata:
      labels:
        app: demoapp
        version: v1.1
    spec:
      containers:
      - image: ikubernetes/demoapp:v1.1
        name: demoapp
        ports:
        - containerPort: 80
          name: web
          protocol: TCP
EOF
```

backend Deployment and Service

```shell
cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: backend
    version: v3.6
  name: backend-36
spec:
  progressDeadlineSeconds: 600
  replicas: 2
  selector:
    matchLabels:
      app: backend
      version: v3.6
  template:
    metadata:
      labels:
        app: backend
        version: v3.6
    spec:
      containers:
      - image: ikubernetes/gowebserver:v0.1.0
        imagePullPolicy: IfNotPresent
        name: gowebserver
        env:
        - name: "SERVICE_NAME"
          value: "backend"
        - name: "SERVICE_PORT"
          value: "8082"
        - name: "SERVICE_VERSION"
          value: "v3.6"
        ports:
        - containerPort: 8082
          name: web
          protocol: TCP
        resources:
          limits:
            cpu: 50m
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: backend
  name: backend
spec:
  ports:
  - name: http-web
    port: 8082
    protocol: TCP
    targetPort: 8082
  selector:
    app: backend
    version: v3.6
EOF
```

3) proxy templates (frontend)

Flow: client --> proxy (frontend) --> demoapp or backend (backends)

```shell
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  labels:
    app: proxy
  name: proxy
spec:
  ports:
  - name: http-80-8080
    port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: proxy
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: proxy
  name: proxy
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  selector:
    matchLabels:
      app: proxy
  template:
    metadata:
      labels:
        app: proxy
    spec:
      containers:
      - env:
        - name: PROXYURL
          value: http://demoapp:80
        image: ikubernetes/proxy:v0.1.1
        imagePullPolicy: IfNotPresent
        name: proxy
        ports:
        - containerPort: 8080
          name: web
          protocol: TCP
        resources:
          limits:
            cpu: 50m
EOF
```

4) Run a client container

```shell
kubectl run admin --image alpine -- tail -f /dev/null
# Switch the Alpine package mirror to mirrors.ustc.edu.cn
kubectl exec -it admin -- sed -i 's/dl-cdn.alpinelinux.org/mirrors.ustc.edu.cn/g' /etc/apk/repositories
```

5) Other templates

DR subsets and VS subset-routing template

Used by the canary release in Example 2. The later examples also depend on these subset definitions, so the template is listed here in advance: if you skip Example 2 but follow the later examples, apply it first.

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: demoapp-dr
spec:
  host: demoapp
  subsets:
  - name: v10
    labels:
      version: v1.0
  - name: v11
    labels:
      version: v1.1
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp-vs
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    route:
    - destination:
        host: demoapp
        subset: v11
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
EOF
```

Ingress gateway template

Exposes the application outside the Kubernetes cluster. It is part of the configuration in Examples 1 and 2 (canary release), and several later examples use this gateway, so it is listed here in advance. If you skip Examples 1 and 2 and only run the others, apply it first.

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: proxy-gw
  namespace: istio-system
spec:
  selector:
    app: istio-ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - 'ft.hj.com'
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: proxy-vs
spec:
  hosts:
  - 'ft.hj.com'
  - 'proxy'
  gateways:
  - istio-system/proxy-gw   # traffic from outside the cluster
  - mesh                    # traffic from inside the mesh
  http:
  - name: default
    route:
    - destination:
        host: proxy
EOF
```

6) Cleanup

```shell
kubectl delete svc -l app=demoapp
kubectl delete svc -l app=proxy
kubectl delete svc -l app=backend
kubectl delete deploy -l app=demoapp
kubectl delete deploy -l app=proxy
kubectl delete deploy -l app=backend
kubectl delete vs demoapp-vs proxy-vs
kubectl delete dr demoapp-dr
kubectl delete gw -n istio-system proxy-gw
```

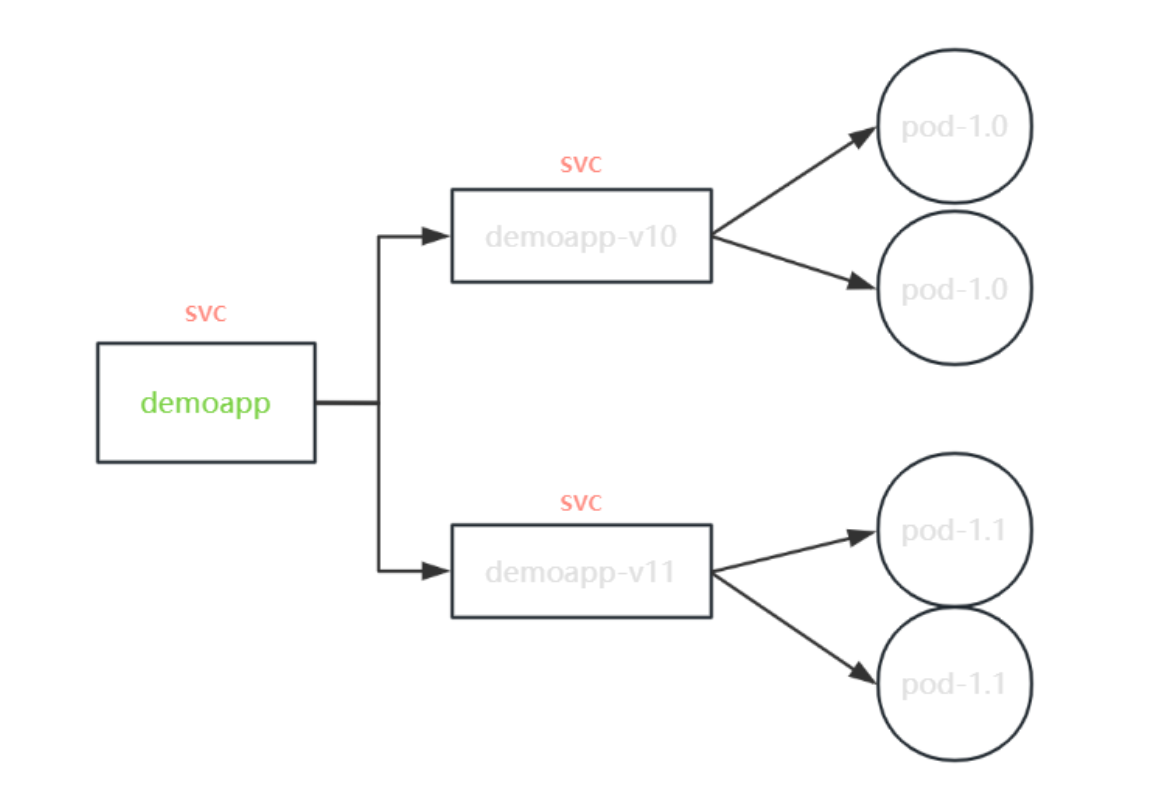

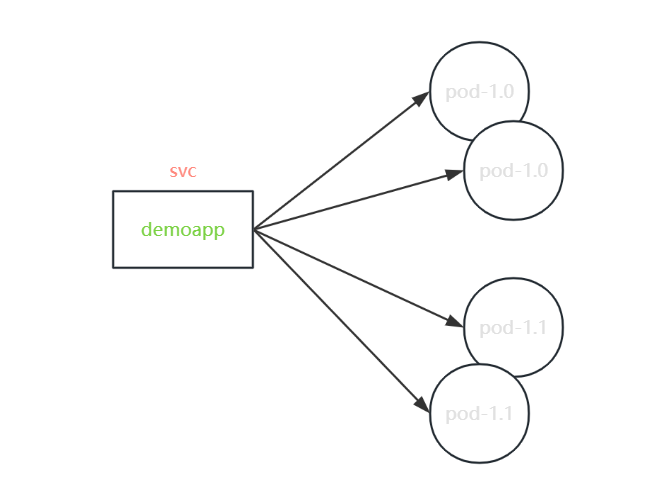

Example 1: basic canary release

In a plain Kubernetes cluster, this approach (one Service per version) is usually combined with an Ingress to do canary releases; Istio can do it as well.

1) Run the pods from the templates above

2) Create a separate Service for each version

```shell
cat <<EOF | kubectl apply -f -
# Service for v1.0
apiVersion: v1
kind: Service
metadata:
  labels:
    app: demoapp
  name: demoapp-10-svc
spec:
  ports:
  - name: http-80-80
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: demoapp
    version: v1.0
  type: ClusterIP
---
# Service for v1.1
apiVersion: v1
kind: Service
metadata:
  labels:
    app: demoapp
  name: demoapp-11-svc
spec:
  ports:
  - name: http-80-80
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: demoapp
    version: v1.1
  type: ClusterIP
EOF
```

3) Create the VirtualService

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  labels:
    app: demoapp-vs
  name: demoapp-vs
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    route:
    - destination:
        host: demoapp-11-svc
  - name: default
    route:
    - destination:
        host: demoapp-10-svc
EOF
```

4) Create the ingress gateway to expose the service outside the cluster

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: proxy-gw
  namespace: istio-system
spec:
  selector:
    app: istio-ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - 'ft.hj.com'
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: proxy-vs
spec:
  hosts:
  - 'ft.hj.com'
  - 'proxy'
  gateways:
  - istio-system/proxy-gw   # traffic from outside the cluster
  - mesh                    # traffic from inside the mesh
  http:
  - name: default
    route:
    - destination:
        host: proxy
EOF
```

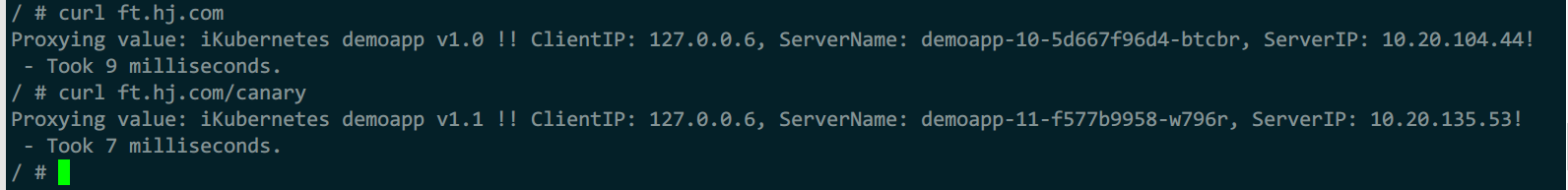

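5) Test

```shell
# Analyze the Istio configuration
istioctl analyze

# Access the frontend and check that canary routing works
kubectl exec -it admin -- sh
apk add curl
curl proxy
curl proxy/canary

# Through the ingress gateway (2.2.2.17 in this environment)
curl -H 'host: ft.hj.com' 2.2.2.17
curl -H 'host: ft.hj.com' 2.2.2.17/canary
```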

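Example 2: advanced canary release

Example 1 is not really Istio/Envoy style; it copies the nginx/Service pattern. The Istio way is to do canary releases with Envoy's cluster subsets.

Match on the URI and route to a cluster subset (subsets map directly to application versions, which enables more advanced features).

Note: this still uses the templates; you can delete everything and start over, or delete only the resources below and continue.

1) Delete the two per-version Services and the VirtualService

```shell
kubectl delete svc demoapp-10-svc demoapp-11-svc
kubectl delete vs demoapp-vs
```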

2) Create the DestinationRule and VirtualService

The DR defines the cluster subsets; the VS routes to a subset based on the URL.

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: demoapp-dr
spec:
  host: demoapp
  subsets:
  - name: v10
    labels:
      version: v1.0
  - name: v11
    labels:
      version: v1.1
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp-vs
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    route:
    - destination:
        host: demoapp
        subset: v11
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
EOF
```

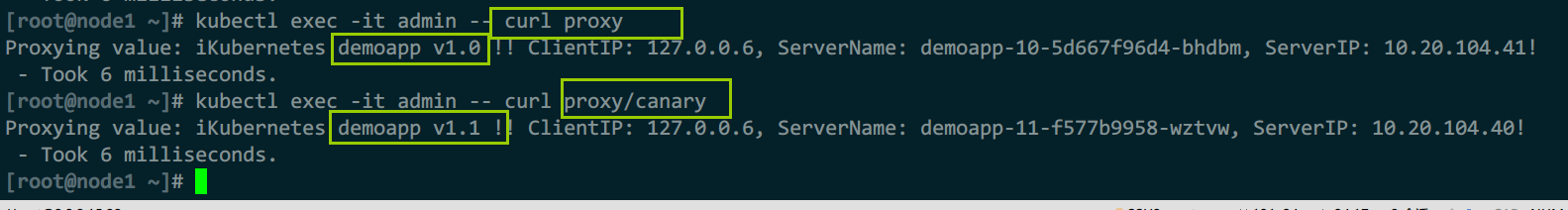

3) Test

```shell
curl -H 'host: ft.hj.com' 2.2.2.17
curl -H 'host: ft.hj.com' 2.2.2.17/canary
```

Example 3: URL redirect

1) proxy-vs

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: proxy-vs
spec:
  hosts:
  - proxy
  http:
  - name: redirect
    match:
    - uri:
        prefix: "/backend"
    redirect:            # answer with a redirect to http://backend:8082/
      uri: /
      authority: backend
      port: 8082
  - name: default
    route:
    - destination:
        host: proxy
EOF
```

2) demoapp-vs

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp-vs
spec:
  hosts:
  - demoapp
  http:
  - name: rewrite
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    route:
    - destination:
        host: demoapp
        subset: v11
  - name: redirect
    match:
    - uri:
        prefix: "/backend"
    redirect:
      uri: /
      authority: backend
      port: 8082
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
EOF
```

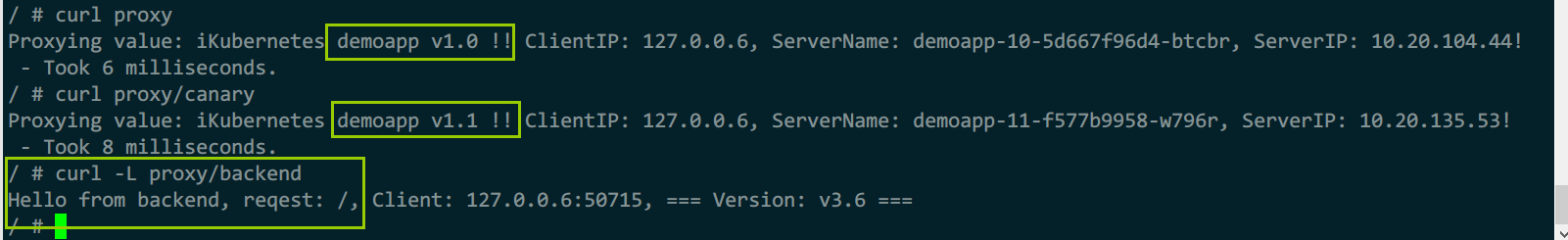

3) Test

```shell
kubectl exec -it admin -- sh
curl proxy
curl proxy/canary
# -L follows the redirect to backend:8082
curl -L proxy/backend
```

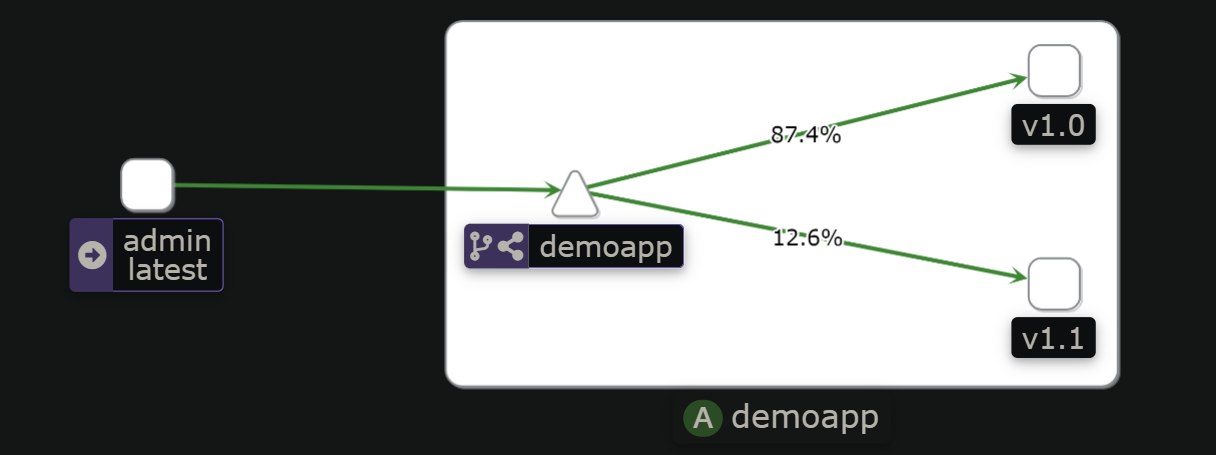

Example 4: traffic splitting

Split traffic with weight-based routing.

1) Update the VirtualService

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp-vs
spec:
  hosts:
  - demoapp
  http:
  - name: weight-based-routing
    route:
    - destination:
        host: demoapp
        subset: v10
      weight: 90
    - destination:
        host: demoapp
        subset: v11
      weight: 10
EOF
```

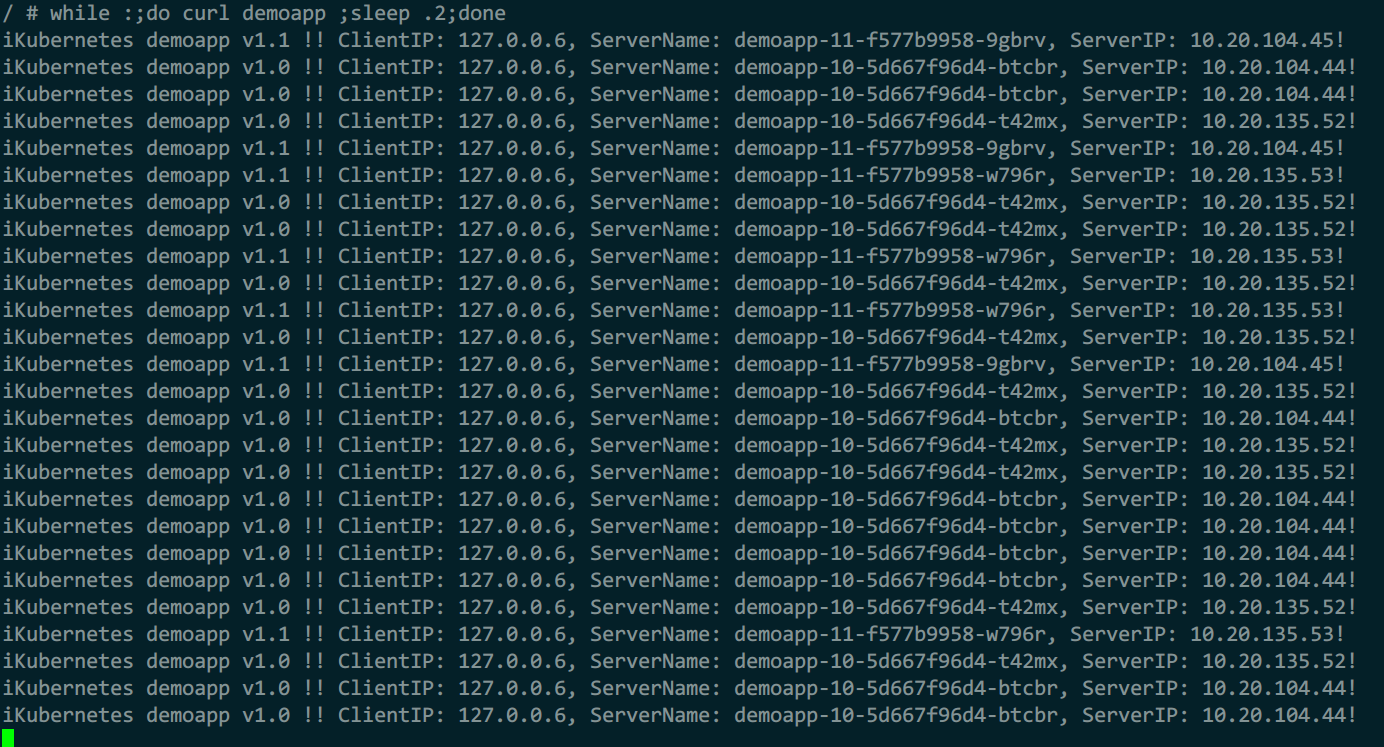

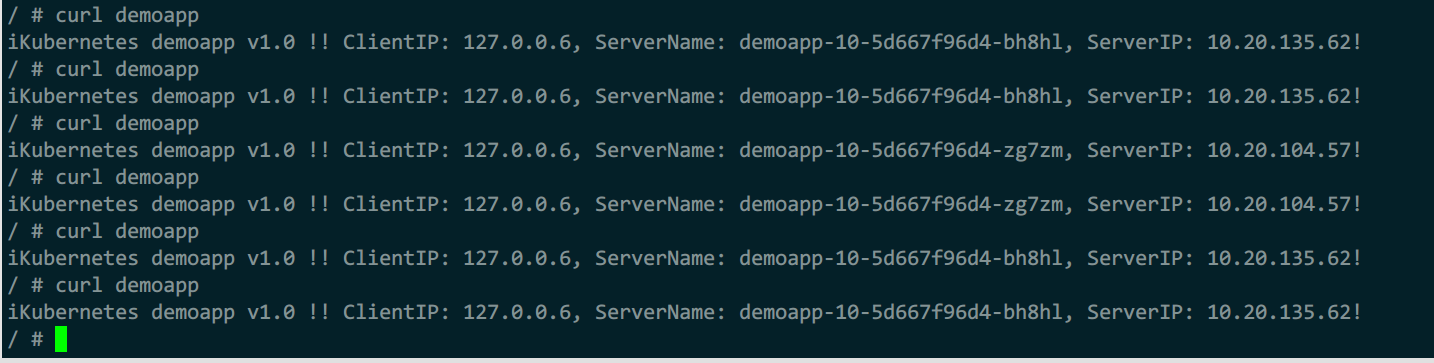

2) Test

```shell
kubectl exec -it admin -- sh
while :; do curl demoapp; sleep .2; done
```

Traffic is split roughly 9:1 between v1.0 and v1.1.

Example 5: routing by request header

1) Update the VirtualService

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp-vs
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - headers:
        x-canary:
          exact: "true"
    route:
    - destination:
        host: demoapp
        subset: v11
      headers:             # header changes for this destination only
        request:
          set:
            User-Agent: Chrome
        response:
          add:
            x-canary: "true"
  - name: default
    headers:               # header changes for the whole route
      response:
        add:
          X-Envoy: test
    route:
    - destination:
        host: demoapp
        subset: v10
EOF
```

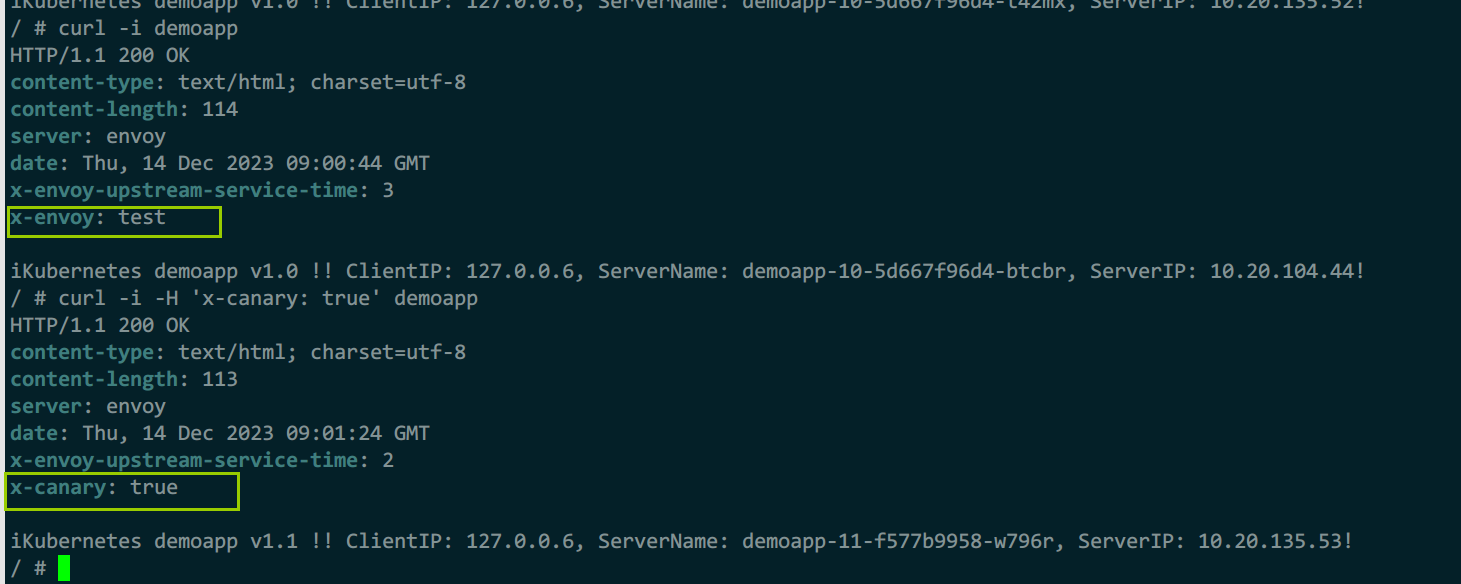

2) Test

```shell
kubectl exec -it admin -- sh
curl -i demoapp
curl -i -H 'x-canary: true' demoapp
```
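Example 5 matches a single header. When a rule needs several conditions, Istio evaluates the fields inside one `match` entry as AND and separate entries as OR. A sketch building on Example 5; the header `x-user-group` and its value are hypothetical, and `regex` uses RE2 syntax and must match the whole header value.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp-vs
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    # Entry 1: x-canary is "true" or "yes" AND the method is GET
    - headers:
        x-canary:
          regex: "true|yes"
      method:
        exact: GET
    # Entry 2 (OR): any request from the hypothetical "internal" group
    - headers:
        x-user-group:
          exact: internal
    route:
    - destination:
        host: demoapp
        subset: v11
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
```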

Example 6: fault injection and retries

1) Configure demoapp-vs

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp-vs
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    route:
    - destination:
        host: demoapp
        subset: v11
    fault:
      abort:             # 50% of /canary requests get status 555
        percentage:
          value: 50
        httpStatus: 555
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
    fault:
      delay:             # 50% of the other requests are delayed by 3s
        percentage:
          value: 50
        fixedDelay: 3s
EOF
```

2) Configure proxy-vs

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: proxy-vs
spec:
  hosts:
  - "ft.hj.com"
  - "proxy"
  - "proxy.default.svc"
  gateways:
  - istio-system/proxy-gw
  - mesh
  http:
  - name: default
    route:
    - destination:
        host: proxy
    timeout: 1s          # overall request timeout
    retries:
      attempts: 2
      perTryTimeout: 1s
      retryOn: 5xx,connect-failure,refused-stream
EOF
```

3) Test

```shell
kubectl exec -it admin -- sh
curl -i demoapp/canary
```
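Envoy's route `timeout` bounds the whole request, retries included. In Example 6, `timeout: 1s` equals `perTryTimeout: 1s`, so an attempt that hits the 3s injected delay already consumes the entire budget and leaves no time for a retry. A sketch of proxy-vs with a larger overall budget; the 3s value is illustrative.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: proxy-vs
spec:
  hosts:
  - "ft.hj.com"
  - "proxy"
  - "proxy.default.svc"
  gateways:
  - istio-system/proxy-gw
  - mesh
  http:
  - name: default
    route:
    - destination:
        host: proxy
    timeout: 3s            # overall budget shared by the first attempt and all retries (illustrative)
    retries:
      attempts: 2
      perTryTimeout: 1s    # each attempt may take at most 1s, leaving time for retries within 3s
      retryOn: 5xx,connect-failure,refused-stream
```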

Example 7: traffic mirroring

1) demoapp-vs.yml

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp-vs
spec:
  hosts:
  - demoapp
  http:
  - name: traffic-mirror
    route:
    - destination:       # live traffic goes to v1.0
        host: demoapp
        subset: v10
    mirror:              # a copy of each request goes to v1.1
      host: demoapp
      subset: v11
EOF
```

2) Test

```shell
while :; do curl proxy; sleep .2; done
```

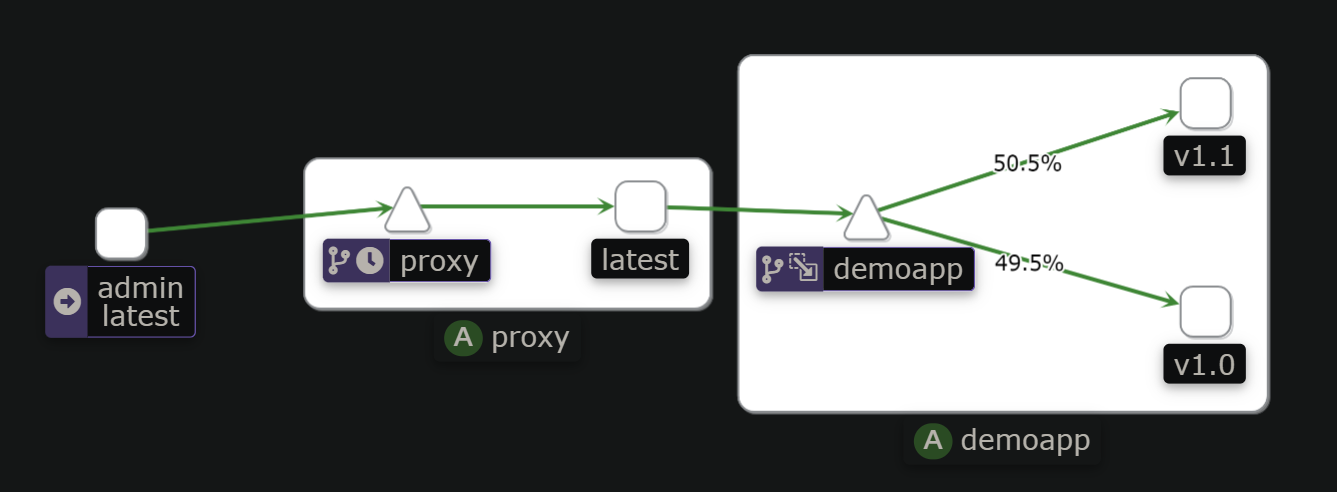
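Example 7 mirrors every request. `mirrorPercentage` limits the share that is copied; the 20% below is illustrative. Envoy sends the copy fire-and-forget (the response from v1.1 is discarded) and appends `-shadow` to the Host/Authority header of the mirrored request, which helps identify shadow traffic in v1.1's logs.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp-vs
spec:
  hosts:
  - demoapp
  http:
  - name: traffic-mirror
    route:
    - destination:
        host: demoapp
        subset: v10
    mirror:
      host: demoapp
      subset: v11
    mirrorPercentage:
      value: 20.0          # copy about 20% of requests to v1.1 (illustrative value)
```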

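Example 8: advanced scheduling with load-balancing algorithms

Load balancing is part of the Envoy cluster configuration, so it is set in the DestinationRule.

1) Configure demoapp-dr.yml

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: demoapp-dr
spec:
  host: demoapp
  trafficPolicy:
    loadBalancer:
      simple: LEAST_CONN           # top-level algorithm
  subsets:
  - name: v10
    labels:
      version: v1.0
    trafficPolicy:
      loadBalancer:
        consistentHash:
          httpHeaderName: X-User   # subset v10 hashes on the X-User header
  - name: v11
    labels:
      version: v1.1
EOF
```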

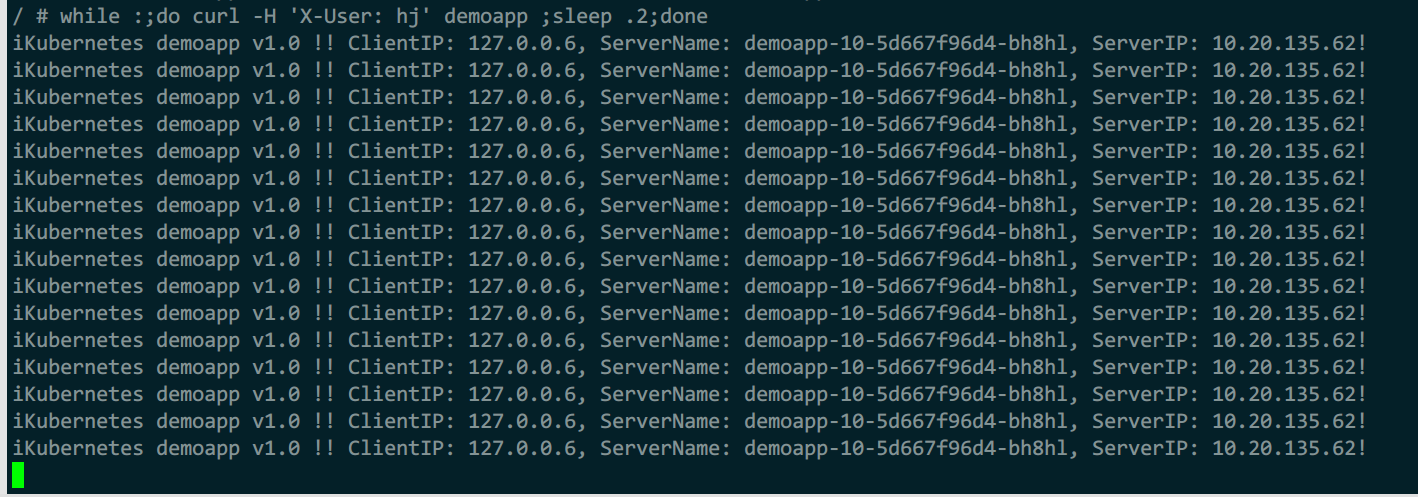

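2) Test

```shell
curl demoapp
while :; do curl -H 'X-User: hj' demoapp; sleep .2; done
```

The top-level LEAST_CONN algorithm applies to subsets that do not define their own load balancer (v11 here).

Subset v10 overrides it with the consistent-hash algorithm defined inside the subset: requests carrying the X-User header always reach the same pod, while requests without it are still spread across the v10 pods.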
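Example 8 pins requests by header. For browser clients, sticky sessions are often keyed on a cookie instead. A sketch of subset v10 hashing on a cookie, where the cookie name `user-session` and the TTL are hypothetical; if a request carries no such cookie, Envoy generates one with the given TTL so that subsequent requests stick to the same pod.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: demoapp-dr
spec:
  host: demoapp
  trafficPolicy:
    loadBalancer:
      simple: LEAST_CONN
  subsets:
  - name: v10
    labels:
      version: v1.0
    trafficPolicy:
      loadBalancer:
        consistentHash:
          httpCookie:
            name: user-session     # hypothetical cookie name
            ttl: 3600s             # lifetime of the cookie Envoy sets when it is missing
  - name: v11
    labels:
      version: v1.1
```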

Example 9: connection pool and circuit breaker

1) demoapp-vs.yml

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: demoapp-vs
spec:
  hosts:
  - demoapp
  http:
  - name: canary
    match:
    - uri:
        prefix: /canary
    rewrite:
      uri: /
    route:
    - destination:
        host: demoapp
        subset: v11
  - name: default
    route:
    - destination:
        host: demoapp
        subset: v10
EOF
```

2) demoapp-dr.yml

```shell
cat <<EOF | kubectl apply -f -
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: demoapp-dr
spec:
  host: demoapp
  trafficPolicy:
    loadBalancer:
      simple: RANDOM
    connectionPool:
      tcp:
        maxConnections: 100
        connectTimeout: 30ms
        tcpKeepalive:
          time: 7200s
          interval: 75s
      http:
        http2MaxRequests: 1000
        maxRequestsPerConnection: 10
    outlierDetection:
      maxEjectionPercent: 50     # at most half of the hosts may be ejected
      consecutive5xxErrors: 5    # eject a host after 5 consecutive 5xx errors
      interval: 10s
      baseEjectionTime: 1m
      minHealthPercent: 40
  subsets:
  - name: v10
    labels:
      version: v1.0
  - name: v11
    labels:
      version: v1.1
EOF
```

3) Test

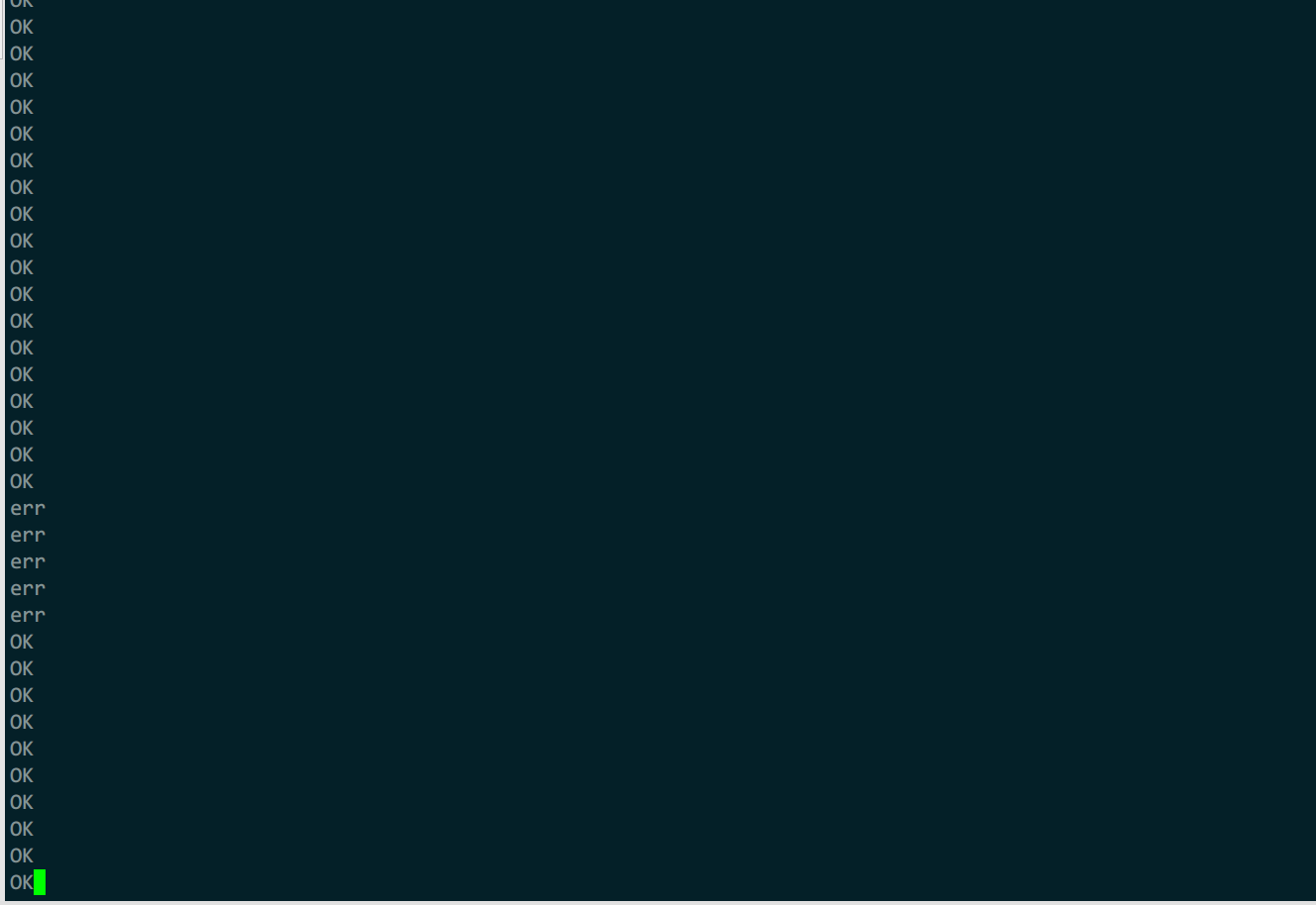

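```shell
while :; do curl demoapp/livez; sleep .2; echo; done

# Set the livez status of one demoapp-10 pod to an error value
D_IP=$(kubectl get po -o wide | grep demoapp-10 | awk 'NR==1{print $6}')
curl -X POST -d 'livez=err' "$D_IP/livez"
```

Once livez is set to err, that pod starts returning errors; after several consecutive failures (consecutive5xxErrors: 5) it is ejected automatically. The screenshot was taken about one minute after the ejection (baseEjectionTime: 1m), when the host is put back and checked again by live traffic; as long as it keeps failing, it is ejected again.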