Mounting Ceph storage

Mounting Ceph storage in Kubernetes (k8s)

Example 1: Mounting RBD with a keyring file

1) Create the RBD pool and user on Ceph

# Create the storage pool and a 5G image in it

ceph osd pool create k8s-rbd 32 32

ceph osd pool application enable k8s-rbd rbd

rbd pool init -p k8s-rbd

rbd create k8s-rbd-img --size 5G -p k8s-rbd
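The four commands above can be kept in one parameterized script so the pool and image names are defined only once. This is a minimal bash sketch: the variables `POOL`, `IMAGE`, `SIZE` and the helpers `run` and `create_rbd_pool` are hypothetical names, not part of Ceph. By default it only prints the commands; set `DRY_RUN=0` on a Ceph admin node to actually execute them.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the pool/image commands above.
# DRY_RUN=1 (default) only prints each command; DRY_RUN=0 executes it.
POOL=${POOL:-k8s-rbd}
IMAGE=${IMAGE:-k8s-rbd-img}
SIZE=${SIZE:-5G}

run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

create_rbd_pool() {
  # 32 32 = pg_num and pgp_num, as in the original commands
  run ceph osd pool create "$POOL" 32 32
  run ceph osd pool application enable "$POOL" rbd
  run rbd pool init -p "$POOL"
  run rbd create "$IMAGE" --size "$SIZE" -p "$POOL"
}
```

For example, `POOL=test-rbd create_rbd_pool` prints the same sequence for a different pool.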

# Create a client user limited to the k8s-rbd pool and export its keyring

ceph auth get-or-create client.k8s-rbd-user mon 'allow r' osd 'allow * pool=k8s-rbd'

ceph auth get client.k8s-rbd-user > /etc/ceph/ceph.client.k8s-rbd-user.keyring
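The key inside this keyring is what the later Secret examples extract with `awk '/key/{print $3}'`. A slightly stricter sketch, assuming the usual `key = <base64>` layout written by `ceph auth get`; `keyring_key` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Print the secret key from a Ceph keyring file.
# Assumes the usual "key = <base64>" line written by `ceph auth get`.
keyring_key() {
  awk '$1 == "key" && $2 == "=" { print $3; exit }' "$1"
}
```

Matching the first field exactly, instead of `/key/`, avoids picking up any other line that merely contains the string `key`. Usage: `keyring_key /etc/ceph/ceph.client.k8s-rbd-user.keyring`.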

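# Copy the user keyring and ceph.conf to the k8s nodes (/etc/ceph/ must already exist there; installing ceph-common in step 2 creates it)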

cd /etc/ceph/

for i in 2.2.2.{1..4}5 ;do scp ceph.client.k8s-rbd-user.keyring ceph.conf root@$i:/etc/ceph/ ;done
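`2.2.2.{1..4}5` is bash brace expansion: it produces 2.2.2.15, 2.2.2.25, 2.2.2.35 and 2.2.2.45, which this walkthrough assumes are the k8s nodes. A quick way to check the target list before copying anything (`list_targets` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Show which destinations the scp loop above would use, without copying anything.
list_targets() {
  local i
  for i in 2.2.2.{1..4}5; do
    echo "root@$i:/etc/ceph/"
  done
}

list_targets
```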

# Verify from a k8s node (requires the Ceph client installed in step 2)

ceph --name client.k8s-rbd-user -s
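If either file did not arrive on the node, `ceph --name client.k8s-rbd-user -s` fails with a fairly opaque error. A small pre-check, sketched in bash under the assumption that the files live in `/etc/ceph` with the standard `ceph.client.<name>.keyring` naming; `check_ceph_files` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Check that ceph.conf and the client keyring exist before running `ceph -s`.
# Usage: check_ceph_files <client-name> [config-dir]
check_ceph_files() {
  local name=$1 dir=${2:-/etc/ceph} missing=0 f
  for f in "$dir/ceph.conf" "$dir/ceph.client.$name.keyring"; do
    if [ ! -r "$f" ]; then
      echo "missing: $f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example: `check_ceph_files k8s-rbd-user && ceph --name client.k8s-rbd-user -s`.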

2) Install the Ceph client on all k8s nodes

# RHEL family

rpm --import 'https://mirrors.ustc.edu.cn/ceph/keys/release.asc'

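# ceph-common is an architecture-specific package, so the x86_64 repository is needed in addition to noarch

dnf config-manager --add-repo=https://mirrors.ustc.edu.cn/ceph/rpm-quincy/el8/x86_64

dnf config-manager --add-repo=https://mirrors.ustc.edu.cn/ceph/rpm-quincy/el8/noarch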

yum install -y ceph-common

# Debian family (on Ubuntu, ceph-common can be installed directly from the distro repositories)

wget -q -O- 'https://download.ceph.com/keys/release.asc' | apt-key add -

apt-add-repository 'deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-octopus/ buster main'

apt install -y ceph-common
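The kubelet's in-tree RBD plugin calls the `rbd` CLI on the node, so every node that may run such a Pod needs it. A sketch for checking that `ceph-common` actually put the expected commands on `PATH` (`missing_cmds` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Print each command from the argument list that is not found on PATH.
# Returns 0 only if all of them are present.
missing_cmds() {
  local rc=0 c
  for c in "$@"; do
    if ! command -v "$c" >/dev/null 2>&1; then
      echo "$c"
      rc=1
    fi
  done
  return "$rc"
}
```

For example: `missing_cmds ceph rbd || echo "ceph-common is not fully installed"`.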

3) Mount the Ceph RBD image using the keyring file (save the manifest below as ceph.yml)

apiVersion: v1
kind: Pod
metadata:
  name: pod-t1
spec:
  containers:
  - image: busybox
    imagePullPolicy: IfNotPresent
    name: bsx-t1
    command: ['sleep','3600']
    volumeMounts:
    - name: rbd-data1
      mountPath: /data
  volumes:
  - name: rbd-data1
    rbd:
      monitors:
      - 2.2.2.10:6789
      - 2.2.2.20:6789
      - 2.2.2.30:6789
      pool: k8s-rbd
      image: k8s-rbd-img
      fsType: xfs
      user: k8s-rbd-user
      keyring: /etc/ceph/ceph.client.k8s-rbd-user.keyring

Verify: apply the manifest, then check that the image now has a watcher (the node that mapped it)

kubectl apply -f ceph.yml

rbd status -p k8s-rbd --image k8s-rbd-img -n client.k8s-rbd-user
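Besides `rbd status`, the mount can be checked from inside the Pod: a mapped RBD image typically shows up in `df` as a `/dev/rbdN` device on the mount path. A sketch, assuming bash on the admin machine; `pod_mount_ok` is a hypothetical helper, and `KUBECTL` is an override hook so it can be tried against a stub:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given path inside the Pod is backed by an RBD device.
# KUBECTL can be overridden (e.g. with a stub function) for testing.
KUBECTL=${KUBECTL:-kubectl}

pod_mount_ok() {
  local pod=${1:-pod-t1} path=${2:-/data}
  # busybox provides df; a mapped image usually appears as /dev/rbdN
  "$KUBECTL" exec "$pod" -- df -h "$path" | grep -q '/dev/rbd'
}
```

For example: `pod_mount_ok pod-t1 /data && echo mounted`.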

Example 2: Mounting Ceph RBD with a Secret

1) Create the Secret (use either method below; both create k8s-ceph-user)

# Option A: create it directly on the command line (kubectl base64-encodes the key itself)

user_key=`awk '/key/{print $3}' /etc/ceph/ceph.client.k8s-rbd-user.keyring`

kubectl create secret generic k8s-ceph-user --type="kubernetes.io/rbd" --from-literal=user-key=$user_key

# Option B: create it from a manifest (the value under data: must be base64-encoded)

cat > k8s-ceph-user.yml <<eof
apiVersion: v1
kind: Secret
metadata:
  name: k8s-ceph-user
data:
  user-key: `awk '/key/{printf "%s", $3}' /etc/ceph/ceph.client.k8s-rbd-user.keyring | base64`
type: kubernetes.io/rbd
eof

kubectl apply -f k8s-ceph-user.yml
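Option A passes the raw key to `--from-literal`, and kubectl does the base64 encoding. In option B the `data:` field must hold the base64 of the key without a trailing newline; `echo "$key" | base64`, or an awk `print` piped into `base64`, would encode the newline as well. A sketch of the safe encoding (`secret_b64` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Base64-encode a value for a Secret's data: field, without a trailing newline.
# tr also removes the line wrapping GNU base64 adds to long output.
secret_b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}
```

Alternatively, a Secret's `stringData:` field accepts the plain key and leaves the encoding to the API server.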

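2) Change the Example 1 manifest to use the Secret instead of the keyring file (delete pod-t1 from Example 1 first, since this Pod has the same name and a Pod's volumes cannot be changed in place)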

apiVersion: v1
kind: Pod
metadata:
  name: pod-t1
spec:
  containers:
  - image: busybox
    imagePullPolicy: IfNotPresent
    name: bsx-t1
    command: ['sleep','3600']
    volumeMounts:
    - name: rbd-data1
      mountPath: /data
  volumes:
  - name: rbd-data1
    rbd:
      monitors:
      - 2.2.2.10:6789
      - 2.2.2.20:6789
      - 2.2.2.30:6789
      pool: k8s-rbd
      image: k8s-rbd-img
      fsType: xfs
      user: k8s-rbd-user
      secretRef:
        name: k8s-ceph-user

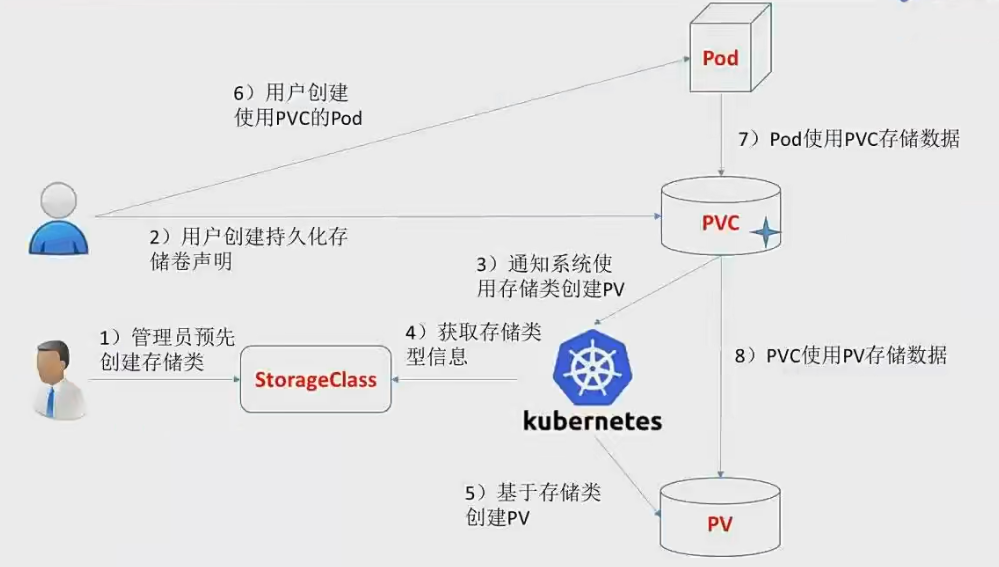

Example 3: Dynamic provisioning of Ceph RBD with a StorageClass and PVC

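Define the StorageClass and PVC manifests.

Kubernetes' rbd provisioner uses the Ceph admin credentials to create an RBD image and a matching PV on demand.

The PVC is then referenced by the pod controller (here a Deployment).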

1) Preparation

# Copy the Ceph admin keyring to the k8s nodes (the provisioner needs admin rights to create images)

for i in 2.2.2.{1..4}5 ;do scp /etc/ceph/ceph.client.admin.keyring root@$i:/etc/ceph/ ;done

# Create a Secret for the regular user

key1=`awk '/key/{print $3}' /etc/ceph/ceph.client.k8s-rbd-user.keyring`

kubectl create secret generic ceph-user --type="kubernetes.io/rbd" --from-literal=user-key=$key1

# Create a Secret for the admin user

key2=`awk '/key/{print $3}' /etc/ceph/ceph.client.admin.keyring`

kubectl create secret generic ceph-admin --type="kubernetes.io/rbd" --from-literal=user-key=$key2

2) Define the StorageClass

cat > ceph-sc.yml <<eof
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ceph-sc
  annotations:
    # make this the default StorageClass
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: kubernetes.io/rbd
parameters:
  monitors: 2.2.2.10:6789,2.2.2.20:6789,2.2.2.30:6789
  adminId: admin
  # must match the Secret names created in step 1
  adminSecretName: ceph-admin
  adminSecretNamespace: default
  pool: k8s-rbd
  userId: k8s-rbd-user
  userSecretName: ceph-user
eof

kubectl apply -f ceph-sc.yml
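The `kubernetes.io/rbd` provisioner looks up `adminSecretName` and `userSecretName` by name, so a typo there usually only surfaces when a PVC stays `Pending`. A sketch that lists the names a StorageClass manifest refers to, so they can be compared with `kubectl get secret`; `sc_secret_names` is a hypothetical helper and assumes one `key: value` pair per line, as in `ceph-sc.yml`:

```shell
#!/usr/bin/env bash
# List the Secret names referenced by adminSecretName / userSecretName.
# The anchored pattern skips adminSecretNamespace and userSecretNamespace.
sc_secret_names() {
  awk '$1 ~ /SecretName:$/ { print $2 }' "$1"
}
```

For example: `for s in $(sc_secret_names ceph-sc.yml); do kubectl get secret "$s" >/dev/null || echo "missing secret: $s"; done`.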

3) Create the PVC

The PV is provisioned and bound to the PVC automatically.

cat > ngx-pvc.yml <<eof
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ngx-pvc
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: ceph-sc
  resources:
    requests:
      storage: '500M'
eof

kubectl apply -f ngx-pvc.yml

rbd ls -p k8s-rbd  # check that a new image was created for the PVC
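The in-tree provisioner normally names the images it creates `kubernetes-dynamic-pvc-<uuid>`, which makes them easy to tell apart from the manually created `k8s-rbd-img`. A filter for `rbd ls` output, assuming that naming scheme (`dynamic_images` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Keep only image names that follow the assumed dynamic-provisioning prefix.
# `|| true` keeps the exit status 0 when no such image exists yet.
dynamic_images() {
  grep '^kubernetes-dynamic-pvc-' || true
}
```

For example: `rbd ls -p k8s-rbd | dynamic_images`.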

4) Create a test Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: ngx-dep
spec:
  selector:
    matchLabels:
      app: ngx
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: ngx
    spec:
      containers:
      - name: ngx
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - name: ngx-port
          containerPort: 80
        volumeMounts:
        - name: ngx-data
          mountPath: /data
      volumes:
      - name: ngx-data
        persistentVolumeClaim:
          claimName: ngx-pvc
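To confirm the data really lives on the Ceph volume rather than in the container, one can write a file, delete the Pod, and look for the file in the replacement Pod. A dry-run sketch: `run` and `check_persistence` are hypothetical helpers, and the commands are only printed unless `DRY_RUN=0`:

```shell
#!/usr/bin/env bash
# Hypothetical persistence check for ngx-dep.
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 runs them.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

check_persistence() {
  run kubectl exec deploy/ngx-dep -- touch /data/probe.txt
  run kubectl delete pod -l app=ngx
  run kubectl wait --for=condition=Ready pod -l app=ngx --timeout=120s
  run kubectl exec deploy/ngx-dep -- ls -l /data/probe.txt
}
```

If the last command lists `probe.txt`, the file survived the Pod being recreated.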

Example 4: Mounting CephFS (assumes a CephFS file system with a running MDS already exists)

1) Create the Secret for the Ceph user (skip this if ceph-admin already exists from Example 3)

key2=`awk '/key/{print $3}' /etc/ceph/ceph.client.admin.keyring`

kubectl create secret generic ceph-admin --type="kubernetes.io/rbd" --from-literal=user-key=$key2
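`kubectl create secret` fails with an `AlreadyExists` error if Example 3 already created `ceph-admin`. A create-if-missing sketch; `ensure_secret` is a hypothetical helper, and `KUBECTL` can point at a stub for testing:

```shell
#!/usr/bin/env bash
# Create an rbd-type Secret only if one with that name does not exist yet.
KUBECTL=${KUBECTL:-kubectl}

ensure_secret() {
  local name=$1 key=$2
  if "$KUBECTL" get secret "$name" >/dev/null 2>&1; then
    echo "secret/$name already exists"
  else
    "$KUBECTL" create secret generic "$name" \
      --type="kubernetes.io/rbd" --from-literal=user-key="$key"
  fi
}
```

For example: `ensure_secret ceph-admin "$(awk '/key/{print $3}' /etc/ceph/ceph.client.admin.keyring)"`.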

2) Create the Pod (via a Deployment)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: cephfs-dep
spec:
  selector:
    matchLabels:
      app: cephfs-ngx
  template:
    metadata:
      labels:
        app: cephfs-ngx
    spec:
      containers:
      - name: cephfs-rw
        image: nginx
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - mountPath: "/mnt/cephfs"
          name: cephfs
      volumes:
      - name: cephfs
        cephfs:
          monitors:
          - 2.2.2.10:6789
          - 2.2.2.20:6789
          - 2.2.2.30:6789
          user: admin
          secretRef:
            name: ceph-admin
          readOnly: false
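Inside the Pod, `/proc/mounts` shows how CephFS was mounted: the kernel client typically appears with file system type `ceph`, while a FUSE mount appears as `fuse.ceph-fuse`. A sketch that extracts the type for a given mount point; `mount_fstype` is a hypothetical helper that reads `/proc/mounts`-formatted lines on stdin:

```shell
#!/usr/bin/env bash
# Print the file system type (3rd field) of the entry mounted at the given path.
mount_fstype() {
  awk -v p="$1" '$2 == p { print $3 }'
}
```

For example: `kubectl exec deploy/cephfs-dep -- cat /proc/mounts | mount_fstype /mnt/cephfs`.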