b0114 Outstanding issues in data development

Spark

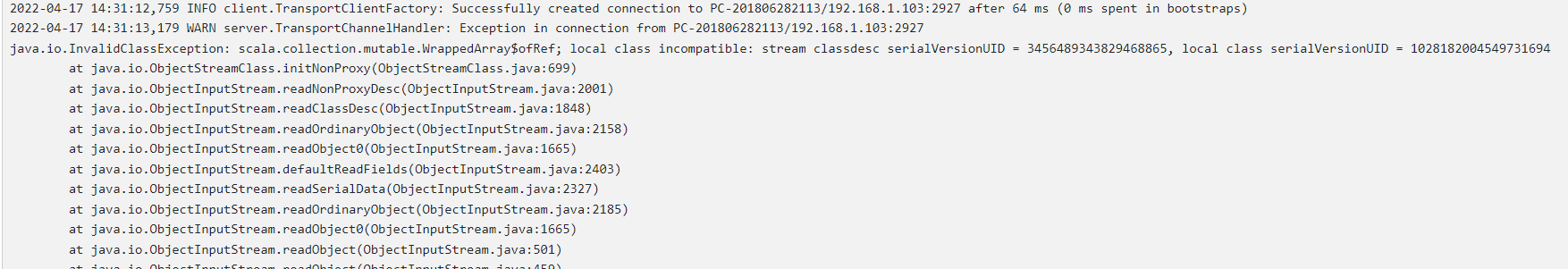

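1. serialVersionUID differs between local and remote (2022-04)

Description:

I ran a Spark program written in Scala from IDEA, with the master pointed at a remote standalone host: -Dspark.master=spark://hc2108:7077

The application log on the cluster then showed this error: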

local class incompatible: stream classdesc serialVersionUID = 3456489343829468865, local class serialVersionUID = 1028182004549731694

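The serialVersionUID of the class on the local side does not match the one on the remote side.

If the program is packaged as a jar and submitted from the node where the standalone master runs, it completes successfully.

Posts online attribute this error to mismatched jar versions, so I aligned the local and remote Spark jars to the same version.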
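One way to check the jar-mismatch explanation is to print the serialVersionUID that each side actually computes for the class in question. The sketch below is only an illustration: `SerialUidCheck` and its nested `Pinned` class are hypothetical names, and the class to inspect has to be passed in by name because the error line above does not say which class was affected. It relies only on the JDK's `java.io.ObjectStreamClass`.

```java
import java.io.ObjectStreamClass;
import java.io.Serializable;

public class SerialUidCheck {
    // Hypothetical demo class; it pins its UID explicitly, so the value
    // does not depend on the compiler or on the class's member layout.
    static class Pinned implements Serializable {
        private static final long serialVersionUID = 1L;
        int value;
    }

    /** Returns the serialVersionUID Java serialization uses for cls. */
    static long uidOf(Class<?> cls) {
        // lookup() returns null when the class is not Serializable
        ObjectStreamClass desc = ObjectStreamClass.lookup(cls);
        if (desc == null) {
            throw new IllegalArgumentException(cls.getName() + " is not Serializable");
        }
        return desc.getSerialVersionUID();
    }

    public static void main(String[] args) throws ClassNotFoundException {
        // Pass the fully qualified class name as the first argument;
        // without arguments the demo class is inspected instead.
        String name = args.length > 0 ? args[0] : Pinned.class.getName();
        Class<?> cls = Class.forName(name);
        System.out.println(name + " serialVersionUID = " + uidOf(cls));
    }
}
```

Running it once with the local project's classpath and once with the cluster's Spark jars directory on the classpath should print the same number for the same class. If the numbers differ, the two sides are loading different builds of that class, which would be consistent with the jar-version explanation.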

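2. spark-shell on a Linux client machine: the standalone cluster cannot connect back to the client

On the client machine hc2107, I ran: spark-shell --master spark://hc2108:7077 --driver-memory 512m

The log of the submitted Spark application, viewed in the web UI, shows that the connection to hc2107 was refused.

Submitting directly on hc2108 works.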

Spark Executor Command: "/opt/jdk1.8.0_301/bin/java" "-cp" "/opt/spark-3.1.2-bin-hadoop3.2/conf/:/opt/spark-3.1.2-bin-hadoop3.2/jars/*:/opt/hadoop-3.3.1/etc/hadoop/" "-Xmx1024M" "-Dspark.driver.port=39947" "org.apache.spark.executor.CoarseGrainedExecutorBackend" "--driver-url" "spark://CoarseGrainedScheduler@hc2107:39947" "--executor-id" "0" "--hostname" "192.168.1.10" "--cores" "2" "--app-id" "app-20220417161816-0010" "--worker-url" "spark://Worker@192.168.1.10:34731"
========================================

2022-04-17 16:18:17,430 INFO executor.CoarseGrainedExecutorBackend: Started daemon with process name: 88790@hc2108
2022-04-17 16:18:17,440 INFO util.SignalUtils: Registering signal handler for TERM
2022-04-17 16:18:17,441 INFO util.SignalUtils: Registering signal handler for HUP
2022-04-17 16:18:17,442 INFO util.SignalUtils: Registering signal handler for INT
2022-04-17 16:18:17,830 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
2022-04-17 16:18:17,931 INFO spark.SecurityManager: Changing view acls to: hadoop
2022-04-17 16:18:17,932 INFO spark.SecurityManager: Changing modify acls to: hadoop
2022-04-17 16:18:17,932 INFO spark.SecurityManager: Changing view acls groups to:
2022-04-17 16:18:17,933 INFO spark.SecurityManager: Changing modify acls groups to:
2022-04-17 16:18:17,934 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()
Exception in thread "main" java.lang.reflect.UndeclaredThrowableException
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1748)
    at org.apache.spark.deploy.SparkHadoopUtil.runAsSparkUser(SparkHadoopUtil.scala:61)
    at org.apache.spark.executor.CoarseGrainedExecutorBackend$.run(CoarseGrainedExecutorBackend.scala:393)
    at org.apache.spark.executor.CoarseGrainedExecutorBackend$.main(CoarseGrainedExecutorBackend.scala:382)
    at org.apache.spark.executor.CoarseGrainedExecutorBackend.main(CoarseGrainedExecutorBackend.scala)
Caused by: org.apache.spark.SparkException: Exception thrown in awaitResult:
    at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:301)
    at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)
    at org.apache.spark.rpc.RpcEnv.setupEndpointRefByURI(RpcEnv.scala:101)
    at org.apache.spark.executor.CoarseGrainedExecutorBackend$.$anonfun$run$9(CoarseGrainedExecutorBackend.scala:413)
    at scala.runtime.java8.JFunction1$mcVI$sp.apply(JFunction1$mcVI$sp.java:23)
    at scala.collection.TraversableLike$WithFilter.$anonfun$foreach$1(TraversableLike.scala:877)
    at scala.collection.immutable.Range.foreach(Range.scala:158)
    at scala.collection.TraversableLike$WithFilter.foreach(TraversableLike.scala:876)
    at org.apache.spark.executor.CoarseGrainedExecutorBackend$.$anonfun$run$7(CoarseGrainedExecutorBackend.scala:411)
    at org.apache.spark.deploy.SparkHadoopUtil$$anon$1.run(SparkHadoopUtil.scala:62)
    at org.apache.spark.deploy.SparkHadoopUtil$$anon$1.run(SparkHadoopUtil.scala:61)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)
    ... 4 more
Caused by: java.io.IOException: Failed to connect to hc2107/192.168.1.13:39947
    at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:287)
    at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:218)
    at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:230)
    at org.apache.spark.rpc.netty.NettyRpcEnv.createClient(NettyRpcEnv.scala:204)
    at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:202)
    at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:198)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Caused by: io.netty.channel.AbstractChannel$AnnotatedConnectException: 拒绝连接: hc2107/192.168.1.13:39947
Caused by: java.net.ConnectException: 拒绝连接
    at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)
    at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:715)
    at io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:330)
    at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:334)
    at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:702)
    at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:650)
    at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:576)
    at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:493)
    at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:989)
    at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)
    at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)
    at java.lang.Thread.run(Thread.java:748)
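
The log shows the executor on hc2108 trying to open a TCP connection back to the driver at hc2107:39947 and being refused. A small probe run from hc2108 while spark-shell is still open on hc2107 can help separate a network or binding problem from a Spark problem. The sketch below is only an illustration: the class name `DriverPortProbe` is hypothetical, and the default host and port are simply the values from the log above (the driver port changes on every run unless it is fixed through `spark.driver.port`).

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class DriverPortProbe {
    /** Attempts a plain TCP connect; returns "open" or a short failure description. */
    static String probe(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return "open";
        } catch (IOException e) {
            // ConnectException (refused), SocketTimeoutException and
            // UnknownHostException are all subclasses of IOException
            return "failed: " + e.getClass().getSimpleName() + ": " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        // Defaults are taken from the log above; override with <host> <port>
        String host = args.length > 0 ? args[0] : "hc2107";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 39947;
        System.out.println(host + ":" + port + " -> " + probe(host, port, 3000));
    }
}
```

A refusal from this probe as well would suggest that nothing is listening on that address of hc2107 (for example, the driver bound only to a loopback address because of how the hostname resolves locally) or that a firewall is rejecting the connection. These are possibilities to check, not a confirmed diagnosis; Spark's `spark.driver.host` and `spark.driver.bindAddress` settings control which address the driver advertises and binds to.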


浙公网安备 33010602011771号
