Final Project

import os

import jieba

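# Corpus root: one subfolder per news category
path = r"/Volumes/E盘/词库/258"

# Load the Chinese stopword list (one word per line); a set makes membership tests fast
with open(r'/Volumes/E盘/词库/stopsCN.txt', encoding='utf-8') as f:
    stopword = set(f.read().split('\n'))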

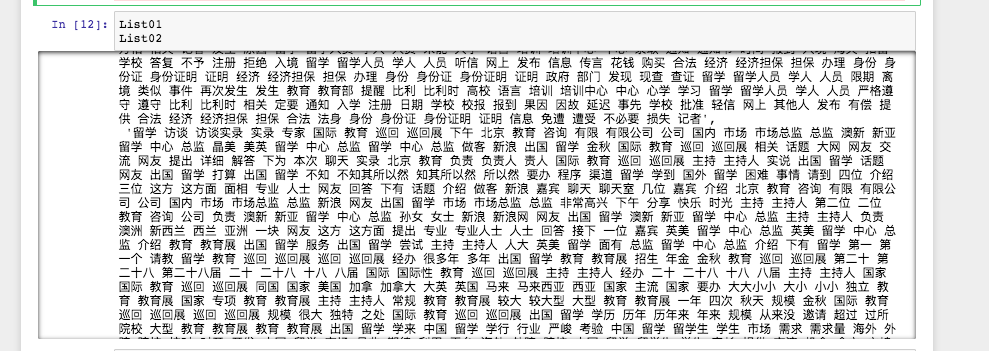

List01 = []  # category label of each document
List02 = []  # segmented, stopword-filtered text of each document

# for root,dirs,files in os.walk(path):

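def read_text(name, start, end):
    # Read start.txt ... (end-1).txt from the category folder `name`, clean and
    # segment each file, and append its label and text to List01 / List02
    for num in range(start, end):
        file = os.path.join(path, name, str(num) + '.txt')
        with open(file, 'r', encoding='utf-8') as f:
            texts = f.read()
        # target = file.split('/')[-2]
        target = name  # the folder name is the category label
        # Keep letters only (Chinese characters count as letters); drops digits, punctuation and whitespace
        texts = "".join([text for text in texts if text.isalpha()])
        # Full-mode jieba segmentation; keep words of two or more characters
        texts = [text for text in jieba.cut(texts, cut_all=True) if len(text) >= 2]
        # Remove stopwords and join with spaces for TfidfVectorizer
        texts = " ".join([text for text in texts if text not in stopword])
        List01.append(target)
        List02.append(texts)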
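The three filters inside `read_text` can be traced on a tiny input without jieba installed. In this minimal sketch the hypothetical `toy_full_cut` only stands in for `jieba.cut(..., cut_all=True)`: it yields every word from a small hand-made vocabulary that starts at each position, or the single character when none does. It is not jieba's algorithm; only the filtering in `clean_text` mirrors the script.

```python
from typing import Callable, Iterable, Iterator, Set

def toy_full_cut(text: str, vocab: Set[str], max_len: int = 4) -> Iterator[str]:
    """Hypothetical stand-in for jieba full mode: at each position, yield every
    vocabulary word starting there, or the single character if none does."""
    for i in range(len(text)):
        found = False
        for j in range(i + 2, min(len(text), i + max_len) + 1):
            if text[i:j] in vocab:
                found = True
                yield text[i:j]
        if not found:
            yield text[i]

def clean_text(raw: str, cut: Callable[[str], Iterable[str]], stopwords: Set[str]) -> str:
    """Same three filters as read_text, with the segmenter passed in."""
    # Keep letters only; str.isalpha() is True for Chinese characters
    letters = "".join(ch for ch in raw if ch.isalpha())
    # Segment, then keep words of at least two characters
    words = [w for w in cut(letters) if len(w) >= 2]
    # Drop stopwords and join with single spaces
    return " ".join(w for w in words if w not in stopwords)

vocab = {"人工", "人工智能", "智能", "我们", "科技"}
result = clean_text("2023年，人工智能！我们的科技",
                    lambda t: toy_full_cut(t, vocab), {"我们"})
print(result)  # 人工 人工智能 智能 科技
```

Digits and full-width punctuation disappear in the first step, single characters such as 年 and 的 in the second, and the stopword 我们 in the third. Full mode keeps overlapping words (人工, 人工智能, 智能), so the vocabulary later seen by the vectorizer contains all of them.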

read_text("家居",224236,224263)

read_text("教育",284460,284487)

read_text("科技",481650,481677)

read_text("社会",430801,430827)

read_text("时尚",326396,326423)
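Because `range(start, end)` excludes its upper bound, each call reads the files `start.txt` through `(end-1).txt`, i.e. `end - start` documents. This small check only does that arithmetic on the five calls above (it opens no files); `file_names` is a hypothetical helper.

```python
# The (start, end) pairs passed to read_text in the five calls
calls = {
    "家居": (224236, 224263),
    "教育": (284460, 284487),
    "科技": (481650, 481677),
    "社会": (430801, 430827),
    "时尚": (326396, 326423),
}

def file_names(start: int, end: int) -> list:
    # range(start, end) stops before end, so "<end>.txt" itself is never opened
    return [f"{n}.txt" for n in range(start, end)]

counts = {name: len(file_names(s, e)) for name, (s, e) in calls.items()}
print(counts)
print(sum(counts.values()))  # 134
```

Four categories contribute 27 documents and 社会 contributes 26, so the corpus has 134 documents in total.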

# Split into training and test sets (80% / 20%)
from sklearn.model_selection import train_test_split
x_train, x_test, y_train, y_test = train_test_split(List02, List01, test_size=0.2)
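With a float `test_size`, scikit-learn rounds the test set up: for the 134 documents loaded by the five `read_text` calls, the test set gets ceil(0.2 × 134) = 27 documents and the training set the remaining 107. A stdlib sketch of a shuffled split with the same rounding rule follows; it does not reproduce sklearn's random number stream, so the selected documents differ. `shuffle_split` and its `seed` parameter are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

def shuffle_split(texts: Sequence[str], labels: Sequence[str], test_size: float = 0.2,
                  seed: int = 0) -> Tuple[List[str], List[str], List[str], List[str]]:
    """Shuffle indices, put ceil(test_size * n) items in the test set and the rest in training.
    Returns (x_train, x_test, y_train, y_test), the same order as train_test_split."""
    n = len(texts)
    n_test = math.ceil(test_size * n)
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    return ([texts[i] for i in train_idx], [texts[i] for i in test_idx],
            [labels[i] for i in train_idx], [labels[i] for i in test_idx])

docs = [f"doc{i}" for i in range(134)]
labs = ["家居" if i % 2 else "教育" for i in range(134)]
x_tr, x_te, y_tr, y_te = shuffle_split(docs, labs)
print(len(x_tr), len(x_te))  # 107 27
```

Since the real call passes no `random_state`, every run gets a different split and slightly different scores. `train_test_split` accepts `random_state=...` for reproducibility and `stratify=List01` to keep the category proportions equal in both parts.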

# Text feature extraction: TF-IDF fitted on the training set only, then applied to the test set
from sklearn.feature_extraction.text import TfidfVectorizer
vec = TfidfVectorizer()
X_train = vec.fit_transform(x_train)
X_test = vec.transform(x_test)
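With its default settings, `TfidfVectorizer()` tokenizes with the pattern `(?u)\b\w\w+\b`, uses raw term counts as tf, the smoothed idf

$$\mathrm{idf}(t) = \ln\frac{1 + n}{1 + \mathrm{df}(t)} + 1,$$

and L2-normalizes each document vector. The dictionary-based sketch below is an assumption-level re-implementation of those defaults, not sklearn's code, which returns sparse matrices instead of dicts. It also shows why `transform` on the test set reuses the training idf and ignores words it never saw.

```python
import math
import re
from collections import Counter
from typing import Dict, List

TOKEN = re.compile(r"(?u)\b\w\w+\b")  # TfidfVectorizer's default token_pattern

def fit_idf(docs: List[str]) -> Dict[str, float]:
    n = len(docs)
    df = Counter()
    for doc in docs:
        df.update(set(TOKEN.findall(doc.lower())))  # document frequency
    # Smoothed idf: as if one extra document contained every term
    return {t: math.log((1 + n) / (1 + d)) + 1 for t, d in df.items()}

def transform(doc: str, idf: Dict[str, float]) -> Dict[str, float]:
    # Raw counts of known terms only; unseen terms are dropped
    tf = Counter(t for t in TOKEN.findall(doc.lower()) if t in idf)
    weights = {t: c * idf[t] for t, c in tf.items()}
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {t: w / norm for t, w in weights.items()} if norm else weights

train = ["科技 手机 芯片", "教育 学校 考试", "科技 芯片 芯片"]
idf = fit_idf(train)
weights = transform("芯片 手机 新词", idf)
print(weights)
```

Since `read_text` joins words with spaces and every word has at least two characters, the default pattern recovers exactly the jieba words.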

from sklearn.naive_bayes import MultinomialNB

from sklearn.model_selection import cross_val_score

from sklearn.metrics import classification_report

# Multinomial Naive Bayes

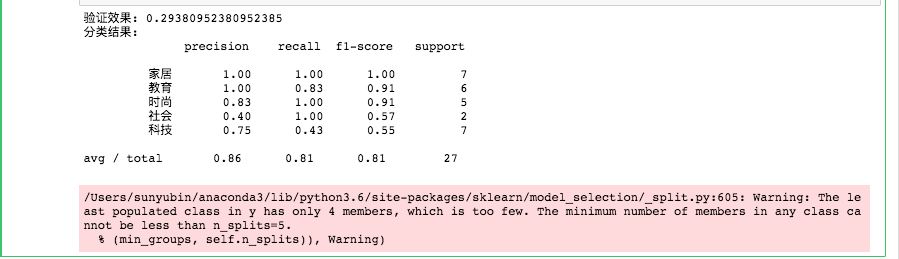

mnb = MultinomialNB()

module = mnb.fit(X_train, y_train)

y_predict = module.predict(X_test)
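`MultinomialNB()` with its default `alpha=1.0` estimates, for each class, a smoothed distribution over terms and predicts the class with the highest log prior plus feature-weighted log likelihood. The sketch below follows that rule on plain dictionaries. In the script the features are TF-IDF weights, which MultinomialNB accepts as fractional counts. The names `fit_mnb` and `predict_mnb` are hypothetical, and the toy data is made up for illustration.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List

def fit_mnb(X: List[Dict[str, float]], y: List[str], alpha: float = 1.0):
    """Multinomial naive Bayes with additive (Laplace) smoothing."""
    vocab = {t for row in X for t in row}
    class_count = Counter(y)
    feat = defaultdict(Counter)  # class -> summed feature values per term
    for row, label in zip(X, y):
        feat[label].update(row)
    log_prior = {c: math.log(k / len(y)) for c, k in class_count.items()}
    log_lik = {}
    for c in class_count:
        total = sum(feat[c].values()) + alpha * len(vocab)
        log_lik[c] = {t: math.log((feat[c][t] + alpha) / total) for t in vocab}
    return log_prior, log_lik

def predict_mnb(model, row: Dict[str, float]) -> str:
    log_prior, log_lik = model
    def score(c: str) -> float:
        # log P(c) + sum of feature value * log P(term | c); unknown terms are ignored
        return log_prior[c] + sum(v * log_lik[c][t] for t, v in row.items() if t in log_lik[c])
    return max(log_prior, key=score)

X = [{"芯片": 2, "手机": 1}, {"芯片": 1, "科技": 1}, {"考试": 2, "学校": 1}, {"学校": 1, "教育": 1}]
y = ["科技", "科技", "教育", "教育"]
model = fit_mnb(X, y)
print(predict_mnb(model, {"芯片": 1}), predict_mnb(model, {"学校": 1}))  # 科技 教育
```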

# 5-fold cross-validation on the training set
scores = cross_val_score(mnb, X_train, y_train, cv=5)
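For a classifier, `cross_val_score(..., cv=5)` splits the data with stratified 5-fold cross-validation: each fold keeps roughly the category proportions of the whole set, the model is refit on four folds and scored (accuracy by default) on the fifth, and the script prints the mean of the five scores. On a 27-document test set some categories may have fewer than five members, and scikit-learn warns when the least populated class is smaller than `n_splits`. The sketch below shows the stratification idea with a simple round-robin assignment per class. This is a simplification, not sklearn's exact `StratifiedKFold` algorithm, and `stratified_folds` is a hypothetical name.

```python
from collections import defaultdict
from typing import List, Sequence, Tuple

def stratified_folds(labels: Sequence[str], k: int = 5) -> List[Tuple[List[int], List[int]]]:
    """Return k (train_idx, val_idx) pairs; each class is dealt round-robin across the folds."""
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    fold_of = {}
    for indices in by_class.values():
        for pos, i in enumerate(indices):
            fold_of[i] = pos % k
    splits = []
    for f in range(k):
        val = [i for i in range(len(labels)) if fold_of[i] == f]
        train = [i for i in range(len(labels)) if fold_of[i] != f]
        splits.append((train, val))
    return splits

labels = ["科技"] * 10 + ["教育"] * 5
splits = stratified_folds(labels, k=5)
print([[labels[i] for i in val] for _, val in splits])
```

Each validation fold here holds two 科技 and one 教育 document, matching the 2:1 ratio of the whole set.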

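print("Mean cross-validation accuracy:", scores.mean())

# classification_report expects the true labels first, then the predictions
print("Classification report:\n", classification_report(y_test, y_predict))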
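`classification_report(y_true, y_pred)` reports per-class precision (correct predictions of a class divided by all predictions of it), recall (correct predictions divided by all true members), their harmonic mean F1, and support (number of true members). Because precision and recall are mirror images, swapping the two arguments exchanges them and reports the support of the predictions instead. A minimal stdlib sketch of those definitions, with a hypothetical `per_class_scores` helper and made-up labels:

```python
from typing import Dict, Sequence

def per_class_scores(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, Dict[str, float]]:
    """Precision, recall, F1 and support per class."""
    labels = sorted(set(y_true) | set(y_pred))
    report = {}
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        pred_c = sum(1 for p in y_pred if p == c)  # predicted as c
        true_c = sum(1 for t in y_true if t == c)  # actually c (support)
        precision = tp / pred_c if pred_c else 0.0
        recall = tp / true_c if true_c else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[c] = {"precision": precision, "recall": recall, "f1": f1, "support": true_c}
    return report

y_true = ["科技", "科技", "教育", "教育"]
y_pred = ["科技", "教育", "教育", "教育"]
right = per_class_scores(y_true, y_pred)
swapped = per_class_scores(y_pred, y_true)
print(right["科技"], swapped["科技"])
```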

import collections

# Count the news items per category in the test set and in the predictions
testCount = collections.Counter(y_test)
predCount = collections.Counter(y_predict)
print('Actual:', testCount, '\n', 'Predicted:', predCount)

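# Build the label list and the actual and predicted counts, aligned by label
nameList = list(testCount.keys())
testList = [testCount[name] for name in nameList]
# Look up predicted counts by label so both lists follow the same order;
# a category that was never predicted gets 0
predictList = [predCount[name] for name in nameList]
x = list(range(len(nameList)))  # one x position per category (e.g. for a bar chart)
print("Categories:", nameList, '\n', "Actual:", testList, '\n', "Predicted:", predictList)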
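A `Counter` lists its keys in first-occurrence order, so the test-set and prediction counters can order the categories differently, and a category that is never predicted is absent from the prediction counter. Taking `.values()` of each counter independently can therefore pair a category with another category's count. Reading both counters through one label list avoids this, because a `Counter` returns 0 for a missing key. A small illustration with made-up labels:

```python
from collections import Counter

y_true = ["教育", "科技", "科技", "家居"]
y_pred = ["科技", "教育", "科技", "科技"]
test_count = Counter(y_true)
pred_count = Counter(y_pred)

names = list(test_count.keys())               # ['教育', '科技', '家居']
unaligned = list(pred_count.values())         # order of y_pred, 家居 missing
aligned = [pred_count[n] for n in names]      # same order as names, 0 for 家居
print(names, unaligned, aligned)
```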