Implementing HAProxy High Availability with Keepalived

| 名称 | IP |

| node1(haproxy,keepalived) | 192.168.6.152 |

| node2 (haproxy,keepalived) | 192.168.6.153 |

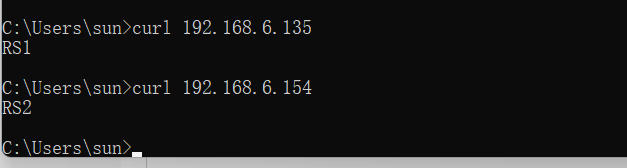

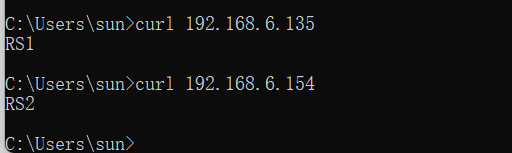

| RS1 | 192.168.6.135 |

| RS2 | 192.168.6.154 |

# On RS1 and RS2: install nginx and write a test page
[root@rs1 ~]# dnf -y install nginx
[root@rs1 ~]# echo 'rs1' > /usr/share/nginx/html/index.html
[root@rs1 ~]# systemctl enable --now nginx
Created symlink /etc/systemd/system/multi-user.target.wants/nginx.service → /usr/lib/systemd/system/nginx.service.

[root@rs2 ~]# dnf -y install nginx
[root@rs2 ~]# echo 'rs2' > /usr/share/nginx/html/index.html
[root@rs2 ~]# systemctl enable --now nginx
Created symlink /etc/systemd/system/multi-user.target.wants/nginx.service → /usr/lib/systemd/system/nginx.service.

Installing and configuring HAProxy on node1

# The HAProxy source tarball has already been downloaded
[root@node1 ~]# ls
anaconda-ks.cfg  haproxy-2.6.0.tar.gz
# Install the build dependencies
[root@node1 ~]# yum -y install make gcc pcre-devel bzip2-devel openssl-devel systemd-devel
# Create a system user for haproxy
[root@node1 ~]# useradd -r -M -s /sbin/nologin haproxy
[root@node1 ~]# id haproxy
uid=994(haproxy) gid=991(haproxy) groups=991(haproxy)
# Extract the source
[root@node1 ~]# tar xf haproxy-2.6.0.tar.gz
[root@node1 ~]# ls
anaconda-ks.cfg  haproxy-2.6.0  haproxy-2.6.0.tar.gz
[root@node1 ~]# cd haproxy-2.6.0
[root@node1 haproxy-2.6.0]# ls
addons  CONTRIBUTING  include  Makefile  src  VERSION
admin  dev  INSTALL  README  SUBVERS
BRANCHES  doc  LICENSE  reg-tests  tests
CHANGELOG  examples  MAINTAINERS  scripts  VERDATE
# Build and install
[root@node1 haproxy-2.6.0]# make -j $(grep 'processor' /proc/cpuinfo | wc -l) \
    TARGET=linux-glibc \
    USE_OPENSSL=1 \
    USE_ZLIB=1 \
    USE_PCRE=1 \
    USE_SYSTEMD=1
[root@node1 haproxy-2.6.0]# make install PREFIX=/usr/local/haproxy
# Either a symlink or a PATH change works
[root@node1 haproxy-2.6.0]# ln -s /usr/local/haproxy/sbin/haproxy /usr/sbin/
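You can confirm that the `USE_*` options passed to `make` actually ended up in the binary by inspecting `haproxy -vv`. What follows is a minimal sketch. It assumes `haproxy -vv` prints a "Feature list" whose enabled entries look like `+OPENSSL`, as HAProxy 2.x builds do. `has_features` is a hypothetical helper name.

```shell
# Hypothetical helper: read `haproxy -vv` output on stdin and fail if any
# requested build feature is not listed as enabled ("+NAME").
has_features() {
    vv_output=$(cat)
    for feature in "$@"; do
        # Match "+FEATURE" as a whole token (bounded by whitespace or line edges).
        if ! printf '%s\n' "$vv_output" | grep -Eq "(^|[[:space:]])\+${feature}([[:space:]]|$)"; then
            echo "missing feature: $feature" >&2
            return 1
        fi
    done
    return 0
}

# Usage after `make install`:
#   haproxy -vv | has_features OPENSSL ZLIB PCRE SYSTEMD && echo "build options OK"
```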

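# Set the kernel parameters on each load balancer
[root@node1 haproxy-2.6.0]# vim /etc/sysctl.conf
# Allow binding to a non-local IP (the VIP), so services do not fail while this node does not hold it
net.ipv4.ip_nonlocal_bind = 1
# Enable IP forwarding
net.ipv4.ip_forward = 1
# Apply the settings
[root@node1 haproxy-2.6.0]# sysctl -p
net.ipv4.ip_nonlocal_bind = 1
net.ipv4.ip_forward = 1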
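The stock header of /etc/sysctl.conf (shown on node2 below) recommends putting local settings in /etc/sysctl.d/ rather than editing sysctl.conf itself. Here is a sketch of that approach. The file name `90-haproxy-keepalived.conf` and the helper `write_lb_sysctl` are hypothetical. Comments go on their own lines because sysctl.conf(5) only documents whole-line comments.

```shell
# Hypothetical helper: write the load-balancer kernel settings to a drop-in
# file. The target directory defaults to /etc/sysctl.d but can be overridden.
write_lb_sysctl() {
    dir=${1:-/etc/sysctl.d}
    file="$dir/90-haproxy-keepalived.conf"   # file name is an assumption
    mkdir -p "$dir"
    cat > "$file" <<'EOF'
# Allow binding to the VIP even while this node does not hold it
net.ipv4.ip_nonlocal_bind = 1
# Enable IPv4 forwarding
net.ipv4.ip_forward = 1
EOF
    echo "$file"
}

# Usage (as root): write_lb_sysctl && sysctl --system
```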

# Provide the configuration file
[root@node1 ~]# mkdir /etc/haproxy
[root@node1 ~]# cat > /etc/haproxy/haproxy.cfg <<'EOF'
#-------------- global settings ----------------
global
    log 127.0.0.1 local0 info       # send logs to syslog facility local0
    #log loghost local0 info
    maxconn 20480                   # maximum number of connections
    #chroot /usr/local/haproxy
    pidfile /var/run/haproxy.pid
    #maxconn 4000
    user haproxy                    # run as this user
    group haproxy                   # run as this group
    daemon                          # run in daemon mode
#---------------------------------------------------------------------
# common defaults that all the 'listen' and 'backend' sections will
# use if not designated in their block
#---------------------------------------------------------------------
defaults
    mode http                       # proxy HTTP
    log global
    option dontlognull              # do not log connections that carry no data
    option httpclose
    option httplog
    #option forwardfor              # add an X-Forwarded-For header
    option redispatch               # resend a request to another server if the chosen one is down
    balance roundrobin              # round-robin scheduling
    timeout connect 10s             # connect timeout: 10 seconds
    timeout client 10s              # client timeout: 10 seconds
    timeout server 10s              # server timeout: 10 seconds
    timeout check 10s               # health-check timeout: 10 seconds
    maxconn 60000                   # maximum number of connections
    retries 3                       # number of retries
#-------------- statistics page ------------------
listen admin_stats
    bind 0.0.0.0:8189               # listen on port 8189
    stats enable
    mode http
    log global
    stats uri /haproxy_stats        # URL of the statistics page
    stats realm Haproxy\ Statistics
    stats auth admin:admin          # user name and password
    #stats hide-version
    stats admin if TRUE             # allow admin actions from the statistics page
    stats refresh 30s               # refresh every 30 seconds
#--------------- web cluster -----------------------
listen webcluster
    bind 0.0.0.0:80                 # listen on port 80
    mode http
    #option httpchk GET /index.html
    log global
    maxconn 3000                    # maximum number of connections
    balance roundrobin              # round-robin scheduling
    cookie SESSION_COOKIE insert indirect nocache
    server web01 192.168.6.135:80 check inter 2000 fall 5
    server web02 192.168.6.154:80 check inter 2000 fall 5
    #server web01 192.168.80.102:80 cookie web01 check inter 2000 fall 5
EOF
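A typo such as `192.168.6,135` in a `server` line is easy to overlook. The primary check is HAProxy's own configuration test, `haproxy -c -f /etc/haproxy/haproxy.cfg`, which the unit file also runs in `ExecStartPre`. As a complementary sketch, the hypothetical `check_server_addrs` lists each active `server` line and flags any address that is not a dotted IPv4:port. It only checks the shape: it does not verify that octets are at most 255, and it reports hostnames as BAD.

```shell
# Hypothetical pre-flight check for haproxy.cfg "server" lines.
# Prints "ok"/"BAD" per server and returns 1 if any address is malformed.
check_server_addrs() {
    awk '
        # Active server lines only: a commented "#server" line has a different $1.
        $1 == "server" {
            if ($3 ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+:[0-9]+$/) {
                print "ok  " $2 " " $3
            } else {
                print "BAD " $2 " " $3
                bad = 1
            }
        }
        END { exit (bad ? 1 : 0) }
    ' "$1"
}

# Usage: check_server_addrs /etc/haproxy/haproxy.cfg
```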

Writing the haproxy.service unit file

# Quote the delimiter ('EOF') so the shell does not expand $MAINPID while writing the file
[root@node1 haproxy]# cat > /usr/lib/systemd/system/haproxy.service <<'EOF'
[Unit]
Description=HAProxy Load Balancer
After=syslog.target network.target

[Service]
ExecStartPre=/usr/local/haproxy/sbin/haproxy -f /etc/haproxy/haproxy.cfg -c -q
ExecStart=/usr/local/haproxy/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /var/run/haproxy.pid
ExecReload=/bin/kill -USR2 $MAINPID

[Install]
WantedBy=multi-user.target
EOF
[root@node1 haproxy]# systemctl daemon-reload
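The unit file contains `$MAINPID`, which systemd, not the shell, must expand at reload time. With an unquoted here-document delimiter (`<<EOF`), the interactive shell substitutes the variable first, normally with an empty string. That leaves `ExecReload=/bin/kill -USR2 ` with no PID. Quoting the delimiter (`<<'EOF'`) keeps the text literal. The sketch below shows the difference; it sets `MAINPID` to an empty value to mimic a root shell where the variable is undefined.

```shell
# Show how the here-document delimiter controls variable expansion.
MAINPID=""   # in an interactive root shell this variable is normally unset

# Unquoted delimiter: the shell expands $MAINPID before cat sees the text.
unquoted=$(cat <<EOF
ExecReload=/bin/kill -USR2 $MAINPID
EOF
)

# Quoted delimiter: the text is passed through literally for systemd to expand.
quoted=$(cat <<'EOF'
ExecReload=/bin/kill -USR2 $MAINPID
EOF
)

printf 'unquoted: [%s]\n' "$unquoted"   # unquoted: [ExecReload=/bin/kill -USR2 ]
printf 'quoted:   [%s]\n' "$quoted"     # quoted:   [ExecReload=/bin/kill -USR2 $MAINPID]
```

After writing the unit, `grep ExecReload /usr/lib/systemd/system/haproxy.service` should show `$MAINPID` literally.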

Enabling logging

[root@node1 ~]# vi /etc/rsyslog.conf
# Save boot messages also to boot.log
local7.*                                                /var/log/boot.log
# Add this line
local0.*                                                /var/log/haproxy.log
[root@node1 ~]# systemctl restart rsyslog    # restart the service
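The global section sends logs with `log 127.0.0.1 local0 info`, which means over UDP to port 514 on the local host. On RHEL-family systems, a stock rsyslog does not listen on UDP until the `imudp` module is loaded; the corresponding lines typically ship commented out in /etc/rsyslog.conf. If they stay commented, the `local0.*` rule alone may leave /var/log/haproxy.log empty. This sketch assumes rsyslog 8 RainerScript syntax. `enable_rsyslog_udp` is a hypothetical helper that appends the needed lines only once.

```shell
# Hypothetical helper: enable rsyslog's UDP input and the local0 rule,
# appending each only if an active line is not already present.
enable_rsyslog_udp() {
    conf=${1:-/etc/rsyslog.conf}
    # Load the UDP input module (a commented "#module(load=...)" does not count).
    if ! grep -q '^[[:space:]]*module(load="imudp")' "$conf"; then
        printf '%s\n' 'module(load="imudp")' 'input(type="imudp" port="514")' >> "$conf"
    fi
    # Send facility local0 (used by "log 127.0.0.1 local0 info") to its own file.
    if ! grep -q '^[[:space:]]*local0\.\*' "$conf"; then
        printf '%s\n' 'local0.*    /var/log/haproxy.log' >> "$conf"
    fi
}

# Usage (as root): enable_rsyslog_udp && systemctl restart rsyslog
```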

Starting the service

[root@node1 ~]# systemctl restart haproxy
[root@node1 ~]# ss -antl
State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port   Process
LISTEN   0        128      0.0.0.0:8189         0.0.0.0:*
LISTEN   0        128      0.0.0.0:80           0.0.0.0:*
LISTEN   0        128      0.0.0.0:22           0.0.0.0:*
LISTEN   0        128      [::]:22              [::]:*
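Rather than reading the `ss -antl` table by eye, you can check the two HAProxy ports (80 for the web cluster, 8189 for the statistics page) from a script. The following is a sketch with a hypothetical helper, `ports_listening`. It reads `ss -antl` output on stdin and assumes the column layout shown above, where column 4 is "Local Address:Port".

```shell
# Hypothetical helper: read `ss -antl` output on stdin and succeed only if
# every TCP port given as an argument is in LISTEN state.
ports_listening() {
    listing=$(cat)
    for port in "$@"; do
        # Compare the exact ":<port>" suffix of column 4 (Local Address:Port).
        if ! printf '%s\n' "$listing" | awk -v p=":$port" '
                $1 == "LISTEN" && substr($4, length($4) - length(p) + 1) == p { found = 1 }
                END { exit (found ? 0 : 1) }'; then
            echo "port $port is not listening" >&2
            return 1
        fi
    done
    return 0
}

# Usage on node1/node2: ss -antl | ports_listening 80 8189 && echo "haproxy is listening"
```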

Installing and configuring HAProxy on node2

[root@node2 ~]# ls
anaconda-ks.cfg  haproxy-2.6.0.tar.gz
[root@node2 ~]# useradd -r -M -s /sbin/nologin haproxy
[root@node2 ~]# id haproxy
uid=993(haproxy) gid=990(haproxy) groups=990(haproxy)
[root@node2 ~]# dnf -y install make gcc pcre-devel bzip2-devel openssl-devel systemd-devel
[root@node2 ~]# tar xf haproxy-2.6.0.tar.gz
[root@node2 ~]# ls
anaconda-ks.cfg  haproxy-2.6.0  haproxy-2.6.0.tar.gz
[root@node2 ~]# cd haproxy-2.6.0
[root@node2 haproxy-2.6.0]# ls
addons  CHANGELOG  doc  INSTALL  Makefile  scripts  tests
admin  CONTRIBUTING  examples  LICENSE  README  src  VERDATE
BRANCHES  dev  include  MAINTAINERS  reg-tests  SUBVERS  VERSION
[root@node2 haproxy-2.6.0]# make -j $(grep 'processor' /proc/cpuinfo | wc -l) \
    TARGET=linux-glibc \
    USE_OPENSSL=1 \
    USE_ZLIB=1 \
    USE_PCRE=1 \
    USE_SYSTEMD=1
[root@node2 haproxy-2.6.0]# make install PREFIX=/usr/local/haproxy
[root@node2 haproxy-2.6.0]# ln -s /usr/local/haproxy/sbin/haproxy /usr/sbin/

# Set the kernel parameters on each load balancer
[root@node2 haproxy-2.6.0]# vi /etc/sysctl.conf
# sysctl settings are defined through files in
# /usr/lib/sysctl.d/, /run/sysctl.d/, and /etc/sysctl.d/.
#
# Vendors settings live in /usr/lib/sysctl.d/.
# To override a whole file, create a new file with the same in
# /etc/sysctl.d/ and put new settings there. To override
# only specific settings, add a file with a lexically later
# name in /etc/sysctl.d/ and put new settings there.
#
# For more information, see sysctl.conf(5) and sysctl.d(5).
net.ipv4.ip_nonlocal_bind = 1
net.ipv4.ip_forward = 1
[root@node2 haproxy-2.6.0]# sysctl -p
net.ipv4.ip_nonlocal_bind = 1
net.ipv4.ip_forward = 1

# Provide the configuration file

# Create the directory
[root@node2 ~]# mkdir /etc/haproxy
[root@node2 ~]# cd /etc/haproxy/
[root@node2 haproxy]# cat > /etc/haproxy/haproxy.cfg <<'EOF'
#-------------- global settings ----------------
global
    log 127.0.0.1 local0 info       # send logs to syslog facility local0
    #log loghost local0 info
    maxconn 20480                   # maximum number of connections
    #chroot /usr/local/haproxy
    pidfile /var/run/haproxy.pid
    #maxconn 4000
    user haproxy                    # run as this user
    group haproxy                   # run as this group
    daemon                          # run in daemon mode
#---------------------------------------------------------------------
# common defaults that all the 'listen' and 'backend' sections will
# use if not designated in their block
#---------------------------------------------------------------------
defaults
    mode http                       # proxy HTTP
    log global
    option dontlognull              # do not log connections that carry no data
    option httpclose
    option httplog
    #option forwardfor              # add an X-Forwarded-For header
    option redispatch               # resend a request to another server if the chosen one is down
    balance roundrobin              # round-robin scheduling
    timeout connect 10s             # connect timeout: 10 seconds
    timeout client 10s              # client timeout: 10 seconds
    timeout server 10s              # server timeout: 10 seconds
    timeout check 10s               # health-check timeout: 10 seconds
    maxconn 60000                   # maximum number of connections
    retries 3                       # number of retries
#-------------- statistics page ------------------
listen admin_stats
    bind 0.0.0.0:8189               # listen on port 8189
    stats enable
    mode http
    log global
    stats uri /haproxy_stats        # URL of the statistics page
    stats realm Haproxy\ Statistics
    stats auth admin:admin          # user name and password
    #stats hide-version
    stats admin if TRUE             # allow admin actions from the statistics page
    stats refresh 30s               # refresh every 30 seconds
#--------------- web cluster -----------------------
listen webcluster
    bind 0.0.0.0:80                 # listen on port 80
    mode http
    #option httpchk GET /index.html
    log global
    maxconn 3000                    # maximum number of connections
    balance roundrobin              # round-robin scheduling
    cookie SESSION_COOKIE insert indirect nocache
    server web01 192.168.6.135:80 check inter 2000 fall 5
    server web02 192.168.6.154:80 check inter 2000 fall 5
    #server web01 192.168.80.102:80 cookie web01 check inter 2000 fall 5
EOF

Writing the haproxy.service unit file

[root@node2 haproxy]# vi /usr/lib/systemd/system/haproxy.service
[Unit]
Description=HAProxy Load Balancer
After=syslog.target network.target

[Service]
ExecStartPre=/usr/local/haproxy/sbin/haproxy -f /etc/haproxy/haproxy.cfg -c -q
ExecStart=/usr/local/haproxy/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /var/run/haproxy.pid
ExecReload=/bin/kill -USR2 $MAINPID

[Install]
WantedBy=multi-user.target
[root@node2 haproxy]# systemctl daemon-reload

Enabling logging

[root@node2 haproxy]# vi /etc/rsyslog.conf
# Add the following line
local0.*                                                /var/log/haproxy.log
[root@node2 haproxy]# systemctl restart rsyslog    # restart the service

Starting the service

[root@node2 ~]# systemctl restart haproxy
[root@node2 ~]# ss -antl
State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port   Process
LISTEN   0        128      0.0.0.0:8189         0.0.0.0:*
LISTEN   0        128      0.0.0.0:80           0.0.0.0:*
LISTEN   0        128      0.0.0.0:22           0.0.0.0:*
LISTEN   0        128      [::]:22              [::]:*

Installing keepalived and configuring the MASTER

[root@node1 ~]# dnf -y install keepalived    # install
[root@node1 ~]# cd /etc/keepalived/
[root@node1 keepalived]# ls
keepalived.conf
[root@node1 keepalived]# cp keepalived.conf keepalived.conf123    # back up the default configuration under a new name
[root@node1 keepalived]# > keepalived.conf                         # empty the file
[root@node1 keepalived]# vim keepalived.conf                       # edit the main configuration
! Configuration File for keepalived

global_defs {                      # global settings
   router_id lb01
}

vrrp_instance VI_1 {               # define a VRRP instance
    state MASTER                   # initial state of this node: MASTER or BACKUP
    interface ens160               # network interface VRRP runs on
    virtual_router_id 51           # virtual router ID; must be identical within the cluster
    priority 100                   # priority; the node with the higher value becomes MASTER
    advert_int 1                   # advertisement interval between MASTER and BACKUP (seconds)
    authentication {               # authentication settings
        auth_type PASS             # password authentication
        auth_pass 023654           # change this password
    }
    virtual_ipaddress {            # the VIP to use
        192.168.6.250              # change to your VIP
    }
}

virtual_server 192.168.6.250 80 {  # LVS virtual server
    delay_loop 6                   # health-check interval
    lb_algo rr                     # LVS scheduling algorithm
    lb_kind DR                     # LVS forwarding mode
    persistence_timeout 50         # persistence timeout in seconds
    protocol TCP                   # layer-4 protocol
    real_server 192.168.6.152 80 { # a real server that handles requests
        weight 1                   # server weight, default 1
        TCP_CHECK {
            connect_port 80        # port to check
            connect_timeout 3      # connection timeout
            nb_get_retry 3         # number of retries
            delay_before_retry 3   # delay before retrying
        }
    }
    real_server 192.168.6.153 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
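VRRP priorities decide which node is MASTER. node1 uses `priority 100` and node2 uses `priority 90`, so node1 normally holds the VIP. The toy function below models only the highest-priority-wins rule. Real VRRP also breaks ties by the higher primary IP address and applies preemption settings, and neither is modelled here. `vrrp_master` is a hypothetical name.

```shell
# Toy model of VRRP master election: the highest priority wins.
# Arguments: name=priority pairs. Ties keep the first argument here
# (real VRRP compares primary IP addresses instead).
vrrp_master() {
    best_name=""
    best_prio=-1
    for entry in "$@"; do
        name=${entry%%=*}
        prio=${entry#*=}
        if [ "$prio" -gt "$best_prio" ]; then
            best_name=$name
            best_prio=$prio
        fi
    done
    echo "$best_name"
}

vrrp_master node1=100 node2=90   # prints node1 (normal operation)
vrrp_master node2=90             # prints node2 (keepalived on node1 stopped)
```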

[root@node1 keepalived]# systemctl enable --now keepalived    # start now and enable at boot
Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.
[root@node1 keepalived]# systemctl status keepalived          # check the status
● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disa>
   Active: active (running) since Wed 2022-08-31 21:20:10 CST; 15s ago
  Process: 20949 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/>
 Main PID: 20950 (keepalived)
    Tasks: 3 (limit: 11202)
   Memory: 3.6M
   CGroup: /system.slice/keepalived.service
           ├─20950 /usr/sbin/keepalived -D
           ├─20951 /usr/sbin/keepalived -D
           └─20952 /usr/sbin/keepalived -D

Installing keepalived and configuring the BACKUP

[root@node2 ~]# dnf -y install keepalived
[root@node2 ~]# cd /etc/keepalived/
[root@node2 keepalived]# ls
keepalived.conf
[root@node2 keepalived]# cp keepalived.conf keepalived.conf123
[root@node2 keepalived]# > keepalived.conf
[root@node2 keepalived]# vim keepalived.conf
! Configuration File for keepalived

global_defs {
   router_id lb02
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens160
    virtual_router_id 51
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 023654
    }
    virtual_ipaddress {
        192.168.6.250
    }
}

virtual_server 192.168.6.250 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    persistence_timeout 50
    protocol TCP
    real_server 192.168.6.152 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
    real_server 192.168.6.153 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
[root@node2 keepalived]# systemctl enable --now keepalived    # start now and enable at boot
Created symlink /etc/systemd/system/multi-user.target.wants/keepalived.service → /usr/lib/systemd/system/keepalived.service.
[root@node2 keepalived]# systemctl status keepalived          # confirm it is running and enabled at boot
● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disa>
   Active: active (running) since Wed 2022-08-31 21:21:09 CST; 17s ago
  Process: 24443 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/>
 Main PID: 24444 (keepalived)
    Tasks: 3 (limit: 11202)
   Memory: 3.6M
   CGroup: /system.slice/keepalived.service
           ├─24444 /usr/sbin/keepalived -D
           ├─24445 /usr/sbin/keepalived -D
           └─24446 /usr/sbin/keepalived -D

Checking where the VIP is

# Check on the MASTER
[root@node1 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:d7:b0:2d brd ff:ff:ff:ff:ff:ff
    inet 192.168.6.152/24 brd 192.168.6.255 scope global dynamic noprefixroute ens160
       valid_lft 1454sec preferred_lft 1454sec
    inet 192.168.6.250/32 scope global ens160    # the VIP is on the MASTER
       valid_lft forever preferred_lft forever
    inet6 fe80::6e9:511e:b6a8:693c/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
# Check on the BACKUP
[root@node2 keepalived]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:42:d0:bc brd ff:ff:ff:ff:ff:ff
    inet 192.168.6.153/24 brd 192.168.6.255 scope global dynamic noprefixroute ens160
       valid_lft 1412sec preferred_lft 1412sec
    inet6 fe80::da77:9e16:6359:1029/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
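To check for the VIP in a script rather than scanning the full `ip a` output, a fixed-string match on `inet <VIP>/` is enough. `-F` treats the dots literally. The trailing slash prevents a shorter address such as 192.168.6.25 from matching 192.168.6.250. The sketch below uses `has_vip`, a hypothetical helper that reads `ip` output on stdin.

```shell
# Hypothetical helper: succeed if the given VIP appears as an IPv4 address
# in `ip a` / `ip addr show` output read from stdin.
has_vip() {
    grep -qF "inet $1/"
}

# Usage on either node:
#   ip -4 addr show dev ens160 | has_vip 192.168.6.250 && echo "this node holds the VIP"
```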

Simulating a failure of the MASTER

[root@node1 ~]# systemctl stop keepalived
[root@node1 ~]# systemctl status keepalived
● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disa>
   Active: inactive (dead) since Wed 2022-08-31 22:09:17 CST; 4s ago
  Process: 21090 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/>
 Main PID: 21091 (code=exited, status=0/SUCCESS)
[root@node1 ~]# ip a    # the VIP has been released
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:d7:b0:2d brd ff:ff:ff:ff:ff:ff
    inet 192.168.6.152/24 brd 192.168.6.255 scope global dynamic noprefixroute ens160
       valid_lft 1268sec preferred_lft 1268sec
    inet6 fe80::6e9:511e:b6a8:693c/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

Checking the BACKUP

[root@node2 scripts]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:42:d0:bc brd ff:ff:ff:ff:ff:ff
    inet 192.168.6.153/24 brd 192.168.6.255 scope global dynamic noprefixroute ens160
       valid_lft 1381sec preferred_lft 1381sec
    inet 192.168.6.250/32 scope global ens160    # the BACKUP has taken over the VIP
       valid_lft forever preferred_lft forever
    inet6 fe80::da77:9e16:6359:1029/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

Stopping the BACKUP and checking the MASTER

[root@node2 scripts]# systemctl stop keepalived
[root@node2 scripts]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:42:d0:bc brd ff:ff:ff:ff:ff:ff
    inet 192.168.6.153/24 brd 192.168.6.255 scope global dynamic noprefixroute ens160
       valid_lft 1175sec preferred_lft 1175sec
    inet6 fe80::da77:9e16:6359:1029/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
[root@node2 scripts]# systemctl status keepalived
● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disa>
   Active: inactive (dead) since Wed 2022-08-31 22:43:46 CST; 12s ago
  Process: 25066 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/>
 Main PID: 25067 (code=exited, status=0/SUCCESS)

Checking the MASTER

[root@node1 ~]# systemctl restart keepalived
[root@node1 ~]# systemctl status keepalived
● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disa>
   Active: active (running) since Wed 2022-08-31 22:44:26 CST; 11s ago
  Process: 21152 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/>
 Main PID: 21153 (keepalived)
    Tasks: 2 (limit: 11202)
   Memory: 1.8M
   CGroup: /system.slice/keepalived.service
           ├─21153 /usr/sbin/keepalived -D
           └─21154 /usr/sbin/keepalived -D
[root@node1 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:d7:b0:2d brd ff:ff:ff:ff:ff:ff
    inet 192.168.6.152/24 brd 192.168.6.255 scope global dynamic noprefixroute ens160
       valid_lft 1121sec preferred_lft 1121sec
    inet 192.168.6.250/32 scope global ens160    # the VIP is back once keepalived is running again
       valid_lft forever preferred_lft forever
    inet6 fe80::6e9:511e:b6a8:693c/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
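The failover tests above inspect `ip a` on the nodes. From a client, you can watch the same events by requesting the VIP once per second while keepalived is stopped and restarted. Replies should keep coming from the rs1/rs2 pages, possibly after a short gap. This is a sketch that assumes `curl` is installed on the client. `probe_vip` is a hypothetical name.

```shell
# Hypothetical client-side probe: request the VIP $count times, one second
# apart, printing a timestamp plus either the page body or FAIL.
probe_vip() {
    url=${1:-http://192.168.6.250/}
    count=${2:-30}
    i=0
    while [ "$i" -lt "$count" ]; do
        # -f: treat HTTP errors as failures; -s: silent; give up after 2 seconds.
        if body=$(curl -fs --max-time 2 "$url"); then
            printf '%s %s\n' "$(date +%T)" "$body"
        else
            printf '%s FAIL\n' "$(date +%T)"
        fi
        i=$((i + 1))
        if [ "$i" -lt "$count" ]; then
            sleep 1
        fi
    done
}

# Usage from a client while running `systemctl stop keepalived` on node1:
#   probe_vip http://192.168.6.250/ 30
```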

Having keepalived monitor the HAProxy load balancers

keepalived uses scripts to monitor the state of the HAProxy load balancer.

Writing the scripts on the MASTER

[root@node1 ~]# mkdir /scripts          # a dedicated directory for scripts
[root@node1 ~]# cd /scripts/
[root@node1 scripts]# vim check_n.sh    # write the health-check script
#!/bin/bash
haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)
if [ $haproxy_status -lt 1 ];then
    systemctl stop keepalived
fi
[root@node1 scripts]# chmod +x check_n.sh    # make it executable
[root@node1 scripts]# ll
total 4
-rwxr-xr-x 1 root root 142 Aug 30 23:28 check_n.sh
[root@node1 scripts]# vim notify.sh
#!/bin/bash
case "$1" in
  master)
        haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)
        if [ $haproxy_status -lt 1 ];then
            systemctl start haproxy
        fi
        sendmail
  ;;
  backup)
        haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)
        if [ $haproxy_status -gt 0 ];then
            systemctl stop haproxy
        fi
  ;;
  *)
        echo "Usage:$0 master|backup VIP"
  ;;
esac
[root@node1 scripts]# chmod +x notify.sh
[root@node1 scripts]# ll
total 8
-rwxr-xr-x 1 root root 142 Aug 30 23:28 check_n.sh
-rwxr-xr-x 1 root root 444 Aug 30 23:32 notify.sh
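As written, check_n.sh exits with status 0 whenever HAProxy is running. When HAProxy is not running, it stops keepalived outright. In neither case does keepalived see a failing script, so the `weight -20` in the MASTER's configuration never takes effect. Failover happens because keepalived on node1 disappears.

An alternative design, not the author's method, reports health through the exit status instead. Keepalived then lowers node1's priority, node2 takes the VIP, and keepalived on node1 keeps running. Because keepalived preempts by default, node1 reclaims the VIP once HAProxy is back. The sketch below assumes Linux `/proc/<pid>/comm` entries. `proc_alive` is a hypothetical name.

```shell
# Hypothetical alternative check: exit 0 if a process named exactly $1
# (default: haproxy) is running, non-zero otherwise.
proc_alive() {
    # /proc/<pid>/comm holds each process's command name (up to 15 chars).
    # -x whole line, -F fixed string, -s ignore processes that just exited,
    # -q stop at the first match.
    grep -qsxF -- "${1:-haproxy}" /proc/[0-9]*/comm
}

# A check script built on this would be just:
#   #!/bin/sh
#   grep -qsxF haproxy /proc/[0-9]*/comm
```

On the nodes themselves, `pgrep -x haproxy` from procps-ng is an equivalent one-liner.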

Writing the script on the BACKUP

[root@node2 ~]# mkdir /scripts    # a dedicated directory for scripts
[root@node2 ~]# cd /scripts/
[root@node2 scripts]# vim notify.sh
#!/bin/bash
case "$1" in
  master)
        haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)
        if [ $haproxy_status -lt 1 ];then
            systemctl start haproxy
        fi
        sendmail
  ;;
  backup)
        haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)
        if [ $haproxy_status -gt 0 ];then
            systemctl stop haproxy
        fi
  ;;
  *)
        echo "Usage:$0 master|backup VIP"
  ;;
esac
[root@node2 scripts]# chmod +x notify.sh    # make it executable
[root@node2 scripts]# ll
total 4
-rwxr-xr-x 1 root root 445 Aug 30 23:39 notify.sh

Adding the monitoring scripts to the keepalived configuration

Configuring the MASTER keepalived

[root@node1 scripts]# vim /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   router_id lb01
}

vrrp_script nginx_check {              # despite its name, this runs the HAProxy check
    script "/scripts/check_n.sh"
    interval 1                         # run the script every second
    weight -20                         # lower the priority by 20 while the script fails
}

vrrp_instance VI_1 {
    state MASTER
    interface ens160
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 023654
    }
    virtual_ipaddress {
        192.168.6.250
    }
    track_script {
        nginx_check
    }
    notify_master "/scripts/notify.sh master 192.168.6.250"    # run when this node becomes MASTER
}

virtual_server 192.168.6.250 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    persistence_timeout 50
    protocol TCP
    real_server 192.168.6.152 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
    real_server 192.168.6.153 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
[root@node1 scripts]# systemctl restart keepalived
[root@node1 scripts]# systemctl status keepalived
● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disa>
   Active: active (running) since Wed 2022-08-31 23:01:50 CST; 11s ago
  Process: 21227 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/>
 Main PID: 21228 (keepalived)
    Tasks: 3 (limit: 11202)
   Memory: 5.5M
   CGroup: /system.slice/keepalived.service
           ├─21228 /usr/sbin/keepalived -D
           ├─21229 /usr/sbin/keepalived -D
           └─21230 /usr/sbin/keepalived -D
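`weight -20` tells keepalived to subtract 20 from this instance's priority while the tracked script is failing, that is, exiting non-zero. If that happens, node1 advertises 100 - 20 = 80, which is below node2's 90, so node2 becomes MASTER. A positive weight works the other way: it is added while the script succeeds. The function below is a hypothetical model of that arithmetic, not keepalived code.

```shell
# Hypothetical model of keepalived's vrrp_script weight adjustment.
# Arguments: base priority, script weight, script exit status.
effective_priority() {
    base=$1
    weight=$2
    status=$3
    if [ "$weight" -lt 0 ] && [ "$status" -ne 0 ]; then
        # Negative weight: applied while the script is failing.
        echo $((base + weight))
    elif [ "$weight" -gt 0 ] && [ "$status" -eq 0 ]; then
        # Positive weight: applied while the script is succeeding.
        echo $((base + weight))
    else
        echo "$base"
    fi
}

node1=$(effective_priority 100 -20 1)   # haproxy down on node1 -> 80
node2=90                                # node2 has no track_script
if [ "$node1" -lt "$node2" ]; then
    echo "node2 becomes MASTER"
fi
```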

Configuring the BACKUP keepalived

The BACKUP does not need to check whether HAProxy is healthy. It starts HAProxy when it is promoted to MASTER and stops it when it is demoted to BACKUP.

[root@node2 scripts]# vim /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   router_id lb02
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens160
    virtual_router_id 51
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 023654
    }
    virtual_ipaddress {
        192.168.6.250
    }
    notify_master "/scripts/notify.sh master 192.168.6.250"    # run when this node becomes MASTER
    notify_backup "/scripts/notify.sh backup 192.168.6.250"    # run when this node becomes BACKUP
}

virtual_server 192.168.6.250 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    persistence_timeout 50
    protocol TCP
    real_server 192.168.6.152 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
    real_server 192.168.6.153 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
[root@node2 scripts]# systemctl restart keepalived
[root@node2 scripts]# systemctl status keepalived
● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disa>
   Active: active (running) since Wed 2022-08-31 23:08:00 CST; 8s ago
  Process: 25135 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/>
 Main PID: 25136 (keepalived)
    Tasks: 3 (limit: 11202)
   Memory: 4.0M
   CGroup: /system.slice/keepalived.service
           ├─25136 /usr/sbin/keepalived -D
           ├─25137 /usr/sbin/keepalived -D
           └─25138 /usr/sbin/keepalived -D

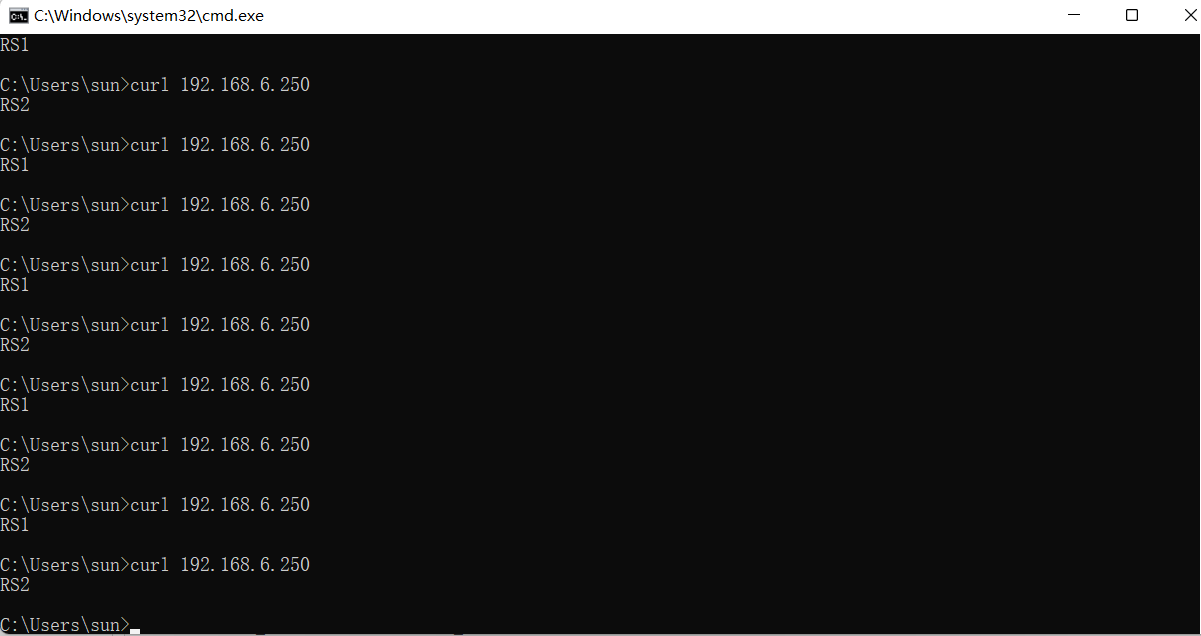

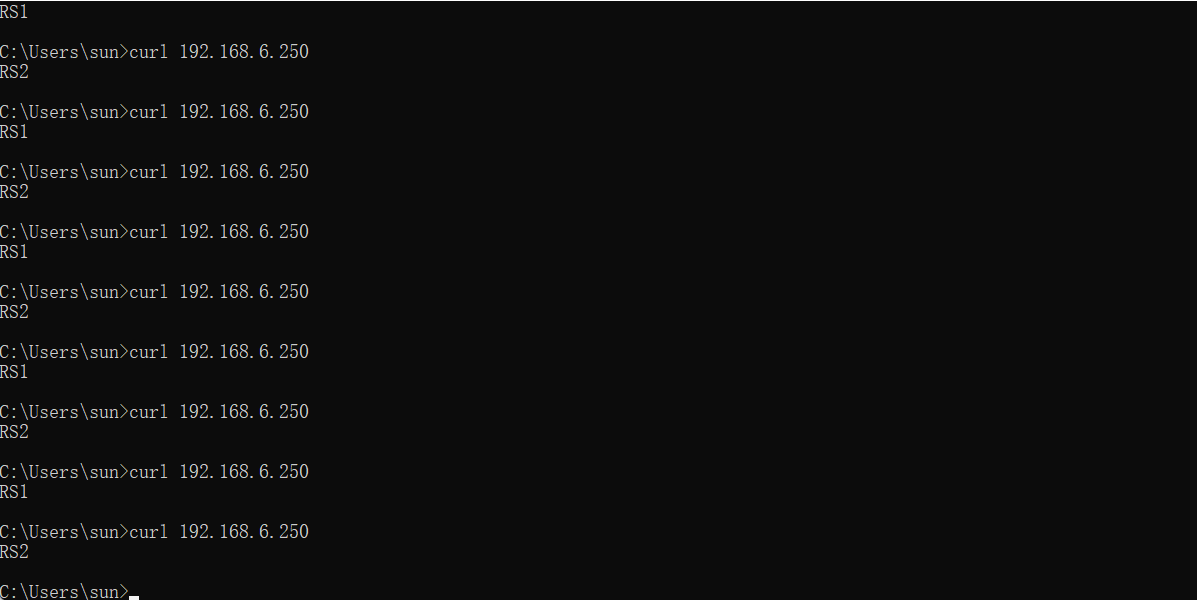

Simulating a failure of the MASTER node

[root@node1 scripts]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:d7:b0:2d brd ff:ff:ff:ff:ff:ff
    inet 192.168.6.152/24 brd 192.168.6.255 scope global dynamic noprefixroute ens160
       valid_lft 1440sec preferred_lft 1440sec
    inet 192.168.6.250/32 scope global ens160
       valid_lft forever preferred_lft forever
    inet6 fe80::6e9:511e:b6a8:693c/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
[root@node1 scripts]# ss -antl
State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port   Process
LISTEN   0        128      0.0.0.0:8189         0.0.0.0:*
LISTEN   0        128      0.0.0.0:80           0.0.0.0:*
LISTEN   0        128      0.0.0.0:22           0.0.0.0:*
LISTEN   0        128      [::]:22              [::]:*
[root@node1 scripts]# systemctl stop haproxy    # stop haproxy
[root@node1 scripts]# ss -antl                  # the ports are gone
State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port   Process
LISTEN   0        128      0.0.0.0:22           0.0.0.0:*
LISTEN   0        128      [::]:22              [::]:*
[root@node1 scripts]# ss -antl
State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port   Process
LISTEN   0        128      0.0.0.0:22           0.0.0.0:*
LISTEN   0        128      [::]:22              [::]:*
[root@node1 scripts]# systemctl status keepalived    # keepalived has stopped as well
● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disa>
   Active: inactive (dead) since Wed 2022-08-31 23:11:32 CST; 1min 35s ago
  Process: 21227 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/>
 Main PID: 21228 (code=exited, status=0/SUCCESS)

# Check on the BACKUP node
[root@node2 scripts]# ss -antl    # the ports are up: the BACKUP has taken over from the MASTER
State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port   Process
LISTEN   0        128      0.0.0.0:8189         0.0.0.0:*
LISTEN   0        128      0.0.0.0:80           0.0.0.0:*
LISTEN   0        128      0.0.0.0:22           0.0.0.0:*
LISTEN   0        128      [::]:22              [::]:*
[root@node2 scripts]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:42:d0:bc brd ff:ff:ff:ff:ff:ff
    inet 192.168.6.153/24 brd 192.168.6.255 scope global dynamic noprefixroute ens160
       valid_lft 1272sec preferred_lft 1272sec
    inet 192.168.6.250/32 scope global ens160    # the VIP has moved here
       valid_lft forever preferred_lft forever
    inet6 fe80::da77:9e16:6359:1029/64 scope link noprefixroute
       valid_lft forever preferred_lft forever

# Simulate the MASTER node being repaired
[root@node1 scripts]# systemctl restart haproxy keepalived    # start haproxy and keepalived
[root@node1 scripts]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:d7:b0:2d brd ff:ff:ff:ff:ff:ff
    inet 192.168.6.152/24 brd 192.168.6.255 scope global dynamic noprefixroute ens160
       valid_lft 973sec preferred_lft 973sec
    inet 192.168.6.250/32 scope global ens160    # the VIP is back
       valid_lft forever preferred_lft forever
    inet6 fe80::6e9:511e:b6a8:693c/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
[root@node1 scripts]# ss -antl
State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port   Process
LISTEN   0        128      0.0.0.0:8189         0.0.0.0:*
LISTEN   0        128      0.0.0.0:80           0.0.0.0:*
LISTEN   0        128      0.0.0.0:22           0.0.0.0:*
LISTEN   0        128      [::]:22              [::]:*

# The BACKUP node releases the VIP and its resources

[root@node2 scripts]# ip a    # the VIP is gone
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:0c:29:42:d0:bc brd ff:ff:ff:ff:ff:ff
    inet 192.168.6.153/24 brd 192.168.6.255 scope global dynamic noprefixroute ens160
       valid_lft 956sec preferred_lft 956sec
    inet6 fe80::da77:9e16:6359:1029/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
[root@node2 scripts]# systemctl status haproxy    # haproxy was stopped automatically
● haproxy.service - HAProxy Load Balancer
   Loaded: loaded (/usr/lib/systemd/system/haproxy.service; disabled; vendor preset: disabl>
   Active: inactive (dead)