15、kubernetes之 高级调度方式

调度方式

节点选择器:nodeSelector、nodeName

节点亲和调度:nodeAffinity

Taint的effect定义对pod排斥效果

[root@k8s-master pki]# kubectl explain pods.spec.nodeSelector

[root@k8s-master yas]# mkdir schedule

c[root@k8s-master yas]# cd schedule/

[root@k8s-master schedule]# cat pod-demo.yaml

[root@k8s-master schedule]# cat pod-demo.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-demo

namespace: default

labels:

app: myapp

tier: frontend

spec:

containers:

- image: ikubernetes/myapp:v1

name: myapp

nodeSelector:

disktype: ssd

[root@k8s-master schedule]# kubectl create -f pod-demo.yaml

pod/pod-demo created

[root@k8s-master schedule]# kubectl get pod/pod-demo

NAME READY STATUS RESTARTS AGE

pod-demo 0/1 Pending 0 16s

[root@k8s-master schedule]# kubectl describe pod/pod-demo

...

Node-Selectors: disktype=ssd

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 26s (x2 over 26s) default-scheduler 0/3 nodes are available: 3 node(s) didn't match node selector.

如上,发现没有匹配到相应的标签选择器。,不满足选择器,pod置于pending状态中。

现在绑定标签选择器

nodeselect条件不满足,预选过程就不通过。可以手动给node打上标签,即可运行。

[root@k8s-master schedule]# kubectl get no -l disktype=ssd

No resources found.

[root@k8s-master schedule]# kubectl label node k8s-node1 disktype=ssd

node/k8s-node1 labeled

[root@k8s-master schedule]# kubectl get no -l disktype=ssd

NAME STATUS ROLES AGE VERSION

k8s-node1 Ready <none> 6d22h v1.14.3

[root@k8s-master schedule]# kubectl get pod/pod-demo

NAME READY STATUS RESTARTS AGE

pod-demo 1/1 Running 0 3m46s

如下,匹配到相应的标签选择器,pod正常运行。

配置node节点亲和性:硬亲和性require,软亲和性preference

配置硬亲和性操作

[root@master schedule]# vim pod-affinity-demo.yaml

[root@k8s-master schedule]# cat pod-affinity-demo.yaml

[root@k8s-master schedule]# cat pod-affinity-demo.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-affinity-demo

namespace: default

labels:

app: myapp

tier: frontend

spec:

containers:

- image: ikubernetes/myapp:v1

name: myapp

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: zone

operator: In

values:

- foo

- bar

[root@k8s-master schedule]# kubectl create -f pod-affinity-demo.yaml

[root@k8s-master schedule]# kubectl get pod/pod-affinity-demo --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod-affinity-demo 0/1 Pending 0 54s app=myapp,tier=frontend

[root@k8s-master schedule]# kubectl describe pod/pod-affinity-demo # 硬亲和性,没有匹配到标签、、、

软亲和性

修改一下,调整为软亲和性,系统会勉为其难的选择node节点部署pod。

[root@k8s-master schedule]# cat pod-affinity-demo-2.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-affinity-demo2

namespace: default

labels:

app: myapp

tier: frontend

spec:

containers:

- image: ikubernetes/myapp:v1

name: myapp

affinity:

nodeAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- preference:

matchExpressions:

- key: zone

operator: In

values:

- foo

- bar

weight: 60

[root@k8s-master schedule]# kubectl create -f pod-affinity-demo-2.yaml

pod/pod-affinity-demo2 created

[root@k8s-master schedule]# kubectl get pod/pod-affinity-demo2 --show-labels

NAME READY STATUS RESTARTS AGE LABELS

pod-affinity-demo2 1/1 Running 0 29s app=myapp,tier=frontend

如上,自行完成了node亲和度调度,软亲和度下,不匹配也是可以运行的。

Pod自身亲和度调度

pod一样也有硬亲和性和软亲和性,通过ndoe亲和性来定义pod亲和性。

Pod亲和性不需要在同一个node,在相近位置就行。

以node名称为标准,不同节点在不同位置。如下,新增pod在上面两个node有亲和性。

第1个节点,那么扩容的2-4号节点,是否能运行与第一个pod一致。

机架rack,排数row

下面以2个pod作为演示验证亲和性

[root@k8s-master schedule]# cat pod-required-affinity-demo.yaml

apiVersion: v1

kind: Pod

metadata:

name: pod-first

labels:

app: myapp

tier: frontend

spec:

containers:

- image: ikubernetes/myapp:v1

name: myapp

---

apiVersion: v1

kind: Pod

metadata:

name: pod-second

labels:

app: db

tier: db

spec:

containers:

- name: busybox

image: busybox:latest

imagePullPolicy: IfNotPresent

command: ["/bin/sh","-c","sleep 3600"]

affinity:

podAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- {key: app, operator: In, values: ["myapp"]}

topologyKey: kubernetes.io/hostname

[root@k8s-master schedule]# kubectl create -f pod-required-affinity-demo.yaml

pod/pod-first created

pod/pod-second created

[root@k8s-master ~]# kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-first 1/1 Running 0 6s 10.244.1.41 k8s-node1 <none> <none>

pod-second 1/1 Running 0 6s 10.244.1.40 k8s-node1 <none> <none>

[root@k8s-master ~]# kubectl describe pods pod-second

其中一个pod在某个节点上,另一个pod相应启动也会在一台机器上。正常来说,k8s默认优选策略是会把pod分布在资源最先的节点上,但是这个做了亲和性,两个必须在一起,要切也得一起切。

反亲和性,

两者一定不能在同一个节点上,分布在不同节点。如下示例。

调整上面示例,为反亲和性

podAffinity--》podAntiAffinity,yaml文件修改。

[root@k8s-master schedule]# kubectl create -f pod-required-anti-affinity-demo.yaml

[root@k8s-master ~]# kubectl get pods -owide # 验证ok,分布在不同地方。

来玩高级一点,将topologyKey调整为zone

现在将两个node标记为一样的。看下pod是否正常启动。

[root@k8s-master schedule]# kubectl label nodes k8s-node1 zone=foo

[root@k8s-master schedule]# kubectl label nodes k8s-node2 zone=foo

[root@k8s-master schedule]# tail -2 pod-required-anti-affinity-demo.yaml

- {key: app, operator: In, values: ["myapp"]}

topologyKey: zone

[root@k8s-master schedule]# kubectl delete -f pod-required-anti-affinity-demo.yaml

[root@k8s-master schedule]# kubectl create -f pod-required-anti-affinity-demo.yaml

[root@k8s-master ~]# kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod-first 1/1 Running 0 11s 10.244.1.44 k8s-node1 <none> <none>

pod-second 0/1 Pending 0 10s <none> <none> <none> <none>

[root@k8s-master ~]# kubectl describe pod pod-second

如上,第二个节点由于强反亲和性,一直在pending状态,达到了预期效果。此时需要降低亲和力,第二个pod才可以跑起来。

达到了预期效果。

node-taint污点,pod-tolerations 容忍度

最后一个:污点调度,节点属性,用在节点之上。前面的亲和性都是基于pod执行的,节点都是被动选择的。

给node选择主动权,可以挑选和拒绝pod。

标签、注解所有对象都可以使用;污点通常用在节点之上,也是一种键值属性,拒绝不能容忍的pod。

taints:在nodes节点上定义污点,其effect对pod排斥效果有三种类型,

NoSchedule:仅影响调度过程,对现存的pod对象不影响

NoExecute:不仅影响调度,也影响现存的pod对象;不满足Pod对象,将被驱逐。

PreferNoSchedule:尽量不影响,也能接受

tolerations:容忍度,在pod上定义容忍哪些污点

[root@k8s-master ~]# kubectl explain pod.spec.tolerations

[root@k8s-master ~]# kubectl explain nodes.spec.taints

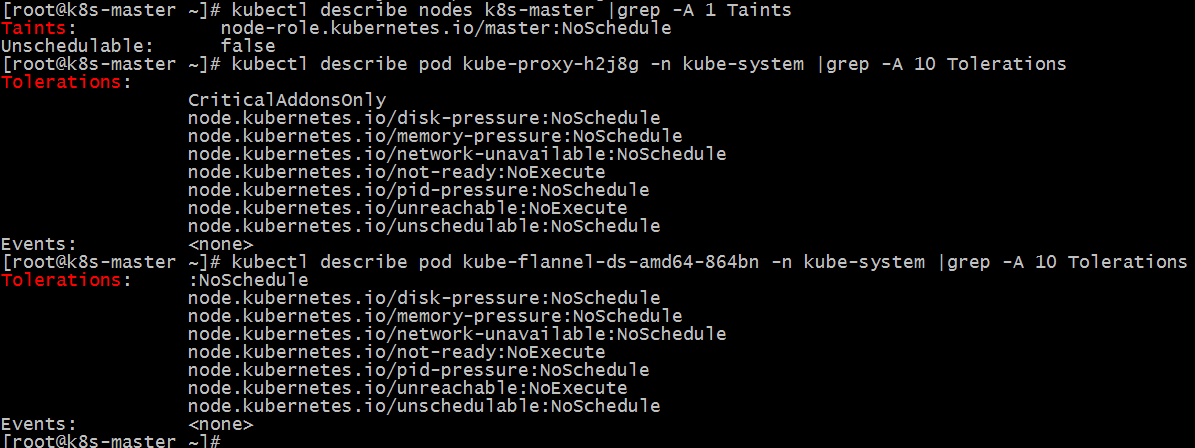

默认maser有一个污点。后期创建的pod从来都没有调度到master节点上,因为自行创建的pod没有定义容忍度,默认不能容忍这一个污点。Master就是靠这种方式去拒绝常规pod上master节点。如下,系统层面的node污点,和pod容忍度

[root@k8s-master ~]# kubectl get node -n kube-system

[root@k8s-master ~]# kubectl get pod -n kube-system

[root@k8s-master ~]# kubectl describe nodes k8s-master |grep -A 1 Taints

[root@k8s-master ~]# kubectl describe pod kube-proxy-h2j8g -n kube-system |grep -A 10 Tolerations

[root@k8s-master ~]# kubectl describe pod kube-flannel-ds-amd64-864bn -n kube-system |grep -A 10 Tolerations

如上,有taints污点和Tolerations容忍度设置。

那么如何管理节点的污点

示例,把node1标记为生产专用的,其他的为测试

[root@k8s-master ~]# kubectl taint node k8s-node1 node-type=production:NoSchedule # 设置污点

node/k8s-node1 tainted

[root@k8s-master schedule]# cat deploy-demo.yaml

root@k8s-master schedule]# cat deploy-demo.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: myapp-deploy

namespace: default

spec:

replicas: 3

selector:

matchLabels:

app: myapp

release: canary

template:

metadata:

name: myapp

labels:

app: myapp

release: canary

spec:

containers:

- name: myapp

image: ikubernetes/myapp:v2

ports:

- name: http

containerPort: 80

[root@k8s-master schedule]# kubectl create -f deploy-demo.yaml 如上,没有设置pod污点容忍度,

deployment.apps/myapp-deploy created

[root@k8s-master ~]# kubectl get pods -owide |grep myapp-deploy # pod全部票到node2上面了,因他们不能容忍节点上面的污点,没办法运行到node1节点1上面。

myapp-deploy-6b59768b8-7lp47 1/1 Running 0 59s 10.244.2.69 k8s-node2 <none> <none>

myapp-deploy-6b59768b8-9f6rr 1/1 Running 0 59s 10.244.2.67 k8s-node2 <none> <none>

myapp-deploy-6b59768b8-pcl2d 1/1 Running 0 59s 10.244.2.68 k8s-node2 <none> <none>

此时pod全部跑到node2

不妨继续操作,把node2污点标记为dev,看下会不会被驱逐

[root@k8s-master ~]# kubectl taint node k8s-node2 node-type=dev:NoExecute node/k8s-node2 tainted [root@k8s-master ~]# kubectl get pods -owide |grep myapp-deploy myapp-deploy-6b59768b8-gg966 0/1 Pending 0 20s <none> <none> <none> <none> myapp-deploy-6b59768b8-kmwrd 0/1 Pending 0 20s <none> <none> <none> <none> myapp-deploy-6b59768b8-mf2nq 0/1 Pending 0 21s <none> <none> <none> <none>

kubectl describe pods myapp-deploy-6b59768b8-gg966查看提示:节点不能容忍污点。

Warning FailedScheduling 10s (x16 over 28s) default-scheduler 0/3 nodes are available: 3 node(s) had taints that the pod didn't tolerate.

此时,pod全部被干掉了,一直处于pending状态。

pod添加Tolerations恢复

这个时候需要加Tolerations可以避免污点的限制。

配置deployment容忍度配置,重启生成pod服务。

Equal:精确容忍到哪个污点;

Exists:存在的污点服务,都容忍。

[root@k8s-master schedule]# grep -A 5 tolerations deploy-demo.yaml # 能精确容忍第一个污点,pod正常在node1上运行了。

[root@k8s-master schedule]# grep -A 5 tolerations deploy-demo.yaml # 能精确容忍第一个污点,pod正常在node1上运行了。 tolerations: - key: "node-type" operator: "Equal" value: "production" effect: "NoSchedule"

[root@k8s-master schedule]# kubectl apply -f deploy-demo.yaml

[root@k8s-master schedule]# kubectl get pods -owide |grep myapp-deploy

myapp-deploy-f9f7f6969-ng6jl 1/1 Running 0 19s 10.244.1.46 k8s-node1 <none> <none>

myapp-deploy-f9f7f6969-vpxr6 1/1 Running 0 22s 10.244.1.45 k8s-node1 <none> <none>

myapp-deploy-f9f7f6969-zjsmd 1/1 Running 0 17s 10.244.1.47 k8s-node1 <none> <none>

不妨调整pod能够容忍所有的污点,

[root@k8s-master schedule]# grep -A 5 tolerations deploy-demo.yaml

tolerations:

- key: "node-type"

operator: "Exists"

value: ""

effect: ""

[root@k8s-master schedule]# kubectl apply -f deploy-demo.yaml

deployment.apps/myapp-deploy configured

[root@k8s-master schedule]# kubectl get pods -owide |grep myapp-deploy

myapp-deploy-5db9b6869b-kp422 1/1 Running 0 24s 10.244.1.48 k8s-node1 <none> <none>

myapp-deploy-5db9b6869b-vl42v 1/1 Running 0 21s 10.244.2.71 k8s-node2 <none> <none>

myapp-deploy-5db9b6869b-zz8zc 1/1 Running 0 27s 10.244.2.70 k8s-node2 <none> <none>

如上,容忍所有的污点后,pod能够在优选策略下,自动分散pod的位置。

自此,完成了污点调度使用。

标签怎么去除

$ kubectl taint node node1 node-type-

[root@k8s-master ~]# kubectl describe nodes k8s-node2 |grep -A 0 Taints

Taints: node-type=dev:NoExecute

[root@k8s-master ~]# kubectl taint node k8s-node2 node-type-

node/k8s-node2 untainted

[root@k8s-master ~]# kubectl describe nodes k8s-node2 |grep -A 0 Taints

Taints: <none>

浙公网安备 33010602011771号

浙公网安备 33010602011771号