5. Kubernetes: Pod Controllers

Part 5: Pod Controllers

1. Pod review

Top-level fields: apiVersion, kind, metadata, spec, status (read-only)

spec:

    containers
    nodeSelector
    nodeName
    restartPolicy: Always, Never, OnFailure

containers:

    name
    image
    imagePullPolicy: Always, Never, IfNotPresent
    ports:
        name
        containerPort
    livenessProbe
    readinessProbe
    lifecycle

Handlers (used by probes and lifecycle hooks):

    ExecAction: exec
    TCPSocketAction: tcpSocket
    HTTPGetAction: httpGet
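To make the field outline above concrete, here is a minimal sketch that assembles a Pod manifest as a Python dictionary and serializes it to JSON, which kubectl accepts as well as YAML. The Pod name, labels, and probe settings are illustrative assumptions, not values from this article.

```python
import json

# Hypothetical values: the Pod name, labels, and probe targets are examples only.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "myapp-pod", "labels": {"app": "myapp"}},
    "spec": {
        "restartPolicy": "Always",  # Always | Never | OnFailure
        "containers": [
            {
                "name": "myapp",
                "image": "ikubernetes/myapp:v1",
                "imagePullPolicy": "IfNotPresent",  # Always | Never | IfNotPresent
                "ports": [{"name": "http", "containerPort": 80}],
                # Each probe uses exactly one handler: exec, tcpSocket, or httpGet.
                "livenessProbe": {"httpGet": {"path": "/", "port": "http"}},
                "readinessProbe": {"tcpSocket": {"port": 80}},
            }
        ],
    },
}

# status is read-only and filled in by the cluster, so it is not part of the manifest.
manifest_json = json.dumps(pod, indent=2)
print(manifest_json)
```

Saving the printed JSON to a file and passing it to kubectl create -f should work the same way as a YAML manifest.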

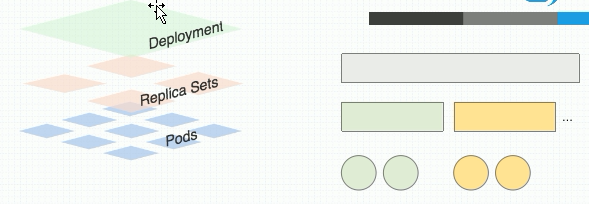

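2. Pod controllers

Overview of the controllers

Pod controllers: ReplicationController, ReplicaSet, Deployment, DaemonSet, Job, CronJob, StatefulSet.

Related extension mechanisms:

TPR: Third Party Resources, 1.2+, deprecated in 1.7.

CRD: Custom Resource Definitions, 1.8+.

Operator.

Helm: slow to update and not widely used yet.

A standalone Pod that is deleted by accident is not recreated; a Pod managed by a controller is.

ReplicationController (RC): took on too many responsibilities.

Job: a one-off task; the controller makes sure the task runs until it exits normally.

CronJob: runs tasks on a schedule.

Deployment: only suitable for stateless applications. It cares about the group rather than the individual: like a flock of chickens, when one is eaten you simply buy a new chick.

StatefulSet: cares about each individual, like a pet husky you are emotionally invested in. Typical examples are redis-cluster and mysql. It is only a wrapper: the application-specific logic still has to be scripted by hand. Running stateful applications on k8s is extremely demanding, because every application needs its own treatment, and it asks a lot of the operations team.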
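The reason a controller-managed Pod comes back after deletion is that the controller keeps comparing the desired replica count with what is actually running. The following is a toy sketch of that comparison, not the real controller code; the function name reconcile is an assumption made for illustration.

```python
def reconcile(desired_replicas, running_pods):
    """Return (to_create, to_delete) counts needed to reach the desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        return diff, 0
    return 0, -diff


# The controller wants 2 replicas, but one Pod was deleted by hand: create 1.
print(reconcile(2, ["myapp-gwqpd"]))
# Three Pods are running but only 2 are wanted: delete 1.
print(reconcile(2, ["pod-a", "pod-b", "pod-c"]))
```

A standalone Pod has no such loop watching it, which is why nothing recreates it.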

3. Controllers in practice

ReplicaSet demo

    [root@k8s-master ~]# kubectl get deploy,rs,pod
    No resources found.
    [root@k8s-master ~]# cat yas/rs-demo.yaml
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: myapp
      namespace: default
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: myapp
          release: canary
      template:
        metadata:
          name: myapp-pod
          labels:
            app: myapp
            release: canary
            environment: qa
        spec:
          containers:
          - name: myapp-container
            image: ikubernetes/myapp:v1
            ports:
            - name: http
              containerPort: 80
    [root@k8s-master ~]# kubectl create -f yas/rs-demo.yaml
    replicaset.apps/myapp created
    [root@k8s-master ~]# kubectl get deploy,rs,pod
    NAME                          DESIRED   CURRENT   READY   AGE
    replicaset.extensions/myapp   2         2         2       3m10s

    NAME              READY   STATUS    RESTARTS   AGE
    pod/myapp-gwqpd   1/1     Running   0          3m9s
    pod/myapp-l9lvz   1/1     Running   0          3m9s
    [root@k8s-master ~]# kubectl describe pods myapp-gwqpd   # show detailed information about the Pod
    [root@k8s-master ~]# kubectl delete pod myapp-l9lvz      # delete the Pod directly; the controller recreates it
    pod "myapp-l9lvz" deleted
    [root@k8s-master ~]# kubectl get pod -owide

Change the number of Pods from two to three.

    [root@k8s-master ~]# kubectl edit rs myapp   # set replicas: 3; the change takes effect once you save and exit
    [root@k8s-master ~]# kubectl get rs
    NAME    DESIRED   CURRENT   READY   AGE
    myapp   3         3         2       18h
    [root@k8s-master ~]# kubectl get pods
    NAME          READY   STATUS    RESTARTS   AGE
    myapp-6zr5r   1/1     Running   1          18h
    myapp-gwqpd   1/1     Running   1          18h
    myapp-h76x5   1/1     Running   0          4s
    [root@k8s-master ~]# kubectl get rs -owide
    NAME    DESIRED   CURRENT   READY   AGE   CONTAINERS        IMAGES                 SELECTOR
    myapp   3         3         3       18h   myapp-container   ikubernetes/myapp:v1   app=myapp,release=canary
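The SELECTOR column shows only app and release, while the Pod template carries one more label. That works because matchLabels only requires its own key/value pairs to be present on the Pod. A small sketch of that rule, with a hypothetical helper name matches:

```python
def matches(match_labels, pod_labels):
    """True if every selector key/value pair is present in the Pod's labels."""
    return all(pod_labels.get(key) == value for key, value in match_labels.items())


selector = {"app": "myapp", "release": "canary"}

# Extra labels on the Pod do not prevent a match.
print(matches(selector, {"app": "myapp", "release": "canary", "environment": "qa"}))
# A different value for a selected key does.
print(matches(selector, {"app": "myapp", "release": "stable"}))
```

This is also why an unrelated Pod that happens to carry both labels would be counted by the ReplicaSet.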

Upgrading Pods (note: at times only the v1, v3 and v4 tags of the ikubernetes/myapp image are available)

Editing the manifest does not touch running Pods. A ReplicaSet uses the new template only for Pods it creates afterwards, so existing Pods are upgraded only when they are recreated.

    $ vim yas/rs-demo.yaml   # upgrade to v2: set image: ikubernetes/myapp:v2
    $ kubectl apply -f yas/rs-demo.yaml

    [root@k8s-master ~]# kubectl get pods -owide
    NAME          READY   STATUS    RESTARTS   AGE     IP             NODE        NOMINATED NODE   READINESS GATES
    myapp-cklcv   1/1     Running   0          4m58s   10.244.1.223   k8s-node1   <none>           <none>
    myapp-z2lsn   1/1     Running   0          4m58s   10.244.2.19    k8s-node2   <none>           <none>
    [root@k8s-master ~]# curl 10.244.1.223
    Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
    [root@k8s-master ~]# curl 10.244.2.19
    Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
    [root@k8s-master ~]# kubectl delete pod myapp-cklcv   # update the manifest first, then delete the Pods one by one; from the ReplicaSet's point of view nothing has changed
    pod "myapp-cklcv" deleted
    [root@k8s-master ~]# kubectl get pods -owide
    NAME          READY   STATUS    RESTARTS   AGE     IP             NODE        NOMINATED NODE   READINESS GATES
    myapp-j7bjb   1/1     Running   0          20s     10.244.1.224   k8s-node1   <none>           <none>
    myapp-z2lsn   1/1     Running   0          6m18s   10.244.2.19    k8s-node2   <none>           <none>
    [root@k8s-master ~]# curl 10.244.1.224
    Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
    [root@k8s-master ~]# curl 10.244.2.19

Note: do not delete all the Pods at once, because that would disrupt user traffic (the Pods have to be deleted by hand, one at a time).
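The behaviour seen in this session can be reproduced with a toy model in which a Pod copies the template image only at creation time. The class ReplicaSetModel and its Pod names are assumptions for illustration; it is a simplification, not how the real controller is implemented.

```python
import itertools


class ReplicaSetModel:
    """Toy model: Pods copy the template image at creation time only."""

    def __init__(self, replicas, image):
        self.replicas = replicas
        self.template_image = image
        self._ids = itertools.count(1)
        self.pods = {}
        self.reconcile()

    def reconcile(self):
        # Create Pods from the current template until the replica count is met.
        while len(self.pods) < self.replicas:
            self.pods[f"myapp-{next(self._ids)}"] = self.template_image

    def delete_pod(self, name):
        # Deleting a Pod triggers a reconcile, which creates a replacement.
        del self.pods[name]
        self.reconcile()


rs = ReplicaSetModel(replicas=2, image="ikubernetes/myapp:v1")
rs.template_image = "ikubernetes/myapp:v2"  # like editing the manifest and applying it
images_after_apply = sorted(rs.pods.values())
rs.delete_pod("myapp-1")                    # manual delete, one Pod at a time
images_after_delete = sorted(rs.pods.values())

print(images_after_apply)   # both Pods still run v1
print(images_after_delete)  # the replacement Pod runs v2
```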

Deploymen控制器

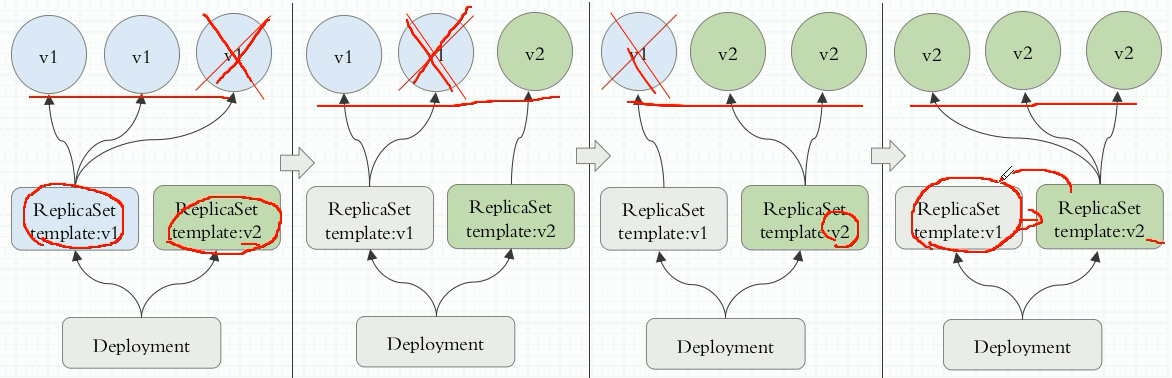

Deployment实现自动升级,明显优于ReplicaSet,建立在RS之上。如下,

    [root@k8s-master yas]# cat deploy-demo.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: myapp-deploy
      namespace: default
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: myapp
          release: canary
      template:
        metadata:
          name: myapp
          labels:
            app: myapp
            release: canary
        spec:
          containers:
          - name: myapp
            image: ikubernetes/myapp:v1
            ports:
            - name: http
              containerPort: 80
    [root@k8s-master yas]# kubectl create -f deploy-demo.yaml
    deployment.apps/myapp-deploy created
    [root@k8s-master ~]# kubectl get deploy
    NAME           READY   UP-TO-DATE   AVAILABLE   AGE
    myapp-deploy   2/2     2            2           11s
    [root@k8s-master ~]# kubectl get rs
    NAME                     DESIRED   CURRENT   READY   AGE
    myapp-deploy-9699554f5   2         2         2       14s
    [root@k8s-master ~]# kubectl get pods
    NAME                           READY   STATUS    RESTARTS   AGE
    myapp-deploy-9699554f5-2phbb   1/1     Running   0          45s
    myapp-deploy-9699554f5-xtnds   1/1     Running   0          45s

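Now change the image version in the same way: image: ikubernetes/myapp:v2

apply creates or updates a resource; create can only create one.

[root@k8s-master yas]# vim deploy-demo.yaml

[root@k8s-master yas]# kubectl apply -f deploy-demo.yaml

Once the change is applied, the Deployment rolls all Pods over to the new image automatically.

Watch the update as it happens with -w: kubectl get pods -w

Query the revision history: kubectl rollout history deploy/myapp-deploy

Changing the replica count and other settings with patch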

kubectl patch deployment myapp-deploy -p '{"spec":{"replicas":5}}' # change the replica count

kubectl patch deployment myapp-deploy -p '{"spec":{"strategy":{"rollingUpdate":{"maxSurge":0 ,"maxUnavailable":1}}}}'
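Hand-written JSON patch bodies are easy to mis-quote in the shell. One way to avoid that is to generate the command line, as in this sketch; patch_command is a hypothetical helper, and only the standard-library json and shlex modules are used.

```python
import json
import shlex


def patch_command(deployment, patch):
    """Build a kubectl patch command line with a safely quoted JSON body."""
    body = json.dumps(patch, separators=(",", ":"))
    return f"kubectl patch deployment {deployment} -p {shlex.quote(body)}"


replicas_cmd = patch_command("myapp-deploy", {"spec": {"replicas": 5}})
strategy_cmd = patch_command(
    "myapp-deploy",
    {"spec": {"strategy": {"rollingUpdate": {"maxSurge": 0, "maxUnavailable": 1}}}},
)
print(replicas_cmd)
print(strategy_cmd)
```

Building the body from a dictionary guarantees balanced braces and quotes, and shlex.quote wraps it so the shell passes it through unchanged.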

[root@k8s-master yas]# kubectl describe deploy myapp-deploy

...

MinReadySeconds: 0

RollingUpdateStrategy: 1 max unavailable, 0 max surge

Pod Template:

Labels: app=myapp

release=can

...
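What "1 max unavailable, 0 max surge" means in Pod counts can be worked out with a few lines of arithmetic. The sketch below is a simplified model with hypothetical function names; it assumes the documented rounding rules for percentages (maxSurge rounds up, maxUnavailable rounds down).

```python
def resolve(value, replicas, round_up):
    """Turn an int or a percentage string such as "25%" into an absolute Pod count."""
    if isinstance(value, int):
        return value
    scaled = replicas * int(value.rstrip("%"))
    # Integer ceiling or floor of scaled / 100, avoiding float rounding.
    return -(-scaled // 100) if round_up else scaled // 100


def rollout_bounds(replicas, max_surge, max_unavailable):
    """Return (minimum available Pods, maximum total Pods) during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return replicas - unavailable, replicas + surge


# Settings patched above: 5 replicas, maxSurge 0, maxUnavailable 1.
print(rollout_bounds(5, 0, 1))
# The default strategy values of 25% each, for comparison.
print(rollout_bounds(5, "25%", "25%"))
```

With maxSurge 0 the Deployment never runs more than 5 Pods, so it has to take one old Pod down before each new one starts.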

Canary release: Pods are replaced one at a time, and pausing the rollout lets you control each step.

    [root@k8s-master ~]# kubectl rollout
      history     show the rollout history
      pause       mark the given resource as paused
      resume      resume a paused resource
      status      show the status of the rollout
      undo        undo a previous rollout
    [root@k8s-master yas]# kubectl set image deployment/myapp-deploy myapp=ikubernetes/myapp:v3 && kubectl rollout pause deployment myapp-deploy
    deployment.extensions/myapp-deploy image updated
    deployment.extensions/myapp-deploy paused
    [root@k8s-master ~]# kubectl rollout status deploy/myapp-deploy   # monitor 1
    [root@k8s-master ~]# kubectl get pods -l app -w                   # monitor 2
    [root@k8s-master ~]# kubectl rollout resume deployment/myapp-deploy && kubectl rollout pause deployment myapp-deploy   # resume, then pause again
    deployment.extensions/myapp-deploy resumed
    deployment.extensions/myapp-deploy paused

The rollout continues with the second Pod and then pauses again.

Rollback:

$ kubectl rollout history deploy/myapp-deploy # list the revision history

$ kubectl rollout undo deploy/myapp-deploy --to-revision=1

error: you cannot rollback a paused deployment; resume it first with 'kubectl rollout resume deployment/myapp-deploy' and try again

The error says the Deployment is still paused from the previous step and cannot be rolled back. Resume it first, then roll back:

    $ kubectl rollout resume deployment/myapp-deploy
    deployment.extensions/myapp-deploy resumed
    $ kubectl rollout undo deploy/myapp-deploy --to-revision=1
    deployment.extensions/myapp-deploy rolled back
    $ kubectl get pods -owide
    $ kubectl get rs -owide
    $ kubectl get deploy -owide
    $ curl 10.244.1.232   # verify the version served by the Pod
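After a rollback the revision list looks different from what one might expect: the revision rolled back to is recorded again under a new, highest number and its old entry disappears. The following is a simplified model of that bookkeeping; undo_to_revision and the three-entry history are assumptions for illustration.

```python
def undo_to_revision(history, revision):
    """Roll back in a toy revision history mapping revision number -> image."""
    if revision not in history:
        raise KeyError(f"revision {revision} not found")
    if revision == max(history):
        # Already the current revision; nothing to do.
        return dict(history)
    # Drop the old entry and re-record its template as the newest revision.
    new_history = {rev: image for rev, image in history.items() if rev != revision}
    new_history[max(history) + 1] = history[revision]
    return new_history


history = {
    1: "ikubernetes/myapp:v1",
    2: "ikubernetes/myapp:v2",
    3: "ikubernetes/myapp:v3",
}
after = undo_to_revision(history, 1)
print(after)  # revision 1 is gone; revision 4 carries the v1 image
```

Running kubectl rollout history again after the undo is the way to confirm the actual numbering on a real cluster.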

DaemonSet introduction

See the image repository on Docker Hub: https://hub.docker.com/r/ikubernetes/filebeat/tags

$ docker pull ikubernetes/filebeat:5.6.5-alpine

$ docker images

$ docker image inspect ikubernetes/filebeat:5.6.5-alpine

    $ cat ds-demo.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: redis
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: redis
          release: logstor
      template:
        metadata:
          labels:
            app: redis
            release: logstor
        spec:
          containers:
          - name: redis
            image: redis:4.0-alpine
            ports:
            - name: redis
              containerPort: 6379
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: filebeat-ds
      namespace: default
    spec:
      selector:
        matchLabels:
          app: filebeat
          release: stable
      template:
        metadata:
          labels:
            app: filebeat
            release: stable
        spec:
          containers:
          - name: filebeat
            image: ikubernetes/filebeat:5.6.5-alpine
            env:
            - name: REDIS_HOST
              value: redis.default.svc.cluster.local
            - name: REDIS_LOG_LEVEL
              value: info
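Unlike the Deployment, the DaemonSet in this manifest has no replicas field: it runs one Pod on each eligible node. A toy sketch of that placement rule follows; daemonset_pods and the node labels are hypothetical, and taints and tolerations are deliberately ignored.

```python
def daemonset_pods(nodes, node_selector=None):
    """Return the nodes that get one DaemonSet Pod each (toy model).

    nodes maps node name -> labels. Taints and tolerations are ignored here.
    """
    node_selector = node_selector or {}
    return sorted(
        name
        for name, labels in nodes.items()
        if all(labels.get(key) == value for key, value in node_selector.items())
    )


nodes = {
    "k8s-node1": {"disk": "ssd"},  # hypothetical label
    "k8s-node2": {},
}
print(daemonset_pods(nodes))                   # one Pod per node
print(daemonset_pods(nodes, {"disk": "ssd"}))  # restricted by a node selector
```

Adding a node to the cluster grows the list by one, which is the behaviour that makes a DaemonSet a good fit for per-node agents such as log shippers.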

$ kubectl create -f ds-demo.yaml

$ kubectl get pods

$ kubectl expose deploy redis --port=6379

$ kubectl get deploy redis

$ kubectl get svc redis

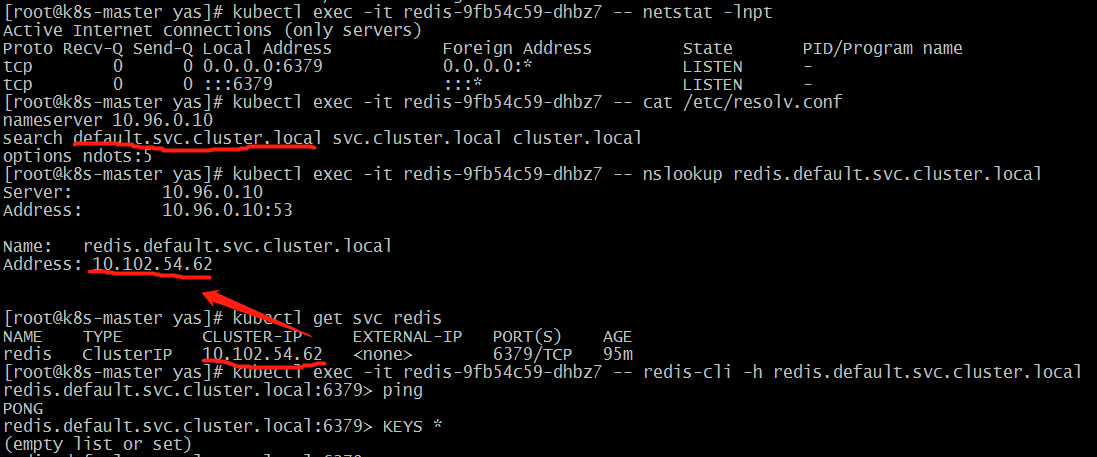

$ kubectl exec -it redis-9fb54c59-dhbz7 -- /bin/sh

$ kubectl exec -it redis-9fb54c59-dhbz7 -- netstat -lnpt

$ kubectl exec -it redis-9fb54c59-dhbz7 -- cat /etc/resolv.conf

$ kubectl exec -it redis-9fb54c59-dhbz7 -- nslookup redis.default.svc.cluster.local # the resolved IP matches the one reported by kubectl get svc redis

$ kubectl exec -it redis-9fb54c59-dhbz7 -- redis-cli -h redis.default.svc.cluster.local

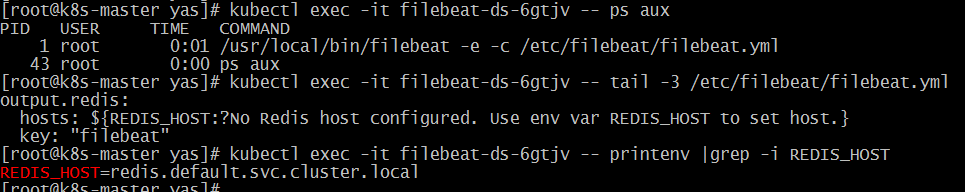

$ kubectl exec -it filebeat-ds-6gtjv -- ps aux

$ kubectl exec -it filebeat-ds-6gtjv -- tail -3 /etc/filebeat/filebeat.yml

$ kubectl exec -it filebeat-ds-6gtjv -- printenv |grep -i REDIS_HOST

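As shown above, filebeat has the environment variable configured, but it was not picked up successfully. This is probably a startup ordering problem.

In summary: start the redis service and expose it first, then start filebeat with the redis variables set.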
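The REDIS_HOST value follows the usual in-cluster naming pattern for a Service: service name, namespace, svc, then the cluster domain. A small sketch that builds the name, assuming the default cluster domain cluster.local used in this article; service_fqdn is a hypothetical helper.

```python
def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Build the in-cluster DNS name of a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# Environment for the filebeat container, mirroring the manifest's env section.
env = {"REDIS_HOST": service_fqdn("redis"), "REDIS_LOG_LEVEL": "info"}
print(env["REDIS_HOST"])
```

The name only resolves once the Service exists, which is consistent with exposing redis before starting filebeat.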

Upgrades are also carried out one Pod at a time:

[root@k8s-master yas]# kubectl set image ds filebeat-ds filebeat=ikubernetes/filebeat:5.6.6-alpine

daemonset.extensions/filebeat-ds image updated

[root@k8s-master yas]# kubectl get pods -w
