Deploying Kafka on CentOS 7

1. Install the JDK

[root@linux-host1 ~]# tail /etc/profile

export JAVA_HOME=/usr/local/jdk1.8.0_161

export PATH=$JAVA_HOME/bin:$PATH

[root@linux-host1 ~]# source /etc/profile

[root@linux-host1 ~]# which java

/usr/local/jdk1.8.0_161/bin/java

[root@linux-host1 ~]# java -version
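The checks above can also be scripted. The following is a minimal sketch, assuming only that a usable JDK has an executable `bin/java` under its home directory; `check_java_home` is a hypothetical helper name, not part of the JDK.

```bash
# Sketch: verify that a JDK home directory looks usable.
# check_java_home is a hypothetical helper, not a JDK tool.
check_java_home() {
    local home="$1"
    if [ -z "$home" ]; then
        echo "JAVA_HOME is not set" >&2
        return 1
    fi
    if [ ! -x "$home/bin/java" ]; then
        echo "no executable java under $home/bin" >&2
        return 1
    fi
    echo "ok: $home/bin/java"
}

# Usage (after `source /etc/profile`):
#   check_java_home "$JAVA_HOME"
```

Checking the directory directly gives a clearer message than `which java` when JAVA_HOME points at a path that does not exist.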

2. Install ZooKeeper

[root@linux-host1 ~]# cd /opt/zookeeper-3.4.14/

[root@linux-host1 zookeeper-3.4.14]# ./bin/zkServer.sh start

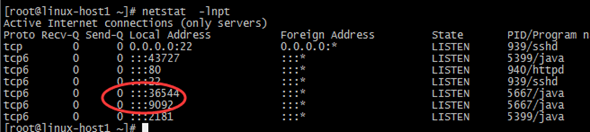

[root@linux-host1 ~]# netstat -lnpt

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 939/sshd

tcp6 0 0 :::43727 :::* LISTEN 5399/java

tcp6 0 0 :::80 :::* LISTEN 940/httpd

tcp6 0 0 :::22 :::* LISTEN 939/sshd

tcp6 0 0 :::2181 :::* LISTEN 5399/java

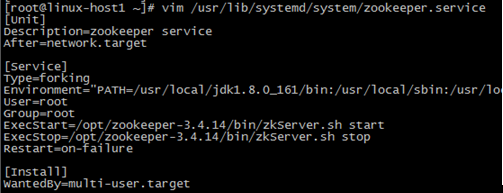
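Rather than reading the netstat listing by eye, the port check can be scripted. This is a small sketch that assumes the column layout shown above (local address in column 4, state in column 6); `listening_on` is a hypothetical helper name.

```bash
# Sketch: succeed if `netstat -lnpt` style output on stdin shows a
# socket in LISTEN state on the given port.
# listening_on is a hypothetical helper name.
listening_on() {
    local port="$1"
    awk -v port="$port" '
        $6 == "LISTEN" {
            # The port is the last colon-separated field of the local
            # address, for both 0.0.0.0:22 and :::2181 style entries.
            n = split($4, parts, ":")
            if (parts[n] == port) found = 1
        }
        END { exit (found ? 0 : 1) }
    '
}

# Usage:
#   netstat -lnpt | listening_on 2181 && echo "ZooKeeper is listening on 2181"
```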

Configure a systemd unit to manage the service:

[root@linux-host1 ~]# vim /usr/lib/systemd/system/zookeeper.service

[Unit]

Description=zookeeper service

After=network.target

[Service]

Type=forking

Environment="PATH=/usr/local/jdk1.8.0_161/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin"

User=root

Group=root

ExecStart=/opt/zookeeper-3.4.14/bin/zkServer.sh start

ExecStop=/opt/zookeeper-3.4.14/bin/zkServer.sh stop

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@linux-host1 ~]# systemctl daemon-reload

[root@linux-host1 ~]# systemctl stop zookeeper

[root@linux-host1 ~]# systemctl status zookeeper

3. Install Kafka

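Kafka extraction directory: /root/ansibles/kafka/kafka_2.12-1.0.0. The systemd unit below references /opt/kafka_2.12-1.0.0, so either place the extracted directory there or adjust the paths in the unit.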

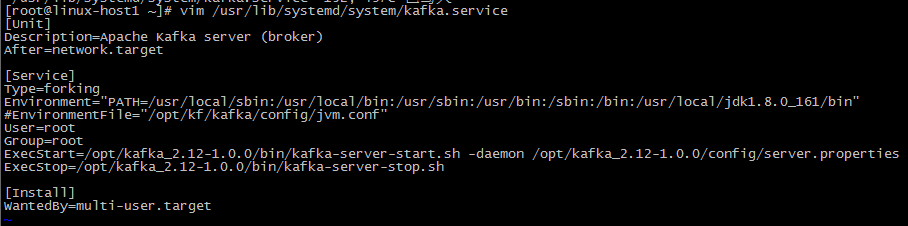

[root@linux-host1 ~]# vim /lib/systemd/system/kafka.service

[Unit]

Description=Apache Kafka server (broker)

After=network.target

[Service]

Type=forking

Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/jdk1.8.0_161/bin"

User=root

Group=root

ExecStart=/opt/kafka_2.12-1.0.0/bin/kafka-server-start.sh -daemon /opt/kafka_2.12-1.0.0/config/server.properties

ExecStop=/opt/kafka_2.12-1.0.0/bin/kafka-server-stop.sh

[Install]

WantedBy=multi-user.target

[root@linux-host1 ~]# systemctl daemon-reload

[root@linux-host1 ~]# systemctl status kafka

[root@linux-host1 ~]# systemctl start kafka
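The Kafka unit above is ordered only after network.target. Because ZooKeeper runs on the same host in this walkthrough, one option is a systemd drop-in that also orders Kafka after the zookeeper unit. This is a sketch of that idea, not part of the original setup; the drop-in file name `zookeeper.conf` and the helper name `kafka_dropin` are assumptions.

```bash
# Sketch: print a systemd drop-in that starts Kafka after ZooKeeper.
# kafka_dropin is a hypothetical helper; After= and Wants= are standard
# [Unit] directives.
kafka_dropin() {
    cat <<'EOF'
[Unit]
After=zookeeper.service
Wants=zookeeper.service
EOF
}

# Usage (as root; the drop-in file name is an assumption):
#   mkdir -p /etc/systemd/system/kafka.service.d
#   kafka_dropin > /etc/systemd/system/kafka.service.d/zookeeper.conf
#   systemctl daemon-reload
```

A drop-in keeps the original unit file untouched, which makes the change easy to remove if ZooKeeper later moves to another host.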

This completes the Kafka installation. The relevant service ports are shown in the screenshot below.

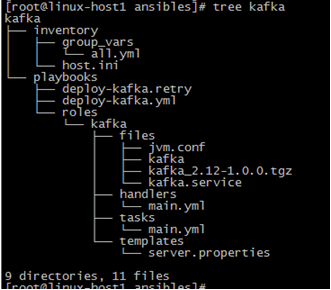

4. Deploy Kafka with Ansible

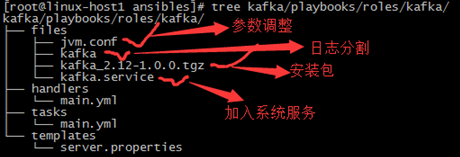

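The screenshot above shows the main directory structure; the role's main tasks file configures the service.

Run the deployment: ansible-playbook -i inventory/host.ini playbooks/deploy-kafka.yml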
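Before a real run it can help to validate the playbook and confirm which hosts it targets. The sketch below wraps two standard `ansible-playbook` options, `--syntax-check` and `--list-hosts`; the `preflight` function and the `ANSIBLE_PLAYBOOK` override variable are hypothetical names introduced here for illustration.

```bash
# Sketch: dry checks before the real deployment run.
# preflight and ANSIBLE_PLAYBOOK are hypothetical names; the override
# exists only so the command can be swapped out for testing.
preflight() {
    local inventory="${1:-inventory/host.ini}"
    local playbook="${2:-playbooks/deploy-kafka.yml}"
    local runner="${ANSIBLE_PLAYBOOK:-ansible-playbook}"
    # Parse the playbook without executing any task.
    "$runner" -i "$inventory" "$playbook" --syntax-check || return 1
    # Show which inventory hosts the play would target.
    "$runner" -i "$inventory" "$playbook" --list-hosts
}

# Usage (from the kafka/ directory):
#   preflight && ansible-playbook -i inventory/host.ini playbooks/deploy-kafka.yml
```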

[root@linux-host1 ansibles]# cat kafka/inventory/group_vars/all.yml

url: http://archive.apache.org/dist/kafka/1.0.0/kafka_2.12-1.0.0.tgz

zookeeper: "192.168.19.138:2181"
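How the role consumes these two variables is defined in its tasks and templates, which are not reproduced here. As a rough sketch of the manual equivalent, and purely as an assumption about intent, the archive name can be derived from `url` and the `zookeeper` value maps naturally onto Kafka's `zookeeper.connect` broker property. The helper names and the `/opt` destination are hypothetical.

```bash
# Sketch: what the two variables would mean in a manual install.
# archive_dir, print_manual_steps and zk_connect_line are hypothetical
# helpers; the role in the repository may do this differently.
archive_dir() {
    # kafka_2.12-1.0.0.tgz -> kafka_2.12-1.0.0
    basename "$1" .tgz
}

print_manual_steps() {
    local url="$1" dest="${2:-/opt}"
    local dir
    dir=$(archive_dir "$url")
    echo "curl -fLO $url"
    echo "tar -xzf $dir.tgz -C $dest"
}

zk_connect_line() {
    # zookeeper.connect is the broker setting in server.properties.
    echo "zookeeper.connect=$1"
}

# Usage:
#   print_manual_steps http://archive.apache.org/dist/kafka/1.0.0/kafka_2.12-1.0.0.tgz
#   zk_connect_line 192.168.19.138:2181
```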

[root@linux-host1 ansibles]# cat kafka/inventory/host.ini

[kafkas]

192.168.19.139
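For a quick look at which hosts a group contains, the INI inventory can be read with a few lines of awk. This is only a sketch for simple inventories like the one above (one host per line, no ranges or child groups); `hosts_in_group` is a hypothetical helper, and `ansible kafkas -i inventory/host.ini --list-hosts` remains the authoritative check.

```bash
# Sketch: list the hosts under one [group] of a simple INI inventory.
# hosts_in_group is a hypothetical helper; it ignores host ranges,
# :children and :vars sections.
hosts_in_group() {
    local group="$1" file="$2"
    awk -v group="$group" '
        /^\[/ {
            # Remember the current section name without its brackets.
            current = $0
            sub(/^\[/, "", current)
            sub(/\].*$/, "", current)
            next
        }
        # Print the first field of non-empty, non-comment lines.
        current == group && NF > 0 && $1 !~ /^[#;]/ { print $1 }
    ' "$file"
}

# Usage:
#   hosts_in_group kafkas kafka/inventory/host.ini
```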

[root@linux-host1 ansibles]# cat kafka/playbooks/deploy-kafka.yml

---
- name: Deploy kafka server
  hosts: kafkas
  become: yes
  roles:
    - { role: kafka }

[root@linux-host1 ansibles]# cat kafka/playbooks/roles/kafka/handlers/main.yml

---
- name: reload systemd
  command: systemctl daemon-reload
- name: kafka restart service
  service: name=kafka state=restarted

[root@linux-host1 ansibles]# cat kafka/playbooks/roles/kafka/templates/server.properties

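Log rotation (logrotate) configuration notes:

## Reference: https://blog.csdn.net/weixin_34233421/article/details/91504031

# First line: path of the log files; separate multiple paths with spaces
/opt/kf/kafka/logs/*.log {
    # If a log file is missing, move on to the next one without reporting an error
    missingok
    # Number of rotated log files to keep; here, 7
    rotate 7
    # Do not rotate the log if it is empty
    notifempty
    # Rotation frequency: rotate daily (daily, weekly and monthly are among the options)
    daily
    # Copy the active log file, then truncate it in place so the process keeps writing to the same file
    copytruncate
    # Compress rotated logs with gzip
    compress
    # Used together with compress: a rotated log is compressed only at the next rotation
    delaycompress
}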
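The rotation rules above can be installed and dry-run tested before they take effect. The sketch below writes the same directives to a file and shows logrotate's debug mode (`-d`), which reports what would happen without changing any logs. The target path `/etc/logrotate.d/kafka` and the helper name `write_kafka_logrotate` are assumptions.

```bash
# Sketch: write the rotation rules to a file of your choice.
# write_kafka_logrotate is a hypothetical helper name.
write_kafka_logrotate() {
    local target="$1"
    cat > "$target" <<'EOF'
/opt/kf/kafka/logs/*.log {
    missingok
    rotate 7
    notifempty
    daily
    copytruncate
    compress
    delaycompress
}
EOF
}

# Usage (as root; the file name under /etc/logrotate.d is an assumption):
#   write_kafka_logrotate /etc/logrotate.d/kafka
#   logrotate -d /etc/logrotate.d/kafka   # debug mode: no changes are made
```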

Hosts:

Ansible control node: 192.168.19.138

Client (Kafka target host): 192.168.19.139

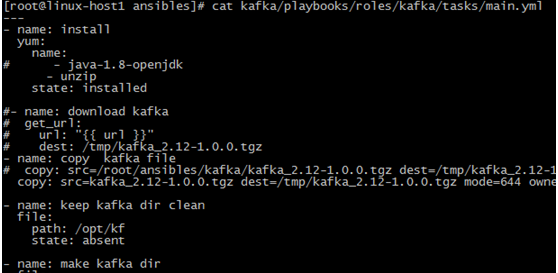

Main tasks file: kafka/playbooks/roles/kafka/tasks/main.yml

Files to prepare:

Deployment complete.

Configuration repository: git@github.com:Wangwang12345/ansiblepro.git
