TensorFlow Study Notes 016: Recognizing the Fashion MNIST Dataset with a Convolutional Neural Network

4.2 Recognizing the Fashion MNIST Dataset with a Convolutional Neural Network


from tensorflow import keras

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

fashion_mnist = keras.datasets.fashion_mnist

(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

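# train_images: (60000,28,28), test_images: (10000,28,28)

# (count, height, width)

# Expand the dimensions to (60000,28,28,1). The fourth dimension is the number of channels, which is 1 for a grayscale image. This is the input shape a convolutional network expects

# reshape() could be used here instead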

train_images = np.expand_dims(train_images, -1)

test_images = np.expand_dims(test_images, -1)

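model = tf.keras.Sequential() # sequential model

# The first layer is a convolutional layer that extracts features. 32 is the number of convolution kernels (filters) applied to each image, so each image becomes (height,width,32)

# (3,3) is the kernel size; input_shape gives the input shape as (height,width,channel)

model.add(tf.keras.layers.Conv2D(32,(3,3),input_shape=(28,28,1),activation='relu')) # no padding is used here, so each image has shape (26,26,32)

model.add(tf.keras.layers.MaxPooling2D()) # the default pool size is 2*2, giving (13,13,32)

model.add(tf.keras.layers.Conv2D(64,(3,3),activation='relu')) # (11,11,64)

# GlobalAveragePooling2D() can be used in place of Flatten(). It converts (height,width,channel) to (channels), i.e. it averages each (height,width) map into a single value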

model.add(tf.keras.layers.GlobalAveragePooling2D())

model.add(tf.keras.layers.Dense(10,activation='softmax'))

model.summary() # summary() prints the table itself and returns None, so no print() is needed

model.compile(optimizer='adam', loss = 'sparse_categorical_crossentropy',metrics= ['acc'])

history = model.fit(train_images, train_labels, epochs=30, validation_data=(test_images,test_labels))

print(history.history.keys()) # dict_keys(['loss','acc','val_loss','val_acc'])

plt.rcParams['font.sans-serif'] = ['SimHei'] # fixes Chinese characters not rendering in matplotlib plots

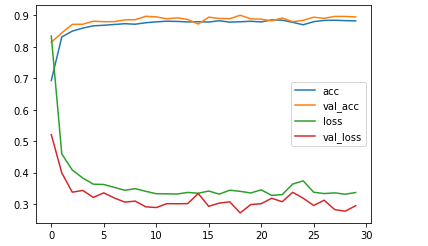

plt.plot(history.epoch, history.history.get('acc'), label = 'acc')

plt.plot(history.epoch, history.history.get('val_acc'),label = 'val_acc')

plt.plot(history.epoch,history.history.get('loss'), label = 'loss')

plt.plot(history.epoch, history.history.get('val_loss'), label = 'val_loss')

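plt.legend() # show the legend; if this line is commented out, the labels set above are not displayed

plt.show() # show the figure; if this line is commented out, the plot is not displayed

# The plot shows that the fit on the training data is not very good (training accuracy is not high), while on the test data the model overfits: accuracy first rises and then falls. So we can make the network deeper and add Dropout layers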

Figure 4-4
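The shape comments in the listing above, (26,26,32), (13,13,32) and (11,11,64), follow from simple size arithmetic. The sketch below reproduces them in plain Python without TensorFlow. The helper names `conv_out` and `pool_out` are made up for this illustration and are not Keras functions; it assumes stride 1 for the convolutions and a 2x2 pool with stride 2, which matches the defaults used in the listing.

```python
def conv_out(size, kernel, padding="valid", stride=1):
    """Output size of a convolution along one spatial axis."""
    if padding == "same":
        # 'same' padding keeps ceil(size / stride) positions
        return -(-size // stride)
    # 'valid' (no padding): the kernel must fit entirely inside the input
    return (size - kernel) // stride + 1


def pool_out(size, pool=2):
    """Output size of max pooling with stride equal to the pool size, no padding."""
    return size // pool


size = 28                           # Fashion MNIST images are 28x28
size = conv_out(size, 3)            # Conv2D(32, (3,3)) without padding
after_conv1 = (size, size, 32)
size = pool_out(size)               # MaxPooling2D(), 2x2
after_pool = (size, size, 32)
size = conv_out(size, 3)            # Conv2D(64, (3,3)) without padding
after_conv2 = (size, size, 64)
after_gap = (after_conv2[2],)       # global average pooling keeps one value per channel

print(after_conv1, after_pool, after_conv2, after_gap)
```

With `padding="same"` the same helper returns the input size unchanged, e.g. `conv_out(28, 3, "same")` gives 28.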


from tensorflow import keras

import tensorflow as tf

import matplotlib.pyplot as plt

import numpy as np

fashion_mnist = keras.datasets.fashion_mnist

(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

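# train_images: (60000,28,28), test_images: (10000,28,28)

# (count, height, width)

# Expand the dimensions to (60000,28,28,1). The fourth dimension is the number of channels, which is 1 for a grayscale image. This is the input shape a convolutional network expects

# reshape() could be used here instead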

train_images = np.expand_dims(train_images, -1)

test_images = np.expand_dims(test_images, -1)

model = tf.keras.Sequential() # sequential model

model.add(tf.keras.layers.Conv2D(64,(3,3),input_shape=(28,28,1),activation='relu', padding = 'same'))

model.add(tf.keras.layers.Conv2D(64,(3,3), activation='relu',padding='same'))

model.add(tf.keras.layers.MaxPooling2D())

model.add(tf.keras.layers.Dropout(0.5))

model.add(tf.keras.layers.Conv2D(128,(3,3), activation='relu',padding='same'))

model.add(tf.keras.layers.Conv2D(128,(3,3), activation='relu',padding='same'))

model.add(tf.keras.layers.MaxPooling2D())

model.add(tf.keras.layers.Dropout(0.5))

model.add(tf.keras.layers.Conv2D(256,(3,3), activation='relu',padding='same'))

model.add(tf.keras.layers.Conv2D(256,(3,3), activation='relu',padding='same'))

model.add(tf.keras.layers.MaxPooling2D())

model.add(tf.keras.layers.Dropout(0.5))

model.add(tf.keras.layers.Conv2D(512,(3,3), activation='relu',padding='same'))

model.add(tf.keras.layers.Conv2D(512,(3,3), activation='relu',padding='same'))

model.add(tf.keras.layers.Dropout(0.5))

model.add(tf.keras.layers.GlobalAveragePooling2D())

model.add(tf.keras.layers.Dense(256,activation='relu'))

model.add(tf.keras.layers.Dense(10,activation='softmax'))

model.compile(optimizer='adam', loss = 'sparse_categorical_crossentropy',metrics= ['acc'])

history = model.fit(train_images, train_labels, epochs=30, validation_data=(test_images,test_labels))

print(history.history.keys()) # dict_keys(['loss','acc','val_loss','val_acc'])

plt.rcParams['font.sans-serif'] = ['SimHei'] # fixes Chinese characters not rendering in matplotlib plots

plt.plot(history.epoch, history.history.get('acc'), label = 'acc')

plt.plot(history.epoch, history.history.get('val_acc'),label = 'val_acc')

plt.plot(history.epoch,history.history.get('loss'), label = 'loss')

plt.plot(history.epoch, history.history.get('val_loss'), label = 'val_loss')

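plt.legend() # show the legend; if this line is commented out, the labels set above are not displayed

plt.show() # show the figure; if this line is commented out, the plot is not displayed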

Figure 4-5
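When validation accuracy rises and then falls, as noted for the first model, it can help to read the best epoch directly out of the history dictionary instead of judging it from the plot. Below is a minimal sketch of that idea. The function `best_epoch` is a hypothetical helper, and the numbers in `fake_history` are invented purely for illustration; they are not results from the training runs in this post.

```python
def best_epoch(history_dict, key="val_acc"):
    """Return (epoch_index, value) for the highest value stored under `key`."""
    values = history_dict[key]
    # max() returns the first index on ties, i.e. the earliest best epoch
    idx = max(range(len(values)), key=values.__getitem__)
    return idx, values[idx]


# Invented numbers with the same keys as history.history; NOT real results.
fake_history = {
    "acc":     [0.70, 0.80, 0.85, 0.90, 0.93],
    "val_acc": [0.72, 0.81, 0.84, 0.83, 0.82],
}

epoch, value = best_epoch(fake_history)
print(f"best val_acc {value} at epoch index {epoch}")
```

With a real run this would be called as `best_epoch(history.history)`. Keras also provides the `tf.keras.callbacks.EarlyStopping` callback, which can stop training once a monitored metric stops improving; whether it suits this experiment is a judgment call.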

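In practice, Fashion MNIST does not need this many convolutional layers. The deeper network here is only meant to illustrate that adding layers can help improve accuracy.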
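To get a feel for how much larger the second network is, the trainable parameter counts of both models can be estimated by hand. The sketch below uses the usual formulas for a convolution layer with bias, (kernel_h * kernel_w * in_channels + 1) * filters, and for a dense layer with bias, (in_units + 1) * units. The helper names are made up for this illustration; pooling, Dropout and global average pooling layers contribute no trainable parameters.

```python
def conv2d_params(in_channels, filters, kernel=(3, 3)):
    """Trainable parameters of a Conv2D layer with bias."""
    kh, kw = kernel
    return (kh * kw * in_channels + 1) * filters


def dense_params(in_units, units):
    """Trainable parameters of a Dense layer with bias."""
    return (in_units + 1) * units


# First model: Conv2D(32) -> MaxPooling -> Conv2D(64) -> GAP -> Dense(10)
small = conv2d_params(1, 32) + conv2d_params(32, 64) + dense_params(64, 10)

# Second model: eight 3x3 convolutions, then GAP -> Dense(256) -> Dense(10)
channels = [1, 64, 64, 128, 128, 256, 256, 512, 512]
deep = sum(conv2d_params(c_in, c_out) for c_in, c_out in zip(channels, channels[1:]))
deep += dense_params(512, 256) + dense_params(256, 10)

print(small, deep)
```

The totals should match the "Trainable params" line that `model.summary()` prints for each model.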

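Author: 孙建钊

Source: http://www.cnblogs.com/sunjianzhao/

Copyright of this article is shared by the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this notice must be retained and a link to the original article must be placed prominently on the page. Otherwise the author reserves the right to pursue legal action.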