[Ceph Ops] Manual Deployment of a Three-Node Ceph Nautilus Cluster with cephx Keys

I. Basic Configuration

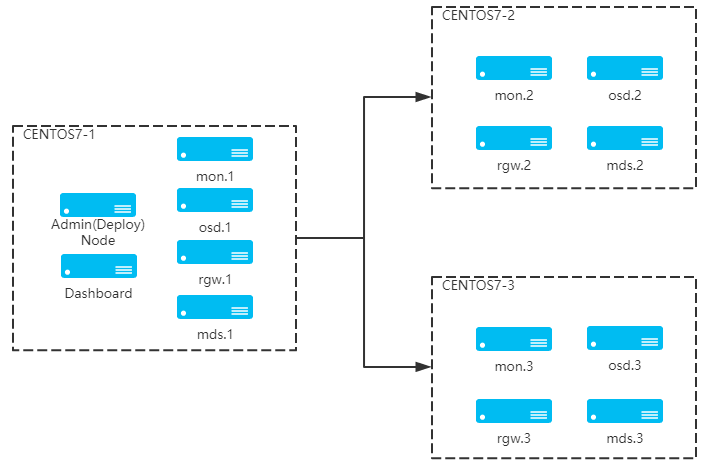

Cluster topology:

All three hosts run CentOS 7. Unless stated otherwise, run the following configuration on every machine.

| Name | Host IP | Roles |
|---|---|---|
| node01 | 192.168.19.101 | mon, mds, rgw, mgr, osd |
| node02 | 192.168.19.102 | mon, mds, rgw, mgr, osd |
| node03 | 192.168.19.103 | mon, mds, rgw, mgr, osd |
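The /etc/hosts entries in step 1 below come directly from this table. The following is a minimal sketch for appending them idempotently, so that rerunning the setup on a node does not create duplicate lines. The function name `add_host_entries` is hypothetical and not part of any Ceph tooling. The node names follow the table (node01 to node03).

```shell
#!/usr/bin/env bash
# Hypothetical helper: idempotently append the cluster's IP/name pairs
# (taken from the topology table) to a hosts file.
set -euo pipefail

add_host_entries() {
  # Target file defaults to /etc/hosts; pass another path to test safely.
  local hosts_file="${1:-/etc/hosts}"
  local entry ip name
  for entry in \
    "192.168.19.101 node01" \
    "192.168.19.102 node02" \
    "192.168.19.103 node03"; do
    ip="${entry%% *}"
    name="${entry##* }"
    # Append only if no uncommented line already maps this hostname.
    if ! grep -qE "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

After sourcing the script, run `add_host_entries` as root on each node. Passing a path as the first argument writes to that file instead of /etc/hosts.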

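1. Configure host name resolution (the names must match the node01/node02/node03 IDs used throughout this guide):

cat >> /etc/hosts <<EOF

192.168.19.101 node01

192.168.19.102 node02

192.168.19.103 node03

EOF

2. Disable the firewall and SELinux: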

systemctl stop firewalld && systemctl disable firewalld

setenforce 0 && sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

3. Set the hostname on each of the three nodes:

hostnamectl set-hostname node01  # run on node01

hostnamectl set-hostname node02  # run on node02

hostnamectl set-hostname node03  # run on node03

4. Configure time synchronization:

systemctl restart chronyd.service && systemctl enable chronyd.service

5. Enable cephx authentication by setting the following options in /etc/ceph/ceph.conf:

auth client required = cephx

auth cluster required = cephx

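auth service required = cephx

The complete configuration file /etc/ceph/ceph.conf is shown below. The fsid must be the value generated with uuidgen in section II, step 1, and it must match the fsid passed to monmaptool: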

ceph.conf

[client]

rbd_cache_max_dirty = 100663296

rbd_cache_max_dirty_age = 5

rbd_cache_size = 134217728

rbd_cache_target_dirty = 67108864

rbd_cache_writethrough_until_flush = True

[global]

auth client required = cephx

auth cluster required = cephx

auth service required = cephx

cluster network = 0.0.0.0/0

fsid = 62bd6aeb-2da0-4a3e-b902-2bceb82abda9

mon host = [v2:192.168.19.101:3300,v1:192.168.19.101:6789],[v2:192.168.19.102:3300,v1:192.168.19.102:6789],[v2:192.168.19.103:3300,v1:192.168.19.103:6789]

mon initial members = node01

mon_max_pg_per_osd = 600

mon_osd_down_out_interval = 8640000

osd pool default crush rule = -1

osd_pool_default_min_size = 1

osd_pool_default_size = 3

public network = 192.168.19.0/24

mon_allow_pool_delete = true

[mds.node01]

host = node01

[mgr]

mgr_stats_threshold = 0

[client.rgw.node01.rgw0]

host = node01

keyring = /var/lib/ceph/radosgw/ceph-rgw.node01.rgw0/keyring

log file = /var/log/ceph/ceph-rgw-node01.rgw0.log

rgw frontends = civetweb port=7480 num_threads=10000

rgw thread pool size = 512

rgw_s3_auth_use_rados = true

rgw_s3_auth_use_keystone = false

rgw_s3_auth_aws4_force_boto2_compat = false

rgw_s3_auth_use_ldap = false

rgw_s3_success_create_obj_status = 0

rgw_relaxed_s3_bucket_names = false

[osd]

bdev_async_discard = True

bdev_enable_discard = True

bluestore_block_db_size = 107374182400

bluefs_shared_alloc_size = 262144

bluestore_min_alloc_size_hdd = 262144

filestore_commit_timeout = 3000

filestore_fd_cache_size = 2500

filestore_max_inline_xattr_size = 254

filestore_max_inline_xattrs = 6

filestore_max_sync_interval = 10

filestore_op_thread_suicide_timeout = 600

filestore_op_thread_timeout = 580

filestore_op_threads = 10

filestore_queue_max_bytes = 1048576000

filestore_queue_max_ops = 50000

filestore_wbthrottle_enable = False

journal_max_write_bytes = 1048576000

journal_max_write_entries = 1000

journal_queue_max_bytes = 1048576000

journal_queue_max_ops = 3000

max_open_files = 327680

osd memory target = 10737418240

osd_client_message_cap = 10000

osd_enable_op_tracker = False

osd_heartbeat_grace = 60

osd_heartbeat_interval = 15

osd_journal_size = 20480

osd_max_backfills = 1

osd_op_num_shards_hdd = 32

osd_op_num_threads_per_shard_ssd = 1

osd_op_thread_suicide_timeout = 600

osd_op_thread_timeout = 580

osd_op_threads = 10

osd_recovery_max_active = 3

osd_recovery_max_single_start = 1

osd_recovery_thread_suicide_timeout = 600

osd_recovery_thread_timeout = 580

osd_scrub_begin_hour = 2

osd_scrub_end_hour = 6

osd_scrub_load_threshold = 5

osd_scrub_sleep = 2

osd_scrub_thread_suicide_timeout = 600

osd_scrub_thread_timeout = 580

rocksdb_perf = True

II. Deploy the Monitors (mon)
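In this section, the fsid and the monitor addresses appear both in ceph.conf and in the monmaptool command of step 7, and the two must agree. The following is a minimal sketch that reads the values from ceph.conf and builds the messenger v2/v1 address vector, so they do not have to be retyped. It uses two hypothetical helpers, `conf_get` and `mon_addrvec`, which are not part of Ceph. It assumes the default ports 3300 (v2) and 6789 (v1).

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not shipped with Ceph) for reusing ceph.conf values.
set -euo pipefail

# Print the value of the first "key = value" line whose key matches
# exactly (spaces vs underscores are NOT treated as equivalent here).
conf_get() {
  local key="$1" conf="${2:-/etc/ceph/ceph.conf}"
  awk -v k="$key" '
    {
      line = $0
      sub(/^[ \t]+/, "", line)            # drop leading indentation
      if (index(line, k) == 1) {
        rest = substr(line, length(k) + 1)
        if (rest ~ /^[ \t]*=/) {          # key must be followed by "="
          sub(/^[ \t]*=[ \t]*/, "", rest)
          print rest
          exit
        }
      }
    }' "$conf"
}

# Build the v2/v1 address vector for one monitor IP (default ports).
mon_addrvec() {
  printf '[v2:%s:3300,v1:%s:6789]' "$1" "$1"
}
```

For example, `monmaptool --create --addv node01 "$(mon_addrvec 192.168.19.101)" --fsid "$(conf_get fsid)" /tmp/monmap` would build the step 7 monmap from the fsid already stored in /etc/ceph/ceph.conf.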

1. Generate a unique fsid for the cluster and use it as the fsid value in ceph.conf:

uuidgen

2. Create a keyring for the cluster and generate a monitor secret key:

sudo ceph-authtool --create-keyring /tmp/ceph.mon.keyring --gen-key -n mon. --cap mon 'allow *'

Output:

[root@node01 ~]# cat /tmp/ceph.mon.keyring

[mon.]

key = AQBQYt9kQr8SDBAA05KJPJ7wj/cojuhu6rvVWQ==

caps mon = "allow *"

3. Generate the administrator keyring, create the client.admin user, and add it to the keyring:

sudo ceph-authtool --create-keyring /etc/ceph/ceph.client.admin.keyring --gen-key -n client.admin --cap mon 'allow *' --cap osd 'allow *' --cap mds 'allow *' --cap mgr 'allow *'

Output:

[root@node01 ~]# cat /etc/ceph/ceph.client.admin.keyring

[client.admin]

key = AQB3Yt9kcV0CHxAA2ljATohTAe0u75zEtyTgLA==

caps mds = "allow *"

caps mgr = "allow *"

caps mon = "allow *"

caps osd = "allow *"

4. Generate the bootstrap-osd keyring, create the client.bootstrap-osd user, and add it to the keyring:

sudo ceph-authtool --create-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring --gen-key -n client.bootstrap-osd --cap mon 'profile bootstrap-osd' --cap mgr 'allow r'

Output:

[root@node01 ~]# cat /var/lib/ceph/bootstrap-osd/ceph.keyring

[client.bootstrap-osd]

key = AQCmYt9kmTFMBBAAW8+Rwnv3iuRU6f2qVo5kXw==

caps mgr = "allow r"

caps mon = "profile bootstrap-osd"

5. Import the generated keyrings into ceph.mon.keyring:

sudo ceph-authtool /tmp/ceph.mon.keyring --import-keyring /etc/ceph/ceph.client.admin.keyring

sudo ceph-authtool /tmp/ceph.mon.keyring --import-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring

Output:

[root@node01 ~]# sudo ceph-authtool /tmp/ceph.mon.keyring --import-keyring /etc/ceph/ceph.client.admin.keyring

importing contents of /etc/ceph/ceph.client.admin.keyring into /tmp/ceph.mon.keyring

[root@node01 ~]# cat /tmp/ceph.mon.keyring

[mon.]

key = AQBQYt9kQr8SDBAA05KJPJ7wj/cojuhu6rvVWQ==

caps mon = "allow *"

[client.admin]

key = AQB3Yt9kcV0CHxAA2ljATohTAe0u75zEtyTgLA==

caps mds = "allow *"

caps mgr = "allow *"

caps mon = "allow *"

caps osd = "allow *"

[root@node01 ~]# sudo ceph-authtool /tmp/ceph.mon.keyring --import-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring

importing contents of /var/lib/ceph/bootstrap-osd/ceph.keyring into /tmp/ceph.mon.keyring

[root@node01 ~]# cat /tmp/ceph.mon.keyring

[mon.]

key = AQBQYt9kQr8SDBAA05KJPJ7wj/cojuhu6rvVWQ==

caps mon = "allow *"

[client.admin]

key = AQB3Yt9kcV0CHxAA2ljATohTAe0u75zEtyTgLA==

caps mds = "allow *"

caps mgr = "allow *"

caps mon = "allow *"

caps osd = "allow *"

[client.bootstrap-osd]

key = AQCmYt9kmTFMBBAAW8+Rwnv3iuRU6f2qVo5kXw==

caps mgr = "allow r"

caps mon = "profile bootstrap-osd"

6. Change the owner of ceph.mon.keyring:

sudo chown ceph:ceph /tmp/ceph.mon.keyring

7. Generate a monitor map from the hostname, host IP address, and fsid, and save it to /tmp/monmap:

monmaptool --create --addv node01 '[v2:192.168.19.101:3300,v1:192.168.19.101:6789]' --fsid 62bd6aeb-2da0-4a3e-b902-2bceb82abda9 /tmp/monmap

8. On the mon host, create the default data directory, named in the form {cluster-name}-{hostname}:

sudo -u ceph mkdir /var/lib/ceph/mon/ceph-node01

9. Initialize the monitor on node01:

sudo -u ceph ceph-mon --mkfs -i node01 --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring

10. Copy the configuration file to the other nodes:

scp /etc/ceph/ceph.conf node02:/etc/ceph/ceph.conf

scp /etc/ceph/ceph.conf node03:/etc/ceph/ceph.conf

11. Copy the mon keyring, the monmap, and the admin keyring to the other nodes:

scp /tmp/ceph.mon.keyring node02:/tmp/ceph.mon.keyring

scp /etc/ceph/ceph.client.admin.keyring node02:/etc/ceph/ceph.client.admin.keyring

scp /tmp/monmap node02:/tmp/monmap

scp /tmp/ceph.mon.keyring node03:/tmp/ceph.mon.keyring

scp /etc/ceph/ceph.client.admin.keyring node03:/etc/ceph/ceph.client.admin.keyring

scp /tmp/monmap node03:/tmp/monmap

12. Add monitors on node02 and node03. scp leaves the copied mon keyring owned by root, so first run chown ceph:ceph /tmp/ceph.mon.keyring on both nodes. Then create the data directory on each of them:

sudo -u ceph mkdir /var/lib/ceph/mon/ceph-`hostname -s`

That is:

sudo -u ceph mkdir /var/lib/ceph/mon/ceph-node02  # on node02

sudo -u ceph mkdir /var/lib/ceph/mon/ceph-node03  # on node03

13. Initialize the monitor on each of these two nodes:

sudo -u ceph ceph-mon --mkfs -i `hostname -s` --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring

That is:

sudo -u ceph ceph-mon --mkfs -i node02 --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring  # on node02

sudo -u ceph ceph-mon --mkfs -i node03 --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring  # on node03

14. Start the ceph-mon service on all three nodes:

systemctl start ceph-mon@`hostname -s`

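systemctl enable ceph-mon@`hostname -s`

If the service fails to start, run the monitor in the foreground to see the error. Replace node01 with the local hostname, and run it as the ceph user so that no root-owned files end up in the mon data directory: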

ceph-mon -i node01 -c /etc/ceph/ceph.conf --cluster ceph -d --setuser ceph --setgroup ceph

III. Deploy the OSDs

Run one of the following commands, depending on whether separate WAL and DB devices are used.

Data, WAL, and DB on separate devices:

ceph-volume lvm create --bluestore --data /dev/sdx --block.wal /dev/sdy --block.db /dev/sdz

Data and DB on separate devices:

ceph-volume lvm create --bluestore --data /dev/sdx --block.db /dev/sdy

Data only:

ceph-volume lvm create --bluestore --data /dev/sdx

Output:

[root@node01 mds]# ceph-volume lvm create --bluestore --data /dev/sdd

Running command: /usr/bin/ceph-authtool --gen-print-key

Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 411a36a0-15c1-4c62-b329-144c3af709f9

Running command: /usr/sbin/vgcreate --force --yes ceph-8ac53778-44de-4dba-9240-7cabd1e9ea44 /dev/sdd

stdout: Physical volume "/dev/sdd" successfully created.

stdout: Volume group "ceph-8ac53778-44de-4dba-9240-7cabd1e9ea44" successfully created

Running command: /usr/sbin/lvcreate --yes -l 5119 -n osd-block-411a36a0-15c1-4c62-b329-144c3af709f9 ceph-8ac53778-44de-4dba-9240-7cabd1e9ea44

stdout: Logical volume "osd-block-411a36a0-15c1-4c62-b329-144c3af709f9" created.

Running command: /usr/bin/ceph-authtool --gen-print-key

Running command: /usr/bin/mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-2

Running command: /usr/sbin/restorecon /var/lib/ceph/osd/ceph-2

Running command: /usr/bin/chown -h ceph:ceph /dev/ceph-8ac53778-44de-4dba-9240-7cabd1e9ea44/osd-block-411a36a0-15c1-4c62-b329-144c3af709f9

Running command: /usr/bin/chown -R ceph:ceph /dev/dm-5

Running command: /usr/bin/ln -s /dev/ceph-8ac53778-44de-4dba-9240-7cabd1e9ea44/osd-block-411a36a0-15c1-4c62-b329-144c3af709f9 /var/lib/ceph/osd/ceph-2/block

Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-2/activate.monmap

stderr: 2023-08-18 20:42:16.224 7f17f5c36700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory

2023-08-18 20:42:16.224 7f17f5c36700 -1 AuthRegistry(0x7f17f0065508) no keyring found at /etc/ceph/ceph.client.bootstrap-osd.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,, disabling cephx

stderr: got monmap epoch 1

Running command: /usr/bin/ceph-authtool /var/lib/ceph/osd/ceph-2/keyring --create-keyring --name osd.2 --add-key AQAnZ99kM378IBAAmqyuZMYDv6JwMKIguWypmA==

stdout: creating /var/lib/ceph/osd/ceph-2/keyring

added entity osd.2 auth(key=AQAnZ99kM378IBAAmqyuZMYDv6JwMKIguWypmA==)

Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2/keyring

Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2/

Running command: /usr/bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 2 --monmap /var/lib/ceph/osd/ceph-2/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-2/ --osd-uuid 411a36a0-15c1-4c62-b329-144c3af709f9 --setuser ceph --setgroup ceph

stderr: 2023-08-18 20:42:16.569 7fe7d5ad9a80 -1 bluestore(/var/lib/ceph/osd/ceph-2/) _read_fsid unparsable uuid

--> ceph-volume lvm prepare successful for: /dev/sdd

Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2

Running command: /usr/bin/ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-8ac53778-44de-4dba-9240-7cabd1e9ea44/osd-block-411a36a0-15c1-4c62-b329-144c3af709f9 --path /var/lib/ceph/osd/ceph-2 --no-mon-config

Running command: /usr/bin/ln -snf /dev/ceph-8ac53778-44de-4dba-9240-7cabd1e9ea44/osd-block-411a36a0-15c1-4c62-b329-144c3af709f9 /var/lib/ceph/osd/ceph-2/block

Running command: /usr/bin/chown -h ceph:ceph /var/lib/ceph/osd/ceph-2/block

Running command: /usr/bin/chown -R ceph:ceph /dev/dm-5

Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/osd/ceph-2

Running command: /usr/bin/systemctl enable ceph-volume@lvm-2-411a36a0-15c1-4c62-b329-144c3af709f9

stderr: Created symlink from /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-2-411a36a0-15c1-4c62-b329-144c3af709f9.service to /usr/lib/systemd/system/ceph-volume@.service.

Running command: /usr/bin/systemctl enable --runtime ceph-osd@2

stderr: Created symlink from /run/systemd/system/ceph-osd.target.wants/ceph-osd@2.service to /usr/lib/systemd/system/ceph-osd@.service.

Running command: /usr/bin/systemctl start ceph-osd@2

--> ceph-volume lvm activate successful for osd ID: 2

--> ceph-volume lvm create successful for: /dev/sdd

Note: deploying an OSD requires the file /var/lib/ceph/bootstrap-osd/ceph.keyring. Copy it from node01 to node02 and node03 by running the following on node02 and node03:

scp root@192.168.19.101:/var/lib/ceph/bootstrap-osd/ceph.keyring /var/lib/ceph/bootstrap-osd/
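When a node has several data disks, the data-only `ceph-volume lvm create` call shown above can be repeated for each device. The following is a minimal sketch of that loop. It assumes every device passed in is an empty disk that may be consumed by ceph-volume. The function name `create_osds`, the `DRY_RUN` variable, and the device names in the usage line are hypothetical. Setting `DRY_RUN=1` only prints the commands, so they can be reviewed before anything is written.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create one BlueStore OSD (data only) per device.
# Assumption: every device given is empty and may be used by ceph-volume.
set -euo pipefail

create_osds() {
  local dev
  for dev in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      # Review mode: print the command instead of running it.
      echo "ceph-volume lvm create --bluestore --data $dev"
      continue
    fi
    if [ ! -b "$dev" ]; then
      echo "skipping $dev: not a block device" >&2
      continue
    fi
    ceph-volume lvm create --bluestore --data "$dev"
  done
}
```

Usage: `DRY_RUN=1 create_osds /dev/sdb /dev/sdc` to preview, then rerun without `DRY_RUN` as root on the OSD node. Devices with separate WAL/DB disks still need the longer commands shown above.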

Wipe an LVM-based OSD:

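For a logical volume:

ceph-volume lvm zap {vg name/lv name}

For a block device or partition:

ceph-volume lvm zap /dev/sdc1

To destroy the OSD device completely, including its volume groups and logical volumes: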

ceph-volume lvm zap /dev/sdc --destroy

If a logical volume cannot be removed, remove its device-mapper mapping manually:

dmsetup remove {lv name}

IV. Deploy the Managers (mgr)

1. On each mgr node, create the data directory:

sudo -u ceph mkdir /var/lib/ceph/mgr/ceph-`hostname -s`

2. Create the authentication key:

ceph auth get-or-create mgr.`hostname -s` mon 'allow profile mgr' osd 'allow *' mds 'allow *' > /var/lib/ceph/mgr/ceph-`hostname -s`/keyring

Output:

[root@node01 ~]# ceph auth get-or-create mgr.`hostname -s` mon 'allow profile mgr' osd 'allow *' mds 'allow *' > /var/lib/ceph/mgr/ceph-`hostname -s`/keyring

[root@node01 ~]# cat /var/lib/ceph/mgr/ceph-`hostname -s`/keyring

[mgr.node01]

key = AQCdZd9kPTGvFBAAArWhRWZqpNgVKgkQoYjtcA==

3. Start mgr:

systemctl start ceph-mgr@`hostname -s` && systemctl enable ceph-mgr@`hostname -s`

V. Deploy the Metadata Servers (mds)

1. Create the mds data directory:

sudo -u ceph mkdir -p /var/lib/ceph/mds/ceph-`hostname -s`

2. Create the keyring:

ceph-authtool --create-keyring /var/lib/ceph/mds/ceph-`hostname -s`/keyring --gen-key -n mds.`hostname -s`

Output:

[root@node01 ~]# ceph-authtool --create-keyring /var/lib/ceph/mds/ceph-node01/keyring --gen-key -n mds.node01

creating /var/lib/ceph/mds/ceph-node01/keyring

[root@node01 ~]# cat /var/lib/ceph/mds/ceph-node01/keyring

[mds.node01]

key = AQCTZt9kycWODhAA/0jjsASGYrQgnFmq36+qbQ==

3. Import the keyring into the cluster and set its ownership:

ceph auth add mds.`hostname -s` osd "allow rwx" mds "allow" mon "allow profile mds" -i /var/lib/ceph/mds/ceph-`hostname -s`/keyring

chown ceph:ceph /var/lib/ceph/mds/ceph-`hostname -s`/keyring

Start mds:

systemctl start ceph-mds@node01 && systemctl enable ceph-mds@node01

4. CephFS requires two pools: cephfs_data stores file data and cephfs_metadata stores file metadata.

Create the data pool:

ceph osd pool create cephfs_data 4

Create the metadata pool:

ceph osd pool create cephfs_metadata 4

Create the file system (the metadata pool comes first, then the data pool):

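ceph fs new cephfs cephfs_metadata cephfs_data

Add another data pool. The pool, cephfs_data02 in this example, must already exist; create it with ceph osd pool create first: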

ceph fs add_data_pool cephfs cephfs_data02

Mount the file system with FUSE:

The mount point is /test, which must already exist (for example, create it with mkdir -p /test):

ceph-fuse -n client.admin -r / /test

VI. Deploy the RADOS Gateway (rgw)

1. Create the keyring:

ceph-authtool --create-keyring /etc/ceph/ceph.client.radosgw.keyring

chown ceph:ceph /etc/ceph/ceph.client.radosgw.keyring

Output:

[root@node01 ~]# ceph-authtool --create-keyring /etc/ceph/ceph.client.radosgw.keyring

creating /etc/ceph/ceph.client.radosgw.keyring

[root@node01 ~]# cat /etc/ceph/ceph.client.radosgw.keyring

[root@node01 ~]#

2. Generate the user and key for the ceph-radosgw service:

ceph-authtool /etc/ceph/ceph.client.radosgw.keyring -n client.rgw.node01 --gen-key

Output:

[root@node01 ~]# ceph-authtool /etc/ceph/ceph.client.radosgw.keyring -n client.rgw.node01 --gen-key

[root@node01 ~]# cat /etc/ceph/ceph.client.radosgw.keyring

[client.rgw.node01]

key = AQA+aN9kW/wEHBAAWu1bMUojV9Ala6Hd+LYdEw==

3. Grant the user access capabilities:

ceph-authtool -n client.rgw.node01 --cap osd 'allow rwx' --cap mon 'allow rwx' /etc/ceph/ceph.client.radosgw.keyring

Output:

[root@node01 ~]# ceph-authtool -n client.rgw.node01 --cap osd 'allow rwx' --cap mon 'allow rwx' /etc/ceph/ceph.client.radosgw.keyring

[root@node01 ~]# cat /etc/ceph/ceph.client.radosgw.keyring

[client.rgw.node01]

key = AQA+aN9kW/wEHBAAWu1bMUojV9Ala6Hd+LYdEw==

caps mon = "allow rwx"

caps osd = "allow rwx"

4. Import the keyring into the cluster:

ceph -k /etc/ceph/ceph.client.admin.keyring auth add client.rgw.node01 -i /etc/ceph/ceph.client.radosgw.keyring

5. Edit the configuration file by adding the following section to /etc/ceph/ceph.conf:

[client.rgw.node01]

host = node01

keyring = /etc/ceph/ceph.client.radosgw.keyring  # important: must point to the keyring created above

log file = /var/log/ceph/ceph-rgw-node01.log

rgw frontends = civetweb port=8080 num_threads=10000

rgw thread pool size = 512

rgw_s3_auth_use_rados = true

rgw_s3_auth_use_keystone = false

rgw_s3_auth_aws4_force_boto2_compat = false

rgw_s3_auth_use_ldap = false

rgw_s3_success_create_obj_status = 0

rgw_relaxed_s3_bucket_names = false

6. Start the rgw service:

systemctl start ceph-radosgw@rgw.node01 && systemctl enable ceph-radosgw@rgw.node01
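The steps above configure RGW on node01 only. To run a gateway on node02 and node03 as well, repeat the keyring steps with `client.rgw.<hostname>` and add a matching section to ceph.conf. The following is a hedged sketch that prints such a section for any host, mirroring the node01 settings from step 5. The helper name `rgw_conf_section` is hypothetical, and the default port 8080 is simply copied from step 5. The remaining `rgw_s3_*` options from step 5 can be appended unchanged.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a [client.rgw.<host>] section that mirrors
# the node01 example (host name and log file adjusted per host).
set -euo pipefail

rgw_conf_section() {
  local host="$1" port="${2:-8080}"
  cat <<EOF
[client.rgw.${host}]
host = ${host}
keyring = /etc/ceph/ceph.client.radosgw.keyring
log file = /var/log/ceph/ceph-rgw-${host}.log
rgw frontends = civetweb port=${port} num_threads=10000
rgw thread pool size = 512
EOF
}
```

For example, on node02 you could run `rgw_conf_section node02 >> /etc/ceph/ceph.conf`, followed by `systemctl start ceph-radosgw@rgw.node02`, assuming client.rgw.node02 has been created and imported as in steps 1 to 4.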

VII. References