Common Hadoop operations commands: hdfs fsck

Hadoop 2.x consists mainly of two parts: HDFS and YARN.

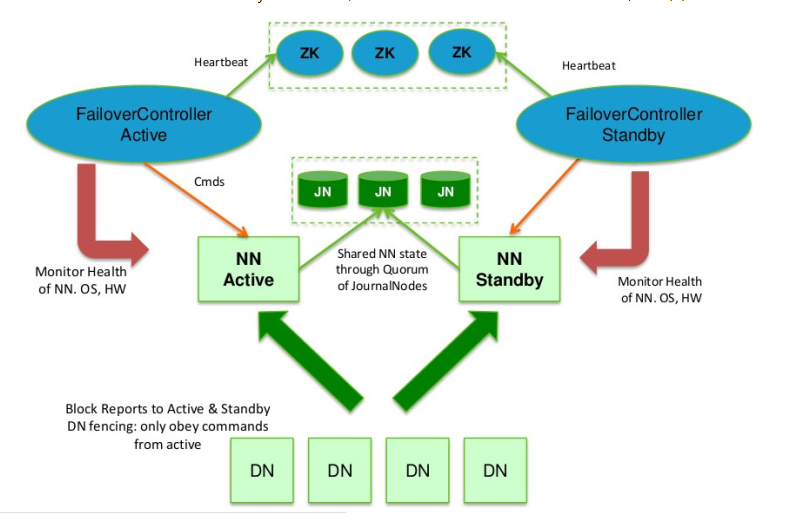

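The roles related to HDFS are: NameNode (two of them in a high-availability setup), DataNode (several), JournalNode (an odd number, usually three; they hold the shared edit log that HA clusters rely on for failover), Failover Controller (it monitors the NameNode below it and reports the NameNode's health up to ZooKeeper), and HttpFS (an HTTP interface to HDFS; through the WebHDFS REST API it supports reads, writes, and other access to HDFS, and it lets Hue work with a high-availability HDFS cluster).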
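To make the HttpFS role a bit more concrete, here is a minimal sketch of how a WebHDFS REST URL is put together. It only builds the URL and does not contact any server. The helper name `webhdfs_url` is made up for this example, and the host `dip001`, port 14000 (the usual HttpFS default), and user `dip` are assumptions; substitute the values of your own cluster.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(host, port, path, op, **params):
    """Build a URL of the form http://host:port/webhdfs/v1/<path>?op=OP&...

    Hypothetical helper for illustration; it performs no network access.
    """
    query = urlencode({"op": op, **params})
    return f"http://{host}:{port}/webhdfs/v1{quote(path)}?{query}"


# Assumed values: HttpFS on dip001:14000, simple authentication as user "dip".
url = webhdfs_url("dip001", 14000, "/test/test.txt", "GETFILESTATUS",
                  **{"user.name": "dip"})
print(url)
```

The resulting URL can be passed to any HTTP client (for example `curl`) to fetch the file status as JSON, provided an HttpFS or WebHDFS endpoint is actually listening at that address.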

    Usage: hdfs [--config confdir] [--loglevel loglevel] COMMAND
           where COMMAND is one of:
      dfs                  run a filesystem command on the file systems supported in Hadoop.
      classpath            prints the classpath
      namenode -format     format the DFS filesystem
      secondarynamenode    run the DFS secondary namenode
      namenode             run the DFS namenode
      journalnode          run the DFS journalnode
      zkfc                 run the ZK Failover Controller daemon
      datanode             run a DFS datanode
      dfsadmin             run a DFS admin client
      haadmin              run a DFS HA admin client
      fsck                 run a DFS filesystem checking utility
      balancer             run a cluster balancing utility
      jmxget               get JMX exported values from NameNode or DataNode.
      mover                run a utility to move block replicas across storage types
      oiv                  apply the offline fsimage viewer to an fsimage
      oiv_legacy           apply the offline fsimage viewer to an legacy fsimage
      oev                  apply the offline edits viewer to an edits file
      fetchdt              fetch a delegation token from the NameNode
      getconf              get config values from configuration
      groups               get the groups which users belong to
      snapshotDiff         diff two snapshots of a directory or diff the
                           current directory contents with a snapshot
      lsSnapshottableDir   list all snapshottable dirs owned by the current user
                           Use -help to see options
      portmap              run a portmap service
      nfs3                 run an NFS version 3 gateway
      cacheadmin           configure the HDFS cache
      crypto               configure HDFS encryption zones
      storagepolicies      list/get/set block storage policies
      version              print the version

Checking the health of a file on HDFS

    [dip@dip001 ~]$ hdfs fsck /test/test.txt
    Connecting to namenode via http://dip001:50070
    FSCK started by dip (auth:SIMPLE) from /172.21.25.127 for path /test/test.txt at Wed Nov 28 10:05:24 CST 2018
    # health status
    .Status: HEALTHY
    # total size
     Total size: 1431 B
     Total dirs: 0
     Total files: 1
     Total symlinks: 0
     Total blocks (validated): 1 (avg. block size 1431 B)
     Minimally replicated blocks: 1 (100.0 %)
     Over-replicated blocks: 0 (0.0 %)
     Under-replicated blocks: 0 (0.0 %)
     Mis-replicated blocks: 0 (0.0 %)
     Default replication factor: 3
     Average block replication: 3.0
     Corrupt blocks: 0
     Missing replicas: 0 (0.0 %)
     Number of data-nodes: 3
     Number of racks: 1
    FSCK ended at Wed Nov 28 10:05:24 CST 2018 in 1 milliseconds

    The filesystem under path '/test/test.txt' is HEALTHY
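For routine monitoring it can be handy to read this summary from a script instead of by eye. The following is a rough sketch, assuming the fsck output has been captured as text with one field per line, the way a terminal prints it. The names `parse_fsck_summary`, `leading_int`, and `is_healthy` are hypothetical helpers written for this example, not part of Hadoop, and the health rule shown is only one possible policy.

```python
import re

# A few summary lines taken from the fsck run on /test/test.txt.
SAMPLE = """\
.Status: HEALTHY
 Total size: 1431 B
 Total blocks (validated): 1 (avg. block size 1431 B)
 Under-replicated blocks: 0 (0.0 %)
 Corrupt blocks: 0
 Missing replicas: 0 (0.0 %)
"""

# "Key: value" with whitespace after the first colon; optional leading
# progress dots. Lines such as "Connecting ... http://..." do not match.
FIELD = re.compile(r"^\.*\s*([^:]+):\s+(\S.*)$")


def parse_fsck_summary(text):
    """Return a dict mapping summary field names to their raw string values."""
    fields = {}
    for line in text.splitlines():
        match = FIELD.match(line.strip())
        if match:
            fields[match.group(1).strip()] = match.group(2).strip()
    return fields


def leading_int(value):
    """Extract the integer at the start of a value such as '0 (0.0 %)'."""
    match = re.match(r"\d+", value)
    return int(match.group()) if match else None


def is_healthy(fields):
    """Assumed policy: status is HEALTHY and no corrupt blocks are reported."""
    return (fields.get("Status") == "HEALTHY"
            and leading_int(fields.get("Corrupt blocks", "0")) == 0)


fields = parse_fsck_summary(SAMPLE)
print(fields["Status"], is_healthy(fields))
```

In practice the text would come from running `hdfs fsck <path>` and capturing its standard output; the sample string here just stands in for that so the sketch runs without a cluster.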
