OpenStack Basics

1. What is cloud computing?

Cloud computing is built on virtualization technology and follows a pay-as-you-go pricing model.

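2. Why use cloud computing?

Small company: in year one, 20+ staff and a budget of 5 million (500w); hiring one ops engineer costs 15K (10 servers at 15K each, IDC hosting at 8K per server per year, 100M bandwidth, 5 public IPs, 10K/month). Buying 10 cloud hosts instead: 600 × 10 = 6000

Large company: add cluster nodes for promotional events, rent out capacity during idle periods, oversell (kvm, ksm)

16G, kvm, 64G (ksm), gold-tier customers (2 million+/month)

512G physical server

90% users, 10% cloud vendor

30% users, 70% cloud vendor

4G

8 machines at 1G each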

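3. Cloud computing service types

IDC

IaaS (Infrastructure as a Service): ECS cloud hosts; you deploy the environment yourself and manage your own code and data

PaaS (Platform as a Service), e.g. docker: provides the runtime environment for software (php, java, python, go, c#, nodejs); you manage your own code and data

SaaS (Software as a Service): enterprise email, cdn, rds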

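4. What does cloud computing IaaS provide? A management platform for kvm virtualization (with billing)

kvm: 1,000 hosts (each running an agent) producing 20,000 virtual machines

Per-VM details: how do you track hardware resources and IP usage?

VM management platform: every virtual machine is managed and tracked in a database

5. OpenStack implements cloud computing IaaS. It is an open-source cloud computing platform released under the Apache 2.0 license. Other cloud platforms: Alibaba Cloud (Apsara platform), QingCloud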

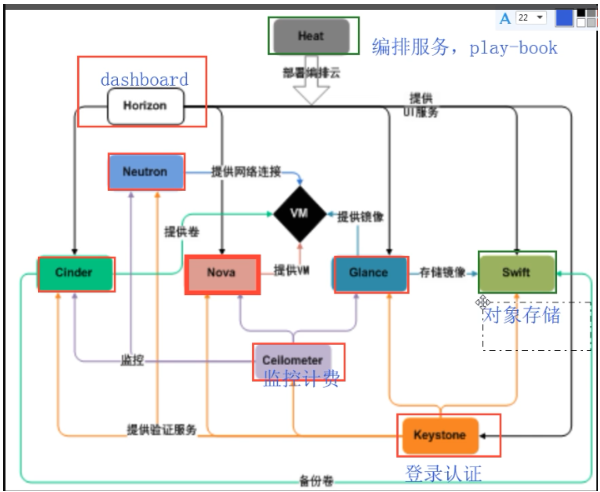

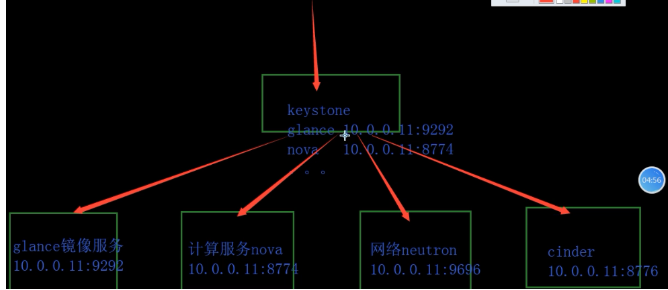

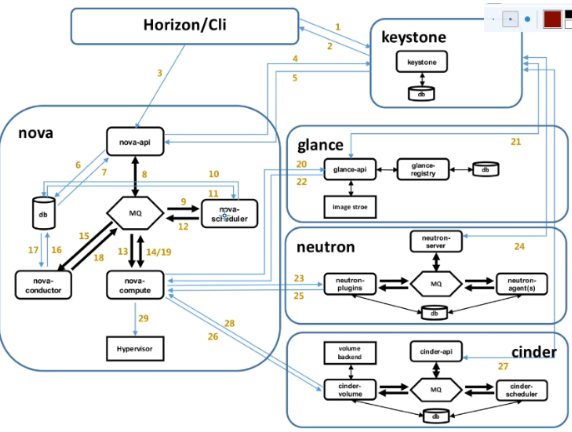

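6. OpenStack (SOA architecture)

Cloud platform (keystone identity service, glance image service, nova compute service, neutron networking service, cinder storage service, horizon web UI)

Each service needs: a database, a message queue, memcached caching, time synchronization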

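MVC (model view controller)

Home: www.jd.com/index.html

Flash sale: www.jd.com/miaosha/index.html

Coupons: www.jd.com/juan/index.html

Membership: www.jd.com/plus/index.html

Login: www.jd.com/login/index.html

nginx+php+mysql (500 tables)

SOA (split by business function, turning each function into an independent web service): tens of millions of concurrent users

Home: www.jd.com/index.html (5 tables) + cache + web + file storage

Flash sale: www.jd.com/miaosha/index.html (15 tables)

Coupons: www.jd.com/juan/index.html (15 tables)

Membership: www.jd.com/plus/index.html (15 tables)

Login: www.jd.com/login/index.html (15 tables)

200 business functions

Microservice architecture: hundreds of millions of users

dubbo, open-sourced by Alibaba

Spring Boot

Automated code deployment: Jenkins, GitLab CI

Automated code quality checks at deployment: sonarqube

OpenStack releases

Named A-Z, starting from D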

7. Virtual machine planning

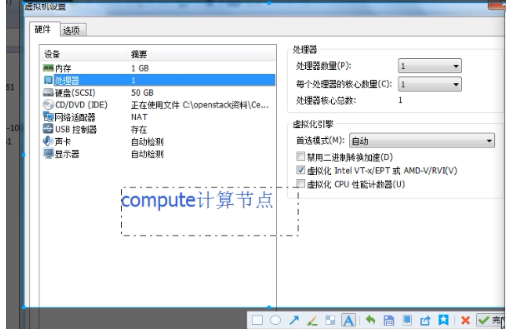

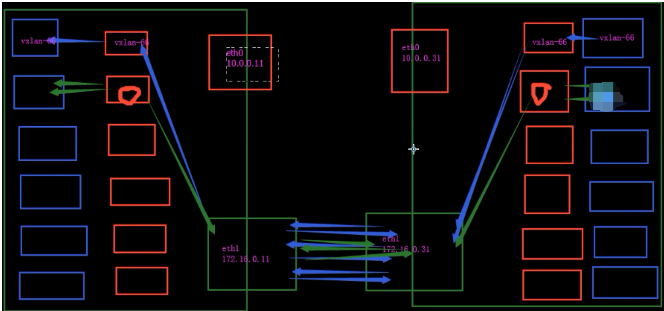

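controller: 3G memory, CPU virtualization enabled, ip: 10.0.0.11

compute1: 1G memory, CPU virtualization enabled (required), ip: 10.0.0.31

On each node, set the hostname, IP address, and hosts resolution, then test connectivity by pinging Baidu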
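The hosts-resolution step can be scripted so that it is safe to run more than once. The following is a minimal sketch, not part of the original notes: `add_host_entry` is a hypothetical helper name, and the demo writes to a scratch file rather than the real /etc/hosts.

```shell
#!/bin/bash
# Hypothetical helper: append "IP NAME" to a hosts file only when NAME is not already listed.
add_host_entry() {
    local ip="$1" name="$2" hosts_file="${3:-/etc/hosts}"
    # -w matches NAME as a whole word, so "compute1" does not match "compute10"
    if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Demo against a scratch file; on a real node pass /etc/hosts and run as root.
demo_hosts="$(mktemp)"
add_host_entry 10.0.0.11 controller "$demo_hosts"
add_host_entry 10.0.0.31 compute1   "$demo_hosts"
add_host_entry 10.0.0.31 compute1   "$demo_hosts"   # repeated call adds nothing
cat "$demo_hosts"
```

Running the same function on every node keeps the name-to-IP mapping identical across the controller and compute nodes.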

8. Configure the yum repositories

mount /dev/cdrom /mnt

Upload openstack_rpm.tar.gz to /opt with rz and extract it

Generate the repo configuration file

cat /etc/yum.repos.d/local.repo

echo '[local]
name=local
baseurl=file:///mnt
gpgcheck=0

[openstack]
name=openstack
baseurl=file:///opt/repo
gpgcheck=0' >/etc/yum.repos.d/local.repo

echo 'mount /dev/cdrom /mnt' >>/etc/rc.local

chmod +x /etc/rc.d/rc.local
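As a sketch of the repo step above, the same two repository sections can be written by a small function, which makes it easy to reuse when more nodes are added. `write_local_repo` is a hypothetical name introduced here; the section contents are taken from the notes, and the demo writes to a scratch file instead of /etc/yum.repos.d/local.repo.

```shell
#!/bin/bash
# Hypothetical helper: write the [local] and [openstack] repo sections to the given path.
write_local_repo() {
    local target="$1"
    printf '%s\n' \
        '[local]' \
        'name=local' \
        'baseurl=file:///mnt' \
        'gpgcheck=0' \
        '' \
        '[openstack]' \
        'name=openstack' \
        'baseurl=file:///opt/repo' \
        'gpgcheck=0' > "$target"
}

# Demo: write to a scratch file and show it.
demo_repo="$(mktemp)"
write_local_repo "$demo_repo"
cat "$demo_repo"
```

On a real node the target would be /etc/yum.repos.d/local.repo, followed by `yum makecache` to confirm that both repositories resolve.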

9. Install the base services

OpenStack documentation:

https://docs.openstack.org/mitaka/zh_CN/install-guide-rdo/

Run on all nodes

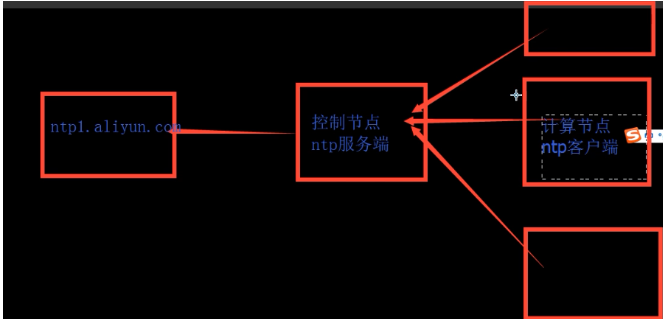

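a: Time synchronization

Controller node:

vim /etc/chrony.conf

Change line 26 to:

server ntp6.aliyun.com iburst

allow 10.0.0.0/24

systemctl restart chronyd

Compute node:

vim /etc/chrony.conf

Change line 3 to:

server 10.0.0.11 iburst

systemctl restart chronyd

Run date on both nodes at the same time to check that the clocks are in sync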
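Comparing `date` output by eye works for two nodes; a scripted check scales better. The sketch below assumes epoch timestamps collected from each node (for example with `date +%s`); `clock_skew_ok` and the 2-second default tolerance are illustrative choices, not values from the original notes.

```shell
#!/bin/bash
# Hypothetical helper: succeed when two epoch timestamps differ by at most MAX seconds.
clock_skew_ok() {
    local local_ts="$1" remote_ts="$2" max_skew="${3:-2}"
    local diff=$(( local_ts - remote_ts ))
    # Take the absolute value of the difference
    if [ "$diff" -lt 0 ]; then
        diff=$(( -diff ))
    fi
    [ "$diff" -le "$max_skew" ]
}

# Demo with fixed numbers; on real nodes the second value could come from
#   ssh 10.0.0.11 date +%s
if clock_skew_ok 1700000000 1700000001; then
    echo "clocks in sync"
else
    echo "clock skew too large"
fi
```

`chronyc sources` on each node shows which time server chrony is actually using, which helps when the check fails.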

b: Install the OpenStack client and openstack-selinux on all nodes

On a freshly provisioned machine:

rm -rf /etc/yum.repos.d/local.repo

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

ll /etc/yum.repos.d

yum list|grep openstack

yum install centos-release-openstack-ocata.noarch -y

ll /etc/yum.repos.d

yum install python-openstackclient openstack-selinux -y

Controller node only

c: Install mariadb

yum install mariadb mariadb-server python2-PyMySQL -y

php,php-mysql

echo '[mysqld]
bind-address=10.0.0.11
default-storage-engine=innodb
innodb_file_per_table
max_connections=4096
collation-server=utf8_general_ci
character-set-server=utf8' >/etc/my.cnf.d/openstack.cnf

systemctl start mariadb

systemctl enable mariadb

mysql_secure_installation

Press Enter

n

y

y

y

y

d: Install rabbitmq and create a user

yum install rabbitmq-server -y

systemctl start rabbitmq-server.service

systemctl enable rabbitmq-server.service

rabbitmqctl add_user openstack RABBIT_PASS

Creating user "openstack" ...

...done.

e: Grant the openstack user configure, write, and read permissions:

# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/" ...

...done.

f: Enable the rabbitmq management plugin

rabbitmq-plugins enable rabbitmq_management

Browse to http://10.0.0.11:15672 (default user: guest, default password: guest)

g: memcached caches tokens   # memcached listens on port 11211

yum install memcached python-memcached -y

sed -i 's#127.0.0.1#10.0.0.11#g' /etc/sysconfig/memcached

systemctl restart memcached.service

systemctl enable memcached.service

PHP redis php-redis

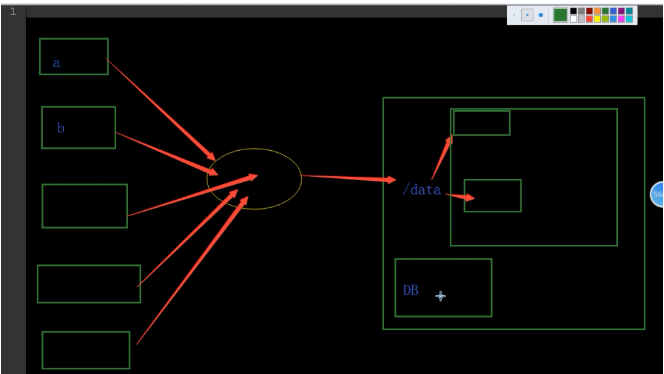

Object storage

Each file is recorded in a database, which makes it easy to search for and store.

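10. keystone identity service

keystone functions: authentication, authorization, service catalog

Authentication: account and password

Authorization: permission management

Service catalog: a phone book of services

General steps for installing an OpenStack service:

1: Create the database and grant privileges

2: Create the service user in keystone and assign a role

3: Create the service in keystone and register its API

4: Install the service's packages

5: Edit the configuration files

database connection

keystone authentication and authorization details

rabbitmq connection details

other settings

6: Sync the database to create the tables

7: Start the services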
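Step 1 of the list above follows the same pattern for every service, so it can be sketched as a generator that prints the SQL for a given database, user, and password. `service_db_sql` is a hypothetical helper introduced for illustration; the statements mirror the CREATE DATABASE and GRANT commands used throughout these notes.

```shell
#!/bin/bash
# Hypothetical helper: print the "create database and grant privileges" SQL for one service.
service_db_sql() {
    local db="$1" user="$2" pass="$3"
    printf "CREATE DATABASE %s;\n" "$db"
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'localhost' IDENTIFIED BY '%s';\n" "$db" "$user" "$pass"
    # %% prints a literal percent sign, the MySQL wildcard host
    printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$db" "$user" "$pass"
}

# Demo: print the statements for glance without touching any database.
service_db_sql glance glance GLANCE_DBPASS
```

On the controller the output could be piped into the `mysql` client once it has been reviewed; printing first keeps the step inspectable.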

keystone token providers: UUID, fernet, PKI;

these are all just different ways of generating keystone's random token strings

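a: Create the database and grant privileges

1. Complete the following steps to create the database

Connect to the database server as the root user with the database client:

mysql

Create the keystone database:

CREATE DATABASE keystone;

Grant appropriate privileges on the keystone database: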

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \

IDENTIFIED BY 'KEYSTONE_DBPASS';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \

IDENTIFIED BY 'KEYSTONE_DBPASS';

Replace KEYSTONE_DBPASS with a suitable password.

Exit the database client.

b: Install the keystone packages

yum install openstack-keystone httpd mod_wsgi -y

c: Edit the configuration file

\cp /etc/keystone/keystone.conf{,.bak}

grep -Ev '^$|#' /etc/keystone/keystone.conf.bak > /etc/keystone/keystone.conf
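To see what the `grep -Ev` filter in this step keeps and discards, here is a small self-contained demonstration on a scratch file. `strip_config` is a hypothetical wrapper name; the sample lines are made up for illustration and are not the real keystone.conf defaults.

```shell
#!/bin/bash
# Hypothetical wrapper around the filter used above:
# drop empty lines and every line that contains a '#'.
strip_config() {
    grep -Ev '^$|#' "$1"
}

# Demo on a scratch file with a comment, a blank line, and two real settings.
sample="$(mktemp)"
printf '%s\n' '# a comment' '' '[DEFAULT]' '#admin_token = <None>' 'verbose = true' > "$sample"
strip_config "$sample"
```

Note that the pattern removes any line containing `#`, including a setting with a trailing inline comment, so it is worth diffing the result against the .bak file when a config is known to use inline comments.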

[DEFAULT]

...

admin_token = ADMIN_TOKEN

[database]

...

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

[token]

...

provider = fernet

yum install openstack-utils -y

openstack-config --set /etc/keystone/keystone.conf DEFAULT admin_token ADMIN_TOKEN

openstack-config --set /etc/keystone/keystone.conf database connection mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

openstack-config --set /etc/keystone/keystone.conf token provider fernet

# Verify

md5sum /etc/keystone/keystone.conf

d: Sync the database

mysql keystone -e 'show tables;'

su -s /bin/sh -c "keystone-manage db_sync" keystone

mysql keystone -e 'show tables;'

e: Initialize the fernet keys

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

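f: Configure the Apache HTTP server

1. Edit /etc/httpd/conf/httpd.conf and set the ServerName option to the controller node:

echo "ServerName controller" >>/etc/httpd/conf/httpd.conf   # on CentOS 6, apache starts very quickly

Create the file /etc/httpd/conf.d/wsgi-keystone.conf with the following content.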

vi /etc/httpd/conf.d/wsgi-keystone.conf

echo 'Listen 5000

Listen 35357

<VirtualHost *:5000>

WSGIDaemonProcess keystone-public processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

WSGIProcessGroup keystone-public

WSGIScriptAlias / /usr/bin/keystone-wsgi-public

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

ErrorLogFormat "%{cu}t %M"

ErrorLog /var/log/httpd/keystone-error.log

CustomLog /var/log/httpd/keystone-access.log combined

<Directory /usr/bin>

Require all granted

</Directory>

</VirtualHost>

<VirtualHost *:35357>

WSGIDaemonProcess keystone-admin processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

WSGIProcessGroup keystone-admin

WSGIScriptAlias / /usr/bin/keystone-wsgi-admin

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

ErrorLogFormat "%{cu}t %M"

ErrorLog /var/log/httpd/keystone-error.log

CustomLog /var/log/httpd/keystone-access.log combined

<Directory /usr/bin>

Require all granted

</Directory>

</VirtualHost>' >/etc/httpd/conf.d/wsgi-keystone.conf

Start the Apache HTTP service and configure it to start at boot:

# systemctl enable httpd.service

# systemctl start httpd.service

h. Create the service and register the API:

1. Configure the authentication token:

export OS_TOKEN=ADMIN_TOKEN   # authentication token

export OS_URL=http://controller:35357/v3   # endpoint URL

export OS_IDENTITY_API_VERSION=3   # identity API version

env|grep OS

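11. Create the service entity and API endpoints

openstack service create --name keystone --description "OpenStack Identity" identity   # create the service entity for the identity service

Note: OpenStack generates IDs dynamically, so the output you see will differ from the sample command output.

Create the identity service API endpoints: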

$ openstack endpoint create --region RegionOne \

identity public http://controller:5000/v3

$ openstack endpoint create --region RegionOne \

identity internal http://controller:5000/v3

$ openstack endpoint create --region RegionOne \

identity admin http://controller:35357/v3

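Note: Each service added to the OpenStack environment requires one or more service entities and three API endpoint variants (public, internal, admin) in the identity service.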
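Because every service registers the same three endpoint variants, the commands can be generated in a loop. The sketch below only prints the commands (a dry run) so it can be reviewed before anything is executed; `endpoint_cmds` is a hypothetical helper, and the optional third argument covers services such as identity whose admin URL differs.

```shell
#!/bin/bash
# Hypothetical helper: print the three "endpoint create" commands for one service.
# $1 = service type, $2 = public/internal URL, $3 = admin URL (defaults to $2)
endpoint_cmds() {
    local service="$1" public_url="$2" admin_url="${3:-$2}"
    local iface url
    for iface in public internal admin; do
        if [ "$iface" = admin ]; then
            url="$admin_url"
        else
            url="$public_url"
        fi
        echo "openstack endpoint create --region RegionOne $service $iface $url"
    done
}

# Demo: identity uses a separate admin port, image uses one URL for all three.
endpoint_cmds identity http://controller:5000/v3 http://controller:35357/v3
endpoint_cmds image http://controller:9292
```

Printing instead of executing is a deliberate choice here: endpoint URLs that contain shell-sensitive characters, such as the `%(tenant_id)s` suffix used by compute, still need the same escaping as in the hand-written commands.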

I: Create the domain, projects (tenants), users, and roles

Create the default domain:

openstack domain create --description "Default Domain" default

Create the admin project:

openstack project create --domain default --description "Admin Project" admin

Note: OpenStack generates IDs dynamically, so the output you see will differ from the sample command output.

Create the admin user:

$ openstack user create --domain default --password-prompt admin

Create the admin role:

$ openstack role create admin

Add the admin role to the admin project and user:

$ openstack role add --project admin --user admin admin

Create the service project:

$ openstack project create --domain default --description "Service Project" service

Create the demo project:

$ openstack project create --domain default --description "Demo Project" demo

Create the demo user:

$ openstack user create --domain default --password-prompt demo

User Password:

Repeat User Password:

Create the user role:

$ openstack role create user

Add the user role to the demo project and user:

$ openstack role add --project demo --user demo user

openstack token issue

unset OS_TOKEN   # unset the token

logout   # log out to clear the token environment

env|grep OS

j: Create client environment scripts for the admin and demo projects and users.

vi admin-openrc

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

openstack token issue

openstack user list

logout   # after logging out, commands fail until the environment variables are declared again
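Since the variables vanish on logout, keeping them in a file that can be sourced is the usual remedy. The sketch below generates such a file for any project and user; `write_openrc` is a hypothetical helper, the variable names are the ones listed above, and the auth URL is passed as a parameter rather than assumed.

```shell
#!/bin/bash
# Hypothetical helper: write an openrc file for one project/user pair.
# $1 = output file, $2 = project, $3 = user, $4 = password, $5 = auth URL
write_openrc() {
    local file="$1" project="$2" user="$3" pass="$4" auth_url="$5"
    {
        printf 'export OS_PROJECT_DOMAIN_NAME=default\n'
        printf 'export OS_USER_DOMAIN_NAME=default\n'
        printf 'export OS_PROJECT_NAME=%s\n' "$project"
        printf 'export OS_USERNAME=%s\n' "$user"
        printf 'export OS_PASSWORD=%s\n' "$pass"
        printf 'export OS_AUTH_URL=%s\n' "$auth_url"
        printf 'export OS_IDENTITY_API_VERSION=3\n'
        printf 'export OS_IMAGE_API_VERSION=2\n'
    } > "$file"
}

# Demo: generate a scratch admin-openrc, load it in a subshell, and show one value.
demo_rc="$(mktemp)"
write_openrc "$demo_rc" admin admin ADMIN_PASS http://controller:35357/v3
( . "$demo_rc"; echo "OS_USERNAME=$OS_USERNAME" )
```

After a new login, `source admin-openrc` restores the environment so that `openstack token issue` works again. Values are written unquoted, so this sketch assumes passwords without spaces or shell metacharacters.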

glance image service

1. Create the database and grant privileges

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \

IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \

IDENTIFIED BY 'GLANCE_DBPASS';

2. Create the glance user in keystone and assign a role

openstack user create --domain default --password GLANCE_PASS glance   # create the glance user

openstack role add --project service --user glance admin   # add the admin role to the glance user and the service project

3. Create the service in keystone and register the API

openstack service create --name glance \

--description "OpenStack Image" image

openstack endpoint create --region RegionOne \

image public http://controller:9292

openstack endpoint create --region RegionOne \

image internal http://controller:9292

openstack endpoint create --region RegionOne \

image admin http://controller:9292

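Install and configure the glance components

Note: The default configuration files vary by distribution. You may need to add these sections and options rather than modify existing ones. Also, an ellipsis (...) in a configuration snippet indicates default options that you should keep.

1. Install the packages

yum install openstack-glance -y

2. Edit the service configuration files

cp /etc/glance/glance-api.conf{,.bak}

grep '^[a-zA-Z\[]' /etc/glance/glance-api.conf.bak >/etc/glance/glance-api.conf

openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@controller/glance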

openstack-config --set /etc/glance/glance-api.conf glance_store stores file,http

openstack-config --set /etc/glance/glance-api.conf glance_store default_store file

openstack-config --set /etc/glance/glance-api.conf glance_store filesystem_store_datadir /var/lib/glance/images

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

#####

cp /etc/glance/glance-registry.conf{,.bak}

grep '^[a-zA-Z\[]' /etc/glance/glance-registry.conf.bak >/etc/glance/glance-registry.conf

openstack-config --set /etc/glance/glance-registry.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_type password

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken project_name service

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken username glance

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken password GLANCE_PASS

openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone

# Verify

md5sum /etc/glance/glance-api.conf

md5sum /etc/glance/glance-registry.conf
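The keystone_authtoken settings are identical for both glance files apart from the file path, and nearly identical for nova and neutron later on. A loop that prints the commands is one way to avoid copy-paste slips such as a split option name. This is a sketch only: `authtoken_cmds` is a hypothetical helper, and it prints the `openstack-config` commands rather than running them.

```shell
#!/bin/bash
# Hypothetical helper: print the keystone_authtoken commands for one config file.
# $1 = config file, $2 = service user, $3 = service password
authtoken_cmds() {
    local conf="$1" user="$2" pass="$3"
    local kv
    for kv in \
        "auth_uri http://controller:5000" \
        "auth_url http://controller:35357" \
        "memcached_servers controller:11211" \
        "auth_type password" \
        "project_domain_name default" \
        "user_domain_name default" \
        "project_name service" \
        "username $user" \
        "password $pass"
    do
        echo "openstack-config --set $conf keystone_authtoken $kv"
    done
}

# Demo: print the commands for both glance configuration files.
authtoken_cmds /etc/glance/glance-api.conf glance GLANCE_PASS
authtoken_cmds /etc/glance/glance-registry.conf glance GLANCE_PASS
```

After reviewing the printed commands, they can be executed on the controller, and the md5sum check above then confirms that both files ended up as expected.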

3. Sync the database

su -s /bin/sh -c "glance-manage db_sync" glance

mysql glance -e "show tables;"

4. Start the services

systemctl enable openstack-glance-api.service \

openstack-glance-registry.service

systemctl start openstack-glance-api.service \

openstack-glance-registry.service

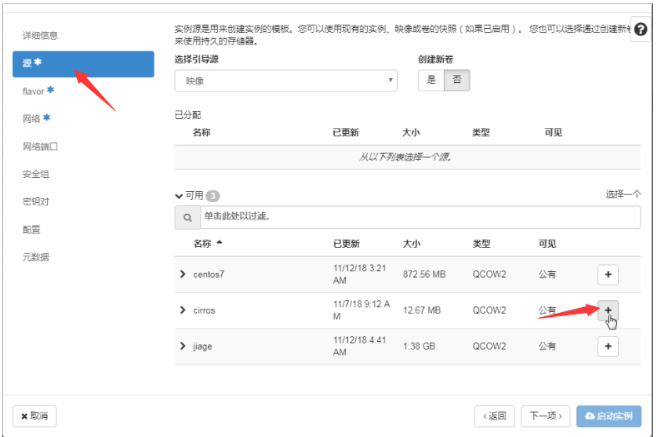

5. Verify

wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img   # download the test image

openstack image create "cirros" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --public

openstack image list

mysql glance -e "show tables;"|grep image

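12. nova compute service

nova-api: accepts and responds to compute API requests from end users.

nova-api-metadata service: accepts metadata requests sent from virtual machines.

nova-compute (multiple) service: actually manages the virtual machines (nova-compute calls libvirt)

nova-scheduler service: the nova scheduler (picks the most suitable nova-compute on which to create a virtual machine)

nova-conductor module: updates virtual machine state in the database on behalf of nova-compute

nova-network: managed virtual machine networking in early OpenStack releases (deprecated, replaced by neutron)

nova-consoleauth and nova-novncproxy: web-based VNC for operating cloud hosts directly

novncproxy: web-based VNC client

On the controller node:

1. Create the databases and grant privileges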

CREATE DATABASE nova_api;

CREATE DATABASE nova;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

2. Create the service user in keystone (glance, nova, neutron) and assign a role

openstack user create --domain default \

--password NOVA_PASS nova

openstack role add --project service --user nova admin

3. Create the service in keystone and register the API

openstack service create --name nova \

--description "OpenStack Compute" compute

openstack endpoint create --region RegionOne \

compute public http://controller:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

compute internal http://controller:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

compute admin http://controller:8774/v2.1/%\(tenant_id\)s

4. Install the service packages

yum install openstack-nova-api openstack-nova-conductor \

openstack-nova-console openstack-nova-novncproxy \

openstack-nova-scheduler -y

5. Edit the service configuration file

cp /etc/nova/nova.conf{,.bak}

grep '^[a-zA-Z\[]' /etc/nova/nova.conf.bak >/etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.0.0.11

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf api_database connection mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

openstack-config --set /etc/nova/nova.conf database connection mysql+pymysql://nova:NOVA_DBPASS@controller/nova

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

openstack-config --set /etc/nova/nova.conf vnc vncserver_listen '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address '$my_ip'

Verify

md5sum /etc/nova/nova.conf

6: Sync the databases

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage db sync" nova

7: Start the services

# systemctl enable openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

# systemctl start openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

openstack compute service list

nova service-list

netstat -tlnup|grep 6080   # noVNC: the web UI requires a login password and cannot be opened directly

ps -ef|grep 2489

On the compute node

nova-compute calls libvirtd to create virtual machines

Install

yum install openstack-nova-compute -y

yum install openstack-utils.noarch -y

Configure

cp /etc/nova/nova.conf{,.bak}

grep '^[a-zA-Z\[]' /etc/nova/nova.conf.bak >/etc/nova/nova.conf

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis osapi_compute,metadata

openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.0.0.31

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled True

openstack-config --set /etc/nova/nova.conf vnc vncserver_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://controller:6080/vnc_auto.html

Verify

md5sum /etc/nova/nova.conf

Start

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl start libvirtd.service openstack-nova-compute.service

nova service-list

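13. neutron networking service

neutron-server (port 9696), the API: accepts and responds to external network management requests

neutron-linuxbridge-agent: creates the bridge interfaces

neutron-dhcp-agent: assigns IP addresses

neutron-metadata-agent: works with nova-metadata-api to customize virtual machines

L3-agent: implements layer-3 networking, vxlan (network layer)

LBaaS: load balancing as a service (Alibaba Cloud SLB)

fwaas

vpnaas

OSI 7 layers

Data link layer (flat network)

Network layer

Public network

On the controller node

1: Create the database and grant privileges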

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \

IDENTIFIED BY 'NEUTRON_DBPASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \

IDENTIFIED BY 'NEUTRON_DBPASS';

2. Create the service user in keystone (glance, nova, neutron) and assign a role

openstack user create --domain default --password NEUTRON_PASS neutron

openstack role add --project service --user neutron admin

3. Create the service in keystone and register the API

openstack service create --name neutron \

--description "OpenStack Networking" network

openstack endpoint create --region RegionOne \

network public http://controller:9696

openstack endpoint create --region RegionOne \

network internal http://controller:9696

openstack endpoint create --region RegionOne \

network admin http://controller:9696

4. Install the service packages

yum install openstack-neutron openstack-neutron-ml2 \

openstack-neutron-linuxbridge ebtables -y

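glance image service

glance-api: query, upload, and download images

glance-registry: modify image properties (disk size, required memory)

cirros, qcow2: virtual machine disk files

xml configuration file

linuxbridge: older, very mature, fewer features, stable

openvswitch: newer, more features, less stable than linuxbridge, more complex to configure

5. Edit the service configuration files

a: Configure the server component

/etc/neutron/neutron.conf

cp /etc/neutron/neutron.conf{,.bak}

grep '^[a-zA-Z\[]' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf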

openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin ml2

openstack-config --set /etc/neutron/neutron.conf DEFAULT service_plugins

openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes True

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes True

openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf nova auth_url http://controller:35357

openstack-config --set /etc/neutron/neutron.conf nova auth_type password

openstack-config --set /etc/neutron/neutron.conf nova project_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova user_domain_name default

openstack-config --set /etc/neutron/neutron.conf nova region_name RegionOne

openstack-config --set /etc/neutron/neutron.conf nova project_name service

openstack-config --set /etc/neutron/neutron.conf nova username nova

openstack-config --set /etc/neutron/neutron.conf nova password NOVA_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

b: Configure the Modular Layer 2 (ML2) plug-in

/etc/neutron/plugins/ml2/ml2_conf.ini

cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}

grep '^[a-zA-Z\[]' /etc/neutron/plugins/ml2/ml2_conf.ini.bak >/etc/neutron/plugins/ml2/ml2_conf.ini

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 type_drivers flat,vlan

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 tenant_network_types

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 mechanism_drivers linuxbridge

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2 extension_drivers port_security

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini ml2_type_flat flat_networks provider

openstack-config --set /etc/neutron/plugins/ml2/ml2_conf.ini securitygroup enable_ipset True

c: Configure the Linux bridge agent

/etc/neutron/plugins/ml2/linuxbridge_agent.ini

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep '^[a-zA-Z\[]' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak >/etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:PROVIDER_INTERFACE_NAME

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan False

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group True

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

d: Configure the DHCP agent

/etc/neutron/dhcp_agent.ini

cp /etc/neutron/dhcp_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/dhcp_agent.ini.bak >/etc/neutron/dhcp_agent.ini

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver neutron.agent.linux.interface.BridgeInterfaceDriver

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT enable_isolated_metadata True

Verify

md5sum /etc/neutron/dhcp_agent.ini

e: /etc/neutron/metadata_agent.ini

cp /etc/neutron/metadata_agent.ini{,.bak}

grep -Ev '^$|#' /etc/neutron/metadata_agent.ini.bak >/etc/neutron/metadata_agent.ini

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_ip controller

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret METADATA_SECRET

# Verify: md5sum /etc/neutron/metadata_agent.ini

f: Edit /etc/nova/nova.conf again

openstack-config --set /etc/nova/nova.conf neutron url http://controller:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS

openstack-config --set /etc/nova/nova.conf neutron service_metadata_proxy True

openstack-config --set /etc/nova/nova.conf neutron metadata_proxy_shared_secret METADATA_SECRET

# Verify

md5sum /etc/nova/nova.conf

6. Sync the database

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

7. Start the services

systemctl restart openstack-nova-api.service

systemctl enable neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

systemctl start neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

mysql neutron -e 'show tables;'

neutron agent-list

nova service-list

Compute node

nova-compute

neutron-linuxbridge

Controller node

10.0.0.11 eth0

Compute node

10.0.0.31 eth1

10.0.0.31 eth3

1. Install the components

yum install openstack-neutron-linuxbridge ebtables ipset -y

openstack-neutron-linuxbridge   # creates the bridge interfaces

2. Configure

/etc/neutron/neutron.conf

cp /etc/neutron/neutron.conf{,.bak}

grep '^[a-zA-Z\[]' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes True

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes True

openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

Verify

md5sum /etc/neutron/neutron.conf

scp -rp 10.0.0.11:/etc/neutron/plugins/ml2/linuxbridge_agent.ini /etc/neutron/plugins/ml2/linuxbridge_agent.ini

cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

[vxlan]

enable_vxlan = False

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

# Start

systemctl restart openstack-nova-compute.service

systemctl enable neutron-linuxbridge-agent.service

systemctl start neutron-linuxbridge-agent.service

neutron agent-list

Install the horizon web UI on the compute node

1. Install

yum install openstack-dashboard -y

2. Configure

/etc/openstack-dashboard/local_settings

cp /etc/openstack-dashboard/local_settings{,.bak}

grep -Ev '^[[:space:]]*(#|$)' /etc/openstack-dashboard/local_settings.bak >/etc/openstack-dashboard/local_settings

cat /etc/openstack-dashboard/local_settings

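OPENSTACK_HOST = "controller"  # point the dashboard at the OpenStack services on the controller node

ALLOWED_HOSTS = ['*', ]  # allow all hosts to access the dashboard

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'controller:11211',
    }
}  # configure the memcached session storage service

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST  # enable the Identity API version 3

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True  # enable support for domains

OPENSTACK_API_VERSIONS = {
    "identity": 3,
    "image": 2,
    "volume": 2,
}  # configure the API versions

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "default"  # default domain for users created via the dashboard

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"  # default role for users created via the dashboard

If you chose networking option 1, disable support for layer-3 networking services: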

OPENSTACK_NEUTRON_NETWORK = {

'enable_router': False,

'enable_quotas': False,

'enable_distributed_router': False,

'enable_ha_router': False,

'enable_lb': False,

'enable_firewall': False,

'enable_vpn': False,

'enable_fip_topology_check': False,

}

Optionally, configure the time zone:

TIME_ZONE = "Asia/Shanghai"

3. Start

systemctl start httpd

4: Work around a known bug

cat /etc/httpd/conf.d/openstack-dashboard.conf

Add: WSGIApplicationGroup %{GLOBAL}

systemctl restart httpd

Browse to http://controller/dashboard   # user: admin, password: ADMIN_PASS

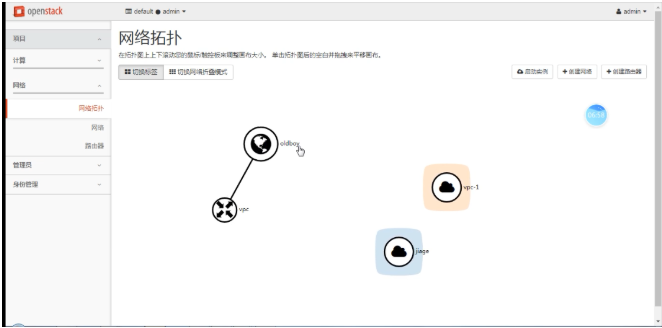

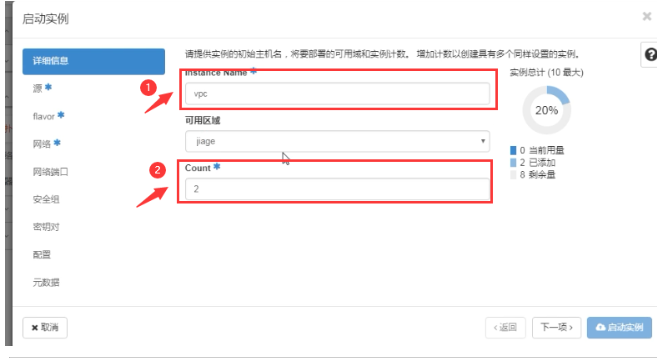

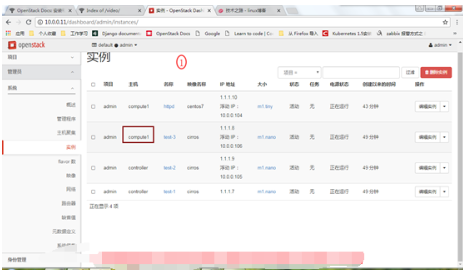

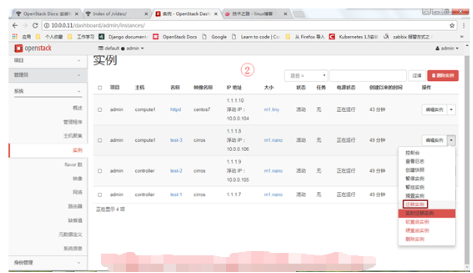

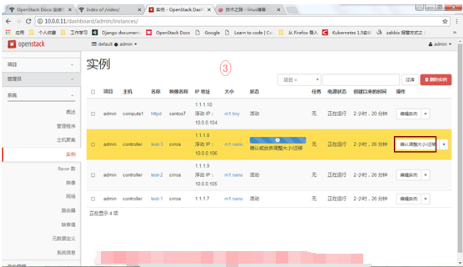

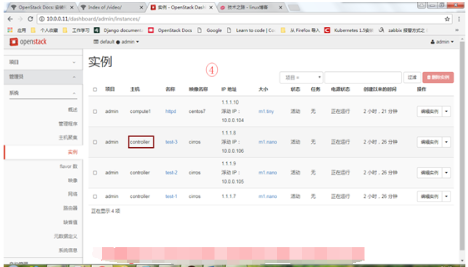

Launch an instance (cloud host)

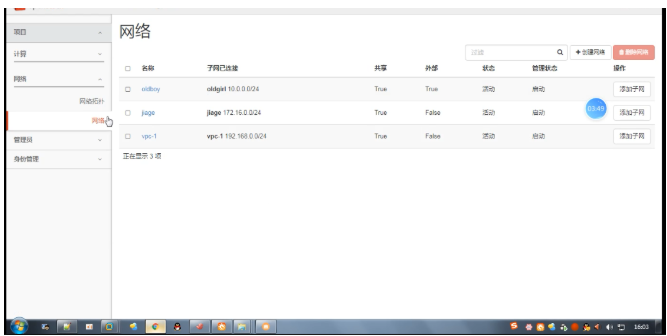

1. Create a network (network name + subnet)

neutron net-create --shared --provider:physical_network provider \
--provider:network_type flat oldboy

neutron subnet-create --name oldgirl \
--allocation-pool start=10.0.0.101,end=10.0.0.250 \
--dns-nameserver 223.5.5.5 --gateway 10.0.0.2 \
oldboy 10.0.0.0/24

2. Create a hardware flavor for the cloud host

openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

3. Create a key pair

ssh-keygen -q -N "" -f ~/.ssh/id_rsa

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

Verify that the public key was added

openstack keypair list

4. Add security group rules

openstack security group rule create --proto icmp default # add a rule to the default security group

openstack security group rule create --proto tcp --dst-port 22 default # allow secure shell (SSH) access

5: Launch an instance:

openstack server create --flavor m1.nano --image cirros \
--nic net-id=NETWORK_ID --security-group default \
--key-name mykey qiangge

neutron net-list # look up the network ID

openstack server list

nova list
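The network ID needed for `--nic net-id=...` can be picked out of the `neutron net-list` table by network name. The following is only a sketch: `net_id_by_name` is a hypothetical helper, and it assumes the usual table layout with `id` in the first column and `name` in the second. The sample row is made up.

```shell
#!/bin/sh
# net_id_by_name NAME: read a "neutron net-list" style table on stdin
# and print the id of the network called NAME.
# Assumption: columns are "| id | name | subnets |".
net_id_by_name() {
    awk -F'|' -v name="$1" '
        { n = $3; gsub(/^ +| +$/, "", n) }
        n == name { id = $2; gsub(/ /, "", id); print id }'
}

# Demonstration with a made-up table row instead of a live cloud.
printf '%s\n' \
    '+--------------------------------------+--------+---------+' \
    '| id                                   | name   | subnets |' \
    '+--------------------------------------+--------+---------+' \
    '| 11111111-2222-3333-4444-555555555555 | oldboy | example |' \
    '+--------------------------------------+--------+---------+' \
    | net_id_by_name oldboy
```

On a real controller the same helper would be fed from `neutron net-list | net_id_by_name oldboy`.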

6: Fix the bug where the instance cannot boot into its system

On the compute node

vim /etc/nova/nova.conf

[libvirt]

cpu_mode = none

virt_type = qemu

systemctl restart openstack-nova-compute.service

16: Add a compute node 10.0.0.32 compute2

Prerequisites:

Set the hostname and the hosts resolution

1: Configure the yum repositories

scp -rp 10.0.0.31:/opt/openstack_rpm.tar.gz .

tar xf openstack_rpm.tar.gz

scp -rp 10.0.0.31:/etc/yum.repos.d/local.repo /etc/yum.repos.d

yum makecache

mount /dev/cdrom /mnt

echo 'mount /dev/cdrom /mnt' >>/etc/rc.d/rc.local

2. Time synchronization

vim /etc/chrony.conf

server 10.0.0.11 iburst

systemctl restart chronyd

date    # or: timedatectl

3. Install the openstack client and openstack-selinux

yum install python-openstackclient.noarch openstack-selinux.noarch -y

4. Install nova-compute

yum install openstack-nova-compute -y

yum install openstack-utils.noarch -y

\cp /etc/nova/nova.conf{,.bak}

grep -Ev '^$|#' /etc/nova/nova.conf.bak >/etc/nova/nova.conf
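To see what the `grep -Ev '^$|#'` filter keeps before overwriting a real config file, it can be tried on a throwaway file first. This is a minimal sketch; `strip_conf` is a hypothetical helper name and the sample contents are made up. Note that the pattern drops every line that contains `#` anywhere, not only comment lines.

```shell
#!/bin/sh
# strip_conf FILE: print FILE without empty lines and without lines
# containing '#', i.e. the same filter used on nova.conf.bak.
strip_conf() {
    grep -Ev '^$|#' "$1"
}

# Demonstration on a temporary sample file.
sample=$(mktemp)
printf '%s\n' '# a comment' '' '[DEFAULT]' '#rpc_backend = rabbit' 'auth_strategy = keystone' > "$sample"
strip_conf "$sample"
rm -f "$sample"
```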

openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 10.0.0.32

openstack-config --set /etc/nova/nova.conf DEFAULT use_neutron True

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver

openstack-config --set /etc/nova/nova.conf glance api_servers http://controller:9292

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_type password

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/nova/nova.conf keystone_authtoken project_name service

openstack-config --set /etc/nova/nova.conf keystone_authtoken username nova

openstack-config --set /etc/nova/nova.conf keystone_authtoken password NOVA_PASS

openstack-config --set /etc/nova/nova.conf oslo_concurrency lock_path /var/lib/nova/tmp

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/nova/nova.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

openstack-config --set /etc/nova/nova.conf vnc enabled True

openstack-config --set /etc/nova/nova.conf vnc vncserver_listen 0.0.0.0

openstack-config --set /etc/nova/nova.conf vnc vncserver_proxyclient_address '$my_ip'

openstack-config --set /etc/nova/nova.conf vnc novncproxy_base_url http://controller:6080/vnc_auto.html

openstack-config --set /etc/nova/nova.conf neutron url http://controller:9696

openstack-config --set /etc/nova/nova.conf neutron auth_url http://controller:35357

openstack-config --set /etc/nova/nova.conf neutron auth_type password

openstack-config --set /etc/nova/nova.conf neutron project_domain_name default

openstack-config --set /etc/nova/nova.conf neutron user_domain_name default

openstack-config --set /etc/nova/nova.conf neutron region_name RegionOne

openstack-config --set /etc/nova/nova.conf neutron project_name service

openstack-config --set /etc/nova/nova.conf neutron username neutron

openstack-config --set /etc/nova/nova.conf neutron password NEUTRON_PASS
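After a long run of `openstack-config --set` commands it helps to read a few values back. The sketch below does that with plain awk so it also works where `openstack-config` is not installed; `ini_get` is a hypothetical helper, and it assumes simple `key = value` lines under `[section]` headers as in the files above.

```shell
#!/bin/sh
# ini_get FILE SECTION KEY: print the value of KEY inside [SECTION].
ini_get() {
    awk -v s="[$2]" -v k="$3" '
        /^\[/ { in_s = ($0 == s); next }      # track the current section
        in_s {
            split($0, kv, "=")
            key = kv[1]; gsub(/^[ \t]+|[ \t]+$/, "", key)
            if (key == k) {
                val = substr($0, index($0, "=") + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", val)
                print val
            }
        }' "$1"
}

# Demonstration on a temporary file with made-up contents.
conf=$(mktemp)
printf '%s\n' '[DEFAULT]' 'my_ip = 10.0.0.32' '[vnc]' 'enabled = True' 'vncserver_listen = 0.0.0.0' > "$conf"
ini_get "$conf" DEFAULT my_ip
ini_get "$conf" vnc vncserver_listen
rm -f "$conf"
```

On the new compute node, `ini_get /etc/nova/nova.conf vnc vncserver_proxyclient_address` is a quick way to confirm that the literal `$my_ip` was written rather than an empty string.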

5. Install neutron-linuxbridge-agent

yum install openstack-neutron-linuxbridge ebtables ipset -y

\cp /etc/neutron/neutron.conf{,.bak}

grep -Ev '^$|#' /etc/neutron/neutron.conf.bak >/etc/neutron/neutron.conf

openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_status_changes True

openstack-config --set /etc/neutron/neutron.conf DEFAULT notify_nova_on_port_data_changes True

openstack-config --set /etc/neutron/neutron.conf database connection mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_type password

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken project_name service

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken username neutron

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken password NEUTRON_PASS

openstack-config --set /etc/neutron/neutron.conf oslo_concurrency lock_path /var/lib/neutron/tmp

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/neutron/neutron.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}

grep '[a-zA-Z\[]' /etc/neutron/plugins/ml2/linuxbridge_agent.ini.bak >/etc/neutron/plugins/ml2/linuxbridge_agent.ini

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini linux_bridge physical_interface_mappings provider:PROVIDER_INTERFACE_NAME

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini vxlan enable_vxlan False

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup enable_security_group True

openstack-config --set /etc/neutron/plugins/ml2/linuxbridge_agent.ini securitygroup firewall_driver neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

6. Start services

systemctl start libvirtd.service openstack-nova-compute.service neutron-linuxbridge-agent.service

systemctl enable libvirtd.service openstack-nova-compute.service neutron-linuxbridge-agent.service

7. Create a virtual machine to check that the new compute node works!

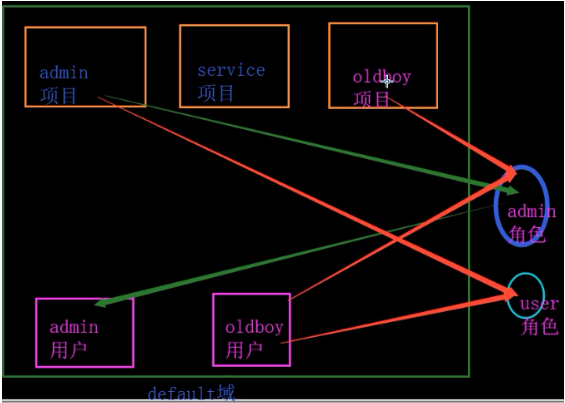

17. Relationship between openstack users, projects, and roles

openstack domain create --description "Default Domain" default

openstack project create --domain default --description "Admin Project" admin

openstack user create --domain default --password ADMIN_PASS admin

openstack role create admin

openstack role add --project admin --user admin admin

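The openstack permission design is not well thought out

Global administrator

Project administrator

Regular user

18: Migrating the glance image service

1: Stop the glance services on the control node

glance-api and glance-registry

Precondition: run on the control node

systemctl stop openstack-glance-api.service openstack-glance-registry.service

systemctl disable openstack-glance-api.service openstack-glance-registry.service

2) Install mariadb on compute2

yum install mariadb mariadb-server python2-PyMySQL -y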

systemctl start mariadb

systemctl enable mariadb

mysql_secure_installation

n

y

y

y

y

y

3: Restore the glance database data

Control node:

mysqldump -B glance >glance.sql

scp glance.sql 10.0.0.32:/root

compute2:

mysql<glance.sql

mysql glance -e 'show tables;'

mysql> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \
IDENTIFIED BY 'GLANCE_DBPASS';

mysql> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \
IDENTIFIED BY 'GLANCE_DBPASS';

4: Install and configure the glance service

yum install openstack-glance openstack-utils -y

scp -rp 10.0.0.11:/etc/glance/glance-api.conf /etc/glance/glance-api.conf

scp -rp 10.0.0.11:/etc/glance/glance-registry.conf /etc/glance/glance-registry.conf

openstack-config --set /etc/glance/glance-api.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@10.0.0.32/glance

openstack-config --set /etc/glance/glance-registry.conf database connection mysql+pymysql://glance:GLANCE_DBPASS@10.0.0.32/glance

5: Start services

systemctl start openstack-glance-api.service openstack-glance-registry.service

systemctl enable openstack-glance-api.service openstack-glance-registry.service

netstat -tnulp

6. Migrate the glance image files

scp -rp 10.0.0.11:/var/lib/glance/images/* /var/lib/glance/images/

chown -R glance:glance /var/lib/glance/images/

qemu-img info /var/lib/glance/images/IMAGE_NAME

7: Update the glance API address in the keystone service catalog

openstack endpoint list|grep image

mysqldump keystone endpoint >endpoint.sql

cp endpoint.sql /opt/

vim endpoint.sql # substitute with the command below

:%s#http://controller:9292#http://10.0.0.32:9292#gc

mysql keystone < endpoint.sql

openstack endpoint list|grep image

Verify on the control node

openstack image list

systemctl restart memcached.service

8. Update the nova configuration file on all nodes

sed -i 's#http://controller:9292#http://10.0.0.32:9292#g' /etc/nova/nova.conf
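Because this substitution edits nova.conf in place on every node, it can be worth rehearsing on a copy and keeping a backup. A minimal sketch, assuming the old URL is exactly `http://controller:9292`; `repoint_glance` is a hypothetical helper and the demo file is made up.

```shell
#!/bin/sh
# repoint_glance FILE NEW_HOST: rewrite the glance API URL in FILE,
# leaving the previous version in FILE.bak.
repoint_glance() {
    sed -i.bak "s#http://controller:9292#http://$2:9292#g" "$1"
}

# Demonstration on a temporary file instead of /etc/nova/nova.conf.
demo=$(mktemp)
printf '%s\n' '[glance]' 'api_servers = http://controller:9292' > "$demo"
repoint_glance "$demo" 10.0.0.32
cat "$demo"
rm -f "$demo" "$demo.bak"
```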

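Restart services

Control node:

systemctl restart openstack-nova-api

Compute nodes:

systemctl restart openstack-nova-compute

9. How to verify all of the steps above

https://docs.openstack.org/image-guide/obtain-images.html (get images from the upstream site, hosted abroad)

http://mirrors.ustc.edu.cn/centos-cloud/centos/7/images/ (get images from a mirror in China)

Upload a new image and create an instance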

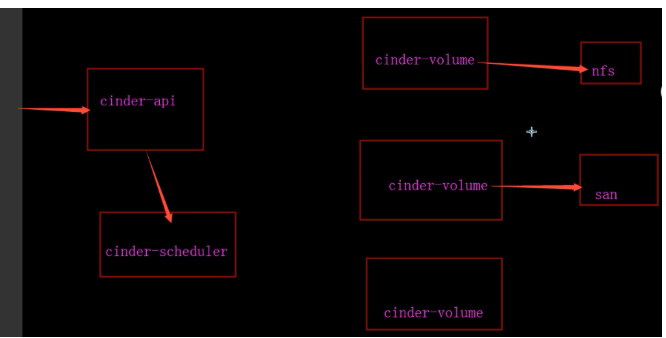

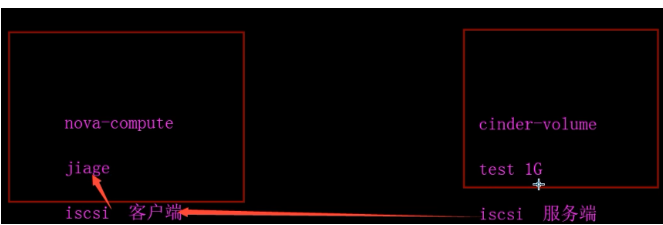

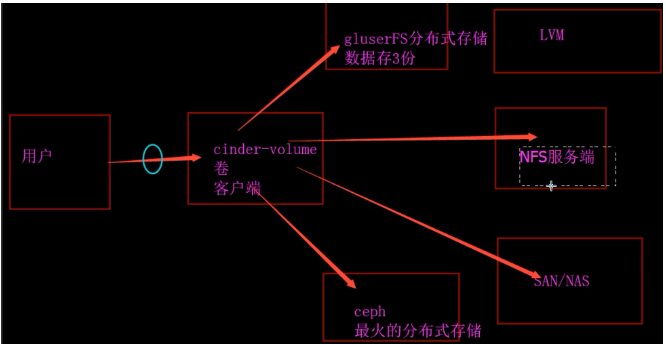

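19: cinder block storage service

KVM hot-add technology

nova-compute libvirt

cinder-volume LVM,nfs,glusterFS,ceph

cinder-api: receives external API requests

cinder-volume: provides the storage space

cinder-scheduler: the scheduler; decides which cinder-volume provides the space to be allocated

cinder-backup: backs up created volumes

1: Create the database and grant privileges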

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' \
IDENTIFIED BY 'CINDER_DBPASS';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' \
IDENTIFIED BY 'CINDER_DBPASS';

2: Create the system user in keystone (glance, nova, neutron, cinder) and associate a role

openstack user create --domain default --password CINDER_PASS cinder

openstack role add --project service --user cinder admin

3. Create the services and register the API endpoints in keystone

openstack service create --name cinder \
--description "OpenStack Block Storage" volume

openstack service create --name cinderv2 \
--description "OpenStack Block Storage" volumev2

openstack endpoint create --region RegionOne \
volume public http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \
volume internal http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \
volume admin http://controller:8776/v1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \
volumev2 public http://controller:8776/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne \
volumev2 internal http://controller:8776/v2/%\(tenant_id\)s

openstack endpoint create --region RegionOne \
volumev2 admin http://controller:8776/v2/%\(tenant_id\)s

Note: the v1 and v2 API versions are both supported

4. Install the service packages

yum install openstack-cinder -y

5: Edit the service configuration file

cp /etc/cinder/cinder.conf{,.bak}

grep -Ev '^$|#' /etc/cinder/cinder.conf.bak >/etc/cinder/cinder.conf

openstack-config --set /etc/cinder/cinder.conf DEFAULT rpc_backend rabbit

openstack-config --set /etc/cinder/cinder.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/cinder/cinder.conf DEFAULT my_ip 10.0.0.11

openstack-config --set /etc/cinder/cinder.conf database connection mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_uri http://controller:5000

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_url http://controller:35357

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken memcached_servers controller:11211

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_type password

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken user_domain_name default

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken project_name service

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken username cinder

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken password CINDER_PASS

openstack-config --set /etc/cinder/cinder.conf oslo_messaging_rabbit rabbit_host controller

openstack-config --set /etc/cinder/cinder.conf oslo_messaging_rabbit rabbit_userid openstack

openstack-config --set /etc/cinder/cinder.conf oslo_messaging_rabbit rabbit_password RABBIT_PASS

openstack-config --set /etc/cinder/cinder.conf oslo_concurrency lock_path /var/lib/cinder/tmp

# Verify with a checksum

md5sum /etc/cinder/cinder.conf

6: Sync the database

su -s /bin/sh -c "cinder-manage db sync" cinder

mysql cinder -e 'show tables'

7: Start services

# Run on the control node

openstack-config --set /etc/nova/nova.conf cinder os_region_name RegionOne

systemctl restart openstack-nova-api.service

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

cinder service-list

Compute node

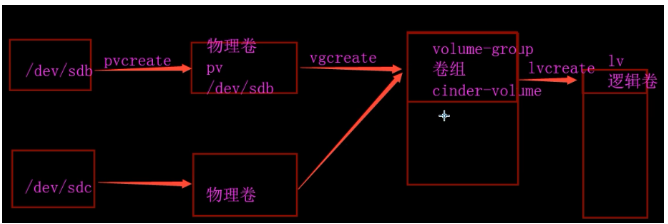

Prerequisites

1. Install the supporting utility packages

yum install lvm2 -y

Start the LVM metadata service

# systemctl enable lvm2-lvmetad.service

# systemctl start lvm2-lvmetad.service

2. Create the LVM physical volumes /dev/sdb and /dev/sdc

Add two disks

for h in 0 1 2; do echo '- - -' >/sys/class/scsi_host/host$h/scan; done

fdisk -l

pvcreate /dev/sdb

pvcreate /dev/sdc

vgcreate cinder-ssd /dev/sdb

vgcreate cinder-sata /dev/sdc

Edit /etc/lvm/lvm.conf

Insert a line below line 130

filter = [ "a/sdb/", "a/sdc/","r/.*/"]

Install

yum install openstack-cinder targetcli python-keystone -y

Purpose of iSCSI: map a disk on the server to the local machine

Configure

cat /etc/cinder/cinder.conf

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 10.0.0.31

glance_api_servers = http://10.0.0.32:9292

enabled_backends = ssd,sata

[BACKEND]

[BRCD_FABRIC_EXAMPLE]

[CISCO_FABRIC_EXAMPLE]

[COORDINATION]

[FC-ZONE-MANAGER]

[KEYMGR]

[cors]

[cors.subdomain]

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = CINDER_PASS

[matchmaker_redis]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[ssl]

[ssd]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-ssd

iscsi_protocol = iscsi

iscsi_helper = lioadm

[sata]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-sata

iscsi_protocol = iscsi

iscsi_helper = lioadm

Start services

# systemctl enable openstack-cinder-volume.service target.service

# systemctl start openstack-cinder-volume.service target.service

cinder service-list

lvs

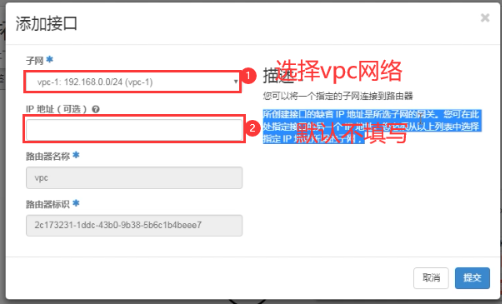

20: Add another flat network segment

ip address del 10.0.0.31/24 dev eth0

1: Control node

a:

cp ifcfg-eth0 ifcfg-eth1

vim /etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet

BOOTPROTO=none

NAME=eth1

DEVICE=eth1

ONBOOT=yes

IPADDR=172.16.0.11 #controller

NETMASK=255.255.255.0

ifup eth1

b:

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2_type_flat]

flat_networks = provider,net172_16_0

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth0,net172_16_0:eth1

c: Restart

systemctl restart neutron-server.service neutron-linuxbridge-agent.service

2: Compute nodes

a:

vim /etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet

BOOTPROTO=none

NAME=eth1

DEVICE=eth1

ONBOOT=yes

IPADDR=172.16.0.31 #compute1

NETMASK=255.255.255.0

ifup eth1

ping 172.16.0.11

vim /etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet

BOOTPROTO=none

NAME=eth1

DEVICE=eth1

ONBOOT=yes

IPADDR=172.16.0.32 #compute2

NETMASK=255.255.255.0

ifup eth1

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2_type_flat]

flat_networks = provider,net172_16_0

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eth0,net172_16_0:eth1

b: Restart

systemctl restart neutron-linuxbridge-agent.service

Create the network from the command line

neutron net-create --shared --provider:physical_network net172_16_0 \
--provider:network_type flat jiage

neutron subnet-create --name jiage \
--allocation-pool start=172.16.0.101,end=172.16.0.250 \
--dns-nameserver 223.5.5.5 --gateway 172.16.0.2 \
jiage 172.16.0.0/24

neutron net-list

neutron subnet-list

Using iptables on Linux as a gateway

First, add a NIC to the machine that has Internet access

My two NICs are configured as follows

[root@muban1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0 # external NIC

DEVICE=eth0

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=none

IPADDR=192.168.56.100

NETMASK=255.255.255.0

GATEWAY=192.168.56.2

DNS1=192.168.56.2

DNS2=223.5.5.5

IPV6INIT=no

USERCTL=no

[root@muban1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1 # internal NIC

DEVICE=eth1

BOOTPROTO=none

ONBOOT=yes

NETMASK=255.255.255.0

TYPE=Ethernet

IPADDR=172.16.1.1

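Restart the network service

service network restart

Edit the kernel parameter file to enable forwarding

vim /etc/sysctl.conf

Set net.ipv4.ip_forward = 1

Apply the kernel settings

sysctl -p

Flush the firewall's filter table

iptables -F

Add the NAT rule

iptables -t nat -A POSTROUTING -s 172.16.1.0/24 -j MASQUERADE # 172.16.1.0/24 must match the internal subnet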
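The gateway setup depends on one value, the internal subnet, so it is easy to preview the commands for a different subnet before touching the firewall. The sketch below only prints the commands and does not run them; `print_gateway_rules` is a hypothetical helper, and `sysctl -w` is used as the runtime equivalent of the sysctl.conf edit above.

```shell
#!/bin/sh
# print_gateway_rules SUBNET: print (do not execute) the commands that
# enable forwarding and masquerade traffic coming from SUBNET.
print_gateway_rules() {
    echo "sysctl -w net.ipv4.ip_forward=1"
    echo "iptables -t nat -A POSTROUTING -s $1 -j MASQUERADE"
}

print_gateway_rules 172.16.1.0/24
```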

The NIC of each server without Internet access must be on the same LAN as the internal NIC above

The NIC configuration is as follows

[root@muban2 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth0

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=static

IPADDR=172.16.1.10

NETMASK=255.255.255.0

GATEWAY=172.16.1.1

DNS1=192.168.56.2

DNS2=223.5.5.5

Restart the network service

systemctl restart network

Test

[root@muban2 ~]# ping 172.16.1.1 -c4

PING 172.16.1.1 (172.16.1.1) 56(84) bytes of data.

64 bytes from 172.16.1.1: icmp_seq=1 ttl=64 time=0.329 ms

64 bytes from 172.16.1.1: icmp_seq=2 ttl=64 time=0.190 ms

64 bytes from 172.16.1.1: icmp_seq=3 ttl=64 time=0.189 ms

64 bytes from 172.16.1.1: icmp_seq=4 ttl=64 time=0.182 ms

--- 172.16.1.1 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3002ms

rtt min/avg/max/mdev = 0.182/0.222/0.329/0.063 ms

Test 2

[root@muban2 ~]# ping www.baidu.com -c4

PING www.a.shifen.com (61.135.169.121) 56(84) bytes of data.

64 bytes from 61.135.169.121: icmp_seq=1 ttl=127 time=11.7 ms

64 bytes from 61.135.169.121: icmp_seq=2 ttl=127 time=32.7 ms

64 bytes from 61.135.169.121: icmp_seq=3 ttl=127 time=9.70 ms

64 bytes from 61.135.169.121: icmp_seq=4 ttl=127 time=9.99 ms

--- www.a.shifen.com ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3015ms

rtt min/avg/max/mdev = 9.700/16.060/32.761/9.676 ms

21: Connect cinder to an NFS storage backend

#compute2

yum install nfs-utils -y

vim /etc/exports

/data 10.0.0.0/24(rw,async,no_root_squash,no_all_squash) 172.16.0.0/24(ro)

mkdir /data

systemctl restart rpcbind nfs

systemctl enable rpcbind nfs

showmount -e 172.16.0.32

# storage node (compute node compute1)

Edit /etc/cinder/cinder.conf

[DEFAULT]

enabled_backends = sata,ssd,nfs

[nfs]

volume_driver = cinder.volume.drivers.nfs.NfsDriver

nfs_shares_config = /etc/cinder/nfs_shares

volume_backend_name = nfs

vi /etc/cinder/nfs_shares

10.0.0.32:/data

systemctl restart openstack-cinder-volume

cinder service-list # check whether the new backend appears

df -h

chown -R cinder:cinder e9b7872f2f12448b9ea1146c497a99f7

id cinder

# compute2

ll -h /data

qemu-img info /data/volume-f204f45-07b3

compute1

mount /dev/sdb/ /mnt

mkfs.ext4 /dev/sdb

mount -o loop /data/volume-f2f04f45 /srv

df -h

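nova: does not provide virtualization itself; it supports multiple virtualization technologies (nova-compute can also drive VMware ESXi)

cinder: does not provide storage itself; it supports multiple storage technologies: lvm, nfs, glusterFS, ceph

cinder with a glusterFS storage backend

22: Use the control node (controller) as a compute node as well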

yum install openstack-nova-compute -y

cat /etc/nova/nova.conf

[vnc]

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

Replace it with the following

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

Start services

systemctl start libvirtd.service openstack-nova-compute.service

systemctl enable libvirtd.service openstack-nova-compute.service

nova service-list

neutron agent-list

free -m

ll /var/lib/nova/instances/_base/ -h

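Cold migration of cloud hosts

Enable mutual trust between the nova compute nodes

Cold migration requires the nova user to have passwordless SSH access between the nova compute nodes

By default the nova user cannot log in; enable a login shell for the nova user on all compute nodes.

usermod -s /bin/bash nova

su - nova

cp /etc/skel/.bash* .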

su - nova

ssh-keygen -t rsa -q -N ''

ls .ssh/

cd .ssh/

cp -fa id_rsa.pub authorized_keys

ssh nova@10.0.0.31

logout

scp -rp .ssh root@10.0.0.32:`pwd`

#compute2

ll /var/lib/nova/.ssh

chown -R nova:nova /var/lib/nova/.ssh

Copy the keys to /var/lib/nova/.ssh for the nova user on the other compute nodes; mind the permissions and the owning group

ssh nova@10.0.0.32

ssh nova@10.0.0.11

ssh nova@10.0.0.31

vi /etc/nova/nova.conf

[DEFAULT]

scheduler_default_filters=RetryFilter,AvailabilityZoneFilter,RamFilter,DiskFilter,ComputeFilter,ComputeCapabilitiesFilter,ImagePropertiesFilter,ServerGroupAntiAffinityFilter,ServerGroupAffinityFilter

Restart openstack-nova-scheduler

systemctl restart openstack-nova-scheduler.service

3: Edit all compute nodes

vi /etc/nova/nova.conf

[DEFAULT]

allow_resize_to_same_host = True

Restart openstack-nova-compute

systemctl restart openstack-nova-compute.service

4: Perform the migration in the dashboard

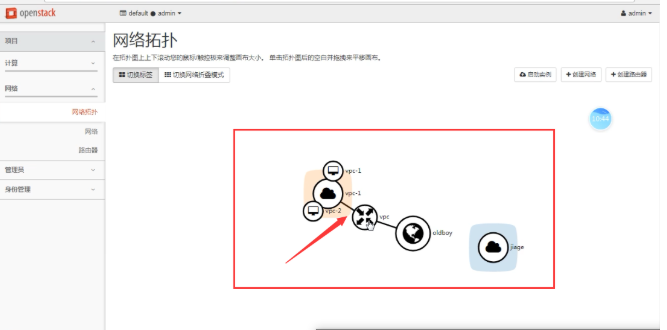

23: How openstack creates a virtual machine

24: Customizing openstack instances

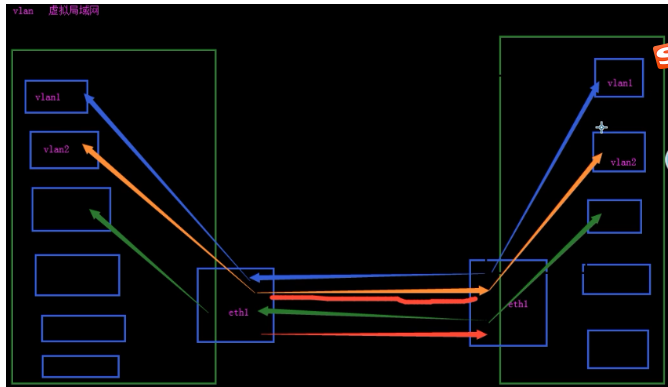

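25: Layer-3 networking with vxlan

Before starting, delete all virtual machines that were created on the flat network

Control node:

1. Edit the [DEFAULT] section of /etc/neutron/neutron.conf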

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

2. Edit the /etc/neutron/plugins/ml2/ml2_conf.ini file

Change the [ml2] section as follows

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

Add one line to the [ml2_type_vxlan] section

vlan: 1-4094

vxlan: 4096*4096-2 = 16,777,214 (about 16.78 million) network segments

vni_ranges = 1:10000000
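The segment counts quoted above follow from the width of the ID fields: a VLAN ID has 12 bits and a VXLAN VNI has 24 bits. A small worked calculation, using the same "minus 2" convention as the note:

```shell
#!/bin/sh
# 12-bit VLAN ID: 4096 values, minus the two reserved ones -> IDs 1..4094
vlan_ids=$((4096 - 2))
# 24-bit VXLAN VNI: 4096 * 4096 values, minus 2 as in the note above
vxlan_ids=$((4096 * 4096 - 2))

echo "vlan:  $vlan_ids"
echo "vxlan: $vxlan_ids"
```

Any `vni_ranges` value therefore has to stay within that 24-bit space.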

The final configuration file looks like this

[root@controller ~]# cat /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_geneve]

[ml2_type_gre]

[ml2_type_vlan]

[ml2_type_vxlan]

vni_ranges = 1:10000

[securitygroup]

enable_ipset = True

[root@controller ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth1

TYPE=Ethernet

BOOTPROTO=static

NAME=eth1

DEVICE=eth1

ONBOOT=yes

IPADDR=172.16.1.11

NETMASK=255.255.255.0

vlan

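Do NOT restart the network interface!!!

Use the ifconfig command to bring up the address instead

ifconfig eth1 172.16.1.11 netmask 255.255.255.0

Edit the /etc/neutron/l3_agent.ini file

Under the [DEFAULT] section, add the following two lines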

interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

external_network_bridge =

Start

systemctl restart neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

systemctl start neutron-l3-agent.service

Append the l3-agent start command as the last line of /etc/rc.d/rc.local (vi /etc/rc.d/rc.local) so that it starts at boot

systemctl start neutron-l3-agent.service

Control node:

Configure

Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini file

In the [vxlan] section

enable_vxlan = True

local_ip = 172.16.1.11

l2_population = True

systemctl restart neutron-linuxbridge-agent.service

The final configuration file looks like this:

[root@controller ~]# cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[agent]

[linux_bridge]

physical_interface_mappings = provider:eth0

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

[vxlan]

enable_vxlan = True

local_ip = 172.16.1.11

l2_population = True

# this IP is not configured yet, so it has to be set up as well

[root@controller ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth2

TYPE=Ethernet

BOOTPROTO=static

NAME=eth2

DEVICE=eth2

ONBOOT=yes

IPADDR=172.16.1.11

NETMASK=255.255.255.0

Do NOT restart the network interface!!!

Use the ifconfig command to bring up the address instead

ifconfig eth2 172.16.1.11 netmask 255.255.255.0

Note: every node needs the additional NIC

cd /etc/sysconfig/network-scripts/

cp ifcfg-eth1 ifcfg-eth2

cat ifcfg-eth2

ifup eth2

4: Edit /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

external_network_bridge =

5: Start services

systemctl restart neutron-server.service \
neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
neutron-metadata-agent.service

systemctl enable neutron-l3-agent.service

systemctl restart neutron-l3-agent.service

Compute nodes

Edit the /etc/neutron/plugins/ml2/linuxbridge_agent.ini file

In the [vxlan] section

compute1

enable_vxlan = True

local_ip = 172.16.1.31

l2_population = True

systemctl restart neutron-linuxbridge-agent.service

compute2

enable_vxlan = True

local_ip = 172.16.1.32

l2_population = True

systemctl restart neutron-linuxbridge-agent.service

The final configuration file looks like this:

[root@compute1 ~]# cat /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[agent]

[linux_bridge]

physical_interface_mappings = provider:eth0

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

[vxlan]

enable_vxlan = True

local_ip = 172.16.1.31

l2_population = True

# this IP is not configured yet, so it has to be set up as well

[root@compute1 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth2

TYPE=Ethernet

BOOTPROTO=static

NAME=eth2

DEVICE=eth2

ONBOOT=yes

IPADDR=172.16.1.31

NETMASK=255.255.255.0

Do NOT restart the network interface!!!

Use the ifconfig command to bring up the address instead

ifconfig eth2 172.16.1.31 netmask 255.255.255.0

Note: every node needs the additional NIC

cd /etc/sysconfig/network-scripts/

cp ifcfg-eth1 ifcfg-eth2

cat ifcfg-eth2

ifup eth2

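6: Check

neutron agent-list

How layer-3 vxlan networking works

Back on the control node

vi /etc/openstack-dashboard/local_settings

Change line 263 from

'enable_router': False,

to

'enable_router': True,

systemctl restart httpd.service memcached.service

Note: add a router and connect it to the external network

Launch an instance

Note: nothing else needs to be configured

Launch an instance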

26: Using the openstack API

Secondary development: build a friendlier dashboard

Get a token from the command line

token=$(openstack token issue | awk 'NR==5{print $4}')
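Picking the token by row number (`NR==5`) works only while the table keeps the same row order. A slightly more defensive sketch selects the row whose field name is `id` instead. `token_id_from_table` is a hypothetical helper, and the sample table below is made up to mimic the usual Field/Value layout.

```shell
#!/bin/sh
# token_id_from_table: read an "openstack token issue" style table on
# stdin and print the value of the row whose field is "id".
token_id_from_table() {
    awk '$2 == "id" { print $4 }'
}

# Demonstration with a made-up table instead of a live keystone.
printf '%s\n' \
    '+------------+----------------------+' \
    '| Field      | Value                |' \
    '+------------+----------------------+' \
    '| expires    | 2017-12-13T03:00:00Z |' \
    '| id         | gAAAAexampletoken    |' \
    '| project_id | 0123456789abcdef     |' \
    '+------------+----------------------+' \
    | token_id_from_table

# On a real controller (not run here):
#   token=$(openstack token issue | token_id_from_table)
# or, letting the client format the output itself:
#   token=$(openstack token issue -f value -c id)
```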

Get a token with curl

curl -i -X POST -H "Content-type: application/json" \

-d '{

"auth": {

"identity": {

"methods": [

"password"

],

"password": {

"user": {

"domain": {

"name": "default"

},

"name": "admin",

"password": "ADMIN_PASS"

}

}

},

"scope": {

"project": {

"domain": {

"name": "default"

},

"name": "admin"

}

}

}

}' http://10.0.0.11:5000/v3/auth/tokens

Example token

gAAAAABaMImzumUV648tH56PGK38DlE9Jz0G2qg0pv5M7XlrZu1XoXMacvOsJXHH9NgvovrfgeJR-DlFPRrE0wpqdHW9VkSLWwuGZtaKkcX7zRlehrHttLPTigz9UPdQi4GrZ7u2APIG6kIsyKLiVLkUMMDen02FWKQGKZT8eOG2gx-OKDAV1cE
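With the curl request above, keystone returns the token in the `X-Subject-Token` response header rather than in the JSON body, which is why `-i` is needed. The sketch below extracts that header from a saved or piped response; `subject_token` is a hypothetical helper and the sample response is made up.

```shell
#!/bin/sh
# subject_token: read an HTTP response (headers included, as printed by
# curl -i) on stdin and print the X-Subject-Token header value.
subject_token() {
    tr -d '\r' | awk 'tolower($1) == "x-subject-token:" { print $2 }'
}

# Demonstration with a made-up response instead of a live keystone.
printf 'HTTP/1.1 201 Created\r\nX-Subject-Token: gAAAAexampletoken\r\nContent-Type: application/json\r\n\r\n{}\n' \
    | subject_token

# On a real controller, pipe the curl -i request shown above into
# subject_token and pass the result to other services in an
# "X-Auth-Token: $token" request header.
```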