随笔-性能分析-sched(sched lat|wait time|run time)

perf sched lat计算原理

sched lat 计算过程:

tracepoint:sched:sched_wakeup,

tracepoint:sched:sched_wakeup_new

{

# 这里的sched属于 从等待队列 -> 运行队列 (比如io事件自动退出)

# Voluntary Switch

@qtime[args->pid] = nsecs; # 记录task进入runq的时刻(t1,sched lat场景1)

}

tracepoint:sched:sched_switch

{ # sched_switch - task被换入cpu执行的时刻

if (args->prev_state == TASK_RUNNING) { # 被换出的task如果是running,那么还是进入rq,

# 这里的sched属于 被cpu执行 -> 运行队列 (比如时间片耗尽,被抢占)

# Involuntary Switch

@qtime[args->prev_pid] = nsecs; # 记录task进入runq的时刻(t1,sched lat场景2)

}

$ns = @qtime[args->next_pid];

if ($ns) {

@usecs = hist((nsecs - $ns) / 1000); # nsecs当前时间,也就是被cpu执行的时刻(t2)

# t2 - t1 也就是sched latency

}

delete(@qtime[args->next_pid]);

}

通过实例观察对应的计算过程:

./stress-ng -t 10 --mutex 1 --mutex-procs 20 --times -v

+++

perf sched record -s -o sched.dat -- sleep 5

perf sched latency -i sched.dat -s max

-------------------------------------------------------------------------------------------------------------------------------------------

Task | Runtime ms | Switches | Avg delay ms | Max delay ms | Max delay start | Max delay end |

-------------------------------------------------------------------------------------------------------------------------------------------

stress-ng-mutex:(20) | 0.000 ms | 70247 | avg: 0.039 ms | max: 8.313 ms | max start: 86402.220563 s | max end: 86402.228876 s

...

perf sched script -i sched.dat > sched.dat.script

grep -E '86402.220563|86402.228876' sched.dat.script -C 3

perf sched wait time计算过程

按照自己的理解,sched_switch且state不为R,那么就是换出了runq,记为t1;当sched_wakeup,sched_wakeup_new,sched_switch时,那么就是重新执行,记为t2;wait time = t2 - t1

使用perf工具,wait time等于统计sched out到sched in的间隔(过滤perf script task两次sched_switch的事件)

可是实际跑perf看perf输出结果和自己的计算结果对不上:

xpid=1177879; perf sched timehist -i sched.dat --pid $xpid > sched.dat.timehist.$xpid; sort -k 4 -nr sched.dat.timehist.$xpid | head -n 10

178931.515435 [0003] stress-ng-mutex[1177886/1177879 10.093 9.203 0.006

178933.667048 [0003] stress-ng-mutex[1177882/1177879 9.957 4.163 0.006

...

+++

perf sched script -i sched.dat > sched.dat.script

grep 178931.515435 sched.dat.script -A 1000 | grep -E 'sched_switch.*1177886' | head -n 2

+++

stress-ng-mutex 1177886 [003] 178931.515435: sched:sched_switch: prev_comm=stress-ng-mutex prev_pid=1177886 prev_prio=96 prev_state=S ==> next_comm=swapper/3 next_pid=0 next_prio=120

swapper 0 [003] 178931.516394: sched:sched_switch: prev_comm=swapper/3 prev_pid=0 prev_prio=120 prev_state=R ==> next_comm=stress-ng-mutex next_pid=1177886 next_prio=96

从script输出可以确认178931.515435s是wait起始时间,也就是sched out的时间,下一次sched in是178931.516394s,178931.516394s−178931.515435s=0.959ms 可是timehist输出的是10.093

待进一步确认,需要阅读代码tools/perf/builtin-sched.c

/* * Explanation of delta-time stats: * * t = time of current schedule out event * tprev = time of previous sched out event * also time of schedule-in event for current task * last_time = time of last sched change event for current task * (i.e, time process was last scheduled out) * ready_to_run = time of wakeup for current task * * -----|------------|------------|------------|------ * last ready tprev t * time to run * * |-------- dt_wait --------| * |- dt_delay -|-- dt_run --| * * dt_run = run time of current task * dt_wait = time between last schedule out event for task and tprev * represents time spent off the cpu * dt_delay = time between wakeup and schedule-in of task */

perf sched 分析wait time

./stress-ng -t 10 --mutex 1 --mutex-procs 10 --times

perf sched record -s -o sched.dat -- sleep 5

perf sched timehist -i sched.dat

perf sched timehist -i sched.dat > sched.dat.timehist

xpid=861201; perf sched timehist -i sched.dat --pid $xpid > sched.dat.timehist.$xpid; sort -k 4 -nr sched.dat.timehist.$xpid | head -n 10

+++

[tid/pid] (msec) (msec) (msec)

time cpu task name wait time sch delay run time

86401.739038 [0000] stress-ng-mutex[571134/571116] 33.304 0.027 0.010

86401.739141 [0000] stress-ng-mutex[571128/571116] 32.998 0.034 0.005

86401.738230 [0002] stress-ng-mutex[571117/571116] 32.973 0.042 0.007

...

perf sched timehist还有sch delay,run time,sch delay是不是可以用来分析...(eee,待研究)

+++

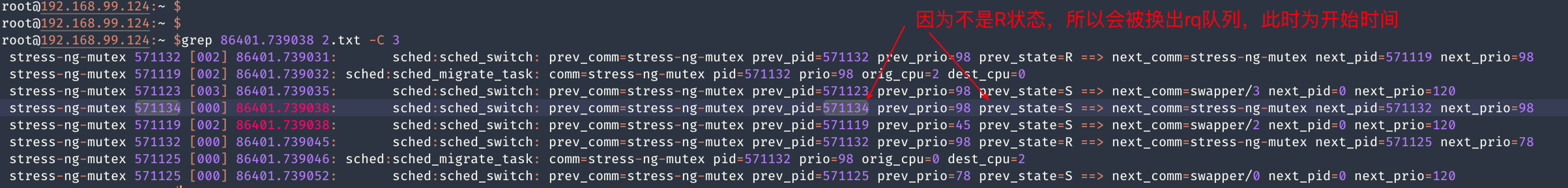

进一步分析数据,分析wait time最高时,是谁在这个时间段运行,又是为何需要wait这么久?

86401.739038 [0000] stress-ng-mutex[571134/571116] 33.304 0.027 0.010

86401.739038是起始时间。

perf sched script -i sched.dat > sched.dat.script

xtime=86401.739038; grep $xtime sched.dat.script -A 1000 | grep 862765

grep 86401.739038 2.txt -A 1000 | grep 'pid=571134'

root@192.168.99.124:~ $grep 86401.739038 2.txt -A 1000 | grep 'next_pid=571134'

stress-ng-mutex 571124 [003] 86401.739797: sched:sched_switch: prev_comm=stress-ng-mutex prev_pid=571124 prev_prio=98 prev_state=S ==> next_comm=stress-ng-mutex next_pid=571134 next_prio=98

...

确定开始和结束时间,截取这段时间的采样数据:

perf sched script -i sched.dat --time 86401.739038,86401.739797 > 3.txt

We can now obtain a general list of the threads that were active during this period using the following:

root@192.168.99.124:~ $grep sched_stat_runtime 3.txt|awk '{print $1}' | sort | uniq -c

1 rcu_sched

root@192.168.99.124:~ $grep sched_stat_runtime 3.txt

rcu_sched 12 [000] 86401.739791: sched:sched_stat_runtime: comm=rcu_sched pid=12 runtime=8413 [ns] vruntime=2362444912196 [ns]

root@192.168.99.124:~ $

看着好像不太理想

接下来要确认一些事情,是什么原因被换出,又是什么原因被换入,为什么需要这么久?

参考

本文来自博客园,作者:LiYanbin,转载请注明原文链接:https://www.cnblogs.com/stellar-liyanbin/p/18379854

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】凌霞软件回馈社区,博客园 & 1Panel & Halo 联合会员上线

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】博客园社区专享云产品让利特惠,阿里云新客6.5折上折

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· DeepSeek “源神”启动!「GitHub 热点速览」

· 微软正式发布.NET 10 Preview 1:开启下一代开发框架新篇章

· C# 集成 DeepSeek 模型实现 AI 私有化(本地部署与 API 调用教程)

· DeepSeek R1 简明指南:架构、训练、本地部署及硬件要求

· 2 本地部署DeepSeek模型构建本地知识库+联网搜索详细步骤