Hadoop的Archive归档命令使用指南

2018-03-19 16:49 staryea-bigdata 阅读(10079) 评论(1) 收藏 举报

hadoop不适合小文件的存储,小文件本省就占用了很多的metadata,就会造成namenode越来越大。Hadoop Archives的出现视为了缓解大量小文件消耗namenode内存的问题。

采用ARCHIVE 不会减少 文件存储大小,只会压缩NAMENODE 的空间使用

Hadoop档案指南

概述

Hadoop存档是特殊格式的存档。Hadoop存档映射到文件系统目录。Hadoop归档文件总是带有* .har扩展名

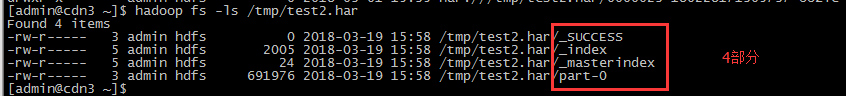

Hadoop存档目录包含元数据(采用_index和_masterindex形式)

数据部分data(part- *)文件。

_index文件包含归档文件的名称和部分文件中的位置。

如何创建档案

用法:hadoop archive -archiveName 归档名称 -p 父目录 [-r <复制因子>] 原路径(可以多个) 目的路径

-archivename是您想要创建的档案的名称。一个例子是foo.har。该名称应该有一个* .har扩展名。父参数是指定文件应归档到的相对路径。例子是:

hadoop archive -archiveName foo.har -p /foo/bar -r 3 a b c /user/hz

执行该命令后,原输入文件不会被删除,需要手动删除

hadoop fs -rmr /foo/bar/a

hadoop fs -rmr /foo/bar/b

hadoop fs -rmr /foo/bar/c

这里/ foo / bar是父路径,a b c (路径用空格隔开,可以配置多个)是父路径的相对路径。请注意,这是一个创建档案的Map / Reduce作业。你需要一个map reduce集群来运行它。

-r表示期望的复制因子; 如果未指定此可选参数,则将使用复制因子10。

目的存档一个目录/user/hz

如果您指定加密区域中的源文件,它们将被解密并写入存档。如果har文件不在加密区中,则它们将以清晰(解密)的形式存储。如果har文件位于加密区域,它们将以加密形式存储。

例子:

###########################################################################################

[admin@cdn3 ~]$ hadoop archive -archiveName test3.har -p /user/admin -r 3 oozie-oozi /user/admin

18/03/19 16:18:33 INFO impl.TimelineClientImpl: Timeline service address: http://cnn1.sctel.com:8188/ws/v1/timeline/

18/03/19 16:18:33 INFO client.RMProxy: Connecting to ResourceManager at cnn1.sctel.com/192.168.2.244:8050

18/03/19 16:18:34 INFO impl.TimelineClientImpl: Timeline service address: http://cnn1.sctel.com:8188/ws/v1/timeline/

18/03/19 16:18:34 INFO client.RMProxy: Connecting to ResourceManager at cnn1.sctel.com/192.168.2.244:8050

18/03/19 16:18:35 INFO impl.TimelineClientImpl: Timeline service address: http://cnn1.sctel.com:8188/ws/v1/timeline/

18/03/19 16:18:35 INFO client.RMProxy: Connecting to ResourceManager at cnn1.sctel.com/192.168.2.244:8050

18/03/19 16:18:35 INFO hdfs.DFSClient: Created HDFS_DELEGATION_TOKEN token 2461 for admin on 192.168.2.244:8020

18/03/19 16:18:35 INFO security.TokenCache: Got dt for hdfs://cnn1.sctel.com:8020; Kind: HDFS_DELEGATION_TOKEN, Service: 192.168.2.244:8020, Ident: (HDFS_DELEGATION_TOKEN token 2461 for admin)

18/03/19 16:18:35 INFO mapreduce.JobSubmitter: number of splits:1

18/03/19 16:18:36 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1519809200150_0029

18/03/19 16:18:36 INFO mapreduce.JobSubmitter: Kind: HDFS_DELEGATION_TOKEN, Service: 192.168.2.244:8020, Ident: (HDFS_DELEGATION_TOKEN token 2461 for admin)

18/03/19 16:18:37 INFO impl.YarnClientImpl: Submitted application application_1519809200150_0029

18/03/19 16:18:37 INFO mapreduce.Job: The url to track the job: http://cnn1.sctel.com:8088/proxy/application_1519809200150_0029/

18/03/19 16:18:37 INFO mapreduce.Job: Running job: job_1519809200150_0029

18/03/19 16:18:51 INFO mapreduce.Job: Job job_1519809200150_0029 running in uber mode : false

18/03/19 16:18:51 INFO mapreduce.Job: map 0% reduce 0%

18/03/19 16:19:05 INFO mapreduce.Job: map 100% reduce 0%

18/03/19 16:19:14 INFO mapreduce.Job: map 100% reduce 100%

18/03/19 16:19:15 INFO mapreduce.Job: Job job_1519809200150_0029 completed successfully

18/03/19 16:19:15 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=2405

FILE: Number of bytes written=286331

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=694282

HDFS: Number of bytes written=694279

HDFS: Number of read operations=34

HDFS: Number of large read operations=0

HDFS: Number of write operations=7

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=24348

Total time spent by all reduces in occupied slots (ms)=12302

Total time spent by all map tasks (ms)=12174

Total time spent by all reduce tasks (ms)=6151

Total vcore-seconds taken by all map tasks=12174

Total vcore-seconds taken by all reduce tasks=6151

Total megabyte-seconds taken by all map tasks=18699264

Total megabyte-seconds taken by all reduce tasks=12597248

Map-Reduce Framework

Map input records=18

Map output records=18

Map output bytes=2357

Map output materialized bytes=2405

Input split bytes=98

Combine input records=0

Combine output records=0

Reduce input groups=18

Reduce shuffle bytes=2405

Reduce input records=18

Reduce output records=0

Spilled Records=36

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=315

CPU time spent (ms)=6760

Physical memory (bytes) snapshot=1349992448

Virtual memory (bytes) snapshot=4604309504

Total committed heap usage (bytes)=1558183936

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=2208

File Output Format Counters

Bytes Written=0

###################################################################################################

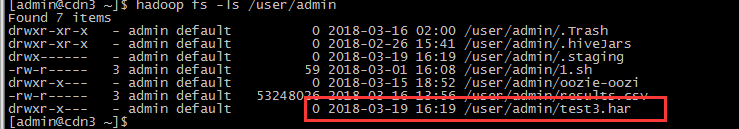

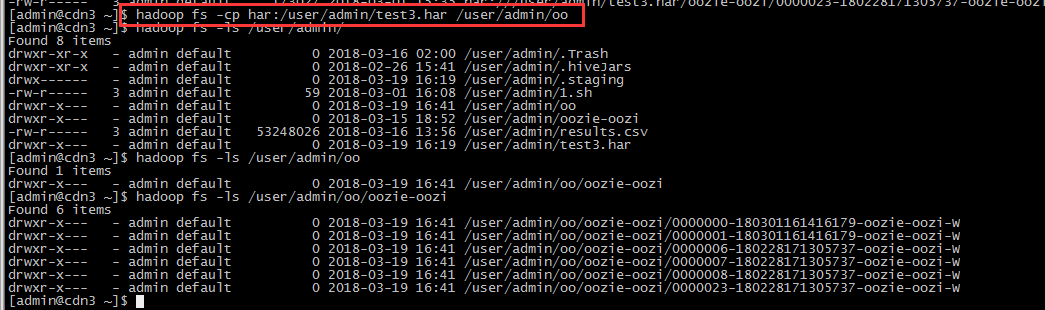

查看生成的文件:

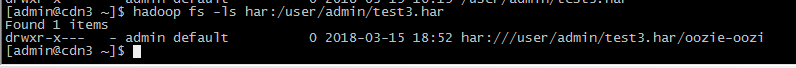

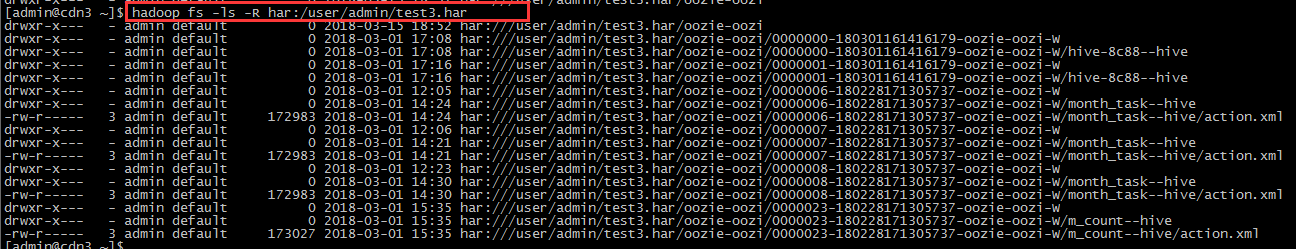

如何在档案中查找文件

该档案将自己公开为文件系统层。因此,档案中的所有fs shell命令都可以工作,但使用不同的URI。另外,请注意档案是不可变的。所以,重命名,删除并创建返回一个错误。Hadoop Archives的URI是

HAR://方案-主机名:端口/ archivepath / fileinarchive

如果没有提供方案,它假定底层文件系统。在这种情况下,URI看起来像

HAR:/// archivepath / fileinarchive

查询:

hadoop fs -ls har:/user/admin/test3.har

hadoop fs -ls -R har:/user/admin/test3.har

如何解除归档

由于档案中的所有fs shell命令都是透明的,因此取消存档只是复制的问题。

依次取消存档:

hadoop fs -cp har:/user/admin/test3.har /user/admin/oo

要并行解压缩,请使用DistCp:

hadoop distcp har:/user/admin/test3.har /user/admin/oo2

##################################################################################################################################

[admin@cdn3 ~]$ hadoop distcp har:/user/admin/test3.har /user/admin/oo2

18/03/19 16:42:49 INFO tools.DistCp: Input Options: DistCpOptions{atomicCommit=false, syncFolder=false, deleteMissing=false, ignoreFailures=false, maxMaps=20, sslConfigurationFile='null', copyStrategy='uniformsize', sourceFileListing=null, sourcePaths=[har:/user/admin/test3.har], targetPath=/user/admin/oo2, targetPathExists=false, preserveRawXattrs=false}

18/03/19 16:42:50 INFO impl.TimelineClientImpl: Timeline service address: http://cnn1.sctel.com:8188/ws/v1/timeline/

18/03/19 16:42:50 INFO client.RMProxy: Connecting to ResourceManager at cnn1.sctel.com/192.168.2.244:8050

18/03/19 16:42:51 INFO hdfs.DFSClient: Created HDFS_DELEGATION_TOKEN token 2462 for admin on 192.168.2.244:8020

18/03/19 16:42:51 INFO security.TokenCache: Got dt for har:/user/admin/test3.har; Kind: HDFS_DELEGATION_TOKEN, Service: 192.168.2.244:8020, Ident: (HDFS_DELEGATION_TOKEN token 2462 for admin)

18/03/19 16:42:52 INFO impl.TimelineClientImpl: Timeline service address: http://cnn1.sctel.com:8188/ws/v1/timeline/

18/03/19 16:42:52 INFO client.RMProxy: Connecting to ResourceManager at cnn1.sctel.com/192.168.2.244:8050

18/03/19 16:42:52 INFO mapreduce.JobSubmitter: number of splits:8

18/03/19 16:42:53 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1519809200150_0030

18/03/19 16:42:53 INFO mapreduce.JobSubmitter: Kind: HDFS_DELEGATION_TOKEN, Service: 192.168.2.244:8020, Ident: (HDFS_DELEGATION_TOKEN token 2462 for admin)

18/03/19 16:42:54 INFO impl.YarnClientImpl: Submitted application application_1519809200150_0030

18/03/19 16:42:54 INFO mapreduce.Job: The url to track the job: http://cnn1.sctel.com:8088/proxy/application_1519809200150_0030/

18/03/19 16:42:54 INFO tools.DistCp: DistCp job-id: job_1519809200150_0030

18/03/19 16:42:54 INFO mapreduce.Job: Running job: job_1519809200150_0030

18/03/19 16:43:11 INFO mapreduce.Job: Job job_1519809200150_0030 running in uber mode : false

18/03/19 16:43:11 INFO mapreduce.Job: map 0% reduce 0%

18/03/19 16:43:23 INFO mapreduce.Job: map 25% reduce 0%

18/03/19 16:43:25 INFO mapreduce.Job: map 50% reduce 0%

18/03/19 16:43:27 INFO mapreduce.Job: map 63% reduce 0%

18/03/19 16:43:28 INFO mapreduce.Job: map 75% reduce 0%

18/03/19 16:43:29 INFO mapreduce.Job: map 100% reduce 0%

18/03/19 16:43:30 INFO mapreduce.Job: Job job_1519809200150_0030 completed successfully

18/03/19 16:43:30 INFO mapreduce.Job: Counters: 33

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=1135544

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=717982

HDFS: Number of bytes written=691976

HDFS: Number of read operations=266

HDFS: Number of large read operations=0

HDFS: Number of write operations=40

Job Counters

Launched map tasks=8

Other local map tasks=8

Total time spent by all maps in occupied slots (ms)=86922

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=86922

Total vcore-seconds taken by all map tasks=86922

Total megabyte-seconds taken by all map tasks=89008128

Map-Reduce Framework

Map input records=18

Map output records=0

Input split bytes=928

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=681

CPU time spent (ms)=14030

Physical memory (bytes) snapshot=1731399680

Virtual memory (bytes) snapshot=16419467264

Total committed heap usage (bytes)=2720006144

File Input Format Counters

Bytes Read=6654

File Output Format Counters

Bytes Written=0

org.apache.hadoop.tools.mapred.CopyMapper$Counter

BYTESCOPIED=691976

BYTESEXPECTED=691976

COPY=18

[admin@cdn3 ~]$ hadoop fs -ls /user/admin/oo2

Found 1 items

drwxr-x--- - admin default 0 2018-03-19 16:43 /user/admin/oo2/oozie-oozi

################################################################################################################

浙公网安备 33010602011771号

浙公网安备 33010602011771号