08 Spark SQL Analysis of Student Course Scores (SQL)

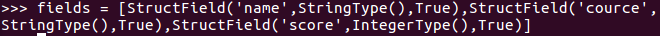

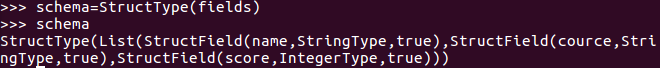

1. Build the schema (the "table header")

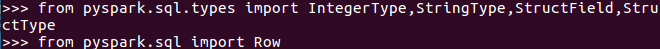

from pyspark.sql.types import IntegerType,StringType,StructField,StructType

from pyspark.sql import Row

fields=[StructField('name',StringType(),True),StructField('course',StringType(),True),StructField('score',IntegerType(),True)]

schema=StructType(fields)

schema

2. Build the records (the "table rows")

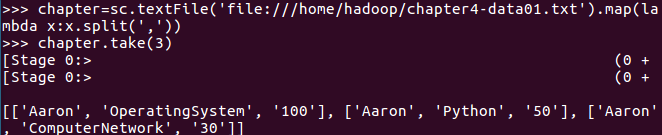

chapter=sc.textFile('file:///home/hadoop/chapter4-data01.txt').map(lambda x:x.split(','))

chapter.take(3)

data=chapter.map(lambda p:Row(p[0],p[1],int(p[2])))

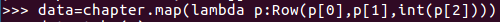
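The parsing step can be checked without a Spark session. The sketch below is plain Python, not PySpark, and the sample lines are hypothetical (they only assume the name,course,score layout that the split(',') call implies). It shows why the score is cast with int(): the schema declares score as IntegerType, so the value should not stay a string.

```python
# Hypothetical sample lines in the "name,course,score" layout the parsing code assumes.
lines = ["Tom,Database,80", "Tom,Algorithm,91", "Jim,Database,67"]


def parse_line(line):
    """Split one comma-separated line and cast the score to int, like the two map() calls."""
    name, course, score = line.split(",")
    return (name, course, int(score))


records = [parse_line(line) for line in lines]
print(records[0])
```

In the Spark version the same function body sits inside the lambdas passed to map(), and Row(...) plays the role of the tuple.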

3. Combine the schema and the records into a DataFrame

df_scs=spark.createDataFrame(data,schema)

df_scs.printSchema()

II. Answer the following analysis questions with SQL statements

The DataFrame must first be registered as a temporary view:

df_scs.createOrReplaceTempView('scs')

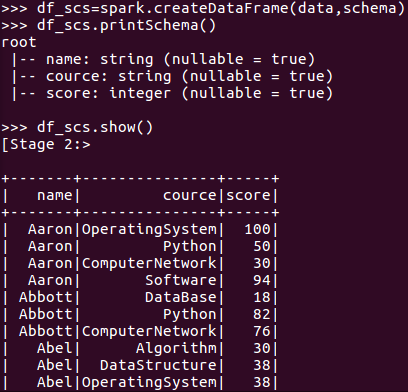

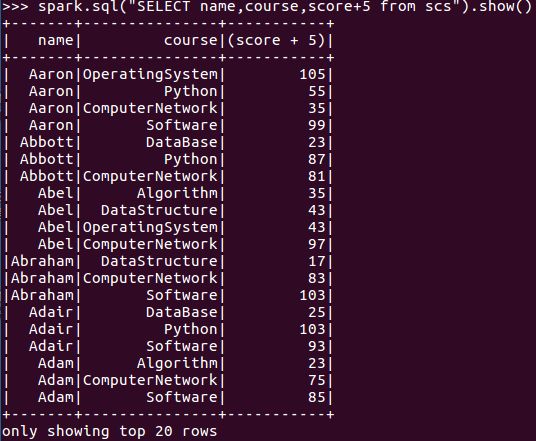

- Add 5 points to every score.

- spark.sql("SELECT name,course,score+5 from scs").show()

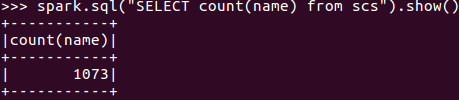

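- How many students are there in total? (Each student has one row per course, so the names must be counted with distinct.)

- spark.sql("SELECT count(distinct name) from scs").show()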

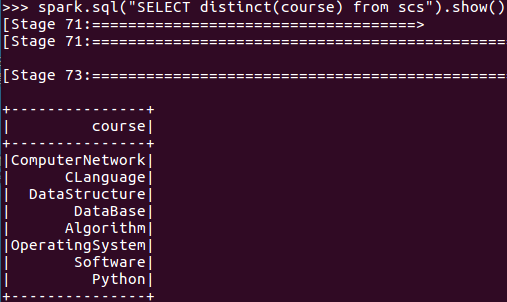
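Because scs holds one row per student per course, counting rows and counting students give different numbers. The sketch below illustrates this with Python's built-in sqlite3 module instead of Spark, so it runs anywhere; the rows are made up and are not taken from chapter4-data01.txt.

```python
import sqlite3

# Hypothetical sample rows (name, course, score); not the real data file.
rows = [
    ("Tom", "Database", 80),
    ("Tom", "Algorithm", 91),
    ("Jim", "Database", 67),
    ("Jim", "Python", 98),
    ("Ann", "Python", 75),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE scs (name TEXT, course TEXT, score INTEGER)")
conn.executemany("INSERT INTO scs VALUES (?, ?, ?)", rows)

# count(name) counts one per enrollment row.
row_count = conn.execute("SELECT count(name) FROM scs").fetchone()[0]
# count(DISTINCT name) counts each student once.
student_count = conn.execute("SELECT count(DISTINCT name) FROM scs").fetchone()[0]

print(row_count, student_count)
conn.close()
```

With these five rows the first query reports enrollments and the second reports students.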

- Which courses are offered?

- spark.sql("SELECT distinct course from scs").show()

- How many courses did each student take?

- spark.sql("SELECT name,count(course) from scs group by name").show()

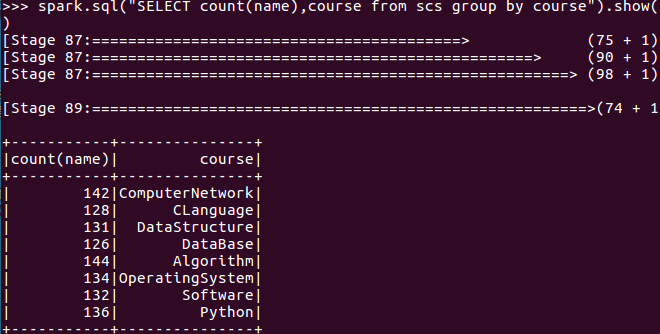

- How many students take each course?

- spark.sql("SELECT count(name),course from scs group by course").show()

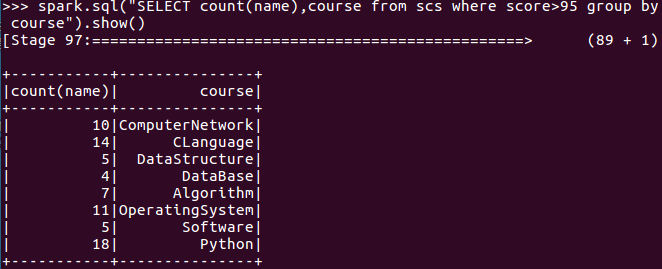

- How many students scored above 95 in each course?

- spark.sql("SELECT count(name),course from scs where score>95 group by course").show()

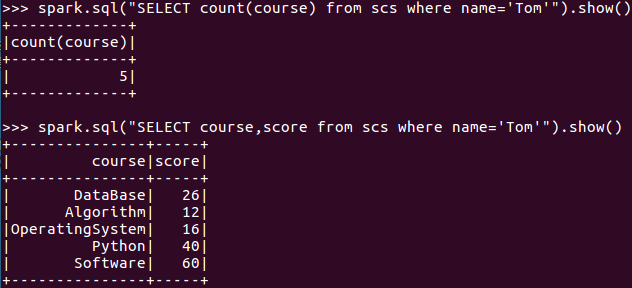

- How many courses did Tom take, and what did he score in each?

- spark.sql("SELECT count(course) from scs where name='Tom'").show()

- spark.sql("SELECT course,score from scs where name='Tom'").show()


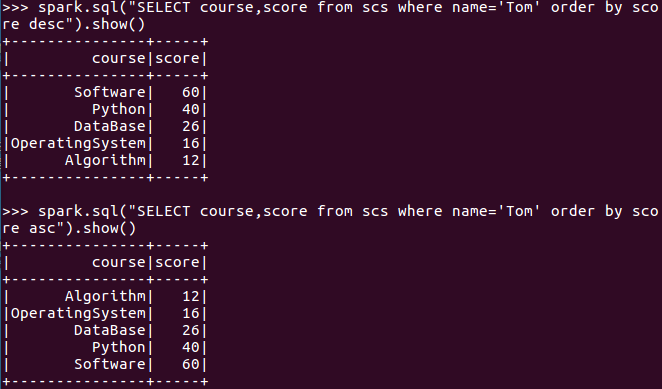

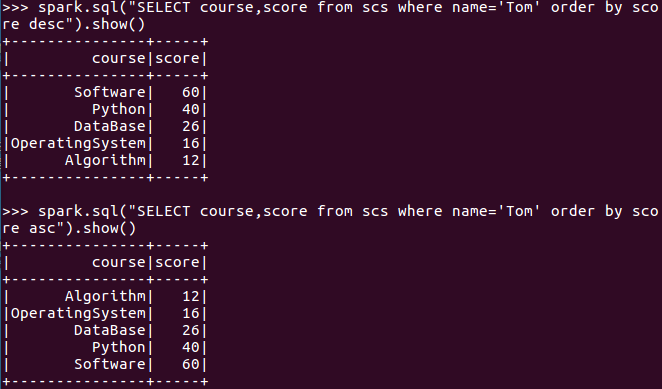

- Sort Tom's scores by score.

- (desc: highest to lowest; asc: lowest to highest)

- spark.sql("SELECT course,score from scs where name='Tom' order by score desc").show()

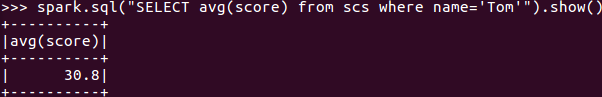

- Tom's average score.

- spark.sql("SELECT avg(score) from scs where name='Tom'").show()

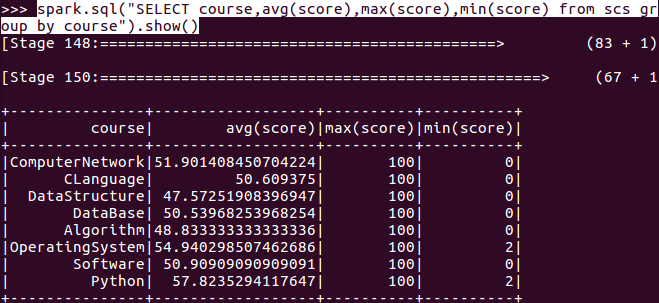

- Average, highest, and lowest score for each course.

- spark.sql("SELECT course,avg(score),max(score),min(score) from scs group by course").show()

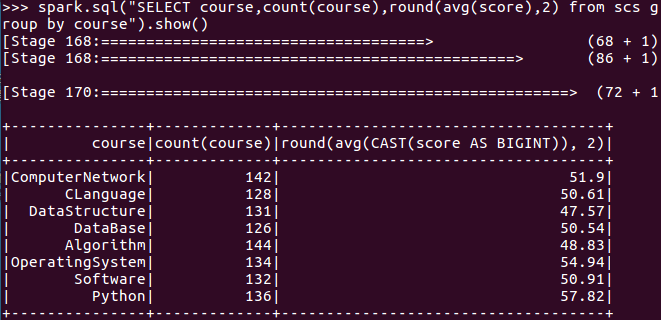

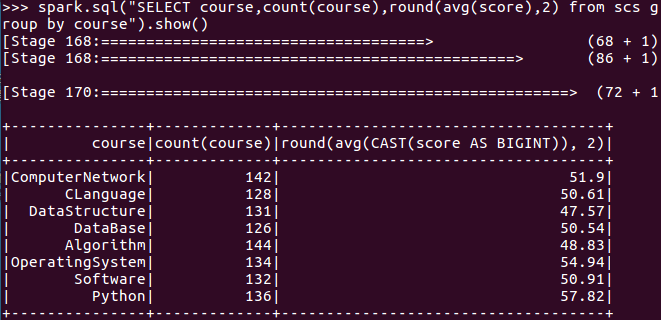

- Number of students and average score for each course, rounded to 2 decimal places.

spark.sql("SELECT course,count(course),round(avg(score),2) from scs group by course").show()

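- Number of failing students and pass rate for each course. The first attempt below did not run successfully, most likely because distinct(...) cannot wrap an aggregate such as count(*) in the select list; it is also unnecessary, since group by course already returns one row per course:

spark.sql("SELECT a.nopass,(b.total-a.nopass)/b.total from (SELECT course,distinct(count(*))as nopass from scs where score<60 group by course)as a left join (SELECT course,distinct(count(course))as total from scs group by course)as b on a.course=b.course").show()

- A second attempt that registers two intermediate temporary views: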

spark.sql("SELECT course, count(name) as n, avg(score) as avg FROM scs group by course").createOrReplaceTempView("a")

spark.sql("SELECT course, count(score) as notPass FROM scs WHERE score<60 group by course").createOrReplaceTempView("b")

spark.sql("SELECT * FROM a left join b on a.course=b.course").show()

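Courses with no failing student have no row in b, so coalesce turns the resulting NULL into 0:

spark.sql("SELECT a.course, round(a.avg, 2), coalesce(b.notPass, 0) as notPass, round((a.n-coalesce(b.notPass, 0))/a.n, 2) as passRat FROM a left join b on a.course=b.course").show()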
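As an alternative to joining two views, the failing count and pass rate can be computed in one grouped query with conditional aggregation, which also gives 0 rather than NULL for a course with no failures. This is a sketch using Python's built-in sqlite3 module with made-up rows, not the Spark session from this post. The sum(CASE WHEN ...) pattern is standard SQL and should carry over to spark.sql, though that is not verified here.

```python
import sqlite3

# Hypothetical sample rows (name, course, score); "Python" has no failing student.
rows = [
    ("Tom", "Database", 80),
    ("Jim", "Database", 55),
    ("Ann", "Database", 40),
    ("Bob", "Database", 90),
    ("Tom", "Python", 91),
    ("Jim", "Python", 78),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE scs (name TEXT, course TEXT, score INTEGER)")
conn.executemany("INSERT INTO scs VALUES (?, ?, ?)", rows)

# One pass over scs: each CASE contributes 1 for a matching row and 0 otherwise.
# The 1.0 factor forces floating-point division in SQLite, which otherwise
# divides two integers as integers.
query = """
SELECT course,
       count(*) AS n,
       sum(CASE WHEN score < 60 THEN 1 ELSE 0 END) AS notPass,
       round(1.0 * sum(CASE WHEN score >= 60 THEN 1 ELSE 0 END) / count(*), 2) AS passRat
FROM scs
GROUP BY course
ORDER BY course
"""
result = conn.execute(query).fetchall()
for row in result:
    print(row)
conn.close()
```

The design trade-off is readability versus reuse: the two-view version keeps each intermediate result inspectable with show(), while the single query avoids the join and its missing-row case.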

III. Compare the RDD, DataFrame, and SQL implementations: similarities and differences (compare at least two questions)

Differences in setup:

RDD: chapters = sc.textFile('file:///home/hadoop/chapter4-data01.txt')

DataFrame:

1. Build the schema

from pyspark.sql.types import IntegerType,StringType,StructField,StructType

from pyspark.sql import Row

fields=[StructField('name',StringType(),True),StructField('course',StringType(),True),StructField('score',IntegerType(),True)]

schema=StructType(fields)

2. Build the records

chapter=sc.textFile('file:///home/hadoop/chapter4-data01.txt').map(lambda x:x.split(','))

data=chapter.map(lambda p:Row(p[0],p[1],int(p[2])))

3. Combine the schema and the records

df_scs=spark.createDataFrame(data,schema)

SQL:

On top of the DataFrame setup, add: df_scs.createOrReplaceTempView('scs')

Number of students and average score for each course, rounded to 2 decimal places:

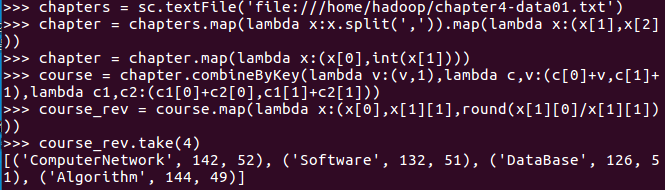

RDD:

chapter = chapters.map(lambda x:x.split(',')).map(lambda x:(x[1],x[2]))

chapter = chapter.map(lambda x:(x[0],int(x[1])))

course = chapter.combineByKey(lambda v:(v,1),lambda c,v:(c[0]+v,c[1]+1),lambda c1,c2:(c1[0]+c2[0],c1[1]+c2[1]))

course_rev = course.map(lambda x:(x[0],x[1][1],round(x[1][0]/x[1][1],2)))

course_rev.take(4)

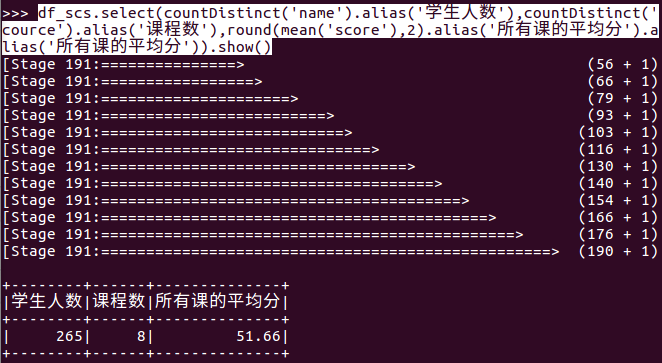
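The three functions passed to combineByKey are easier to follow when traced by hand. The sketch below is a plain-Python imitation, not Spark's implementation: the helper combine_by_key and the two sample partitions are hypothetical and exist only to show when each function is called. It also passes 2 to round(), since round() with no second argument rounds to a whole number.

```python
def combine_by_key(partitions, create_combiner, merge_value, merge_combiners):
    """Plain-Python imitation of how combineByKey applies its three functions."""
    per_partition = []
    for part in partitions:
        acc = {}
        for key, value in part:
            if key in acc:
                # Key already seen in this partition: fold the value in.
                acc[key] = merge_value(acc[key], value)
            else:
                # First value for this key in this partition.
                acc[key] = create_combiner(value)
        per_partition.append(acc)

    merged = {}
    for acc in per_partition:
        for key, comb in acc.items():
            # Combine the per-partition results for the same key.
            merged[key] = merge_combiners(merged[key], comb) if key in merged else comb
    return merged


# Hypothetical (course, score) pairs split across two partitions.
partitions = [
    [("Database", 80), ("Python", 91), ("Database", 67)],
    [("Database", 90), ("Python", 78)],
]

totals = combine_by_key(
    partitions,
    lambda v: (v, 1),                               # start a (sum, count) pair
    lambda c, v: (c[0] + v, c[1] + 1),              # add one score to the pair
    lambda c1, c2: (c1[0] + c2[0], c1[1] + c2[1]),  # merge pairs across partitions
)

# (student count, average rounded to 2 decimal places) per course.
summary = {course: (n, round(total / n, 2)) for course, (total, n) in totals.items()}
print(summary)
```

Carrying a (sum, count) pair instead of a running average is what makes the merge across partitions correct, because averages of different-sized groups cannot simply be averaged again.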

DataFrame:

from pyspark.sql import functions as F

df_scs.groupBy('course').agg(F.count('course').alias('students'),F.round(F.avg('score'),2).alias('avg_score')).show()

SQL:

spark.sql("SELECT course,count(course),round(avg(score),2) from scs group by course").show()

Sort Tom's scores by score:

RDD:

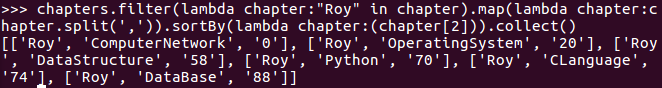

chapters.map(lambda line:line.split(',')).filter(lambda row:row[0]=='Tom').sortBy(lambda row:int(row[2]),ascending=False).collect()
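One detail matters in the RDD version: fields produced by split(',') are strings, and strings sort character by character rather than numerically. The plain-Python sketch below uses hypothetical rows to show the difference; the same key function applies inside sortBy.

```python
# Hypothetical rows as they look right after split(','): every field is still a string.
tom_rows = [
    ["Tom", "Database", "80"],
    ["Tom", "Algorithm", "100"],
    ["Tom", "Python", "95"],
]

# Sorting on the raw string compares character by character, so "100" ends up last.
by_string = sorted(tom_rows, key=lambda r: r[2], reverse=True)
# Casting to int first gives the numeric order.
by_number = sorted(tom_rows, key=lambda r: int(r[2]), reverse=True)

print([r[2] for r in by_string])
print([r[2] for r in by_number])
```

The DataFrame and SQL versions avoid this because the schema already declares score as an integer column.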

DataFrame:

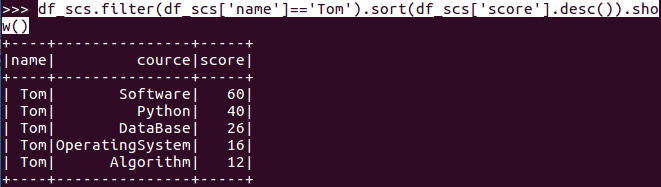

df_scs.filter(df_scs['name']=='Tom').sort(df_scs['score'].desc()).show()

SQL:

spark.sql("SELECT course,score from scs where name='Tom' order by score desc").show()