WebRTC Study Notes (3): WebRTC Device Management

I. WebRTC Device Management

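(1) The key API for getting audio/video devices: `navigator.mediaDevices.enumerateDevices()`

The result it returns, ePromise, is a Promise object.

When the Promise resolves, it yields an array of MediaDeviceInfo objects. Each one has these fields:

- deviceId: the unique identifier of the device
- label: the human-readable name of the device
- kind: the device type, one of `audioinput`, `audiooutput` or `videoinput` (audio input, audio output, video input; there is no video output kind)
- groupId: one physical device can expose several kinds, for example a webcam with both a camera and a microphone; all of those entries share the same groupId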

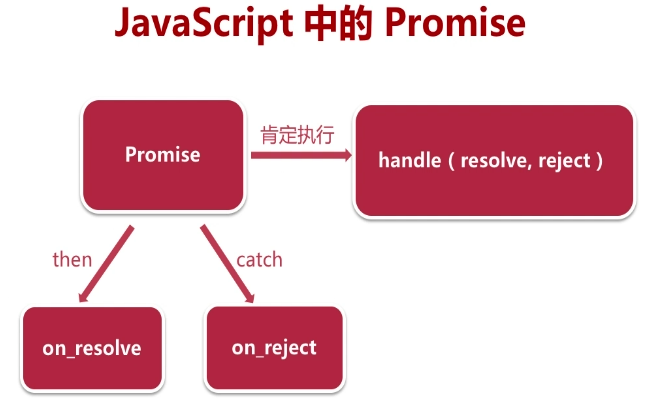
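To make the groupId field concrete, here is a small sketch (plain JavaScript that runs in Node as well as in the browser) that groups the entries returned by enumerateDevices() by physical device. The function name groupByPhysicalDevice is hypothetical, and the sample objects are shortened versions of the entries in the sample output later in this article.

```javascript
'use strict';

// Hypothetical helper: collect the kinds exposed by each physical device.
// Entries that share a groupId belong to the same piece of hardware.
function groupByPhysicalDevice(deviceInfos) {
  const groups = new Map(); // groupId -> array of kinds
  for (const info of deviceInfos) {
    if (!groups.has(info.groupId)) {
      groups.set(info.groupId, []);
    }
    groups.get(info.groupId).push(info.kind);
  }
  return groups;
}

// Shortened sample data modelled on the sample output later in this article
const sampleDevices = [
  { deviceId: 'fa3e39...', label: 'USB2.0 PC CAMERA Analog Mono', kind: 'audioinput', groupId: 'c5b67b...' },
  { deviceId: 'bf54ed...', label: 'USB2.0 PC CAMERA (1908:2310)', kind: 'videoinput', groupId: 'c5b67b...' },
  { deviceId: 'dcc078...', label: 'GK107 HDMI Audio Controller Digital Stereo (HDMI)', kind: 'audiooutput', groupId: '640919...' },
];

const byGroup = groupByPhysicalDevice(sampleDevices);
console.log(byGroup.get('c5b67b...')); // the USB camera: microphone and camera in one group
```

In the sample output, the USB camera's microphone and video entries indeed share one groupId, which is what this grouping picks up.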

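(2) Promises in JavaScript (one of the ways to make asynchronous calls)

Background: JavaScript runs its logic on a single thread, so to avoid blocking it relies heavily on asynchronous calls.

For details, see: https://segmentfault.com/a/1190000017312249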
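A minimal illustration of the Promise chaining that the device code relies on, runnable in Node as well as in the browser. later and failLater are hypothetical stand-ins for asynchronous browser calls such as enumerateDevices(); the error name is just an example string.

```javascript
'use strict';

// Hypothetical stand-in for an async API: resolves with `value` after `ms` milliseconds.
function later(ms, value) {
  return new Promise((resolve) => setTimeout(() => resolve(value), ms));
}

// Hypothetical stand-in for an async API that fails after `ms` milliseconds.
function failLater(ms, message) {
  return new Promise((resolve, reject) => setTimeout(() => reject(new Error(message)), ms));
}

// Each .then() receives the previous result; returning a Promise makes the chain wait for it.
function countDevices() {
  return later(10, ['camera', 'microphone'])
    .then((devices) => devices.length)
    .then((count) => later(10, 'found ' + count + ' devices'));
}

// A rejection skips the remaining .then() callbacks and lands in .catch().
function failingRequest() {
  return failLater(10, 'NotAllowedError')
    .then(() => 'never reached')
    .catch((err) => 'caught: ' + err.message);
}

countDevices().then((msg) => console.log(msg));   // found 2 devices
failingRequest().then((msg) => console.log(msg)); // caught: NotAllowedError
console.log('printed first: the thread is not blocked while the Promises are pending');
```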

(3) Getting the audio/video devices

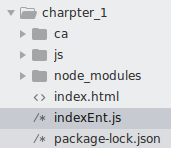

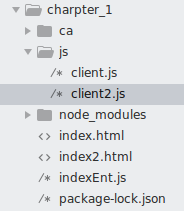

1. Start the web server (indexEnt.js)

The HTTPS server matters: browsers only expose camera and microphone access in a secure context (HTTPS, or http://localhost).

```js
'use strict'

var http = require("http");
var https = require("https");
var fs = require("fs");
var express = require("express");
var serveIndex = require("serve-index");

var app = express();              // create the express application
app.use(serveIndex("./"));        // list the directory ./ so URLs map straight to its files
app.use(express.static("./"));    // serve every file under the directory

// http server
var http_server = http.createServer(app);   // pass the express app as the handler instead of an anonymous function
http_server.listen(80, "0.0.0.0");

// https server
var options = {
  key : fs.readFileSync("./ca/learn.webrtc.com-key.pem"),   // read the private key synchronously
  cert: fs.readFileSync("./ca/learn.webrtc.com.pem"),       // read the certificate synchronously
};
var https_server = https.createServer(options, app);
https_server.listen(443, "0.0.0.0");
```

2. Set up index.html

```html
<html>
<head>
  <title> WebRTC get audio and video devices </title>
  <script type="text/javascript" src="./js/client.js"></script>
</head>
<body>
  <h1>Index.html</h1>
</body>
</html>
```

3. Set up client.js

```js
'use strict'

if (!navigator.mediaDevices || !navigator.mediaDevices.enumerateDevices) {
  console.log("enumerateDevices is not supported!");
} else {
  navigator.mediaDevices.enumerateDevices()
    .then(gotDevices)
    .catch(handleError);
}

// Print every device returned by enumerateDevices()
function gotDevices(deviceInfos) {
  deviceInfos.forEach(function (deviceInfo) {
    console.log("id=" + deviceInfo.deviceId +
                ";label=" + deviceInfo.label +
                ";kind=" + deviceInfo.kind +
                ";groupId=" + deviceInfo.groupId);
  });
}

function handleError(err) {
  console.log(err.name + ":" + err.message);
}
```

4. Start the server

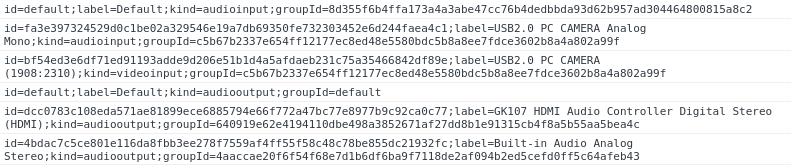

5. Check the result

```text
id=default;label=Default;kind=audioinput;groupId=8d355f6b4ffa173a4a3abe47cc76b4dedbbda93d62b957ad304464800815a8c2
id=fa3e397324529d0c1be02a329546e19a7db69350fe732303452e6d244faea4c1;label=USB2.0 PC CAMERA Analog Mono;kind=audioinput;groupId=c5b67b2337e654ff12177ec8ed48e5580bdc5b8a8ee7fdce3602b8a4a802a99f
id=bf54ed3e6df71ed91193adde9d206e51b1d4a5afdaeb231c75a35466842df89e;label=USB2.0 PC CAMERA (1908:2310);kind=videoinput;groupId=c5b67b2337e654ff12177ec8ed48e5580bdc5b8a8ee7fdce3602b8a4a802a99f
id=default;label=Default;kind=audiooutput;groupId=default
id=dcc0783c108eda571ae81899ece6885794e66f772a47bc77e8977b9c92ca0c77;label=GK107 HDMI Audio Controller Digital Stereo (HDMI);kind=audiooutput;groupId=640919e62e4194110dbe498a3852671af27dd8b1e91315cb4f8a5b55aa5bea4c
id=4bdac7c5ce801e116da8fbb3ee278f7559af4ff55f58c48c78be855dc21932fc;label=Built-in Audio Analog Stereo;kind=audiooutput;groupId=4aaccae20f6f54f68e7d1b6df6ba9f7118de2af094b2ed5cefd0ff5c64afeb43
```

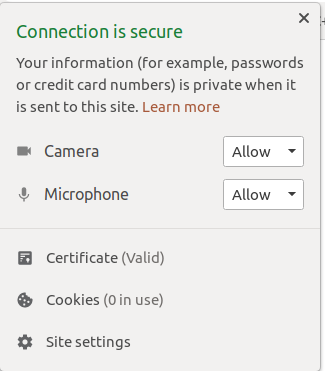

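Note: the site must first be allowed to use the camera and microphone (in the browser's site settings); otherwise the browser withholds the device details, and the labels come back empty.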

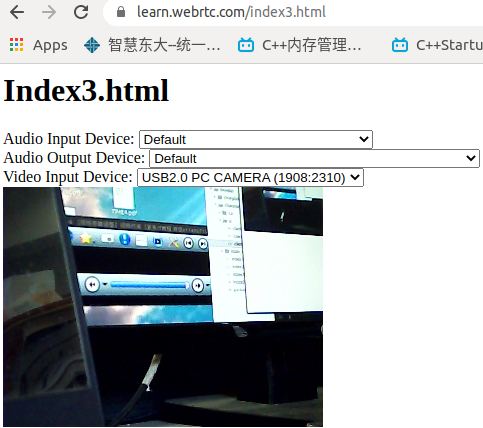
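One way to detect the situation described in the note, as a hedged sketch: before permission is granted the label fields are empty strings, so a page can check for that and decide to call getUserMedia first. labelsHidden is a hypothetical helper name, the heuristic only looks at labels, and the "before permission" entries are illustrative.

```javascript
'use strict';

// Hypothetical heuristic: if every returned device has an empty label,
// the page has most likely not been granted camera/microphone access yet.
function labelsHidden(deviceInfos) {
  return deviceInfos.length > 0 && deviceInfos.every((info) => info.label === '');
}

// Illustrative data only
const beforePermission = [
  { deviceId: '', kind: 'audioinput', label: '', groupId: '' },
  { deviceId: '', kind: 'videoinput', label: '', groupId: '' },
];
const afterPermission = [
  { deviceId: 'default', kind: 'audioinput', label: 'Default', groupId: 'x' },
  { deviceId: 'bf54ed...', kind: 'videoinput', label: 'USB2.0 PC CAMERA (1908:2310)', groupId: 'y' },
];

console.log(labelsHidden(beforePermission)); // true
console.log(labelsHidden(afterPermission));  // false
```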

(4) Showing the devices on the page

1. Modify index.html

```html
<html>
<head>
  <title> WebRTC get audio and video devices </title>
</head>
<body>
  <h1>Index.html</h1>
  <div>
    <label>Audio Input Device:</label>
    <select id="audioInput"></select>
  </div>
  <div>
    <label>Audio Output Device:</label>
    <select id="audioOutput"></select>
  </div>
  <div>
    <label>Video Input Device:</label>
    <select id="videoInput"></select>
  </div>
</body>
<script type="text/javascript" src="./js/client.js"></script>
</html>
```

Note: the script looks up elements by their id, so the script tag must come after all the elements it uses (or be loaded with the `defer` attribute)!

2. Modify client.js

```js
'use strict'

var audioInput = document.getElementById("audioInput");
var audioOutput = document.getElementById("audioOutput");
var videoInput = document.getElementById("videoInput");

if (!navigator.mediaDevices || !navigator.mediaDevices.enumerateDevices) {
  console.log("enumerateDevices is not supported!");
} else {
  navigator.mediaDevices.enumerateDevices()
    .then(gotDevices)
    .catch(handleError);
}

// Log each device and add it as an <option> to the matching <select>
function gotDevices(deviceInfos) {
  deviceInfos.forEach(function (deviceInfo) {
    console.log("id=" + deviceInfo.deviceId +
                ";label=" + deviceInfo.label +
                ";kind=" + deviceInfo.kind +
                ";groupId=" + deviceInfo.groupId);
    var option = document.createElement("option");
    option.text = deviceInfo.label;
    option.value = deviceInfo.deviceId;
    if (deviceInfo.kind === "audioinput") {
      audioInput.appendChild(option);
    } else if (deviceInfo.kind === "audiooutput") {
      audioOutput.appendChild(option);
    } else if (deviceInfo.kind === "videoinput") {
      videoInput.appendChild(option);
    }
  });
}

function handleError(err) {
  console.log(err.name + ":" + err.message);
}
```

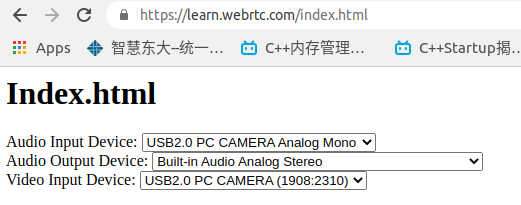
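When the labels are empty (no permission yet), the options added by gotDevices show up as blank entries. A common remedy, sketched here as a pure function that runs outside the browser, is to fall back to a numbered placeholder name. buildOptions is a hypothetical helper; each entry it returns would then become an `<option>` exactly as in gotDevices.

```javascript
'use strict';

// Hypothetical helper: sort devices into the three <select> lists,
// using "<kind> N" as the display text when the label is empty.
function buildOptions(deviceInfos) {
  const lists = { audioinput: [], audiooutput: [], videoinput: [] };
  for (const info of deviceInfos) {
    const list = lists[info.kind];
    if (!list) continue; // ignore kinds the page does not display
    list.push({
      value: info.deviceId,
      text: info.label || info.kind + ' ' + (list.length + 1),
    });
  }
  return lists;
}

const options = buildOptions([
  { deviceId: 'a1', kind: 'audioinput', label: '' },
  { deviceId: 'v1', kind: 'videoinput', label: 'USB2.0 PC CAMERA (1908:2310)' },
  { deviceId: 'a2', kind: 'audioinput', label: '' },
]);
console.log(options.audioinput.map((o) => o.text).join(', ')); // audioinput 1, audioinput 2
console.log(options.videoinput[0].text);                        // USB2.0 PC CAMERA (1908:2310)
```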

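II. Capturing Audio and Video Data with WebRTC

(1) Capturing audio/video data

`navigator.mediaDevices.getUserMedia(constraints)` captures the data and returns a Promise that resolves to a MediaStream; its constraints parameter is of type MediaStreamConstraints.

It constrains video and audio separately. Each can be a boolean or a MediaTrackConstraints object; a MediaTrackConstraints object allows detailed settings such as frame rate, resolution and number of audio channels.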

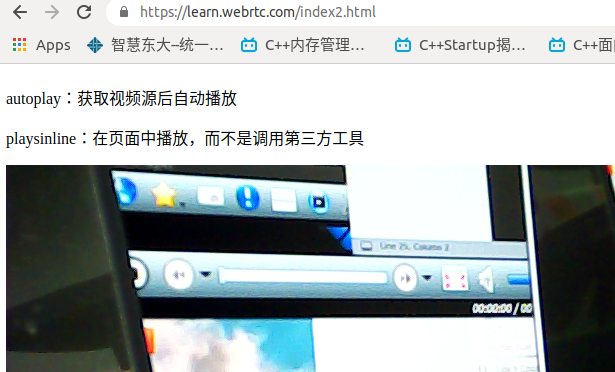

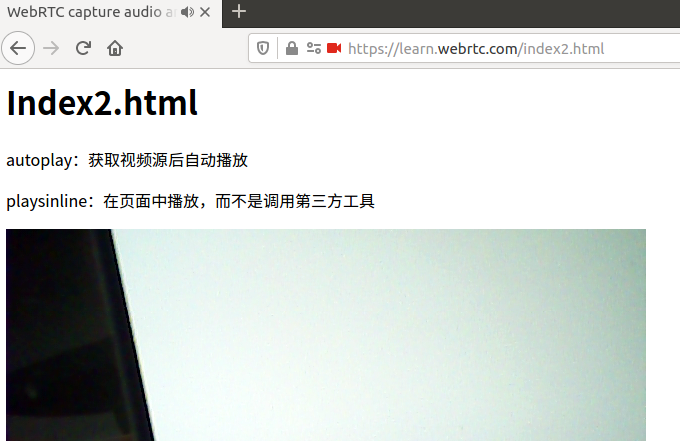
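A quick illustration of the two forms: `true`/`false` simply switches a track on or off, while an object is a MediaTrackConstraints with detailed settings. The helper requestedKinds is hypothetical; it only mirrors the rule that a kind is requested when its value is `true` or an object. Per the Media Capture and Streams specification, getUserMedia rejects with a TypeError when neither audio nor video is requested.

```javascript
'use strict';

// Hypothetical helper: which kinds of tracks would these MediaStreamConstraints request?
function requestedKinds(constraints) {
  const kinds = [];
  for (const kind of ['audio', 'video']) {
    const value = constraints[kind];
    // true or a MediaTrackConstraints object requests the track; false/undefined does not
    if (value === true || (typeof value === 'object' && value !== null)) {
      kinds.push(kind);
    }
  }
  return kinds;
}

const simple = { video: true, audio: true };
const detailed = {
  video: { width: 640, height: 480, frameRate: 30 }, // resolution and frame rate
  audio: { channelCount: 2 },                        // number of audio channels
};
const audioOnly = { video: false, audio: true };

console.log(requestedKinds(simple).join(','));    // audio,video
console.log(requestedKinds(detailed).join(','));  // audio,video
console.log(requestedKinds(audioOnly).join(',')); // audio
```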

1. Implement index2.html

```html
<html>
<head>
  <title> WebRTC capture audio and video data </title>
</head>
<body>
  <h1>Index2.html</h1>
  <p>autoplay: start playing automatically once the video source is available</p>
  <p>playsinline: play inside the page instead of in a separate (full-screen) player</p>
  <video autoplay playsinline id="player"></video>
</body>
<script type="text/javascript" src="./js/client2.js"></script>
</html>
```

2. Implement client2.js

```js
'use strict'

var videoplay = document.querySelector("video#player");

if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
  console.log("getUserMedia is not supported!");
} else {
  var constraints = {
    video: true,
    audio: true
  };
  navigator.mediaDevices.getUserMedia(constraints)
    .then(getUserMedia)
    .catch(handleError);
}

function getUserMedia(stream) {
  videoplay.srcObject = stream;   // play the captured stream
}

function handleError(err) {
  console.log(err.name + ":" + err.message);
}
```

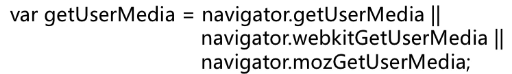

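(2) getUserMedia compatibility (before the WebRTC 1.0 specification)

Different browsers implemented different versions of getUserMedia (for example the prefixed `webkitGetUserMedia` and `mozGetUserMedia`).

Solution 1: write the adaptation layer yourself.

Solution 2: use adapter.js, the open-source shim from Google, to smooth over the differences between the browsers' interfaces.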


As browsers implement the specification, this adaptation will eventually become unnecessary!

```html
<html>
<head>
  <title> WebRTC capture audio and video data </title>
</head>
<body>
  <h1>Index2.html</h1>
  <p>autoplay: start playing automatically once the video source is available</p>
  <p>playsinline: play inside the page instead of in a separate (full-screen) player</p>
  <video autoplay playsinline id="player"></video>
</body>
<script type="text/javascript" src="https://webrtc.github.io/adapter/adapter-latest.js"></script>
<script type="text/javascript" src="./js/client2.js"></script>
</html>
```

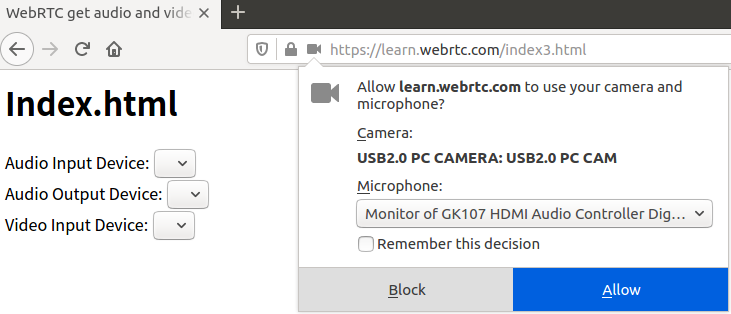
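For solution 1 (writing the adaptation yourself), a minimal sketch might look like this. It prefers the standard `navigator.mediaDevices.getUserMedia` and otherwise wraps the old callback-style `getUserMedia` / `webkitGetUserMedia` / `mozGetUserMedia` in a Promise. getUserMediaCompat is a hypothetical name, and the navigator object is a parameter so the function can be exercised with stand-in objects; adapter.js handles far more differences (such as constraint syntax) than this.

```javascript
'use strict';

// Hypothetical shim: return a Promise-based getUserMedia for a navigator-like object.
function getUserMediaCompat(nav, constraints) {
  // Standard, Promise-based API
  if (nav.mediaDevices && nav.mediaDevices.getUserMedia) {
    return nav.mediaDevices.getUserMedia(constraints);
  }
  // Legacy, callback-based APIs: fn(constraints, successCallback, errorCallback)
  const legacy = nav.getUserMedia || nav.webkitGetUserMedia || nav.mozGetUserMedia;
  if (!legacy) {
    return Promise.reject(new Error('getUserMedia is not supported'));
  }
  return new Promise((resolve, reject) => {
    legacy.call(nav, constraints, resolve, reject);
  });
}

// Stand-in navigators, for illustration only
const oldChrome = {
  webkitGetUserMedia(constraints, onSuccess) { onSuccess({ from: 'webkit', constraints }); },
};
const modern = {
  mediaDevices: { getUserMedia: (constraints) => Promise.resolve({ from: 'standard', constraints }) },
};

getUserMediaCompat(oldChrome, { video: true }).then((s) => console.log(s.from)); // webkit
getUserMediaCompat(modern, { video: true }).then((s) => console.log(s.from));    // standard
```

In a page you would call `getUserMediaCompat(navigator, constraints)` wherever the article calls `navigator.mediaDevices.getUserMedia(constraints)`.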

(三)获取音视频设备的访问权限

在一中获取了音视频设备之后,虽然在chrome中可以显示结果,但是在其他浏览器中并没有显示,比如Firefox

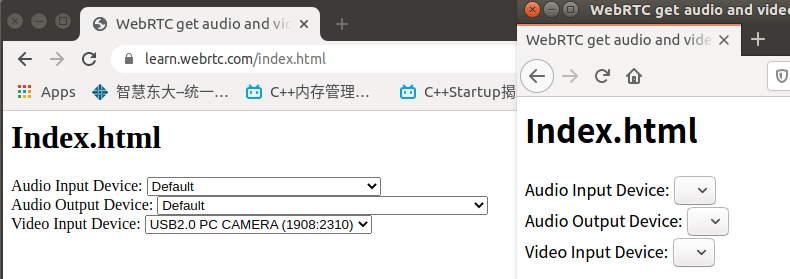

左(chrome)右(Firefox),因为我们没有允许访问权限。其实前面的chrome权限也是我们提前去设置中开启的。

但是我们可以使用前面(一)中的getUserMedia方法,去使得浏览器弹出访问权限请求窗口,从而去获取权限!!!

1. Implement index3.html

```html
<html>
<head>
  <title> WebRTC get audio and video devices </title>
</head>
<body>
  <h1>Index.html</h1>
  <div>
    <label>Audio Input Device:</label>
    <select id="audioInput"></select>
  </div>
  <div>
    <label>Audio Output Device:</label>
    <select id="audioOutput"></select>
  </div>
  <div>
    <label>Video Input Device:</label>
    <select id="videoInput"></select>
  </div>
  <video autoplay playsinline id="player"></video>
</body>
<script type="text/javascript" src="https://webrtc.github.io/adapter/adapter-latest.js"></script>
<script type="text/javascript" src="./js/client3.js"></script>
</html>
```

2. Implement client3.js

```js
'use strict'

var audioInput = document.getElementById("audioInput");
var audioOutput = document.getElementById("audioOutput");
var videoInput = document.getElementById("videoInput");
var videoplay = document.querySelector("video#player");

if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
  console.log("getUserMedia is not supported!");
} else {
  var constraints = {
    video: true,
    audio: true
  };
  navigator.mediaDevices.getUserMedia(constraints)
    .then(getUserMedia)   // obtains permission, then returns enumerateDevices() for chaining
    .then(gotDevices)     // the calls can be chained
    .catch(handleError);
}

function getUserMedia(stream) {
  videoplay.srcObject = stream;                      // play the captured stream
  return navigator.mediaDevices.enumerateDevices();  // return the Promise so the chain can continue
}

function gotDevices(deviceInfos) {
  deviceInfos.forEach(function (deviceInfo) {
    console.log("id=" + deviceInfo.deviceId +
                ";label=" + deviceInfo.label +
                ";kind=" + deviceInfo.kind +
                ";groupId=" + deviceInfo.groupId);
    var option = document.createElement("option");
    option.text = deviceInfo.label;
    option.value = deviceInfo.deviceId;
    if (deviceInfo.kind === "audioinput") {
      audioInput.appendChild(option);
    } else if (deviceInfo.kind === "audiooutput") {
      audioOutput.appendChild(option);
    } else if (deviceInfo.kind === "videoinput") {
      videoInput.appendChild(option);
    }
  });
}

function handleError(err) {
  console.log(err.name + ":" + err.message);
}
```

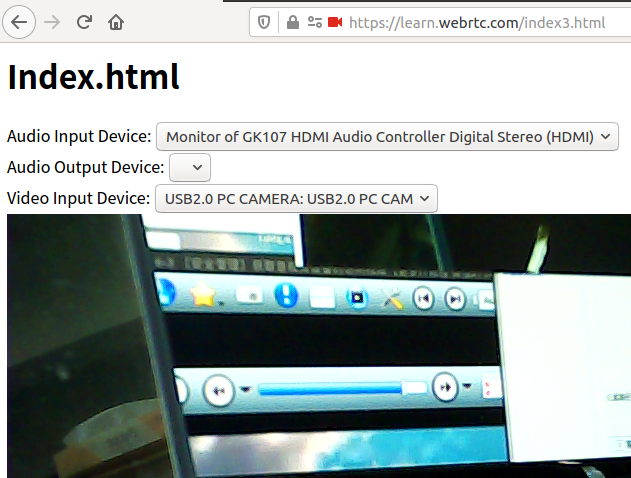

Result:

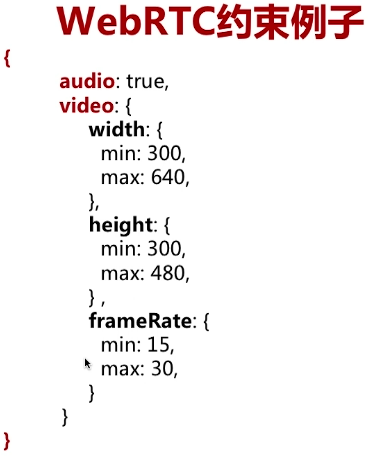
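The same flow as client3.js (ask for permission with getUserMedia, then enumerate) can also be written with async/await. This is a sketch, not the article's code: openAndListDevices is a hypothetical name, and mediaDevices is a parameter so the function can be tried with a stand-in object; in the page you would pass `navigator.mediaDevices`.

```javascript
'use strict';

// Hypothetical helper: request the stream first (this triggers the permission prompt),
// then enumerate, so the returned device list includes labels.
async function openAndListDevices(mediaDevices, constraints) {
  const stream = await mediaDevices.getUserMedia(constraints);
  const devices = await mediaDevices.enumerateDevices();
  return { stream, devices };
}

// Stand-in for navigator.mediaDevices that records the order of the calls
const calls = [];
const fakeMediaDevices = {
  getUserMedia: async () => { calls.push('getUserMedia'); return { id: 'fake-stream' }; },
  enumerateDevices: async () => { calls.push('enumerateDevices'); return [{ kind: 'videoinput', label: 'camera' }]; },
};

openAndListDevices(fakeMediaDevices, { video: true, audio: true })
  .then(({ stream, devices }) => {
    console.log(calls.join(' -> '));          // getUserMedia -> enumerateDevices
    console.log(stream.id, devices[0].label); // fake-stream camera
  });
```

In the page, the `.then` callback would set `videoplay.srcObject = stream` and hand `devices` to gotDevices, with `.catch(handleError)` covering a refused permission.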

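III. WebRTC Audio/Video Capture Constraints

Constraints let you precisely control the captured audio and video data!

(1) Adjusting video parameters

- width and height: control the resolution
- aspectRatio: the aspect ratio, width / height
- frameRate: the frame rate
- facingMode: which camera to use (e.g. `user` for the front camera, `environment` for the rear camera)
- resizeMode: whether the browser may crop/scale the video (`none` or `crop-and-scale`)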

```js
'use strict'

var audioInput = document.getElementById("audioInput");
var audioOutput = document.getElementById("audioOutput");
var videoInput = document.getElementById("videoInput");
var videoplay = document.querySelector("video#player");

if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
  console.log("getUserMedia is not supported!");
} else {
  var constraints = {
    video: {
      width: 320,
      height: 240,
      frameRate: 30,
      facingMode: "user"
    },
    audio: false
  };
  navigator.mediaDevices.getUserMedia(constraints)
    .then(getUserMedia)
    .then(gotDevices)   // the calls can be chained
    .catch(handleError);
}

function getUserMedia(stream) {
  videoplay.srcObject = stream;                      // play the captured stream
  return navigator.mediaDevices.enumerateDevices();  // return the Promise so the chain can continue
}

function gotDevices(deviceInfos) {
  deviceInfos.forEach(function (deviceInfo) {
    console.log("id=" + deviceInfo.deviceId +
                ";label=" + deviceInfo.label +
                ";kind=" + deviceInfo.kind +
                ";groupId=" + deviceInfo.groupId);
    var option = document.createElement("option");
    option.text = deviceInfo.label;
    option.value = deviceInfo.deviceId;
    if (deviceInfo.kind === "audioinput") {
      audioInput.appendChild(option);
    } else if (deviceInfo.kind === "audiooutput") {
      audioOutput.appendChild(option);
    } else if (deviceInfo.kind === "videoinput") {
      videoInput.appendChild(option);
    }
  });
}

function handleError(err) {
  console.log(err.name + ":" + err.message);
}
```

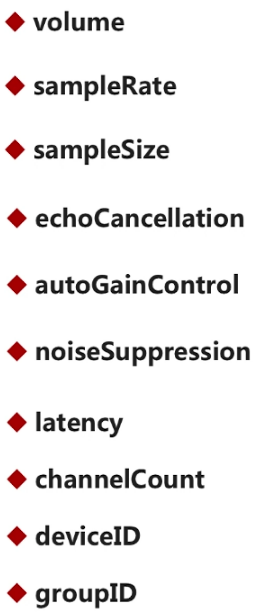
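The code above passes plain numbers, which the browser treats as ideal values: it tries to get close but may deliver something else. The same properties also accept an object with min, max, ideal or exact; min, max and exact are required, so getUserMedia rejects with an OverconstrainedError when the camera cannot satisfy them. A hedged sketch (the numbers are only examples), with aspectRatio computed as width / height as described above:

```javascript
'use strict';

// aspectRatio as described above: width divided by height
function aspectRatio(width, height) {
  return width / height;
}

// Example MediaTrackConstraints for `video` (values are illustrative)
const videoConstraints = {
  width: { min: 320, ideal: 640, max: 1280 },    // required range plus a preferred value
  height: { min: 240, ideal: 480, max: 720 },
  aspectRatio: { ideal: aspectRatio(640, 480) }, // 4:3
  frameRate: { ideal: 30, max: 30 },
  facingMode: 'user',   // front camera; 'environment' asks for the rear camera
  resizeMode: 'none',   // 'crop-and-scale' lets the browser crop/scale the frames
};

console.log(aspectRatio(320, 240)); // 1.3333333333333333
```

The object would be passed as `getUserMedia({ video: videoConstraints, audio: false })`. The printed value matches the aspectRatio that getSettings() reports for a 320x240 capture in section VII.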

(2) Adjusting audio parameters

- sampleSize: the sample size (bit depth)
- echoCancellation: echo cancellation
- autoGainControl: automatic gain, i.e. whether to boost the volume on top of the original sound
- noiseSuppression: noise suppression
- latency: the latency (lower latency means better real-time behavior)
- channelCount: the number of channels
- deviceId: selects (switches) the device
- groupId: the group ID

Note that deviceId is a track constraint, so it belongs inside the `video` (or `audio`) object rather than next to them.

```js
'use strict'

var audioInput = document.getElementById("audioInput");
var audioOutput = document.getElementById("audioOutput");
var videoInput = document.getElementById("videoInput");
var videoplay = document.querySelector("video#player");

function start() {
  console.log("start......");
  if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
    console.log("getUserMedia is not supported!");
  } else {
    var devId = videoInput.value;
    var constraints = {
      video: {
        width: 320,
        height: 240,
        frameRate: 30,
        facingMode: "user",
        deviceId: devId ? { exact: devId } : undefined   // undefined means the default device
      },
      audio: {
        noiseSuppression: true,
        echoCancellation: true
      }
    };
    navigator.mediaDevices.getUserMedia(constraints)
      .then(getUserMedia)
      .then(gotDevices)   // the calls can be chained
      .catch(handleError);
  }
}

function getUserMedia(stream) {
  videoplay.srcObject = stream;                      // play the captured stream
  return navigator.mediaDevices.enumerateDevices();  // return the Promise so the chain can continue
}

function gotDevices(deviceInfos) {
  // start() runs again after every camera change, so rebuild the lists
  // instead of appending duplicate options, and keep the current choice
  var selectedVideo = videoInput.value;
  audioInput.innerHTML = "";
  audioOutput.innerHTML = "";
  videoInput.innerHTML = "";
  deviceInfos.forEach(function (deviceInfo) {
    console.log("id=" + deviceInfo.deviceId +
                ";label=" + deviceInfo.label +
                ";kind=" + deviceInfo.kind +
                ";groupId=" + deviceInfo.groupId);
    var option = document.createElement("option");
    option.text = deviceInfo.label;
    option.value = deviceInfo.deviceId;
    if (deviceInfo.kind === "audioinput") {
      audioInput.appendChild(option);
    } else if (deviceInfo.kind === "audiooutput") {
      audioOutput.appendChild(option);
    } else if (deviceInfo.kind === "videoinput") {
      videoInput.appendChild(option);
    }
  });
  if (selectedVideo) {
    videoInput.value = selectedVideo;
  }
}

function handleError(err) {
  console.log(err.name + ":" + err.message);
}

start();   // run the logic

// ---- events: restart capture after a camera is picked in the select ----
videoInput.onchange = start;
```

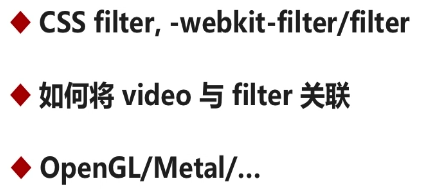

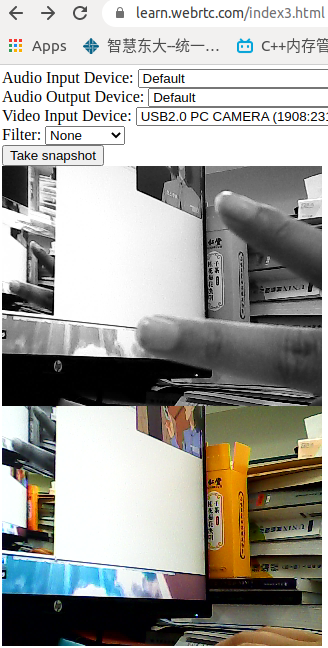
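Because `videoInput.onchange` calls start() again, every switch opens a new capture while the previous tracks keep running, and the old camera may stay busy. A common pattern, sketched here with hypothetical helper names (stopCurrentStream, rememberStream, buildConstraints), is to stop the old tracks first and to request the chosen camera with `{ exact: id }` so the browser fails instead of silently using another device. Requesting facingMode only when no specific camera is chosen is a design choice of this sketch; the stream object is a stand-in.

```javascript
'use strict';

let currentStream = null; // the stream that is currently playing, if any

// Stop every track of the previous stream so its device is released
function stopCurrentStream() {
  if (currentStream) {
    currentStream.getTracks().forEach((track) => track.stop());
    currentStream = null;
  }
}

// Remember the new stream so it can be stopped on the next switch
function rememberStream(stream) {
  currentStream = stream;
  return stream;
}

// Build constraints for the camera chosen in the videoInput <select> ('' = default)
function buildConstraints(videoDeviceId) {
  const video = { width: 320, height: 240, frameRate: 30 };
  if (videoDeviceId) {
    video.deviceId = { exact: videoDeviceId };
  } else {
    video.facingMode = 'user';
  }
  return { video, audio: { noiseSuppression: true, echoCancellation: true } };
}

// Stand-in stream for illustration (real streams come from getUserMedia)
function fakeStream() {
  const tracks = [{ stopped: false, stop() { this.stopped = true; } }];
  return { tracks, getTracks: () => tracks };
}

const first = rememberStream(fakeStream());
stopCurrentStream();                          // what start() would do before switching
const second = rememberStream(fakeStream());
console.log(first.tracks[0].stopped, second.tracks[0].stopped); // true false
```

In start(), this would mean calling `stopCurrentStream()` before `getUserMedia(buildConstraints(videoInput.value))` and `rememberStream(stream)` in the success handler.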

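IV. Video Effects in WebRTC

(1) Video effects in the browser

In the browser, effects are applied with the CSS `filter` property; different browsers may need different forms of it, such as the prefixed `-webkit-filter`.

When the browser renders a filter, it ultimately relies on graphics APIs such as OpenGL or Metal under the hood.

(2) Supported effects (common ones)

(3) Implementation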

```html
<html>
<head>
  <title> WebRTC get audio and video devices </title>
  <style>
    .none {
      -webkit-filter: none;
      filter: none;
    }
    .blur {
      -webkit-filter: blur(3px);
      filter: blur(3px);
    }
    .grayscale {
      -webkit-filter: grayscale(1);
      filter: grayscale(1);
    }
    .invert {
      -webkit-filter: invert(1);
      filter: invert(1);
    }
    .sepia {
      -webkit-filter: sepia(1);
      filter: sepia(1);
    }
  </style>
</head>
<body>
  <h1>Index3.html</h1>
  <div>
    <label>Audio Input Device:</label>
    <select id="audioInput"></select>
  </div>
  <div>
    <label>Audio Output Device:</label>
    <select id="audioOutput"></select>
  </div>
  <div>
    <label>Video Input Device:</label>
    <select id="videoInput"></select>
  </div>
  <div>
    <label>Filter:</label>
    <select id="filter">
      <option value="none">None</option>
      <option value="blur">blur</option>
      <option value="grayscale">grayscale</option>
      <option value="invert">invert</option>
      <option value="sepia">sepia</option>
    </select>
  </div>
  <video autoplay playsinline id="player"></video>
</body>
<script type="text/javascript" src="https://webrtc.github.io/adapter/adapter-latest.js"></script>
<script type="text/javascript" src="./js/client3.js"></script>
</html>
```

Implement client3.js

```js
'use strict'

var audioInput = document.getElementById("audioInput");
var audioOutput = document.getElementById("audioOutput");
var videoInput = document.getElementById("videoInput");
var filtersSelect = document.getElementById("filter");
var videoplay = document.querySelector("video#player");

function start() {
  console.log("start......");
  if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
    console.log("getUserMedia is not supported!");
  } else {
    var devId = videoInput.value;
    var constraints = {
      video: {
        width: 320,
        height: 240,
        frameRate: 30,
        facingMode: "user",
        deviceId: devId ? { exact: devId } : undefined   // undefined means the default device
      },
      audio: {
        noiseSuppression: true,
        echoCancellation: true
      }
    };
    navigator.mediaDevices.getUserMedia(constraints)
      .then(getUserMedia)
      .then(gotDevices)   // the calls can be chained
      .catch(handleError);
  }
}

function getUserMedia(stream) {
  videoplay.srcObject = stream;                      // play the captured stream
  return navigator.mediaDevices.enumerateDevices();  // return the Promise so the chain can continue
}

function gotDevices(deviceInfos) {
  // rebuild the lists on every start() to avoid duplicates, keeping the current choice
  var selectedVideo = videoInput.value;
  audioInput.innerHTML = "";
  audioOutput.innerHTML = "";
  videoInput.innerHTML = "";
  deviceInfos.forEach(function (deviceInfo) {
    console.log("id=" + deviceInfo.deviceId +
                ";label=" + deviceInfo.label +
                ";kind=" + deviceInfo.kind +
                ";groupId=" + deviceInfo.groupId);
    var option = document.createElement("option");
    option.text = deviceInfo.label;
    option.value = deviceInfo.deviceId;
    if (deviceInfo.kind === "audioinput") {
      audioInput.appendChild(option);
    } else if (deviceInfo.kind === "audiooutput") {
      audioOutput.appendChild(option);
    } else if (deviceInfo.kind === "videoinput") {
      videoInput.appendChild(option);
    }
  });
  if (selectedVideo) {
    videoInput.value = selectedVideo;
  }
}

function handleError(err) {
  console.log(err.name + ":" + err.message);
}

start();   // run the logic

// ---- events: restart capture after a camera is picked in the select ----
videoInput.onchange = start;

filtersSelect.onchange = function () {
  videoplay.className = filtersSelect.value;   // switch the CSS class, and with it the effect
};
```

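V. Taking a Picture from the Video

Use the HTML canvas element to grab the current frame from the video stream and output it as a picture.

1. Add a button that triggers grabbing a picture from the video stream.
2. Add a canvas that turns the frame into a picture.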

```html
<html>
<head>
  <title> WebRTC get audio and video devices </title>
  <style>
    .none {
      -webkit-filter: none;
      filter: none;
    }
    .blur {
      -webkit-filter: blur(3px);
      filter: blur(3px);
    }
    .grayscale {
      -webkit-filter: grayscale(1);
      filter: grayscale(1);
    }
    .invert {
      -webkit-filter: invert(1);
      filter: invert(1);
    }
    .sepia {
      -webkit-filter: sepia(1);
      filter: sepia(1);
    }
  </style>
</head>
<body>
  <h1>Index3.html</h1>
  <div>
    <label>Audio Input Device:</label>
    <select id="audioInput"></select>
  </div>
  <div>
    <label>Audio Output Device:</label>
    <select id="audioOutput"></select>
  </div>
  <div>
    <label>Video Input Device:</label>
    <select id="videoInput"></select>
  </div>
  <div>
    <label>Filter:</label>
    <select id="filter">
      <option value="none">None</option>
      <option value="blur">blur</option>
      <option value="grayscale">grayscale</option>
      <option value="invert">invert</option>
      <option value="sepia">sepia</option>
    </select>
  </div>
  <div>
    <button id="snapshot">Take snapshot</button>
  </div>
  <div>
    <canvas id="picture"></canvas>
  </div>
  <video autoplay playsinline id="player"></video>
</body>
<script type="text/javascript" src="https://webrtc.github.io/adapter/adapter-latest.js"></script>
<script type="text/javascript" src="./js/client3.js"></script>
</html>
```

Implement client3.js (the file loaded by the page above)

```js
'use strict'

// devices
var audioInput = document.getElementById("audioInput");
var audioOutput = document.getElementById("audioOutput");
var videoInput = document.getElementById("videoInput");
// filter
var filtersSelect = document.getElementById("filter");
// picture
var snapshot = document.getElementById("snapshot");
var picture = document.getElementById("picture");
picture.width = 320;
picture.height = 240;
// video
var videoplay = document.querySelector("video#player");

function start() {
  console.log("start......");
  if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
    console.log("getUserMedia is not supported!");
  } else {
    var devId = videoInput.value;
    var constraints = {
      video: {
        width: 320,
        height: 240,
        frameRate: 30,
        facingMode: "user",
        deviceId: devId ? { exact: devId } : undefined   // undefined means the default device
      },
      audio: {
        noiseSuppression: true,
        echoCancellation: true
      }
    };
    navigator.mediaDevices.getUserMedia(constraints)
      .then(getUserMedia)
      .then(gotDevices)   // the calls can be chained
      .catch(handleError);
  }
}

function getUserMedia(stream) {
  videoplay.srcObject = stream;                      // play the captured stream
  return navigator.mediaDevices.enumerateDevices();  // return the Promise so the chain can continue
}

function gotDevices(deviceInfos) {
  // rebuild the lists on every start() to avoid duplicates, keeping the current choice
  var selectedVideo = videoInput.value;
  audioInput.innerHTML = "";
  audioOutput.innerHTML = "";
  videoInput.innerHTML = "";
  deviceInfos.forEach(function (deviceInfo) {
    console.log("id=" + deviceInfo.deviceId +
                ";label=" + deviceInfo.label +
                ";kind=" + deviceInfo.kind +
                ";groupId=" + deviceInfo.groupId);
    var option = document.createElement("option");
    option.text = deviceInfo.label;
    option.value = deviceInfo.deviceId;
    if (deviceInfo.kind === "audioinput") {
      audioInput.appendChild(option);
    } else if (deviceInfo.kind === "audiooutput") {
      audioOutput.appendChild(option);
    } else if (deviceInfo.kind === "videoinput") {
      videoInput.appendChild(option);
    }
  });
  if (selectedVideo) {
    videoInput.value = selectedVideo;
  }
}

function handleError(err) {
  console.log(err.name + ":" + err.message);
}

start();   // run the logic

// ---- events: restart capture after a camera is picked in the select ----
videoInput.onchange = start;

filtersSelect.onchange = function () {
  videoplay.className = filtersSelect.value;
};

snapshot.onclick = function () {
  picture.className = filtersSelect.value;   // apply the effect class to the picture
  picture.getContext("2d")                   // get the 2D drawing context
    .drawImage(videoplay,                    // copy the current frame from the video source
               0, 0,
               picture.width, picture.height);
};
```

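Note: saving the picture does not keep the effect! The CSS class only changes how the canvas is displayed; the pixels that drawImage writes into the canvas are the unfiltered frame.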
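If the effect should end up in the saved picture, it has to be applied while drawing. A hedged sketch using the canvas 2D context's `filter` property: the FILTERS map mirrors the CSS classes in the page, drawFilteredFrame is a hypothetical helper, and browser support for `ctx.filter` has been uneven (Safari lagged for a long time), so check your target browsers. The context below is a stand-in that records calls.

```javascript
'use strict';

// CSS filter strings matching the <select id="filter"> values used in the page
const FILTERS = {
  none: 'none',
  blur: 'blur(3px)',
  grayscale: 'grayscale(1)',
  invert: 'invert(1)',
  sepia: 'sepia(1)',
};

// Draw one frame with the filter applied to the pixels themselves (not just the display)
function drawFilteredFrame(ctx, source, filterName, width, height) {
  ctx.filter = FILTERS[filterName] || 'none'; // unknown names fall back to no effect
  ctx.drawImage(source, 0, 0, width, height);
  ctx.filter = 'none';                        // reset so later drawing is unaffected
}

// Stand-in 2D context that records what happens (a real one comes from canvas.getContext("2d"))
const log = [];
const fakeCtx = {
  set filter(value) { log.push('filter=' + value); },
  drawImage(source, x, y, w, h) { log.push('draw ' + source + ' ' + w + 'x' + h); },
};

drawFilteredFrame(fakeCtx, 'video', 'sepia', 320, 240);
console.log(log.join(' | ')); // filter=sepia(1) | draw video 320x240 | filter=none
```

In snapshot.onclick this would become `drawFilteredFrame(picture.getContext("2d"), videoplay, filtersSelect.value, picture.width, picture.height)`, after which `picture.toDataURL("image/png")` yields image data that includes the effect.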

VI. Capturing Audio Only with WebRTC

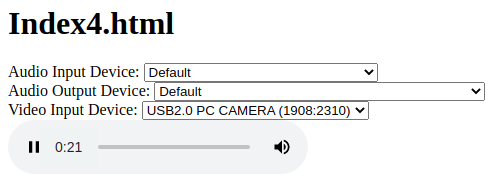

1.index4.html

```html
<html>
<head>
  <title> WebRTC get audio devices </title>
</head>
<body>
  <h1>Index4.html</h1>
  <div>
    <label>Audio Input Device:</label>
    <select id="audioInput"></select>
  </div>
  <div>
    <label>Audio Output Device:</label>
    <select id="audioOutput"></select>
  </div>
  <div>
    <label>Video Input Device:</label>
    <select id="videoInput"></select>
  </div>
  <div>
    <!--controls shows the playback control buttons-->
    <audio autoplay controls id="audioplayer"></audio>
  </div>
</body>
<script type="text/javascript" src="https://webrtc.github.io/adapter/adapter-latest.js"></script>
<script type="text/javascript" src="./js/client4.js"></script>
</html>
```

2. Implement client4.js

```js
'use strict'

// devices
var audioInput = document.getElementById("audioInput");
var audioOutput = document.getElementById("audioOutput");
var videoInput = document.getElementById("videoInput");
// audio
var audioplay = document.querySelector("audio#audioplayer");

function start() {
  console.log("start......");
  if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
    console.log("getUserMedia is not supported!");
  } else {
    var constraints = {
      video: false,
      audio: true
    };
    navigator.mediaDevices.getUserMedia(constraints)
      .then(getUserMedia)
      .then(gotDevices)   // the calls can be chained
      .catch(handleError);
  }
}

function getUserMedia(stream) {
  audioplay.srcObject = stream;                      // play the captured stream
  return navigator.mediaDevices.enumerateDevices();  // return the Promise so the chain can continue
}

function gotDevices(deviceInfos) {
  deviceInfos.forEach(function (deviceInfo) {
    console.log("id=" + deviceInfo.deviceId +
                ";label=" + deviceInfo.label +
                ";kind=" + deviceInfo.kind +
                ";groupId=" + deviceInfo.groupId);
    var option = document.createElement("option");
    option.text = deviceInfo.label;
    option.value = deviceInfo.deviceId;
    if (deviceInfo.kind === "audioinput") {
      audioInput.appendChild(option);
    } else if (deviceInfo.kind === "audiooutput") {
      audioOutput.appendChild(option);
    } else if (deviceInfo.kind === "videoinput") {
      videoInput.appendChild(option);
    }
  });
}

function handleError(err) {
  console.log(err.name + ":" + err.message);
}

start();   // run the logic
```

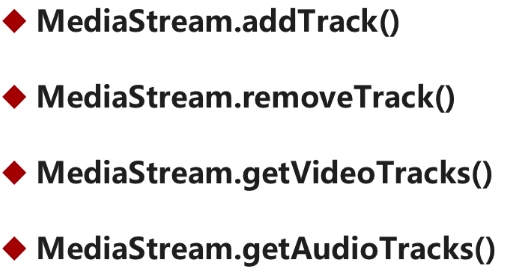

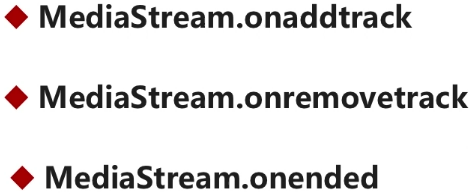

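VII. The MediaStream API and Reading the Video Constraints

(1) MediaStream methods and events

1. Methods

addTrack: adds a track to the media stream

removeTrack: removes a track from the media stream

getVideoTracks: returns all the video tracks

getAudioTracks: returns all the audio tracks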
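A small sketch of how getAudioTracks() and getVideoTracks() are typically used, for instance to confirm that the audio-only capture in section VI really contains no video. describeTracks is a hypothetical helper, and the stream is a stand-in object exposing the same two methods; in the page you would pass the stream from getUserMedia.

```javascript
'use strict';

// Hypothetical helper: list the labels of the audio and video tracks in a stream
function describeTracks(stream) {
  return {
    audio: stream.getAudioTracks().map((track) => track.label),
    video: stream.getVideoTracks().map((track) => track.label),
  };
}

// Stand-in for a MediaStream with one microphone track and no camera track
const tracks = [{ kind: 'audio', label: 'USB2.0 PC CAMERA Analog Mono' }];
const audioOnlyStream = {
  getAudioTracks: () => tracks.filter((t) => t.kind === 'audio'),
  getVideoTracks: () => tracks.filter((t) => t.kind === 'video'),
};

const summary = describeTracks(audioOnlyStream);
console.log(summary.audio.length, summary.video.length); // 1 0
```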

2. Events

(2) Reading the video constraints

```html
<html>
<head>
  <title> WebRTC get audio and video devices </title>
  <style>
    .none {
      -webkit-filter: none;
      filter: none;
    }
    .blur {
      -webkit-filter: blur(3px);
      filter: blur(3px);
    }
    .grayscale {
      -webkit-filter: grayscale(1);
      filter: grayscale(1);
    }
    .invert {
      -webkit-filter: invert(1);
      filter: invert(1);
    }
    .sepia {
      -webkit-filter: sepia(1);
      filter: sepia(1);
    }
  </style>
</head>
<body>
  <h1>Index3.html</h1>
  <div>
    <label>Audio Input Device:</label>
    <select id="audioInput"></select>
  </div>
  <div>
    <label>Audio Output Device:</label>
    <select id="audioOutput"></select>
  </div>
  <div>
    <label>Video Input Device:</label>
    <select id="videoInput"></select>
  </div>
  <div>
    <label>Filter:</label>
    <select id="filter">
      <option value="none">None</option>
      <option value="blur">blur</option>
      <option value="grayscale">grayscale</option>
      <option value="invert">invert</option>
      <option value="sepia">sepia</option>
    </select>
  </div>
  <div>
    <button id="snapshot">Take snapshot</button>
  </div>
  <div>
    <canvas id="picture"></canvas>
  </div>
  <div>
    <table>
      <tr>
        <td>
          <video autoplay playsinline id="player"></video>
        </td>
        <td>
          <div id="constraints"></div>
        </td>
      </tr>
    </table>
  </div>
</body>
<script type="text/javascript" src="https://webrtc.github.io/adapter/adapter-latest.js"></script>
<script type="text/javascript" src="./js/client3.js"></script>
</html>
```

```js
'use strict'

// devices
var audioInput = document.getElementById("audioInput");
var audioOutput = document.getElementById("audioOutput");
var videoInput = document.getElementById("videoInput");
// filter
var filtersSelect = document.getElementById("filter");
// picture
var snapshot = document.getElementById("snapshot");
var picture = document.getElementById("picture");
// constraints display
var divConstraints = document.querySelector("div#constraints");
picture.width = 320;
picture.height = 240;
// video
var videoplay = document.querySelector("video#player");

function start() {
  console.log("start......");
  if (!navigator.mediaDevices || !navigator.mediaDevices.getUserMedia) {
    console.log("getUserMedia is not supported!");
  } else {
    var devId = videoInput.value;
    var constraints = {
      video: {
        width: 320,
        height: 240,
        frameRate: 30,
        facingMode: "user",
        deviceId: devId ? { exact: devId } : undefined   // undefined means the default device
      },
      audio: {
        noiseSuppression: true,
        echoCancellation: true
      }
    };
    navigator.mediaDevices.getUserMedia(constraints)
      .then(getUserMedia)
      .then(gotDevices)   // the calls can be chained
      .catch(handleError);
  }
}

function getUserMedia(stream) {
  videoplay.srcObject = stream;   // play the captured stream
  // get the video track (there is only one, so take the first)
  var videoTrack = stream.getVideoTracks()[0];
  // getSettings() returns the values actually in effect (getConstraints() would return what was requested)
  var videoConstraints = videoTrack.getSettings();
  divConstraints.textContent = JSON.stringify(videoConstraints, null, 2);   // show as formatted JSON
  return navigator.mediaDevices.enumerateDevices();   // return the Promise so the chain can continue
}

function gotDevices(deviceInfos) {
  // rebuild the lists on every start() to avoid duplicates, keeping the current choice
  var selectedVideo = videoInput.value;
  audioInput.innerHTML = "";
  audioOutput.innerHTML = "";
  videoInput.innerHTML = "";
  deviceInfos.forEach(function (deviceInfo) {
    console.log("id=" + deviceInfo.deviceId +
                ";label=" + deviceInfo.label +
                ";kind=" + deviceInfo.kind +
                ";groupId=" + deviceInfo.groupId);
    var option = document.createElement("option");
    option.text = deviceInfo.label;
    option.value = deviceInfo.deviceId;
    if (deviceInfo.kind === "audioinput") {
      audioInput.appendChild(option);
    } else if (deviceInfo.kind === "audiooutput") {
      audioOutput.appendChild(option);
    } else if (deviceInfo.kind === "videoinput") {
      videoInput.appendChild(option);
    }
  });
  if (selectedVideo) {
    videoInput.value = selectedVideo;
  }
}

function handleError(err) {
  console.log(err.name + ":" + err.message);
}

start();   // run the logic

// ---- events: restart capture after a camera is picked in the select ----
videoInput.onchange = start;

filtersSelect.onchange = function () {
  videoplay.className = filtersSelect.value;
};

snapshot.onclick = function () {
  picture.className = filtersSelect.value;   // apply the effect class to the picture
  picture.getContext("2d")                   // get the 2D drawing context
    .drawImage(videoplay,                    // copy the current frame from the video source
               0, 0,
               picture.width, picture.height);
};
```

```json
{
  "aspectRatio": 1.3333333333333333,
  "brightness": 128,
  "contrast": 130,
  "deviceId": "bf54ed3e6df71ed91193adde9d206e51b1d4a5afdaeb231c75a35466842df89e",
  "frameRate": 30,
  "groupId": "d0a8260d845d1e294dbf6819b7c36eedf635dd555e2529b7609c1fe25c9a1f82",
  "height": 240,
  "resizeMode": "none",
  "saturation": 64,
  "sharpness": 13,
  "whiteBalanceMode": "none",
  "width": 320
}
```
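getSettings() reports what the camera actually delivers, which need not equal what was requested (bare numbers are only ideal values). A sketch of such a comparison, with settingsMismatches as a hypothetical helper and the settings object copied (in part) from the output above. facingMode is left out of the request because this camera's settings do not report it.

```javascript
'use strict';

// Hypothetical helper: keys whose delivered value differs from the requested one
function settingsMismatches(requested, settings) {
  return Object.keys(requested).filter((key) => settings[key] !== requested[key]);
}

const requested = { width: 320, height: 240, frameRate: 30 };
// Subset of the getSettings() output shown above
const settings = { aspectRatio: 1.3333333333333333, frameRate: 30, height: 240, resizeMode: 'none', width: 320 };

console.log(settingsMismatches(requested, settings).length);                       // 0
console.log(settingsMismatches({ width: 640, height: 480 }, settings).join(', ')); // width, height
```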

浙公网安备 33010602011771号
