Learning PyTorch

Note: read all the articles together; don't rely on just one!

Part 1: Tensors

(1) The basic data structure: tensors

https://blog.csdn.net/oldmao_2001/article/details/102534415

https://blog.csdn.net/oldmao_2001/article/details/102546607

(2) Working with tensors (with code)

https://blog.zhangxiann.com/202002052039/

https://blog.zhangxiann.com/202002082037/
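A minimal sketch of the tensor creation calls and attributes the articles above walk through. The values are arbitrary; the point is the API and the fact that torch.from_numpy shares memory with the source array.

```python
import numpy as np
import torch

# Three common ways to create a tensor
a = torch.tensor([[1.0, 2.0], [3.0, 4.0]])  # copies data from a Python list
b = torch.zeros(2, 3)                         # shape (2, 3), all zeros
c = torch.arange(0, 10, 2)                    # 0, 2, 4, 6, 8 (end is exclusive)

# torch.from_numpy shares memory with the NumPy array instead of copying it
arr = np.ones((2, 2))
d = torch.from_numpy(arr)
arr[0, 0] = 5.0  # the change is visible through d as well

# A basic operation: matrix product
m = a @ a  # [[7, 10], [15, 22]]

# Key attributes of a tensor
print(a.dtype, a.shape, a.device)  # torch.float32 torch.Size([2, 2]) cpu
print(d.dtype, d[0, 0].item())     # torch.float64 5.0
```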

(3) How the computational graph works

https://blog.zhangxiann.com/202002112035/

https://blog.csdn.net/oldmao_2001/article/details/102546607
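A small sketch of the dynamic computational graph, using arbitrary values w = 1, x = 2 and y = (w + x)(w + 1). By the product rule, dy/dw = (w + 1) + (w + x) = 5 and dy/dx = w + 1 = 2; the code checks this and shows which nodes are leaves.

```python
import torch

# Leaf nodes: created directly by the user, with requires_grad=True
w = torch.tensor([1.0], requires_grad=True)
x = torch.tensor([2.0], requires_grad=True)

# Intermediate (non-leaf) nodes: each records the op that created it in grad_fn
a = w + x   # a = 3
b = w + 1   # b = 2
y = a * b   # y = (w + x) * (w + 1) = 6

# Backpropagate through the graph; gradients accumulate in the leaves' .grad
y.backward()

print(w.grad, x.grad)        # tensor([5.]) tensor([2.])
print(w.is_leaf, a.is_leaf)  # True False
print(w.grad_fn)             # None: leaves have no creating op
```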

(4) autograd and logistic regression

https://blog.zhangxiann.com/202002152033/

https://blog.csdn.net/oldmao_2001/article/details/102638970
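A minimal logistic regression sketch in the spirit of these articles, assuming synthetic data: two Gaussian clusters around (2, 2) and (-2, -2). The class name, learning rate, and step count are illustrative choices, not the articles' exact code. autograd computes the gradients of the BCE loss with respect to the linear layer's weight and bias on each backward() call.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy data: class 0 around (2, 2), class 1 around (-2, -2)
n = 100
mean = torch.ones(n, 2) * 2.0
x0 = torch.normal(mean, 1.0)
x1 = torch.normal(-mean, 1.0)
x = torch.cat([x0, x1], dim=0)                                   # (200, 2)
y = torch.cat([torch.zeros(n), torch.ones(n)]).unsqueeze(1)      # (200, 1)

class LogisticRegression(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(2, 1)

    def forward(self, x):
        # sigmoid maps the linear score to a probability in (0, 1)
        return torch.sigmoid(self.linear(x))

model = LogisticRegression()
loss_fn = nn.BCELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)

for step in range(100):
    loss = loss_fn(model(x), y)
    optimizer.zero_grad()   # clear gradients from the previous step
    loss.backward()         # autograd fills .grad of weight and bias
    optimizer.step()        # gradient descent update

with torch.no_grad():
    acc = ((model(x) >= 0.5).float() == y).float().mean().item()
print(f"loss={loss.item():.4f} acc={acc:.2f}")
```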

(5) Supplementary notes

1. .detach() and .data in PyTorch, in depth

Similarities:

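Both tensor.data and tensor.detach() return a tensor that is separated from the computational graph (requires_grad=False) but shares the same underlying storage as the original. They are not themselves in-place operations, but an in-place change made to the returned tensor also changes the original tensor.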

Differences:

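(1) .data is an attribute, while .detach() is a method. (2) .data is unsafe and .detach() is safe: an in-place change made through .detach() is recorded by autograd's version counter, so backward() raises an error if the modified value was needed for gradient computation; the same change made through .data is invisible to autograd, so backward() can silently produce wrong gradients.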
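A sketch of the safety difference, using sigmoid because its backward pass reuses its own output. The tensor values are arbitrary.

```python
import torch

# .detach(): the in-place change is detected by autograd's version check
a = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
out = a.sigmoid()
c = out.detach()
c.zero_()                                  # also zeroes out: same storage
shares_storage = bool((out == 0).all())
try:
    out.sum().backward()                   # sigmoid's backward needs the original out
    detach_raised = False
except RuntimeError:
    detach_raised = True
print("detach raised:", detach_raised)     # True

# .data: the same in-place change goes unnoticed, gradients are silently wrong
a = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
out = a.sigmoid()
c = out.data
c.zero_()
out.sum().backward()                       # no error
print(a.grad)                              # tensor([0., 0., 0.]) instead of sigmoid'(a)
```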

2. Saturating and non-saturating activation functions
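A quick numerical sketch of the distinction: a saturating activation such as sigmoid has a derivative that shrinks toward 0 as |x| grows, which causes vanishing gradients, while ReLU keeps a derivative of exactly 1 for every positive input. The inputs -10, 0, 10 are arbitrary.

```python
import torch

x = torch.tensor([-10.0, 0.0, 10.0], requires_grad=True)

# Sigmoid saturates: its derivative sigma(x) * (1 - sigma(x)) vanishes as |x| grows
torch.sigmoid(x).sum().backward()
sigmoid_grad = x.grad.clone()   # about 4.5e-05 at |x| = 10, 0.25 at x = 0

# ReLU does not saturate for positive inputs: its derivative there is 1
x.grad = None
torch.relu(x).sum().backward()
relu_grad = x.grad.clone()      # 0 for x < 0, 1 for x > 0

print(sigmoid_grad, relu_grad)
```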

Part 2: Data preprocessing and loading

(1) Loading data: DataLoader and Dataset

https://blog.zhangxiann.com/202002192017/ (custom Dataset, loading data)

https://blog.csdn.net/oldmao_2001/article/details/102661974
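A minimal sketch of the Dataset/DataLoader split: a Dataset subclass only has to implement __len__ and __getitem__, and DataLoader handles batching and shuffling. ToyDataset and its data are hypothetical; a real dataset would typically read files in __getitem__.

```python
import torch
from torch.utils.data import Dataset, DataLoader

class ToyDataset(Dataset):
    """Hypothetical dataset: sample i is (feature tensor of shape (1,), 0/1 label)."""

    def __init__(self, n=10):
        self.x = torch.arange(n, dtype=torch.float32).unsqueeze(1)  # (n, 1)
        self.y = (self.x.squeeze(1) >= n / 2).long()                # 0 or 1

    def __len__(self):
        # DataLoader uses this to know how many samples exist
        return len(self.x)

    def __getitem__(self, idx):
        # Return a single sample; DataLoader stacks samples into batches
        return self.x[idx], self.y[idx]

loader = DataLoader(ToyDataset(10), batch_size=4, shuffle=True)
batch_shapes = []
for xb, yb in loader:
    batch_shapes.append(tuple(xb.shape))
print(batch_shapes)  # [(4, 1), (4, 1), (2, 1)]: the last batch is smaller
```

Passing drop_last=True to DataLoader would discard the final incomplete batch instead.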

(2) Data preprocessing and data augmentation

https://blog.zhangxiann.com/202002212045/ (overview of preprocessing)

https://blog.csdn.net/oldmao_2001/article/details/102718002 (writing a custom transform)

https://blog.zhangxiann.com/202002272047/ (data augmentation)
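A custom transform is just a callable class: parameters go in __init__ and the per-image work goes in __call__. The sketch below is a hypothetical salt-and-pepper noise transform operating on a (C, H, W) float tensor; the article's own example may differ. Because it expects a tensor, it would sit after ToTensor in a pipeline such as transforms.Compose([transforms.ToTensor(), AddSaltPepperNoise(0.9)]).

```python
import torch

class AddSaltPepperNoise:
    """Hypothetical custom transform: sets a random fraction of pixels to 0 or 1.

    Expects a float image tensor of shape (C, H, W) with values in [0, 1].
    """

    def __init__(self, snr=0.9, p=1.0):
        self.snr = snr  # fraction of pixels left untouched
        self.p = p      # probability of applying the transform at all

    def __call__(self, img):
        if torch.rand(1).item() >= self.p:
            return img
        c, h, w = img.shape
        img = img.clone()                          # do not modify the caller's tensor
        r = torch.rand(1, h, w).expand(c, h, w)    # same noise mask for every channel
        noise_frac = 1.0 - self.snr
        img[r < noise_frac / 2] = 0.0                          # pepper
        img[(r >= noise_frac / 2) & (r < noise_frac)] = 1.0    # salt
        return img
```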

(3) Supplementary notes

Why does transforms.ToTensor in PyTorch convert an (H, W, C) array into (C, H, W)?
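One practical reason: images loaded with PIL, OpenCV, or NumPy are laid out as (H, W, C), while PyTorch's nn.Conv2d expects (N, C, H, W). The sketch below mimics what ToTensor roughly does for a uint8 array (HWC to CHW, scale to [0, 1]) using plain torch operations on a random fake image, then feeds the result to a convolution.

```python
import numpy as np
import torch
import torch.nn as nn

# A fake 32x48 RGB image laid out as (H, W, C), uint8, as NumPy/PIL would give it
img_hwc = np.random.randint(0, 256, size=(32, 48, 3), dtype=np.uint8)

# Roughly ToTensor's behavior for uint8 input: HWC -> CHW and scale to [0, 1]
img_chw = torch.from_numpy(img_hwc).permute(2, 0, 1).float().div(255.0)
print(img_chw.shape)  # torch.Size([3, 32, 48])

# nn.Conv2d expects (N, C, H, W), so a CHW image only needs a batch dimension
conv = nn.Conv2d(in_channels=3, out_channels=8, kernel_size=3, padding=1)
out = conv(img_chw.unsqueeze(0))
print(out.shape)  # torch.Size([1, 8, 32, 48])
```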

Part 3: Building models

(1) Steps for creating a model, and nn.Module

https://blog.csdn.net/oldmao_2001/article/details/102787546

https://blog.zhangxiann.com/202003012001/
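A sketch of the two model-creation steps with nn.Module: build the sub-modules in __init__, then connect them in forward. The LeNet-style layer sizes below assume 3x32x32 inputs and 10 classes and are illustrative, not copied from the articles.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class LeNet(nn.Module):
    """LeNet-style network for 3x32x32 inputs (illustrative layer sizes)."""

    def __init__(self, num_classes=10):
        super().__init__()
        # Step 1: create sub-modules; assigning them as attributes registers them
        self.conv1 = nn.Conv2d(3, 6, kernel_size=5)
        self.conv2 = nn.Conv2d(6, 16, kernel_size=5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, num_classes)

    def forward(self, x):
        # Step 2: wire the sub-modules together
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)  # (N, 6, 14, 14)
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)  # (N, 16, 5, 5)
        x = torch.flatten(x, 1)                     # (N, 400)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)

model = LeNet(num_classes=10)
logits = model(torch.randn(2, 3, 32, 32))  # calling the module runs forward()
num_params = sum(p.numel() for p in model.parameters())
print(logits.shape, num_params)  # torch.Size([2, 10]) 62006
```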

(2) Layers in nn

https://blog.csdn.net/oldmao_2001/article/details/102844727 (overview)

https://blog.zhangxiann.com/202003032009/ (convolutional layers)

https://blog.zhangxiann.com/202003072007/ (pooling, linear, and activation layers)
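A sketch that chains a convolution, an activation, pooling, and a linear layer, and checks the spatial sizes against the output-size formula from the PyTorch Conv2d/MaxPool2d documentation: out = floor((in + 2 * padding - dilation * (kernel - 1) - 1) / stride) + 1. The helper conv_out_size and all layer sizes are hypothetical.

```python
import torch
import torch.nn as nn

def conv_out_size(size, kernel, stride=1, padding=0, dilation=1):
    """Hypothetical helper: output length along one spatial dimension."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

x = torch.randn(1, 3, 32, 32)                                # (N, C, H, W)
conv = nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1)  # 32 -> 16
pool = nn.MaxPool2d(kernel_size=2, stride=2)                 # 16 -> 8
fc = nn.Linear(16 * 8 * 8, 10)

h = torch.relu(conv(x))  # activation layer keeps the shape: (1, 16, 16, 16)
h = pool(h)              # (1, 16, 8, 8)
h = fc(h.flatten(1))     # flatten to (1, 1024), then (1, 10)
print(h.shape)           # torch.Size([1, 10])
```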

Part 4: Training models

(1) Weight initialization, Xavier and Kaiming (read both)

https://blog.zhangxiann.com/202003092013/ (focus: normal distribution)

https://zhuanlan.zhihu.com/p/148034113 (focus: uniform distribution)

https://blog.csdn.net/oldmao_2001/article/details/102895144
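A sketch that checks the nn.init functions against the usual formulas (Xavier normal: std = sqrt(2 / (fan_in + fan_out)); Xavier uniform: bound = sqrt(6 / (fan_in + fan_out)); Kaiming normal with ReLU, fan_in mode: std = sqrt(2 / fan_in)). It then shows why initialization matters by pushing data through 10 Linear+ReLU layers. The layer sizes and the helper final_std are hypothetical.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

# 1) Empirical spread of the initialized weights vs. the formulas
fan_in, fan_out = 512, 256
w = torch.empty(fan_out, fan_in)  # nn.Linear stores weight as (out_features, in_features)

nn.init.xavier_normal_(w)
xavier_ratio = w.std().item() / math.sqrt(2.0 / (fan_in + fan_out))

nn.init.xavier_uniform_(w)
xavier_bound_ok = w.abs().max().item() <= math.sqrt(6.0 / (fan_in + fan_out)) + 1e-6

nn.init.kaiming_normal_(w, mode="fan_in", nonlinearity="relu")
kaiming_ratio = w.std().item() / math.sqrt(2.0 / fan_in)

# 2) Activation scale after several layers (hypothetical helper)
def final_std(init_fn, depth=10, width=256):
    """Std of activations after `depth` Linear+ReLU layers using init_fn on each weight."""
    with torch.no_grad():
        x = torch.randn(64, width)
        for _ in range(depth):
            layer = nn.Linear(width, width, bias=False)
            init_fn(layer.weight)
            x = torch.relu(layer(x))
    return x.std().item()

std_kaiming = final_std(lambda t: nn.init.kaiming_normal_(t, nonlinearity="relu"))
std_naive = final_std(lambda t: nn.init.normal_(t, mean=0.0, std=1.0))
print(xavier_ratio, kaiming_ratio)  # both close to 1
print(std_kaiming, std_naive)       # order 1 vs. many orders of magnitude larger
```

With std=1, each layer multiplies the activations' second moment by roughly width / 2, so the scale explodes; the Kaiming std cancels that factor for ReLU.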

(2) Loss functions

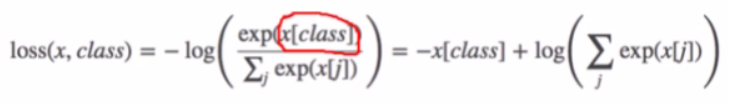

(a) Cross-entropy

Cross-entropy = entropy + relative entropy (KL divergence), i.e. H(P, Q) = H(P) + D_KL(P || Q) (https://www.zhihu.com/question/41252833)

https://www.cnblogs.com/JeasonIsCoding/p/10171201.html

https://www.cnblogs.com/ssyfj/p/13966848.html (key point: the final part, on binary and multi-class classification)

Note: the cross-entropy derivation in https://blog.csdn.net/oldmao_2001/article/details/102895144 is also correct and can be worked through.
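A numerical sketch of the points above, with arbitrary example distributions and logits: it checks H(P, Q) = H(P) + D_KL(P || Q), then reproduces nn.CrossEntropyLoss (multi-class, raw logits, integer labels) as log_softmax plus negative log-likelihood, and nn.BCEWithLogitsLoss (binary, one logit per sample) from the binary cross-entropy formula.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# H(P, Q) = H(P) + KL(P || Q) for two discrete distributions
p = torch.tensor([0.7, 0.2, 0.1])
q = torch.tensor([0.5, 0.3, 0.2])
cross_entropy = -(p * q.log()).sum()
entropy = -(p * p.log()).sum()
kl = (p * (p / q).log()).sum()
print(cross_entropy.item(), (entropy + kl).item())  # equal up to float error

# Multi-class: CrossEntropyLoss = log_softmax + NLL, averaged over the batch
logits = torch.tensor([[1.0, 2.0, 0.5], [0.1, 0.2, 3.0]])
target = torch.tensor([1, 2])
loss_builtin = nn.CrossEntropyLoss()(logits, target)
loss_manual = -F.log_softmax(logits, dim=1)[torch.arange(2), target].mean()

# Binary: -[t * log(s) + (1 - t) * log(1 - s)] with s = sigmoid(z)
z = torch.tensor([0.8, -1.2])
t = torch.tensor([1.0, 0.0])
bce_builtin = nn.BCEWithLogitsLoss()(z, t)
s = torch.sigmoid(z)
bce_manual = -(t * s.log() + (1 - t) * (1 - s).log()).mean()
print(loss_builtin.item(), loss_manual.item(), bce_builtin.item(), bce_manual.item())
```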
