An Analysis of How XXL-RPC Works

Background

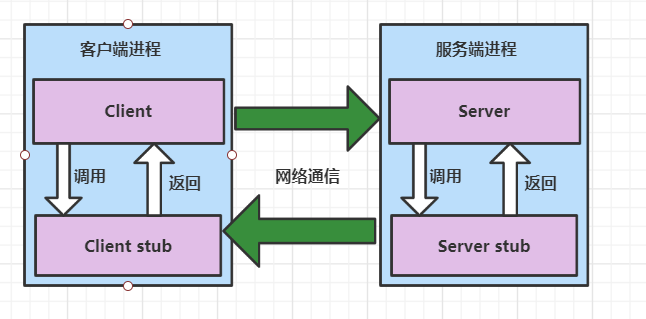

RPC (Remote Procedure Call) lets a program call a remote service as if it were a local one: it provides remote invocation without giving up the semantic simplicity of a local call.

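In most companies, and especially in large internet companies, internal systems consist of thousands of services deployed on different machines and running in different JVMs. Two problems then need to be solved:

- 1. If I depend on someone else's service and that service runs on a remote machine, how do I call it?

- 2. If other teams need my service, how do I publish it so they can call it?

XXL-RPC solves both problems efficiently:

- 1. Calling: you only need the remote service's stub and address to call it, and the call is transparent, as simple as calling a local service.

- 2. Publishing: you only need to provide your service's stub and address for others to call it. With the registry enabled, services are discovered dynamically, so only the stub is needed.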

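Client: the caller of the service.

Server: the actual provider of the service.

Client stub: holds the server's address information, packs the client's request parameters into a network message, and sends it over the network to the server.

Server stub: receives the message sent by the client, unpacks it, and calls the local method.

The stubs hide the details of the remote call, so developers can make a remote call as if they were calling a local interface.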
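To make the client stub / server stub split concrete, here is a minimal in-process sketch, assuming a JDK dynamic proxy plays the client stub and a reflective dispatcher plays the server stub. HelloService, RpcRequest, ClientStub and ServerStub are hypothetical names, and the network hop is replaced by a direct method call.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.HashMap;
import java.util.Map;

// Hypothetical service contract shared by client and server.
interface HelloService {
    String sayHi(String name);
}

// Hypothetical wire message: everything the server needs to locate and call the method.
class RpcRequest {
    final String className;
    final String methodName;
    final Class<?>[] parameterTypes;
    final Object[] parameters;

    RpcRequest(String className, String methodName, Class<?>[] parameterTypes, Object[] parameters) {
        this.className = className;
        this.methodName = methodName;
        this.parameterTypes = parameterTypes;
        this.parameters = parameters;
    }
}

// Server stub: unpacks the request and calls the local implementation via reflection.
class ServerStub {
    private final Map<String, Class<?>> interfaces = new HashMap<>();
    private final Map<String, Object> beans = new HashMap<>();

    <T> void register(Class<T> iface, T impl) {
        interfaces.put(iface.getName(), iface);
        beans.put(iface.getName(), impl);
    }

    Object handle(RpcRequest request) throws Exception {
        Class<?> iface = interfaces.get(request.className);
        if (iface == null) {
            throw new IllegalStateException("no service registered for " + request.className);
        }
        // Resolve the method on the interface, then invoke it on the registered implementation.
        Method method = iface.getMethod(request.methodName, request.parameterTypes);
        return method.invoke(beans.get(request.className), request.parameters);
    }
}

// Client stub: a JDK dynamic proxy that packs every call into an RpcRequest.
class ClientStub {
    @SuppressWarnings("unchecked")
    static <T> T create(Class<T> iface, ServerStub server) {
        return (T) Proxy.newProxyInstance(
                iface.getClassLoader(),
                new Class<?>[]{iface},
                (proxy, method, args) -> {
                    RpcRequest request = new RpcRequest(
                            iface.getName(), method.getName(), method.getParameterTypes(), args);
                    // A real client stub would serialize the request and send it over the network here.
                    return server.handle(request);
                });
    }
}
```

The proxy is what makes the call "look local": replacing the direct server.handle(...) call with serialization plus a socket write turns this into a real RPC path without changing the caller's code.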

Client stub

Code locations for reference (search globally in IDEA):

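Service registration and discovery:

Every 10 seconds, each service registers itself with the registry again to refresh its updateTime.

Reference code: boolean ret = registryBaseClient.registry(new ArrayList<XxlRpcAdminRegistryDataParamVO>(registryData));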

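Heartbeat check: the service keeps refreshing updateTime, and an entry whose updateTime lags the current time by 30 s (registryBeatTime * 3, i.e. three missed 10 s beats) is deleted:

Reference code: xxlRpcRegistryDataDao.cleanData(registryBeatTime * 3);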
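The heartbeat and expiry rule (re-register every 10 s, drop entries not refreshed for 3 × 10 s = 30 s) can be sketched with an in-memory map standing in for the registry's database table. InMemoryRegistry and its method signatures are hypothetical; the current time is passed in explicitly so the rule is easy to follow.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical in-memory stand-in for the registry's data table.
class InMemoryRegistry {
    static final long BEAT_MILLIS = 10_000L;  // providers re-register every 10 s

    // serviceKey -> (address -> updateTime in epoch millis)
    private final Map<String, Map<String, Long>> data = new ConcurrentHashMap<>();

    // Called on every beat: inserts the address or refreshes its updateTime.
    void registry(String serviceKey, String address, long nowMillis) {
        data.computeIfAbsent(serviceKey, k -> new ConcurrentHashMap<>()).put(address, nowMillis);
    }

    // Deletes every address whose updateTime lags the current time by more than timeoutMillis.
    void cleanData(long timeoutMillis, long nowMillis) {
        for (Map<String, Long> addresses : data.values()) {
            addresses.values().removeIf(updateTime -> nowMillis - updateTime > timeoutMillis);
        }
    }

    // Returns the live addresses of a service in sorted order.
    Set<String> discovery(String serviceKey) {
        Map<String, Long> addresses = data.getOrDefault(serviceKey, new ConcurrentHashMap<>());
        return new TreeSet<>(addresses.keySet());
    }
}
```

A scheduler would call cleanData(InMemoryRegistry.BEAT_MILLIS * 3, System.currentTimeMillis()) periodically, which mirrors the cleanData(registryBeatTime * 3) call: a provider that misses three consecutive beats drops out.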

Service discovery is performed locally on the client:

Reference code: invokerFactory.getRegister().discovery(serviceKey);

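A poller periodically queries the registry and refreshes the local cache (discoveryData), which is how changes such as a disabled service reach the client. The registry reads this data from the file configured by xxl.rpc.registry.data.filepath, and a separate thread keeps that file consistent with the database.

Reference code: com.xxl.rpc.core.registry.impl.xxlrpcadmin.XxlRpcAdminRegistryClient#refreshDiscoveryData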
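Client-side discovery with a polled cache can be sketched as follows. DiscoveryCache is hypothetical, and the registryLookup function stands in for the request to the registry. The point is that discovery(...) never touches the network on the hot path, so a disabled node disappears from the client only after the next refresh.

```java
import java.util.Collections;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

// Hypothetical client-side discovery cache.
class DiscoveryCache {
    private final Map<String, Set<String>> localCache = new ConcurrentHashMap<>();
    private final Function<String, Set<String>> registryLookup;  // stand-in for the remote registry query

    DiscoveryCache(Function<String, Set<String>> registryLookup) {
        this.registryLookup = registryLookup;
    }

    // Hot path: answered from local memory; the registry is queried only on first use of a key.
    Set<String> discovery(String serviceKey) {
        return localCache.computeIfAbsent(serviceKey, this::fetch);
    }

    // Poller body: overwrite every cached key with fresh registry data.
    void refreshDiscoveryData() {
        for (String serviceKey : localCache.keySet()) {
            localCache.put(serviceKey, fetch(serviceKey));
        }
    }

    // Starts a daemon thread that calls refreshDiscoveryData() at a fixed delay.
    ScheduledExecutorService startPolling(long periodSeconds) {
        ScheduledExecutorService poller = Executors.newSingleThreadScheduledExecutor(r -> {
            Thread t = new Thread(r, "discovery-refresh");
            t.setDaemon(true);
            return t;
        });
        poller.scheduleWithFixedDelay(() -> {
            try {
                refreshDiscoveryData();
            } catch (RuntimeException e) {
                // keep the old cache and retry next period; an uncaught exception would cancel the task
            }
        }, periodSeconds, periodSeconds, TimeUnit.SECONDS);
        return poller;
    }

    private Set<String> fetch(String serviceKey) {
        Set<String> addresses = registryLookup.apply(serviceKey);
        return addresses == null
                ? Collections.<String>emptySet()
                : Collections.unmodifiableSet(new TreeSet<>(addresses));
    }
}
```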

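Checking registry data: a thread keeps taking entries from a local queue with registryQueue.take() and checks each one against the registry data.

Reference code: checkRegistryDataAndSendMessage(xxlRpcRegistryData);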
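The "take from a local queue, then check" loop is the classic BlockingQueue consumer pattern. In this sketch RegistryChecker is hypothetical, registry entries are modelled as plain strings, and the callback stands in for checkRegistryDataAndSendMessage.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

// Hypothetical single-consumer checker; registry entries are modelled as plain strings.
class RegistryChecker {
    private final BlockingQueue<String> registryQueue = new LinkedBlockingQueue<>();
    private final Thread worker;

    RegistryChecker(Consumer<String> checkRegistryDataAndSendMessage) {
        this.worker = new Thread(() -> {
            while (!Thread.currentThread().isInterrupted()) {
                try {
                    String registryData = registryQueue.take();  // blocks until an entry is available
                    checkRegistryDataAndSendMessage.accept(registryData);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();  // restore the flag so the loop exits
                } catch (RuntimeException e) {
                    // one bad entry must not kill the checker thread; a real system would log it
                }
            }
        }, "registry-check");
        this.worker.setDaemon(true);
    }

    void start() {
        worker.start();
    }

    // Producers (e.g. incoming registry requests) enqueue entries for asynchronous checking.
    boolean submit(String registryData) {
        return registryQueue.offer(registryData);
    }

    void stop() {
        worker.interrupt();
    }
}
```

Decoupling through the queue keeps the request path fast: producers only enqueue, and the slower check-and-notify work happens on the single consumer thread in FIFO order.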

Load balancing: finalAddress = loadBalance.xxlRpcInvokerRouter.route(serviceKey, addressSet);
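As an illustration of what a router behind route(serviceKey, addressSet) might do, here is a round-robin strategy with a separate counter per service key. The Router interface and RoundRobinRouter are hypothetical; XXL-RPC ships its own set of load-balancing strategies, which this sketch does not reproduce.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical router contract, shaped like route(serviceKey, addressSet).
interface Router {
    String route(String serviceKey, TreeSet<String> addressSet);
}

class RoundRobinRouter implements Router {
    // One counter per service so different services rotate independently.
    private final Map<String, AtomicInteger> counters = new ConcurrentHashMap<>();

    @Override
    public String route(String serviceKey, TreeSet<String> addressSet) {
        if (addressSet == null || addressSet.isEmpty()) {
            throw new IllegalStateException("no provider available for " + serviceKey);
        }
        List<String> addresses = new ArrayList<>(addressSet);  // TreeSet gives a stable, sorted order
        int n = counters.computeIfAbsent(serviceKey, k -> new AtomicInteger()).getAndIncrement();
        return addresses.get(Math.floorMod(n, addresses.size()));  // floorMod stays non-negative after int overflow
    }
}
```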

Sending the request: clientInstance.asyncSend(finalAddress, xxlRpcRequest);
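An asynchronous send only writes the request; the caller still needs a way to match the response that arrives later on a network thread. A common approach, sketched here under the assumption that each request carries a unique requestId, is a map from requestId to a CompletableFuture. PendingRequests and Transport are hypothetical names.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical transport: fire-and-forget write of a request to an address.
interface Transport {
    void send(String address, String requestId, Object payload);
}

class PendingRequests {
    private final Map<String, CompletableFuture<Object>> futures = new ConcurrentHashMap<>();

    // Register the future first, then write, so a very fast response cannot arrive before the entry exists.
    CompletableFuture<Object> asyncSend(String address, Object payload, Transport transport) {
        String requestId = UUID.randomUUID().toString();
        CompletableFuture<Object> future = new CompletableFuture<>();
        futures.put(requestId, future);
        try {
            transport.send(address, requestId, payload);
        } catch (RuntimeException e) {
            futures.remove(requestId);
            future.completeExceptionally(e);
        }
        return future;
    }

    // Invoked by the network layer when a response for requestId comes back.
    void onResponse(String requestId, Object result) {
        CompletableFuture<Object> future = futures.remove(requestId);
        if (future != null) {  // unknown or already-abandoned ids are ignored
            future.complete(result);
        }
    }

    int pendingCount() {
        return futures.size();
    }
}
```

A synchronous call can be layered on top by blocking on future.get(timeout, TimeUnit.MILLISECONDS).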

Serialization: byte[] requestBytes = serializer.serialize(xxlRpcRequest);
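Serialization turns the request object into bytes before it goes onto the wire, and the peer turns them back. The sketch below uses JDK built-in serialization purely as a stand-in; XXL-RPC plugs in its own Serializer implementations, and JdkSerializer and DemoRequest are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

// Hypothetical request message; JDK serialization requires Serializable.
class DemoRequest implements Serializable {
    private static final long serialVersionUID = 1L;
    final String requestId;
    final String methodName;
    final Object[] parameters;

    DemoRequest(String requestId, String methodName, Object[] parameters) {
        this.requestId = requestId;
        this.methodName = methodName;
        this.parameters = parameters;
    }
}

// Hypothetical serializer using java.io object streams.
class JdkSerializer {
    byte[] serialize(Object obj) {
        try (ByteArrayOutputStream bytes = new ByteArrayOutputStream();
             ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(obj);
            out.flush();  // push buffered object data into the byte array before reading it
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    <T> T deserialize(byte[] data, Class<T> clazz) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return clazz.cast(in.readObject());
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("deserialize failed", e);
        }
    }
}
```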

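Registry high availability: the simplest approach is to put the registry behind an Nginx proxy and configure each service with the Nginx address as its registry address.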

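Running without a registry: the address parameter of the @XxlRpcReference annotation sets the remote service address in ip:port format. It is optional: when non-empty, that address takes precedence; when empty, the address is discovered through the registry.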
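The address precedence rule can be written as a small helper: an explicit ip:port wins, otherwise the registry is asked. AddressResolver.resolve and its parameters are hypothetical, not part of XXL-RPC's API.

```java
import java.util.Set;
import java.util.TreeSet;
import java.util.function.Function;

class AddressResolver {
    // Hypothetical helper: explicit address first, registry discovery otherwise.
    static TreeSet<String> resolve(String referenceAddress, String serviceKey,
                                   Function<String, Set<String>> discovery) {
        if (referenceAddress != null && !referenceAddress.trim().isEmpty()) {
            TreeSet<String> direct = new TreeSet<>();
            direct.add(referenceAddress.trim());
            return direct;  // direct connection; the registry is bypassed entirely
        }
        Set<String> discovered = discovery.apply(serviceKey);
        return discovered == null ? new TreeSet<>() : new TreeSet<>(discovered);
    }
}
```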

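Spring post-processor: XXL-RPC supplies proxy objects that encapsulate the low-level communication details, so business callers never issue the network requests themselves. (Dubbo builds its proxies with Spring's FactoryBean; XXL-RPC uses Spring's postProcessAfterInstantiation hook.)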
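Stripped of Spring, the core of such a post-processor is: walk the bean's fields, find the annotated ones, and inject a dynamic proxy. In Spring this logic would sit in InstantiationAwareBeanPostProcessor#postProcessAfterInstantiation(Object bean, String beanName). The @RpcRef annotation (playing the role of @XxlRpcReference), RemoteInvoker, ReferenceInjector, GreetingService and OrderController are hypothetical.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;

// Hypothetical stand-in for @XxlRpcReference.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@interface RpcRef {
    String address() default "";
}

// Hypothetical hook that performs the actual remote call.
interface RemoteInvoker {
    Object invoke(String address, Method method, Object[] args);
}

// Hypothetical remote service and a business bean that depends on it.
interface GreetingService {
    String greet(String name);
}

class OrderController {
    @RpcRef(address = "127.0.0.1:7080")
    GreetingService greetingService;
}

class ReferenceInjector {
    // Walks the bean's class hierarchy and injects a proxy into every @RpcRef field.
    static void inject(Object bean, RemoteInvoker invoker) {
        for (Class<?> c = bean.getClass(); c != null && c != Object.class; c = c.getSuperclass()) {
            for (Field field : c.getDeclaredFields()) {
                RpcRef ref = field.getAnnotation(RpcRef.class);
                if (ref == null) {
                    continue;
                }
                Class<?> iface = field.getType();
                if (!iface.isInterface()) {
                    throw new IllegalStateException(field + " must be declared with an interface type");
                }
                Object proxy = Proxy.newProxyInstance(iface.getClassLoader(), new Class<?>[]{iface},
                        (p, method, args) -> invoker.invoke(ref.address(), method, args));
                try {
                    field.setAccessible(true);
                    field.set(bean, proxy);
                } catch (IllegalAccessException e) {
                    throw new IllegalStateException(e);
                }
            }
        }
    }
}
```

Field injection keeps business code unaware of RPC: OrderController just calls greetingService.greet(...), and only the injected proxy knows the call leaves the process.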

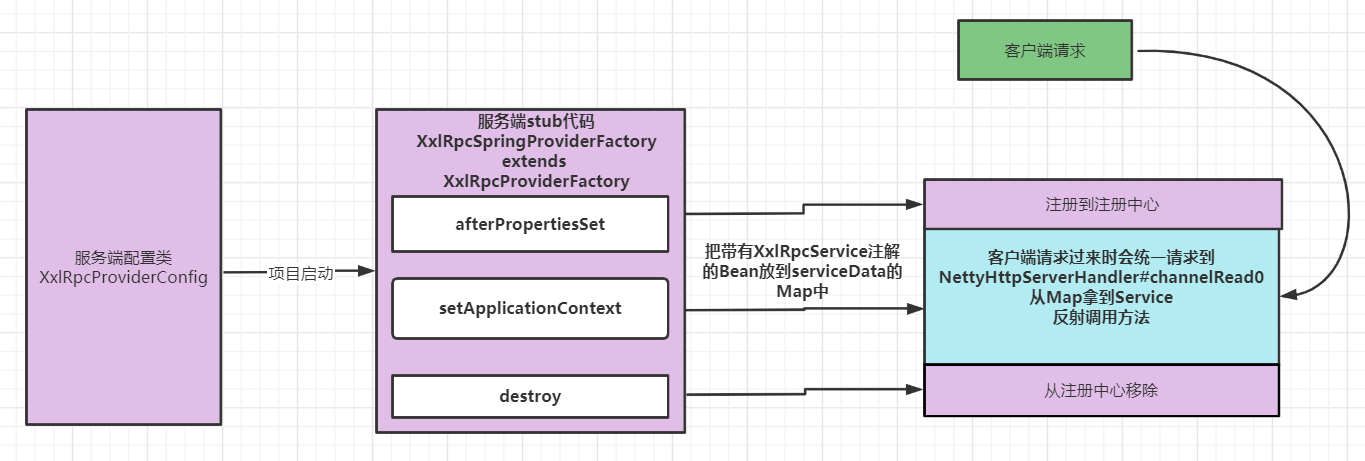

Server stub

Code locations for the reflective invocation (search globally in IDEA):

```java
// invoke + response
XxlRpcResponse xxlRpcResponse = xxlRpcProviderFactory.invokeService(xxlRpcRequest);

// response serialize
byte[] responseBytes = xxlRpcProviderFactory.getSerializerInstance().serialize(xxlRpcResponse);
```
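What an invokeService-style method has to do can be sketched as: look up the service bean by name, resolve the method from the request's method name and parameter types, invoke it reflectively, and wrap either the result or the error in a response. ProviderFactory, Request, Response, Calculator and CalculatorImpl are hypothetical; this is a simplified sketch, not XXL-RPC's implementation.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical request/response messages.
class Request {
    final String requestId;
    final String className;
    final String methodName;
    final Class<?>[] parameterTypes;
    final Object[] parameters;

    Request(String requestId, String className, String methodName, Class<?>[] parameterTypes, Object[] parameters) {
        this.requestId = requestId;
        this.className = className;
        this.methodName = methodName;
        this.parameterTypes = parameterTypes;
        this.parameters = parameters;
    }
}

class Response {
    String requestId;
    Object result;
    String errorMsg;  // null on success
}

// Hypothetical service and its implementation.
interface Calculator {
    int add(int a, int b);
}

class CalculatorImpl implements Calculator {
    public int add(int a, int b) {
        return a + b;
    }
}

class ProviderFactory {
    private final Map<String, Object> serviceData = new ConcurrentHashMap<>();

    <T> void addService(Class<T> iface, T serviceBean) {
        serviceData.put(iface.getName(), serviceBean);
    }

    Response invokeService(Request request) {
        Response response = new Response();
        response.requestId = request.requestId;  // lets the client match the response to its pending call

        Object serviceBean = serviceData.get(request.className);
        if (serviceBean == null) {
            response.errorMsg = "service not found: " + request.className;
            return response;
        }
        try {
            Method method = serviceBean.getClass().getMethod(request.methodName, request.parameterTypes);
            method.setAccessible(true);  // the implementation class may not be public
            response.result = method.invoke(serviceBean, request.parameters);
        } catch (InvocationTargetException e) {
            response.errorMsg = String.valueOf(e.getCause());  // exception thrown by the service method itself
        } catch (ReflectiveOperationException e) {
            response.errorMsg = e.toString();  // no such method, access problem, etc.
        }
        return response;
    }
}
```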

References

Source code: https://gitee.com/xuxueli0323/xxl-rpc?_from=gitee_search

Understanding stubs: https://www.jianshu.com/p/9ccdea882688

How XXL-RPC uses Netty

Client: NettyConnectClient

```java
package com.xxl.rpc.core.remoting.net.impl.netty.client;

import com.xxl.rpc.core.remoting.invoker.XxlRpcInvokerFactory;
import com.xxl.rpc.core.remoting.net.common.ConnectClient;
import com.xxl.rpc.core.remoting.net.impl.netty.codec.NettyDecoder;
import com.xxl.rpc.core.remoting.net.impl.netty.codec.NettyEncoder;
import com.xxl.rpc.core.remoting.net.impl.netty_http.client.NettyHttpConnectClient;
import com.xxl.rpc.core.remoting.net.params.BaseCallback;
import com.xxl.rpc.core.remoting.net.params.Beat;
import com.xxl.rpc.core.remoting.net.params.XxlRpcRequest;
import com.xxl.rpc.core.remoting.net.params.XxlRpcResponse;
import com.xxl.rpc.core.serialize.Serializer;
import com.xxl.rpc.core.util.IpUtil;
import io.netty.bootstrap.Bootstrap;
import io.netty.channel.Channel;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;
import io.netty.handler.timeout.IdleStateHandler;

import java.util.concurrent.TimeUnit;

/**
 * netty pooled client
 *
 * @author xuxueli
 */
public class NettyConnectClient extends ConnectClient {

    private static NioEventLoopGroup nioEventLoopGroup;
    private Channel channel;

    @Override
    public void init(String address, final Serializer serializer, final XxlRpcInvokerFactory xxlRpcInvokerFactory) throws Exception {
        // address
        Object[] array = IpUtil.parseIpPort(address);
        String host = (String) array[0];
        int port = (int) array[1];

        // group
        // Note: nioEventLoopGroup is not volatile, so this double-checked lock is not strictly
        // safe under the Java memory model; the lock is also taken on NettyHttpConnectClient.class.
        if (nioEventLoopGroup == null) {
            synchronized (NettyHttpConnectClient.class) {
                if (nioEventLoopGroup == null) {
                    nioEventLoopGroup = new NioEventLoopGroup();
                    xxlRpcInvokerFactory.addStopCallBack(new BaseCallback() {
                        @Override
                        public void run() throws Exception {
                            nioEventLoopGroup.shutdownGracefully();
                        }
                    });
                }
            }
        }

        // init
        final NettyConnectClient thisClient = this;
        Bootstrap bootstrap = new Bootstrap();
        bootstrap.group(nioEventLoopGroup)
                .channel(NioSocketChannel.class)
                .handler(new ChannelInitializer<SocketChannel>() {
                    @Override
                    public void initChannel(SocketChannel channel) throws Exception {
                        channel.pipeline()
                                .addLast(new IdleStateHandler(0, 0, Beat.BEAT_INTERVAL, TimeUnit.SECONDS))   // beat N, close if fail
                                .addLast(new NettyEncoder(XxlRpcRequest.class, serializer))
                                .addLast(new NettyDecoder(XxlRpcResponse.class, serializer))
                                .addLast(new NettyClientHandler(xxlRpcInvokerFactory, thisClient));
                    }
                })
                .option(ChannelOption.TCP_NODELAY, true)
                .option(ChannelOption.SO_KEEPALIVE, true)
                .option(ChannelOption.CONNECT_TIMEOUT_MILLIS, 10000);
        this.channel = bootstrap.connect(host, port).sync().channel();

        // valid
        if (!isValidate()) {
            close();
            return;
        }

        logger.debug(">>>>>>>>>>> xxl-rpc netty client proxy, connect to server success at host:{}, port:{}", host, port);
    }

    @Override
    public boolean isValidate() {
        if (this.channel != null) {
            return this.channel.isActive();
        }
        return false;
    }

    @Override
    public void close() {
        if (this.channel != null && this.channel.isActive()) {
            this.channel.close();        // if this.channel.isOpen()
        }
        logger.debug(">>>>>>>>>>> xxl-rpc netty client close.");
    }

    @Override
    public void send(XxlRpcRequest xxlRpcRequest) throws Exception {
        this.channel.writeAndFlush(xxlRpcRequest).sync();
    }
}
```
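The NettyEncoder/NettyDecoder pair is needed because TCP is a byte stream: one write can arrive split, or merged with the next. A common fix, assumed here, is a 4-byte length prefix per message. The sketch shows that framing on a plain java.nio.ByteBuffer, including the "not enough bytes yet, rewind and wait" case; LengthFieldCodec is hypothetical and is not claimed to match XXL-RPC's exact wire format.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

class LengthFieldCodec {
    // Encode: 4-byte big-endian length followed by the payload bytes.
    static byte[] encode(byte[] payload) {
        return ByteBuffer.allocate(4 + payload.length).putInt(payload.length).put(payload).array();
    }

    // Decode every complete frame currently in the buffer; a trailing partial frame is left unread.
    static List<byte[]> decode(ByteBuffer in) {
        List<byte[]> frames = new ArrayList<>();
        while (in.remaining() >= 4) {
            in.mark();
            int length = in.getInt();
            if (length < 0) {
                throw new IllegalStateException("negative frame length: " + length);
            }
            if (in.remaining() < length) {
                in.reset();  // rewind to the length field and wait for more bytes
                break;
            }
            byte[] frame = new byte[length];
            in.get(frame);
            frames.add(frame);
        }
        return frames;
    }
}
```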

Server: NettyServer

```java
package com.xxl.rpc.core.remoting.net.impl.netty.server;

import com.xxl.rpc.core.remoting.net.Server;
import com.xxl.rpc.core.remoting.net.impl.netty.codec.NettyDecoder;
import com.xxl.rpc.core.remoting.net.impl.netty.codec.NettyEncoder;
import com.xxl.rpc.core.remoting.net.params.Beat;
import com.xxl.rpc.core.remoting.net.params.XxlRpcRequest;
import com.xxl.rpc.core.remoting.net.params.XxlRpcResponse;
import com.xxl.rpc.core.remoting.provider.XxlRpcProviderFactory;
import com.xxl.rpc.core.util.ThreadPoolUtil;
import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.timeout.IdleStateHandler;

import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/**
 * netty rpc server
 *
 * @author xuxueli 2015-10-29 18:17:14
 */
public class NettyServer extends Server {

    private Thread thread;

    @Override
    public void start(final XxlRpcProviderFactory xxlRpcProviderFactory) throws Exception {

        thread = new Thread(new Runnable() {
            @Override
            public void run() {

                // param
                final ThreadPoolExecutor serverHandlerPool = ThreadPoolUtil.makeServerThreadPool(
                        NettyServer.class.getSimpleName(),
                        xxlRpcProviderFactory.getCorePoolSize(),
                        xxlRpcProviderFactory.getMaxPoolSize());
                EventLoopGroup bossGroup = new NioEventLoopGroup();
                EventLoopGroup workerGroup = new NioEventLoopGroup();

                try {
                    // start server
                    ServerBootstrap bootstrap = new ServerBootstrap();
                    bootstrap.group(bossGroup, workerGroup)
                            .channel(NioServerSocketChannel.class)
                            .childHandler(new ChannelInitializer<SocketChannel>() {
                                @Override
                                public void initChannel(SocketChannel channel) throws Exception {
                                    channel.pipeline()
                                            .addLast(new IdleStateHandler(0, 0, Beat.BEAT_INTERVAL * 3, TimeUnit.SECONDS))  // beat 3N, close if idle
                                            .addLast(new NettyDecoder(XxlRpcRequest.class, xxlRpcProviderFactory.getSerializerInstance()))
                                            .addLast(new NettyEncoder(XxlRpcResponse.class, xxlRpcProviderFactory.getSerializerInstance()))
                                            .addLast(new NettyServerHandler(xxlRpcProviderFactory, serverHandlerPool));
                                }
                            })
                            .childOption(ChannelOption.TCP_NODELAY, true)
                            .childOption(ChannelOption.SO_KEEPALIVE, true);

                    // bind
                    ChannelFuture future = bootstrap.bind(xxlRpcProviderFactory.getPort()).sync();

                    logger.info(">>>>>>>>>>> xxl-rpc remoting server start success, nettype = {}, port = {}", NettyServer.class.getName(), xxlRpcProviderFactory.getPort());
                    onStarted();

                    // wait util stop
                    future.channel().closeFuture().sync();

                } catch (Exception e) {
                    if (e instanceof InterruptedException) {
                        logger.info(">>>>>>>>>>> xxl-rpc remoting server stop.");
                    } else {
                        logger.error(">>>>>>>>>>> xxl-rpc remoting server error.", e);
                    }
                } finally {

                    // stop
                    try {
                        serverHandlerPool.shutdown();    // shutdownNow
                    } catch (Exception e) {
                        logger.error(e.getMessage(), e);
                    }
                    try {
                        workerGroup.shutdownGracefully();
                        bossGroup.shutdownGracefully();
                    } catch (Exception e) {
                        logger.error(e.getMessage(), e);
                    }

                }
            }
        });
        thread.setDaemon(true);
        thread.start();
    }

    @Override
    public void stop() throws Exception {

        // destroy server thread
        if (thread != null && thread.isAlive()) {
            thread.interrupt();
        }

        // on stop
        onStoped();
        logger.info(">>>>>>>>>>> xxl-rpc remoting server destroy success.");
    }

}
```
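NettyServerHandler receives serverHandlerPool, which suggests that service invocation runs on a business thread pool rather than on Netty's I/O threads, so one slow service method does not stall every other connection on that event loop. The sketch shows a bounded pool that fails fast when saturated; ServerHandlerPool.make and its parameters (including the queue capacity) are hypothetical and not taken from ThreadPoolUtil.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical factory for a bounded business pool.
class ServerHandlerPool {
    static ThreadPoolExecutor make(String name, int corePoolSize, int maxPoolSize, int queueCapacity) {
        return new ThreadPoolExecutor(
                corePoolSize,
                maxPoolSize,
                60L, TimeUnit.SECONDS,                        // idle non-core threads exit after 60 s
                new LinkedBlockingQueue<>(queueCapacity),    // bounded, so overload becomes visible
                r -> {
                    Thread t = new Thread(r, "rpc-" + name + "-worker");
                    t.setDaemon(true);
                    return t;
                },
                (r, executor) -> {
                    // Fail fast; the caller can then answer with an error response instead of queuing forever.
                    throw new RejectedExecutionException(name + " business pool is full");
                });
    }
}
```

Inside a channel handler, each decoded request would then be submitted as serverHandlerPool.execute(() -> { invoke the service; write the response; }), leaving the I/O thread free to keep reading.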