Building Highly Available Shared Storage on CentOS 7 with keepalived + DRBD + NFS

I. Server information

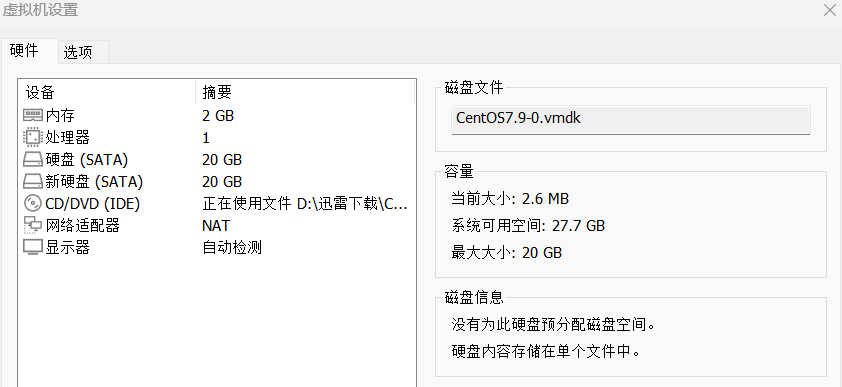

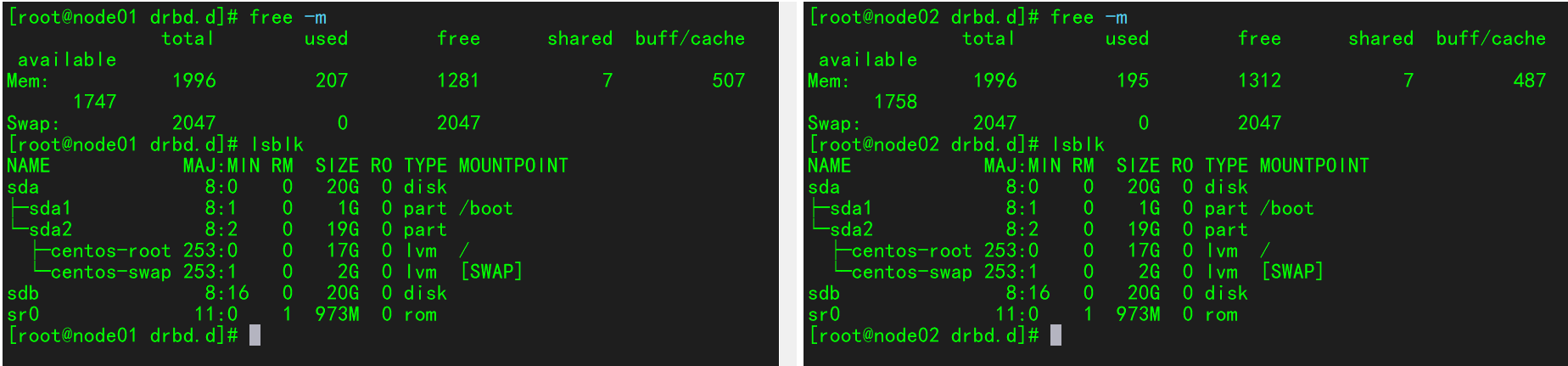

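| IP address | Role | Hostname | Operating system | Memory | Disks |
| --- | --- | --- | --- | --- | --- |
| 192.168.11.110 | Primary server | node01 | CentOS 7.9 | 2 GB | 20 GB system disk, 20 GB storage disk |
| 192.168.11.111 | Backup server | node02 | CentOS 7.9 | 2 GB | 20 GB system disk, 20 GB storage disk |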

II. Stop the firewall and disable SELinux on both hosts

[root@node01 ~]# systemctl stop firewalld

[root@node01 ~]# systemctl is-active firewalld.service

unknown

[root@node01 ~]# iptables -F

[root@node01 ~]# sed -i '/^SELINUX=/ cSELINUX=disabled' /etc/selinux/config

[root@node01 ~]# setenforce 0
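Note that `systemctl stop` only lasts until the next reboot, whereas `systemctl disable` keeps firewalld from starting at boot. The following is a minimal sketch of how the two settings could be made persistent and checked. The function names `disable_firewalld` and `selinux_configured_mode` are hypothetical helpers and not part of any package.

```shell
#!/bin/bash
# Hypothetical helpers (names are illustrative, not from the article).

# Stop firewalld now and prevent it from starting at the next boot.
disable_firewalld() {
    systemctl stop firewalld
    systemctl disable firewalld
}

# Print the SELINUX= value stored in the given config file
# (defaults to /etc/selinux/config), for example "disabled".
selinux_configured_mode() {
    sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}"
}
```

After running the `sed` command from the article, `selinux_configured_mode` should print `disabled`. The setting only takes full effect after a reboot, while `setenforce 0` switches the running system to permissive mode immediately.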

III. Configure the hosts file on both hosts and set up SSH trust between them

[root@node01 ~]# vim /etc/hosts

[root@node01 ~]# tail -2 /etc/hosts

192.168.11.110 node01

192.168.11.111 node02
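Instead of editing the file by hand in `vim`, the two entries could be appended with a small script that is safe to run more than once. This is only a sketch. `add_host_entry` is a hypothetical helper name, and the duplicate check assumes one hostname per entry.

```shell
#!/bin/bash
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless NAME already appears as a hostname in it.
# (Hypothetical helper; not part of the original article.)
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

On each node this would be called as `add_host_entry /etc/hosts 192.168.11.110 node01` and `add_host_entry /etc/hosts 192.168.11.111 node02`.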

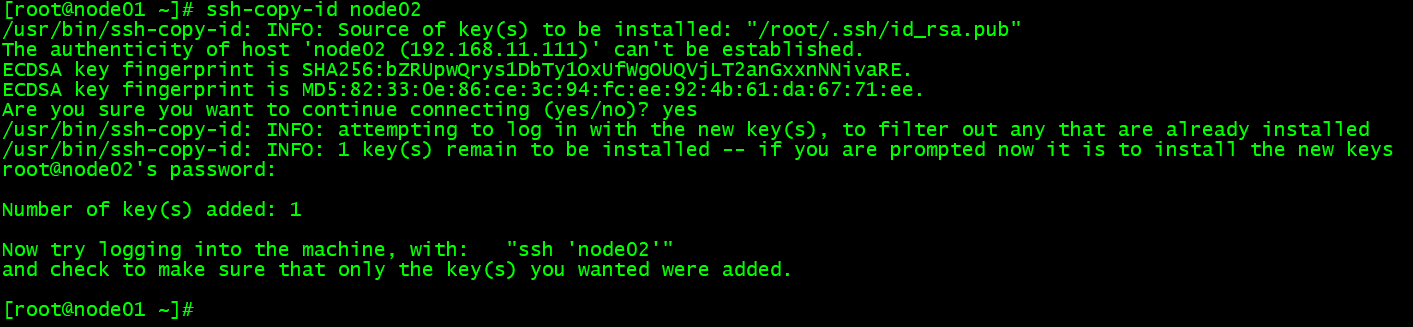

# Set up passwordless SSH trust for the root user

# Primary server

[root@node01 ~]# ssh-keygen -f ~/.ssh/id_rsa -P '' -q

[root@node01 ~]# ssh-copy-id node02

# Backup server

[root@node02 ~]# ssh-keygen -f ~/.ssh/id_rsa -P '' -q

[root@node02 ~]# ssh-copy-id node01

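IV. Configure the DRBD yum repository on both hosts to simplify package installation

Perform the following steps on both hosts:

Import the repository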

[root@node01 ~]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

[root@node01 ~]# yum localinstall -y https://mirrors.tuna.tsinghua.edu.cn/elrepo/elrepo/el7/x86_64/RPMS/elrepo-release-7.0-6.el7.elrepo.noarch.rpm

[root@node01 ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

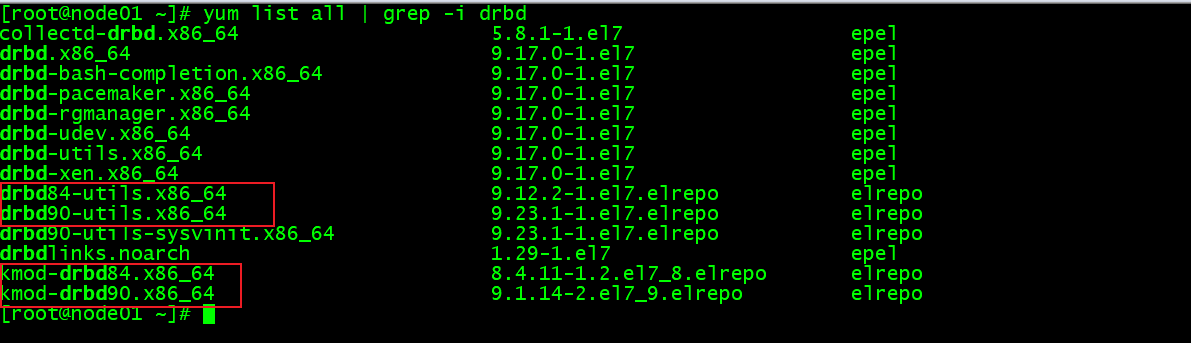

Install the DRBD packages

Version information

Install version 9.0 (the drbd90 packages)

[root@node01 ~]# yum install -y drbd90-utils kmod-drbd90

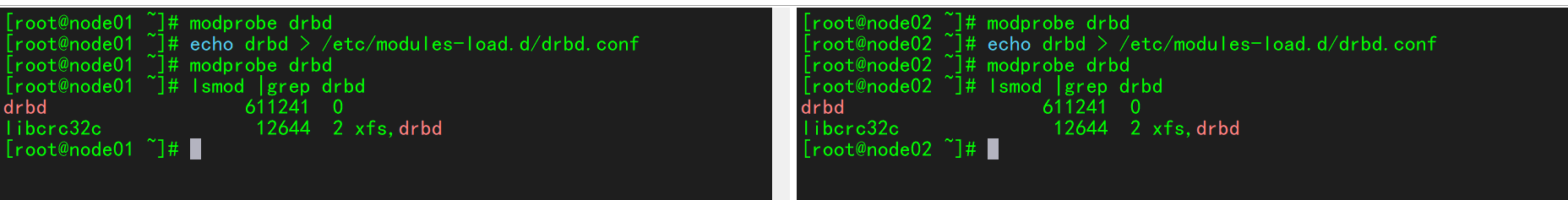

Load the DRBD kernel module

[root@node01 ~]# modprobe drbd

[root@node01 ~]# echo drbd > /etc/modules-load.d/drbd.conf

[root@node01 ~]# modprobe drbd

[root@node01 ~]# lsmod |grep drbd

drbd 611241 0

libcrc32c 12644 2 xfs,drbd

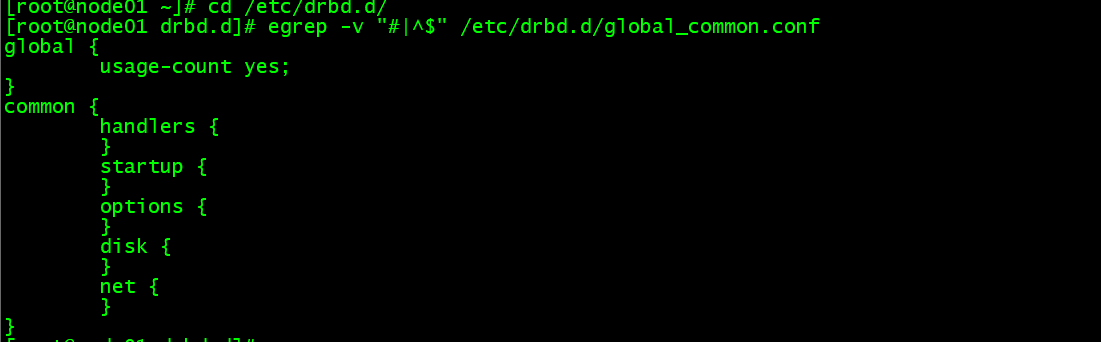

V. Configure DRBD

[root@node01 ~]# cd /etc/drbd.d/

[root@node01 drbd.d]# egrep -v "#|^$" /etc/drbd.d/global_common.conf

[root@node01 drbd.d]# vim global_common.conf

global {

usage-count no;

}

common {

protocol C;

handlers {

pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

local-io-error "/usr/lib/drbd/notify-io-error.sh; /usr/lib/drbd/notify-emergency-shutdown.sh; echo o > /proc/sysrq-trigger ; halt -f";

}

startup {

}

options {

}

disk {

on-io-error detach;

}

net {

}

}

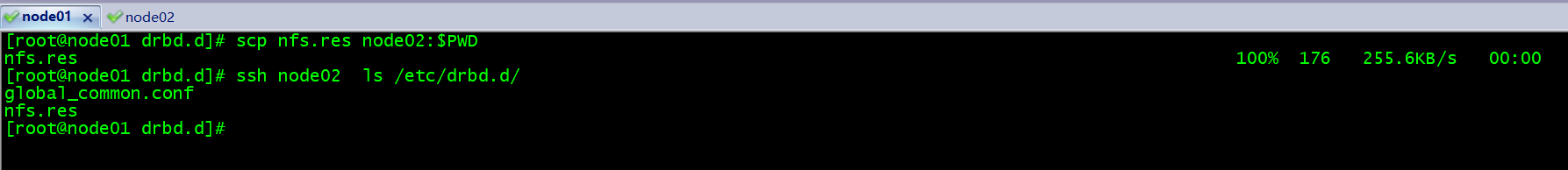

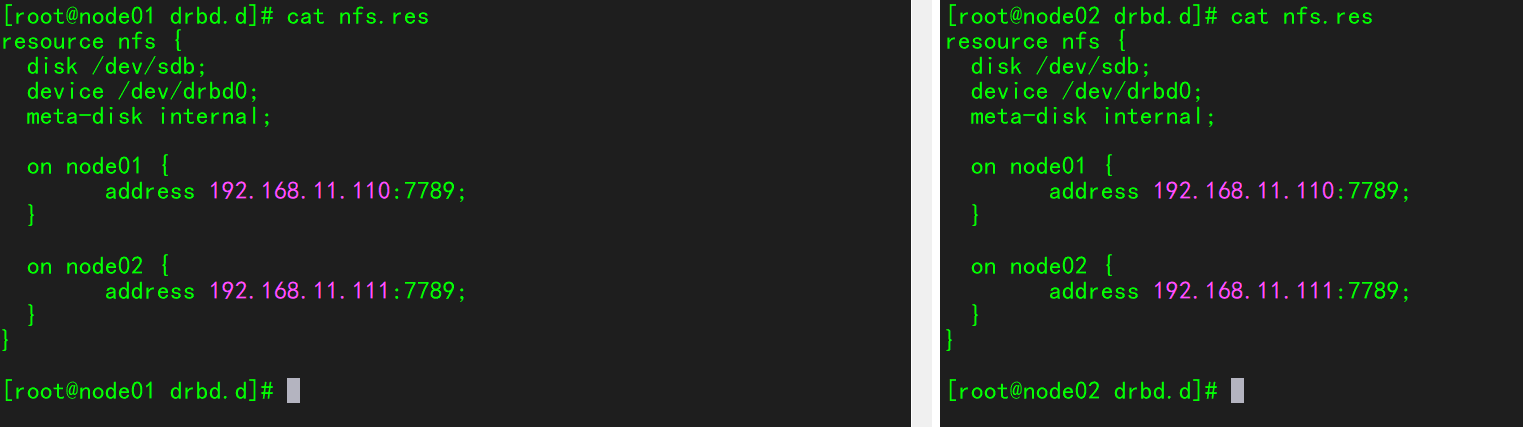

Configure the resource

[root@node01 drbd.d]# vim nfs.res

resource nfs {

disk /dev/sdb;

device /dev/drbd0;

meta-disk internal;

on node01 {

address 192.168.11.110:7789;

}

on node02 {

address 192.168.11.111:7789;

}

}

[root@node01 drbd.d]# scp nfs.res node02:$PWD

nfs.res 100% 176 255.6KB/s 00:00

[root@node01 drbd.d]# ssh node02 ls /etc/drbd.d/

global_common.conf

nfs.res

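VI. Enable DRBD

1. Enabling the resource for the first time

The DRBD tools support tab completion; log in to a new session for it to take effect.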

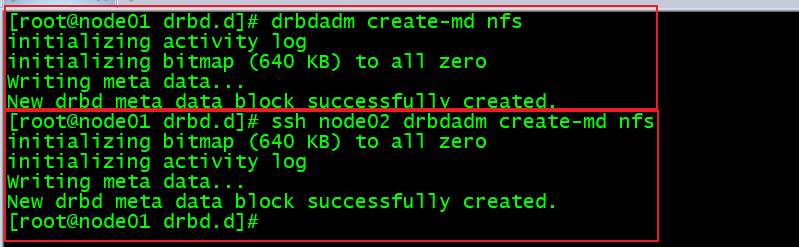

1> Create the device metadata

[root@node01 drbd.d]# drbdadm create-md nfs

initializing activity log

initializing bitmap (640 KB) to all zero

Writing meta data...

New drbd meta data block successfully created.

[root@node01 drbd.d]# ssh node02 drbdadm create-md nfs

initializing bitmap (640 KB) to all zero

initializing activity log

Writing meta data...

New drbd meta data block successfully created.

[root@node01 drbd.d]#

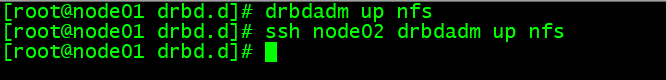

2> Bring up the resource

[root@node01 drbd.d]# drbdadm up nfs

[root@node01 drbd.d]# ssh node02 drbdadm up nfs

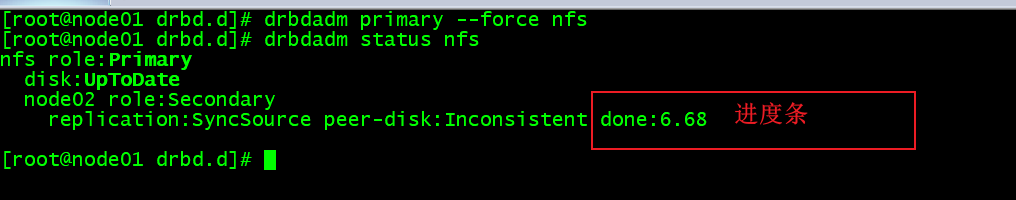

3> Initial device synchronization

Run this on the primary node only.

[root@node01 drbd.d]# drbdadm primary --force nfs

[root@node01 drbd.d]# drbdadm status nfs

nfs role:Primary

disk:UpToDate

node02 role:Secondary

replication:SyncSource peer-disk:Inconsistent done:6.68
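The status output above shows the peer disk as `Inconsistent` while the initial synchronization is still running. One way to wait for it to finish is to poll `drbdadm status` until the peer disk is reported as `UpToDate`. The sketch below assumes the DRBD 9 status format shown above. `wait_for_sync` is a hypothetical helper name.

```shell
#!/bin/bash
# wait_for_sync RESOURCE [INTERVAL_SECONDS]
# Poll "drbdadm status" until the peer disk is reported as UpToDate.
# (Hypothetical helper; assumes the DRBD 9 status format shown in the article.)
wait_for_sync() {
    local res="$1" interval="${2:-5}"
    until drbdadm status "$res" | grep -q 'peer-disk:UpToDate'; do
        sleep "$interval"
    done
    echo "resource $res is in sync"
}
```

Calling `wait_for_sync nfs` on the primary node blocks until the synchronization has completed. The loop has no timeout, so it would wait indefinitely if the peer never connects.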

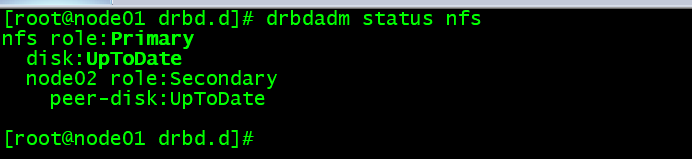

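After the initial synchronization completes

2. Common commands

drbdadm cstate nfs       # connection state

drbdadm dstate nfs       # disk state

drbdadm role nfs         # resource role

drbdadm primary nfs      # promote the resource

drbdadm secondary nfs    # demote the resource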

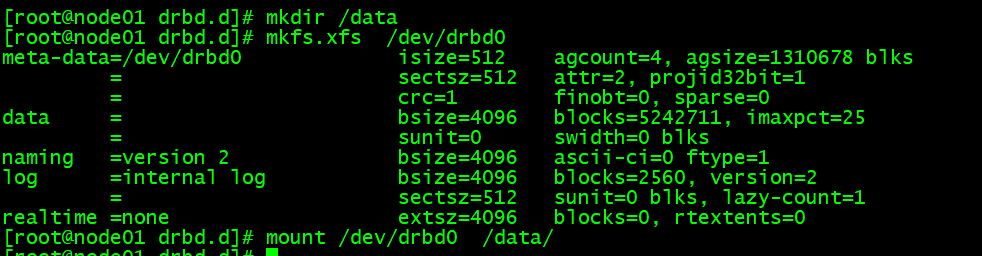

VII. Verify DRBD

Create the mount point on both nodes: mkdir /data

On the primary node:

[root@node01 drbd.d]# mkdir /data

[root@node01 drbd.d]# mkfs.xfs /dev/drbd0

meta-data=/dev/drbd0 isize=512 agcount=4, agsize=1310678 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=5242711, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@node01 drbd.d]# mount /dev/drbd0 /data/

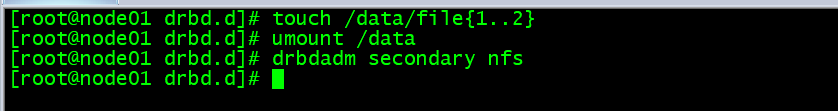

Create test files

[root@node01 drbd.d]# touch /data/file{1..2}

Unmount the file system and demote the node to secondary

[root@node01 drbd.d]# umount /data

[root@node01 drbd.d]# drbdadm secondary nfs

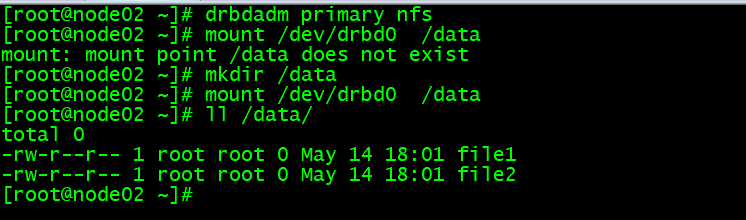

On the backup node, run the following commands to confirm that the files are present

[root@node02 ~]# drbdadm primary nfs

[root@node02 ~]# mkdir /data

[root@node02 ~]# mount /dev/drbd0 /data

[root@node02 ~]# ll /data/

total 0

-rw-r--r-- 1 root root 0 May 14 18:01 file1

-rw-r--r-- 1 root root 0 May 14 18:01 file2

Enable the service

[root@node01 ~]# systemctl enable --now drbd

Created symlink from /etc/systemd/system/multi-user.target.wants/drbd.service to /usr/lib/systemd/system/drbd.service.

[root@node02 ~]# systemctl enable --now drbd

Created symlink from /etc/systemd/system/multi-user.target.wants/drbd.service to /usr/lib/systemd/system/drbd.service.

The DRBD installation is now complete.

VIII. Build highly available storage with keepalived + DRBD + NFS

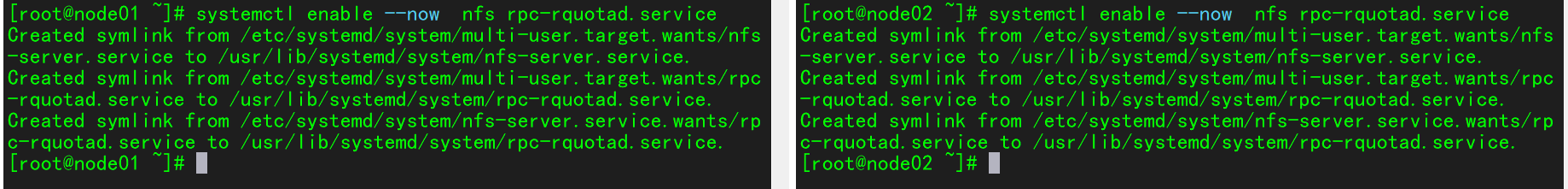

1. Install the NFS packages

[root@node01 ~]# yum install rpcbind.x86_64 nfs-utils.x86_64 -y

2. Configuration

[root@node01 ~]# vim /etc/exports

[root@node01 ~]# cat /etc/exports

/data 192.168.11.0/24(rw,sync,no_root_squash)

3. Start the service and enable it at boot

[root@node01 ~]# systemctl enable --now nfs rpc-rquotad.service

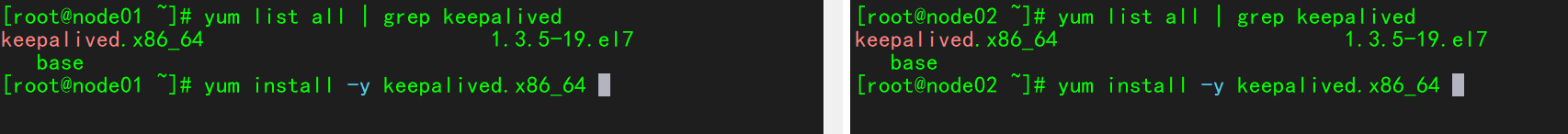

4. Install and configure keepalived

keepalived version available from the default repositories:

[root@node01 ~]# yum list all | grep keepalived

keepalived.x86_64 1.3.5-19.el7 base

Install keepalived on both machines

[root@node01 ~]# yum install -y keepalived.x86_64

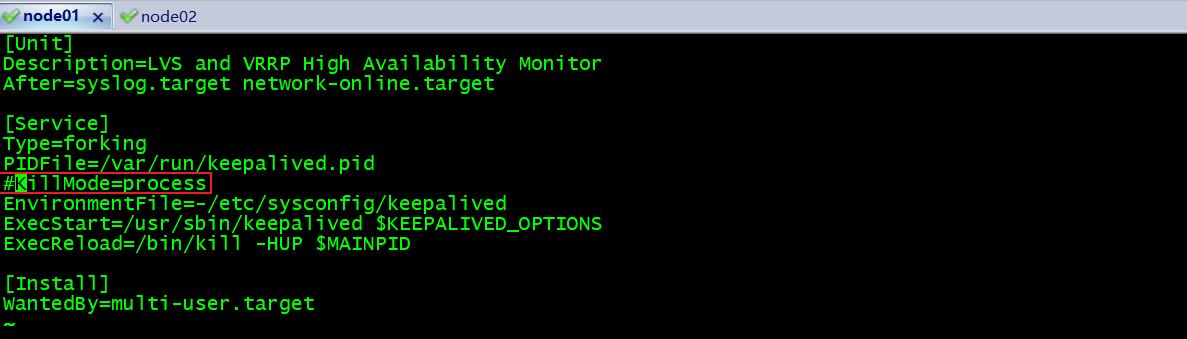

5. Modify the keepalived systemd unit file (comment out KillMode=process)

[root@node01 drbd.d]# vim /usr/lib/systemd/system/keepalived.service

[Unit]

Description=LVS and VRRP High Availability Monitor

After=syslog.target network-online.target

[Service]

Type=forking

PIDFile=/var/run/keepalived.pid

#KillMode=process

EnvironmentFile=-/etc/sysconfig/keepalived

ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS

ExecReload=/bin/kill -HUP $MAINPID

[Install]

WantedBy=multi-user.target
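Files under /usr/lib/systemd/system can be overwritten by package updates, and systemd only picks up an edited unit after `systemctl daemon-reload`. A drop-in file is one alternative that may achieve the same effect as commenting out `KillMode=process`, since `control-group` is the systemd default when `KillMode` is not set. The sketch below is an assumption about how that could look. `write_killmode_dropin` is a hypothetical helper name.

```shell
#!/bin/bash
# write_killmode_dropin [DIR]
# Write a drop-in that sets KillMode back to the systemd default
# (control-group) for keepalived.service.
# (Hypothetical helper; the article edits the unit file directly instead.)
write_killmode_dropin() {
    local dir="${1:-/etc/systemd/system/keepalived.service.d}"
    mkdir -p "$dir"
    printf '[Service]\nKillMode=control-group\n' > "$dir/override.conf"
}

# After writing the drop-in (or editing the unit file), reload systemd:
#   systemctl daemon-reload
```

Whichever approach is used, `systemctl daemon-reload` has to run before keepalived is started or restarted for the change to apply.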

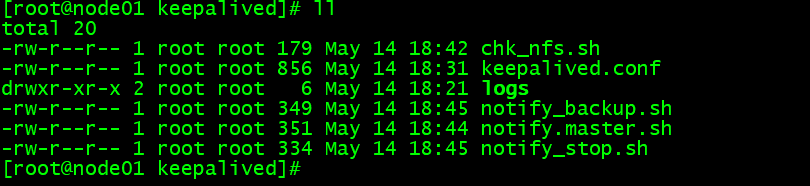

6. Create the logs directory on both machines: mkdir /etc/keepalived/logs

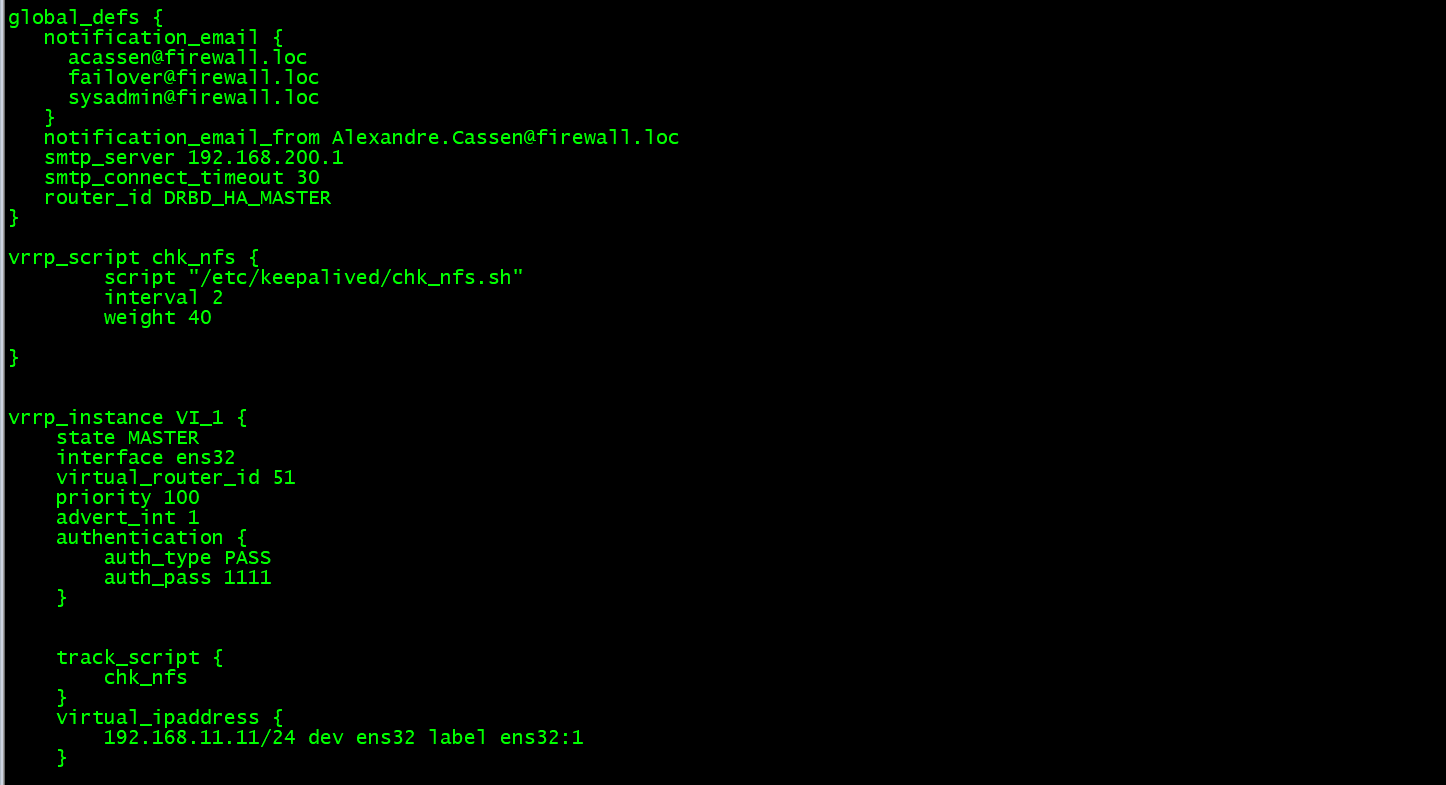

7. Primary node configuration:

[root@node01 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id DRBD_HA_MASTER

}

vrrp_script chk_nfs {

script "/etc/keepalived/chk_nfs.sh"

interval 2

weight 40

}

vrrp_instance VI_1 {

state MASTER

interface ens32

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

chk_nfs

}

notify_stop /etc/keepalived/notify_stop.sh

notify_master /etc/keepalived/notify_master.sh

notify_backup /etc/keepalived/notify_backup.sh

virtual_ipaddress {

192.168.11.11/24 dev ens32 label ens32:1

}

}

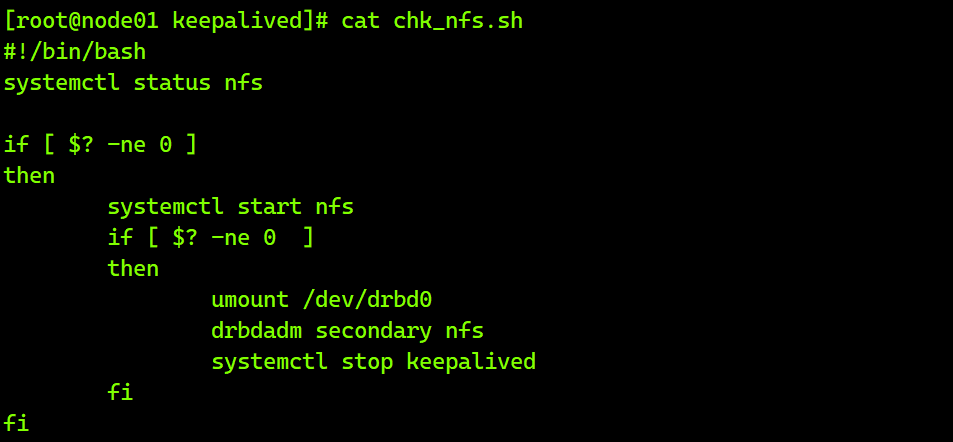

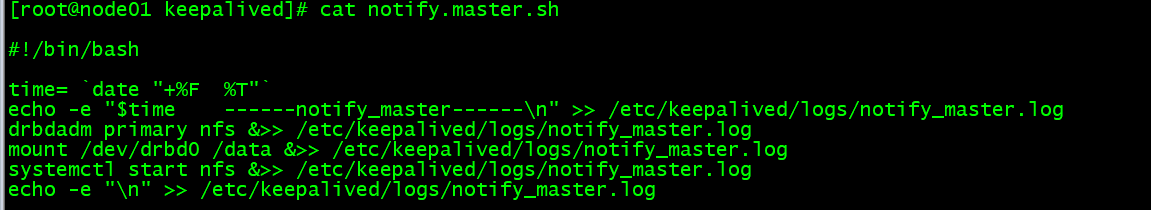

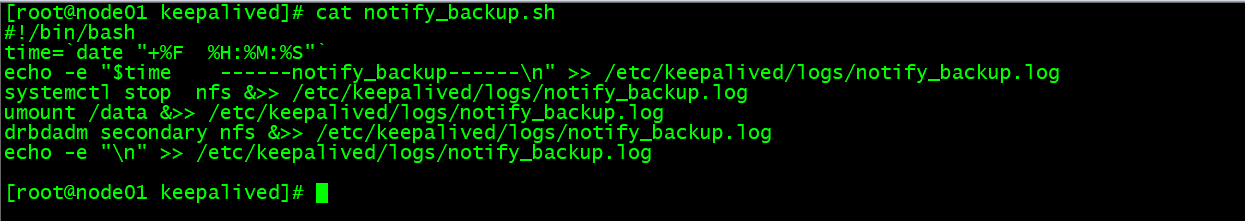

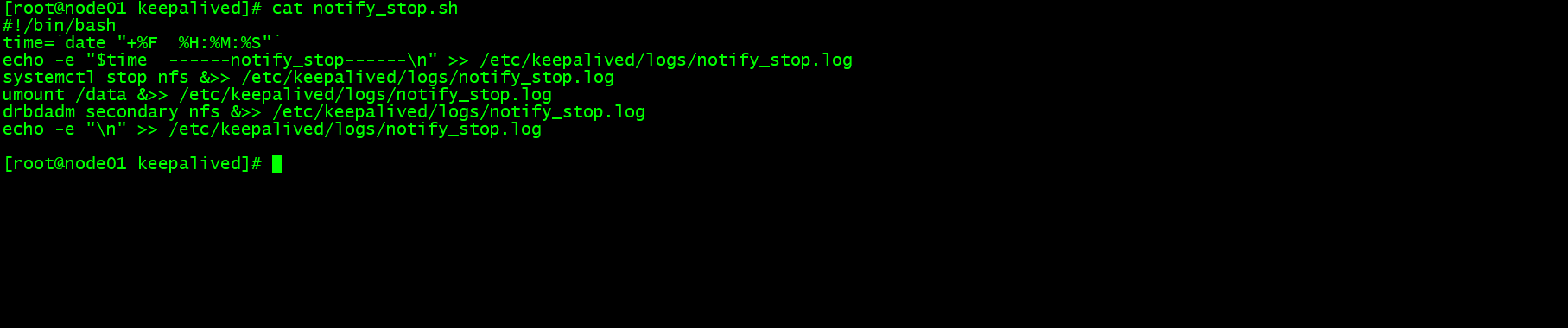

8. The shell scripts referenced in the configuration file

[root@node01 keepalived]# vim chk_nfs.sh

#!/bin/bash

systemctl status nfs

if [ $? -ne 0 ]

then

systemctl start nfs

if [ $? -ne 0 ]

then

umount /dev/drbd0

drbdadm secondary nfs

systemctl stop keepalived

fi

fi

Make the scripts executable: chmod +x *.sh
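The keepalived configuration also references notify_master.sh, notify_backup.sh and notify_stop.sh, whose contents are not reproduced in the text. The following is only a sketch of what such scripts might do, based on the manual switchover steps in section VII. The function names `become_master` and `become_backup` are hypothetical, and the ordering of the steps is an assumption.

```shell
#!/bin/bash
# Sketch only: the article's notify_*.sh scripts are not listed in the text.
# Resource name, device and mount point are taken from the article.
RES=nfs
DEV=/dev/drbd0
MNT=/data

# What notify_master.sh might do: promote DRBD, mount the device, start NFS.
# Each step runs only if the previous one succeeded.
become_master() {
    drbdadm primary "$RES" &&
    mount "$DEV" "$MNT" &&
    systemctl start nfs
}

# What notify_backup.sh and notify_stop.sh might do:
# stop NFS, unmount the device, demote DRBD to secondary.
become_backup() {
    systemctl stop nfs
    umount "$MNT"
    drbdadm secondary "$RES"
}
```

Under this assumption, notify_master.sh would contain these definitions followed by a call to `become_master`, and the other two scripts would call `become_backup`. The device has to be unmounted before `drbdadm secondary` can succeed, which is why the demotion comes last.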

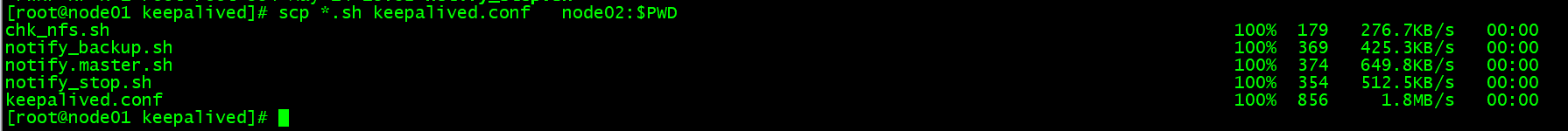

Copy the configuration file and the scripts to the backup node:

[root@node01 keepalived]# scp *.sh keepalived.conf node02:$PWD

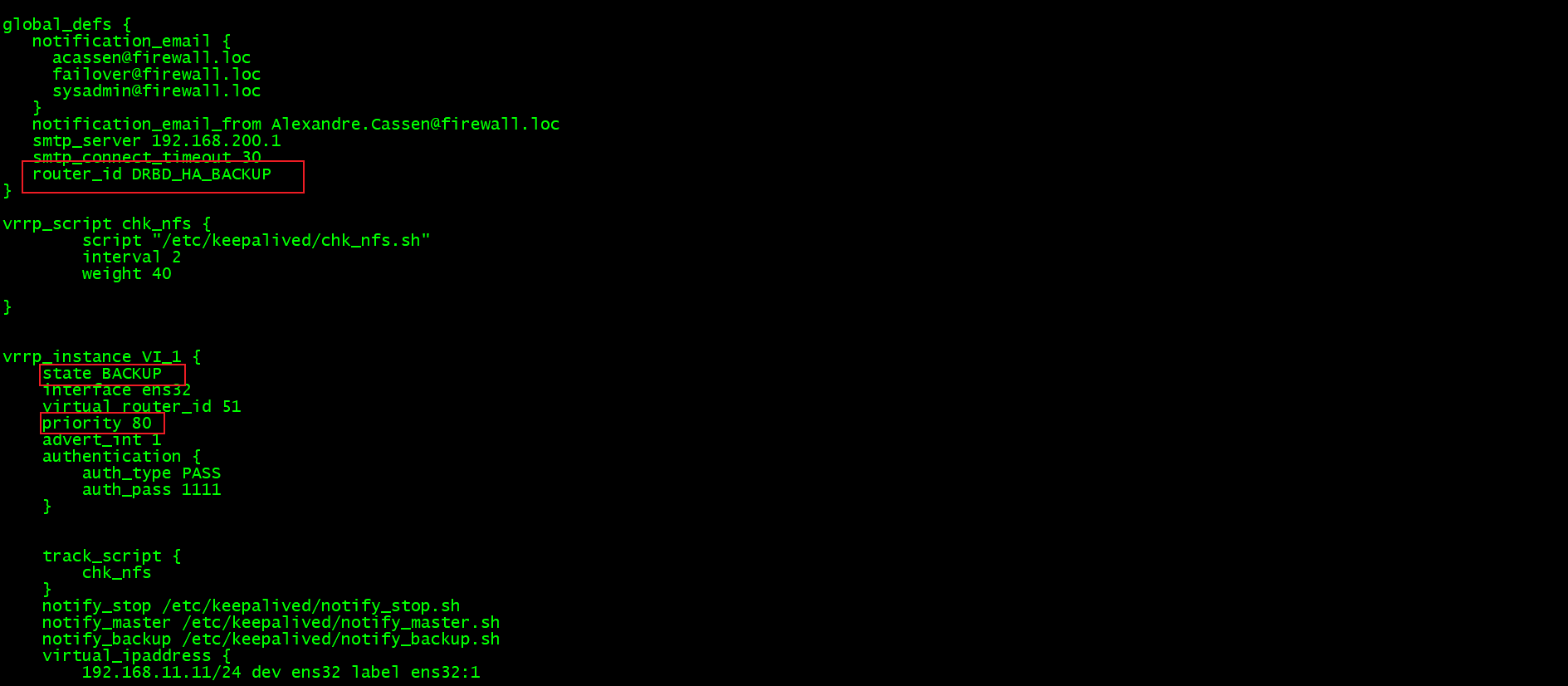

9. Modify the configuration file on the backup node:

[root@node02 keepalived]# vim keepalived.conf
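The exact changes made on the backup node are not listed in the text. In a typical keepalived pair the backup differs from the primary in `state`, `priority` and `router_id`, so the sketch below assumes those three changes. The priority value 90, the name `DRBD_HA_BACKUP` and the helper `make_backup_conf` are all assumptions for illustration.

```shell
#!/bin/bash
# make_backup_conf SRC DST [PRIORITY]
# Derive a backup-node keepalived.conf from the primary's file by changing
# state, priority and router_id. All other lines are copied unchanged.
# (Hypothetical helper; the values 90 and DRBD_HA_BACKUP are assumptions.)
make_backup_conf() {
    local src="$1" dst="$2" prio="${3:-90}"
    sed -e 's/^\([[:space:]]*state[[:space:]]\{1,\}\)MASTER/\1BACKUP/' \
        -e "s/^\([[:space:]]*priority[[:space:]]\{1,\}\)[0-9]\{1,\}/\1${prio}/" \
        -e 's/^\([[:space:]]*router_id[[:space:]]\{1,\}\)DRBD_HA_MASTER/\1DRBD_HA_BACKUP/' \
        "$src" > "$dst"
}
```

On node02 this could be used as `make_backup_conf keepalived.conf keepalived.conf.new` followed by a review of the result before replacing the original file. The backup priority has to stay lower than the primary's value of 100.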

10. Test:

Start keepalived and test

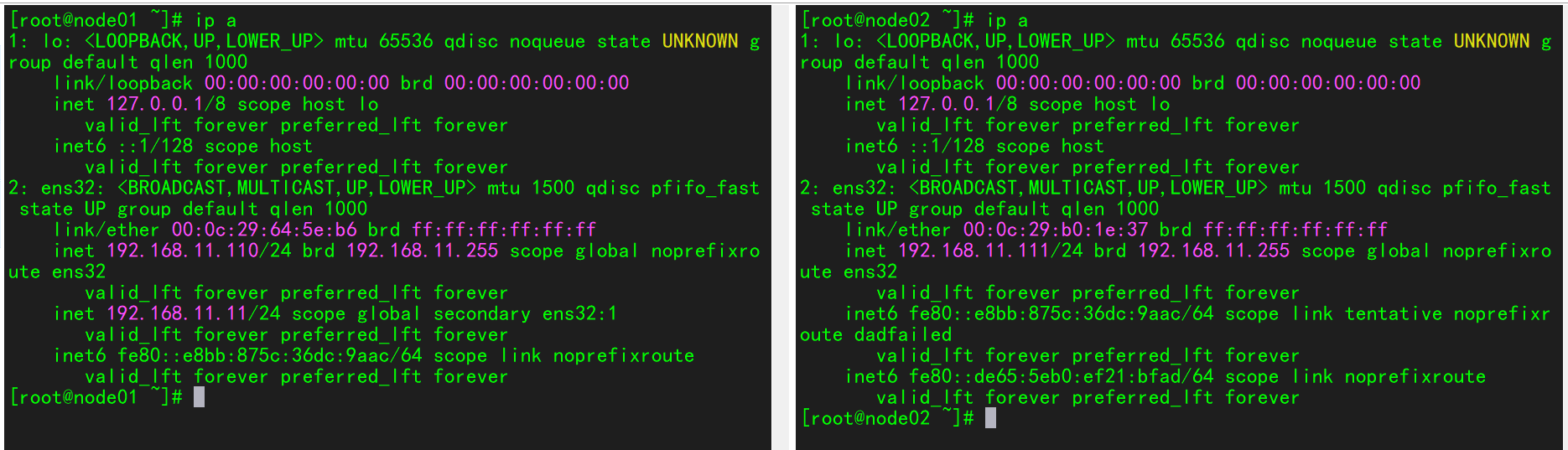

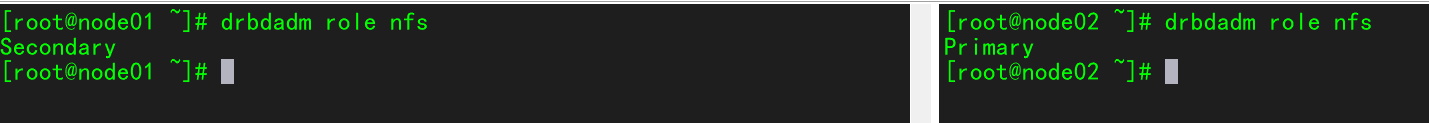

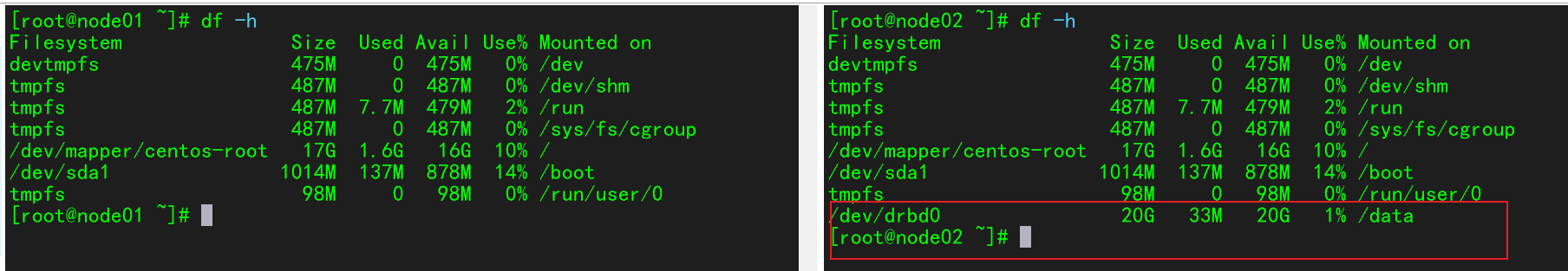

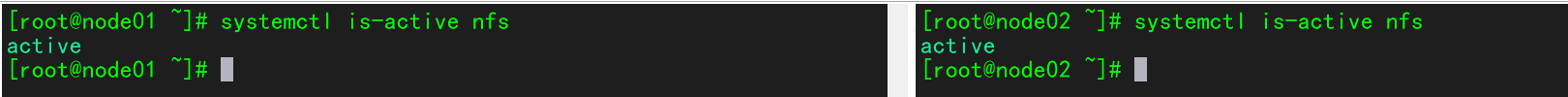

Verification (normal state):

1. Check the VIP

2. Check the resource role

3. Check the mount

4. Check NFS
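The four checks above could be collected into one small script, sketched below. The VIP 192.168.11.11, the resource name nfs and the mount point /data come from the configuration in this article. `verify_ha` is a hypothetical helper name, and the output wording is illustrative.

```shell
#!/bin/bash
# verify_ha [VIP] [RESOURCE] [MOUNTPOINT]
# Print one line for each of the four checks listed in the article.
# (Hypothetical helper; defaults are taken from the article's configuration.)
verify_ha() {
    local vip="${1:-192.168.11.11}" res="${2:-nfs}" mnt="${3:-/data}"

    # 1. Is the VIP bound to a local interface?
    if ip -4 addr show | grep -qF "inet ${vip}/"; then
        echo "VIP ${vip}: present"
    else
        echo "VIP ${vip}: absent"
    fi

    # 2. DRBD role of this node for the resource
    echo "DRBD role (${res}): $(drbdadm role "$res")"

    # 3. Is a file system mounted on the mount point?
    if mountpoint -q "$mnt"; then
        echo "${mnt}: mounted"
    else
        echo "${mnt}: not mounted"
    fi

    # 4. Is the NFS server running?
    if systemctl is-active --quiet nfs; then
        echo "NFS service: active"
    else
        echo "NFS service: inactive"
    fi
}
```

In the normal state, the node holding the VIP should also report the Primary role, a mounted /data and an active NFS service, while the other node should report the opposite for the VIP, the role and the mount.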

Author: ChAn
