Big Data Technology: Hadoop (MapReduce)

Chapter 1 MapReduce Overview

1.1 MapReduce Definition

1.2 MapReduce Advantages and Disadvantages

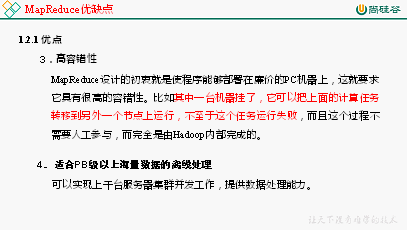

1.2.1 优点

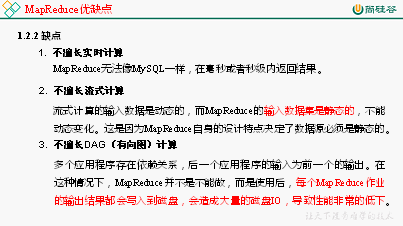

1.2.2 缺点

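1.3 Core Idea of MapReduce

The core programming idea of MapReduce is shown in Figure 4-1.

Figure 4-1 Core programming idea of MapReduce

1) A distributed computation usually has to be divided into at least two phases.

2) The concurrent MapTask instances of the first phase run fully in parallel and are independent of one another.

3) The concurrent ReduceTask instances of the second phase are also independent of one another, but their input depends on the output of all MapTask instances of the previous phase.

4) The MapReduce programming model allows only one Map phase and one Reduce phase per job. If the business logic is very complex, it has to be split into several MapReduce programs that run serially.

Summary: trace the data flow of WordCount to understand the core idea of MapReduce in depth.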
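The two phases can be sketched in plain Java without any Hadoop dependency. This is only an illustration of the data flow, not the framework's implementation: the class `WordCountFlowSketch` and its methods are hypothetical names, and the "shuffle" here is simply an in-memory sorted map.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class WordCountFlowSketch {

    // Map phase: each line is processed independently and yields (word, 1) pairs.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.split(" ")) {
            if (!word.isEmpty()) {
                pairs.add(new AbstractMap.SimpleEntry<>(word, 1));
            }
        }
        return pairs;
    }

    // Stand-in for the shuffle: group all values by key, with keys kept sorted.
    static TreeMap<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        TreeMap<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce phase: one call per key, summing the values of that key.
    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    // Runs map over every line, then shuffle, then reduce for every key.
    static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> mapOutput = new ArrayList<>();
        for (String line : lines) {
            mapOutput.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(mapOutput).entrySet()) {
            result.put(group.getKey(), reduce(group.getValue()));
        }
        return result;
    }
}
```

Note that `reduce` cannot start for a key until every `map` call has finished, which mirrors point 3) above.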

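1.4 MapReduce Processes

1.5 Official WordCount Source Code

Decompiling the official example with a decompiler shows that the WordCount example consists of a Map class, a Reduce class and a driver class, and that its data types are Hadoop's own serializable types.

1.6 Common Data Serialization Types

Table 4-1 Common Java data types and the corresponding Hadoop serialization types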

| Java type | Hadoop Writable type |
| --- | --- |
| boolean | BooleanWritable |
| byte | ByteWritable |
| int | IntWritable |
| float | FloatWritable |
| long | LongWritable |
| double | DoubleWritable |
| String | Text |
| map | MapWritable |
| array | ArrayWritable |

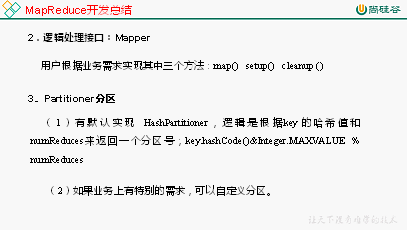

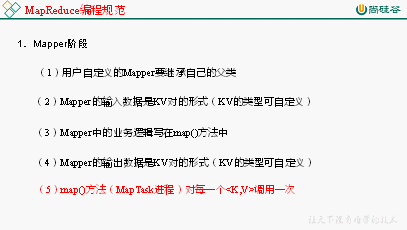

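1.7 MapReduce Programming Conventions

A user program consists of three parts: Mapper, Reducer and Driver.

1.8 WordCount Hands-on Example

1. Requirement

Count the total number of occurrences of each word in a given text file.

(1) Input data

(2) Expected output data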

atguigu 2

banzhang 1

cls 2

hadoop 1

jiao 1

ss 2

xue 1

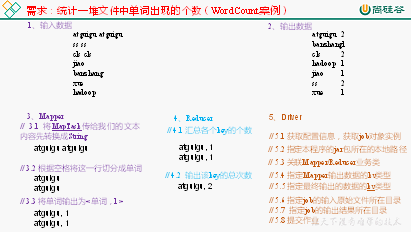

2.需求分析

按照MapReduce编程规范,分别编写Mapper,Reducer,Driver,如图4-2所示。

图4-2 需求分析

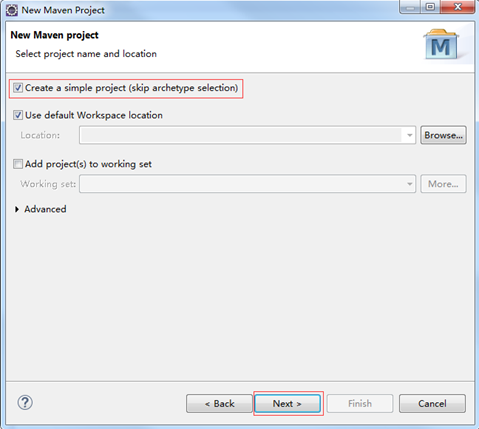

3.环境准备

(1)创建maven工程

(2)在pom.xml文件中添加如下依赖

<artifactId>junit</artifactId>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

(3) In the project's src/main/resources directory, create a file named "log4j.properties" with the following content.

    log4j.rootLogger=INFO, stdout
    log4j.appender.stdout=org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n
    log4j.appender.logfile=org.apache.log4j.FileAppender
    log4j.appender.logfile.File=target/spring.log
    log4j.appender.logfile.layout=org.apache.log4j.PatternLayout
    log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

    package com.atguigu.mapreduce.wordcount;

    import java.io.IOException;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class WordcountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        // Reused for every record to avoid allocating new objects per word
        Text k = new Text();
        IntWritable v = new IntWritable(1);

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {

            // 1 Read one line
            String line = value.toString();

            // 2 Split the line into words
            String[] words = line.split(" ");

            // 3 Emit (word, 1) for every word
            for (String word : words) {
                k.set(word);
                context.write(k, v);
            }
        }
    }

    package com.atguigu.mapreduce.wordcount;

    import java.io.IOException;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class WordcountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        int sum;
        IntWritable v = new IntWritable();

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {

            // 1 Sum the counts for this word
            sum = 0;
            for (IntWritable count : values) {
                sum += count.get();
            }

            // 2 Emit (word, total)
            v.set(sum);
            context.write(key, v);
        }
    }

    package com.atguigu.mapreduce.wordcount;

    import java.io.IOException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class WordcountDriver {

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {

            // 1 Get the configuration and create the job
            Configuration configuration = new Configuration();
            Job job = Job.getInstance(configuration);

            // 2 Set the jar by the driver class
            job.setJarByClass(WordcountDriver.class);

            // 3 Set the Mapper and Reducer classes
            job.setMapperClass(WordcountMapper.class);
            job.setReducerClass(WordcountReducer.class);

            // 4 Set the map output key/value types
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            // 5 Set the final output key/value types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // 6 Set the input and output paths
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // 7 Submit the job and wait for it to finish
            boolean result = job.waitForCompletion(true);

            System.exit(result ? 0 : 1);
        }
    }

Note: Windows 8 and Windows 10 Home may run into problems; in that case, recompile the source code or switch to a different operating system.

<artifactId>maven-compiler-plugin</artifactId>

<artifactId>maven-assembly-plugin </artifactId>

<descriptorRef>jar-with-dependencies</descriptorRef>

<mainClass>com.atguigu.mapreduce.wordcount.WordcountDriver</mainClass>

Note: if the project shows a red cross, right-click the project and choose Maven -> Update Project.

[atguigu@hadoop102 software]$ hadoop jar wc.jar com.atguigu.mapreduce.wordcount.WordcountDriver /user/atguigu/input /user/atguigu/output

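Chapter 2 Hadoop Serialization

2.1 Serialization Overview

2.2 Implementing the Serialization Interface (Writable) for a Custom Bean

In production development the commonly used basic serialization types often cannot meet every requirement. For example, to pass a bean object around inside the Hadoop framework, that object has to implement the serialization interface.

(2) Deserialization calls the no-argument constructor by reflection, so a no-argument constructor is required.

    public FlowBean() {
        super();
    }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeLong(upFlow);
        out.writeLong(downFlow);
        out.writeLong(sumFlow);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        upFlow = in.readLong();
        downFlow = in.readLong();
        sumFlow = in.readLong();
    }

(6) To have the result written to a file, override toString(); separating the fields with "\t" makes later processing easier.

(7) If the custom bean is to be transmitted as a key, it must also implement the Comparable interface, because the Shuffle process of the MapReduce framework requires keys to be sortable. See the sorting examples later.

    @Override
    public int compareTo(FlowBean o) {
        // Descending order, largest first; returns 0 for equal totals
        return Long.compare(o.getSumFlow(), this.sumFlow);
    }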
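The requirement that `readFields` read the fields in the same order `write` wrote them can be checked with a round trip through a byte array. The sketch below is a minimal illustration that avoids the Hadoop jar: `SimpleWritable` is a hypothetical stand-in for `org.apache.hadoop.io.Writable`, and `roundTrip` is an illustrative helper, not a Hadoop API.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

final class FlowBeanRoundTripSketch {

    // Hypothetical stand-in for Hadoop's Writable interface (same two methods).
    interface SimpleWritable {
        void write(DataOutput out) throws IOException;
        void readFields(DataInput in) throws IOException;
    }

    static final class FlowBean implements SimpleWritable {
        long upFlow;
        long downFlow;
        long sumFlow;

        // No-argument constructor: needed so an empty bean can be created before readFields.
        FlowBean() {
        }

        FlowBean(long upFlow, long downFlow) {
            this.upFlow = upFlow;
            this.downFlow = downFlow;
            this.sumFlow = upFlow + downFlow;
        }

        @Override
        public void write(DataOutput out) throws IOException {
            out.writeLong(upFlow);
            out.writeLong(downFlow);
            out.writeLong(sumFlow);
        }

        // Reads the fields in exactly the order write() produced them.
        @Override
        public void readFields(DataInput in) throws IOException {
            upFlow = in.readLong();
            downFlow = in.readLong();
            sumFlow = in.readLong();
        }

        @Override
        public String toString() {
            return upFlow + "\t" + downFlow + "\t" + sumFlow;
        }
    }

    // Serializes the bean to bytes and deserializes it into a fresh instance.
    static FlowBean roundTrip(FlowBean original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            original.write(new DataOutputStream(bytes));
            FlowBean copy = new FlowBean();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Swapping two of the `readLong()` assignments in `readFields` would still run without error but would silently return a bean with exchanged fields, which is why the ordering rule matters.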

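2.3 Serialization Hands-on Example

Input record format:

    7    13560436666    120.196.100.99    1116    954    200
    id   phone number   network IP        upstream traffic   downstream traffic   network status code

Expected output format:

    13560436666    1116    954    2070
    phone number   upstream traffic   downstream traffic   total traffic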

    package com.atguigu.mapreduce.flowsum;

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;
    import org.apache.hadoop.io.Writable;

    // 1 Implement the Writable interface
    public class FlowBean implements Writable {

        private long upFlow;
        private long downFlow;
        private long sumFlow;

        // 2 Deserialization calls the no-argument constructor by reflection, so it is required
        public FlowBean() {
            super();
        }

        public FlowBean(long upFlow, long downFlow) {
            super();
            set(upFlow, downFlow);
        }

        // Sets both traffic values and recomputes the total (used by FlowCountMapper)
        public void set(long upFlow, long downFlow) {
            this.upFlow = upFlow;
            this.downFlow = downFlow;
            this.sumFlow = upFlow + downFlow;
        }

        // 3 Serialization method
        @Override
        public void write(DataOutput out) throws IOException {
            out.writeLong(upFlow);
            out.writeLong(downFlow);
            out.writeLong(sumFlow);
        }

        // 4 Deserialization method
        // 5 The read order must match the write order of the serialization method
        @Override
        public void readFields(DataInput in) throws IOException {
            this.upFlow = in.readLong();
            this.downFlow = in.readLong();
            this.sumFlow = in.readLong();
        }

        // 6 toString, so the bean can be written out as text
        @Override
        public String toString() {
            return upFlow + "\t" + downFlow + "\t" + sumFlow;
        }

        public long getUpFlow() { return upFlow; }

        public void setUpFlow(long upFlow) { this.upFlow = upFlow; }

        public long getDownFlow() { return downFlow; }

        public void setDownFlow(long downFlow) { this.downFlow = downFlow; }

        public long getSumFlow() { return sumFlow; }

        public void setSumFlow(long sumFlow) { this.sumFlow = sumFlow; }
    }

    package com.atguigu.mapreduce.flowsum;

    import java.io.IOException;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class FlowCountMapper extends Mapper<LongWritable, Text, Text, FlowBean> {

        FlowBean v = new FlowBean();
        Text k = new Text();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {

            // 1 Read one line
            String line = value.toString();

            // 2 Split the line into fields
            String[] fields = line.split("\t");

            // 3 Build the output objects
            // Phone number
            String phoneNum = fields[1];

            // Upstream and downstream traffic, counted from the end of the record
            long upFlow = Long.parseLong(fields[fields.length - 3]);
            long downFlow = Long.parseLong(fields[fields.length - 2]);

            k.set(phoneNum);
            // Argument order is (upFlow, downFlow); the original call had them swapped
            v.set(upFlow, downFlow);

            // 4 Emit
            context.write(k, v);
        }
    }

    package com.atguigu.mapreduce.flowsum;

    import java.io.IOException;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class FlowCountReducer extends Reducer<Text, FlowBean, Text, FlowBean> {

        @Override
        protected void reduce(Text key, Iterable<FlowBean> values, Context context)
                throws IOException, InterruptedException {

            long sum_upFlow = 0;
            long sum_downFlow = 0;

            // 1 Iterate over all beans and accumulate upstream and downstream traffic separately
            for (FlowBean flowBean : values) {
                sum_upFlow += flowBean.getUpFlow();
                sum_downFlow += flowBean.getDownFlow();
            }

            // 2 Build the result object
            FlowBean resultBean = new FlowBean(sum_upFlow, sum_downFlow);

            // 3 Emit
            context.write(key, resultBean);
        }
    }

    package com.atguigu.mapreduce.flowsum;

    import java.io.IOException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class FlowsumDriver {

        public static void main(String[] args)
                throws IllegalArgumentException, IOException, ClassNotFoundException, InterruptedException {

            // Set the input and output paths to match the paths on your own machine
            args = new String[] { "e:/input/inputflow", "e:/output1" };

            // 1 Get the configuration and the job instance
            Configuration configuration = new Configuration();
            Job job = Job.getInstance(configuration);

            // 2 Locate the jar of this program by the driver class
            job.setJarByClass(FlowsumDriver.class);

            // 3 Set the Mapper and Reducer classes used by this job
            job.setMapperClass(FlowCountMapper.class);
            job.setReducerClass(FlowCountReducer.class);

            // 4 Set the key/value types of the mapper output
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(FlowBean.class);

            // 5 Set the key/value types of the final output
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(FlowBean.class);

            // 6 Set the input directory and the output directory of the job
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // 7 Submit the job configuration and the jar containing its classes to YARN
            boolean result = job.waitForCompletion(true);
            System.exit(result ? 0 : 1);
        }
    }

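Chapter 3 MapReduce Framework Internals

3.1 InputFormat Data Input

3.1.1 Splits and How MapTask Parallelism Is Determined

The parallelism of MapTasks determines how concurrently the Map phase processes data, which in turn affects the processing speed of the whole job.

Data split: a split only divides the input logically; it does not cut the data into pieces on disk.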
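How a file is divided logically can be sketched as plain arithmetic. The sketch below is modeled on my understanding of `FileInputFormat.getSplits`; the split-size formula `max(minSize, min(maxSize, blockSize))` and the 1.1 slack factor are assumptions that do not appear in the text above, and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

final class SplitSizeSketch {

    // Assumed slack factor: a remainder up to 10% larger than the split size stays in one split.
    static final double SPLIT_SLOP = 1.1;

    // Assumed formula for the split size; with default min/max it equals the block size.
    static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    // Returns the logical split lengths for one file; nothing is cut on disk.
    static List<Long> splitLengths(long fileLength, long splitSize) {
        List<Long> lengths = new ArrayList<>();
        long remaining = fileLength;
        while ((double) remaining / splitSize > SPLIT_SLOP) {
            lengths.add(splitSize);
            remaining -= splitSize;
        }
        if (remaining != 0) {
            lengths.add(remaining);
        }
        return lengths;
    }
}
```

Under these assumptions a 300 MB file with a 128 MB split size yields three splits (128 MB, 128 MB, 44 MB), while a 129 MB file yields a single split because 129/128 does not exceed 1.1.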

3.1.2 Job Submission and Split Source Code Walkthrough

new Cluster(getConfiguration());

initialize(jobTrackAddr, conf);

submitter.submitJobInternal(Job.this, cluster)

Path jobStagingArea = JobSubmissionFiles.getStagingDir(cluster, conf);

JobID jobId = submitClient.getNewJobID();

copyAndConfigureFiles(job, submitJobDir);

rUploader.uploadFiles(job, jobSubmitDir);

writeSplits(job, submitJobDir);

maps = writeNewSplits(job, jobSubmitDir);

writeConf(conf, submitJobFile);

status = submitClient.submitJob(jobId, submitJobDir.toString(), job.getCredentials());

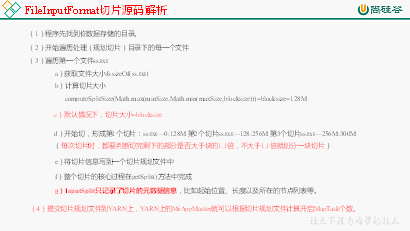

2.FileInputFormat切片源码解析(input.getSplits(job))

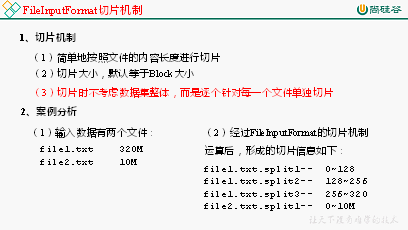

3.1.3 FileInputFormat切片机制

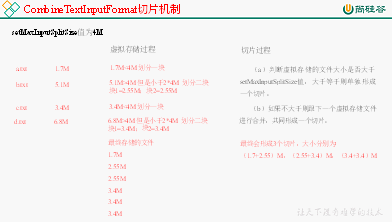

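3.1.4 CombineTextInputFormat Split Mechanism

CombineTextInputFormat is intended for jobs with many small files. It can logically group several small files into one split, so that they are all handled by a single MapTask.

CombineTextInputFormat.setMaxInputSplitSize(job, 4194304);// 4 MB

Note: the maximum virtual-storage split size is best chosen according to the actual sizes of the small files.

(a) Check whether the size of a virtual-storage block is greater than or equal to the setMaxInputSplitSize value; if so, the block forms a split on its own.

(b) Otherwise it is merged with the next virtual-storage block, and together they form one split.

(c) Example: given four small files of 1.7 MB, 5.1 MB, 3.4 MB and 6.8 MB, virtual storage produces six blocks with the following sizes:

1.7 MB, (2.55 MB, 2.55 MB), 3.4 MB and (3.4 MB, 3.4 MB)

These blocks are then merged into three splits:

(1.7+2.55) MB, (2.55+3.4) MB, (3.4+3.4) MB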
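The example in (c) can be reproduced with a small simulation. This is a sketch, not the Hadoop implementation: the virtual-storage rule used here (a file no larger than the maximum stays one block, a file between one and two times the maximum is halved, anything larger first sheds blocks of exactly the maximum) is an assumption inferred from the block sizes listed above, and all names are hypothetical. Sizes are expressed in units of 0.01 MB to keep the arithmetic in integers.

```java
import java.util.ArrayList;
import java.util.List;

final class CombineSplitSketch {

    // Step 1 (assumed rule): cut each file into virtual-storage blocks.
    static List<Long> virtualBlocks(List<Long> fileSizes, long maxSize) {
        List<Long> blocks = new ArrayList<>();
        for (long size : fileSizes) {
            long remaining = size;
            while (remaining > 2 * maxSize) {
                blocks.add(maxSize);
                remaining -= maxSize;
            }
            if (remaining > maxSize) {
                // Between 1x and 2x the maximum: split evenly to avoid a tiny tail block.
                long half = remaining / 2;
                blocks.add(half);
                blocks.add(remaining - half);
            } else if (remaining > 0) {
                blocks.add(remaining);
            }
        }
        return blocks;
    }

    // Step 2: rules (a) and (b), merging blocks until the running size reaches maxSize.
    static List<Long> slices(List<Long> blocks, long maxSize) {
        List<Long> result = new ArrayList<>();
        long current = 0;
        for (long block : blocks) {
            current += block;
            if (current >= maxSize) {
                result.add(current);
                current = 0;
            }
        }
        if (current > 0) {
            result.add(current);
        }
        return result;
    }
}
```

With files of 170, 510, 340 and 680 units and a maximum of 400, `virtualBlocks` gives six blocks and `slices` gives three splits of 425, 595 and 680 units, matching the figures in the example.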

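3.1.5 CombineTextInputFormat Hands-on Example

(1) Without any changes, run the WordCount program from Section 1.8 and observe that the number of splits is 4.

(2) Add the following code to WordcountDriver, run the program, and observe that the number of splits is 3.

// If no InputFormat is set, TextInputFormat.class is used by default

job.setInputFormatClass(CombineTextInputFormat.class);

CombineTextInputFormat.setMaxInputSplitSize(job, 4194304);

(3) Add the following code to WordcountDriver, run the program, and observe that the number of splits is 1.

// If no InputFormat is set, TextInputFormat.class is used by default

job.setInputFormatClass(CombineTextInputFormat.class);

CombineTextInputFormat.setMaxInputSplitSize(job, 20971520);

3.1.6 FileInputFormat Implementations

3.1.7 KeyValueTextInputFormat Usage Example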

    package com.atguigu.mapreduce.KeyValueTextInputFormat;

    import java.io.IOException;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class KVTextMapper extends Mapper<Text, Text, Text, LongWritable> {

        // 1 The value is always 1
        LongWritable v = new LongWritable(1);

        @Override
        protected void map(Text key, Text value, Context context)
                throws IOException, InterruptedException {

            // Example input line: banzhang ni hao

            // 2 Emit (first word of the line, 1)
            context.write(key, v);
        }
    }

    package com.atguigu.mapreduce.KeyValueTextInputFormat;

    import java.io.IOException;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class KVTextReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        LongWritable v = new LongWritable();

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context)
                throws IOException, InterruptedException {

            long sum = 0L;

            // 1 Sum the counts
            for (LongWritable value : values) {
                sum += value.get();
            }

            v.set(sum);

            // 2 Emit
            context.write(key, v);
        }
    }

    package com.atguigu.mapreduce.KeyValueTextInputFormat;

    import java.io.IOException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.KeyValueLineRecordReader;
    import org.apache.hadoop.mapreduce.lib.input.KeyValueTextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class KVTextDriver {

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {

            Configuration conf = new Configuration();
            // Set the key/value separator (the constant name is spelled this way in Hadoop)
            conf.set(KeyValueLineRecordReader.KEY_VALUE_SEPERATOR, " ");
            // 1 Get the job object
            Job job = Job.getInstance(conf);

            // 2 Set the jar location and register the mapper and reducer
            job.setJarByClass(KVTextDriver.class);
            job.setMapperClass(KVTextMapper.class);
            job.setReducerClass(KVTextReducer.class);

            // 3 Set the map output key/value types
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(LongWritable.class);

            // 4 Set the final output key/value types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(LongWritable.class);

            // 5 Set the input path
            FileInputFormat.setInputPaths(job, new Path(args[0]));

            // Set the input format
            job.setInputFormatClass(KeyValueTextInputFormat.class);

            // 6 Set the output path
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // 7 Submit the job
            job.waitForCompletion(true);
        }
    }

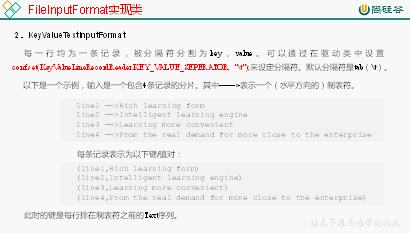

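3.1.8 NLineInputFormat Usage Example

Count the occurrences of each word, with the number of splits determined by the number of lines in each input file. This example requires every three lines to go into one split.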

xihuan hadoop banzhang banzhang ni hao

    package com.atguigu.mapreduce.nline;

    import java.io.IOException;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class NLineMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        private Text k = new Text();
        private LongWritable v = new LongWritable(1);

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {

            // 1 Read one line
            String line = value.toString();

            // 2 Split the line into words
            String[] splited = line.split(" ");

            // 3 Emit (word, 1) for every word
            for (int i = 0; i < splited.length; i++) {
                k.set(splited[i]);
                context.write(k, v);
            }
        }
    }

    package com.atguigu.mapreduce.nline;

    import java.io.IOException;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class NLineReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        LongWritable v = new LongWritable();

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context)
                throws IOException, InterruptedException {

            long sum = 0L;

            // 1 Sum the counts
            for (LongWritable value : values) {
                sum += value.get();
            }

            v.set(sum);

            // 2 Emit
            context.write(key, v);
        }
    }

    package com.atguigu.mapreduce.nline;

    import java.io.IOException;
    import java.net.URISyntaxException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.NLineInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class NLineDriver {

        public static void main(String[] args)
                throws IOException, URISyntaxException, ClassNotFoundException, InterruptedException {

            // Set the input and output paths to match the paths on your own machine
            args = new String[] { "e:/input/inputword", "e:/output1" };

            // 1 Get the job object
            Configuration configuration = new Configuration();
            Job job = Job.getInstance(configuration);

            // 7 Put three records into each InputSplit
            NLineInputFormat.setNumLinesPerSplit(job, 3);

            // 8 Use NLineInputFormat to split by number of records
            job.setInputFormatClass(NLineInputFormat.class);

            // 2 Set the jar location and register the mapper and reducer
            job.setJarByClass(NLineDriver.class);
            job.setMapperClass(NLineMapper.class);
            job.setReducerClass(NLineReducer.class);

            // 3 Set the map output key/value types
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(LongWritable.class);

            // 4 Set the final output key/value types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(LongWritable.class);

            // 5 Set the input and output paths
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // 6 Submit the job
            job.waitForCompletion(true);
        }
    }

xihuan hadoop banzhang banzhang ni hao

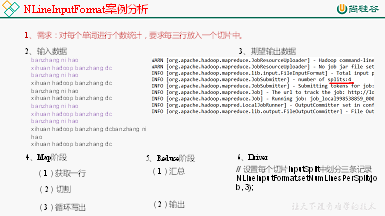

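3.1.9 Custom InputFormat

3.1.10 Custom InputFormat Hands-on Example

Both HDFS and MapReduce are very inefficient at handling small files, yet workloads with large numbers of small files are hard to avoid, so a dedicated solution is needed. One option is a custom InputFormat that merges small files.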

    package com.atguigu.mapreduce.inputformat;

    import java.io.IOException;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.BytesWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.InputSplit;
    import org.apache.hadoop.mapreduce.JobContext;
    import org.apache.hadoop.mapreduce.RecordReader;
    import org.apache.hadoop.mapreduce.TaskAttemptContext;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

    // Extend FileInputFormat; key is the file path, value is the file content
    public class WholeFileInputformat extends FileInputFormat<Text, BytesWritable> {

        // Each file is read as a whole, so it must not be split
        @Override
        protected boolean isSplitable(JobContext context, Path filename) {
            return false;
        }

        @Override
        public RecordReader<Text, BytesWritable> createRecordReader(InputSplit split, TaskAttemptContext context)
                throws IOException, InterruptedException {

            WholeRecordReader recordReader = new WholeRecordReader();
            recordReader.initialize(split, context);

            return recordReader;
        }
    }

    package com.atguigu.mapreduce.inputformat;

    import java.io.IOException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.BytesWritable;
    import org.apache.hadoop.io.IOUtils;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.InputSplit;
    import org.apache.hadoop.mapreduce.RecordReader;
    import org.apache.hadoop.mapreduce.TaskAttemptContext;
    import org.apache.hadoop.mapreduce.lib.input.FileSplit;

    public class WholeRecordReader extends RecordReader<Text, BytesWritable> {

        private Configuration configuration;
        private FileSplit split;

        // True until the single record of this split has been read
        private boolean isProgress = true;
        private BytesWritable value = new BytesWritable();
        private Text k = new Text();

        @Override
        public void initialize(InputSplit split, TaskAttemptContext context)
                throws IOException, InterruptedException {
            this.split = (FileSplit) split;
            configuration = context.getConfiguration();
        }

        @Override
        public boolean nextKeyValue() throws IOException, InterruptedException {
            if (isProgress) {
                // 1 Allocate a buffer as large as the file
                byte[] contents = new byte[(int) split.getLength()];
                FSDataInputStream fis = null;

                try {
                    // 2 Get the file system
                    Path path = split.getPath();
                    FileSystem fs = path.getFileSystem(configuration);

                    // 3 Open the file
                    fis = fs.open(path);

                    // 4 Read the whole file into the buffer
                    IOUtils.readFully(fis, contents, 0, contents.length);

                    // 5 Use the file content as the value
                    value.set(contents, 0, contents.length);

                    // 6-7 Use the full path and file name as the key
                    k.set(path.toString());
                } finally {
                    // Read errors propagate to the caller instead of being swallowed
                    IOUtils.closeStream(fis);
                }

                isProgress = false;
                return true;
            }
            return false;
        }

        @Override
        public Text getCurrentKey() throws IOException, InterruptedException {
            return k;
        }

        @Override
        public BytesWritable getCurrentValue() throws IOException, InterruptedException {
            return value;
        }

        @Override
        public float getProgress() throws IOException, InterruptedException {
            return 0;
        }

        @Override
        public void close() throws IOException {
        }
    }

    package com.atguigu.mapreduce.inputformat;

    import java.io.IOException;
    import org.apache.hadoop.io.BytesWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class SequenceFileMapper extends Mapper<Text, BytesWritable, Text, BytesWritable> {

        @Override
        protected void map(Text key, BytesWritable value, Context context)
                throws IOException, InterruptedException {
            // Pass (file path, file content) through unchanged
            context.write(key, value);
        }
    }

    package com.atguigu.mapreduce.inputformat;

    import java.io.IOException;
    import org.apache.hadoop.io.BytesWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class SequenceFileReducer extends Reducer<Text, BytesWritable, Text, BytesWritable> {

        @Override
        protected void reduce(Text key, Iterable<BytesWritable> values, Context context)
                throws IOException, InterruptedException {
            // Each file path occurs once, so the first value is the file content
            context.write(key, values.iterator().next());
        }
    }

    package com.atguigu.mapreduce.inputformat;

    import java.io.IOException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.BytesWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.mapreduce.lib.output.SequenceFileOutputFormat;

    public class SequenceFileDriver {

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {

            // Set the input and output paths to match the paths on your own machine
            args = new String[] { "e:/input/inputinputformat", "e:/output1" };

            // 1 Get the job object
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);

            // 2 Set the jar location and register the custom mapper and reducer
            job.setJarByClass(SequenceFileDriver.class);
            job.setMapperClass(SequenceFileMapper.class);
            job.setReducerClass(SequenceFileReducer.class);

            // 7 Set the input format
            job.setInputFormatClass(WholeFileInputformat.class);

            // 8 Set the output format
            job.setOutputFormatClass(SequenceFileOutputFormat.class);

            // 3 Set the map output key/value types
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(BytesWritable.class);

            // 4 Set the final output key/value types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(BytesWritable.class);

            // 5 Set the input and output paths
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // 6 Submit the job
            boolean result = job.waitForCompletion(true);
            System.exit(result ? 0 : 1);
        }
    }

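3.2 MapReduce Workflow

The flow above is the complete MapReduce workflow, but the Shuffle process only covers steps 7 through 16. In detail, the Shuffle process is as follows:

1) The MapTask collects the kv pairs emitted by our map() method and puts them into an in-memory buffer.

4) During both spilling and merging, the Partitioner is called to partition the data, and the records are sorted by key.

5) Each ReduceTask fetches the data of its own partition, identified by its partition number, from every MapTask machine.

6) A ReduceTask receives result files for the same partition from different MapTasks and merges them again (merge sort).

7) Once they are merged into one large file, the Shuffle process is over and the ReduceTask logic begins: key-value groups are taken from the file one at a time, and the user-defined reduce() method is called for each group.

The size of the Shuffle buffer affects the efficiency of a MapReduce program. In principle, the larger the buffer, the fewer disk I/O operations and the faster the execution.

The buffer size can be tuned with the parameter io.sort.mb, which defaults to 100 MB (in Hadoop 2.x and later the property is named mapreduce.task.io.sort.mb).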

context.write(k, NullWritable.get());

collector.collect(key, value,partitioner.getPartition(key, value, partitions));

3.3 Shuffle机制

3.3.1 Shuffle机制

Map方法之后,Reduce方法之前的数据处理过程称之为Shuffle。如图4-14所示。

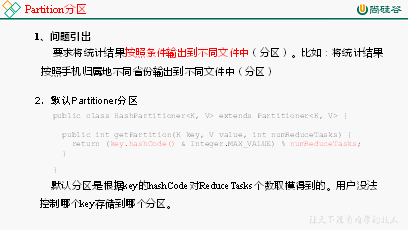

3.3.2 Partition分区

3.3.3 Partition分区案例实操

手机号136、137、138、139开头都分别放到一个独立的4个文件中,其他开头的放到一个文件中。

package com.atguigu.mapreduce.flowsum;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Partitioner;

public class ProvincePartitioner extends Partitioner<Text, FlowBean> {

public int getPartition(Text key, FlowBean value, int numPartitions) {

String preNum = key.toString().substring(0, 3);

}else if ("137".equals(preNum)) {

}else if ("138".equals(preNum)) {

}else if ("139".equals(preNum)) {
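The prefix-to-partition rule that the fragment above implements can be sketched as a plain Java function. The partition numbers 0 to 4 are an assumption (the listing above does not show them), and `PrefixPartitionSketch.partitionFor` is a hypothetical helper rather than the Hadoop `Partitioner` API; in the real class the same logic would live inside `getPartition`.

```java
final class PrefixPartitionSketch {

    // Maps a phone number to a partition by its first three digits.
    // Assumed numbering: 136 -> 0, 137 -> 1, 138 -> 2, 139 -> 3, anything else -> 4.
    static int partitionFor(String phoneNumber) {
        String prefix = phoneNumber.substring(0, 3);
        switch (prefix) {
            case "136":
                return 0;
            case "137":
                return 1;
            case "138":
                return 2;
            case "139":
                return 3;
            default:
                return 4;
        }
    }
}
```

With five possible partition numbers, the driver would be expected to request five ReduceTasks, for example with `job.setNumReduceTasks(5)`, so that each partition ends up in its own output file.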

4. Add the custom partitioner setting and the ReduceTask setting to the driver

package com.atguigu.mapreduce.flowsum;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

args = new String[]{"e:/output1","e:/output2"};

Configuration configuration = new Configuration();

Job job = Job.getInstance(configuration);

job.setJarByClass(FlowsumDriver.class);

// 3 Set the Mapper and Reducer classes used by this job

job.setMapperClass(FlowCountMapper.class);

job.setReducerClass(FlowCountReducer.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(FlowBean.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(FlowBean.class);

job.setPartitionerClass(ProvincePartitioner.class);

FileInputFormat.setInputPaths(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

// 7 Submit the job configuration and the jar containing its classes to YARN

boolean result = job.waitForCompletion(true);

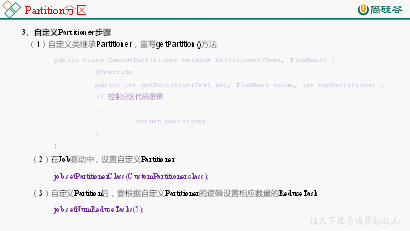

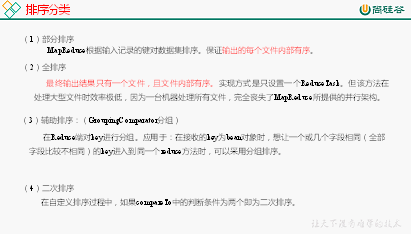

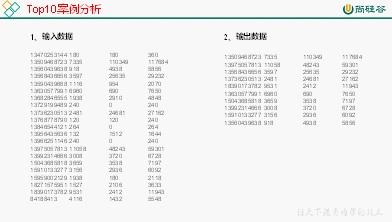

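3.3.4 WritableComparable Sorting

When a bean object is transmitted as a key, it must implement the WritableComparable interface and override compareTo; the framework then sorts the keys with it.

public int compareTo(FlowBean bean) {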

if (sumFlow > bean.getSumFlow()) {

}else if (sumFlow < bean.getSumFlow()) {
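The effect of such a `compareTo` can be seen with an ordinary `Comparable` and `Collections.sort`. This is a minimal sketch with hypothetical names (`FlowSortSketch`, `Flow`); it uses `Long.compare` to produce descending order by total traffic, which is one way to write the comparison and not necessarily the exact body elided above.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class FlowSortSketch {

    static final class Flow implements Comparable<Flow> {
        final String phone;
        final long sumFlow;

        Flow(String phone, long sumFlow) {
            this.phone = phone;
            this.sumFlow = sumFlow;
        }

        // Descending by total traffic: the larger total sorts first; equal totals compare as 0.
        @Override
        public int compareTo(Flow other) {
            return Long.compare(other.sumFlow, this.sumFlow);
        }
    }

    // Returns the phone numbers ordered from the largest total traffic to the smallest.
    static List<String> phonesByTotalDescending(List<Flow> flows) {
        List<Flow> sorted = new ArrayList<>(flows);
        Collections.sort(sorted);
        List<String> phones = new ArrayList<>();
        for (Flow flow : sorted) {
            phones.add(flow.phone);
        }
        return phones;
    }
}
```

In a MapReduce job the framework performs this sort on the map output keys, so no explicit sort call is needed once the key class defines `compareTo`.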

3.3.5 WritableComparable Sorting Hands-on Example (Total Sort)

13509468723 7335 110349 117684

package com.atguigu.mapreduce.sort;

import org.apache.hadoop.io.WritableComparable;

public class FlowBean implements WritableComparable<FlowBean> {

public FlowBean(long upFlow, long downFlow) {

this.sumFlow = upFlow + downFlow;

public void set(long upFlow, long downFlow) {

this.sumFlow = upFlow + downFlow;

public void setSumFlow(long sumFlow) {

public void setUpFlow(long upFlow) {

public void setDownFlow(long downFlow) {

public void write(DataOutput out) throws IOException {

public void readFields(DataInput in) throws IOException {

return upFlow + "\t" + downFlow + "\t" + sumFlow;

public int compareTo(FlowBean bean) {

if (sumFlow > bean.getSumFlow()) {

}else if (sumFlow < bean.getSumFlow()) {

package com.atguigu.mapreduce.sort;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

public class FlowCountSortMapper extends Mapper<LongWritable, Text, FlowBean, Text>{

FlowBean bean = new FlowBean();

String line = value.toString();

String[] fields = line.split("\t");

long upFlow = Long.parseLong(fields[1]);

long downFlow = Long.parseLong(fields[2]);

package com.atguigu.mapreduce.sort;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

public class FlowCountSortReducer extends Reducer<FlowBean, Text, Text, FlowBean>{

package com.atguigu.mapreduce.sort;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class FlowCountSortDriver {

args = new String[]{"e:/output1","e:/output2"};

Configuration configuration = new Configuration();

Job job = Job.getInstance(configuration);

job.setJarByClass(FlowCountSortDriver.class);

// 3 Set the Mapper and Reducer classes used by this job

job.setMapperClass(FlowCountSortMapper.class);

job.setReducerClass(FlowCountSortReducer.class);

job.setMapOutputKeyClass(FlowBean.class);

job.setMapOutputValueClass(Text.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(FlowBean.class);

FileInputFormat.setInputPaths(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

// 7 Submit the job configuration and the jar containing its classes to YARN

boolean result = job.waitForCompletion(true);

3.3.6 WritableComparable Sorting Hands-on Example (Sort Within Partitions)

package com.atguigu.mapreduce.sort;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Partitioner;

public class ProvincePartitioner extends Partitioner<FlowBean, Text> {

public int getPartition(FlowBean key, Text value, int numPartitions) {

String preNum = value.toString().substring(0, 3);

}else if ("137".equals(preNum)) {

}else if ("138".equals(preNum)) {

}else if ("139".equals(preNum)) {

job.setPartitionerClass(ProvincePartitioner.class);

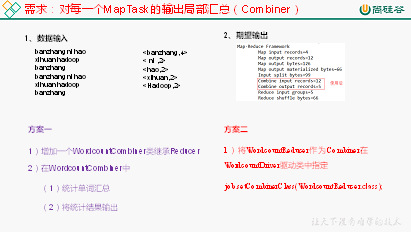

3.3.7 Combiner

(a) Define a custom Combiner that extends Reducer and override the reduce method

    public class WordcountCombiner extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {

            // 1 Sum the counts
            int count = 0;
            for (IntWritable v : values) {
                count += v.get();
            }

            // 2 Emit
            context.write(key, new IntWritable(count));
        }
    }

    job.setCombinerClass(WordcountCombiner.class);

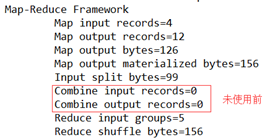

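3.3.8 Combiner Hands-on Example

During the computation, pre-aggregate the output of each MapTask locally to reduce network traffic, that is, use a Combiner.

Expectation: the Combiner takes in many records and, after merging, emits fewer.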
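The expected reduction can be illustrated with plain Java. This is a sketch of the idea only: `CombinerSketch.combine` is a hypothetical helper that pre-aggregates the words emitted by one MapTask, each with an implicit count of 1, and it does not use the Hadoop Combiner API.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class CombinerSketch {

    // Local aggregation of one MapTask's output: every input word stands for a (word, 1) record.
    // The result holds one (word, partialSum) record per distinct word.
    static Map<String, Integer> combine(List<String> mapOutputWords) {
        Map<String, Integer> partialSums = new TreeMap<>();
        for (String word : mapOutputWords) {
            partialSums.merge(word, 1, Integer::sum);
        }
        return partialSums;
    }
}
```

For a MapTask that emitted the five records `ss, cls, ss, cls, jiao`, `combine` returns three records, so three records instead of five would cross the network. Because addition is associative and commutative, the final sums computed by the Reducer are unchanged.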

1) Add a WordcountCombiner class that extends Reducer

package com.atguigu.mr.combiner;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

public class WordcountCombiner extends Reducer<Text, IntWritable, Text, IntWritable>{

IntWritable v = new IntWritable();

for(IntWritable value :values){

2) Specify the Combiner in the WordcountDriver driver class

// Specify that a Combiner is used and which class provides its logic

job.setCombinerClass(WordcountCombiner.class);

1) Specify WordcountReducer as the Combiner in the WordcountDriver driver class

// Specify that a Combiner is used and which class provides its logic

job.setCombinerClass(WordcountReducer.class);

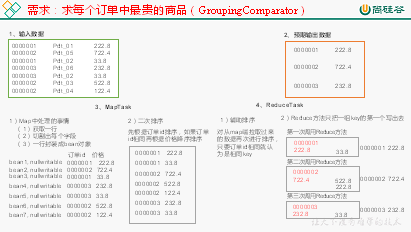

3.3.9 GroupingComparator Grouping (Secondary Sort)

public int compare(WritableComparable a, WritableComparable b) {

protected OrderGroupingComparator() {

3.3.10 GroupingComparator Grouping Hands-on Example

| Order ID | Product ID | Price |
| --- | --- | --- |
| 0000001 | Pdt_01 | 222.8 |
| 0000001 | Pdt_02 | 33.8 |
| 0000002 | Pdt_03 | 522.8 |
| 0000002 | Pdt_04 | 122.4 |
| 0000002 | Pdt_05 | 722.4 |
| 0000003 | Pdt_06 | 232.8 |
| 0000003 | Pdt_02 | 33.8 |

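(1) Use "order id and price" as the key, so that all order records read in the Map phase are sorted by id in ascending order and, for equal ids, by price in descending order, before being sent to Reduce.

(2) On the Reduce side, use a GroupingComparator to group the kv pairs that share an order id, then take the first one, which is the most expensive product of that order, as shown in Figure 4-18.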
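The two steps can be sketched in plain Java: sort by order id ascending and price descending, then keep the first record of each run of equal ids. This is only an illustration with hypothetical names (`TopPricePerOrderSketch`, `Order`); in the actual job the sort is done by the key's `compareTo` during Shuffle and the grouping by the GroupingComparator.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class TopPricePerOrderSketch {

    static final class Order {
        final int orderId;
        final String productId;
        final double price;

        Order(int orderId, String productId, double price) {
            this.orderId = orderId;
            this.productId = productId;
            this.price = price;
        }
    }

    // Step (1): secondary sort. Step (2): group by order id and keep the first record per group.
    static List<Order> mostExpensivePerOrder(List<Order> orders) {
        List<Order> sorted = new ArrayList<>(orders);
        sorted.sort(Comparator.comparingInt((Order o) -> o.orderId)
                .thenComparing(Comparator.comparingDouble((Order o) -> o.price).reversed()));

        List<Order> result = new ArrayList<>();
        Integer currentId = null;
        for (Order order : sorted) {
            // A new group starts whenever the order id changes.
            if (currentId == null || currentId != order.orderId) {
                result.add(order);
                currentId = order.orderId;
            }
        }
        return result;
    }
}
```

Applied to the table above, the first records per group are Pdt_01 (222.8) for order 1, Pdt_05 (722.4) for order 2 and Pdt_06 (232.8) for order 3.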

    package com.atguigu.mapreduce.order;

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;
    import org.apache.hadoop.io.WritableComparable;

    public class OrderBean implements WritableComparable<OrderBean> {

        private int order_id; // order id
        private double price; // price

        public OrderBean() {
            super();
        }

        public OrderBean(int order_id, double price) {
            super();
            this.order_id = order_id;
            this.price = price;
        }

        @Override
        public void write(DataOutput out) throws IOException {
            out.writeInt(order_id);
            out.writeDouble(price);
        }

        @Override
        public void readFields(DataInput in) throws IOException {
            order_id = in.readInt();
            price = in.readDouble();
        }

        @Override
        public String toString() {
            return order_id + "\t" + price;
        }

        public int getOrder_id() { return order_id; }

        public void setOrder_id(int order_id) { this.order_id = order_id; }

        public double getPrice() { return price; }

        public void setPrice(double price) { this.price = price; }

        // Secondary sort: order id ascending, then price descending
        @Override
        public int compareTo(OrderBean o) {

            int result;

            if (order_id > o.getOrder_id()) {
                result = 1;
            } else if (order_id < o.getOrder_id()) {
                result = -1;
            } else {
                // Price in descending order; equal prices compare as 0
                result = Double.compare(o.getPrice(), price);
            }

            return result;
        }
    }

package com.atguigu.mapreduce.order; import java.io.IOException; import org.apache.hadoop.io.LongWritable; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Mapper;

public class OrderMapper extends Mapper<LongWritable, Text, OrderBean, NullWritable> {

OrderBean k = new OrderBean();

@Override protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

// 1 Get one line
String line = value.toString();

// 2 Split the fields
String[] fields = line.split("\t");

// 3 Populate the key object
k.setOrder_id(Integer.parseInt(fields[0])); k.setPrice(Double.parseDouble(fields[2]));

// 4 Write out
context.write(k, NullWritable.get()); } }

(3) Write the OrderGroupingComparator class

package com.atguigu.mapreduce.order; import org.apache.hadoop.io.WritableComparable; import org.apache.hadoop.io.WritableComparator;

public class OrderGroupingComparator extends WritableComparator {

protected OrderGroupingComparator() { super(OrderBean.class, true); }

@Override public int compare(WritableComparable a, WritableComparable b) {

OrderBean aBean = (OrderBean) a; OrderBean bBean = (OrderBean) b;

int result; if (aBean.getOrder_id() > bBean.getOrder_id()) { result = 1; } else if (aBean.getOrder_id() < bBean.getOrder_id()) { result = -1; } else { result = 0; }

return result; } } |

package com.atguigu.mapreduce.order; import java.io.IOException; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.mapreduce.Reducer;

public class OrderReducer extends Reducer<OrderBean, NullWritable, OrderBean, NullWritable> {

@Override protected void reduce(OrderBean key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {

context.write(key, NullWritable.get()); } } |

package com.atguigu.mapreduce.order; import java.io.IOException; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class OrderDriver {

public static void main(String[] args) throws Exception {

// Set the input and output paths to match the actual paths on your machine
args = new String[]{"e:/input/inputorder" , "e:/output1"};

// 1 Get the configuration and the job instance
Configuration conf = new Configuration(); Job job = Job.getInstance(conf);

// 2 Set the jar by the driver class
job.setJarByClass(OrderDriver.class);

// 3 Set the mapper and reducer classes
job.setMapperClass(OrderMapper.class); job.setReducerClass(OrderReducer.class);

// 4 Set the key and value types of the map output
job.setMapOutputKeyClass(OrderBean.class); job.setMapOutputValueClass(NullWritable.class);

// 5 Set the key and value types of the final output
job.setOutputKeyClass(OrderBean.class); job.setOutputValueClass(NullWritable.class);

// 6 Set the input and output paths
FileInputFormat.setInputPaths(job, new Path(args[0])); FileOutputFormat.setOutputPath(job, new Path(args[1]));

// 7 Set the grouping comparator used on the reduce side
job.setGroupingComparatorClass(OrderGroupingComparator.class);

// 8 Submit the job
boolean result = job.waitForCompletion(true); System.exit(result ? 0 : 1); } }

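3.4 How MapTask Works

(1) Read phase: MapTask uses the user-written RecordReader to parse key/value pairs out of the input InputSplit.

(2) Map phase: this phase hands each parsed key/value pair to the user-written map() function, which produces a series of new key/value pairs.

(5) Combine phase: once all data has been processed, MapTask merges all temporary files so that only one data file is produced in the end.

When all data has been processed, MapTask merges all temporary files into one large file, saves it as output/file.out, and generates the corresponding index file output/file.out.index.

Having each MapTask produce a single data file avoids the overhead of opening many files at once and of the random reads caused by reading many small files at the same time.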

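3.5 How ReduceTask Works

(1) Copy phase: ReduceTask remotely copies a piece of data from each MapTask. If a piece exceeds a certain size threshold it is written to disk; otherwise it is kept in memory.

(2) Merge phase: while the remote copy is in progress, ReduceTask runs two background threads that merge the files in memory and on disk, to prevent excessive memory use or too many files on disk.

(4) Reduce phase: the reduce() function writes the computed results to HDFS.

ReduceTask parallelism also affects the concurrency and efficiency of the whole job. Unlike the number of MapTasks, which is determined by the number of splits, the number of ReduceTasks can be set manually in the driver, for example with job.setNumReduceTasks(4).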
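To see why the number of ReduceTasks matters, it can help to look at how keys are spread across them. The sketch below is plain Java and assumes the default hash-partitioning arithmetic (mask the sign bit of the hash, then take the remainder by the number of reduce tasks). `PartitionSketch` is a hypothetical name, and `String.hashCode()` stands in for the hash of a Hadoop key type, so the actual partition numbers in a real job will differ.

```java
import java.util.Arrays;
import java.util.List;

// Plain-Java sketch (no Hadoop): how keys spread over N reduce tasks under hash partitioning.
class PartitionSketch {

    // Mask the sign bit so the result is never negative, then take the remainder.
    static int partitionFor(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Count how many keys land in each partition.
    static int[] countPerPartition(List<String> keys, int numReduceTasks) {
        int[] counts = new int[numReduceTasks];
        for (String key : keys) {
            counts[partitionFor(key, numReduceTasks)]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> keys = Arrays.asList("atguigu", "banzhang", "cls", "hadoop", "jiao", "ss", "xue");
        for (int n : new int[] {1, 2, 4}) {
            System.out.println(n + " reduce tasks -> " + Arrays.toString(countPerPartition(keys, n)));
        }
    }
}
```

With a single reduce task every key goes to partition 0, so that one task processes the entire map output.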

(1) Test environment: 1 Master node and 16 Slave nodes; CPU: 8GHZ, memory: 2G

MapTask = 16 |

ReduceTask | 1 | 5 | 10 | 15 | 16 | 20 | 25 | 30 | 45 | 60 |

Total time | 892 | 146 | 110 | 92 | 88 | 100 | 128 | 101 | 145 | 104 |

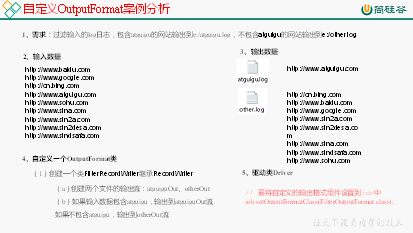

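3.6 OutputFormat Data Output

3.6.1 OutputFormat Implementation Classes

3.6.2 Custom OutputFormat

3.6.3 Custom OutputFormat Hands-on Example

Filter the input log: write the sites that contain atguigu to e:/atguigu.log and the sites that do not contain atguigu to e:/other.log.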
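The routing rule in this requirement can be sketched in plain Java before any Hadoop classes are involved. This is a minimal illustration only: `LogRouter` is a hypothetical class, the two lists stand in for the two output files, and the sample URLs in `main` are made up for the example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java sketch (no Hadoop): route each line to one of two outputs.
class LogRouter {

    // Stand-ins for the two output files named in the requirement.
    final List<String> atguiguLines = new ArrayList<>();
    final List<String> otherLines = new ArrayList<>();

    // The per-record decision: does the line contain "atguigu"?
    void write(String line) {
        if (line.contains("atguigu")) {
            atguiguLines.add(line);
        } else {
            otherLines.add(line);
        }
    }

    public static void main(String[] args) {
        LogRouter router = new LogRouter();
        // Hypothetical input lines, for illustration only.
        for (String line : Arrays.asList("http://www.atguigu.com", "http://www.example.com")) {
            router.write(line);
        }
        System.out.println("atguigu: " + router.atguiguLines);
        System.out.println("other:   " + router.otherLines);
    }
}
```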

package com.atguigu.mapreduce.outputformat; import java.io.IOException; import org.apache.hadoop.io.LongWritable; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Mapper;

public class FilterMapper extends Mapper<LongWritable, Text, Text, NullWritable>{

@Override protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

// Write out
context.write(value, NullWritable.get()); } }

package com.atguigu.mapreduce.outputformat; import java.io.IOException; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Reducer;

public class FilterReducer extends Reducer<Text, NullWritable, Text, NullWritable> {

Text k = new Text();

@Override protected void reduce(Text key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {

// 1 Get one line
String line = key.toString();

// 2 Append a line break
line = line + "\r\n";

// 3 Set the key
k.set(line);

// 4 Write out
context.write(k, NullWritable.get()); } }

package com.atguigu.mapreduce.outputformat; import java.io.IOException; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.RecordWriter; import org.apache.hadoop.mapreduce.TaskAttemptContext; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class FilterOutputFormat extends FileOutputFormat<Text, NullWritable>{

@Override public RecordWriter<Text, NullWritable> getRecordWriter(TaskAttemptContext job) throws IOException, InterruptedException {

// Create a RecordWriter
return new FilterRecordWriter(job); } }

package com.atguigu.mapreduce.outputformat; import java.io.IOException; import org.apache.hadoop.fs.FSDataOutputStream; import org.apache.hadoop.fs.FileSystem; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.IOUtils; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.RecordWriter; import org.apache.hadoop.mapreduce.TaskAttemptContext;

public class FilterRecordWriter extends RecordWriter<Text, NullWritable> {

FSDataOutputStream atguiguOut = null; FSDataOutputStream otherOut = null;

public FilterRecordWriter(TaskAttemptContext job) {

// 1 Get the file system
FileSystem fs;

try { fs = FileSystem.get(job.getConfiguration());

// 2 Create the output file paths
Path atguiguPath = new Path("e:/atguigu.log"); Path otherPath = new Path("e:/other.log");

// 3 Create the output streams
atguiguOut = fs.create(atguiguPath); otherOut = fs.create(otherPath); } catch (IOException e) { e.printStackTrace(); } }

@Override public void write(Text key, NullWritable value) throws IOException, InterruptedException {

// Write to a different file depending on whether the line contains "atguigu"
if (key.toString().contains("atguigu")) { atguiguOut.write(key.toString().getBytes()); } else { otherOut.write(key.toString().getBytes()); } }

@Override public void close(TaskAttemptContext context) throws IOException, InterruptedException {

// Release resources
IOUtils.closeStream(atguiguOut); IOUtils.closeStream(otherOut); } }

package com.atguigu.mapreduce.outputformat; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class FilterDriver {

public static void main(String[] args) throws Exception {

// Set the input and output paths to match the actual paths on your machine
args = new String[] { "e:/input/inputoutputformat", "e:/output2" };

Configuration conf = new Configuration(); Job job = Job.getInstance(conf);

job.setJarByClass(FilterDriver.class); job.setMapperClass(FilterMapper.class); job.setReducerClass(FilterReducer.class);

job.setMapOutputKeyClass(Text.class); job.setMapOutputValueClass(NullWritable.class);

job.setOutputKeyClass(Text.class); job.setOutputValueClass(NullWritable.class);

// Register the custom output format on the job
job.setOutputFormatClass(FilterOutputFormat.class);

FileInputFormat.setInputPaths(job, new Path(args[0]));

// Although a custom OutputFormat is used, it extends FileOutputFormat,
// which writes a _SUCCESS file, so an output directory must still be specified
FileOutputFormat.setOutputPath(job, new Path(args[1]));

boolean result = job.waitForCompletion(true); System.exit(result ? 0 : 1); } } |

3.7 Join Applications

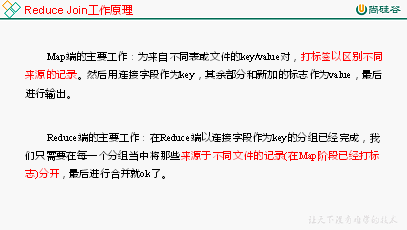

3.7.1 Reduce Join

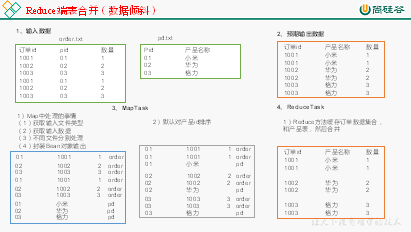

3.7.2 Reduce Join Hands-on Example

id | pid | amount |

1001 | 01 | 1 |

1002 | 02 | 2 |

1003 | 03 | 3 |

1004 | 01 | 4 |

1005 | 02 | 5 |

1006 | 03 | 6 |

pid | pname |

01 | 小米 |

02 | 华为 |

03 | 格力 |

id | pname | amount |

1001 | 小米 | 1 |

1004 | 小米 | 4 |

1002 | 华为 | 2 |

1005 | 华为 | 5 |

1003 | 格力 | 3 |

1006 | 格力 | 6 |

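Use the join condition as the key of the Map output. Records from both tables that satisfy the join condition are tagged with the file they came from and sent to the same ReduceTask, where they are joined, as shown in Figure 4-20.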
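The reduce-side join described above can be sketched in plain Java. This is an illustration under stated assumptions rather than the course's code: `ReduceJoinSketch` and `Tagged` are hypothetical names, a `TreeMap` keyed by pid stands in for the shuffle, and the product names in `main` are romanized stand-ins for the pname values in the tables.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java sketch (no Hadoop): tag rows by source table, group by pid, then join.
class ReduceJoinSketch {

    // Hypothetical tagged record; flag says which table the row came from.
    static final class Tagged {
        final String flag;
        final String orderId;
        final int amount;
        final String pname;

        Tagged(String flag, String orderId, int amount, String pname) {
            this.flag = flag;
            this.orderId = orderId;
            this.amount = amount;
            this.pname = pname;
        }
    }

    static List<String> join(List<String[]> orders, List<String[]> products) {
        // "Map" side: key every row by pid and tag it with its source.
        Map<String, List<Tagged>> byPid = new TreeMap<>();
        for (String[] o : orders) {
            byPid.computeIfAbsent(o[1], k -> new ArrayList<>())
                    .add(new Tagged("order", o[0], Integer.parseInt(o[2]), ""));
        }
        for (String[] p : products) {
            byPid.computeIfAbsent(p[0], k -> new ArrayList<>())
                    .add(new Tagged("pd", "", 0, p[1]));
        }

        // "Reduce" side: within one pid group, attach the product name to every order row.
        List<String> out = new ArrayList<>();
        for (List<Tagged> group : byPid.values()) {
            String pname = "";
            List<Tagged> orderRows = new ArrayList<>();
            for (Tagged t : group) {
                if ("order".equals(t.flag)) {
                    orderRows.add(t);
                } else {
                    pname = t.pname;
                }
            }
            for (Tagged t : orderRows) {
                out.add(t.orderId + "\t" + pname + "\t" + t.amount);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String[]> orders = Arrays.asList(
                new String[] {"1001", "01", "1"}, new String[] {"1002", "02", "2"},
                new String[] {"1004", "01", "4"});
        List<String[]> products = Arrays.asList(
                new String[] {"01", "Xiaomi"}, new String[] {"02", "Huawei"});
        join(orders, products).forEach(System.out::println);
    }
}
```

Because all rows for one pid meet in a single group, a pid with very many orders concentrates work on one reducer.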

package com.atguigu.mapreduce.table; import java.io.DataInput; import java.io.DataOutput; import java.io.IOException; import org.apache.hadoop.io.Writable;

public class TableBean implements Writable {

private String order_id; // order id

private String p_id; // product id

private int amount; // product quantity

private String pname; // product name

private String flag; // marks which table the record came from

public TableBean() { super(); }

public TableBean(String order_id, String p_id, int amount, String pname, String flag) {

super();

this.order_id = order_id; this.p_id = p_id; this.amount = amount; this.pname = pname; this.flag = flag; }

public String getFlag() { return flag; }

public void setFlag(String flag) { this.flag = flag; }

public String getOrder_id() { return order_id; }

public void setOrder_id(String order_id) { this.order_id = order_id; }

public String getP_id() { return p_id; }

public void setP_id(String p_id) { this.p_id = p_id; }

public int getAmount() { return amount; }

public void setAmount(int amount) { this.amount = amount; }

public String getPname() { return pname; }

public void setPname(String pname) { this.pname = pname; }

@Override public void write(DataOutput out) throws IOException { out.writeUTF(order_id); out.writeUTF(p_id); out.writeInt(amount); out.writeUTF(pname); out.writeUTF(flag); }

@Override public void readFields(DataInput in) throws IOException { this.order_id = in.readUTF(); this.p_id = in.readUTF(); this.amount = in.readInt(); this.pname = in.readUTF(); this.flag = in.readUTF(); }

@Override public String toString() { return order_id + "\t" + pname + "\t" + amount + "\t" ; } } |

package com.atguigu.mapreduce.table; import java.io.IOException; import org.apache.hadoop.io.LongWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Mapper; import org.apache.hadoop.mapreduce.lib.input.FileSplit;

public class TableMapper extends Mapper<LongWritable, Text, Text, TableBean>{

String name; TableBean bean = new TableBean(); Text k = new Text();

@Override protected void setup(Context context) throws IOException, InterruptedException {

// 1 Get the input split
FileSplit split = (FileSplit) context.getInputSplit();

// 2 Get the name of the input file
name = split.getPath().getName(); }

@Override protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

// 1 Get the input line
String line = value.toString();

// 2 Handle each source file separately
if (name.startsWith("order")) { // order table

// 2.1 Split the fields
String[] fields = line.split("\t");

// 2.2 Populate the bean
bean.setOrder_id(fields[0]); bean.setP_id(fields[1]); bean.setAmount(Integer.parseInt(fields[2])); bean.setPname(""); bean.setFlag("order");

k.set(fields[1]); } else { // product table

// 2.3 Split the fields
String[] fields = line.split("\t");

// 2.4 Populate the bean
bean.setP_id(fields[0]); bean.setPname(fields[1]); bean.setFlag("pd"); bean.setAmount(0); bean.setOrder_id("");

k.set(fields[0]); }

// 3 Write out
context.write(k, bean); } }

package com.atguigu.mapreduce.table; import java.io.IOException; import java.util.ArrayList; import org.apache.commons.beanutils.BeanUtils; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Reducer;

public class TableReducer extends Reducer<Text, TableBean, TableBean, NullWritable> {

@Override protected void reduce(Text key, Iterable<TableBean> values, Context context) throws IOException, InterruptedException {

// 1 Collection that holds the order records
ArrayList<TableBean> orderBeans = new ArrayList<>();

// 2 Bean that holds the product record
TableBean pdBean = new TableBean();

for (TableBean bean : values) {

if ("order".equals(bean.getFlag())) { // order table

// Copy each incoming order record into the collection
TableBean orderBean = new TableBean();

try { BeanUtils.copyProperties(orderBean, bean); } catch (Exception e) { e.printStackTrace(); }

orderBeans.add(orderBean); } else { // product table

try {
// Copy the incoming product record into memory
BeanUtils.copyProperties(pdBean, bean); } catch (Exception e) { e.printStackTrace(); } } }

// 3 Join the tables
for(TableBean bean:orderBeans){

bean.setPname (pdBean.getPname());

// 4 Write out
context.write(bean, NullWritable.get()); } } }

package com.atguigu.mapreduce.table; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class TableDriver {

public static void main(String[] args) throws Exception {

// 0 Adjust the paths to match your own machine
args = new String[]{"e:/input/inputtable","e:/output1"};

// 1 Get the configuration and the job instance
Configuration configuration = new Configuration(); Job job = Job.getInstance(configuration);

// 2 Specify the local path of the jar for this program
job.setJarByClass(TableDriver.class);

// 3 Specify the Mapper and Reducer classes for this job
job.setMapperClass(TableMapper.class); job.setReducerClass(TableReducer.class);

// 4 Specify the kv types of the Mapper output
job.setMapOutputKeyClass(Text.class); job.setMapOutputValueClass(TableBean.class);

// 5 Specify the kv types of the final output
job.setOutputKeyClass(TableBean.class); job.setOutputValueClass(NullWritable.class);

// 6 Specify the input directory and the output directory of the job
FileInputFormat.setInputPaths(job, new Path(args[0])); FileOutputFormat.setOutputPath(job, new Path(args[1]));

// 7 Submit the job configuration and the jar containing its classes to yarn
boolean result = job.waitForCompletion(true); System.exit(result ? 0 : 1); } }

1001 小米 1 1001 小米 1 1002 华为 2 1002 华为 2 1003 格力 3 1003 格力 3 |

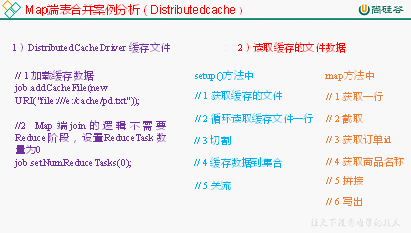

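3.7.3 Map Join

Question: when too many tables are processed on the Reduce side, data skew arises very easily. What can be done?

Cache the tables on the Map side and apply the join logic there in advance. This moves work to the Map side, reduces the data pressure on the Reduce side, and reduces data skew as far as possible.

(1) In the setup phase of the Mapper, read the file into a cache collection.

job.addCacheFile(new URI("file:///e:/cache/pd.txt"));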
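The map-side join idea reduces to a lookup table held in memory. The following plain-Java sketch assumes tab-separated lines in the layouts shown in this section (`pid<TAB>pname` for products, `id<TAB>pid<TAB>amount` for orders); `MapJoinSketch`, `loadProducts`, and `joinLine` are hypothetical names, and the product names are romanized stand-ins.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java sketch (no Hadoop): join each order line against an in-memory product map.
class MapJoinSketch {

    // Corresponds to the setup step: load the small table into a map once.
    static Map<String, String> loadProducts(List<String> productLines) {
        Map<String, String> pdMap = new HashMap<>();
        for (String line : productLines) {
            String[] fields = line.split("\t");
            pdMap.put(fields[0], fields[1]);
        }
        return pdMap;
    }

    // Corresponds to the map step: look up the product name for one order line.
    static String joinLine(String orderLine, Map<String, String> pdMap) {
        String[] fields = orderLine.split("\t");
        return fields[0] + "\t" + pdMap.get(fields[1]) + "\t" + fields[2];
    }

    public static void main(String[] args) {
        Map<String, String> pdMap = loadProducts(Arrays.asList("01\tXiaomi", "02\tHuawei"));
        for (String order : Arrays.asList("1001\t01\t1", "1002\t02\t2")) {
            System.out.println(joinLine(order, pdMap));
        }
    }
}
```

No grouping by key is needed, so the join finishes without a Reduce phase. The approach only fits when the cached table is small enough to hold in memory.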

3.7.4 Map Join Hands-on Example

id | pid | amount |

1001 | 01 | 1 |

1002 | 02 | 2 |

1003 | 03 | 3 |

1004 | 01 | 4 |

1005 | 02 | 5 |

1006 | 03 | 6 |

pid | pname |

01 | 小米 |

02 | 华为 |

03 | 格力 |

id | pname | amount |

1001 | 小米 | 1 |

1004 | 小米 | 4 |

1002 | 华为 | 2 |

1005 | 华为 | 5 |

1003 | 格力 | 3 |

1006 | 格力 | 6 |

package test; import java.net.URI; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class DistributedCacheDriver {

public static void main(String[] args) throws Exception {

// 0 Adjust the paths to match your own machine
args = new String[]{"e:/input/inputtable2", "e:/output1"};

// 1 Get the job instance
Configuration configuration = new Configuration(); Job job = Job.getInstance(configuration);

// 2 Set the jar by the driver class
job.setJarByClass(DistributedCacheDriver.class);

// 3 Set the mapper class
job.setMapperClass(DistributedCacheMapper.class);

// 4 Set the final output types
job.setOutputKeyClass(Text.class); job.setOutputValueClass(NullWritable.class);

// 5 Set the input and output paths
FileInputFormat.setInputPaths(job, new Path(args[0])); FileOutputFormat.setOutputPath(job, new Path(args[1]));

// 6 Load the cache file
job.addCacheFile(new URI("file:///e:/input/inputcache/pd.txt"));

// 7 A map-side join needs no Reduce phase, so set the number of ReduceTasks to 0
job.setNumReduceTasks(0);

// 8 Submit the job
boolean result = job.waitForCompletion(true); System.exit(result ? 0 : 1); } }

package test; import java.io.BufferedReader; import java.io.FileInputStream; import java.io.IOException; import java.io.InputStreamReader; import java.net.URI; import java.util.HashMap; import java.util.Map; import org.apache.commons.lang.StringUtils; import org.apache.hadoop.io.LongWritable; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Mapper;

public class DistributedCacheMapper extends Mapper<LongWritable, Text, Text, NullWritable>{

Map<String, String> pdMap = new HashMap<>();

@Override protected void setup(Mapper<LongWritable, Text, Text, NullWritable>.Context context) throws IOException, InterruptedException {

// 1 Get the cached file
URI[] cacheFiles = context.getCacheFiles(); String path = cacheFiles[0].getPath().toString();

BufferedReader reader = new BufferedReader(new InputStreamReader(new FileInputStream(path), "UTF-8"));

String line; while(StringUtils.isNotEmpty(line = reader.readLine())){

// 2 Split the fields
String[] fields = line.split("\t");

// 3 Cache the data in the map
pdMap.put(fields[0], fields[1]); }

// 4 Close the stream
reader.close(); }

Text k = new Text();

@Override protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

// 1 Get one line
String line = value.toString();

// 2 Split the fields
String[] fields = line.split("\t");

// 3 Get the product id
String pId = fields[1];

// 4 Get the product name
String pdName = pdMap.get(pId);

// 5 Concatenate
k.set(line + "\t"+ pdName);

// 6 Write out
context.write(k, NullWritable.get()); } }

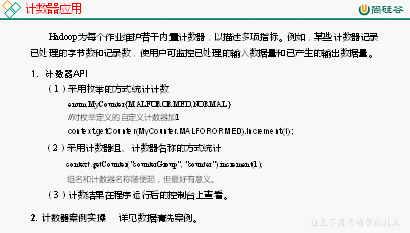

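3.8 Counters

3.9 Data Cleaning (ETL)

Before the core business MapReduce program runs, the data usually has to be cleaned first to remove records that do not meet the requirements. Cleaning usually needs only a Mapper and no Reducer.

3.9.1 Data Cleaning Example: Simple Parsing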

package com.atguigu.mapreduce.weblog; import java.io.IOException; import org.apache.hadoop.io.LongWritable; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Mapper;

public class LogMapper extends Mapper<LongWritable, Text, Text, NullWritable>{

Text k = new Text();

@Override protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

// 1 Get one line
String line = value.toString();

// 2 Parse the log line
boolean result = parseLog(line,context);

// 3 Skip invalid lines
if (!result) { return; }

// 4 Set the key
k.set(line);

// 5 Write out
context.write(k, NullWritable.get()); }

// Parse the log line
private boolean parseLog(String line, Context context) {

// 1 Split the fields
String[] fields = line.split(" ");

// 2 A line with more than 11 fields is valid
if (fields.length > 11) {

// Increment the counter
context.getCounter("map", "true").increment(1); return true; }else { context.getCounter("map", "false").increment(1); return false; } } }

package com.atguigu.mapreduce.weblog; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class LogDriver {

public static void main(String[] args) throws Exception {

// Set the input and output paths to match the actual paths on your machine
args = new String[] { "e:/input/inputlog", "e:/output1" };

// 1 Get the job instance
Configuration conf = new Configuration(); Job job = Job.getInstance(conf);

// 2 Set the jar by the driver class
job.setJarByClass(LogDriver.class);

// 3 Set the mapper class
job.setMapperClass(LogMapper.class);

// 4 Set the final output types
job.setOutputKeyClass(Text.class); job.setOutputValueClass(NullWritable.class);

// Set the number of ReduceTasks to 0
job.setNumReduceTasks(0);

// 5 Set the input and output paths
FileInputFormat.setInputPaths(job, new Path(args[0])); FileOutputFormat.setOutputPath(job, new Path(args[1]));

// 6 Submit the job
job.waitForCompletion(true); } }

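3.9.2 Data Cleaning Example: Detailed Parsing

Identify and split the fields of each Web access log record, remove the invalid records, and output the filtered data according to the cleaning rules.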
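One possible set of cleaning rules can be sketched in plain Java: a record is kept only if it has more than 11 space-separated fields and its HTTP status (assumed here to be the field at index 8) is below 400. `LogCleanSketch` and `isValid` are hypothetical names, the sample log lines are made up, and treating a non-numeric status as invalid is an added assumption.

```java
// Plain-Java sketch (no Hadoop): decide whether one access-log line passes the cleaning rules.
class LogCleanSketch {

    static boolean isValid(String line) {
        String[] fields = line.split(" ");
        // Rule 1: too few fields means the record is malformed.
        if (fields.length <= 11) {
            return false;
        }
        // Rule 2: a status of 400 or above is an HTTP error and is dropped.
        try {
            return Integer.parseInt(fields[8]) < 400;
        } catch (NumberFormatException e) {
            // Assumption: a non-numeric status field is treated as invalid.
            return false;
        }
    }

    public static void main(String[] args) {
        // Hypothetical sample lines, for illustration only.
        String ok = "192.0.2.1 - - [18/Sep/2013:06:49:18 +0000] \"GET /index.html HTTP/1.1\" 200 512 \"-\" \"Mozilla/4.0 (compatible;)\"";
        String notFound = ok.replace(" 200 ", " 404 ");
        System.out.println(isValid(ok));
        System.out.println(isValid(notFound));
        System.out.println(isValid("too short"));
    }
}
```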

package com.atguigu.mapreduce.log;

public class LogBean {

private String remote_addr; // client IP address

private String remote_user; // client user name; "-" means it is not provided

private String time_local; // access time and time zone

private String request; // requested URL and HTTP protocol

private String status; // request status; 200 means success

private String body_bytes_sent; // size of the body sent to the client

private String http_referer; // page from which the request was linked

private String http_user_agent; // information about the client browser

private boolean valid = true; // whether the record is valid

public String getRemote_addr() { return remote_addr; }

public void setRemote_addr(String remote_addr) { this.remote_addr = remote_addr; }

public String getRemote_user() { return remote_user; }

public void setRemote_user(String remote_user) { this.remote_user = remote_user; }

public String getTime_local() { return time_local; }

public void setTime_local(String time_local) { this.time_local = time_local; }

public String getRequest() { return request; }

public void setRequest(String request) { this.request = request; }

public String getStatus() { return status; }

public void setStatus(String status) { this.status = status; }

public String getBody_bytes_sent() { return body_bytes_sent; }

public void setBody_bytes_sent(String body_bytes_sent) { this.body_bytes_sent = body_bytes_sent; }

public String getHttp_referer() { return http_referer; }

public void setHttp_referer(String http_referer) { this.http_referer = http_referer; }

public String getHttp_user_agent() { return http_user_agent; }

public void setHttp_user_agent(String http_user_agent) { this.http_user_agent = http_user_agent; }

public boolean isValid() { return valid; }

public void setValid(boolean valid) { this.valid = valid; }

@Override public String toString() {

StringBuilder sb = new StringBuilder(); sb.append(this.valid); sb.append("\001").append(this.remote_addr); sb.append("\001").append(this.remote_user); sb.append("\001").append(this.time_local); sb.append("\001").append(this.request); sb.append("\001").append(this.status); sb.append("\001").append(this.body_bytes_sent); sb.append("\001").append(this.http_referer); sb.append("\001").append(this.http_user_agent);

return sb.toString(); } } |

package com.atguigu.mapreduce.log; import java.io.IOException; import org.apache.hadoop.io.LongWritable; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Mapper;

public class LogMapper extends Mapper<LongWritable, Text, Text, NullWritable>{ Text k = new Text();

@Override protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

// 1 Get one line
String line = value.toString();

// 2 Parse the log line and check whether it is valid
LogBean bean = parseLog(line);

if (!bean.isValid()) { return; }

k.set(bean.toString());

// 3 Write out
context.write(k, NullWritable.get()); }

// Parse the log line
private LogBean parseLog(String line) {

LogBean logBean = new LogBean();

// 1 Split the fields
String[] fields = line.split(" ");

if (fields.length > 11) {

// 2 Populate the bean
logBean.setRemote_addr(fields[0]); logBean.setRemote_user(fields[1]); logBean.setTime_local(fields[3].substring(1)); logBean.setRequest(fields[6]); logBean.setStatus(fields[8]); logBean.setBody_bytes_sent(fields[9]); logBean.setHttp_referer(fields[10]);

if (fields.length > 12) { logBean.setHttp_user_agent(fields[11] + " "+ fields[12]); }else { logBean.setHttp_user_agent(fields[11]); }

// A status of 400 or above is an HTTP error
if (Integer.parseInt(logBean.getStatus()) >= 400) { logBean.setValid(false); } }else { logBean.setValid(false); }

return logBean; } }

package com.atguigu.mapreduce.log; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class LogDriver { public static void main(String[] args) throws Exception {

// 1 Get the job instance
Configuration conf = new Configuration(); Job job = Job.getInstance(conf);

// 2 Set the jar by the driver class
job.setJarByClass(LogDriver.class);

// 3 Set the mapper class
job.setMapperClass(LogMapper.class);

// 4 Set the final output types
job.setOutputKeyClass(Text.class); job.setOutputValueClass(NullWritable.class);

// 5 Set the input and output paths
FileInputFormat.setInputPaths(job, new Path(args[0])); FileOutputFormat.setOutputPath(job, new Path(args[1]));

// 6 Submit the job
job.waitForCompletion(true); } }

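3.10 MapReduce Development Summary

Chapter 4 Hadoop Data Compression

4.1 Overview

4.2 Compression Codecs Supported by MR

Compression format | Bundled with Hadoop? | Algorithm | File extension | Splittable? | Does the program need changes after switching to this format? |

DEFLATE | Yes, usable directly | DEFLATE | .deflate | No | No, handled the same as plain text |

Gzip | Yes, usable directly | DEFLATE | .gz | No | No, handled the same as plain text |

bzip2 | Yes, usable directly | bzip2 | .bz2 | Yes | No, handled the same as plain text |

LZO | No, must be installed | LZO | .lzo | Yes | An index must be built and the input format must be specified |

Snappy | No, must be installed | Snappy | .snappy | No | No, handled the same as plain text |

To support multiple compression and decompression algorithms, Hadoop introduces codecs, as listed in the following table.

Compression format | Codec class |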

DEFLATE | org.apache.hadoop.io.compress.DefaultCodec |

gzip | org.apache.hadoop.io.compress.GzipCodec |

bzip2 | org.apache.hadoop.io.compress.BZip2Codec |

LZO | com.hadoop.compression.lzo.LzopCodec |

Snappy | org.apache.hadoop.io.compress.SnappyCodec |

Compression algorithm | Original file size | Compressed file size | Compression speed | Decompression speed |

gzip | 8.3GB | 1.8GB | 17.5MB/s | 58MB/s |

bzip2 | 8.3GB | 1.1GB | 2.4MB/s | 9.5MB/s |

LZO | 8.3GB | 2.9GB | 49.3MB/s | 74.6MB/s |

http://google.github.io/snappy/

On a single core of a Core i7 processor in 64-bit mode, Snappy compresses at about 250 MB/sec or more and decompresses at about 500 MB/sec or more.

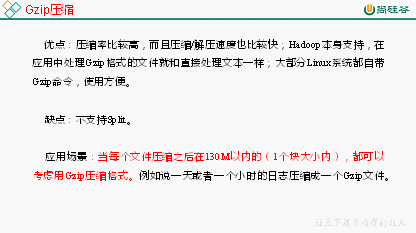

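4.3 Choosing a Compression Format

4.3.1 Gzip Compression

4.3.2 Bzip2 Compression

4.3.3 Lzo Compression

4.3.4 Snappy Compression

4.4 Choosing Where to Compress

Compression can be enabled at any stage of a MapReduce job, as shown in Figure 4-22.

Figure 4-22 MapReduce data compression

4.5 Compression Parameters

To enable compression in Hadoop, configure the following parameters:

Table 4-10 Configuration parameters

Parameter | Default | Stage | Recommendation |

io.compression.codecs (configured in core-site.xml) | org.apache.hadoop.io.compress.DefaultCodec, org.apache.hadoop.io.compress.GzipCodec, org.apache.hadoop.io.compress.BZip2Codec | Input compression | Hadoop uses the file extension to determine whether a codec is supported |

mapreduce.map.output.compress (configured in mapred-site.xml) | false | Mapper output | Set this parameter to true to enable compression |

mapreduce.map.output.compress.codec (configured in mapred-site.xml) | org.apache.hadoop.io.compress.DefaultCodec | Mapper output | Companies mostly use the LZO or Snappy codec to compress data at this stage |

mapreduce.output.fileoutputformat.compress (configured in mapred-site.xml) | false | Reducer output | Set this parameter to true to enable compression |

mapreduce.output.fileoutputformat.compress.codec (configured in mapred-site.xml) | org.apache.hadoop.io.compress.DefaultCodec | Reducer output | Use a standard tool or codec, such as gzip or bzip2 |

mapreduce.output.fileoutputformat.compress.type (configured in mapred-site.xml) | RECORD | Reducer output | Compression type used for SequenceFile output: one of NONE, RECORD, or BLOCK |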

4.6 Compression Hands-on Examples

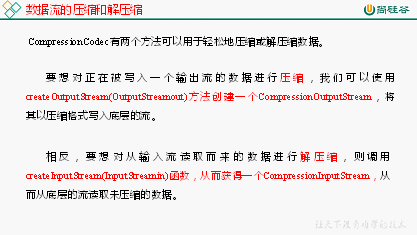

4.6.1 Compressing and Decompressing Data Streams

Test the following compression formats:

Table 4-11

DEFLATE | org.apache.hadoop.io.compress.DefaultCodec |

gzip | org.apache.hadoop.io.compress.GzipCodec |

bzip2 | org.apache.hadoop.io.compress.BZip2Codec |
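The stream pattern behind these codecs (wrap the raw output stream in a compressing stream, copy the bytes, then close) can be tried with the JDK alone. The sketch below deliberately swaps in `java.util.zip.GZIPOutputStream` and `GZIPInputStream` instead of a Hadoop `CompressionCodec`, so it illustrates the pattern rather than the Hadoop API. `GzipRoundTrip` is a hypothetical class name.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// JDK-only sketch: compress and decompress a byte array through wrapped streams.
class GzipRoundTrip {

    static byte[] compress(byte[] raw) {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        // Closing the GZIP stream flushes the trailer, so close it before reading the sink.
        try (GZIPOutputStream gz = new GZIPOutputStream(sink)) {
            gz.write(raw);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return sink.toByteArray();
    }

    static byte[] decompress(byte[] packed) {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        try (GZIPInputStream gz = new GZIPInputStream(new ByteArrayInputStream(packed))) {
            byte[] buffer = new byte[4096];
            int n;
            // Copy until the decompressing stream reports end of data.
            while ((n = gz.read(buffer)) != -1) {
                sink.write(buffer, 0, n);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return sink.toByteArray();
    }

    public static void main(String[] args) {
        byte[] raw = "hello hadoop hello hadoop hello hadoop".getBytes(StandardCharsets.UTF_8);
        byte[] packed = compress(raw);
        byte[] restored = decompress(packed);
        System.out.println(raw.length + " bytes -> " + packed.length + " bytes compressed");
        System.out.println(new String(restored, StandardCharsets.UTF_8));
    }
}
```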

package com.atguigu.mapreduce.compress;

import java.io.File;

import java.io.FileInputStream;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

import org.apache.hadoop.io.compress.CompressionCodec;

import org.apache.hadoop.io.compress.CompressionCodecFactory;

import org.apache.hadoop.io.compress.CompressionInputStream;

import org.apache.hadoop.io.compress.CompressionOutputStream;

import org.apache.hadoop.util.ReflectionUtils;

public class TestCompress {

public static void main(String[] args) throws Exception {

compress("e:/hello.txt","org.apache.hadoop.io.compress.BZip2Codec");

// decompress("e:/hello.txt.bz2");

}

// 1. Compress

private static void compress(String filename, String method) throws Exception {

// (1) Get the input stream

FileInputStream fis = new FileInputStream(new File(filename));

Class codecClass = Class.forName(method);

CompressionCodec codec = (CompressionCodec) ReflectionUtils.newInstance(codecClass, new Configuration());

// (2) Get the output stream

FileOutputStream fos = new FileOutputStream(new File(filename + codec.getDefaultExtension()));

CompressionOutputStream cos = codec.createOutputStream(fos);

// (3) Copy between the streams

IOUtils.copyBytes(fis, cos, 1024*1024*5, false);

// (4) Release resources

cos.close();

fos.close();

fis.close();

}

// 2. Decompress

private static void decompress(String filename) throws FileNotFoundException, IOException {

// (0) Check whether the file can be decompressed

CompressionCodecFactory factory = new CompressionCodecFactory(new Configuration());

CompressionCodec codec = factory.getCodec(new Path(filename));

if (codec == null) {

System.out.println("cannot find codec for file " + filename);

return;

}

// (1) Get the input stream

CompressionInputStream cis = codec.createInputStream(new FileInputStream(new File(filename)));

// (2) Get the output stream

FileOutputStream fos = new FileOutputStream(new File(filename + ".decoded"));

// (3) Copy between the streams

IOUtils.copyBytes(cis, fos, 1024*1024*5, false);

// (4) Release resources

cis.close();

fos.close();

}

}

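4.6.2 Compressing Map Output

Even when the input and output files of a MapReduce job are uncompressed, the intermediate output of the Map tasks can still be compressed. This output is written to disk and transferred over the network to the Reduce nodes, so compressing it can improve performance considerably. Only two properties need to be set, as the code below shows.

1. The Hadoop source provided with this course supports the following compression codecs: BZip2Codec and DefaultCodec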

package com.atguigu.mapreduce.compress; import java.io.IOException; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.io.compress.BZip2Codec; import org.apache.hadoop.io.compress.CompressionCodec; import org.apache.hadoop.io.compress.GzipCodec; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCountDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration configuration = new Configuration();

// Enable compression of the map output
configuration.setBoolean("mapreduce.map.output.compress", true);

// Set the codec used for the map output
configuration.setClass("mapreduce.map.output.compress.codec", BZip2Codec.class, CompressionCodec.class);

Job job = Job.getInstance(configuration);

job.setJarByClass(WordCountDriver.class);

job.setMapperClass(WordCountMapper.class); job.setReducerClass(WordCountReducer.class);

job.setMapOutputKeyClass(Text.class); job.setMapOutputValueClass(IntWritable.class);

job.setOutputKeyClass(Text.class); job.setOutputValueClass(IntWritable.class);

FileInputFormat.setInputPaths(job, new Path(args[0])); FileOutputFormat.setOutputPath(job, new Path(args[1]));

boolean result = job.waitForCompletion(true);

System.exit(result ? 0 : 1); } }

2. The Mapper remains unchanged

package com.atguigu.mapreduce.compress; import java.io.IOException; import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.LongWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Mapper;

public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable>{

Text k = new Text(); IntWritable v = new IntWritable(1);

@Override protected void map(LongWritable key, Text value, Context context)throws IOException, InterruptedException {

// 1 Get one line
String line = value.toString();

// 2 Split into words
String[] words = line.split(" ");

// 3 Write out each word
for(String word:words){ k.set(word); context.write(k, v); } } }

3. The Reducer remains unchanged

package com.atguigu.mapreduce.compress; import java.io.IOException; import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Reducer;

public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable>{

IntWritable v = new IntWritable();

@Override protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

int sum = 0;

// 1 Sum the counts
for(IntWritable value:values){ sum += value.get(); }

v.set(sum);

// 2 Write out
context.write(key, v); } }

4.6.3 Compressing Reduce Output

This example builds on the WordCount case.

1. Modify the driver

package com.atguigu.mapreduce.compress;

import java.io.IOException;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.compress.BZip2Codec;
import org.apache.hadoop.io.compress.DefaultCodec;
import org.apache.hadoop.io.compress.GzipCodec;
import org.apache.hadoop.io.compress.Lz4Codec;
import org.apache.hadoop.io.compress.SnappyCodec;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCountDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

        Configuration configuration = new Configuration();

        Job job = Job.getInstance(configuration);

        job.setJarByClass(WordCountDriver.class);

        job.setMapperClass(WordCountMapper.class);
        job.setReducerClass(WordCountReducer.class);

        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Enable compression of the reduce output
        FileOutputFormat.setCompressOutput(job, true);

        // Choose the compression codec
        FileOutputFormat.setOutputCompressorClass(job, BZip2Codec.class);
        // FileOutputFormat.setOutputCompressorClass(job, GzipCodec.class);
        // FileOutputFormat.setOutputCompressorClass(job, DefaultCodec.class);

        boolean result = job.waitForCompletion(true);

        // Exit with 0 on success and 1 on failure
        System.exit(result ? 0 : 1);
    }
}

2. The Mapper and Reducer remain unchanged (see 4.6.2)
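
To get a feel for what compressing the reduce output does without starting a cluster, the following sketch writes WordCount-style records through the JDK's `GZIPOutputStream` and reads them back. It is only an illustration: `GZIPOutputStream` stands in for Hadoop's `GzipCodec`, and the names `CompressedOutputSketch`, `compress`, and `decompress` are hypothetical and not part of Hadoop.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Hypothetical stand-in for a compressed reduce output file; not Hadoop code.
class CompressedOutputSketch {

    // Compress the text a reducer would otherwise have written as plain records.
    static byte[] compress(String records) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (GZIPOutputStream gzip = new GZIPOutputStream(bytes)) {
            gzip.write(records.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Decompress the bytes back into the original records.
    static String decompress(byte[] compressed) {
        try (GZIPInputStream gzip = new GZIPInputStream(new ByteArrayInputStream(compressed))) {
            return new String(gzip.readAllBytes(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < 1000; i++) {
            sb.append("atguigu\t2\n");
        }
        String records = sb.toString();
        byte[] compressed = compress(records);
        System.out.println("plain bytes: " + records.length());
        System.out.println("compressed bytes: " + compressed.length);
        System.out.println("round trip ok: " + records.equals(decompress(compressed)));
    }
}
```

Highly repetitive records such as these compress very well; real job output usually compresses less, so the ratio printed here should not be read as typical.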

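Chapter 5 YARN Resource Scheduler

YARN is a resource scheduling platform that supplies server compute resources to running programs. It plays the role of a distributed operating system, and compute programs such as MapReduce are the applications that run on top of it.

5.1 YARN Basic Architecture

YARN consists mainly of the ResourceManager, NodeManager, ApplicationMaster, and Container components, as shown in Figure 4-23.

Figure 4-23 YARN basic architecture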

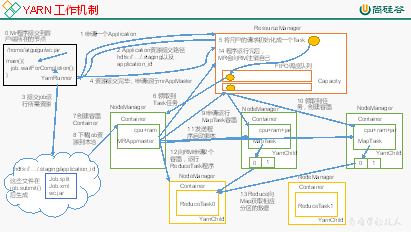

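5.3 YARN Working Mechanism

1. The YARN execution flow is shown in Figure 4-24.

Figure 4-24 YARN working mechanism

2. The working mechanism in detail

(1) The MR program is submitted on the node where the client runs.

(2) YarnRunner asks the ResourceManager (RM) for an Application.

(3) The RM returns the application's resource path to YarnRunner.

(4) The client uploads the resources the job needs to HDFS.

(5) Once the resources are uploaded, the client requests that the MRAppMaster be run.

(6) The RM initializes the user's request as a Task.

(7) One of the NodeManagers picks up the Task.

(8) That NodeManager creates a Container and launches the MRAppMaster in it.

(9) The Container copies the resources from HDFS to local storage.

(10) The MRAppMaster asks the RM for resources to run the MapTasks.

(11) The RM assigns the MapTasks to two other NodeManagers, each of which picks up its task and creates a container.

(12) The MRAppMaster sends the launch script to the two NodeManagers that received tasks. Each of them starts a MapTask, which partitions and sorts its data.

(13) After all MapTasks have finished, the MRAppMaster asks the RM for containers to run the ReduceTasks.

(14) Each ReduceTask fetches the data for its partition from the MapTasks.

(15) When the program has finished, the MRAppMaster asks the RM to unregister it.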

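5.4 The Full Job Submission Process

1. Job submission as seen by YARN, as shown in Figure 4-25.

Figure 4-25 Job submission process: YARN

The full job submission process in detail

(1) Job submission

Step 1: The client calls job.waitForCompletion to submit the MapReduce job to the cluster.

Step 2: The client asks the RM for a job id.

Step 3: The RM returns the resource submission path for the job and the job id to the client.

Step 4: The client uploads the jar, the split information, and the configuration files to that resource submission path.

Step 5: Once the resources are uploaded, the client asks the RM to run the MRAppMaster.

(2) Job initialization

Step 6: When the RM receives the client's request, it adds the job to the Capacity Scheduler.

Step 7: An idle NM picks up the job.

Step 8: That NM creates a Container and launches the MRAppMaster in it.

Step 9: The resources submitted by the client are downloaded to local storage.

(3) Task assignment

Step 10: The MRAppMaster asks the RM for resources to run multiple MapTasks.

Step 11: The RM assigns the MapTasks to two other NodeManagers, each of which picks up its task and creates a container.

(4) Task execution

Step 12: The MRAppMaster sends the launch script to the two NodeManagers that received tasks. Each of them starts a MapTask, which partitions and sorts its data.

Step 13: After all MapTasks have finished, the MRAppMaster asks the RM for containers to run the ReduceTasks.

Step 14: Each ReduceTask fetches the data for its partition from the MapTasks.

Step 15: When the program has finished, the MRAppMaster asks the RM to unregister it.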

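(5) Progress and status updates

Tasks in YARN report their progress and status (including counters) to the application master. The client polls the application master for progress once per second (the interval is set with mapreduce.client.progressmonitor.pollinterval) and displays the result to the user.

(6) Job completion

Besides requesting progress from the application master, the client checks every 5 seconds whether the job has finished while it is blocked in waitForCompletion(). The interval is set with mapreduce.client.completion.pollinterval. Once the job has completed, the application master and its Containers clean up their working state. The job's information is stored by the job history server so that users can inspect it later.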
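
The completion check described above is a fixed-interval polling loop. The sketch below shows that pattern using only the JDK; it is not the Hadoop client code, and `CompletionPollSketch`, `pollUntilComplete`, and the simulated job are hypothetical names chosen for illustration.

```java
import java.util.function.BooleanSupplier;

// Hypothetical illustration of fixed-interval completion polling; not Hadoop code.
class CompletionPollSketch {

    // Checks isComplete, sleeping pollIntervalMs between checks, until it reports true.
    // Returns how many checks were made.
    static int pollUntilComplete(BooleanSupplier isComplete, long pollIntervalMs) {
        int checks = 1;
        while (!isComplete.getAsBoolean()) {
            try {
                Thread.sleep(pollIntervalMs);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for the job", e);
            }
            checks++;
        }
        return checks;
    }

    public static void main(String[] args) {
        // Simulated job that reports completion on the fourth check.
        int[] checksRemaining = {3};
        int checks = pollUntilComplete(() -> checksRemaining[0]-- <= 0, 10L);
        System.out.println("job finished after " + checks + " checks");
    }
}
```

A shorter interval notices completion sooner at the cost of more requests; the example uses 10 ms only so that it finishes quickly.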

2. Job submission as seen by MapReduce, as shown in Figure 4-26.

Figure 4-26 Job submission process: MapReduce

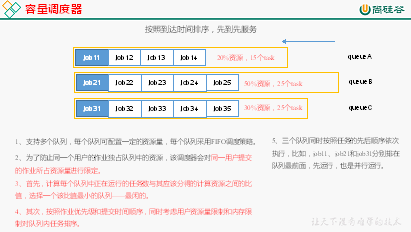

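5.5 Resource Schedulers

Hadoop currently offers three main job schedulers: FIFO, Capacity Scheduler, and Fair Scheduler. The default resource scheduler in Hadoop 2.7.2 is the Capacity Scheduler.

The setting is defined in yarn-default.xml: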

<property>

<description>The class to use as the resource scheduler.</description>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>

</property>

1. First-in, first-out scheduler (FIFO), as shown in Figure 4-27

Figure 4-27 FIFO scheduler


2. Capacity Scheduler, as shown in Figure 4-28

Figure 4-28 Capacity Scheduler


3. Fair Scheduler, as shown in Figure 4-29

Figure 4-29 Fair Scheduler
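
The difference between first-in, first-out and fair allocation can be seen in a toy model. The sketch below is an assumption-laden illustration and not YARN's implementation: it ignores queues, priorities, and preemption, treats resources as a single count of containers, and uses a simple max-min fair split. `SchedulerSketch`, `fifo`, and `fair` are hypothetical names.

```java
import java.util.Arrays;

// Toy allocation model; not the YARN scheduler code.
class SchedulerSketch {

    // FIFO: serve jobs strictly in submission order until capacity runs out.
    static int[] fifo(int capacity, int[] demands) {
        int[] alloc = new int[demands.length];
        int free = capacity;
        for (int i = 0; i < demands.length; i++) {
            alloc[i] = Math.min(demands[i], free);
            free -= alloc[i];
        }
        return alloc;
    }

    // Max-min fair: visit jobs from smallest to largest demand and give each
    // an even share of what is still free, capped at its demand.
    // Integer division may leave a few containers unallocated.
    static int[] fair(int capacity, int[] demands) {
        int n = demands.length;
        Integer[] order = new Integer[n];
        for (int i = 0; i < n; i++) {
            order[i] = i;
        }
        Arrays.sort(order, (a, b) -> Integer.compare(demands[a], demands[b]));

        int[] alloc = new int[n];
        int free = capacity;
        for (int rank = 0; rank < n; rank++) {
            int job = order[rank];
            int evenShare = free / (n - rank);
            alloc[job] = Math.min(demands[job], evenShare);
            free -= alloc[job];
        }
        return alloc;
    }

    public static void main(String[] args) {
        int[] demands = {10, 4, 2}; // containers wanted by jobs 0, 1, 2 in submission order
        System.out.println("FIFO: " + Arrays.toString(fifo(12, demands))); // [10, 2, 0]
        System.out.println("Fair: " + Arrays.toString(fair(12, demands))); // [6, 4, 2]
    }
}
```

With 12 containers, FIFO lets the first large job starve the last one, while the fair split satisfies the two small jobs in full and gives the rest to the large job.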

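5.6 Speculative Execution of Tasks

1. Job completion time is determined by the slowest task

A job consists of a number of Map tasks and Reduce tasks. Because of aging hardware, software bugs, and similar causes, some tasks may run very slowly.

Question: 99% of the Map tasks in the system have finished, but a few keep crawling along and never complete. What can be done?

2. The speculative execution mechanism

Identify straggler tasks, for example a task that runs far slower than the average task speed. Start a backup task for the straggler and run both at the same time. The result of whichever finishes first is used.

3. Preconditions for running a speculative task

(1) Each Task can have only one backup task.

(2) The fraction of the current Job's Tasks that have completed must be at least 0.05 (5%).

(3) Speculative execution must be enabled. It is on by default in mapred-site.xml.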

<property>

<name>mapreduce.map.speculative</name>

<value>true</value>

<description>If true, then multiple instances of some map tasks may be executed in parallel.</description>

</property>

<property>

<name>mapreduce.reduce.speculative</name>

<value>true</value>

<description>If true, then multiple instances of some reduce tasks may be executed in parallel.</description>

</property>

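4. When speculative execution should not be enabled

(1) The load is severely skewed across tasks.

(2) Special tasks, such as tasks that write data to a database.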

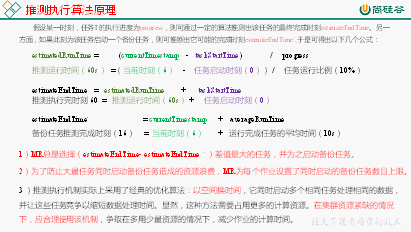

5. Algorithm principle, as shown in Figure 4-30

Figure 4-30 Speculative execution algorithm
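
The figure itself is not reproduced in this text, so the following sketch rests on an assumption: it uses the commonly described estimate in which a running task's finish time is extrapolated linearly from its progress and compared with the finish time of a backup that starts now and takes the average run time of completed tasks. The class and method names are hypothetical, and Hadoop's actual speculator may differ in detail.

```java
// Assumed model of the speculation estimate; not Hadoop's speculator code.
class SpeculationSketch {

    // Finish time of the running attempt, extrapolated from its progress (0 < progress <= 1).
    static double estimatedEndTime(double startTime, double now, double progress) {
        double estimatedRunTime = (now - startTime) / progress;
        return startTime + estimatedRunTime;
    }

    // Finish time of a backup started now, assuming it needs the average
    // run time of the tasks that have already completed.
    static double backupEndTime(double now, double averageRunTime) {
        return now + averageRunTime;
    }

    // Index of the task whose backup would save the most time,
    // or -1 when no backup is expected to finish earlier than the original.
    static int pickTaskToSpeculate(double[] startTimes, double[] progress,
                                   double now, double averageRunTime) {
        int best = -1;
        double bestGain = 0.0;
        for (int i = 0; i < startTimes.length; i++) {
            double gain = estimatedEndTime(startTimes[i], now, progress[i])
                    - backupEndTime(now, averageRunTime);
            if (gain > bestGain) {
                bestGain = gain;
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        double[] startTimes = {0.0, 0.0};
        double[] progress = {0.9, 0.2}; // task 1 is the straggler
        System.out.println(pickTaskToSpeculate(startTimes, progress, 100.0, 40.0));
    }
}
```

In the example, task 0 is expected to finish at about 111 while a backup would finish at 140, so it is left alone; task 1 is expected to finish at 500, so it is the one chosen for a backup.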

第6章 Hadoop企业优化

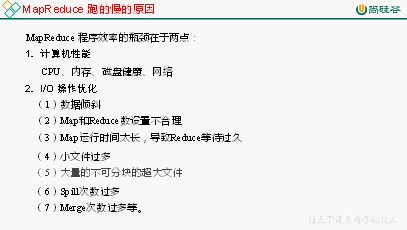

6.1 MapReduce 跑的慢的原因

6.2 MapReduce优化方法

MapReduce优化方法主要从六个方面考虑:数据输入、Map阶段、Reduce阶段、IO传输、数据倾斜问题和常用的调优参数。

6.2.1 数据输入

6.2.2 Map阶段

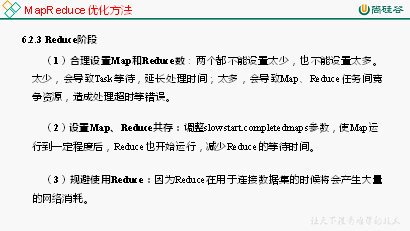

6.2.3 Reduce阶段

6.2.4 I/O传输

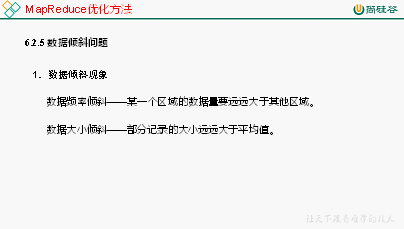

6.2.5 数据倾斜问题

6.2.6 常用的调优参数

1.资源相关参数

(1)以下参数是在用户自己的MR应用程序中配置就可以生效(mapred-default.xml)

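Table 4-12

| Parameter | Description |
| --- | --- |
| mapreduce.map.memory.mb | Upper limit on the resources one MapTask may use (unit: MB); default 1024. A MapTask that exceeds this limit is forcibly killed. |
| mapreduce.reduce.memory.mb | Upper limit on the resources one ReduceTask may use (unit: MB); default 1024. A ReduceTask that exceeds this limit is forcibly killed. |
| mapreduce.map.cpu.vcores | Maximum number of CPU cores one MapTask may use; default 1. |
| mapreduce.reduce.cpu.vcores | Maximum number of CPU cores one ReduceTask may use; default 1. |
| mapreduce.reduce.shuffle.parallelcopies | Number of parallel copies each Reduce uses to fetch data from the Maps; default 5. |
| mapreduce.reduce.shuffle.merge.percent | Fraction of the buffer that must fill before its data starts being written to disk; default 0.66. |
| mapreduce.reduce.shuffle.input.buffer.percent | Size of the buffer as a fraction of the memory available to the Reduce; default 0.7. |
| mapreduce.reduce.input.buffer.percent | Fraction of memory used to keep buffered data in memory; default 0.0. |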

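(2) The following parameters must be set in the server configuration files before YARN starts in order to take effect (yarn-default.xml)

Table 4-13

| Parameter | Description |
| --- | --- |
| yarn.scheduler.minimum-allocation-mb | Minimum memory allocated to an application Container (unit: MB); default 1024. |
| yarn.scheduler.maximum-allocation-mb | Maximum memory allocated to an application Container (unit: MB); default 8192. |
| yarn.scheduler.minimum-allocation-vcores | Minimum number of CPU cores a Container may request; default 1. |
| yarn.scheduler.maximum-allocation-vcores | Maximum number of CPU cores a Container may request; default 32. |
| yarn.nodemanager.resource.memory-mb | Maximum physical memory that can be allocated to Containers on a node (unit: MB); default 8192. |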
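
The minimum and maximum allocation settings bound what a single Container can receive. The sketch below illustrates one way these bounds interact, under the assumption that the scheduler rounds a request up to a multiple of the minimum allocation and rejects a request above the maximum; this matches how the Capacity Scheduler is commonly described, but it is not taken from the YARN source. `ContainerSizeSketch` and `normalize` are hypothetical names.

```java
// Assumed model of container memory normalization; not YARN code.
class ContainerSizeSketch {

    // Rounds the request up to a multiple of minMb (at least minMb).
    // Requests above maxMb are rejected, which is an assumption of this sketch.
    static int normalize(int requestedMb, int minMb, int maxMb) {
        if (requestedMb > maxMb) {
            throw new IllegalArgumentException(
                    "request of " + requestedMb + " MB exceeds maximum of " + maxMb + " MB");
        }
        int atLeastMin = Math.max(requestedMb, minMb);
        int roundedUp = ((atLeastMin + minMb - 1) / minMb) * minMb;
        return Math.min(roundedUp, maxMb);
    }

    public static void main(String[] args) {
        // With the defaults from Table 4-13: minimum 1024 MB, maximum 8192 MB.
        System.out.println(normalize(1500, 1024, 8192)); // 2048
        System.out.println(normalize(512, 1024, 8192));  // 1024
    }
}
```

Under this model, setting mapreduce.map.memory.mb to 1500 would occupy 2048 MB of a node's capacity, which is why task memory is usually chosen as a multiple of the minimum allocation.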

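(3) Key parameters for shuffle performance; these should also be configured before YARN starts (mapred-default.xml)

Table 4-14

| Parameter | Description |
| --- | --- |
| mapreduce.task.io.sort.mb | Size of the shuffle ring buffer; default 100 MB. |
| mapreduce.map.sort.spill.percent | Fill threshold at which the ring buffer spills to disk; default 80%. |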
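
The two shuffle parameters multiply: the buffer starts spilling once it holds the buffer size times the spill threshold. The sketch below works through that arithmetic and gives a rough spill count for a given amount of map output. The spill count is a simplification that ignores the per-record metadata also kept in the buffer, and `SpillThresholdSketch` and its methods are hypothetical names.

```java
// Back-of-the-envelope arithmetic for the map-side sort buffer; not Hadoop code.
class SpillThresholdSketch {

    // Bytes the ring buffer may hold before a spill is triggered.
    static long spillThresholdBytes(int sortMb, double spillPercent) {
        return Math.round(sortMb * 1024L * 1024L * spillPercent);
    }

    // Rough number of spill files for a given map output size,
    // ignoring the metadata that shares the buffer with the records.
    static long estimatedSpills(long mapOutputBytes, int sortMb, double spillPercent) {
        long threshold = spillThresholdBytes(sortMb, spillPercent);
        return (mapOutputBytes + threshold - 1) / threshold;
    }

    public static void main(String[] args) {
        // Defaults from Table 4-14: 100 MB buffer, 80% threshold.
        System.out.println(spillThresholdBytes(100, 0.8));                  // 83886080 (80 MB)
        System.out.println(estimatedSpills(500L * 1024 * 1024, 100, 0.8));  // 7
    }
}
```

Raising mapreduce.task.io.sort.mb reduces the number of spills and therefore the merge work at the end of the map, as long as the task has enough heap to hold the larger buffer.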

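2. Fault-tolerance parameters (MapReduce performance tuning)

Table 4-15

| Parameter | Description |
| --- | --- |
| mapreduce.map.maxattempts | Maximum number of attempts for each Map Task. Once the number of attempts exceeds this value, the Map Task is considered failed; default 4. |
| mapreduce.reduce.maxattempts | Maximum number of attempts for each Reduce Task. Once the number of attempts exceeds this value, the Reduce Task is considered failed; default 4. |
| mapreduce.task.timeout | Task timeout, a parameter that often needs adjusting. If a Task makes no progress for this long, that is, it neither reads new input nor writes any output, it is considered blocked: it may be stuck, possibly forever. To keep a user program from blocking indefinitely without exiting, this timeout is enforced (unit: milliseconds); default 600000. If your program takes a long time to process each input record (for example, it queries a database or pulls data over the network), increase this value. A value that is too small typically produces the error "AttemptID:attempt_14267829456721_123456_m_000224_0 Timed out after 300 secs Container killed by the ApplicationMaster.". |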
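
The timeout rule in the last row amounts to a watchdog over the time of the last reported progress. The sketch below models only that rule, with timestamps passed in explicitly so the behavior is easy to follow; it is not the ApplicationMaster's implementation, and `TaskTimeoutSketch` is a hypothetical name.

```java
// Minimal model of a progress-based task timeout; not Hadoop code.
class TaskTimeoutSketch {

    private final long timeoutMs;
    private long lastProgressMs;

    TaskTimeoutSketch(long timeoutMs, long startMs) {
        this.timeoutMs = timeoutMs;
        this.lastProgressMs = startMs;
    }

    // Called whenever the task reads input, writes output, or reports progress.
    void reportProgress(long nowMs) {
        lastProgressMs = nowMs;
    }

    // True when more than timeoutMs has passed since the last reported progress.
    boolean isTimedOut(long nowMs) {
        return nowMs - lastProgressMs > timeoutMs;
    }

    public static void main(String[] args) {
        TaskTimeoutSketch watchdog = new TaskTimeoutSketch(600000L, 0L);
        watchdog.reportProgress(500000L);
        System.out.println(watchdog.isTimedOut(1000000L)); // false: 500 s since last progress
        System.out.println(watchdog.isTimedOut(1100001L)); // true: just over 600 s
    }
}
```

In a real job, a map or reduce method that legitimately spends a long time on one record can call `context.progress()` to signal that it is still alive, as an alternative to raising the timeout.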

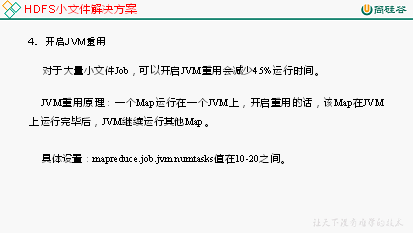

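6.3 HDFS Small-File Optimization

6.3.1 Drawbacks of small files in HDFS

Every file in HDFS needs an index entry on the NameNode, and each entry takes about 150 bytes. A large number of small files therefore produces a large number of index entries. This consumes a great deal of NameNode memory, and the oversized index also slows down lookups.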
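
The cost can be made concrete with simple arithmetic based on the figure of roughly 150 bytes per entry given above. The sketch below is only that multiplication; the per-entry size is an approximation from the text, and `NameNodeMemorySketch` is a hypothetical name.

```java
// Rough NameNode memory estimate using the ~150 bytes per entry stated in the text.
class NameNodeMemorySketch {

    static final long BYTES_PER_ENTRY = 150L; // approximate figure from the text

    // Approximate NameNode memory needed to index the given number of files.
    static long metadataBytes(long fileCount) {
        return fileCount * BYTES_PER_ENTRY;
    }

    public static void main(String[] args) {
        long files = 10_000_000L;
        long bytes = metadataBytes(files);
        System.out.println(bytes + " bytes"); // 1500000000 bytes, about 1.4 GiB
    }
}
```

Ten million small files thus cost on the order of 1.4 GiB of NameNode memory regardless of how little data each file holds, which is the motivation for merging them.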

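6.3.2 Solutions for small files in HDFS

Small files can be handled in the following ways:

(1) At data collection time, combine small files or small batches of data into large files before uploading them to HDFS.

(2) Before business processing, run a MapReduce program on HDFS to merge the small files.

(3) During MapReduce processing, use CombineTextInputFormat to improve efficiency.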
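
Option (1) can be as simple as concatenating files on the collection machine before the upload. The sketch below shows one way to do that with the JDK's `java.nio.file` API; it works on local files only and does not touch HDFS, and `SmallFileMergeSketch` and `merge` are hypothetical names.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;

// Local pre-upload merge of small files; an illustration, not an HDFS client.
class SmallFileMergeSketch {

    // Concatenates the given files, in order, into target and returns the bytes written.
    static long merge(List<Path> smallFiles, Path target) {
        long total = 0;
        try (OutputStream out = Files.newOutputStream(target)) {
            for (Path file : smallFiles) {
                total += Files.copy(file, out);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }
}
```

Plain concatenation suits line-oriented text where file boundaries do not matter. When the origin of each record must be preserved, a container format that stores the file name alongside the content is a better fit.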

Chapter 7 MapReduce Extended Examples

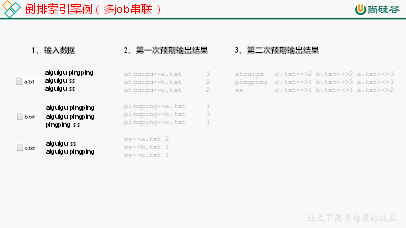

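7.1 Inverted Index Example (Chaining Multiple Jobs)

1. Requirement

A large collection of text (documents, web pages) needs a search index, as shown in Figure 4-31.

(1) Input data

(2) Expected output data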

atguigu c.txt-->2 b.txt-->2 a.txt-->3

pingping c.txt-->1 b.txt-->3 a.txt-->1

ss c.txt-->1 b.txt-->1 a.txt-->2

2. Requirements analysis
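
The index is built in two passes: the first counts occurrences per word and file, and the second regroups those counts by word. The sketch below runs both passes in memory with plain Java collections to show the data flow; it is not a MapReduce job, the input documents in `main` are made up for illustration, and `InvertedIndexSketch` and its methods are hypothetical names.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.TreeMap;

// In-memory illustration of the two-pass inverted index; not a MapReduce job.
class InvertedIndexSketch {

    // Pass 1: count occurrences under the composite key "word--file".
    static Map<String, Integer> countPerWordAndFile(Map<String, String> docs) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, String> doc : docs.entrySet()) {
            for (String word : doc.getValue().split("\\s+")) {
                if (word.isEmpty()) {
                    continue;
                }
                counts.merge(word + "--" + doc.getKey(), 1, Integer::sum);
            }
        }
        return counts;
    }

    // Pass 2: split the composite key on "--" and join "file-->count" per word.
    static Map<String, String> groupByWord(Map<String, Integer> counts) {
        Map<String, StringBuilder> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> entry : counts.entrySet()) {
            String[] fields = entry.getKey().split("--", 2);
            grouped.computeIfAbsent(fields[0], w -> new StringBuilder())
                    .append(fields[1]).append("-->").append(entry.getValue()).append('\t');
        }
        Map<String, String> index = new TreeMap<>();
        for (Map.Entry<String, StringBuilder> entry : grouped.entrySet()) {
            index.put(entry.getKey(), entry.getValue().toString().trim());
        }
        return index;
    }

    public static void main(String[] args) {
        Map<String, String> docs = new LinkedHashMap<>(); // made-up sample input
        docs.put("a.txt", "atguigu ss atguigu");
        docs.put("b.txt", "ss atguigu");
        Map<String, String> index = groupByWord(countPerWordAndFile(docs));
        index.forEach((word, postings) -> System.out.println(word + "\t" + postings));
    }
}
```

The composite key is what allows the first pass to be an ordinary word count; the second pass only has to undo the concatenation. In the sketch the files appear in sorted order, whereas a reducer receives its values in no guaranteed order.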

3. First pass

(1) First pass: write the OneIndexMapper class

package com.atguigu.mapreduce.index;

import java.io.IOException;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;

public class OneIndexMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    String name;
    Text k = new Text();
    IntWritable v = new IntWritable();

    @Override
    protected void setup(Context context) throws IOException, InterruptedException {

        // Get the name of the file this split belongs to
        FileSplit split = (FileSplit) context.getInputSplit();

        name = split.getPath().getName();
    }

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

        // 1 Read one line
        String line = value.toString();

        // 2 Split the line on spaces
        String[] fields = line.split(" ");

        for (String word : fields) {

            // 3 Build the composite key "word--file"
            k.set(word + "--" + name);
            v.set(1);

            // 4 Emit (word--file, 1)
            context.write(k, v);
        }
    }
}

(2) First pass: write the OneIndexReducer class

package com.atguigu.mapreduce.index;

import java.io.IOException;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class OneIndexReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    IntWritable v = new IntWritable();

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

        int sum = 0;

        // 1 Sum the counts for this key
        for (IntWritable value : values) {
            sum += value.get();
        }

        v.set(sum);

        // 2 Emit (word--file, total)
        context.write(key, v);
    }
}

(3) First pass: write the OneIndexDriver class

package com.atguigu.mapreduce.index;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class OneIndexDriver {

    public static void main(String[] args) throws Exception {

        // Set the input and output paths to match the paths on your own machine
        args = new String[] { "e:/input/inputoneindex", "e:/output5" };

        Configuration conf = new Configuration();

        Job job = Job.getInstance(conf);
        job.setJarByClass(OneIndexDriver.class);

        job.setMapperClass(OneIndexMapper.class);
        job.setReducerClass(OneIndexReducer.class);

        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        job.waitForCompletion(true);
    }
}

(4) Inspect the output of the first pass

atguigu--a.txt 3
atguigu--b.txt 2
atguigu--c.txt 2
pingping--a.txt 1
pingping--b.txt 3
pingping--c.txt 1
ss--a.txt 2
ss--b.txt 1
ss--c.txt 1

4. Second pass

(1) Second pass: write the TwoIndexMapper class

package com.atguigu.mapreduce.index;

import java.io.IOException;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class TwoIndexMapper extends Mapper<LongWritable, Text, Text, Text> {

    Text k = new Text();
    Text v = new Text();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

        // 1 Read one line of the first pass's output
        String line = value.toString();

        // 2 Split on "--": the word comes first, then the file name and count
        String[] fields = line.split("--");

        k.set(fields[0]);
        v.set(fields[1]);

        // 3 Emit (word, file and count)
        context.write(k, v);
    }
}

(2) Second pass: write the TwoIndexReducer class

package com.atguigu.mapreduce.index;

import java.io.IOException;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class TwoIndexReducer extends Reducer<Text, Text, Text, Text> {

    Text v = new Text();

    @Override
    protected void reduce(Text key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
        // Input for one key:
        // atguigu a.txt 3
        // atguigu b.txt 2
        // atguigu c.txt 2

        // Output record:
        // atguigu c.txt-->2 b.txt-->2 a.txt-->3

        StringBuilder sb = new StringBuilder();