Hadoop WordCount Program

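I. Import all of Hadoop's dependency JARs into the build path. There is no need to pick them one by one; just import every one of them. Because they all go into a single project, JARs overwriting each other is not a problem.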

II. Write the WordCount program

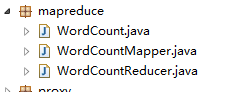

1.工程目录结构如下:

2. Write the mapper:

    package mapreduce;

    import java.io.IOException;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    /**
     * LongWritable is Hadoop's counterpart to long. Hadoop uses its own types because
     * the data is sent over the network for remote execution and so must be serialized;
     * these wrapper types serialize more efficiently.
     * Text is Hadoop's counterpart to String.
     *
     * @author Q
     */
    public class WordCountMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // key is the byte offset of the line within the file; value is the content of one line
            String line = value.toString();
            String[] words = line.split(" ");
            // Send a <word, 1> key-value pair to the reducer for every word
            for (String word : words) {
                if (word.isEmpty()) {
                    continue; // skip empty tokens caused by blank lines or repeated spaces
                }
                context.write(new Text(word), new LongWritable(1));
            }
        }
    }
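
To see what the mapper emits without starting a cluster, the same splitting logic can be tried in plain Java. This is only a rough sketch: `MapSketch` is a hypothetical class that is not part of the project, it uses `String` and `Long` in place of `Text` and `LongWritable`, and it assumes words are separated by single spaces.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical stand-alone class (not part of the original project): it imitates
// the pairs WordCountMapper would emit for one input line, without needing Hadoop.
class MapSketch {

    // Returns the <word, 1> pairs for a single line of space-separated words.
    static List<Map.Entry<String, Long>> map(String line) {
        List<Map.Entry<String, Long>> pairs = new ArrayList<>();
        for (String word : line.split(" ")) {
            if (word.isEmpty()) {
                continue; // ignore empty tokens from blank lines or repeated spaces
            }
            pairs.add(new SimpleEntry<>(word, 1L));
        }
        return pairs;
    }

    public static void main(String[] args) {
        // Every occurrence is emitted separately, so "hello" shows up twice with value 1.
        System.out.println(map("hello world hello")); // prints [hello=1, world=1, hello=1]
    }
}
```

The mapper does no counting at all. It only tags each word with a 1, and the adding up is left to the reducer.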

3. Write the reducer:

    package mapreduce;

    import java.io.IOException;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class WordCountReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context)
                throws IOException, InterruptedException {
            long sum = 0;
            // The reducer receives a <key, values> pair: key is a word and values is an
            // iterable of its counts, which can be pictured as <"hello", List{1,1,1,1,1}>
            for (LongWritable value : values) {
                sum += value.get();
            }
            // Emit <word, total count>
            context.write(key, new LongWritable(sum));
        }
    }
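
The grouping that happens between the map and reduce phases can be imitated in plain Java as well. The following is a simplified sketch under stated assumptions: `ReduceSketch` and its methods are hypothetical names, a `TreeMap` stands in for Hadoop's sorted shuffle, and `Long` replaces `LongWritable`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-alone class: imitates the shuffle (group by key) followed by
// the reduce step (sum the values of each key), without needing Hadoop.
class ReduceSketch {

    // Mirrors the body of reduce(): add up all counts received for one word.
    static long reduce(Iterable<Long> values) {
        long sum = 0;
        for (long value : values) {
            sum += value;
        }
        return sum;
    }

    // Groups the emitted words by key, sorted like the shuffle output, then reduces each group.
    static Map<String, Long> countWords(List<String> words) {
        Map<String, List<Long>> grouped = new TreeMap<>();
        for (String word : words) {
            grouped.computeIfAbsent(word, k -> new ArrayList<>()).add(1L);
        }
        Map<String, Long> counts = new TreeMap<>();
        for (Map.Entry<String, List<Long>> group : grouped.entrySet()) {
            counts.put(group.getKey(), reduce(group.getValue()));
        }
        return counts;
    }

    public static void main(String[] args) {
        System.out.println(countWords(Arrays.asList("hello", "world", "hello"))); // prints {hello=2, world=1}
    }
}
```

In the real job the framework does the grouping, so the reducer only ever sees one word together with all of its 1s.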

4. Write the main function:

    package mapreduce;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class WordCount {

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // Get a Job object, which is used to submit the MapReduce job
            Job job = Job.getInstance(conf);
            // Locate the JAR through a class it contains
            job.setJarByClass(WordCount.class);
            // Set the mapper and reducer classes
            job.setMapperClass(WordCountMapper.class);
            job.setReducerClass(WordCountReducer.class);
            // Set the key/value types of the mapper output
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(LongWritable.class);
            // Set the key/value types of the reducer output; the reducer writes
            // <Text, LongWritable>, so the value class is LongWritable, not Text
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(LongWritable.class);
            // Input and output paths
            FileInputFormat.setInputPaths(job, new Path("hdfs://hadoop1:9000/wordcount/data/"));
            FileOutputFormat.setOutputPath(job, new Path("hdfs://hadoop1:9000/wordcount/output1"));
            // Submit the job, wait for it to finish, and report failure through the exit code
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
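
Both HDFS paths are hard-coded in the driver, and Hadoop refuses to start a job whose output directory already exists, so a second run needs either a new path in the code or a cleanup on HDFS. One possible way around editing the code is to read the paths from `args`, since anything written after the main class name on the `hadoop jar` command line is passed to `main`. The helper below is only a sketch of that idea: `JobPaths` and `resolve` are hypothetical names that do not appear in the original project, and the defaults are simply the two paths used in the driver.

```java
// Hypothetical helper (not part of the original project): picks the input and
// output paths for the job from the command line, falling back to the
// hard-coded values from the driver when no paths are given.
class JobPaths {

    static final String DEFAULT_INPUT = "hdfs://hadoop1:9000/wordcount/data/";
    static final String DEFAULT_OUTPUT = "hdfs://hadoop1:9000/wordcount/output1";

    // Returns {input, output}: the first two arguments if present, otherwise the defaults.
    static String[] resolve(String[] args) {
        if (args.length >= 2) {
            return new String[] {args[0], args[1]};
        }
        return new String[] {DEFAULT_INPUT, DEFAULT_OUTPUT};
    }

    public static void main(String[] args) {
        String[] paths = resolve(args);
        System.out.println("input:  " + paths[0]);
        System.out.println("output: " + paths[1]);
    }
}
```

In the driver, the two results would then be wrapped as `new Path(paths[0])` and `new Path(paths[1])` in place of the literal strings.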

5. Package the project as a JAR file and upload it to the Linux machine

Packaging: right-click the project, then choose Export → Java → JAR file.

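On Linux, the command to run the JAR is:

    hadoop jar wordcount.jar mapreduce.WordCount

The command takes two arguments: the first is the name of the JAR you packaged, and the second is the fully qualified name of the class that contains your main function.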

小鹏

