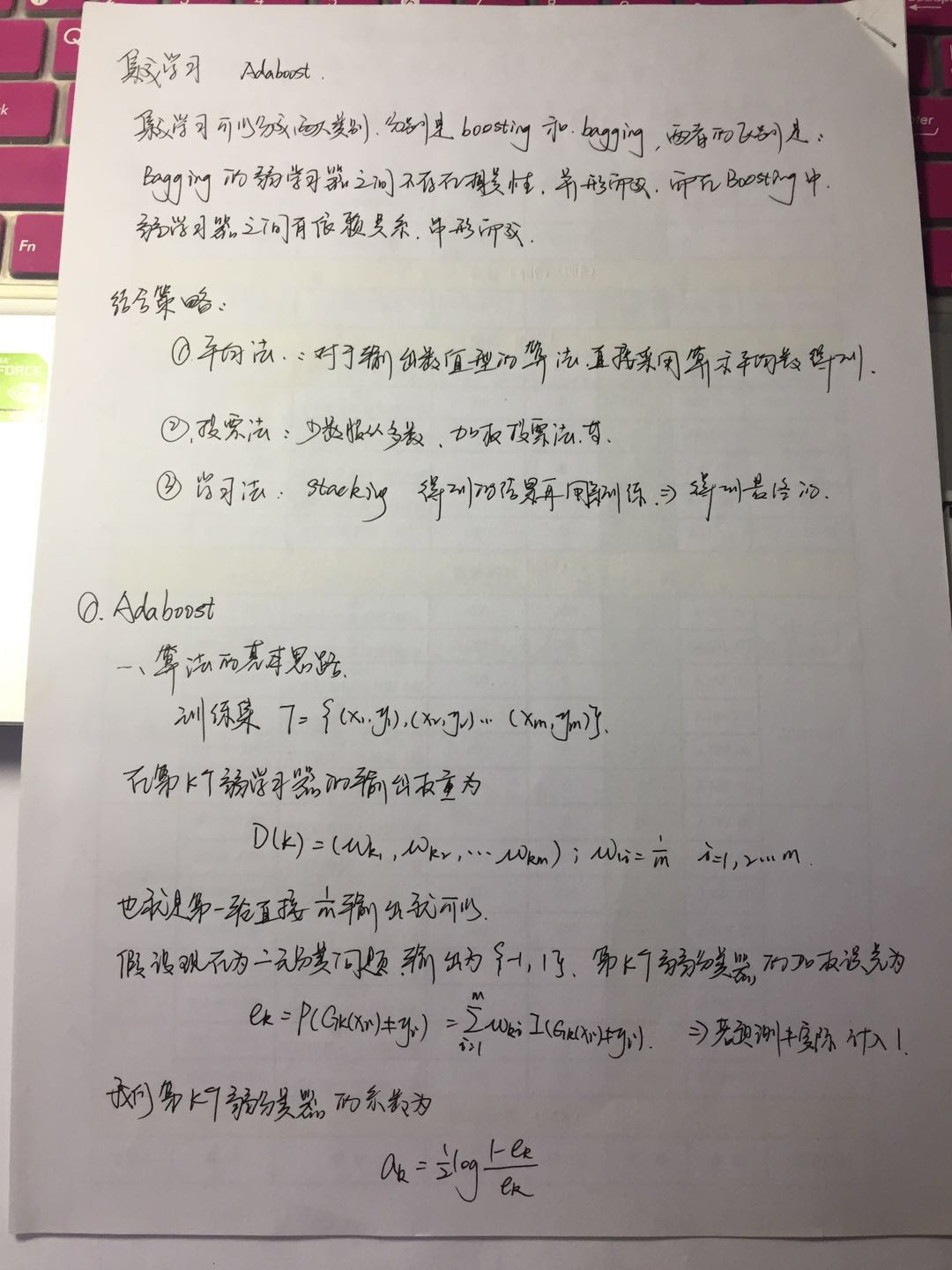

Machine Learning: Ensemble Learning (AdaBoost)

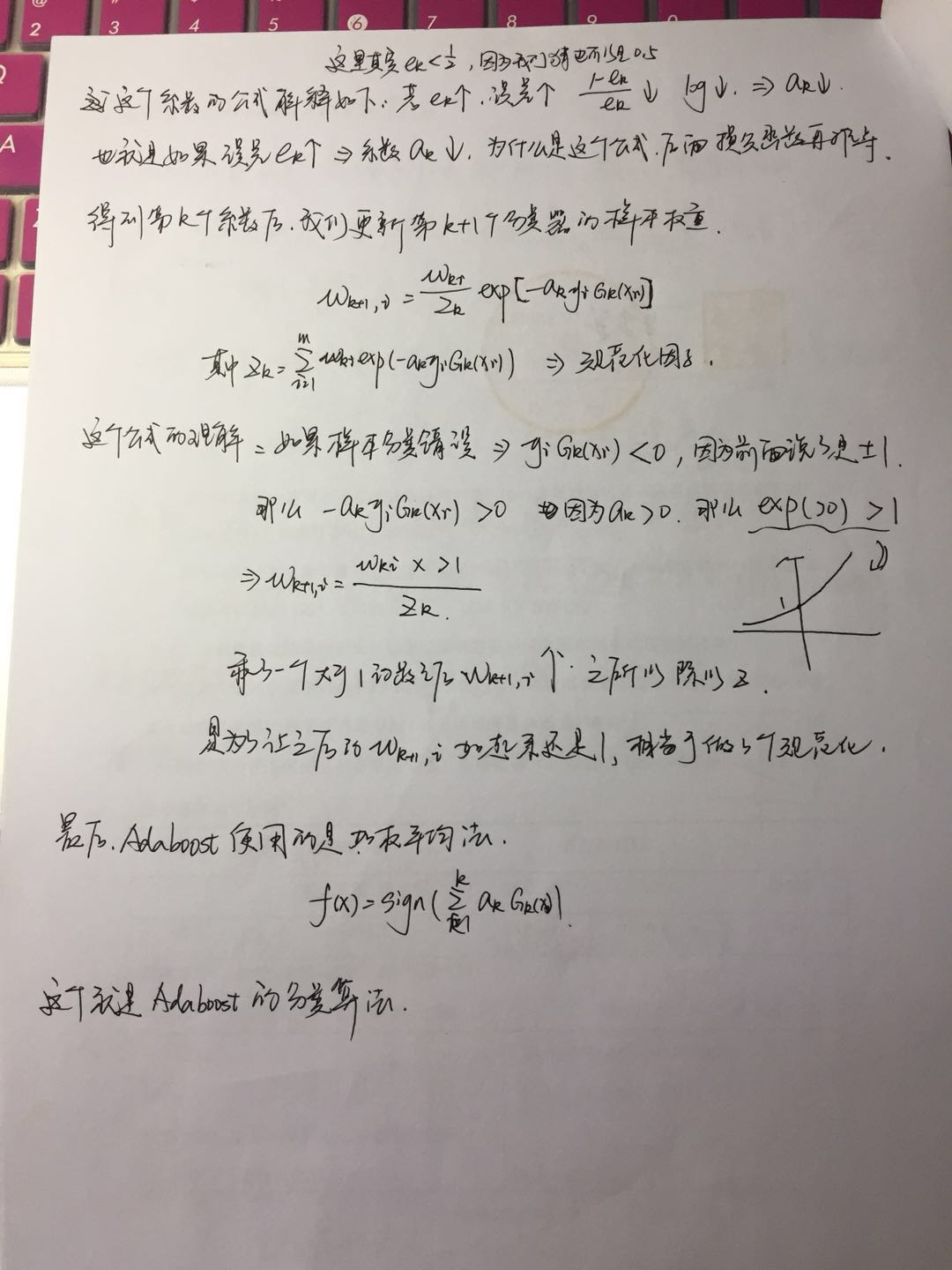

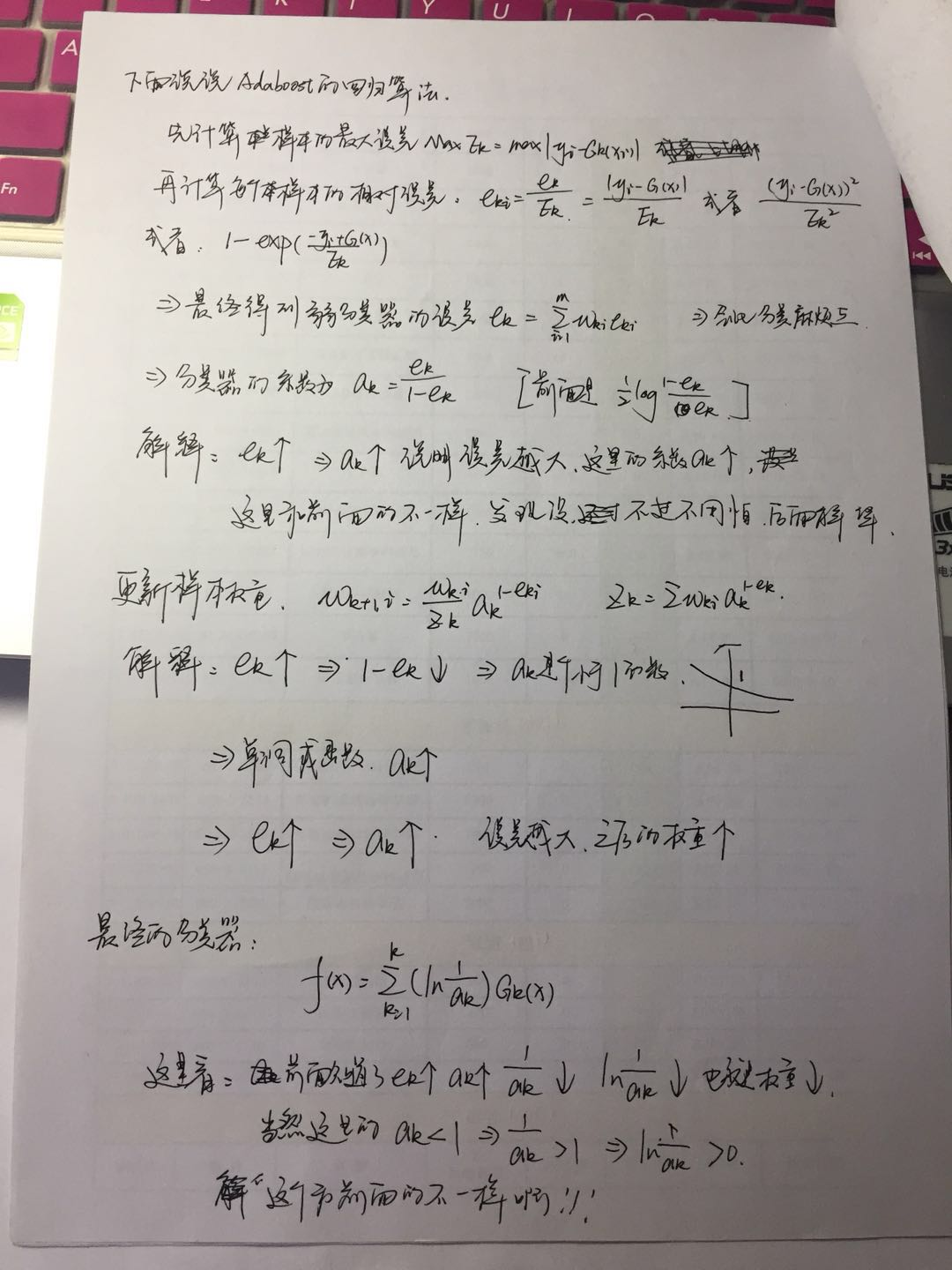

I. Principles:

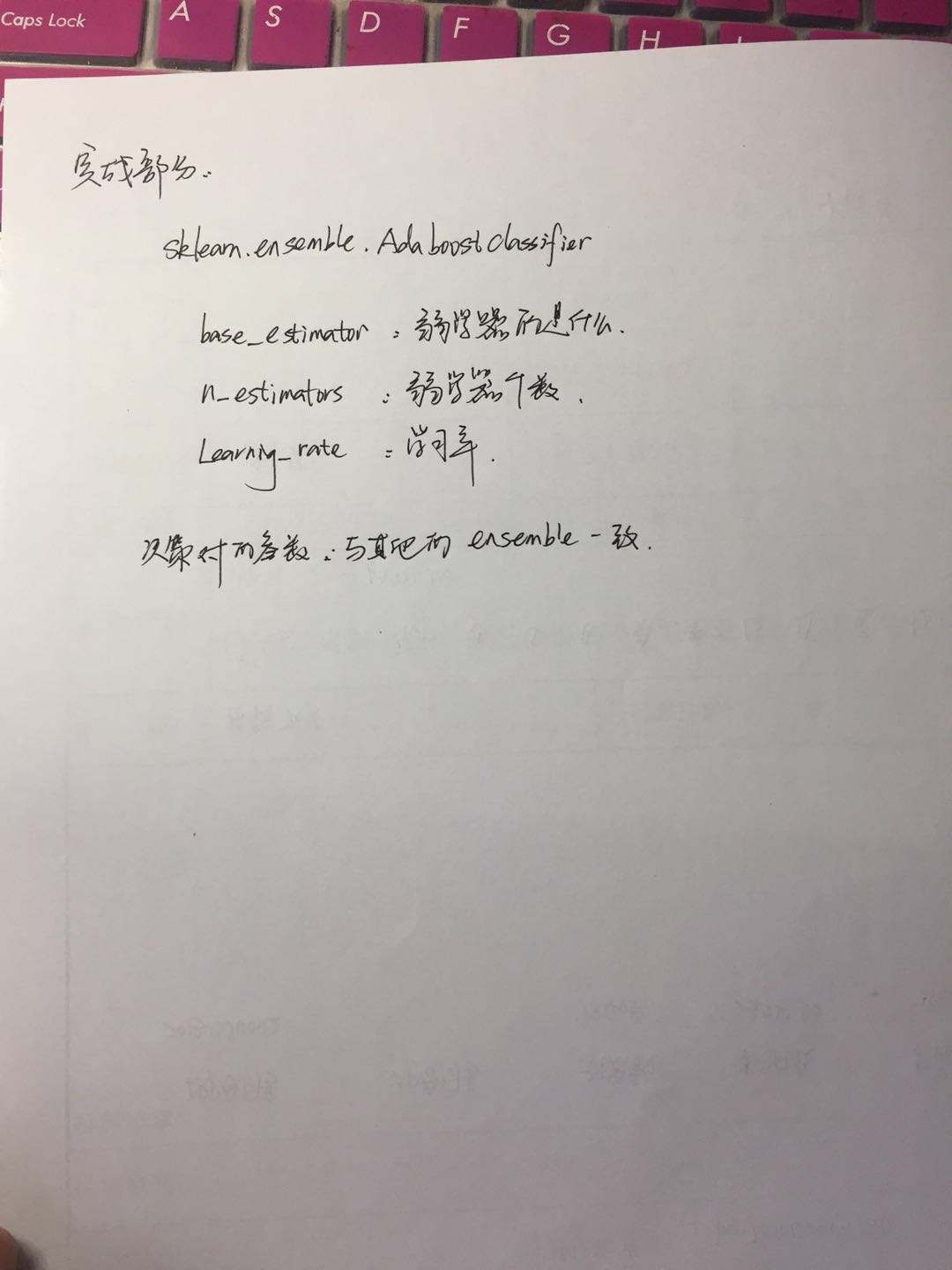
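The figure above carries the theory. As a textual companion, here is a minimal sketch of the standard discrete AdaBoost loop for binary labels in {-1, +1}, using depth-1 decision trees (stumps) as weak learners. Each round does four things:

- fit a stump on the current sample weights w;
- compute its weighted error ε;
- give it the vote weight α = ½ ln((1 − ε)/ε);
- multiply every weight by exp(−α·y·h(x)) and renormalise, so misclassified samples count more in the next round.

The notation may differ from the figure. The helper names `adaboost_fit` / `adaboost_predict` are hypothetical, and the breast-cancer dataset is only an example. Note that sklearn's `AdaBoostClassifier` uses a multi-class generalisation (SAMME) rather than this exact binary loop.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def adaboost_fit(X, y, n_rounds=50):
    """Hypothetical helper: discrete AdaBoost for labels y in {-1, +1}."""
    n = len(y)
    w = np.full(n, 1.0 / n)                    # uniform initial sample weights
    learners, alphas = [], []
    for _ in range(n_rounds):
        stump = DecisionTreeClassifier(max_depth=1, random_state=0)
        stump.fit(X, y, sample_weight=w)       # weak learner sees the current weights
        pred = stump.predict(X)
        # weighted error (weights sum to 1), kept away from 0 and 1 to avoid log(0)
        err = np.clip(w[pred != y].sum(), 1e-10, 1 - 1e-10)
        if err >= 0.5:                         # no better than chance: stop early
            break
        alpha = 0.5 * np.log((1 - err) / err)  # learner weight, > 0 when err < 0.5
        w = w * np.exp(-alpha * y * pred)      # raise weights of misclassified samples
        w /= w.sum()                           # renormalise to a distribution
        learners.append(stump)
        alphas.append(alpha)
    return learners, alphas


def adaboost_predict(X, learners, alphas):
    """Hypothetical helper: sign of the alpha-weighted vote of all weak learners."""
    score = sum(a * h.predict(X) for a, h in zip(alphas, learners))
    return np.sign(score)


# Example data (an assumption, not from the original post): a binary task
data = load_breast_cancer()
y = np.where(data.target == 1, 1, -1)          # relabel {0, 1} -> {-1, +1}
X_tr, X_te, y_tr, y_te = train_test_split(data.data, y, random_state=0)

learners, alphas = adaboost_fit(X_tr, y_tr, n_rounds=50)
acc = np.mean(adaboost_predict(X_te, learners, alphas) == y_te)
print(f"{len(learners)} rounds, test accuracy {acc:.3f}")
```

Because α grows as ε shrinks, more accurate stumps get a larger say in the final vote. The reweighting step is what forces later stumps to focus on the samples earlier ones got wrong.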

II. Implementation with sklearn:

```python
from sklearn.ensemble import AdaBoostClassifier
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split, GridSearchCV
from sklearn.metrics import accuracy_score
from sklearn.tree import DecisionTreeClassifier

digits = load_digits()
x_data = digits.data
y_data = digits.target
x_train, x_test, y_train, y_test = train_test_split(x_data, y_data, random_state=1)

# Round 1 of tuning: n_estimators is fixed at 150 and learning_rate is grid-searched.
# AdaBoostClassifier has no max_depth parameter of its own, so the tree depth
# is set by passing in a DecisionTreeClassifier as the base estimator.
adaboost = AdaBoostClassifier(DecisionTreeClassifier(max_depth=6), n_estimators=150)
model_ada = GridSearchCV(adaboost, param_grid={'learning_rate': [0.7, 0.8]})
model_ada.fit(x_train, y_train)
print(model_ada.best_params_)

y_hat = model_ada.predict(x_test)
print(accuracy_score(y_test, y_hat))  # accuracy_score expects (y_true, y_pred)
```
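The code above notes that `AdaBoostClassifier` has no `max_depth` of its own. The base tree's depth can still be grid-searched through scikit-learn's nested `<component>__<parameter>` naming. Separately, `staged_predict` returns the ensemble's predictions after each boosting round, which is one way to choose `n_estimators` without refitting for every value.

This is a sketch under stated assumptions:

- The grid values, the 50-round cap and the extra validation split (`x_tr`, `x_val`) are illustrative choices.
- The nested key is `estimator__max_depth` in scikit-learn 1.2+ and `base_estimator__max_depth` in older releases, so the code checks which one exists.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.ensemble import AdaBoostClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

digits = load_digits()
x_train, x_test, y_train, y_test = train_test_split(
    digits.data, digits.target, random_state=1)
# Illustrative extra split: a validation set used only to choose n_estimators
x_tr, x_val, y_tr, y_val = train_test_split(x_train, y_train, random_state=1)

ada = AdaBoostClassifier(DecisionTreeClassifier(random_state=0),
                         n_estimators=50, random_state=0)

# Nested parameter name: "estimator__max_depth" in scikit-learn >= 1.2,
# "base_estimator__max_depth" in older releases; use whichever is present.
depth_key = ("estimator__max_depth" if "estimator__max_depth" in ada.get_params()
             else "base_estimator__max_depth")

grid = GridSearchCV(ada, param_grid={depth_key: [2, 4, 6],
                                     "learning_rate": [0.5, 1.0]})
grid.fit(x_tr, y_tr)
print(grid.best_params_)

# staged_predict yields predictions after 1, 2, ... boosting rounds,
# so one fitted model gives the whole accuracy-vs-rounds curve.
best = grid.best_estimator_
staged_acc = [accuracy_score(y_val, p) for p in best.staged_predict(x_val)]
best_n = int(np.argmax(staged_acc)) + 1
print("best n_estimators on validation:", best_n)

# Refit on the full training split with the chosen settings, then test once
final = best.set_params(n_estimators=best_n).fit(x_train, y_train)
test_acc = accuracy_score(y_test, final.predict(x_test))
print("test accuracy:", test_acc)
```

Choosing `n_estimators` on a validation split rather than on `x_test` keeps the final test accuracy an honest estimate.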