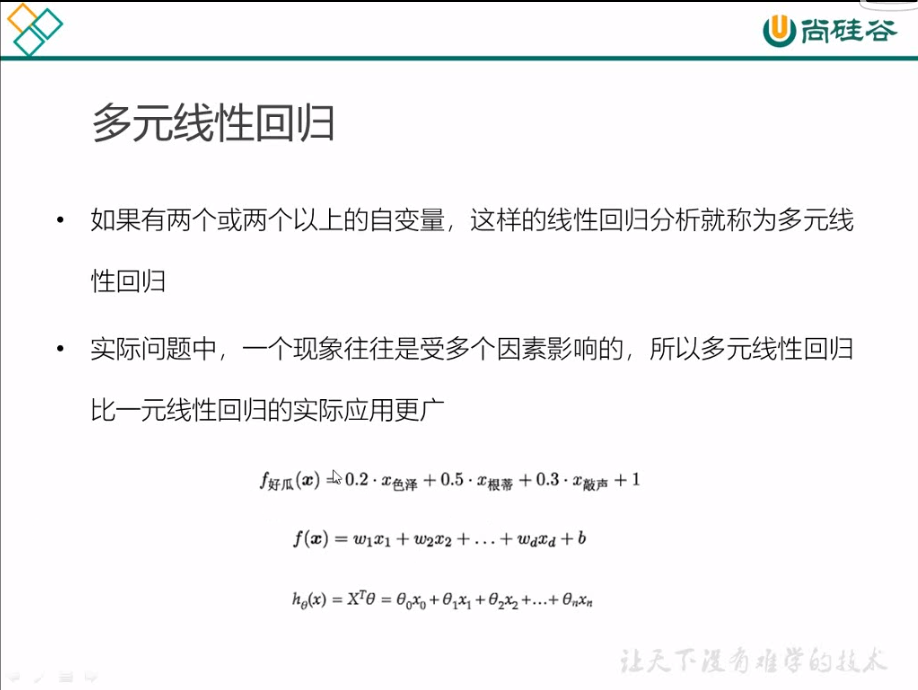

Machine Learning Models and Algorithms ----- Multiple Linear Regression

Python implementation:

    import numpy as np
    import matplotlib.pyplot as plt

    # Load the data: each row of points is one (x, y) sample
    points = np.genfromtxt('data.csv', delimiter=',')
    x = points[:, 0]
    y = points[:, 1]
    plt.scatter(x, y)  # scatter plot
    plt.show()

# Define the loss function

    def compute_cost(w, b, points):
        total_cost = 0
        M = len(points)
        # Compute the squared error point by point, then take the mean
        for i in range(M):
            x = points[i, 0]
            y = points[i, 1]
            total_cost += (y - w * x - b) ** 2
        return total_cost / M
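The loop above computes the mean squared error of the line y = w * x + b. As a minimal sketch, the same quantity can be computed with NumPy array operations instead of a Python loop. The name `compute_cost_vectorized` and the three-point `demo_points` array are made up for this illustration and are not part of the original post.

```python
import numpy as np

def compute_cost_vectorized(w, b, points):
    """Mean squared error of y = w * x + b over all rows of points."""
    x = points[:, 0]
    y = points[:, 1]
    residuals = y - (w * x + b)   # one residual per sample
    return np.mean(residuals ** 2)

# Hypothetical toy data lying exactly on y = 2x + 1
demo_points = np.array([[0.0, 1.0], [1.0, 3.0], [2.0, 5.0]])

print(compute_cost_vectorized(2.0, 1.0, demo_points))  # cost of the exact line
print(compute_cost_vectorized(0.0, 0.0, demo_points))  # cost of the all-zero start
```

On real data this should agree with `compute_cost` up to floating-point rounding, while avoiding the per-point Python loop.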

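# Define the model's hyperparameters

    alpha = 0.0001   # learning rate
    initial_w = 0    # starting slope
    initial_b = 0    # starting intercept
    num_iter = 10    # number of gradient descent iterations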

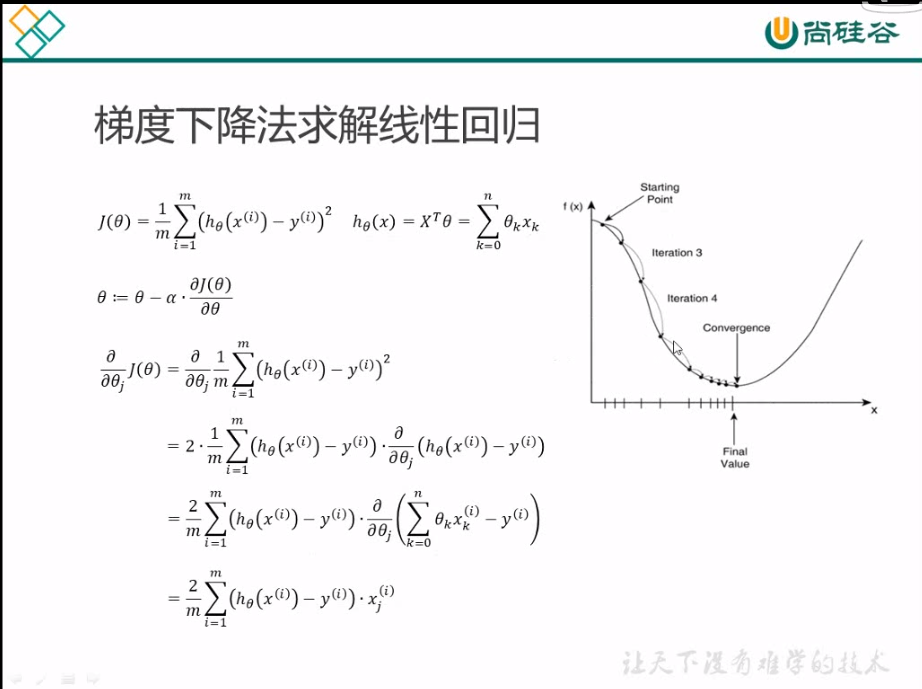

# Define the core gradient descent function

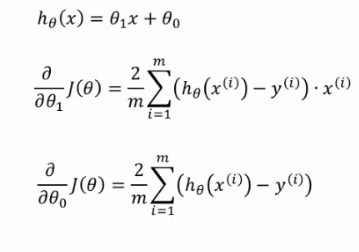
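For reference, the update rule that the code uses follows from differentiating the mean squared error loss. The derivation below is a sketch written from the code itself (same symbols w, b, alpha, M); it is not transcribed from the images.

$$
J(w, b) = \frac{1}{M} \sum_{i=1}^{M} \left( y_i - w x_i - b \right)^2
$$

$$
\frac{\partial J}{\partial w} = \frac{2}{M} \sum_{i=1}^{M} \left( w x_i + b - y_i \right) x_i ,
\qquad
\frac{\partial J}{\partial b} = \frac{2}{M} \sum_{i=1}^{M} \left( w x_i + b - y_i \right)
$$

$$
w \leftarrow w - \alpha \frac{\partial J}{\partial w} ,
\qquad
b \leftarrow b - \alpha \frac{\partial J}{\partial b}
$$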

    def grad_desc(points, initial_w, initial_b, alpha, num_iter):
        w = initial_w
        b = initial_b
        # Keep every loss value in a list so the descent can be plotted
        cost_list = []
        for i in range(num_iter):
            # One iteration: record the current loss, then take a descent step
            cost_list.append(compute_cost(w, b, points))
            w, b = step_grad_desc(w, b, alpha, points)
        return [w, b, cost_list]

    def step_grad_desc(current_w, current_b, alpha, points):
        sum_grad_w = 0
        sum_grad_b = 0
        M = len(points)
        # Plug every point into the gradient formula and accumulate the sums
        for i in range(M):
            x = points[i, 0]
            y = points[i, 1]
            sum_grad_w += (current_w * x + current_b - y) * x
            sum_grad_b += current_w * x + current_b - y

        # Compute the current gradient from the formula
        grad_w = 2 / M * sum_grad_w
        grad_b = 2 / M * sum_grad_b

        # Gradient descent: update the current w and b
        updated_w = current_w - alpha * grad_w
        updated_b = current_b - alpha * grad_b
        return updated_w, updated_b
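A single descent step can also be written without the inner loop. The following is only a sketch under stated assumptions: `step_grad_desc_vectorized`, `demo_points`, `demo_w`, `demo_b`, and the learning rate of 0.1 are illustrative choices for a tiny noise-free dataset, not values from the original post.

```python
import numpy as np

def step_grad_desc_vectorized(current_w, current_b, alpha, points):
    """One gradient descent step on the mean squared error of y = w * x + b."""
    x = points[:, 0]
    y = points[:, 1]
    error = current_w * x + current_b - y   # prediction minus target
    grad_w = 2 * np.mean(error * x)         # dJ/dw
    grad_b = 2 * np.mean(error)             # dJ/db
    return current_w - alpha * grad_w, current_b - alpha * grad_b

# Hypothetical toy data lying exactly on y = 2x + 1
demo_points = np.array([[0.0, 1.0], [1.0, 3.0], [2.0, 5.0]])

demo_w, demo_b = 0.0, 0.0
for _ in range(5000):
    demo_w, demo_b = step_grad_desc_vectorized(demo_w, demo_b, 0.1, demo_points)

print("w:", demo_w)
print("b:", demo_b)
```

On this toy data the parameters approach w = 2 and b = 1. A learning rate that works here is not necessarily suitable for other data: larger x values generally call for a smaller alpha to keep the updates from diverging.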

# Run gradient descent to compute the optimal w and b

    w, b, cost_list = grad_desc(points, initial_w, initial_b, alpha, num_iter)
    print("w is: ", w)
    print("b is: ", b)
    cost = compute_cost(w, b, points)  # final loss
    print("cost is: ", cost)
    plt.plot(cost_list)  # loss value at each iteration
    plt.show()

# Plot the fitted line

    plt.scatter(x, y)
    pred_y = w * x + b
    plt.plot(x, pred_y, c='r')
    plt.show()
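Gradient descent run for only a few iterations may stop short of the least-squares optimum, so it can help to compare the result against a closed-form fit. The sketch below uses `np.polyfit` for that comparison. Because `data.csv` is not included here, it runs on synthetic data, and the names `demo_x`, `demo_y`, `ref_w`, and `ref_b` are made up for the example.

```python
import numpy as np

# Synthetic data (an assumption for illustration): y = 1.5x + 4 plus Gaussian noise
rng = np.random.default_rng(0)
demo_x = rng.uniform(0, 10, size=100)
demo_y = 1.5 * demo_x + 4.0 + rng.normal(0, 0.5, size=100)

# With deg=1, np.polyfit returns [slope, intercept] of the least-squares line
ref_w, ref_b = np.polyfit(demo_x, demo_y, 1)

print("closed-form w:", ref_w)
print("closed-form b:", ref_b)
```

To check the post's own result, one could pass the `x` and `y` arrays loaded from `data.csv` instead and compare `ref_w` and `ref_b` with the `w` and `b` returned by `grad_desc`.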


This article is from cnblogs (博客园), author: slowlydance2me. When reposting, please credit the original link: https://www.cnblogs.com/slowlydance2me/p/16850249.html
